Handling Distributed Transactions with Seata


For the complete Spring Cloud architecture series, see: SpringCloud Alibaba Microservice Distributed Architecture

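When a monolith is split into microservices, its three former modules become three independent applications. Each application has its own data source, so one business operation has to call all three services.

Inside each service, a local transaction guarantees data consistency. Nothing, however, guarantees consistency across the three services as a whole.

A distributed transaction problem arises whenever one business operation spans several data sources or needs remote calls across several systems.

Example:

Business logic for a user buying a product:

- Storage service: deducts the stock quantity of the given product.

- Order service: creates an order according to the purchase request.

- Account service: deducts the balance from the user's account.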
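The following self-contained simulation makes the problem concrete. All class and method names are hypothetical and are not part of the article's project. Each of the three "services" owns a separate in-memory store and commits its own change immediately. Suppose the account deduction fails after the order row and the stock deduction have already been committed. Nothing then undoes the earlier local commits.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical simulation: three "services", each with its own store, each committing locally.
class LocalOnlyPurchase {
    final Map<Long, Integer> orderStatus = new HashMap<>(); // order DB: orderId -> 0 created, 1 finished
    final Map<Long, Integer> stock = new HashMap<>();       // storage DB: productId -> remaining count
    final Map<Long, Integer> balance = new HashMap<>();     // account DB: userId -> balance

    void createOrder(long orderId) {                 // order service, local commit
        orderStatus.put(orderId, 0);
    }

    void decreaseStock(long productId, int count) {  // storage service, local commit
        stock.merge(productId, -count, Integer::sum);
    }

    void decreaseBalance(long userId, int money) {   // account service, local commit
        int current = balance.getOrDefault(userId, 0);
        if (current < money) {
            throw new IllegalStateException("insufficient balance");
        }
        balance.put(userId, current - money);
    }

    /** Runs the purchase step by step; nothing undoes steps that already committed. */
    boolean purchase(long orderId, long userId, long productId, int count, int money) {
        try {
            createOrder(orderId);
            decreaseStock(productId, count);
            decreaseBalance(userId, money);
            orderStatus.put(orderId, 1);
            return true;
        } catch (IllegalStateException e) {
            return false; // the order row and the stock deduction stay committed
        }
    }
}
```

Consider a user with a balance of 50 buying goods worth 80. The purchase returns false, yet the stock has already dropped by the ordered count and the order stays in status 0. This inconsistency is exactly what a distributed transaction framework has to prevent.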


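1 Introduction to Seata

Concept: Seata is an open-source distributed transaction solution. It aims to provide high-performance, easy-to-use distributed transaction services in a microservice architecture.

Distributed transactions are handled with the "1 ID + 3 components" model.

1 ID: a globally unique transaction ID (XID).

The 3 components:

- TC (Transaction Coordinator): maintains the state of global and branch transactions, and drives the global transaction to commit or roll back.

- TM (Transaction Manager): defines the scope of a global transaction. It begins the global transaction and commits or rolls it back.

- RM (Resource Manager): manages the resources used by branch transactions. It talks to the TC to register branch transactions and report their status, and it drives branch transactions to commit or roll back.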
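The sketch below shows how the single ID ties the three components together, using a hypothetical in-memory model of the TC's bookkeeping (not Seata's real classes). The TM asks the TC to begin a global transaction and receives an XID. Each RM then registers its local transaction as a branch under that XID. The XID layout `<tc-ip>:<tc-port>:<transactionId>` is an assumption modelled on Seata 1.x. In the real system the XID travels with every remote call; here that propagation is reduced to passing the string around.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the TC's bookkeeping (not Seata's real classes).
// Assumption: the XID looks like "<tc-ip>:<tc-port>:<transactionId>", as in Seata 1.x.
class CoordinatorModel {
    private final String ip;
    private final int port;
    private long nextTransactionId = 1;
    // XID -> resource ids of the branch transactions registered under it
    private final Map<String, List<String>> branches = new HashMap<>();

    CoordinatorModel(String ip, int port) {
        this.ip = ip;
        this.port = port;
    }

    /** Called on behalf of the TM: opens a global transaction and returns its XID. */
    synchronized String begin() {
        String xid = ip + ":" + port + ":" + nextTransactionId++;
        branches.put(xid, new ArrayList<>());
        return xid;
    }

    /** Called on behalf of an RM: enlists one local transaction under the XID it was given. */
    synchronized int registerBranch(String xid, String resourceId) {
        List<String> list = branches.get(xid);
        if (list == null) {
            throw new IllegalArgumentException("unknown XID: " + xid);
        }
        list.add(resourceId);
        return list.size(); // branch number within this global transaction
    }

    /** Lists the resources enlisted in one global transaction. */
    synchronized List<String> branchesOf(String xid) {
        return new ArrayList<>(branches.getOrDefault(xid, new ArrayList<>()));
    }
}
```

In the purchase example, the order, storage and account databases would all register under one XID. That shared XID is what later lets the TC commit or roll them back together.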


2 Installing Seata

2.1 Modify the configuration file (conf/application.yml of seata-server)

```yaml
# Copyright 1999-2019 Seata.io Group.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

server:
  port: 7091

spring:
  application:
    name: seata-server

logging:
  config: classpath:logback-spring.xml
  file:
    path: ${user.home}/logs/seata
  extend:
    logstash-appender:
      destination: 127.0.0.1:4560
    kafka-appender:
      bootstrap-servers: 127.0.0.1:9092
      topic: logback_to_logstash

console:
  user:
    username: seata
    password: seata

seata:
  config:
    # support: nacos, consul, apollo, zk, etcd3
    type: nacos
    nacos:
      server-addr: 127.0.0.1:8848
      namespace: c7e4e5e4-8693-40e1-b75e-1ce1b46bf976
      group: SEATA_GROUP
      ##if use MSE Nacos with auth, mutex with username/password attribute
      #access-key: ""
      #secret-key: ""
      data-id: seataServer.yaml
      username: nacos
      password: nacos
  registry:
    # support: nacos, eureka, redis, zk, consul, etcd3, sofa
    type: nacos
    # preferred-networks: 30.240.*
    nacos:
      application: seata-server
      server-addr: 127.0.0.1:8848
      group: SEATA_GROUP
      namespace: c7e4e5e4-8693-40e1-b75e-1ce1b46bf976
      cluster: default
      username: nacos
      password: nacos
      ##if use MSE Nacos with auth, mutex with username/password attribute
      #access-key: ""
      #secret-key: ""
  store:
    # support: file, db, redis
    mode: db
    db:
      datasource: druid
      db-type: mysql
      driver-class-name: com.mysql.cj.jdbc.Driver
      url: jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&rewriteBatchedStatements=true&serverTimezone=GMT
      user: root
      password: 123456
      min-conn: 5
      max-conn: 100
      global-table: global_table
      branch-table: branch_table
      lock-table: lock_table
      distributed-lock-table: distributed_lock
      query-limit: 100
      max-wait: 5000
  # server:
  #   service-port: 8091 #If not configured, the default is '${server.port} + 1000'
  security:
    secretKey: SeataSecretKey0c382ef121d778043159209298fd40bf3850a017
    tokenValidityInMilliseconds: 1800000
    ignore:
      urls: /,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-fe/public/**,/api/v1/auth/login
```

2.2 Create the configuration file seataServer.yaml on Nacos

```yaml
metrics:
  enabled: false
  exporterList: prometheus
  exporterPrometheusPort: 9898
  registryType: compact
server:
  maxCommitRetryTimeout: -1
  maxRollbackRetryTimeout: -1
  recovery:
    asynCommittingRetryPeriod: 3000
    committingRetryPeriod: 3000
    rollbackingRetryPeriod: 3000
    timeoutRetryPeriod: 3000
  rollbackRetryTimeoutUnlockEnable: false
  undo:
    logDeletePeriod: 86400000
    logSaveDays: 7
store:
  db:
    branchTable: branch_table
    datasource: druid
    dbType: mysql
    driverClassName: com.mysql.cj.jdbc.Driver
    globalTable: global_table
    lockTable: lock_table
    maxConn: 30
    maxWait: 5000
    minConn: 5
    password: root
    queryLimit: 100
    url: jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&rewriteBatchedStatements=true&serverTimezone=GMT
    user: root
  mode: db
transport:
  compressor: none
  serialization: seata
```

2.3 Edit the config.txt file in the installation directory seata\seata-server-1.6.0\seata\script\config-center, then copy it into the seata directory

```properties
#For details about configuration items, see https://seata.io/zh-cn/docs/user/configurations.html
#Transport configuration, for client and server
transport.type=TCP
transport.server=NIO
transport.heartbeat=true
transport.enableTmClientBatchSendRequest=false
transport.enableRmClientBatchSendRequest=true
transport.enableTcServerBatchSendResponse=false
transport.rpcRmRequestTimeout=30000
transport.rpcTmRequestTimeout=30000
transport.rpcTcRequestTimeout=30000
transport.threadFactory.bossThreadPrefix=NettyBoss
transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker
transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler
transport.threadFactory.shareBossWorker=false
transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector
transport.threadFactory.clientSelectorThreadSize=1
transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread
transport.threadFactory.bossThreadSize=1
transport.threadFactory.workerThreadSize=default
transport.shutdown.wait=3
transport.serialization=seata
transport.compressor=none

#Transaction routing rules configuration, only for the client
service.vgroupMapping.my_test_tx_group=default
#If you use a registry, you can ignore it
service.default.grouplist=127.0.0.1:8091
service.enableDegrade=false
service.disableGlobalTransaction=false

#Transaction rule configuration, only for the client
client.rm.asyncCommitBufferLimit=10000
client.rm.lock.retryInterval=10
client.rm.lock.retryTimes=30
client.rm.lock.retryPolicyBranchRollbackOnConflict=true
client.rm.reportRetryCount=5
client.rm.tableMetaCheckEnable=true
client.rm.tableMetaCheckerInterval=60000
client.rm.sqlParserType=druid
client.rm.reportSuccessEnable=false
client.rm.sagaBranchRegisterEnable=false
client.rm.sagaJsonParser=fastjson
client.rm.tccActionInterceptorOrder=-2147482648
client.tm.commitRetryCount=5
client.tm.rollbackRetryCount=5
client.tm.defaultGlobalTransactionTimeout=60000
client.tm.degradeCheck=false
client.tm.degradeCheckAllowTimes=10
client.tm.degradeCheckPeriod=2000
client.tm.interceptorOrder=-2147482648
client.undo.dataValidation=true
client.undo.logSerialization=jackson
client.undo.onlyCareUpdateColumns=true
server.undo.logSaveDays=7
server.undo.logDeletePeriod=86400000
client.undo.logTable=undo_log
client.undo.compress.enable=true
client.undo.compress.type=zip
client.undo.compress.threshold=64k

#For TCC transaction mode
tcc.fence.logTableName=tcc_fence_log
tcc.fence.cleanPeriod=1h

#Log rule configuration, for client and server
log.exceptionRate=100

#Transaction storage configuration, only for the server. The file, db, and redis configuration values are optional.
store.mode=db
store.lock.mode=db
store.session.mode=db
#Used for password encryption
store.publicKey=

#If `store.mode,store.lock.mode,store.session.mode` are not equal to `file`, you can remove the configuration block.
store.file.dir=file_store/data
store.file.maxBranchSessionSize=16384
store.file.maxGlobalSessionSize=512
store.file.fileWriteBufferCacheSize=16384
store.file.flushDiskMode=async
store.file.sessionReloadReadSize=100

#These configurations are required if the `store mode` is `db`. If `store.mode,store.lock.mode,store.session.mode` are not equal to `db`, you can remove the configuration block.
store.db.datasource=druid
store.db.dbType=mysql
store.db.driverClassName=com.mysql.cj.jdbc.Driver
store.db.url=jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&rewriteBatchedStatements=true
store.db.user=root
store.db.password=root
store.db.minConn=5
store.db.maxConn=30
store.db.globalTable=global_table
store.db.branchTable=branch_table
store.db.distributedLockTable=distributed_lock
store.db.queryLimit=100
store.db.lockTable=lock_table
store.db.maxWait=5000

#These configurations are required if the `store mode` is `redis`. If `store.mode,store.lock.mode,store.session.mode` are not equal to `redis`, you can remove the configuration block.
store.redis.mode=single
store.redis.single.host=127.0.0.1
store.redis.single.port=6379
store.redis.sentinel.masterName=
store.redis.sentinel.sentinelHosts=
store.redis.maxConn=10
store.redis.minConn=1
store.redis.maxTotal=100
store.redis.database=0
store.redis.password=123456
store.redis.queryLimit=100

#Transaction rule configuration, only for the server
server.recovery.committingRetryPeriod=1000
server.recovery.asynCommittingRetryPeriod=1000
server.recovery.rollbackingRetryPeriod=1000
server.recovery.timeoutRetryPeriod=1000
server.maxCommitRetryTimeout=-1
server.maxRollbackRetryTimeout=-1
server.rollbackRetryTimeoutUnlockEnable=false
server.distributedLockExpireTime=10000
server.xaerNotaRetryTimeout=60000
server.session.branchAsyncQueueSize=5000
server.session.enableBranchAsyncRemove=false
server.enableParallelRequestHandle=false

#Metrics configuration, only for the server
metrics.enabled=false
metrics.registryType=compact
metrics.exporterList=prometheus
metrics.exporterPrometheusPort=9898
```

2.4 Upload the contents of config.txt to Nacos with nacos-config.sh

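config.txt is a flat `key=value` file. Before uploading, it can help to preview which items it will produce. This sketch rests on an assumption worth checking in your own Nacos console: nacos-config.sh publishes each line as its own Nacos configuration item, with the key as the dataId and the value as the content. The helper `ConfigTxtPreview` is hypothetical and only lists those pairs with the JDK's `java.util.Properties`; it does not talk to Nacos.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

// Hypothetical preview helper, not part of Seata: lists the items a config.txt would produce.
// Assumption: nacos-config.sh publishes each "key=value" line as one Nacos config item
// whose dataId is the key and whose content is the value.
final class ConfigTxtPreview {
    private ConfigTxtPreview() {
    }

    static Map<String, String> toDataIds(String configTxt) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(configTxt)); // '#' lines are comments, blank lines are skipped
        } catch (IOException e) {
            throw new UncheckedIOException(e); // cannot happen with a StringReader
        }
        Map<String, String> items = new TreeMap<>(); // sorted, for a stable listing
        for (String key : props.stringPropertyNames()) {
            items.put(key, props.getProperty(key));
        }
        return items;
    }
}
```

Under that assumption, each entry returned corresponds to one dataId you would expect to find in Nacos under SEATA_GROUP after running the script.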

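2.5 Start the server with seata-server.bat

3 Business Scenario

- We create three services: an order service, a storage service and an account service.

- When a user places an order, the order service does four things in sequence. It creates an order, then remotely calls the storage service to deduct the stock of the ordered product. Next it remotely calls the account service to deduct the user's balance. Finally it sets the order status to completed.

- The operation spans three databases and makes two remote calls, so it clearly raises a distributed transaction problem.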

4 Application

4.1 Dependencies

```xml
<dependency>
    <groupId>com.alibaba.cloud</groupId>
    <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
    <exclusions>
        <exclusion>
            <artifactId>seata-all</artifactId>
            <groupId>io.seata</groupId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>io.seata</groupId>
    <artifactId>seata-all</artifactId>
    <version>1.6.0</version>
</dependency>
```

4.2 Configuration file

```yaml
spring:
  # service name
  application:
    # order service
    name: seata-order-service
  cloud:
    nacos:
      discovery:
        server-addr: localhost:8848 # Nacos service registry address
        namespace: c7e4e5e4-8693-40e1-b75e-1ce1b46bf976
      config:
        server-addr: localhost:8848 # Nacos config center address
        file-extension: yaml # configuration in YAML format
        group: SEATA_GROUP
        namespace: c7e4e5e4-8693-40e1-b75e-1ce1b46bf976
    sentinel:
      transport:
        # Sentinel dashboard address
        dashboard: localhost:8080
        # Defaults to 8719; if that port is taken, the client scans upward from 8719 (+1 each time) until it finds a free port
        port: 8719
    alibaba:
      seata:
        # the custom transaction group name must match the one configured for seata-server
        tx-service-group: default_tx_group

# seata configuration, replacing file.conf and registry.conf
sfs:
  nacos:
    server-addr: 127.0.0.1:8848
    namespace: c7e4e5e4-8693-40e1-b75e-1ce1b46bf976
    group: SEATA_GROUP
    username: nacos
    password: nacos

seata:
  enabled: true
  application-id: ${spring.application.name}
  tx-service-group: default_tx_group
  use-jdk-proxy: true
  enable-auto-data-source-proxy: true
  registry:
    type: nacos
    nacos:
      application: seata-server
      server-addr: ${sfs.nacos.server-addr}
      namespace: ${sfs.nacos.namespace}
      group: ${sfs.nacos.group}
      username: ${sfs.nacos.username}
      password: ${sfs.nacos.password}
  config:
    type: nacos
    nacos:
      server-addr: ${sfs.nacos.server-addr}
      namespace: ${sfs.nacos.namespace}
      group: ${sfs.nacos.group}
      username: ${sfs.nacos.username}
      password: ${sfs.nacos.password}
  service:
    vgroupMapping:
      default_tx_group: default
```
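The comment about matching group names is easier to follow once the lookup is traced as a chain. Under a simplified model (an assumption, not Seata's actual client code), the client resolves its TC in three steps. It starts from `tx-service-group`, reads `service.vgroupMapping.<group>` to get a cluster name, and asks the registry for the seata-server instances in that cluster. `TxGroupResolver` is a hypothetical name, and the configuration and registry are plain maps.

```java
import java.util.List;
import java.util.Map;

// Hypothetical sketch of resolving a transaction group to TC addresses (not Seata's client code).
// Assumed chain: tx-service-group -> "service.vgroupMapping.<group>" -> cluster -> registered TC nodes.
final class TxGroupResolver {
    private final Map<String, String> config;         // flattened client configuration
    private final Map<String, List<String>> registry; // cluster name -> "ip:port" of TC nodes

    TxGroupResolver(Map<String, String> config, Map<String, List<String>> registry) {
        this.config = config;
        this.registry = registry;
    }

    List<String> resolve(String txServiceGroup) {
        String cluster = config.get("service.vgroupMapping." + txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no vgroupMapping for transaction group " + txServiceGroup);
        }
        List<String> nodes = registry.getOrDefault(cluster, List.of());
        if (nodes.isEmpty()) {
            throw new IllegalStateException("no TC instance registered for cluster " + cluster);
        }
        return nodes;
    }
}
```

A lookup for `default_tx_group` succeeds only if a `default_tx_group` mapping exists wherever the client reads its configuration. Note that the config.txt shown earlier maps `my_test_tx_group`, while this service uses `default_tx_group`. A mismatch like that surfaces as "no TC available" style failures rather than as a configuration error.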

4.3 Order service main business logic: TOrderServiceImpl

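```java
import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Service;

import javax.annotation.Resource;

@Service
@Slf4j
public class TOrderServiceImpl implements TOrderService {

    @Resource
    private TOrderDao tOrderDao;

    @Resource
    private StorageService storageService;

    @Resource
    private AccountService accountService;

    /**
     * Create the order -> call the storage service to deduct stock
     * -> call the account service to deduct the balance -> update the order status.
     *
     * @param tOrder the order to create
     * @return whether the order status was updated
     */
    @Override
    public boolean create(TOrder tOrder) {
        // 1. Create the order (status 0 = in progress)
        log.info("----->Creating order");
        tOrder.setStatus(0);
        tOrderDao.insert(tOrder);

        // 2. Deduct stock (remote call to the storage service)
        log.info("----->Order service calling storage service to deduct count");
        storageService.decrease(tOrder.getProductId(), tOrder.getCount());
        log.info("----->Order service: stock deduction end");

        // 3. Deduct the account balance (remote call to the account service)
        log.info("----->Order service calling account service to deduct money");
        accountService.decrease(tOrder.getUserId(), tOrder.getMoney());
        log.info("----->Order service: account deduction end");

        // 4. Update the order status, 0 => 1
        log.info("----->Updating order status: start");
        boolean flag = this.updateStatus(tOrder.getUserId(), 1);
        log.info("----->Updating order status: end");

        log.info("----->Order placed, done O(∩_∩)O haha~");
        return flag;
    }

    @Override
    public boolean updateStatus(Long userId, Integer status) {
        // Note: this matches on user_id, so it updates every order of the user, not only the new one
        QueryWrapper<TOrder> wrapper = new QueryWrapper<>();
        wrapper.eq("user_id", userId);
        TOrder tOrder = new TOrder();
        tOrder.setStatus(status);
        return tOrderDao.update(tOrder, wrapper) > 0;
    }
}
```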

4.4 Storage service: TStorageServiceImpl

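```java
import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Service;

import javax.annotation.Resource;

@Service
@Slf4j
public class TStorageServiceImpl implements TStorageService {

    @Resource
    private TStorageDao dao;

    /**
     * Deduct stock.
     *
     * @param productId product ID
     * @param count     quantity to deduct
     * @return whether the stock row was updated
     */
    @Override
    public boolean decrease(Long productId, Integer count) {
        log.info("------>seata-storage-service: deducting stock");
        QueryWrapper<TStorage> wrapper = new QueryWrapper<>();
        wrapper.eq("product_id", productId);
        // Read the current row, then write back used + count and residue - count
        TStorage tStorage = dao.selectOne(wrapper);
        TStorage newTStorage = new TStorage();
        newTStorage.setUsed(tStorage.getUsed() + count);
        newTStorage.setResidue(tStorage.getResidue() - count);
        return dao.update(newTStorage, wrapper) > 0;
    }
}
```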

4.5 Account service: TAccountServiceImpl

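```java
import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Service;

import javax.annotation.Resource;
import java.math.BigDecimal;
import java.util.concurrent.TimeUnit;

@Service
@Slf4j
public class TAccountServiceImpl implements TAccountService {

    @Resource
    private TAccountDao dao;

    @Override
    public Boolean decrease(Long userId, BigDecimal money) {
        log.info("------>Deducting account balance: start");
        // Simulate a timeout exception so that the global transaction rolls back
        try {
            TimeUnit.SECONDS.sleep(20);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // restore the interrupt flag instead of swallowing it
        }
        QueryWrapper<TAccount> wrapper = new QueryWrapper<>();
        wrapper.eq("user_id", userId);
        TAccount tAccount = dao.selectOne(wrapper);

        TAccount newTAccount = new TAccount();
        newTAccount.setUsed(tAccount.getUsed().add(money));
        // Subtract with BigDecimal directly; a round trip through double can lose precision
        newTAccount.setResidue(tAccount.getResidue().subtract(money));
        log.info("------>Deducting account balance: end");
        return dao.update(newTAccount, wrapper) > 0;
    }
}
```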

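4.6 Failure scenario

The stock and the account balance have been deducted, but the order status never changes. Moreover, because of Feign's retry mechanism, the account balance may even be deducted more than once.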
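The double-deduction case can be simulated in isolation. The sketch below is hypothetical and does not reproduce Feign's retry implementation. The account service commits its deduction, but the caller does not receive the first reply in time and retries the same non-idempotent request. `DuplicateDeductionDemo` and its methods are illustrative names only.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical simulation of the failure above (not Feign's retry implementation):
// the account service commits the deduction, the caller never sees the reply in time and retries.
class DuplicateDeductionDemo {
    final Map<Long, Integer> balance = new HashMap<>(); // account DB: userId -> balance
    int serverCalls;

    /** Account service: deducts and commits; the first reply is treated as arriving too late. */
    boolean decreaseOnServer(long userId, int money) {
        serverCalls++;
        balance.merge(userId, -money, Integer::sum); // committed before the reply is sent
        return serverCalls > 1;                      // false = the caller hit its read timeout
    }

    /** Order service: a non-idempotent call retried up to maxAttempts times on timeout. */
    boolean callWithRetry(long userId, int money, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (decreaseOnServer(userId, money)) {
                return true;
            }
        }
        return false;
    }
}
```

Starting from a balance of 100, one logical deduction of 30 leaves 40. The call reached the server twice, and both local commits stuck.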

4.7 Proxying the data source with Seata

MyBatis version

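```java
import com.alibaba.druid.pool.DruidDataSource;
import io.seata.rm.datasource.DataSourceProxy;
import org.apache.ibatis.session.SqlSessionFactory;
import org.mybatis.spring.SqlSessionFactoryBean;
import org.mybatis.spring.transaction.SpringManagedTransactionFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;
import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import javax.sql.DataSource;

@Configuration
public class DataSourceMyBatisConfig {

    @Value("${mybatis-plus.mapper-locations}")
    private String mapperLocations;

    @Bean
    @Primary // make MyBatis(-Plus) prefer the data source configured here
    @ConfigurationProperties(prefix = "spring.datasource")
    public DataSource druidDatasource() {
        return new DruidDataSource();
    }

    // Wrap the Druid data source so Seata (as RM) can intercept SQL and write undo logs
    @Bean
    public DataSourceProxy dataSourceProxy(DataSource dataSource) {
        return new DataSourceProxy(dataSource);
    }

    @Bean
    public SqlSessionFactory sqlSessionFactoryBean(DataSourceProxy dataSourceProxy) throws Exception {
        SqlSessionFactoryBean sqlSessionFactoryBean = new SqlSessionFactoryBean();
        // MyBatis must use the proxied data source, otherwise Seata never sees the SQL
        sqlSessionFactoryBean.setDataSource(dataSourceProxy);
        sqlSessionFactoryBean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources(mapperLocations));
        sqlSessionFactoryBean.setTransactionFactory(new SpringManagedTransactionFactory());
        return sqlSessionFactoryBean.getObject();
    }
}
```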

MyBatis-Plus version

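```java
import com.alibaba.druid.pool.DruidDataSource;
import com.baomidou.mybatisplus.annotation.DbType;
import com.baomidou.mybatisplus.core.MybatisConfiguration;
import com.baomidou.mybatisplus.extension.plugins.MybatisPlusInterceptor;
import com.baomidou.mybatisplus.extension.plugins.inner.PaginationInnerInterceptor;
import com.baomidou.mybatisplus.extension.spring.MybatisSqlSessionFactoryBean;
import io.seata.rm.datasource.DataSourceProxy;
import org.apache.ibatis.logging.stdout.StdOutImpl;
import org.apache.ibatis.session.SqlSessionFactory;
import org.apache.ibatis.type.JdbcType;
import org.mybatis.spring.annotation.MapperScan;
import org.mybatis.spring.transaction.SpringManagedTransactionFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;
import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import javax.sql.DataSource;

/**
 * DataSourceProxyConfig:
 * proxies the data source with Seata
 *
 * @author zyw
 * @create 2023/7/10
 */
@Configuration
@MapperScan("com.zyw.springcloud.dao")
public class DataSourceMyBatisPlusConfig {

    @Value("${mybatis-plus.mapper-locations}")
    private String mapperLocations;

    @Bean
    @ConfigurationProperties(prefix = "spring.datasource")
    public DataSource druidDataSource() {
        return new DruidDataSource();
    }

    // The Seata proxy is the primary "dataSource" bean, so DataSource injection points receive it
    @Primary
    @Bean("dataSource")
    public DataSourceProxy dataSource(DataSource druidDataSource) {
        return new DataSourceProxy(druidDataSource);
    }

    /**
     * MyBatis-Plus pagination
     */
    @Bean
    public MybatisPlusInterceptor mybatisPlusInterceptor() {
        MybatisPlusInterceptor interceptor = new MybatisPlusInterceptor();
        interceptor.addInnerInterceptor(new PaginationInnerInterceptor(DbType.MYSQL)); // database type
        return interceptor;
    }

    @Bean
    public SqlSessionFactory sqlSessionFactory(DataSourceProxy dataSourceProxy,
                                               MybatisPlusInterceptor mybatisPlusInterceptor) throws Exception {
        MybatisSqlSessionFactoryBean sqlSessionFactoryBean = new MybatisSqlSessionFactoryBean();
        sqlSessionFactoryBean.setDataSource(dataSourceProxy);
        sqlSessionFactoryBean.setMapperLocations(new PathMatchingResourcePatternResolver()
                .getResources(mapperLocations));
        // Use Spring-managed local transactions
        sqlSessionFactoryBean.setTransactionFactory(new SpringManagedTransactionFactory());
        // A hand-built factory does not pick up the interceptor bean by itself, so register it here
        sqlSessionFactoryBean.setPlugins(mybatisPlusInterceptor);
        // MyBatis-Plus settings, including SQL logging to stdout
        MybatisConfiguration cfg = new MybatisConfiguration();
        cfg.setJdbcTypeForNull(JdbcType.NULL);
        cfg.setMapUnderscoreToCamelCase(true);
        cfg.setCacheEnabled(false);
        cfg.setLogImpl(StdOutImpl.class);
        sqlSessionFactoryBean.setConfiguration(cfg);
        return sqlSessionFactoryBean.getObject();
    }
}
```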
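The data-source proxy covers the RM side only. The global transaction itself is opened by the TM, which in Seata is normally done by annotating the entry method (here `TOrderServiceImpl.create`) with `@GlobalTransactional` (`io.seata.spring.annotation.GlobalTransactional`). Seata then wraps the bean in a proxy that begins the global transaction before the call and commits or rolls it back afterwards. The JDK-only sketch below imitates that idea with `java.lang.reflect.Proxy`. `OrderFlow` and `GlobalTxProxy` are hypothetical names, and the "begin/commit/rollback" events merely stand in for calls to the TC.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.List;

// Hypothetical stand-in for the order service's business interface.
interface OrderFlow {
    boolean create(long orderId);
}

// Hypothetical TM-style interceptor, not Seata's implementation:
// begin before the business method, commit if it returns, roll back if it throws.
final class GlobalTxProxy {
    private GlobalTxProxy() {
    }

    static OrderFlow wrap(OrderFlow target, List<String> events) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (method.getDeclaringClass() == Object.class) {
                return method.invoke(target, args); // toString/equals/hashCode: no transaction
            }
            events.add("begin");
            try {
                Object result = method.invoke(target, args);
                events.add("commit");
                return result;
            } catch (InvocationTargetException e) {
                events.add("rollback");
                throw e.getCause(); // rethrow the business exception itself
            }
        };
        return (OrderFlow) Proxy.newProxyInstance(
                OrderFlow.class.getClassLoader(), new Class<?>[] {OrderFlow.class}, handler);
    }
}
```

If the wrapped `create` throws (for example, because the account call timed out), the recorded events are `begin, rollback` and the exception still reaches the caller. That is the behaviour the failure scenario in 4.6 needs.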

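5 How Seata works

5.1 Business execution flow

- The TM starts a distributed transaction (the TM registers a global transaction record with the TC).

- The resources involved in the transaction, such as databases and services, are orchestrated according to the business scenario (each RM reports its resource readiness to the TC).

- The TM ends the distributed transaction, which ends phase one (the TM asks the TC to commit or roll back the distributed transaction).

- The TC aggregates the transaction information and decides whether the distributed transaction commits or rolls back.

- The TC tells every RM to commit or roll back its resources, which ends phase two.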
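The five steps above can be condensed into a small, simplified model. This is a sketch only: `TwoPhaseFlow` and its members are hypothetical, and the decision rule (commit only if the TM asks to commit and every branch finished phase one successfully) is a simplification of what Seata actually does. It shows the essential property that every branch ends up in the same final state.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the five steps above; names are illustrative, not Seata's API.
class TwoPhaseFlow {
    /** An RM-managed branch: phase one already ran; phase two is commit or rollback. */
    static final class Branch {
        final String resource;
        final boolean phaseOneOk; // reported to the TC at the end of phase one
        String finalState = "PHASE_ONE_DONE";

        Branch(String resource, boolean phaseOneOk) {
            this.resource = resource;
            this.phaseOneOk = phaseOneOk;
        }
    }

    final List<Branch> branches = new ArrayList<>();

    /** Step 2: an RM reports its branch under the global transaction. */
    void report(String resource, boolean phaseOneOk) {
        branches.add(new Branch(resource, phaseOneOk));
    }

    /** Steps 3-5: the TM ends the transaction, the TC decides and notifies every RM. */
    boolean end(boolean tmWantsCommit) {
        boolean commit = tmWantsCommit && branches.stream().allMatch(b -> b.phaseOneOk);
        for (Branch b : branches) {
            b.finalState = commit ? "COMMITTED" : "ROLLED_BACK";
        }
        return commit;
    }
}
```

Suppose the account branch fails in phase one. The order and storage branches are then rolled back as well, rather than being left committed as in the local-only simulation.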

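5.2 AT mode

Prerequisites:

- A relational database that supports local ACID transactions.

- A Java application that accesses the database through JDBC.

Overall mechanism:

- Phase one: the business data and the rollback log (undo log) are committed in the same local transaction, and the local locks and connection resources are released.

- Phase two: commit is asynchronous and completes very quickly, while rollback applies reverse compensation using the rollback log written in phase one.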

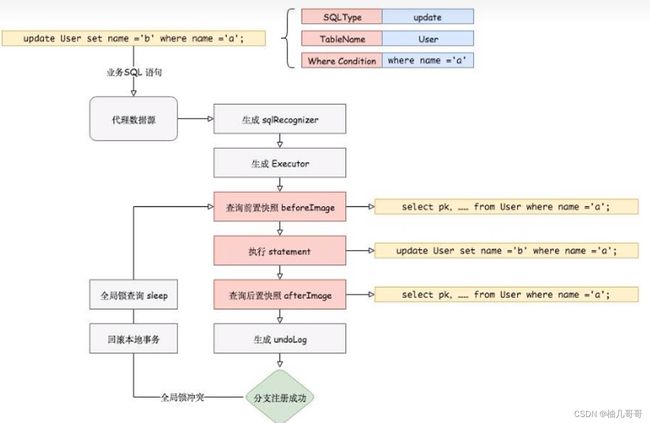

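Phase one: loading

In phase one, Seata intercepts the "business SQL":

1. It parses the SQL semantics to find the business data that the "business SQL" will update, and saves that data as the "before image" before it is updated.

2. It executes the "business SQL" to update the business data.

3. After the update, it saves the data as the "after image" and finally generates row locks.

All of these operations are completed within a single database transaction, which guarantees the atomicity of phase one.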
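The three steps can be mirrored on an in-memory table. In the hypothetical sketch below, the table maps a row id to a residue value. The `update` method captures the before image, applies the business update, records an undo-log entry with both images, and takes a row lock for the XID. A `synchronized` method stands in for the single local database transaction. The class, field names and the simplified lock check are assumptions, not Seata's implementation.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical AT phase-one sketch on an in-memory table (id -> residue); not Seata's code.
class AtPhaseOne {
    /** One undo-log entry: the row's value before and after the business update. */
    static final class UndoLog {
        final String xid;
        final long rowId;
        final int beforeImage;
        final int afterImage;

        UndoLog(String xid, long rowId, int beforeImage, int afterImage) {
            this.xid = xid;
            this.rowId = rowId;
            this.beforeImage = beforeImage;
            this.afterImage = afterImage;
        }
    }

    final Map<Long, Integer> table = new HashMap<>();
    final List<UndoLog> undoLogTable = new ArrayList<>();
    final Map<Long, String> rowLocks = new HashMap<>(); // rowId -> XID holding the lock

    /**
     * Models "UPDATE t SET residue = residue - delta WHERE id = rowId" inside global transaction xid.
     * synchronized stands in for the single local DB transaction that makes all steps atomic.
     */
    synchronized void update(String xid, long rowId, int delta) {
        String holder = rowLocks.get(rowId);
        if (holder != null && !holder.equals(xid)) {
            throw new IllegalStateException("row " + rowId + " locked by " + holder);
        }
        int before = table.get(rowId);                             // 1. before image
        int after = before - delta;
        table.put(rowId, after);                                   // 2. execute the business SQL
        undoLogTable.add(new UndoLog(xid, rowId, before, after));  // 3. after image + undo log
        rowLocks.put(rowId, xid);                                  //    row lock for this XID
    }
}
```

A second global transaction touching the same row is refused while the first one still holds the lock. This is why the row lock is generated as part of phase one.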

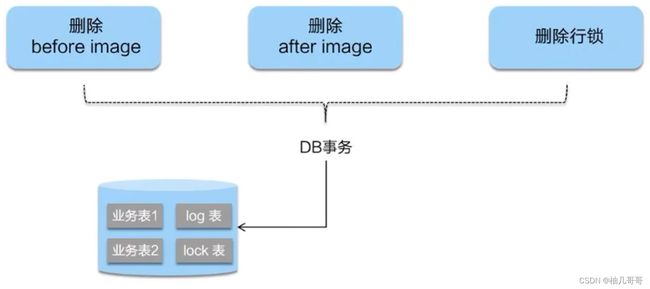

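Phase two: commit

The business SQL was already committed to the database in phase one. The Seata framework therefore only needs to delete the snapshot data (undo log) and row locks saved in phase one to finish the cleanup.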

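Phase two: rollback

If phase two is a rollback, Seata has to undo the "business SQL" executed in phase one and restore the business data.

The rollback restores the business data from the "before image", but it first checks for dirty writes by comparing the "current business data in the database" with the "after image". If the two are identical, there was no dirty write and the business data can be restored. If they differ, a dirty write has occurred, and the case must be handed over for manual handling.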
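Both phase-two paths fit in one small hypothetical sketch: commit only cleans up, while rollback checks each undo-log entry's after image against the current row before restoring the before image. Entries are processed newest first, so a row updated twice in one branch unwinds correctly. Restoration works on a copy that is published only if no dirty write is found, which stands in for Seata doing the undo inside one local transaction. All names are assumptions; this is not Seata's code.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical AT phase-two sketch for one branch; names are illustrative, not Seata's API.
class AtPhaseTwo {
    /** Undo-log entry written in phase one. */
    static final class UndoLog {
        final long rowId;
        final int beforeImage;
        final int afterImage;

        UndoLog(long rowId, int beforeImage, int afterImage) {
            this.rowId = rowId;
            this.beforeImage = beforeImage;
            this.afterImage = afterImage;
        }
    }

    final Map<Long, Integer> table = new HashMap<>(); // business table: id -> value
    final List<UndoLog> undoLogs = new ArrayList<>(); // this branch's undo logs, oldest first
    final Set<Long> lockedRows = new HashSet<>();     // row locks held by this branch

    /** Phase-two commit: the data is already committed, so only clean up. */
    void commit() {
        cleanUp();
    }

    /** Phase-two rollback: check for dirty writes, then restore before images newest first. */
    void rollback() {
        Map<Long, Integer> working = new HashMap<>(table); // stands in for one local transaction
        for (int i = undoLogs.size() - 1; i >= 0; i--) {
            UndoLog log = undoLogs.get(i);
            int current = working.get(log.rowId);
            if (current != log.afterImage) {
                // the row changed outside this global transaction: leave the table untouched
                throw new IllegalStateException("dirty write on row " + log.rowId + ", needs manual handling");
            }
            working.put(log.rowId, log.beforeImage);
        }
        table.putAll(working); // publish the restored values
        cleanUp();
    }

    private void cleanUp() {
        undoLogs.clear();
        lockedRows.clear();
    }
}
```

Take a row that went 100 → 90 → 80 inside the branch: it is restored to 100. If someone else changed a row to 55 after phase one wrote 50, the rollback refuses to overwrite it and reports a dirty write instead.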