11. RocketMQ: Why It Can Reach 100,000-Level Throughput


Reposted from: https://blog.csdn.net/w7sss/article/details/123757467

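MessageStore is the high-performance message persistence component that lets RocketMQ sustain throughput on the order of 100,000 messages per second.

It is the CommitLog storage service. Under the hood it relies on the operating system's memory-mapping call mmap, which lets the process read and write the kernel page cache directly instead of copying file data between kernel space and a user-space buffer (this is what is commonly called "zero-copy").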

MappedByteBuffer

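MappedByteBuffer is Java's interface to mmap. It extends ByteBuffer and represents a memory-mapped byte buffer: a region of a file is mapped into memory outside the Java heap (direct memory), and the program reads and writes that off-heap memory directly through the MappedByteBuffer reference. The data never has to be copied into a heap buffer first, which is the "zero-copy" referred to above.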

You can create a MappedByteBuffer like this:

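```java
import java.io.RandomAccessFile;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;

try (RandomAccessFile aFile = new RandomAccessFile("E:\\test.txt", "rw");
     FileChannel inChannel = aFile.getChannel()) {
    // Map the whole file into off-heap memory in read-write mode
    MappedByteBuffer buffer = inChannel.map(FileChannel.MapMode.READ_WRITE, 0, aFile.length());
    // ... TODO: read or write through buffer
}
```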

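Changes made through a MappedByteBuffer are written back to disk by the operating system at some later point; you can also force a flush manually:

```java
buffer.force();
```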
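To check that a write through the mapping really reaches the file, here is a small self-contained sketch. The class MmapDemo and its method are hypothetical names made up for this illustration: it pre-sizes a temporary file, maps it read-write, puts bytes into the MappedByteBuffer, calls force(), and then reads the file back through ordinary file I/O.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

class MmapDemo {
    // Maps a new temporary file of fileSize bytes, writes text through the mapping,
    // forces it to disk, and returns the file's contents read back through normal file I/O.
    static byte[] writeThroughMapping(String text, int fileSize) {
        try {
            Path path = Files.createTempFile("mmap-demo", ".bin");
            path.toFile().deleteOnExit();
            try (RandomAccessFile raf = new RandomAccessFile(path.toFile(), "rw");
                 FileChannel channel = raf.getChannel()) {
                raf.setLength(fileSize); // pre-size the file to the mapped length
                MappedByteBuffer buffer = channel.map(FileChannel.MapMode.READ_WRITE, 0, fileSize);
                buffer.put(text.getBytes(StandardCharsets.UTF_8)); // a plain memory store, no write() call
                buffer.force();                                    // ask the OS to write the dirty pages back now
            }
            return Files.readAllBytes(path);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The put itself only stores into the page cache; force() is what asks the OS to persist it. That split between "write" and "flush" is what the rest of this article is about.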

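In addition, RocketMQ only ever appends to its files through the MappedByteBuffer, so its writes are sequential, and sequential disk I/O is far faster than random I/O.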

RocketMQ wraps MappedByteBuffer in its own class, MappedFile. Next we analyze how MessageStore works.

MessageStore

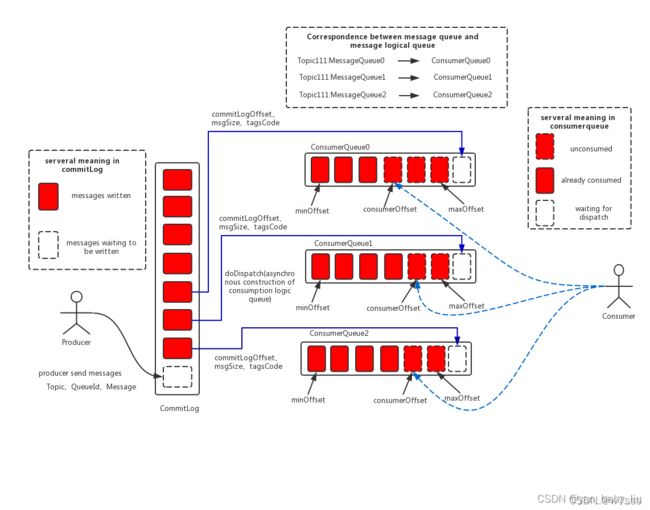

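First, the RocketMQ storage structure. It has three main parts:

- CommitLog: stores the messages themselves (body plus metadata). Messages of all topics are appended sequentially to the CommitLog. The CommitLog consists of multiple files, each with a fixed size of 1 GB by default. Each file is named after the physical offset at which it starts, i.e. the offset of its first message, zero-padded to 20 digits: the first file is 00000000000000000000, the second 00000000001073741824.

- ConsumeQueue: an index of messages in the CommitLog, one ConsumeQueue per MessageQueue. Each entry is a fixed 20 bytes: the message's physical offset in the CommitLog (8 bytes), its size (4 bytes) and the hash code of its tag (8 bytes). Consumers use it to find which CommitLog entries belong to their queue; how far each consumer group has consumed is recorded separately as consumer offsets, not in the ConsumeQueue.

- IndexFile: provides a way to look up messages by key or by time range. Queries through the IndexFile do not affect the main send and consume path.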
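The naming rule and the ConsumeQueue entry layout can be made concrete with a small sketch. It assumes the defaults described above (1 GB CommitLog files with 20-digit zero-padded names, 20-byte ConsumeQueue entries); StoreLayout and its methods are hypothetical names for illustration, not RocketMQ APIs.

```java
import java.nio.ByteBuffer;

class StoreLayout {
    static final long COMMITLOG_FILE_SIZE = 1024L * 1024 * 1024; // 1 GB default CommitLog file size
    static final int CQ_ENTRY_SIZE = 8 + 4 + 8;                   // offset + size + tag hash code

    // Name of the CommitLog file holding a global physical offset: the file's start offset, 20 digits
    static String commitLogFileName(long physicalOffset) {
        long fileFromOffset = physicalOffset - physicalOffset % COMMITLOG_FILE_SIZE;
        return String.format("%020d", fileFromOffset);
    }

    // Position of the message inside that file
    static long positionInFile(long physicalOffset) {
        return physicalOffset % COMMITLOG_FILE_SIZE;
    }

    // Encode one ConsumeQueue entry, ready to be read from position 0
    static ByteBuffer encodeEntry(long commitLogOffset, int size, long tagsCode) {
        ByteBuffer entry = ByteBuffer.allocate(CQ_ENTRY_SIZE);
        entry.putLong(commitLogOffset).putInt(size).putLong(tagsCode);
        entry.flip();
        return entry;
    }

    // Absolute reads of the n-th entry; the buffer's position is not changed
    static long commitLogOffsetAt(ByteBuffer queue, int index) {
        return queue.getLong(index * CQ_ENTRY_SIZE);
    }

    static int sizeAt(ByteBuffer queue, int index) {
        return queue.getInt(index * CQ_ENTRY_SIZE + 8);
    }
}
```

Because every ConsumeQueue entry has the same size, the n-th message of a queue sits at byte n * 20, so a consumer can jump straight to a logical offset without scanning.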

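RocketMQ uses a hybrid storage structure (the message bodies of all topics are stored in one CommitLog), with the data part, written on behalf of Producers, kept separate from the index part, used by Consumers. A Producer sends a message to the Broker, and the Broker persists it into the CommitLog with either synchronous or asynchronous flushing. Once a message has been flushed into the CommitLog file on disk, the message sent by the Producer will not be lost.

A background service thread on the Broker, ReputMessageService, continuously dispatches newly written entries and asynchronously builds the ConsumeQueue (logical consumption queue) and IndexFile (index file) data.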

Next, let's see how MessageStore works. Its default implementation is DefaultMessageStore; here are the key fields set up in its constructor:

```java
public DefaultMessageStore(final MessageStoreConfig messageStoreConfig, final BrokerStatsManager brokerStatsManager,
                           final MessageArrivingListener messageArrivingListener, final BrokerConfig brokerConfig) throws IOException {
    // omitted...

    // Service that allocates MappedFiles, i.e. provides the memory mappings
    this.allocateMappedFileService = new AllocateMappedFileService(this);
    if (messageStoreConfig.isEnableDLegerCommitLog()) {
        this.commitLog = new DLedgerCommitLog(this);
    } else {
        // Create the CommitLog
        this.commitLog = new CommitLog(this);
    }
    this.consumeQueueTable = new ConcurrentHashMap<>(32);

    // ConsumeQueue flush service
    this.flushConsumeQueueService = new FlushConsumeQueueService();
    this.cleanCommitLogService = new CleanCommitLogService();
    this.cleanConsumeQueueService = new CleanConsumeQueueService();
    this.storeStatsService = new StoreStatsService();
    this.indexService = new IndexService(this);
    if (!messageStoreConfig.isEnableDLegerCommitLog()) {
        this.haService = new HAService(this);
    } else {
        this.haService = null;
    }

    // Dispatches requests and asynchronously builds ConsumeQueue (logical consumption queue)
    // and IndexFile (index file) data
    this.reputMessageService = new ReputMessageService();
    this.scheduleMessageService = new ScheduleMessageService(this);
    this.transientStorePool = new TransientStorePool(messageStoreConfig);
    if (messageStoreConfig.isTransientStorePoolEnable()) {
        this.transientStorePool.init();
    }

    // Start allocateMappedFileService
    this.allocateMappedFileService.start();
    this.indexService.start();

    // Register two CommitLog dispatchers: one builds the ConsumeQueue, the other the index
    this.dispatcherList = new LinkedList<>();
    this.dispatcherList.addLast(new CommitLogDispatcherBuildConsumeQueue());
    this.dispatcherList.addLast(new CommitLogDispatcherBuildIndex());

    // omitted...
}
```

Let's focus on how the CommitLog is created, loaded, and started:

```java
public CommitLog(final DefaultMessageStore defaultMessageStore) {
    String storePath = defaultMessageStore.getMessageStoreConfig().getStorePathCommitLog();
    if (storePath.contains(MessageStoreConfig.MULTI_PATH_SPLITTER)) {
        this.mappedFileQueue = new MultiPathMappedFileQueue(defaultMessageStore.getMessageStoreConfig(),
                defaultMessageStore.getMessageStoreConfig().getMappedFileSizeCommitLog(),
                defaultMessageStore.getAllocateMappedFileService(), this::getFullStorePaths);
    } else {
        // Create the MappedFile queue (analyzed below)
        this.mappedFileQueue = new MappedFileQueue(storePath,
                defaultMessageStore.getMessageStoreConfig().getMappedFileSizeCommitLog(),
                defaultMessageStore.getAllocateMappedFileService());
    }
    this.defaultMessageStore = defaultMessageStore;
    if (FlushDiskType.SYNC_FLUSH == defaultMessageStore.getMessageStoreConfig().getFlushDiskType()) {
        // Synchronous flush service
        this.flushCommitLogService = new GroupCommitService();
    } else {
        // Asynchronous CommitLog flush service
        this.flushCommitLogService = new FlushRealTimeService();
    }
    // CommitLog commit service: created here, but only started when the off-heap
    // TransientStorePool is enabled in the configuration
    this.commitLogService = new CommitRealTimeService();
    // ...
}

public boolean load() {
    boolean result = this.mappedFileQueue.load();
    log.info("load commit log " + (result ? "OK" : "Failed"));
    return result;
}
```

Next, MappedFileQueue#load, whose main job is to create a MappedFile for every CommitLog file:

```java
public boolean load() {
    File dir = new File(this.storePath);
    // List all CommitLog files in the store directory
    File[] ls = dir.listFiles();
    if (ls != null) {
        return doLoad(Arrays.asList(ls));
    }
    return true;
}

public boolean doLoad(List<File> files) {
    // ascending order
    files.sort(Comparator.comparing(File::getName));
    for (File file : files) {
        if (file.length() != this.mappedFileSize) {
            log.warn(file + "\t" + file.length()
                    + " length not matched message store config value, ignore it");
            return true;
        }
        try {
            // One MappedFile per CommitLog file; each file takes 1 GB by default
            MappedFile mappedFile = new MappedFile(file.getPath(), mappedFileSize);
            // ...
            // Add the new MappedFile to the list held by the MappedFileQueue
            this.mappedFiles.add(mappedFile);
            log.info("load " + file.getPath() + " OK");
        } catch (IOException e) {
            log.error("load file " + file + " error", e);
            return false;
        }
    }
    return true;
}
```
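Sorting by File::getName is enough because all names are zero-padded to the same width, so lexicographic order equals the numeric order of the start offsets. The following sketch replays the doLoad logic on plain name/length pairs instead of real files; LoadOrder is a hypothetical helper, not RocketMQ code.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

class LoadOrder {
    // Returns the start offsets (fileFromOffset) of the files that would be loaded, in load order.
    // Like doLoad: sort by name, stop at the first file whose length differs from mappedFileSize.
    static List<Long> loadOrder(Map<String, Long> nameToLength, long mappedFileSize) {
        List<String> names = new ArrayList<>(nameToLength.keySet());
        names.sort(Comparator.naturalOrder()); // plain string order, same as File::getName
        List<Long> loaded = new ArrayList<>();
        for (String name : names) {
            if (nameToLength.get(name) != mappedFileSize) {
                break; // doLoad logs a warning and returns, skipping this file and all later ones
            }
            loaded.add(Long.parseLong(name)); // the name is the start offset, as in MappedFile#init
        }
        return loaded;
    }
}
```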

Next, how a MappedFile is created; the work happens mainly in its init method:

```java
private void init(final String fileName, final int fileSize) throws IOException {
    this.fileName = fileName;
    this.fileSize = fileSize;
    this.file = new File(fileName);
    // The file name is the file's starting physical offset
    this.fileFromOffset = Long.parseLong(this.file.getName());
    boolean ok = false;
    ensureDirOK(this.file.getParent());
    try {
        // Open the CommitLog file and get its FileChannel
        this.fileChannel = new RandomAccessFile(this.file, "rw").getChannel();
        // FileChannel#map creates a fixed-size off-heap memory mapping, mappedByteBuffer
        this.mappedByteBuffer = this.fileChannel.map(MapMode.READ_WRITE, 0, fileSize);
        TOTAL_MAPPED_VIRTUAL_MEMORY.addAndGet(fileSize);
        TOTAL_MAPPED_FILES.incrementAndGet();
        ok = true;
    } catch (FileNotFoundException e) {
        log.error("Failed to create file " + this.fileName, e);
        throw e;
    } catch (IOException e) {
        log.error("Failed to map file " + this.fileName, e);
        throw e;
    } finally {
        if (!ok && this.fileChannel != null) {
            this.fileChannel.close();
        }
    }
}
```

Starting the CommitLog:

```java
public void start() {
    // Start the flush thread
    this.flushCommitLogService.start();
    // Not entered by default (transientStorePoolEnable is false)
    if (defaultMessageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
        this.commitLogService.start();
    }
}
```

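About the CommitLog's transientStorePoolEnable parameter:

1) transientStorePoolEnable = true

When a message is appended, it is first written into writeBuffer (off-heap memory taken from the TransientStorePool), then periodically committed to the FileChannel, and then periodically flushed to disk.

2) transientStorePoolEnable = false (default)

When a message is appended, it is written directly into the MappedByteBuffer and then periodically flushed to disk.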
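What distinguishes mode 1 is that the message is not in the file at all until the commit step. The sketch below imitates the three stages with a direct ByteBuffer standing in for writeBuffer: write, commit (FileChannel.write), flush (FileChannel.force). WriteBufferDemo is a hypothetical class; RocketMQ's real commit logic also tracks committed positions and uses pooled buffers from TransientStorePool.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

class WriteBufferDemo {
    // Returns the file contents observed {before commit, after commit + flush}.
    static byte[][] writeCommitFlush(String text) {
        try {
            Path path = Files.createTempFile("write-buffer", ".bin");
            path.toFile().deleteOnExit();
            try (RandomAccessFile raf = new RandomAccessFile(path.toFile(), "rw");
                 FileChannel channel = raf.getChannel()) {
                // 1. append: the message goes into an off-heap buffer, not into the file
                ByteBuffer writeBuffer = ByteBuffer.allocateDirect(1024);
                writeBuffer.put(text.getBytes(StandardCharsets.UTF_8));
                byte[] beforeCommit = Files.readAllBytes(path); // still empty

                // 2. commit: copy the written range [0, position) into the file via the channel
                ByteBuffer toCommit = writeBuffer.duplicate(); // shares content, own position/limit
                toCommit.flip();
                channel.write(toCommit, 0);

                // 3. flush: force the channel's data to the storage device
                channel.force(false);
                byte[] afterFlush = Files.readAllBytes(path);
                return new byte[][] {beforeCommit, afterFlush};
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In mode 2 there is no separate commit: a put into the MappedByteBuffer already lands in the page cache, and only the flush remains.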

Next, how the flush thread works. This is the run method of FlushRealTimeService, the asynchronous flush service:

```java
public void run() {
    CommitLog.log.info(this.getServiceName() + " service started");
    // Loop until the service is stopped
    while (!this.isStopped()) {
        // Whether to flush on a fixed schedule; false (real-time mode) by default in RocketMQ 4.x
        boolean flushCommitLogTimed = CommitLog.this.defaultMessageStore.getMessageStoreConfig().isFlushCommitLogTimed();
        // Flush interval, 500 ms by default
        int interval = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushIntervalCommitLog();
        // Minimum number of pages to flush per round, 4 by default
        int flushPhysicQueueLeastPages = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushCommitLogLeastPages();
        // omitted...
        try {
            if (flushCommitLogTimed) {
                // Timed flush: always sleep for the fixed interval
                Thread.sleep(interval);
            } else {
                // Otherwise wait on RocketMQ's own CountDownLatch; the thread may be woken early to flush
                this.waitForRunning(interval);
            }
            // ...
            long begin = System.currentTimeMillis();
            // Flush the MappedFileQueue
            CommitLog.this.mappedFileQueue.flush(flushPhysicQueueLeastPages);
            long storeTimestamp = CommitLog.this.mappedFileQueue.getStoreTimestamp();
            if (storeTimestamp > 0) {
                CommitLog.this.defaultMessageStore.getStoreCheckpoint().setPhysicMsgTimestamp(storeTimestamp);
            }
            long past = System.currentTimeMillis() - begin;
            if (past > 500) {
                log.info("Flush data to disk costs {} ms", past);
            }
        } catch (Throwable e) {
            CommitLog.log.warn(this.getServiceName() + " service has exception. ", e);
            this.printFlushProgress();
        }
    }
    // On shutdown, flush whatever is left (flushLeastPages = 0), retrying a few times
    boolean result = false;
    for (int i = 0; i < RETRY_TIMES_OVER && !result; i++) {
        result = CommitLog.this.mappedFileQueue.flush(0);
        CommitLog.log.info(this.getServiceName() + " service shutdown, retry " + (i + 1) + " times " + (result ? "OK" : "Not OK"));
    }
    this.printFlushProgress();
    CommitLog.log.info(this.getServiceName() + " service end");
}
```
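The non-timed branch relies on waitForRunning(interval): the thread sleeps for at most interval milliseconds but can be woken early by wakeup(). RocketMQ's ServiceThread builds this on its own resettable CountDownLatch; the sketch below swaps in a plain Java monitor (wait/notify guarded by a notified flag) to show the same behavior. WakeableFlusher is a hypothetical class, and its counter stands in for mappedFileQueue.flush(...).

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class WakeableFlusher implements Runnable {
    private final long intervalMillis;
    private final AtomicInteger flushes = new AtomicInteger();
    private volatile boolean stopped = false;
    private boolean notified = false; // guarded by this

    WakeableFlusher(long intervalMillis) {
        this.intervalMillis = intervalMillis;
    }

    // Called by writers (compare submitFlushRequest calling wakeup()): end the current wait early
    synchronized void wakeup() {
        notified = true;
        notifyAll();
    }

    // Wait up to intervalMillis, returning early if wakeup() was called before or during the wait
    private synchronized void waitForRunning() throws InterruptedException {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(intervalMillis);
        while (!notified && !stopped) {
            long remaining = deadline - System.nanoTime();
            if (remaining <= 0) {
                break;
            }
            TimeUnit.NANOSECONDS.timedWait(this, remaining);
        }
        notified = false;
    }

    @Override
    public void run() {
        while (!stopped) {
            try {
                waitForRunning();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            flushes.incrementAndGet(); // stands in for mappedFileQueue.flush(flushLeastPages)
        }
    }

    void shutdown() {
        stopped = true;
        wakeup();
    }

    int flushCount() {
        return flushes.get();
    }
}
```

With a long interval, a flush still happens promptly after wakeup(), which is why asynchronous flushing in real-time mode usually persists data well before the 500 ms interval elapses.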

Here is the flush operation itself, MappedFileQueue#flush:

```java
public boolean flush(final int flushLeastPages) {
    boolean result = true;
    // Find the MappedFile that contains the last flush position (flushedWhere)
    MappedFile mappedFile = this.findMappedFileByOffset(this.flushedWhere, this.flushedWhere == 0);
    if (mappedFile != null) {
        long tmpTimeStamp = mappedFile.getStoreTimestamp();
        // Flush this file
        int offset = mappedFile.flush(flushLeastPages);
        // Update the global flush position; result is true only if it did not move
        long where = mappedFile.getFileFromOffset() + offset;
        result = where == this.flushedWhere;
        this.flushedWhere = where;
        if (0 == flushLeastPages) {
            this.storeTimestamp = tmpTimeStamp;
        }
    }
    return result;
}

// MappedFile#flush:
public int flush(final int flushLeastPages) {
    if (this.isAbleToFlush(flushLeastPages)) {
        // hold() takes a reference (reference counting, not a lock) so the file is not released during the flush
        if (this.hold()) {
            // Highest readable position of the buffer, i.e. the end of the valid data
            int value = getReadPosition();
            try {
                // Force the data to disk; both calls end up in native code.
                // fileChannel.force is used when data reached the file through the channel
                // (writeBuffer path), mappedByteBuffer.force otherwise
                if (writeBuffer != null || this.fileChannel.position() != 0) {
                    this.fileChannel.force(false);
                } else {
                    this.mappedByteBuffer.force();
                }
            } catch (Throwable e) {
                log.error("Error occurred when force data to disk.", e);
            }
            // Update the flush position
            this.flushedPosition.set(value);
            this.release();
        } else {
            log.warn("in flush, hold failed, flush offset = " + this.flushedPosition.get());
            this.flushedPosition.set(getReadPosition());
        }
    }
    return this.getFlushedPosition();
}
```
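MappedFile#flush only forces data when isAbleToFlush(flushLeastPages) allows it, and that check is not shown above. The sketch below is a page-threshold check in the same spirit: it assumes 4 KB OS pages, treats a full file as always flushable, and treats flushLeastPages = 0 as "flush anything new", which is what the shutdown path relies on. FlushThreshold is a hypothetical name, and the exact RocketMQ logic may differ between versions.

```java
class FlushThreshold {
    static final int OS_PAGE_SIZE = 4 * 1024; // assumed 4 KB OS page

    // Whether a flush round should call force(): always for a full file; otherwise only when at
    // least flushLeastPages page boundaries were crossed since the last flush (0 = anything new)
    static boolean isAbleToFlush(int flushedPosition, int writePosition, int fileSize, int flushLeastPages) {
        if (writePosition == fileSize) {
            return true;
        }
        if (flushLeastPages > 0) {
            return (writePosition / OS_PAGE_SIZE) - (flushedPosition / OS_PAGE_SIZE) >= flushLeastPages;
        }
        return writePosition > flushedPosition;
    }
}
```

For example, with the default of 4 pages, a file whose write position has moved from byte 0 to byte 12,288 (3 pages) is not flushed yet, while at byte 16,384 (4 pages) it is.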

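With the preparation done, let's follow a message write. The upstream business thread pool takes a message received by the transport layer and calls the store service to write it, which lands in DefaultMessageStore#asyncPutMessage: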

```java
public CompletableFuture<PutMessageResult> asyncPutMessage(MessageExtBrokerInner msg) {
    // validation...
    long beginTime = this.getSystemClock().now();
    // Write the message to the CommitLog; this call is asynchronous
    CompletableFuture<PutMessageResult> putResultFuture = this.commitLog.asyncPutMessage(msg);
    // ...
    return putResultFuture;
}

// CommitLog#asyncPutMessage:
public CompletableFuture<PutMessageResult> asyncPutMessage(final MessageExtBrokerInner msg) {
    // ...
    String topic = msg.getTopic();  // message topic
    int queueId = msg.getQueueId(); // id of the target queue
    // Transaction type; transactional messages are more involved and will be analyzed separately,
    // so we follow normal messages here
    final int tranType = MessageSysFlag.getTransactionValue(msg.getSysFlag());
    if (tranType == MessageSysFlag.TRANSACTION_NOT_TYPE
            || tranType == MessageSysFlag.TRANSACTION_COMMIT_TYPE) {
        // Non-transactional (or committed transactional) message
        if (msg.getDelayTimeLevel() > 0) {
            // A DelayTimeLevel greater than 0 means this is a delayed message
            if (msg.getDelayTimeLevel() > this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel()) {
                msg.setDelayTimeLevel(this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel());
            }
            // For a delayed message, switch the topic to RMQ_SYS_SCHEDULE_TOPIC
            topic = TopicValidator.RMQ_SYS_SCHEDULE_TOPIC;
            queueId = ScheduleMessageService.delayLevel2QueueId(msg.getDelayTimeLevel());
            // Save the original topic and queue; the message is redelivered to them when the delay expires
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_TOPIC, msg.getTopic());
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_QUEUE_ID, String.valueOf(msg.getQueueId()));
            msg.setPropertiesString(MessageDecoder.messageProperties2String(msg.getProperties()));
            // Set the delay topic and queue
            msg.setTopic(topic);
            msg.setQueueId(queueId);
        }
    }
    // ...
    // Encode the message and build its ByteBuffer
    PutMessageThreadLocal putMessageThreadLocal = this.putMessageThreadLocal.get();
    PutMessageResult encodeResult = putMessageThreadLocal.getEncoder().encode(msg);
    if (encodeResult != null) {
        return CompletableFuture.completedFuture(encodeResult);
    }
    msg.setEncodedBuff(putMessageThreadLocal.getEncoder().encoderBuffer);
    PutMessageContext putMessageContext = new PutMessageContext(generateKey(putMessageThreadLocal.getKeyBuilder(), msg));
    long elapsedTimeInLock = 0;
    MappedFile unlockMappedFile = null;
    putMessageLock.lock(); //spin or ReentrantLock ,depending on store config
    try {
        // Get the latest MappedFile
        MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile();
        long beginLockTimestamp = this.defaultMessageStore.getSystemClock().now();
        this.beginTimeInLock = beginLockTimestamp;
        msg.setStoreTimestamp(beginLockTimestamp);
        if (null == mappedFile || mappedFile.isFull()) {
            // No MappedFile yet, or the last one is full: create a new MappedFile
            mappedFile = this.mappedFileQueue.getLastMappedFile(0);
        }
        // ...
        // Append the message to the MappedFile
        result = mappedFile.appendMessage(msg, this.appendMessageCallback, putMessageContext);
        switch (result.getStatus()) {
            case PUT_OK:
                break;
            case END_OF_FILE:
                unlockMappedFile = mappedFile;
                // END_OF_FILE means there is not enough room left: create a new MappedFile and append again
                mappedFile = this.mappedFileQueue.getLastMappedFile(0);
                if (null == mappedFile) {
                    // XXX: warn and notify me
                    log.error("create mapped file2 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
                    beginTimeInLock = 0;
                    return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, result));
                }
                result = mappedFile.appendMessage(msg, this.appendMessageCallback, putMessageContext);
                break;
            case MESSAGE_SIZE_EXCEEDED:
            case PROPERTIES_SIZE_EXCEEDED:
                beginTimeInLock = 0;
                return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, result));
            case UNKNOWN_ERROR:
                beginTimeInLock = 0;
                return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result));
            default:
                beginTimeInLock = 0;
                return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result));
        }
        elapsedTimeInLock = this.defaultMessageStore.getSystemClock().now() - beginLockTimestamp;
        beginTimeInLock = 0;
    } finally {
        putMessageLock.unlock();
    }
    if (null != unlockMappedFile && this.defaultMessageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {
        this.defaultMessageStore.unlockMappedFile(unlockMappedFile);
    }
    // Build the result
    PutMessageResult putMessageResult = new PutMessageResult(PutMessageStatus.PUT_OK, result);
    // Submit the flush request
    CompletableFuture<PutMessageStatus> flushResultFuture = submitFlushRequest(result, msg);
    // Submit the request to replicate the message to the slave
    CompletableFuture<PutMessageStatus> replicaResultFuture = submitReplicaRequest(result, msg);
    return flushResultFuture.thenCombine(replicaResultFuture, (flushStatus, replicaStatus) -> {
        if (flushStatus != PutMessageStatus.PUT_OK) {
            putMessageResult.setPutMessageStatus(flushStatus);
        }
        if (replicaStatus != PutMessageStatus.PUT_OK) {
            putMessageResult.setPutMessageStatus(replicaStatus);
            if (replicaStatus == PutMessageStatus.FLUSH_SLAVE_TIMEOUT) {
                log.error("do sync transfer other node, wait return, but failed, topic: {} tags: {} client address: {}",
                        msg.getTopic(), msg.getTags(), msg.getBornHostNameString());
            }
        }
        return putMessageResult;
    });
}
```
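The delayed-message branch above is a pure rewrite of the message's routing fields. This sketch replays it on a hypothetical, stripped-down message class. It assumes the default delay-level configuration (18 levels), the schedule topic name SCHEDULE_TOPIC_XXXX as the value of TopicValidator.RMQ_SYS_SCHEDULE_TOPIC, the property keys REAL_TOPIC and REAL_QID behind PROPERTY_REAL_TOPIC and PROPERTY_REAL_QUEUE_ID, and that delayLevel2QueueId maps level n to queue n - 1. Transaction handling is left out.

```java
import java.util.HashMap;
import java.util.Map;

class DelayRewrite {
    static final String SCHEDULE_TOPIC = "SCHEDULE_TOPIC_XXXX"; // assumed value of RMQ_SYS_SCHEDULE_TOPIC
    static final int MAX_DELAY_LEVEL = 18;                        // assumed: default 18 delay levels

    // Hypothetical, simplified message: only the fields the rewrite touches
    static final class Msg {
        String topic;
        int queueId;
        int delayLevel;
        final Map<String, String> properties = new HashMap<>();

        Msg(String topic, int queueId, int delayLevel) {
            this.topic = topic;
            this.queueId = queueId;
            this.delayLevel = delayLevel;
        }
    }

    // Clamp the level, stash the real topic/queue, then redirect to the schedule topic,
    // one queue per delay level (queueId = level - 1)
    static void rewriteIfDelayed(Msg msg) {
        if (msg.delayLevel <= 0) {
            return; // ordinary message, stored as-is
        }
        if (msg.delayLevel > MAX_DELAY_LEVEL) {
            msg.delayLevel = MAX_DELAY_LEVEL;
        }
        msg.properties.put("REAL_TOPIC", msg.topic);
        msg.properties.put("REAL_QID", String.valueOf(msg.queueId));
        msg.topic = SCHEDULE_TOPIC;
        msg.queueId = msg.delayLevel - 1;
    }
}
```

Because every delay level gets its own queue, all messages in one queue share the same delay, so they expire in the order they were stored and the schedule service only has to look at the head of each queue.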

Next, appending the message: MappedFile#appendMessage, which delegates to appendMessagesInner:

```java
public AppendMessageResult appendMessagesInner(final MessageExt messageExt, final AppendMessageCallback cb,
        PutMessageContext putMessageContext) {
    assert messageExt != null;
    assert cb != null;
    // Position where the last write to this file ended
    int currentPos = this.wrotePosition.get();
    // If it is still smaller than the file size, the file is writable
    if (currentPos < this.fileSize) {
        // writeBuffer is null by default, so take a slice of mappedByteBuffer (a view sharing the
        // same memory) and position it at the current write position
        ByteBuffer byteBuffer = writeBuffer != null ? writeBuffer.slice() : this.mappedByteBuffer.slice();
        byteBuffer.position(currentPos);
        AppendMessageResult result;
        if (messageExt instanceof MessageExtBrokerInner) {
            // Single message: write it with doAppend
            result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos,
                    (MessageExtBrokerInner) messageExt, putMessageContext);
        } else if (messageExt instanceof MessageExtBatch) {
            // Batch message
            result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos,
                    (MessageExtBatch) messageExt, putMessageContext);
        } else {
            return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
        }
        // Advance the file's write position
        this.wrotePosition.addAndGet(result.getWroteBytes());
        this.storeTimestamp = result.getStoreTimestamp();
        return result;
    }
    log.error("MappedFile.appendMessage return null, wrotePosition: {} fileSize: {}", currentPos, this.fileSize);
    return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
}

// AppendMessageCallback#doAppend:
public AppendMessageResult doAppend(final long fileFromOffset, final ByteBuffer byteBuffer, final int maxBlank,
        final MessageExtBrokerInner msgInner, PutMessageContext putMessageContext) {
    // STORETIMESTAMP + STOREHOSTADDRESS + OFFSET
    // fileFromOffset is the start offset of this CommitLog file (its name); adding the position
    // inside the file gives the message's global physical offset
    long wroteOffset = fileFromOffset + byteBuffer.position();
    // Lazily compute the msgId from the store host address and the physical offset
    Supplier<String> msgIdSupplier = () -> {
        int sysflag = msgInner.getSysFlag();
        int msgIdLen = (sysflag & MessageSysFlag.STOREHOSTADDRESS_V6_FLAG) == 0 ? 4 + 4 + 8 : 16 + 4 + 8;
        ByteBuffer msgIdBuffer = ByteBuffer.allocate(msgIdLen);
        MessageExt.socketAddress2ByteBuffer(msgInner.getStoreHost(), msgIdBuffer);
        msgIdBuffer.clear();//because socketAddress2ByteBuffer flip the buffer
        msgIdBuffer.putLong(msgIdLen - 8, wroteOffset);
        return UtilAll.bytes2string(msgIdBuffer.array());
    };
    // Key of the consume queue (topic-queueId) this message belongs to
    String key = putMessageContext.getTopicQueueTableKey();
    // Offset of this message within its queue
    Long queueOffset = CommitLog.this.topicQueueTable.get(key);
    if (null == queueOffset) {
        queueOffset = 0L;
        CommitLog.this.topicQueueTable.put(key, queueOffset);
    }
    // Special handling for transactional messages, skipped here
    final int tranType = MessageSysFlag.getTransactionValue(msgInner.getSysFlag());
    switch (tranType) {
        // Prepared and Rollback message is not consumed, will not enter the
        // consumer queue
        case MessageSysFlag.TRANSACTION_PREPARED_TYPE:
        case MessageSysFlag.TRANSACTION_ROLLBACK_TYPE:
            queueOffset = 0L;
            break;
        case MessageSysFlag.TRANSACTION_NOT_TYPE:
        case MessageSysFlag.TRANSACTION_COMMIT_TYPE:
        default:
            break;
    }
    // The message's pre-encoded ByteBuffer
    ByteBuffer preEncodeBuffer = msgInner.getEncodedBuff();
    final int msgLen = preEncodeBuffer.getInt(0);
    // Check whether there is enough room left; if not, return END_OF_FILE
    if ((msgLen + END_FILE_MIN_BLANK_LENGTH) > maxBlank) {
        // ...
    }
    // Fill QUEUEOFFSET, PHYSICALOFFSET and so on into preEncodeBuffer; the encoder already left
    // these positions empty. Skip 1 TOTALSIZE, 2 MAGICCODE, 3 BODYCRC, 4 QUEUEID, 5 FLAG
    int pos = 4 + 4 + 4 + 4 + 4;
    // 6 QUEUEOFFSET
    preEncodeBuffer.putLong(pos, queueOffset);
    pos += 8;
    // 7 PHYSICALOFFSET
    preEncodeBuffer.putLong(pos, fileFromOffset + byteBuffer.position());
    int ipLen = (msgInner.getSysFlag() & MessageSysFlag.BORNHOST_V6_FLAG) == 0 ? 4 + 4 : 16 + 4;
    // 8 SYSFLAG, 9 BORNTIMESTAMP, 10 BORNHOST, 11 STORETIMESTAMP
    pos += 8 + 4 + 8 + ipLen;
    // refresh store time stamp in lock
    preEncodeBuffer.putLong(pos, msgInner.getStoreTimestamp());
    final long beginTimeMills = CommitLog.this.defaultMessageStore.now();
    // This is where the message is actually copied into the MappedByteBuffer
    byteBuffer.put(preEncodeBuffer);
    msgInner.setEncodedBuff(null);
    AppendMessageResult result = new AppendMessageResult(AppendMessageStatus.PUT_OK, wroteOffset, msgLen, msgIdSupplier,
            msgInner.getStoreTimestamp(), queueOffset, CommitLog.this.defaultMessageStore.now() - beginTimeMills);
    switch (tranType) {
        case MessageSysFlag.TRANSACTION_PREPARED_TYPE:
        case MessageSysFlag.TRANSACTION_ROLLBACK_TYPE:
            break;
        case MessageSysFlag.TRANSACTION_NOT_TYPE:
        case MessageSysFlag.TRANSACTION_COMMIT_TYPE:
            // The next update ConsumeQueue information
            CommitLog.this.topicQueueTable.put(key, ++queueOffset);
            break;
        default:
            break;
    }
    return result;
}
```
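The core of doAppend is: check the remaining room, patch the offsets that are only known under the lock into the pre-encoded buffer, and copy it into the mapped buffer. The sketch below reproduces those steps with a made-up record layout that keeps only the first seven fields (TOTALSIZE through PHYSICALOFFSET, at the same byte positions as above) plus a body. AppendSketch is hypothetical, and a heap ByteBuffer stands in for the slice of the MappedByteBuffer; the real code also writes an 8-byte end-of-file marker when it returns END_OF_FILE and fills in many more fields.

```java
import java.nio.ByteBuffer;

class AppendSketch {
    static final int END_FILE_MIN_BLANK_LENGTH = 4 + 4;     // room kept for the end-of-file marker
    static final int QUEUE_OFFSET_POS = 4 + 4 + 4 + 4 + 4;  // after TOTALSIZE, MAGICCODE, BODYCRC, QUEUEID, FLAG
    static final int PHYSICAL_OFFSET_POS = QUEUE_OFFSET_POS + 8;
    static final int HEADER_SIZE = PHYSICAL_OFFSET_POS + 8;

    // Pre-encode a record outside the lock, leaving QUEUEOFFSET and PHYSICALOFFSET as zeros
    static ByteBuffer encode(byte[] body) {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_SIZE + body.length);
        buf.putInt(HEADER_SIZE + body.length); // 1 TOTALSIZE
        buf.position(HEADER_SIZE);             // fields 2..7 stay zero for now
        buf.put(body);
        buf.flip();
        return buf;
    }

    // Append at file.position(); returns the physical offset, or -1 for END_OF_FILE
    static long append(long fileFromOffset, ByteBuffer file, ByteBuffer encoded, long queueOffset) {
        int msgLen = encoded.getInt(0);
        if (msgLen + END_FILE_MIN_BLANK_LENGTH > file.remaining()) {
            return -1; // not enough room: the caller rolls to a new file and appends again
        }
        long wroteOffset = fileFromOffset + file.position();
        encoded.putLong(QUEUE_OFFSET_POS, queueOffset);    // 6 QUEUEOFFSET
        encoded.putLong(PHYSICAL_OFFSET_POS, wroteOffset); // 7 PHYSICALOFFSET
        file.put(encoded);                                 // the actual copy into the file buffer
        return wroteOffset;
    }
}
```

Encoding happens before putMessageLock is taken, so the work done under the lock is reduced to a bounds check, two absolute putLong calls and one bulk copy.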

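At this point the message has been written successfully in asynchronous fashion. Strictly speaking, it has only been written into the mappedByteBuffer of the corresponding CommitLog file and will be flushed to disk at some later point. Now let's look at how the flush request is submitted, CommitLog#submitFlushRequest: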

```java
public CompletableFuture<PutMessageStatus> submitFlushRequest(AppendMessageResult result, MessageExt messageExt) {
    // Synchronous flush; not used by default
    if (FlushDiskType.SYNC_FLUSH == this.defaultMessageStore.getMessageStoreConfig().getFlushDiskType()) {
        final GroupCommitService service = (GroupCommitService) this.flushCommitLogService;
        if (messageExt.isWaitStoreMsgOK()) {
            GroupCommitRequest request = new GroupCommitRequest(result.getWroteOffset() + result.getWroteBytes(),
                    this.defaultMessageStore.getMessageStoreConfig().getSyncFlushTimeout());
            service.putRequest(request);
            return request.future();
        } else {
            service.wakeup();
            return CompletableFuture.completedFuture(PutMessageStatus.PUT_OK);
        }
    }
    // Asynchronous flush (the default)
    else {
        if (!this.defaultMessageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
            // transientStorePoolEnable is false by default; unless timed flushing is configured,
            // this wakes up the flush thread
            flushCommitLogService.wakeup();
        } else {
            commitLogService.wakeup();
        }
        return CompletableFuture.completedFuture(PutMessageStatus.PUT_OK);
    }
}
```
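In SYNC_FLUSH mode the returned future only completes after GroupCommitService has flushed past the request's end offset (wroteOffset + wroteBytes). The "group" in the name means that one flush can satisfy many waiting producers. Below is a deterministic sketch of that bookkeeping with a hypothetical GroupCommitSketch class; the real service runs on its own thread, swaps two request lists, and reports a timeout status if the flush does not get far enough in time.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.CompletableFuture;

class GroupCommitSketch {
    // One pending synchronous-flush request: done once everything up to nextOffset is on disk
    static final class Request {
        final long nextOffset; // wroteOffset + wroteBytes, as passed to GroupCommitRequest
        final CompletableFuture<Boolean> future = new CompletableFuture<>();

        Request(long nextOffset) {
            this.nextOffset = nextOffset;
        }
    }

    private final List<Request> pending = new ArrayList<>();
    private long flushedWhere = 0;

    // Producer side: register the request and get a future to wait on
    synchronized CompletableFuture<Boolean> putRequest(long nextOffset) {
        Request request = new Request(nextOffset);
        pending.add(request);
        return request.future;
    }

    // Flush side: one flush round, then complete every request that round covered
    synchronized void doCommit(long flushedUpTo) {
        flushedWhere = Math.max(flushedWhere, flushedUpTo); // stands in for mappedFileQueue.flush(0)
        for (Iterator<Request> it = pending.iterator(); it.hasNext(); ) {
            Request request = it.next();
            if (request.nextOffset <= flushedWhere) {
                request.future.complete(true);
                it.remove();
            }
        }
    }
}
```

A single flush reaching offset 250 releases both producers waiting for 100 and 200, which is how synchronous flushing amortizes the cost of each force() call across concurrent writers.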

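The flush thread was analyzed in the source above. Finally, let's look at ReputMessageService, which dispatches CommitLog entries to the ConsumeQueue and IndexFile, running one dispatch round about every 1 millisecond: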

```java
public void run() {
    DefaultMessageStore.log.info(this.getServiceName() + " service started");
    while (!this.isStopped()) {
        try {
            Thread.sleep(1);
            this.doReput();
        } catch (Exception e) {
            DefaultMessageStore.log.warn(this.getServiceName() + " service has exception. ", e);
        }
    }
    DefaultMessageStore.log.info(this.getServiceName() + " service end");
}
```

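doReput calls each Dispatcher in turn to dispatch the message to the ConsumeQueue and the IndexFile. Both are also written through a MappedByteBuffer, just like the CommitLog. This part is fairly long, so it will be covered in detail in a later article.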
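The overall effect of ReputMessageService can be sketched without any files: producers only append to one log shared by all topics, and a separate doReput round later walks everything written since the last round and hands each entry to every registered dispatcher. ReputSketch is hypothetical; it keeps the CommitLog, the ConsumeQueues and the index as in-memory collections and runs single-threaded, whereas RocketMQ runs doReput on its own thread about every millisecond.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ReputSketch {
    // Hypothetical decoded CommitLog entry: only the fields the two dispatchers need
    static final class DispatchRequest {
        final String topic;
        final int queueId;
        final long commitLogOffset;
        final int size;
        final String key; // message key, may be null

        DispatchRequest(String topic, int queueId, long commitLogOffset, int size, String key) {
            this.topic = topic;
            this.queueId = queueId;
            this.commitLogOffset = commitLogOffset;
            this.size = size;
            this.key = key;
        }
    }

    interface Dispatcher {
        void dispatch(DispatchRequest request);
    }

    final List<DispatchRequest> commitLog = new ArrayList<>();     // one log shared by all topics
    final Map<String, List<Long>> consumeQueues = new HashMap<>(); // "topic-queueId" -> CommitLog offsets
    final Map<String, Long> index = new HashMap<>();              // key -> CommitLog offset (IndexFile's role)
    final List<Dispatcher> dispatcherList = new ArrayList<>();
    private int reputFrom = 0;           // how far dispatching has got (RocketMQ tracks reputFromOffset)
    private long nextPhysicalOffset = 0;

    ReputSketch() {
        // Same order as DefaultMessageStore registers them: ConsumeQueue first, then the index
        dispatcherList.add(r -> consumeQueues
                .computeIfAbsent(r.topic + "-" + r.queueId, k -> new ArrayList<>())
                .add(r.commitLogOffset));
        dispatcherList.add(r -> {
            if (r.key != null) {
                index.put(r.key, r.commitLogOffset);
            }
        });
    }

    // Producer path: only append to the shared log, no index work
    long append(String topic, int queueId, int size, String key) {
        long offset = nextPhysicalOffset;
        commitLog.add(new DispatchRequest(topic, queueId, offset, size, key));
        nextPhysicalOffset += size;
        return offset;
    }

    // One doReput round: hand every entry appended since the last round to each dispatcher
    void doReput() {
        while (reputFrom < commitLog.size()) {
            DispatchRequest request = commitLog.get(reputFrom++);
            for (Dispatcher dispatcher : dispatcherList) {
                dispatcher.dispatch(request);
            }
        }
    }
}
```

Until a doReput round has run, new messages are in the CommitLog but not yet visible through any ConsumeQueue, which is why the ConsumeQueue always lags slightly behind the CommitLog.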

Summary

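RocketMQ reads and writes its files mainly through MappedByteBuffer. Using NIO's FileChannel, it maps physical files on disk directly into the process's user-space address space. This mmap approach avoids the overhead of traditional I/O, which copies file data back and forth between a buffer in the kernel address space and a buffer in the application's address space, and it turns file operations into plain memory operations, which greatly improves read and write efficiency. Because it relies on memory mapping, RocketMQ stores its files in fixed-size structures, which makes it convenient to map a whole file into memory at once.

With asynchronous flushing, RocketMQ takes full advantage of the OS PageCache: as soon as a message has been written into the PageCache, a success ACK can be returned to the Producer. Flushing is done by a background thread, which lowers read/write latency and raises the MQ's performance and throughput.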