Hadoop 3.2.4 Pseudo-Distributed Installation on a Local Machine

Preface

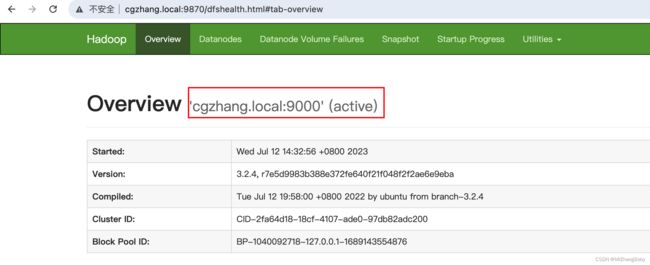

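The steps are largely the same as for the 2.x releases. As far as I can tell, apart from being more capable, the main visible change is the default HTTP port. That caught me off guard: when verifying the install I kept trying port 50070 and thought I had botched the installation, but the NameNode web UI now listens on port 9870. Enough chatter, on to the steps.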
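The port change can be captured in a small shell helper. This is only a sketch: `namenode_ui_port` is a hypothetical function name, the hostname is the one used in this post's config files, and the two numbers are the stock NameNode web UI defaults (they can be overridden in hdfs-site.xml).

```shell
# Default NameNode web UI port by Hadoop major version (2.x: 50070, 3.x: 9870).
# namenode_ui_port is a hypothetical helper, not part of Hadoop.
namenode_ui_port() {
  case "${1%%.*}" in
    2) echo 50070 ;;
    3) echo 9870 ;;
    *) echo "unknown Hadoop version: $1" >&2; return 1 ;;
  esac
}

host="cgzhang.local"   # hostname taken from this post's configs
echo "NameNode UI: http://${host}:$(namenode_ui_port 3.2.4)"
```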

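Configure passwordless SSH login

See my earlier post: https://blog.csdn.net/MrZhangBaby/article/details/128329959?spm=1001.2014.3001.5502

The other prerequisites (hostname, JDK, environment variables, and so on) are not covered in detail here.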
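For readers who would rather not follow the link, the usual localhost setup looks roughly like this. It is a sketch, not necessarily the linked post's exact steps: `ensure_ssh_key` and `authorize_key` are hypothetical helper names, and the key path is the OpenSSH default.

```shell
# Generate an RSA key pair with an empty passphrase, unless one already exists.
ensure_ssh_key() {
  ssh_key="${1:-$HOME/.ssh/id_rsa}"
  mkdir -p "$(dirname "$ssh_key")" && chmod 700 "$(dirname "$ssh_key")"
  [ -f "$ssh_key" ] || ssh-keygen -t rsa -P '' -f "$ssh_key"
}

# Append a public key to an authorized_keys file, skipping it if already present.
authorize_key() {
  pub_file="$1"; auth_file="$2"
  touch "$auth_file"
  grep -qxF -e "$(cat "$pub_file")" "$auth_file" || cat "$pub_file" >> "$auth_file"
  chmod 600 "$auth_file"
}

# Usage (left commented so that pasting the block changes nothing):
# ensure_ssh_key && authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
# ssh localhost true   # should return without asking for a password
```

Keeping `authorize_key` idempotent means the snippet can be re-run without piling up duplicate entries.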

Download the package

Download link: https://downloads.apache.org/hadoop/common/hadoop-3.2.4/

Extract and adjust the configuration files

Extract the archive into the current directory

tar -xzvf ~/Downloads/hadoop-3.2.4.tar.gz -C .
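The later commands (`hdfs`, `start-all.sh`) need to be on the PATH, which this post does not spell out. A minimal sketch of the environment variables, assuming the archive was extracted to the location that appears in the config files below; adjust the path if yours differs:

```shell
# Assumed install location, taken from the paths used in this post's config files.
export HADOOP_HOME="/Users/zhangchenguang/software/hadoop-3.2.4"
# The configuration files edited in the next steps live here.
export HADOOP_CONF_DIR="$HADOOP_HOME/etc/hadoop"
# bin holds hdfs/yarn/mapred; sbin holds start-all.sh and the other daemon scripts.
export PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"
```

These lines would typically go into a shell profile such as ~/.zshrc or ~/.bash_profile.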

Adjust the configuration files

hadoop-env.sh

# Add the JDK install directory as the first line
export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_261.jdk/Contents/Home

yarn-env.sh

# Add the JDK install directory as the first line
export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_261.jdk/Contents/Home
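The JDK path above is specific to my machine. One way to find and sanity-check the value on yours is sketched below; `has_java` is a hypothetical helper, and `/usr/libexec/java_home` is the macOS tool for locating installed JDKs (on other systems the sketch falls back to whatever `JAVA_HOME` is already set to).

```shell
# Returns 0 if the given directory looks like a JDK home (has an executable bin/java).
has_java() {
  [ -n "$1" ] && [ -x "$1/bin/java" ]
}

# Ask macOS for a 1.8 JDK if the helper tool exists; otherwise reuse $JAVA_HOME.
if [ -x /usr/libexec/java_home ]; then
  candidate=$(/usr/libexec/java_home -v 1.8 2>/dev/null) || candidate=""
else
  candidate="${JAVA_HOME:-}"
fi

if has_java "$candidate"; then
  # This is the line to paste at the top of hadoop-env.sh and yarn-env.sh.
  echo "export JAVA_HOME=$candidate"
else
  echo "no usable JDK found; set JAVA_HOME by hand" >&2
fi
```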

core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://cgzhang.local:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/Users/zhangchenguang/software/hadoop-3.2.4/tmp</value>
  </property>
</configuration>
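A typo in these files usually only surfaces when a daemon fails to start, so it can help to read a value back right after editing. The sketch below assumes a well-formed file with `<name>` and `<value>` each on one line; `get_prop` is a hypothetical helper, not a Hadoop command. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.

```shell
# Print the value of one property from a Hadoop *-site.xml file.
# $1 = config file, $2 = property name. Prints nothing if the property is absent.
get_prop() {
  awk -v key="$2" '
    /<name>/ {
      name = $0
      sub(/.*<name>/, "", name); sub(/<\/name>.*/, "", name)
    }
    /<value>/ && name == key {
      val = $0
      sub(/.*<value>/, "", val); sub(/<\/value>.*/, "", val)
      print val; exit
    }
  ' "$1"
}

# Usage (path assumes you are in Hadoop's etc/hadoop directory):
# get_prop core-site.xml fs.defaultFS
```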

hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/Users/zhangchenguang/software/hadoop-3.2.4/datas/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/Users/zhangchenguang/software/hadoop-3.2.4/datas/data</value>
  </property>
</configuration>

mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
  </property>
</configuration>

yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>cgzhang.local</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME,PATH,LANG,TZ,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>

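workers configuration

# Write this machine's hostname into the workers file
echo `hostname` > workers

# Resulting contents of the workers file
cgzhang.local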

Startup and verification

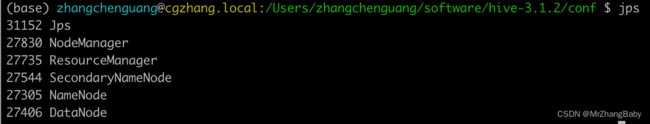

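Startup and command-line checks

# Format the NameNode; this automatically creates the required directories and files
hdfs namenode -format

# Start all services
start-all.sh

# Check that the processes have started
jps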

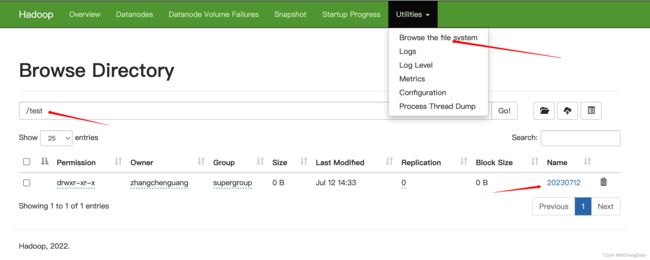
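Rather than reading the `jps` list by eye, the check can be scripted. A small sketch, assuming the five daemons that a pseudo-distributed setup started with `start-all.sh` normally runs; `missing_daemons` is a hypothetical helper name.

```shell
# Reads `jps` output on stdin ("<pid> <name>" per line) and prints every
# expected Hadoop daemon that is NOT in the list. No output means all are up.
missing_daemons() {
  awk '
    BEGIN { n = split("NameNode DataNode SecondaryNameNode ResourceManager NodeManager", want, " ") }
    { seen[$2] = 1 }
    END { for (i = 1; i <= n; i++) if (!(want[i] in seen)) print want[i] }
  '
}

# Usage:
# jps | missing_daemons
```

Matching on the exact process name (the second column) avoids counting SecondaryNameNode as NameNode, which a plain substring search would do.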

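Viewing the web UI

# Show help for the file system commands
hdfs dfs -help

# Create a directory as a test
hdfs dfs -mkdir -p /test/20230712

# Verify that it was created (-lsr is deprecated in favor of -ls -R)
hdfs dfs -ls -R /

That completes the installation. Now you can poke around this mysterious Hadoop 3.x.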