[CS231n assignment 2022] Assignment 3 - Part 1, RNN for Image Captioning

Preface

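- Blog homepage: 睡晚不猿序程

- ⌚ First published: 2022.8.7

- ⏰ Last updated: 2023.2.8

- This article is an original work by 睡晚不猿序程, first published on CSDN

- I'm still a beginner, so if anything in this article is wrong or unclear, please send me a message. Many thanks! orz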

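Related articles:

- [CS231n assignment 2022] Assignment 2 - Part 1, initialization and forward/backward propagation of fully connected networks

- [CS231n assignment 2022] Assignment 2 - Part 2, optimizers, batch normalization and layer normalization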

Contents

- Preface

- 1. Introduction

- 2. RNN_Captioning

- 2.1 COCO Dataset

- 2.2 Inspect the Data

- 2.3 Vanilla RNN: Step Forward

- 2.4 Vanilla RNN: Step Backward

- 2.5 Vanilla RNN: Forward

- 2.6 Vanilla RNN: Backward

- 2.7 Word Embedding: Forward

- 2.8 Word Embedding: Backward

- 2.9 Temporal Softmax Loss

- 2.10 RNN for Image Captioning

- 2.11 Overfit RNN Captioning Model on Small Data

- 2.12 RNN Sampling at Test Time

- 3. Summary and Preview

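1. Introduction

My write-up for Assignment 2 is only half done, so it has been delayed (sorry!).

Meanwhile, I wrote this Assignment 3 post while working through the assignment and actually finished it, so I'm publishing it first!

The next post will definitely be Assignment 2. My apologies, everyone.

This post covers:

- Implementing an RNN and using it for image captioning

Let's get started!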

2. RNN_Captioning

2.1 COCO Dataset

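COCO is a widely used image captioning dataset, with about 80,000 training images and 40,000 validation images.

To lower the hardware requirements, the image features have already been extracted for us.

The feature dimension was reduced from 4096 to 512 using PCA.

The reduced features are stored in HDF5 (.h5) files, not in the URL text files.

The raw images are not included, but their links are stored in train2014_urls.txt and val2014_urls.txt, so you can download them yourself to have a look.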

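Captions: processing raw text is tedious, so this has been done for us as well and we can focus on building the network. Each word has a unique integer ID, so a caption can be represented as a sequence of integers. The word-to-ID mapping is stored in coco2014_vocab.json, and the function decode_captions converts a NumPy array of integer IDs back into strings.

Tokens: a few special tokens have been added to the vocabulary. `<START>` and `<END>` mark the beginning and end of every caption, rare words are replaced by `<UNK>`, and shorter captions are padded with `<NULL>` after `<END>` so that captions of different lengths fit into one minibatch. No loss is computed at `<NULL>` positions.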
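To make the ID-to-word mapping concrete, here is a minimal sketch of what decoding a caption involves. The function `decode_ids` and the toy vocabulary are hypothetical, made up for illustration; the assignment's own decode_captions may differ in its details. The sketch assumes `<NULL>` is the padding token and `<END>` ends a caption.

```python
import numpy as np


def decode_ids(caption_ids, idx_to_word):
    """Hypothetical decoder: turn a 1-D array of word IDs into a string.

    Skips <NULL> padding and stops right after the <END> token.
    """
    words = []
    for idx in caption_ids:
        word = idx_to_word[int(idx)]
        if word == "<NULL>":
            continue  # padding carries no meaning
        words.append(word)
        if word == "<END>":
            break  # anything after <END> is padding
    return " ".join(words)


# Toy vocabulary, made up for illustration
idx_to_word = {0: "<NULL>", 1: "<START>", 2: "<END>", 3: "a", 4: "cat", 5: "sits"}
caption = np.array([1, 3, 4, 5, 2, 0, 0])
print(decode_ids(caption, idx_to_word))  # <START> a cat sits <END>
```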

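We can load the COCO data with the load_coco_data function.

It returns a dictionary holding the captions, image features, URLs and vocabulary (for example data['idx_to_word']).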

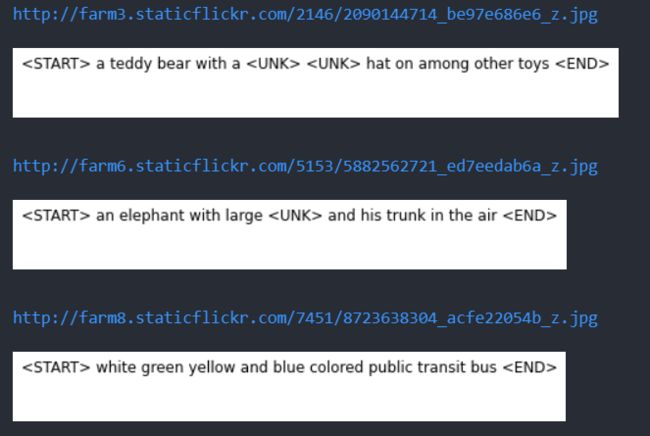

2.2 Inspect the Data

Let's take a look at the data first.

Note that the code in the cell needs the following modification:

```python
# Sample a minibatch and show the images and captions.
# If you get an error, the URL just no longer exists, so don't worry!
# You can re-sample as many times as you want.
import matplotlib.pyplot as plt
from cs231n.coco_utils import decode_captions, sample_coco_minibatch

batch_size = 3

# `data` is the dictionary returned by load_coco_data earlier in the notebook
captions, features, urls = sample_coco_minibatch(data, batch_size=batch_size)
for i, (caption, url) in enumerate(zip(captions, urls)):
    # plt.imshow(image_from_url(url))  # this line blocks when run, so we just print the URL instead
    print(url)
    plt.figure(figsize=(5, 0.5))
    plt.axis('off')
    caption_str = decode_captions(caption, data['idx_to_word'])
    plt.title(caption_str)
    plt.show()
```

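This prints the URL of each sampled image together with its caption.

Next we implement the RNN. For the image captioning task, cs231n/rnn_layers.py contains the different layers needed to build an RNN, and cs231n/classifiers/rnn.py uses these layers to build an image captioning model.

We will first implement the layers in cs231n/rnn_layers.py.

LSTM is a variant of the RNN; we won't deal with LSTM for now.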

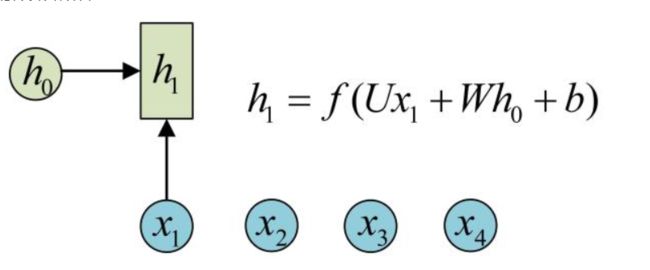

2.3 Vanilla RNN: Step Forward

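First we implement the forward pass for a single timestep.

In the RNN forward pass, we multiply the input x by the weight Wx, add the previous hidden state h multiplied by the weight Wh, add the bias b, and finally apply tanh to get the hidden state for this timestep.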
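The same step as a formula, using the shapes from the docstring below ($x_t \in \mathbb{R}^{N\times D}$, $h_{t-1} \in \mathbb{R}^{N\times H}$, $W_x \in \mathbb{R}^{D\times H}$, $W_h \in \mathbb{R}^{H\times H}$, and $b \in \mathbb{R}^{H}$ broadcast across the $N$ rows):

$$
h_t = \tanh\left(x_t W_x + h_{t-1} W_h + b\right), \qquad h_t \in \mathbb{R}^{N \times H}
$$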

```python
import numpy as np


def rnn_step_forward(x, prev_h, Wx, Wh, b):
    """Run the forward pass for a single timestep of a vanilla RNN using a tanh activation function.

    The input data has dimension D, the hidden state has dimension H,
    and the minibatch is of size N.

    Inputs:
    - x: Input data for this timestep, of shape (N, D)
    - prev_h: Hidden state from previous timestep, of shape (N, H)
    - Wx: Weight matrix for input-to-hidden connections, of shape (D, H)
    - Wh: Weight matrix for hidden-to-hidden connections, of shape (H, H)
    - b: Biases of shape (H,)

    Returns a tuple of:
    - next_h: Next hidden state, of shape (N, H)
    - cache: Tuple of values needed for the backward pass.
    """
    next_h, cache = None, None
    ##############################################################################
    # TODO: Implement a single forward step for the vanilla RNN. Store the next  #
    # hidden state and any values you need for the backward pass in the next_h   #
    # and cache variables respectively.                                          #
    ##############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    hidden_x = x.dot(Wx)  # (N, H)
    hidden_prev_h = prev_h.dot(Wh)  # (N, H)
    next_h = np.tanh(hidden_x + hidden_prev_h + b.reshape(1, -1))
    cache = (x, prev_h, Wx, Wh, next_h)  # next_h is reused for the tanh derivative

    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    ##############################################################################
    #                               END OF YOUR CODE                             #
    ##############################################################################
    return next_h, cache
```

Code explanation

The forward pass is straightforward: just follow the steps above.

Next, let's verify our result.


The result is correct.

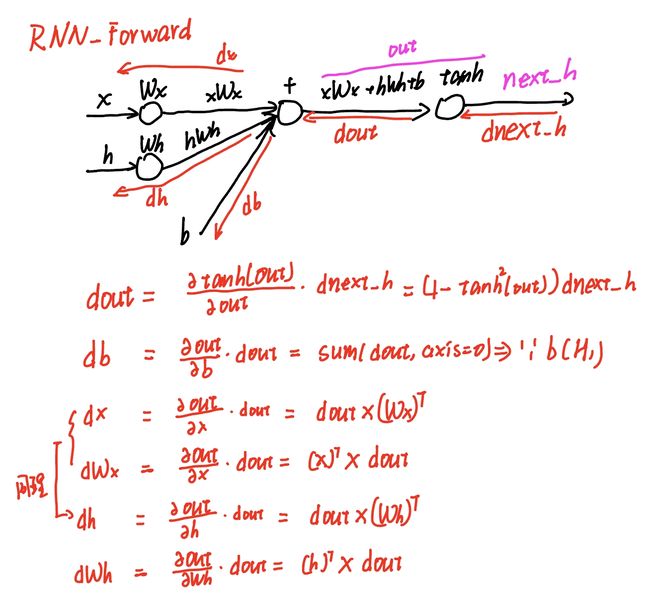

2.4 Vanilla RNN: Step Backward

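Next we implement the backward pass for a single timestep. The RNN backward pass is fairly simple: differentiating carefully, one step at a time, gives the answer.

Differentiating the forward formula gives the backward-pass formulas, and we can follow them to compute each gradient flowing back.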
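Spelled out, with $a$ introduced here as a name for the pre-activation and $\partial L/\partial h_t$ playing the role of dnext_h, the derivation is as follows (a sketch that matches the shapes in the docstring below):

$$
\begin{aligned}
a &= x_t W_x + h_{t-1} W_h + b, \qquad h_t = \tanh(a)\\
\frac{\partial L}{\partial a} &= \frac{\partial L}{\partial h_t} \odot \left(1 - h_t^{2}\right)\\
\frac{\partial L}{\partial x_t} &= \frac{\partial L}{\partial a} W_x^{\top}, \qquad \frac{\partial L}{\partial W_x} = x_t^{\top} \frac{\partial L}{\partial a}\\
\frac{\partial L}{\partial h_{t-1}} &= \frac{\partial L}{\partial a} W_h^{\top}, \qquad \frac{\partial L}{\partial W_h} = h_{t-1}^{\top} \frac{\partial L}{\partial a}\\
\frac{\partial L}{\partial b} &= \sum_{n=1}^{N} \left(\frac{\partial L}{\partial a}\right)_{n,:}
\end{aligned}
$$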

```python
def rnn_step_backward(dnext_h, cache):
    """Backward pass for a single timestep of a vanilla RNN.

    Inputs:
    - dnext_h: Gradient of loss with respect to next hidden state, of shape (N, H)
    - cache: Cache object from the forward pass

    Returns a tuple of:
    - dx: Gradients of input data, of shape (N, D)
    - dprev_h: Gradients of previous hidden state, of shape (N, H)
    - dWx: Gradients of input-to-hidden weights, of shape (D, H)
    - dWh: Gradients of hidden-to-hidden weights, of shape (H, H)
    - db: Gradients of bias vector, of shape (H,)
    """
    dx, dprev_h, dWx, dWh, db = None, None, None, None, None
    ##############################################################################
    # TODO: Implement the backward pass for a single step of a vanilla RNN.      #
    #                                                                            #
    # HINT: For the tanh function, you can compute the local derivative in terms #
    # of the output value from tanh.                                             #
    ##############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    x, prev_h, Wx, Wh, next_h = cache
    dout = (1 - next_h**2) * dnext_h  # (N, H); the derivative of tanh is 1 - tanh^2
    db = np.sum(dout, axis=0)  # (N, H) -> (H,)
    dx = dout.dot(Wx.T)  # (N, H) x (H, D) -> (N, D)
    dWx = x.T.dot(dout)  # (D, N) x (N, H) -> (D, H)
    dprev_h = dout.dot(Wh.T)  # (N, H) x (H, H) -> (N, H)
    dWh = prev_h.T.dot(dout)  # (H, N) x (N, H) -> (H, H)

    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    ##############################################################################
    #                               END OF YOUR CODE                             #
    ##############################################################################
    return dx, dprev_h, dWx, dWh, db
```

Code explanation

Just follow the formulas step by step and you're done!

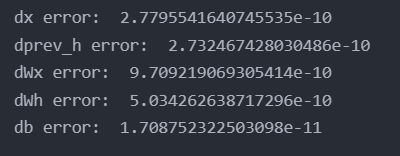

Next, we can check whether our implementation is correct.

The check passes, so the implementation is correct.

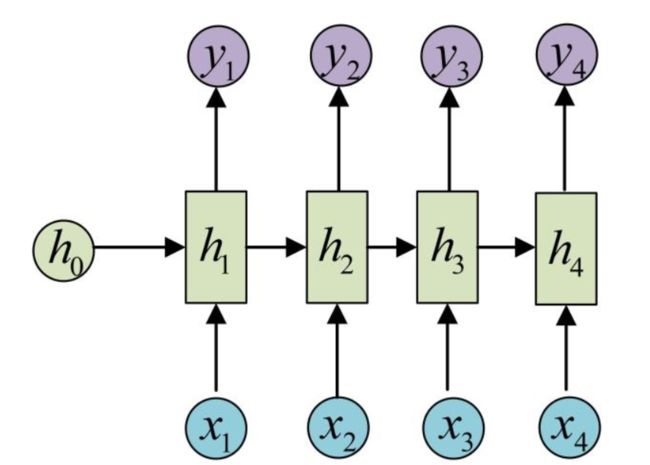

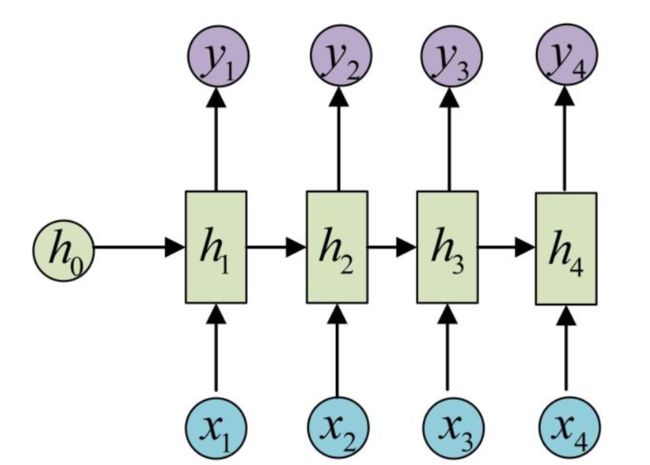
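To double-check the formulas independently of the notebook, here is a small self-contained sketch that compares the analytic gradients with centered finite differences. The helper names (`numeric_grad`, `rel_error`) and the random sizes are assumptions for illustration, and the two step functions are compact re-implementations of the code above rather than the assignment file itself.

```python
import numpy as np


def rnn_step_forward(x, prev_h, Wx, Wh, b):
    next_h = np.tanh(x @ Wx + prev_h @ Wh + b)
    return next_h, (x, prev_h, Wx, Wh, next_h)


def rnn_step_backward(dnext_h, cache):
    x, prev_h, Wx, Wh, next_h = cache
    da = (1 - next_h**2) * dnext_h  # backprop through tanh
    return da @ Wx.T, da @ Wh.T, x.T @ da, prev_h.T @ da, da.sum(axis=0)


def numeric_grad(f, arr, dout, h=1e-5):
    """Centered differences of sum(f() * dout) w.r.t. arr (perturbed in place, then restored)."""
    grad = np.zeros_like(arr)
    for idx in np.ndindex(*arr.shape):
        old = arr[idx]
        arr[idx] = old + h
        plus = np.sum(f() * dout)
        arr[idx] = old - h
        minus = np.sum(f() * dout)
        arr[idx] = old
        grad[idx] = (plus - minus) / (2 * h)
    return grad


def rel_error(a, b):
    return np.max(np.abs(a - b) / np.maximum(1e-8, np.abs(a) + np.abs(b)))


rng = np.random.default_rng(0)
N, D, H = 4, 5, 6
x = 0.5 * rng.standard_normal((N, D))
prev_h = 0.5 * rng.standard_normal((N, H))
Wx = 0.5 * rng.standard_normal((D, H))
Wh = 0.5 * rng.standard_normal((H, H))
b = 0.5 * rng.standard_normal(H)
dnext_h = rng.standard_normal((N, H))

_, cache = rnn_step_forward(x, prev_h, Wx, Wh, b)
dx, dprev_h, dWx, dWh, db = rnn_step_backward(dnext_h, cache)

f = lambda: rnn_step_forward(x, prev_h, Wx, Wh, b)[0]
errors = {
    name: rel_error(analytic, numeric_grad(f, param, dnext_h))
    for name, analytic, param in [
        ("dx", dx, x), ("dprev_h", dprev_h, prev_h),
        ("dWx", dWx, Wx), ("dWh", dWh, Wh), ("db", db, b),
    ]
}
for name, err in errors.items():
    print(name, err)  # every error should be tiny
```

Scaling the random inputs by 0.5 keeps tanh away from saturation, so the finite differences stay well conditioned.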

2.5 Vanilla RNN: Forward

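Now that we have the forward and backward passes for a single timestep, we combine these pieces into an RNN that can process a whole sequence.

Open cs231n/rnn_layers.py and implement the function rnn_forward, using the rnn_step_forward function we just wrote.

First, recall the structure of the RNN forward pass.

Then read the API of rnn_forward(x, h0, Wx, Wh, b) to see what we need to do: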


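Inputs:

- x: input data, shape (N, T, D)

- h0: initial hidden state, shape (N, H)

- Wx: weights for x, shape (D, H)

- Wh: weights for h, shape (H, H)

- b: bias, shape (H,)

Returns:

- h: hidden states at all timesteps, shape (N, T, H)

- cache: intermediate values needed for the backward pass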

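Approach

- The input x is laid out as (N, T, D), so we transpose it to (T, N, D) to loop over time; likewise for h.

- Running T timesteps produces T hidden states, so the initial hidden state h0 is not stored in h.

- Use a for loop to compute the hidden states one step at a time.

Code walkthrough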

```python
def rnn_forward(x, h0, Wx, Wh, b):
    """Run a vanilla RNN forward on an entire sequence of data.

    We assume an input sequence composed of T vectors, each of dimension D. The RNN uses a hidden
    size of H, and we work over a minibatch containing N sequences. After running the RNN forward,
    we return the hidden states for all timesteps.

    Inputs:
    - x: Input data for the entire timeseries, of shape (N, T, D)
    - h0: Initial hidden state, of shape (N, H)
    - Wx: Weight matrix for input-to-hidden connections, of shape (D, H)
    - Wh: Weight matrix for hidden-to-hidden connections, of shape (H, H)
    - b: Biases of shape (H,)

    Returns a tuple of:
    - h: Hidden states for the entire timeseries, of shape (N, T, H)
    - cache: Values needed in the backward pass
    """
    h, cache = None, None
    ##############################################################################
    # TODO: Implement forward pass for a vanilla RNN running on a sequence of    #
    # input data. You should use the rnn_step_forward function that you defined  #
    # above. You can use a for loop to help compute the forward pass.            #
    ##############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    cache = []
    N, T, D = x.shape
    H = h0.shape[1]
    h = np.zeros((T, N, H))  # h: (T, N, H)
    x_trans = np.swapaxes(x, 0, 1)  # x_trans: (T, N, D)
    for i in range(T):
        x_now = x_trans[i]  # (N, D)
        if i == 0:
            h_now = h0
        else:
            h_now = h[i - 1, :, :]
        next_h, cache_now = rnn_step_forward(x_now, h_now, Wx, Wh, b)
        h[i, :, :] = next_h
        cache.append(cache_now)  # one cache tuple per timestep
    h = np.swapaxes(h, 0, 1)  # back to (N, T, H)

    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    ##############################################################################
    #                               END OF YOUR CODE                             #
    ##############################################################################
    return h, cache
```

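- Use np.swapaxes() to swap the dimensions.

- Initialize cache as an empty list and append each timestep's cache after computing it.

- Finally, remember to swap the axes of h back.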

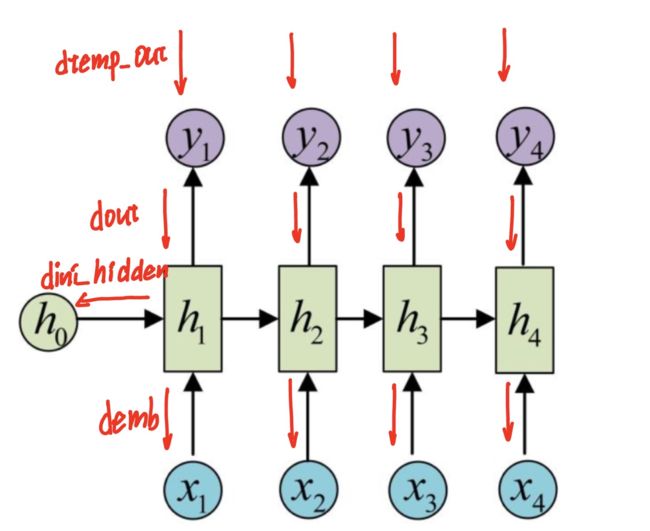
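As a design note, swapping axes is not strictly necessary: slicing x[:, t, :] picks timestep t directly. Below is a hedged sketch comparing the two layouts; both function names are hypothetical, and the step function is a compact re-implementation of the single-step formula from 2.3, not the assignment file.

```python
import numpy as np


def rnn_step_forward(x, prev_h, Wx, Wh, b):
    next_h = np.tanh(x @ Wx + prev_h @ Wh + b)
    return next_h, (x, prev_h, Wx, Wh, next_h)


def rnn_forward_indexing(x, h0, Wx, Wh, b):
    """Hypothetical variant: index the time axis directly instead of swapping axes."""
    N, T, _ = x.shape
    h = np.zeros((N, T, h0.shape[1]))
    cache = []
    prev_h = h0
    for t in range(T):
        prev_h, step_cache = rnn_step_forward(x[:, t, :], prev_h, Wx, Wh, b)
        h[:, t, :] = prev_h
        cache.append(step_cache)
    return h, cache


def rnn_forward_swapaxes(x, h0, Wx, Wh, b):
    """Same computation in the (T, N, ...) layout used in the article."""
    N, T, _ = x.shape
    x_time_first = np.swapaxes(x, 0, 1)  # (T, N, D)
    h = np.zeros((T, N, h0.shape[1]))  # (T, N, H)
    prev_h = h0
    for t in range(T):
        prev_h, _ = rnn_step_forward(x_time_first[t], prev_h, Wx, Wh, b)
        h[t] = prev_h
    return np.swapaxes(h, 0, 1)  # back to (N, T, H)


rng = np.random.default_rng(0)
N, T, D, H = 2, 3, 4, 5
x = rng.standard_normal((N, T, D))
h0 = rng.standard_normal((N, H))
Wx = rng.standard_normal((D, H))
Wh = rng.standard_normal((H, H))
b = rng.standard_normal(H)

h_a, cache_a = rnn_forward_indexing(x, h0, Wx, Wh, b)
h_b = rnn_forward_swapaxes(x, h0, Wx, Wh, b)
print(h_a.shape, np.allclose(h_a, h_b))  # (2, 3, 5) True
```

Both versions perform exactly the same arithmetic; the indexing version simply avoids the two transposes.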

2.6 Vanilla RNN: Backward

Next comes the backward pass. As before, start by reading the API of rnn_backward(dh, cache):


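- dh: upstream gradients of all hidden states, shape (N, T, H)

  - Note: dh only contains the gradients produced by the individual loss at each timestep, not the gradients passed between timesteps, so we still need a for loop (calling rnn_step_backward) to compute those.

- dx: gradient of the input, shape (N, T, D)

- dh0: gradient of the initial hidden state, shape (N, H)

- dWx: gradient of the weight Wx, shape (D, H)

- dWh: gradient of the weight Wh, shape (H, H)

- db: gradient of the bias b, shape (H,)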

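Approach

- This works just like backpropagation in a feedforward network (DNN): unrolled over time, an RNN can be seen as a deep network whose layers share the same weights.

- Look closely at the gradient flow. Each hidden state receives gradient from two directions: from its own output at the current timestep, and from the next timestep.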

```python
def rnn_backward(dh, cache):
    """Compute the backward pass for a vanilla RNN over an entire sequence of data.

    Inputs:
    - dh: Upstream gradients of all hidden states, of shape (N, T, H)

    NOTE: 'dh' contains the upstream gradients produced by the
    individual loss functions at each timestep, *not* the gradients
    being passed between timesteps (which you'll have to compute yourself
    by calling rnn_step_backward in a loop).

    Returns a tuple of:
    - dx: Gradient of inputs, of shape (N, T, D)
    - dh0: Gradient of initial hidden state, of shape (N, H)
    - dWx: Gradient of input-to-hidden weights, of shape (D, H)
    - dWh: Gradient of hidden-to-hidden weights, of shape (H, H)
    - db: Gradient of biases, of shape (H,)
    """
    dx, dh0, dWx, dWh, db = None, None, None, None, None
    ##############################################################################
    # TODO: Implement the backward pass for a vanilla RNN running an entire      #
    # sequence of data. You should use the rnn_step_backward function that you   #
    # defined above. You can use a for loop to help compute the backward pass.   #
    ##############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    N, T, H = dh.shape  # (N, T, H)
    D = cache[0][0].shape[1]  # cache[t] = (x, prev_h, Wx, Wh, next_h); x has shape (N, D)
    dx = np.zeros((N, T, D))
    dWx = np.zeros((D, H))
    dWh = np.zeros((H, H))
    db = np.zeros(H)
    dh_i = np.zeros((N, H))  # gradient flowing in from timestep i + 1 (none for the last step)
    for i in range(T - 1, -1, -1):
        # "+", not "=": h_i gets gradient from its own loss AND from timestep i + 1
        dh_i += dh[:, i, :]
        dx_i, dh_i, dWx_i, dWh_i, db_i = rnn_step_backward(dh_i, cache[i])
        dx[:, i, :] = dx_i
        # the weights are shared across timesteps, so their gradients are summed
        dWx += dWx_i
        dWh += dWh_i
        db += db_i
    dh0 = dh_i  # after timestep 0, dh_i is the gradient w.r.t. h0

    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    ##############################################################################
    #                               END OF YOUR CODE                             #
    ##############################################################################
    return dx, dh0, dWx, dWh, db
```

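Code explanation

- Note the variable dh_i we define: at the start of each iteration it holds the gradient passed back from the next timestep, and we immediately add the gradient from this timestep's own output (its independent loss). Because h_t feeds into both the output at timestep t and the next hidden state, the chain rule adds the two contributions, so we simply sum them and then backpropagate.

- After the last iteration (timestep 0), dh_i is exactly the dh0 we want.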
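In equations, indexing timesteps from 1 to $T$, with $L = \sum_t L_t$ the total loss and $h_0$ the initial state, the accumulation performed by dh_i reads as below. The first term corresponds to dh[:, t, :] and the second to the dprev_h returned by rnn_step_backward at step $t+1$; because $W_x$, $W_h$ and $b$ are shared, their per-step gradients are summed.

$$
\begin{aligned}
\frac{\partial L}{\partial h_T} &= \frac{\partial L_T}{\partial h_T}\\
\frac{\partial L}{\partial h_t} &= \frac{\partial L_t}{\partial h_t} + \frac{\partial L}{\partial h_{t+1}}\,\frac{\partial h_{t+1}}{\partial h_t}, \qquad t = 1, \dots, T-1\\
\frac{\partial L}{\partial h_0} &= \frac{\partial L}{\partial h_1}\,\frac{\partial h_1}{\partial h_0}\\
\frac{\partial L}{\partial W_x} &= \sum_{t=1}^{T} \left.\frac{\partial L}{\partial W_x}\right|_{\text{step } t} \quad (\text{likewise for } W_h \text{ and } b)
\end{aligned}
$$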

2.7 Word Embedding: Forward

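In deep learning systems we usually represent each word with a vector: every word in the vocabulary is associated with a vector, and these vectors are learned jointly with the rest of the system.

Next we implement word_embedding_forward in cs231n/rnn_layers.py to convert words (integer indices) into vectors.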

```python
def word_embedding_forward(x, W):
    """Forward pass for word embeddings.

    We operate on minibatches of size N where
    each sequence has length T. We assume a vocabulary of V words, assigning each
    word to a vector of dimension D.

    Inputs:
    - x: Integer array of shape (N, T) giving indices of words. Each element idx
      of x must be in the range 0 <= idx < V.
    - W: Weight matrix of shape (V, D) giving word vectors for all words.

    Returns a tuple of:
    - out: Array of shape (N, T, D) giving word vectors for all input words.
    - cache: Values needed for the backward pass
    """
    out, cache = None, None
    ##############################################################################
    # TODO: Implement the forward pass for word embeddings.                      #
    #                                                                            #
    # HINT: This can be done in one line using NumPy's array indexing.           #
    ##############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    out, cache = W[x], (x, W)  # integer-array indexing: out[n, t] = W[x[n, t]]

    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    ##############################################################################
    #                               END OF YOUR CODE                             #
    ##############################################################################
    return out, cache
```

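Code explanation

Word embedding:

A word embedding converts a word into a vector representation; **“word2vec”** is a well-known method for learning such vectors.

With one-hot encoding, each word is a vector whose length equals the vocabulary size: with 10,000 words, each vector has shape (10000,). This representation also ignores the relationships between words and treats every word as equally different from every other.

“word2vec”-style embeddings instead learn a mapping that turns a word into a vector (vec = f(word)), and we then use that vector to represent the word.

Here we learn this mapping ourselves: the minibatch has size N and contains word indices, and each row of the array W is the vector representation of one word.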

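- The hint says this can be done in one line with NumPy array indexing, so let's explain that in detail.

- Integer-array indexing: you can put an array inside []. The result generally has the same shape as the index array, if we treat each indexed row of a multidimensional array as a single "object" (this problem is an example, explained below).

  - W (V, D): the vector representations of V words; each row is one word vector.

  - x (N, T): a minibatch; each training input has T words, and each entry is that word's row index idx in W.

  - W[x]: index W with the array x. Suppose x is array([[0, 1, 2, 3]]), i.e. an array of shape (1, 4).

    - The result has the same shape as x, namely array([[W[0], W[1], W[2], W[3]]]).

    - Each W[idx] is a vector of length D, which we treat as a single "object"; that is why we say the result has the same shape as x.

    - Counting the vector dimension as well, W[x] has shape (N, T, D).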
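A tiny check of these shapes (the vocabulary size, dimension and index values are made up):

```python
import numpy as np

V, D = 5, 3
W = np.arange(V * D, dtype=float).reshape(V, D)  # row i is the vector of word i
x = np.array([[0, 1, 2, 3],
              [4, 4, 0, 1]])                      # (N, T) = (2, 4) word indices

out = W[x]                                       # integer-array indexing
print(out.shape)                                 # (2, 4, 3)
print(np.array_equal(out[1, 0], W[4]))           # True: position (1, 0) holds word 4
```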

2.8 Word Embedding: Backward

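We implemented the forward pass above; now let's implement the backward pass.

As usual, start by reading the hints for word_embedding_backward(dout, cache):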


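- We cannot backpropagate into the words, since they are integer indices (positions in the vocabulary), so we only return the gradient of the embedding matrix.

- Hint: look up the function np.add.at.

- Inputs

  - dout: upstream gradient, shape (N, T, D)

  - cache: values from the forward pass

- Output

  - dW: gradient of the word embedding matrix, shape (V, D)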

np.add.at

I found this blogger's explanation very clear; here is the reference: https://blog.csdn.net/qq120633269/article/details/110039585

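First, note that indexing with a tuple and indexing with a list give different results:

- With a tuple, the tuple is treated as a single coordinate, one index per axis (a[(i, j)] is the same as a[i, j]).

- With several lists (as many as the array has dimensions), the first list gives the coordinates along the first axis, the second list along the second axis, and so on.

- With fewer lists than the array has dimensions, the remaining dimensions are selected in full.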
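A quick illustration of the three cases (toy array, made-up indices):

```python
import numpy as np

a = np.arange(6).reshape(2, 3)   # [[0, 1, 2],
                                 #  [3, 4, 5]]

print(a[(1, 2)])          # tuple -> one coordinate, same as a[1, 2]: 5
print(a[[0, 1]].shape)    # one list -> rows 0 and 1, other axis fully selected: (2, 3)
print(a[[0, 1], [2, 0]])  # two lists -> coordinates (0, 2) and (1, 0): [2 3]
```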

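What np.add.at(a, b, c) does:

- It performs a[b] += c *unbuffered*: c is broadcast to the shape of a[b], and each of its elements is added back into a at the corresponding index position, one at a time.

- So when an index appears several times in b, all of its contributions accumulate. Plain a[b] += c is buffered instead, and a repeated index is incremented only once. This is exactly why the hint points to np.add.at: a word can appear more than once in a minibatch.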
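The difference only shows up when indices repeat. A minimal example with made-up indices:

```python
import numpy as np

idx = np.array([0, 0, 2])        # index 0 appears twice

buffered = np.zeros(3)
buffered[idx] += 1               # duplicates collapse: index 0 is incremented once
print(buffered)                  # [1. 0. 1.]

unbuffered = np.zeros(3)
np.add.at(unbuffered, idx, 1)    # every occurrence is applied
print(unbuffered)                # [2. 0. 1.]
```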

```python
def word_embedding_backward(dout, cache):
    """Backward pass for word embeddings.

    We cannot back-propagate into the words
    since they are integers, so we only return gradient for the word embedding
    matrix.

    HINT: Look up the function np.add.at

    Inputs:
    - dout: Upstream gradients of shape (N, T, D)
    - cache: Values from the forward pass

    Returns:
    - dW: Gradient of word embedding matrix, of shape (V, D)
    """
    dW = None
    ##############################################################################
    # TODO: Implement the backward pass for word embeddings.                     #
    #                                                                            #
    # Note that words can appear more than once in a sequence.                   #
    # HINT: Look up the function np.add.at                                       #
    ##############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    x, W = cache  # x: (N, T), W: (V, D)
    dW = np.zeros_like(W)  # (V, D)
    np.add.at(dW, x, dout)  # dW[x]: (N, T, D), dout: (N, T, D)

    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    ##############################################################################
    #                               END OF YOUR CODE                             #
    ##############################################################################
    return dW
```

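Code explanation

Following the explanation above, np.add.at(dW, x, dout) pairs every index x[n, t] with the gradient dout[n, t] (dW[x] has shape (N, T, D), the same as dout) and adds that gradient into row x[n, t] of dW. Rows of words that appear several times accumulate all of their gradients, which yields exactly the dW of shape (V, D) we want.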
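As a sanity check, the one-liner can be compared with an explicit double loop that adds one gradient at a time. word_embedding_backward_loop is a hypothetical reference helper, and the sizes and indices are made up:

```python
import numpy as np


def word_embedding_backward_loop(dout, x, V):
    """Hypothetical reference version: accumulate one (n, t) gradient at a time."""
    N, T, D = dout.shape
    dW = np.zeros((V, D))
    for n in range(N):
        for t in range(T):
            dW[x[n, t]] += dout[n, t]  # row x[n, t] receives the gradient of that position
    return dW


rng = np.random.default_rng(0)
N, T, V, D = 2, 4, 5, 3
x = np.array([[0, 3, 3, 1],
              [3, 2, 0, 0]])  # words 0 and 3 repeat; word 4 never appears
dout = rng.standard_normal((N, T, D))

dW_add_at = np.zeros((V, D))
np.add.at(dW_add_at, x, dout)  # the one-line version used in the article

print(np.allclose(dW_add_at, word_embedding_backward_loop(dout, x, V)))  # True
print(np.allclose(dW_add_at[4], 0))  # True: an unused word gets zero gradient
```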

2.9 Temporal Softmax Loss

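In an RNN language model, at every timestep we produce a score for each word in the vocabulary. We know the ground-truth word for each timestep, so we use a softmax loss to compute the loss and gradient at each timestep. We sum the losses over time and average them over the minibatch.

One complication: captions of different images can have different lengths, so we use a mask to select the positions that contribute to the loss.

Since the softmax loss works almost the same way as in Assignment 1, it has already been implemented for us; we just need to read the code.

First, let's read the description of temporal_softmax_loss(x, y, mask, verbose=False):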


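- Assume a vocabulary of V words, a sequence length of T (T words per sentence), and a minibatch of size N.

- x (shape (N, T, V)) gives the score of every vocabulary word at every timestep, and y (shape (N, T)) gives the index of the word that should be output at each timestep.

- A cross-entropy loss is computed at each timestep; the losses are summed over time and averaged over the minibatch.

- mask (shape (N, T)) tells the algorithm which positions contribute to the loss and which do not.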

Softmax loss

Let's first recall the softmax loss:

$$
L_i = -f_{y_i} + \log\sum_j e^{f_j} \qquad \text{or equivalently} \qquad L_i = -\log\frac{e^{f_{y_i}}}{\sum_j e^{f_j}}
$$

Here $f$ denotes the scores; backpropagating through the first form is much simpler.

Differentiating the $\log$ term gives the softmax probability of each class, and for the correct class we additionally subtract 1.
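Written out, with $p_j$ denoting the softmax probability of class $j$:

$$
p_j = \frac{e^{f_j}}{\sum_k e^{f_k}}, \qquad \frac{\partial L_i}{\partial f_j} = p_j - \mathbb{1}[j = y_i]
$$

In the temporal version every position $(n, t)$ contributes one such term, scaled by mask[n, t] / N because the loss is masked and averaged over the minibatch.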

Code walkthrough

```python
import numpy as np


def temporal_softmax_loss(x, y, mask, verbose=False):
    """A temporal version of softmax loss for use in RNNs.

    We assume that we are making predictions over a vocabulary of size V for each timestep of a
    timeseries of length T, over a minibatch of size N. The input x gives scores for all vocabulary
    elements at all timesteps, and y gives the indices of the ground-truth element at each timestep.
    We use a cross-entropy loss at each timestep, summing the loss over all timesteps and averaging
    across the minibatch.

    As an additional complication, we may want to ignore the model output at some timesteps, since
    sequences of different length may have been combined into a minibatch and padded with NULL
    tokens. The optional mask argument tells us which elements should contribute to the loss.

    Inputs:
    - x: Input scores, of shape (N, T, V)
    - y: Ground-truth indices, of shape (N, T) where each element is in the range
         0 <= y[i, t] < V
    - mask: Boolean array of shape (N, T) where mask[i, t] tells whether or not
      the scores at x[i, t] should contribute to the loss.

    Returns a tuple of:
    - loss: Scalar giving loss
    - dx: Gradient of loss with respect to scores x.
    """
    N, T, V = x.shape

    x_flat = x.reshape(N * T, V)  # (N, T, V) -> (N*T, V)
    y_flat = y.reshape(N * T)  # (N, T) -> (N*T,)
    mask_flat = mask.reshape(N * T)  # (N, T) -> (N*T,)

    probs = np.exp(x_flat - np.max(x_flat, axis=1, keepdims=True))  # shift scores for numerical stability
    probs /= np.sum(probs, axis=1, keepdims=True)  # softmax: probability of every word, probs (N*T, V)
    # loss: -log(probability of the correct word), summed over unmasked positions, averaged over N
    loss = -np.sum(mask_flat * np.log(probs[np.arange(N * T), y_flat])) / N
    dx_flat = probs.copy()
    dx_flat[np.arange(N * T), y_flat] -= 1  # subtract 1 for the correct class
    dx_flat /= N  # the loss is averaged over N, so the gradient is too
    dx_flat *= mask_flat[:, None]  # [:, None] makes mask a column (N*T, 1); masked rows become 0

    if verbose:
        print("dx_flat: ", dx_flat.shape)

    dx = dx_flat.reshape(N, T, V)

    return loss, dx
```
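A quick sanity check of the masking: with all-zero scores every word has probability 1/V, so each unmasked position costs log V, while masked positions contribute neither loss nor gradient. The sketch re-implements the same computation (without the verbose flag) so it runs on its own; the toy sizes, labels and mask are made up.

```python
import numpy as np


def temporal_softmax_loss(x, y, mask):
    # Same computation as the code above, without the verbose flag
    N, T, V = x.shape
    x_flat, y_flat, mask_flat = x.reshape(N * T, V), y.reshape(N * T), mask.reshape(N * T)
    probs = np.exp(x_flat - np.max(x_flat, axis=1, keepdims=True))
    probs /= np.sum(probs, axis=1, keepdims=True)
    loss = -np.sum(mask_flat * np.log(probs[np.arange(N * T), y_flat])) / N
    dx_flat = probs.copy()
    dx_flat[np.arange(N * T), y_flat] -= 1
    dx_flat /= N
    dx_flat *= mask_flat[:, None]
    return loss, dx_flat.reshape(N, T, V)


N, T, V = 2, 3, 4
x = np.zeros((N, T, V))                  # all-zero scores -> uniform probabilities 1/V
y = np.array([[1, 2, 0], [3, 3, 0]])
mask = np.array([[True, True, False],    # last position of each caption is padding
                 [True, True, False]])

loss, dx = temporal_softmax_loss(x, y, mask)
print(np.isclose(loss, 2 * np.log(V)))  # True: 4 unmasked positions * log(V), divided by N = 2
print(np.all(dx[:, 2, :] == 0))         # True: masked positions get no gradient
```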

2.10 RNN for Image Captioning

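We have now built all the layers needed for an image captioning RNN, so we can put them together. Open cs231n/classifiers/rnn.py and look at the CaptioningRNN class.

We need to implement the forward and backward passes in the loss function; only the cell_type='rnn' part is required for now.

Code explanation

Reading the provided parts carefully first lets us finish the required parts faster.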

- Class description

```python
class CaptioningRNN:
    """
    A CaptioningRNN produces captions from image features using a recurrent
    neural network.

    The RNN receives input vectors of size D, has a vocab size of V, works on
    sequences of length T, has an RNN hidden dimension of H, uses word vectors
    of dimension W, and operates on minibatches of size N.

    Notation:
    - D: input (image feature) dimension
    - V: vocabulary size (number of words)
    - T: sequence length (words per caption)
    - H: hidden state dimension
    - W: word vector dimension
    - N: minibatch size

    Note that we don't use any regularization for the CaptioningRNN.
    """
```

- Initialization

```python
def __init__(
    self,
    word_to_idx,
    input_dim=512,
    wordvec_dim=128,
    hidden_dim=128,
    cell_type="rnn",
    dtype=np.float32,
):
    """
    Construct a new CaptioningRNN instance.

    Inputs:
    - word_to_idx: A dictionary giving the vocabulary. It contains V entries,
      and maps each string to a unique integer in the range [0, V).
    - input_dim: Dimension D of input image feature vectors.
    - wordvec_dim: Dimension W of word vectors.
    - hidden_dim: Dimension H for the hidden state of the RNN.
    - cell_type: What type of RNN to use; either 'rnn' or 'lstm'.
    - dtype: numpy datatype to use; use float32 for training and float64 for
      numeric gradient checking.
    """
    if cell_type not in {"rnn", "lstm"}:
        raise ValueError('Invalid cell_type "%s"' % cell_type)

    self.cell_type = cell_type
    self.dtype = dtype
    self.word_to_idx = word_to_idx
    self.idx_to_word = {i: w for w, i in word_to_idx.items()}
    self.params = {}

    vocab_size = len(word_to_idx)

    self._null = word_to_idx["<NULL>"]
    self._start = word_to_idx.get("<START>", None)
    self._end = word_to_idx.get("<END>", None)

    # Initialize word vectors
    self.params["W_embed"] = np.random.randn(vocab_size, wordvec_dim)
    self.params["W_embed"] /= 100

    # Initialize CNN -> hidden state projection parameters
    self.params["W_proj"] = np.random.randn(input_dim, hidden_dim)
    self.params["W_proj"] /= np.sqrt(input_dim)
    self.params["b_proj"] = np.zeros(hidden_dim)

    # Initialize parameters for the RNN
    dim_mul = {"lstm": 4, "rnn": 1}[cell_type]
    self.params["Wx"] = np.random.randn(wordvec_dim, dim_mul * hidden_dim)
    self.params["Wx"] /= np.sqrt(wordvec_dim)
    self.params["Wh"] = np.random.randn(hidden_dim, dim_mul * hidden_dim)
    self.params["Wh"] /= np.sqrt(hidden_dim)
    self.params["b"] = np.zeros(dim_mul * hidden_dim)

    # Initialize output to vocab weights (hidden state -> word scores)
    self.params["W_vocab"] = np.random.randn(hidden_dim, vocab_size)
    self.params["W_vocab"] /= np.sqrt(hidden_dim)
    self.params["b_vocab"] = np.zeros(vocab_size)

    # Cast parameters to correct dtype
    for k, v in self.params.items():
        self.params[k] = v.astype(self.dtype)
```

Next comes the part we implement ourselves. Let's read the API:

```python
def loss(self, features, captions):
    """
    Compute training-time loss for the RNN. We input image features and
    ground-truth captions for those images, and use an RNN (or LSTM) to compute
    loss and gradients on all parameters.

    Inputs:
    - features: Input image features, of shape (N, D)
    - captions: Ground-truth captions; an integer array of shape (N, T + 1) where
      each element is in the range 0 <= y[i, t] < V

    Returns a tuple of:
    - loss: Scalar loss
    - grads: Dictionary of gradients parallel to self.params
    """
    # Cut captions into two pieces: captions_in has everything but the last word
    # and will be input to the RNN; captions_out has everything but the first
    # word and this is what we will expect the RNN to generate. These are offset
    # by one relative to each other because the RNN should produce word (t+1)
    # after receiving word t. The first element of captions_in will be the START
    # token, and the first element of captions_out will be the first word.
    captions_in = captions[:, :-1]  # input words
    captions_out = captions[:, 1:]  # target words

    # You'll need this (mask out the <NULL> padding)
    mask = captions_out != self._null

    # Weight and bias for the affine transform from image features to initial
    # hidden state
    W_proj, b_proj = self.params["W_proj"], self.params["b_proj"]

    # Word embedding matrix
    W_embed = self.params["W_embed"]

    # Input-to-hidden, hidden-to-hidden, and biases for the RNN
    Wx, Wh, b = self.params["Wx"], self.params["Wh"], self.params["b"]

    # Weight and bias for the hidden-to-vocab transformation.
    W_vocab, b_vocab = self.params["W_vocab"], self.params["b_vocab"]

    loss, grads = 0.0, {}
```

Now we implement the forward pass. The assignment kindly provides hints, so we just follow them step by step.

```python
    ############################################################################
    # TODO: Implement the forward and backward passes for the CaptioningRNN.   #
    # In the forward pass you will need to do the following:                   #
    # (1) Use an affine transformation to compute the initial hidden state     #
    #     from the image features. This should produce an array of shape (N, H)#
    # (2) Use a word embedding layer to transform the words in captions_in     #
    #     from indices to vectors, giving an array of shape (N, T, W).         #
    # (3) Use either a vanilla RNN or LSTM (depending on self.cell_type) to    #
    #     process the sequence of input word vectors and produce hidden state  #
    #     vectors for all timesteps, producing an array of shape (N, T, H).    #
    # (4) Use a (temporal) affine transformation to compute scores over the    #
    #     vocabulary at every timestep using the hidden states, giving an      #
    #     array of shape (N, T, V).                                            #
    # (5) Use (temporal) softmax to compute loss using captions_out, ignoring  #
    #     the points where the output word is <NULL> using the mask above.     #
    #                                                                          #
    #                                                                          #
    # Do not worry about regularizing the weights or their gradients!          #
    #                                                                          #
    # In the backward pass you will need to compute the gradient of the loss   #
    # with respect to all model parameters. Use the loss and grads variables   #
    # defined above to store loss and gradients; grads[k] should give the     #
    # gradients for self.params[k].                                            #
    #                                                                          #
    # Note also that you are allowed to make use of functions from layers.py   #
    # in your implementation, if needed.                                       #
    ############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    # Compute the initial hidden state from the input image features
    ini_hidden_state, ini_cache = affine_forward(features, W_proj, b_proj)
    # Word embedding: turn the input word indices into word vectors
    word_emb_in, word_emb_cache = word_embedding_forward(captions_in, W_embed)
    # Run the RNN forward along the time axis (horizontally in the figure)
    hidden_state, hidden_cache = rnn_forward(word_emb_in, ini_hidden_state, Wx, Wh, b)
    # Compute the output scores at every timestep ("upward" in the figure)
    temp_out, temp_cache = temporal_affine_forward(hidden_state, W_vocab, b_vocab)
    # Compute the loss at every timestep; keep the score gradient separate
    # so that it does not overwrite the `grads` dictionary
    loss, dtemp_out = temporal_softmax_loss(temp_out, captions_out, mask)

    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    ############################################################################
    #                             END OF YOUR CODE                             #
    ############################################################################

    return loss, grads
```

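Backward pass

Next we implement the backward pass. We show the figure here once more to make the following code easier to understand.

Code explanation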

```python
    # Compute the loss at every timestep, along with its gradient w.r.t. the scores
    loss, dtemp_out = temporal_softmax_loss(temp_out, captions_out, mask)

    # Backward pass
    dout, grads["W_vocab"], grads["b_vocab"] = temporal_affine_backward(dtemp_out, temp_cache)
    demb, dini_hidden, grads["Wx"], grads["Wh"], grads["b"] = rnn_backward(dout, hidden_cache)
    grads["W_embed"] = word_embedding_backward(demb, word_emb_cache)
    dfeatures, grads["W_proj"], grads["b_proj"] = affine_backward(dini_hidden, ini_cache)
```

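- temporal_affine_backward: the gradient flows "top to bottom": the error between the predicted and target words is backpropagated from the scores to the hidden states.

- rnn_backward: the gradient flows both "backward in time" and "top to bottom" (two directions).

- word_embedding_backward: again "top to bottom": the gradient computed for the RNN inputs flows into the word embeddings.

- affine_backward: the gradient that reaches the initial hidden state (after flowing "backward in time") is passed on to the projection weights W_proj and b_proj.

It's a pretty loopy process, which is why it's called a recurrent neural network!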

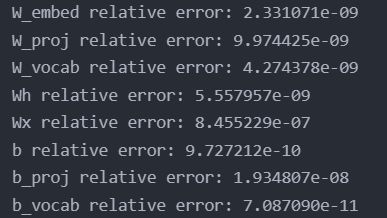

Code verification

Next we run the code in the Jupyter notebook to verify that our implementation works.

Congratulations, task complete!

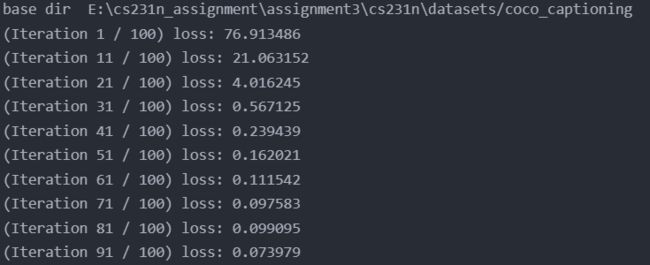

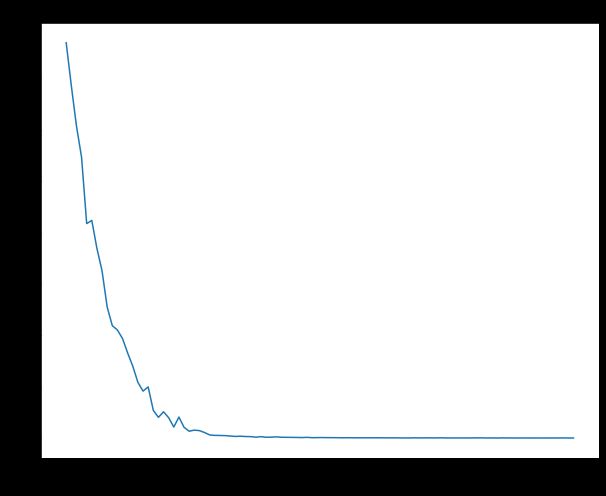

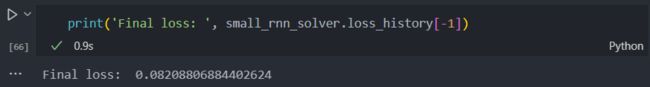

2.11 Overfit RNN Captioning Model on Small Data

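Just like the Solver class we used earlier to train image classification models, in this assignment we train the captioning model with the CaptioningSolver class. Open cs231n/captioning_solver.py and read through CaptioningSolver; you'll find it very similar.

Once you are familiar with the API, run the cell below to make sure your model can overfit a small sample of 100 training examples. The final loss should be below 0.1.

Code verification

The final loss is indeed below 0.1.

Finally, the training loss is plotted.

The model overfits the small dataset, which shows that it works.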

2.12 RNN Sampling at Test Time

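Victory is in sight: this is the last small task.

Unlike image classification models, image captioning models behave very differently at training time and at test time.

At training time we have the ground-truth captions, so at every timestep we feed the ground-truth word to the RNN as input instead of its own prediction (this is known as teacher forcing).

At test time we pick a word from the distribution over the vocabulary at each timestep and feed it back to the RNN as the next input.

In cs231n/classifiers/rnn.py, implement the sample function for test-time sampling. When you're done, run the cells below to sample captions from the overfit model on both training and validation data.

Approach

What is different here? During training the whole sequence is fed in at once, so the input x has shape (N, T, D). At test time the words are fed in one at a time, so the input is a vector of N word indices with shape (N,), and we must call rnn_step_forward repeatedly to produce the output step by step. With that in mind, let's look at the code.

Code explanation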

```python
    # Initial hidden state from the image features: (N, H)
    hidden_state, _ = affine_forward(features, W_proj, b_proj)
    # Every caption starts with the <START> token
    word = self._start * np.ones(N, dtype=np.int32)  # (N,)
    for i in range(max_length):
        word_embed, _ = word_embedding_forward(word, W_embed)  # (N, W)
        hidden_state, _ = rnn_step_forward(word_embed, hidden_state, Wx, Wh, b)
        scores, _ = affine_forward(hidden_state, W_vocab, b_vocab)  # (N, V)
        word = np.argmax(scores, axis=1)  # greedy choice: the highest-scoring word
        captions[:, i] = word
```

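- First, compute the initial hidden state from the input image features (all N images at once).

- Put the index of the start token into the word vector; word holds the current output for the captions of all N images.

- With the initial state ready, enter the loop and run the RNN:

  - Embed the current words.

  - Compute the next hidden state from the previous hidden state and the current input.

  - Compute a score for every word from the current hidden state.

  - Take the index of the highest-scoring word (greedy decoding).

  - Record it in the captions array.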
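To see the loop outside the class, here is a hedged, self-contained sketch of the same greedy decoding with random weights. The function greedy_sample and the parameter dictionary are hypothetical (the keys only mirror the names in CaptioningRNN); with random weights the word IDs are meaningless, so the sketch just shows shapes and the order of operations. It always runs max_length steps and does not stop early at `<END>`.

```python
import numpy as np


def greedy_sample(features, params, start_idx, max_length=5):
    """Hypothetical stand-alone version of the sampling loop for a vanilla RNN."""
    N = features.shape[0]
    captions = np.zeros((N, max_length), dtype=np.int32)
    h = features @ params["W_proj"] + params["b_proj"]  # initial hidden state (N, H)
    word = np.full(N, start_idx, dtype=np.int32)  # every caption starts with the start token
    for t in range(max_length):
        emb = params["W_embed"][word]  # (N, W) word vectors
        h = np.tanh(emb @ params["Wx"] + h @ params["Wh"] + params["b"])  # one RNN step
        scores = h @ params["W_vocab"] + params["b_vocab"]  # (N, V)
        word = np.argmax(scores, axis=1)  # greedy: the highest-scoring word
        captions[:, t] = word
    return captions


rng = np.random.default_rng(0)
N, D, Wdim, H, V = 2, 6, 4, 5, 7
params = {
    "W_proj": rng.standard_normal((D, H)), "b_proj": np.zeros(H),
    "W_embed": rng.standard_normal((V, Wdim)),
    "Wx": rng.standard_normal((Wdim, H)), "Wh": rng.standard_normal((H, H)), "b": np.zeros(H),
    "W_vocab": rng.standard_normal((H, V)), "b_vocab": np.zeros(V),
}
features = rng.standard_normal((N, D))
captions = greedy_sample(features, params, start_idx=1)
print(captions.shape)  # (2, 5)
```

Replacing np.argmax with a draw from the softmax of the scores would turn this greedy decoder into stochastic sampling.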

Below is the complete code.

class CaptioningRNN:

"""

A CaptioningRNN produces captions from image features using a recurrent

neural network.

描述RNN使用一个循环神经网络,利用图片特征生成描述

The RNN receives input vectors of size D, has a vocab size of V, works on

sequences of length T, has an RNN hidden dimension of H, uses word vectors

of dimension W, and operates on minibatches of size N.

RNN

输入大小 D

词汇大小 V(有V个词)

长度为 T

隐状态维度 H

词向量维度 W

batch 大小N

Note that we don't use any regularization for the CaptioningRNN.

不使用正则化

"""

def __init__(

self,

word_to_idx,

input_dim=512,

wordvec_dim=128,

hidden_dim=128,

cell_type="rnn",

dtype=np.float32,

):

"""

Construct a new CaptioningRNN instance.

Inputs:

- word_to_idx: A dictionary giving the vocabulary. It contains V entries,

and maps each string to a unique integer in the range [0, V).

给出了词语的字典,大小为 V,把词映射到对应的向量坐标

- input_dim: Dimension D of input image feature vectors. 图像特征向量的维度

- wordvec_dim: Dimension W of word vectors. 词向量的维度

- hidden_dim: Dimension H for the hidden state of the RNN.隐藏状态维度

- cell_type: What type of RNN to use; either 'rnn' or 'lstm'.使用rnn或lstm

- dtype: numpy datatype to use; use float32 for training and float64 for

numeric gradient checking.

"""

if cell_type not in {"rnn", "lstm"}:

raise ValueError('Invalid cell_type "%s"' % cell_type)

self.cell_type = cell_type

self.dtype = dtype

self.word_to_idx = word_to_idx

self.idx_to_word = {i: w for w, i in word_to_idx.items()}

self.params = {}

vocab_size = len(word_to_idx)

self._null = word_to_idx["" ]

self._start = word_to_idx.get("" , None)

self._end = word_to_idx.get("" , None)

        # Initialize word vectors
        self.params["W_embed"] = np.random.randn(vocab_size, wordvec_dim)
        self.params["W_embed"] /= 100

        # Initialize CNN -> hidden state projection parameters
        self.params["W_proj"] = np.random.randn(input_dim, hidden_dim)
        self.params["W_proj"] /= np.sqrt(input_dim)
        self.params["b_proj"] = np.zeros(hidden_dim)

        # Initialize parameters for the RNN
        dim_mul = {"lstm": 4, "rnn": 1}[cell_type]
        self.params["Wx"] = np.random.randn(wordvec_dim, dim_mul * hidden_dim)
        self.params["Wx"] /= np.sqrt(wordvec_dim)
        self.params["Wh"] = np.random.randn(hidden_dim, dim_mul * hidden_dim)
        self.params["Wh"] /= np.sqrt(hidden_dim)
        self.params["b"] = np.zeros(dim_mul * hidden_dim)

        # Initialize output to vocab weights (hidden state -> word scores)
        self.params["W_vocab"] = np.random.randn(hidden_dim, vocab_size)
        self.params["W_vocab"] /= np.sqrt(hidden_dim)
        self.params["b_vocab"] = np.zeros(vocab_size)

        # Cast parameters to correct dtype
        for k, v in self.params.items():
            self.params[k] = v.astype(self.dtype)

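    def loss(self, features, captions):
        """
        Compute training-time loss for the RNN. We input image features and
        ground-truth captions for those images, and use an RNN (or LSTM) to compute
        loss and gradients on all parameters.

        Inputs:
        - features: Input image features, of shape (N, D)
        - captions: Ground-truth captions; an integer array of shape (N, T + 1) where
          each element is in the range 0 <= y[i, t] < V

        Returns a tuple of:
        - loss: Scalar loss
        - grads: Dictionary of gradients parallel to self.params
        """
        # Cut captions into two pieces: captions_in has everything but the last word
        # and will be input to the RNN; captions_out has everything but the first
        # word and this is what we will expect the RNN to generate. These are offset
        # by one relative to each other because the RNN should produce word (t+1)
        # after receiving word t. The first element of captions_in will be the START
        # token, and the first element of captions_out will be the first word.
        captions_in = captions[:, :-1]
        captions_out = captions[:, 1:]

        # You'll need this: the mask is False wherever the target word is <NULL> padding
        mask = captions_out != self._null

        # Weight and bias for the affine transform from image features to initial
        # hidden state
        W_proj, b_proj = self.params["W_proj"], self.params["b_proj"]
        # Word embedding matrix
        W_embed = self.params["W_embed"]
        # Input-to-hidden, hidden-to-hidden, and biases for the RNN
        Wx, Wh, b = self.params["Wx"], self.params["Wh"], self.params["b"]
        # Weight and bias for the hidden-to-vocab transformation
        W_vocab, b_vocab = self.params["W_vocab"], self.params["b_vocab"]

        loss, grads = 0.0, {}
        ############################################################################
        # TODO: Implement the forward and backward passes for the CaptioningRNN.   #
        # In the forward pass you will need to do the following:                   #
        # (1) Use an affine transformation to compute the initial hidden state     #
        #     from the image features. This should produce an array of shape (N, H)#
        # (2) Use a word embedding layer to transform the words in captions_in     #
        #     from indices to vectors, giving an array of shape (N, T, W).         #
        # (3) Use either a vanilla RNN or LSTM (depending on self.cell_type) to    #
        #     process the sequence of input word vectors and produce hidden state  #
        #     vectors for all timesteps, producing an array of shape (N, T, H).    #
        # (4) Use a (temporal) affine transformation to compute scores over the    #
        #     vocabulary at every timestep using the hidden states, giving an      #
        #     array of shape (N, T, V).                                            #
        # (5) Use (temporal) softmax to compute loss using captions_out, ignoring  #
        #     the points where the output word is <NULL> using the mask above.     #
        #                                                                          #
        # Do not worry about regularizing the weights or their gradients!          #
        #                                                                          #
        # In the backward pass you will need to compute the gradient of the loss   #
        # with respect to all model parameters. Use the loss and grads variables   #
        # defined above to store loss and gradients; grads[k] should give the      #
        # gradients for self.params[k].                                            #
        #                                                                          #
        # Note also that you are allowed to make use of functions from layers.py   #
        # in your implementation, if needed.                                       #
        ############################################################################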

        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        # Forward pass
        # Initial hidden state, computed from the input image features: (N, H)
        ini_hidden_state, ini_cache = affine_forward(features, W_proj, b_proj)
        # Word embedding: turn the input caption indices into word vectors, (N, T, W)
        word_emb_in, word_emb_cache = word_embedding_forward(captions_in, W_embed)
        # Run the RNN forward through time (i.e. "to the right"): (N, T, H)
        hidden_state, hidden_cache = rnn_forward(
            word_emb_in, ini_hidden_state, Wx, Wh, b)
        # Compute the output at every timestep (i.e. "upward"): scores of shape (N, T, V)
        temp_out, temp_cache = temporal_affine_forward(
            hidden_state, W_vocab, b_vocab)
        # Softmax loss over all timesteps, ignoring <NULL> targets via the mask
        loss, dtemp_out = temporal_softmax_loss(temp_out, captions_out, mask)

        # Backward pass: undo the forward steps in reverse order
        dout, grads["W_vocab"], grads["b_vocab"] = temporal_affine_backward(
            dtemp_out, temp_cache)
        demb, dini_hidden, grads["Wx"], grads["Wh"], grads["b"] = rnn_backward(
            dout, hidden_cache)
        grads["W_embed"] = word_embedding_backward(demb, word_emb_cache)
        dfeatures, grads["W_proj"], grads["b_proj"] = affine_backward(
            dini_hidden, ini_cache)

        # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
        ############################################################################
        #                             END OF YOUR CODE                             #
        ############################################################################

        return loss, grads

    def sample(self, features, max_length=30):
        """
        Run a test-time forward pass for the model, sampling captions for input
        feature vectors.

        At each timestep, we embed the current word, pass it and the previous hidden
        state to the RNN to get the next hidden state, use the hidden state to get
        scores for all vocab words, and choose the word with the highest score as
        the next word. The initial hidden state is computed by applying an affine
        transform to the input image features, and the initial word is the <START>
        token.

        For LSTMs you will also have to keep track of the cell state; in that case
        the initial cell state should be zero.

        Inputs:
        - features: Array of input image features of shape (N, D).
        - max_length: Maximum length T of generated captions.

        Returns:
        - captions: Array of shape (N, max_length) giving sampled captions,
          where each element is an integer in the range [0, V). The first element
          of captions should be the first sampled word, not the <START> token.
        """

        N = features.shape[0]
        captions = self._null * np.ones((N, max_length), dtype=np.int32)

        # Unpack parameters
        W_proj, b_proj = self.params["W_proj"], self.params["b_proj"]
        W_embed = self.params["W_embed"]
        Wx, Wh, b = self.params["Wx"], self.params["Wh"], self.params["b"]
        W_vocab, b_vocab = self.params["W_vocab"], self.params["b_vocab"]

        ###########################################################################
        # TODO: Implement test-time sampling for the model. You will need to      #
        # initialize the hidden state of the RNN by applying the learned affine   #
        # transform to the input image features. The first word that you feed to #
        # the RNN should be the <START> token; its value is stored in the         #
        # variable self._start. At each timestep you will need to:                #
        # (1) Embed the previous word using the learned word embeddings           #
        # (2) Make an RNN step using the previous hidden state and the embedded   #
        #     current word to get the next hidden state.                          #
        # (3) Apply the learned affine transformation to the next hidden state to #
        #     get scores for all words in the vocabulary                          #
        # (4) Select the word with the highest score as the next word, writing it #
        #     (the word index) to the appropriate slot in the captions variable   #
        #                                                                         #
        # For simplicity, you do not need to stop generating after an <END> token #
        # is sampled, but you can if you want to.                                 #
        #                                                                         #
        # HINT: You will not be able to use the rnn_forward or lstm_forward       #
        # functions; you'll need to call rnn_step_forward or lstm_step_forward in #
        # a loop.                                                                 #
        #                                                                         #
        # NOTE: we are still working over minibatches in this function. Also if   #
        # you are using an LSTM, initialize the first cell state to zeros.        #
        ###########################################################################

        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        # Initial hidden state from the image features: (N, H)
        hidden_state, _ = affine_forward(features, W_proj, b_proj)
        # Every caption starts from the <START> token: (N,)
        word = self._start * np.ones(N, dtype=np.int32)
        for i in range(max_length):
            # (1) Embed the previous word: (N, W)
            word_embed, _ = word_embedding_forward(word, W_embed)
            # (2) One RNN step gives the next hidden state: (N, H)
            hidden_state, _ = rnn_step_forward(word_embed, hidden_state, Wx, Wh, b)
            # (3) Scores over the whole vocabulary: (N, V)
            scores, _ = affine_forward(hidden_state, W_vocab, b_vocab)
            # (4) Greedy choice: the highest-scoring word is written out and
            #     fed back in as the next input
            word = np.argmax(scores, axis=1)
            captions[:, i] = word

        # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
        ############################################################################
        #                             END OF YOUR CODE                             #
        ############################################################################

        return captions
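To see the whole pipeline of `CaptioningRNN` (training loss and greedy sampling) in one place, here is a minimal, self-contained numpy sketch. It is not the assignment's code. The helpers `rnn_step`, `masked_softmax_loss`, `caption_loss` and `greedy_sample` are hypothetical stand-ins for `rnn_step_forward`, `temporal_softmax_loss`, `loss` and `sample`. The 5-word vocabulary is made up. The loss is assumed to be summed over unmasked timesteps and divided by N, which is how I understand the assignment's `temporal_softmax_loss` to normalize. The sketch shows three things: the one-word shift between `captions_in` and `captions_out`, the `<NULL>` mask, and greedy decoding.

```python
import numpy as np

# Hypothetical toy vocabulary (the real one is loaded from coco2014_vocab.json)
word_to_idx = {"<NULL>": 0, "<START>": 1, "<END>": 2, "a": 3, "cat": 4}
NULL, START = word_to_idx["<NULL>"], word_to_idx["<START>"]

def rnn_step(x, h_prev, Wx, Wh, b):
    # Hypothetical helper: vanilla RNN step h_t = tanh(x_t Wx + h_{t-1} Wh + b)
    return np.tanh(x @ Wx + h_prev @ Wh + b)

def masked_softmax_loss(scores, y, mask):
    # scores: (N, T, V), y: (N, T) ints, mask: (N, T) bools
    N, T, V = scores.shape
    flat = scores.reshape(N * T, V)
    flat = flat - flat.max(axis=1, keepdims=True)  # numerical stability
    log_probs = flat - np.log(np.exp(flat).sum(axis=1, keepdims=True))
    picked = log_probs[np.arange(N * T), y.reshape(-1)]
    # Masked positions (<NULL> targets) contribute nothing; average over the batch
    return -(picked * mask.reshape(-1)).sum() / N

def caption_loss(features, captions, p):
    captions_in, captions_out = captions[:, :-1], captions[:, 1:]  # shift by one word
    mask = captions_out != NULL
    h = features @ p["W_proj"] + p["b_proj"]  # initial hidden state (N, H)
    x = p["W_embed"][captions_in]             # word embedding (N, T, W)
    N, T = captions_in.shape
    hs = np.zeros((N, T, h.shape[1]))
    for t in range(T):
        h = rnn_step(x[:, t], h, p["Wx"], p["Wh"], p["b"])
        hs[:, t] = h
    scores = hs @ p["W_vocab"] + p["b_vocab"]  # (N, T, V)
    return masked_softmax_loss(scores, captions_out, mask)

def greedy_sample(features, p, max_length=5):
    N = features.shape[0]
    captions = NULL * np.ones((N, max_length), dtype=np.int32)
    h = features @ p["W_proj"] + p["b_proj"]
    word = START * np.ones(N, dtype=np.int32)
    for i in range(max_length):
        h = rnn_step(p["W_embed"][word], h, p["Wx"], p["Wh"], p["b"])
        word = np.argmax(h @ p["W_vocab"] + p["b_vocab"], axis=1)  # greedy pick
        captions[:, i] = word
    return captions

rng = np.random.default_rng(0)
D, W, H, V = 6, 4, 5, len(word_to_idx)
p = {
    "W_embed": rng.standard_normal((V, W)) / 100,
    "W_proj": rng.standard_normal((D, H)) / np.sqrt(D),
    "b_proj": np.zeros(H),
    "Wx": rng.standard_normal((W, H)) / np.sqrt(W),
    "Wh": rng.standard_normal((H, H)) / np.sqrt(H),
    "b": np.zeros(H),
    "W_vocab": rng.standard_normal((H, V)) / np.sqrt(H),
    "b_vocab": np.zeros(V),
}
features = rng.standard_normal((2, D))
# "<START> a cat <END> <NULL>" and "<START> cat <END> <NULL> <NULL>"
captions = np.array([[1, 3, 4, 2, 0], [1, 4, 2, 0, 0]])
print(caption_loss(features, captions, p))
print(greedy_sample(features, p))
```

A quick sanity check for the mask is to set `W_vocab` and `b_vocab` to zero. Every score is then equal, so each of the 5 unmasked target words costs exactly log V, and the loss becomes 5 · log V / N.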

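3. Summary and Preview

That's it: we have built an RNN from scratch with numpy. Unrolled over time, an RNN looks a lot like the deep feedforward networks we used before, except that every timestep reuses the same weights. So as long as we think it through carefully, the key points are not hard to grasp.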
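The "unrolled RNN is like a deep network" picture can be made concrete. The only real difference is that every "layer" (timestep) reuses the same `Wx`, `Wh` and `b`, so backpropagation through time adds up one gradient contribution per timestep. The sketch below makes these assumptions: the function names are hypothetical, the data is toy random data, the cell is a vanilla tanh RNN, and the loss is simplified to `sum(h_T * dh_last)`. It accumulates those contributions for `Wh` and checks them against a centered numerical gradient.

```python
import numpy as np

def unrolled_forward(xs, h0, Wx, Wh, b):
    """Vanilla tanh RNN over xs of shape (T, N, D); returns the T hidden states."""
    hs, h = [], h0
    for x in xs:  # each timestep acts like one layer of a deep net...
        h = np.tanh(x @ Wx + h @ Wh + b)  # ...that reuses the same Wx, Wh, b
        hs.append(h)
    return hs

def bptt_dWh(xs, h0, Wx, Wh, b, dh_last):
    """Gradient of L = sum(h_T * dh_last) w.r.t. the shared matrix Wh."""
    hs = unrolled_forward(xs, h0, Wx, Wh, b)
    prevs = [h0] + hs[:-1]  # h_{t-1} for every step t
    dWh = np.zeros_like(Wh)
    dh = dh_last
    for t in reversed(range(len(xs))):
        da = dh * (1 - hs[t] ** 2)  # back through tanh
        dWh += prevs[t].T @ da      # this timestep's contribution to the shared Wh
        dh = da @ Wh.T              # gradient flowing to the previous timestep
    return dWh

rng = np.random.default_rng(1)
T, N, D, H = 4, 3, 5, 6
xs = rng.standard_normal((T, N, D))
h0 = rng.standard_normal((N, H))
Wx = rng.standard_normal((D, H)) * 0.5
Wh = rng.standard_normal((H, H)) * 0.5
b = np.zeros(H)
dh_last = rng.standard_normal((N, H))

def loss_fn(Wh_):
    return np.sum(unrolled_forward(xs, h0, Wx, Wh_, b)[-1] * dh_last)

analytic = bptt_dWh(xs, h0, Wx, Wh, b, dh_last)
numeric = np.zeros_like(Wh)
eps = 1e-6
for idx in np.ndindex(Wh.shape):  # centered finite differences, entry by entry
    Wp, Wm = Wh.copy(), Wh.copy()
    Wp[idx] += eps
    Wm[idx] -= eps
    numeric[idx] = (loss_fn(Wp) - loss_fn(Wm)) / (2 * eps)
print(np.max(np.abs(analytic - numeric)))
```

The `+=` on `dWh` is the whole point. In an ordinary deep net each layer would own its own weight gradient. Here all T "layers" write into the same one.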

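Next time I'll post Assignment 2, promise (sigh). I realize my posting order is a mess. The Assignment 2 write-up is only half done, while Assignment 3, which I started later and wrote up as I worked, somehow got finished first, so I'm publishing it first!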