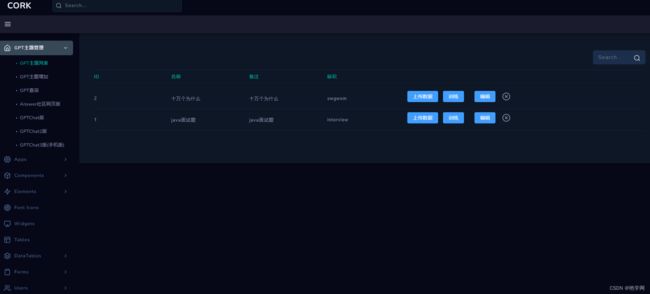

Implementing ChatDOC in 200 Lines of Java

Requirement:

For documents in PDF, DOCX, and TXT format: after a short training pass with our model, you can chat with your own documents.

package gpt3;

import com.colorbin.common.base.JsonTable;

import com.colorbin.common.constant.ProjectConstant;

import com.colorbin.entity.gpt.GptTernary;

import com.colorbin.entity.gpt.model.GptConvertUtils;

import com.colorbin.entity.gpt.model.GptTernaryVo;

import com.colorbin.rpa.c_magic_ai.c02_nlp.nlpUtil;

import com.colorbin.rpa.d_software_auto.d10_folder.fileUtil;

import com.colorbin.service.gpt.GptTernaryService;

import com.colorbin.service.gpt.impl.GptTernaryServiceImpl;

import com.colorbin.util._listUtils;

import com.fasterxml.jackson.core.JsonFactory;

import com.fasterxml.jackson.core.JsonParser;

import com.fasterxml.jackson.core.JsonToken;

import com.hankcs.hanlp.HanLP;

import com.hankcs.hanlp.corpus.dependency.CoNll.CoNLLSentence;

import com.hankcs.hanlp.corpus.dependency.CoNll.CoNLLWord;

import org.apache.poi.hwpf.HWPFDocument;

import org.apache.poi.hwpf.extractor.WordExtractor;

import org.apache.poi.hwpf.usermodel.Range;

import java.io.File;

import java.io.FileInputStream;

import java.io.IOException;

import java.lang.reflect.Field;

import java.util.*;

import java.util.concurrent.CompletableFuture;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

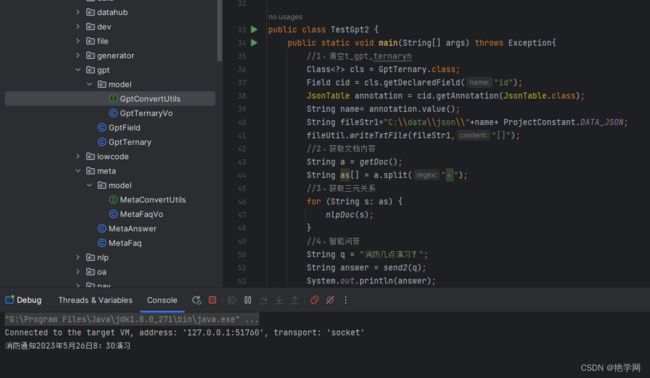

public class TestGpt2 {

public static void main(String[] args) throws Exception{

// 1. Clear the t_gpt_ternary table by overwriting its JSON file with an empty array

Class<?> cls = GptTernary.class;

Field cid = cls.getDeclaredField("id");

JsonTable annotation = cid.getAnnotation(JsonTable.class);

String name= annotation.value();

String fileStr1="C:\\data\\json\\"+name+ ProjectConstant.DATA_JSON;

fileUtil.writeTxtFile(fileStr1,"[]");

// 2. Read the document content and split it into sentences on the Chinese full stop

String a = getDoc();

String as[] = a.split("。");

// 3. Extract subject-predicate-object triples from each sentence

for (String s: as) {

nlpDoc(s);

}

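// 4. Question answering ("消防几点演习?" means "What time is the fire drill?")

String q = "消防几点演习?";

String answer = send2(q);

System.out.println(answer);

// Release the pool's non-daemon worker threads so the JVM can exit

threadPool.shutdown();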

}

private static ThreadPoolExecutor threadPool =

new ThreadPoolExecutor(100, Integer.MAX_VALUE, 60L,

TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<>(),

new ThreadPoolExecutor.CallerRunsPolicy());

private static String send2(String name) throws Exception {

final List<String>[] list7 = new List[]{new ArrayList<>()};

CompletableFuture<Void> one = CompletableFuture.runAsync(() -> {

try {

String question = name;

List<String> list3 = com.colorbin.rpa.c_magic_ai.c02_nlp.nlpUtil.getJG(new String[]{question});

Set<String> stringSet = new HashSet<>();

stringSet.addAll(list3);

list7[0] = new ArrayList<>(stringSet);

} catch (Exception e) {

throw new RuntimeException(e);

}

}, threadPool);

CompletableFuture.allOf(one).get();

// Remove stop words

List<String>[] list6 = new List[]{new ArrayList<>()};

CompletableFuture<Void> two = CompletableFuture.runAsync(() -> {

try {

list6[0] = com.colorbin.rpa.c_magic_ai.c02_nlp.nlpUtil.removeStopWords(list7[0]);

} catch (Exception e) {

throw new RuntimeException(e);

}

}, threadPool);

CompletableFuture.allOf(two).get();

String fileName = ProjectConstant.DATA_JSON_PATH + "t_gpt_ternary" +ProjectConstant.DATA_JSON;

List<GptTernary>[] list9 = new List[]{new ArrayList<>()};

// Stream the large JSON file token by token; this can take a while

List<GptTernary> list8 = new ArrayList<>();

JsonFactory factory = new JsonFactory();

JsonParser parser = factory.createParser(new File(fileName));

GptTernary m2 = new GptTernary();

while (!parser.isClosed()) {

JsonToken token = parser.nextToken();

if (token == null) {

break;

}

if (JsonToken.FIELD_NAME.equals(token)) {

String fieldName = parser.getCurrentName();

parser.nextToken();

String fieldValue = parser.getValueAsString();

if (fieldName.equals("subject")){

m2.setSubject(fieldValue);

}

if (fieldName.equals("object")){

m2.setObject(fieldValue);

}

if (fieldName.equals("predicate")){

m2.setPredicate(fieldValue);

}

if(m2.getSubject()!=null && m2.getObject()!=null && m2.getPredicate()!=null){

if(containsKeyFlag(m2.getSubject(),(list6[0])) || containsKeyFlag(m2.getObject(),(list6[0]))

|| containsKeyFlag(m2.getPredicate(),(list6[0]))){

list8.add(m2);

}

m2 = new GptTernary();

}

}

}

parser.close();

Set<GptTernary> metaFaqSet1 = new HashSet<>();

metaFaqSet1.addAll(list8);

list9[0] = new ArrayList<>(metaFaqSet1);

List<GptTernaryVo> list10 = new ArrayList<>();

CompletableFuture<Void> four = CompletableFuture.runAsync(() -> {

List<GptTernaryVo> lists = GptConvertUtils.INSTANCE.doToDTO(list9[0]);

// Score each candidate triple against the question

for (GptTernaryVo dataInterview : lists) {

double similarity = nlpUtil.getSimilarity(name, dataInterview.getSubject()+dataInterview.getPredicate()+dataInterview.getObject());

GptTernaryVo metaFaqVo = new GptTernaryVo();

metaFaqVo.setId(dataInterview.getId());

metaFaqVo.setSubject(dataInterview.getSubject());

metaFaqVo.setObject(dataInterview.getObject());

metaFaqVo.setPredicate(dataInterview.getPredicate());

metaFaqVo.setSim(similarity);

list10.add(metaFaqVo);

}

_listUtils.sort(list10, false, "sim");

}, threadPool);

CompletableFuture.allOf(four).get();

if (!list10.isEmpty()) {

return list10.get(0).getSubject()+list10.get(0).getPredicate()+list10.get(0).getObject();

} else {

return "No similar answer found";

}

}

public static void saveRelation(GptTernaryService gptTernaryService, String a, String b, String c) throws Exception{

GptTernary gptTernary = new GptTernary();

gptTernary.setSubject(a);

gptTernary.setPredicate(b);

gptTernary.setObject(c);

gptTernaryService.saveOrUpdate(gptTernary);

}

private static boolean containsKeyFlag(String query, List<String> strings) {

for (String str:strings){

if(query.contains(str)){

return true;

}

}

return false;

}

public static void nlpDoc(String text) throws Exception{

CoNLLSentence sentence = HanLP.parseDependency(text);

// Group the words of the sentence by dependency label (DEPREL), keeping sentence order

Map<String, List<CoNLLWord>> maps = new HashMap<>();

for (CoNLLWord word : sentence) {

maps.computeIfAbsent(word.DEPREL, k -> new ArrayList<>()).add(word);

}

GptTernaryService gptTernaryService = new GptTernaryServiceImpl();

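// Pattern 1: 主谓关系 (SBV, subject-verb) plus 动宾关系 (VOB, verb-object) gives subject, head verb, object

if(maps.containsKey("主谓关系") && maps.containsKey("动宾关系")){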

CoNLLWord entity1 = maps.get("主谓关系").get(0);

CoNLLWord entity2 = maps.get("动宾关系").get(0);

String relation = entity1.HEAD.LEMMA;

saveRelation(gptTernaryService, entity1.LEMMA.trim(), relation.trim().replaceAll("\r\n","").replaceAll(" ",""),

entity2.LEMMA);

}

Map<String, List<CoNLLWord>> dic = maps;

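// Pattern 2: 主谓关系 (SBV) plus 动补结构 (CMP, verb-complement), with the object taken from 介宾关系 (POB, preposition-object)

if (dic.containsKey("主谓关系") && dic.containsKey("动补结构")){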

CoNLLWord entity1 = dic.get("主谓关系").get(0);

CoNLLWord prep = dic.get("动补结构").get(0);

if (maps.containsKey("介宾关系")){

CoNLLWord entity2 = maps.get("介宾关系").get(0);

String relation = entity1.HEAD.LEMMA + prep.LEMMA;

saveRelation(gptTernaryService, entity1.LEMMA.trim(), relation.trim().replaceAll("\r\n","").replaceAll(" ",""),

entity2.LEMMA);

}

}

// Pattern 3: 前置宾语 (FOB, fronted object) with 状中结构 (ADV, adverbial) and 介宾关系 (POB)

if (dic.containsKey("前置宾语")){

CoNLLWord entity2 = dic.get("前置宾语").get(0);

if (dic.containsKey("状中结构")){

CoNLLWord adverbial = dic.get("状中结构").get(0);

if (maps.containsKey("介宾关系")){

CoNLLWord entity1 = maps.get("介宾关系").get(0);

String relation = adverbial.HEAD.LEMMA;

saveRelation(gptTernaryService, entity1.LEMMA.trim(), relation.trim().replaceAll("\r\n","").replaceAll(" ",""),

entity2.LEMMA);

}

}

}

}

public static String getDoc() throws Exception{

// readAndWriterTest3();

File file = null;

WordExtractor extractor = null;

String a = "";

try {

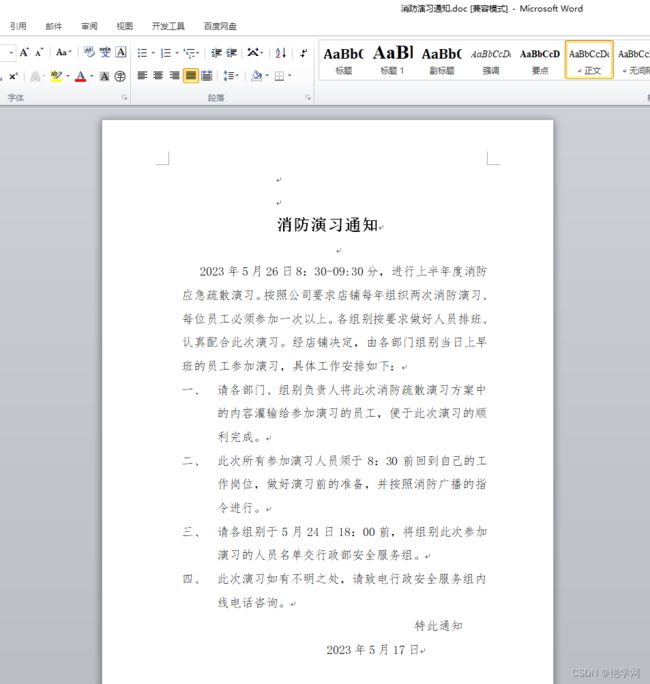

file = new File("D:\\Personal\\Desktop\\消防演习通知.doc");

FileInputStream fis = new FileInputStream(file.getAbsolutePath());

HWPFDocument document = new HWPFDocument(fis);

extractor = new WordExtractor(document);

String[] fileData = extractor.getParagraphText();

// Concatenate all non-null paragraphs into one string

for (String paragraph : fileData) {

if (paragraph != null) {

a += paragraph;

}

}

// Close the input stream once the text has been extracted

fis.close();

} catch(Exception exep) {

exep.printStackTrace();

}

return a;

}

public static void readAndWriterTest3() throws IOException {

File file = new File("D:\\Personal\\Desktop\\光大店2023.5.26消防演习通知.doc");

String str = "";

try {

FileInputStream fis = new FileInputStream(file);

HWPFDocument doc = new HWPFDocument(fis);

String doc1 = doc.getDocumentText();

System.out.println(doc1);

StringBuilder doc2 = doc.getText();

System.out.println(doc2);

Range rang = doc.getRange();

String doc3 = rang.text();

System.out.println(doc3);

fis.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

All of this code was written by asking GPT.
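The retrieval step in `send2` (filter the stored triples by keyword, then rank the survivors by similarity to the question) can be sketched without the project-specific classes. This is a minimal sketch under stated assumptions, not the author's implementation: `TripleSearchSketch`, `Triple`, `matches`, and `answer` are hypothetical names, and because `nlpUtil.getSimilarity` is not shown in the listing, character-bigram Jaccard similarity stands in for it here.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Stand-alone sketch of keyword filtering plus similarity ranking; all names are hypothetical. */
class TripleSearchSketch {

    /** Minimal stand-in for GptTernary: one subject-predicate-object triple. */
    static final class Triple {
        final String subject;
        final String predicate;
        final String object;

        Triple(String subject, String predicate, String object) {
            this.subject = subject;
            this.predicate = predicate;
            this.object = object;
        }

        String text() {
            return subject + predicate + object;
        }
    }

    /** Returns the set of adjacent character pairs in s. */
    static Set<String> bigrams(String s) {
        Set<String> grams = new HashSet<>();
        for (int i = 0; i + 1 < s.length(); i++) {
            grams.add(s.substring(i, i + 2));
        }
        return grams;
    }

    /** Jaccard similarity over character bigrams (an assumed stand-in for getSimilarity). */
    static double similarity(String a, String b) {
        Set<String> ga = bigrams(a);
        Set<String> gb = bigrams(b);
        if (ga.isEmpty() || gb.isEmpty()) {
            return 0.0;
        }
        Set<String> intersection = new HashSet<>(ga);
        intersection.retainAll(gb);
        Set<String> union = new HashSet<>(ga);
        union.addAll(gb);
        return (double) intersection.size() / union.size();
    }

    /** True if any keyword occurs in the subject, predicate, or object. */
    static boolean matches(Triple t, List<String> keywords) {
        for (String k : keywords) {
            if (t.subject.contains(k) || t.predicate.contains(k) || t.object.contains(k)) {
                return true;
            }
        }
        return false;
    }

    /** Returns the text of the best-scoring matching triple, or a fallback message. */
    static String answer(String question, List<String> keywords, List<Triple> store) {
        Triple best = null;
        double bestScore = -1.0;
        for (Triple t : store) {
            if (!matches(t, keywords)) {
                continue;
            }
            double score = similarity(question, t.text());
            if (score > bestScore) {
                bestScore = score;
                best = t;
            }
        }
        return best == null ? "No similar answer found" : best.text();
    }

    public static void main(String[] args) {
        // Made-up triples, for illustration only
        List<Triple> store = Arrays.asList(
                new Triple("fire drill", " starts at ", "3 pm"),
                new Triple("canteen", " opens at ", "11 am"));
        System.out.println(answer("when does the fire drill start", Arrays.asList("drill"), store));
    }
}
```

Tracking only the best candidate in a single pass avoids building and sorting a full result list when just the top answer is returned.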

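- The ChatGPT API is completely free to try until June 1, 2023;

- Submit Prompt bots for free and hold multi-turn conversations. ChatGPT application developers are not the only developers: anyone who can write an interesting, useful Prompt also becomes a developer of the new era;

- ChatDOC intelligent dialogue: just submit a PDF, DOC, or TXT document and you can hold an intelligent conversation with it, which helps you read documents faster.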

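You can upload your documents in PDF, DOCX, and TXT format. After a short training pass with our model, you can chat with your own documents. Single documents are supported now; multi-document support will follow.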
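The listing's `getDoc` only reads a legacy `.doc` file through `HWPFDocument`. For the TXT case, a minimal standard-library sketch of the "read, then split into sentences" step might look like the following. `TxtSentenceSketch`, `splitSentences`, and `readSentences` are hypothetical names, and splitting on `！` and `？` as well as `。` is an assumption that goes slightly beyond the listing, which splits on `。` only.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

/** Stand-alone sketch of reading a TXT file and splitting it into sentences; names are hypothetical. */
class TxtSentenceSketch {

    /**
     * Splits text on the Chinese full stop (U+3002), the full-width exclamation
     * and question marks (U+FF01, U+FF1F), and ASCII '!' and '?'.
     * Empty fragments are dropped and surrounding whitespace is trimmed.
     */
    static List<String> splitSentences(String text) {
        List<String> sentences = new ArrayList<>();
        for (String part : text.split("[\u3002\uFF01\uFF1F!?]")) {
            String sentence = part.trim();
            if (!sentence.isEmpty()) {
                sentences.add(sentence);
            }
        }
        return sentences;
    }

    /** Reads a UTF-8 text file (encoding is an assumption) and returns its sentences. */
    static List<String> readSentences(Path txtFile) throws IOException {
        String text = new String(Files.readAllBytes(txtFile), StandardCharsets.UTF_8);
        return splitSentences(text);
    }

    public static void main(String[] args) throws IOException {
        // Made-up sample content written to a temporary file, for illustration only
        Path tmp = Files.createTempFile("notice", ".txt");
        try {
            Files.write(tmp, "Drill at 3 pm\u3002 All staff attend\uFF01".getBytes(StandardCharsets.UTF_8));
            for (String sentence : readSentences(tmp)) {
                System.out.println(sentence);
            }
        } finally {
            Files.delete(tmp);
        }
    }
}
```

Each returned sentence could then be passed to a triple extractor in the same way `main` passes each fragment to `nlpDoc`.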