Stable Diffusion - Image Interrogation (Interrogate) Prompt Algorithms (BLIP & DeepBooru)

Follow me on CSDN: https://spike.blog.csdn.net/

Article URL: https://spike.blog.csdn.net/article/details/131817599

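The image interrogation (Interrogate) feature generates one or more text prompts from a given image. The prompts can describe the image's content, style, and details, which helps users quickly find suitable prompts for generating the image variants they want. The feature offers two prompt-recovery algorithms, CLIP (BLIP) and DeepBooru: the former relies on a joint vision-language representation, the latter on tag-based image retrieval.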

Starting SD:

cd stable_diffusion_webui_docker

conda deactivate

source venv/bin/activate

nohup python -u launch.py --listen --port 9301 --xformers --no-half-vae --enable-insecure-extension-access --theme dark --gradio-queue > nohup.sd.out &

Startup log; startup took about 5.5 min (330.5 s):

Python 3.8.16 (default, Mar 2 2023, 03:21:46)

[GCC 11.2.0]

Version: v1.4.0

Commit hash: 394ffa7b0a7fff3ec484bcd084e673a8b301ccc8

Installing requirements

Launching Web UI with arguments: --listen --port 9301 --xformers --no-half-vae --enable-insecure-extension-access --theme dark --gradio-queue

[-] ADetailer initialized. version: 23.7.6, num models: 12

dirname: stable_diffusion_webui_docker/localizations

localizations: {'zh-Hans (Stable)': 'stable_diffusion_webui_docker/extensions/stable-diffusion-webui-localization-zh_Hans/localizations/zh-Hans (Stable).json', 'zh-Hans (Testing)': 'stable_diffusion_webui_docker/extensions/stable-diffusion-webui-localization-zh_Hans/localizations/zh-Hans (Testing).json'}

2023-07-19 12:56:33,823 - ControlNet - INFO - ControlNet v1.1.233

ControlNet preprocessor location: stable_diffusion_webui_docker/extensions/sd-webui-controlnet/annotator/downloads

2023-07-19 12:56:35,787 - ControlNet - INFO - ControlNet v1.1.233

sd-webui-prompt-all-in-one background API service started successfully.

Loading weights [4199bcdd14] from stable_diffusion_webui_docker/models/Stable-diffusion/RevAnimated_v122.safetensors

Creating model from config: stable_diffusion_webui_docker/configs/v1-inference.yaml

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

Loading VAE weights specified in settings: stable_diffusion_webui_docker/models/VAE/RevAnimated_Orangemix.vae.pt

Applying attention optimization: xformers... done.

Textual inversion embeddings loaded(5): bad-artist-anime, bad_prompt_version2-neg, badhandv4, EasyNegative, ng_deepnegative_v1_75t

Model loaded in 5.6s (load weights from disk: 0.3s, create model: 0.6s, apply weights to model: 1.8s, apply half(): 0.6s, load VAE: 1.0s, move model to device: 0.8s, load textual inversion embeddings: 0.3s).

preload_extensions_git_metadata for 18 extensions took 23.46s

Running on local URL: http://0.0.0.0:9301

To create a public link, set `share=True` in `launch()`.

Startup time: 330.5s (import torch: 94.9s, import gradio: 28.4s, import ldm: 23.4s, other imports: 37.3s, opts onchange: 0.2s, setup codeformer: 2.2s, list SD models: 0.3s, load scripts: 105.7s, load upscalers: 0.2s, refresh VAE: 0.1s, initialize extra networks: 0.2s, create ui: 6.9s, gradio launch: 30.0s, app_started_callback: 0.6s).

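1. Interrogating Prompts

In the img2img tab, the interrogate function recovers keywords from an image. It supports two algorithms, CLIP (BLIP) and DeepBooru, and using both together is recommended: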

- CLIP: produces something like an image description (caption);

- DeepBooru: produces something like image classification tags.
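Both interrogators can also be called over HTTP rather than through the UI. The sketch below assumes the web UI was started with the `--api` flag (the launch command above does not include it) and that the `/sdapi/v1/interrogate` endpoint accepts a base64 image plus a `model` field of `clip` or `deepdanbooru`. The helper names are illustrative, not part of the web UI.

```python
import base64
import json
import urllib.request


def build_interrogate_payload(image_bytes: bytes, model: str = "clip") -> dict:
    """Build the JSON body for POST /sdapi/v1/interrogate."""
    if model not in ("clip", "deepdanbooru"):
        raise ValueError(f"unsupported interrogate model: {model}")
    return {
        # The image is sent as base64-encoded text.
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "model": model,
    }


def interrogate(base_url: str, image_bytes: bytes, model: str = "clip") -> str:
    """Send the request and return the 'caption' field of the response."""
    body = json.dumps(build_interrogate_payload(image_bytes, model)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/interrogate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["caption"]
```

With the server from this article, a call might look like `interrogate("http://127.0.0.1:9301", open("test.png", "rb").read(), "deepdanbooru")`, where `test.png` is a placeholder file name.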

BLIP:

a woman sitting on a boat in the ocean wearing a hat and a white dress with a slit down the side,olive skin,aestheticism,Daphne Fedarb,a bronze sculpture,

DeepBooru; the number of tags varies with the threshold:

score threshold: 0.35

1girl, bare legs, bare shoulders, barefoot, beach, blonde hair, blue sky, boat, day, dress, full body, hat, horizon, lips, long hair, looking at viewer, ocean, outdoors, pier, pool, poolside, railing, realistic, red lips, sitting, sky, sleeveless, sleeveless dress, smile, solo, stairs, sun hat, water, watercraft, white dress

score threshold: 0.5

1girl, barefoot, boat, day, dress, full body, hat, horizon, long hair, ocean, pier, pool, poolside, railing, realistic, sitting, solo, sun hat, water, watercraft, white dress

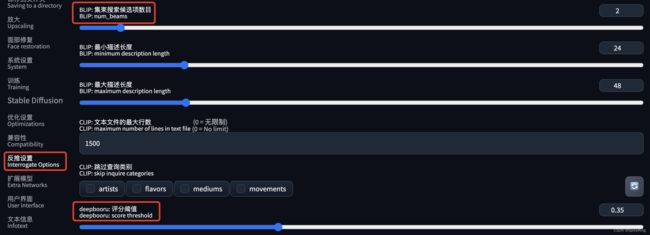
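Since the two outputs complement each other, one way to use them together is to join the caption and the tag list into a single prompt and drop duplicates. This is a minimal sketch; `merge_prompts` is a hypothetical helper, not something the web UI provides.

```python
def merge_prompts(caption: str, tags: str) -> str:
    """Join a caption and a tag list into one comma-separated prompt, dropping duplicates."""
    seen = set()
    merged = []
    for part in (caption + "," + tags).split(","):
        item = part.strip()
        key = item.lower()  # compare case-insensitively
        if item and key not in seen:
            seen.add(key)
            merged.append(item)
    return ", ".join(merged)
```

The original order is preserved, so the BLIP caption stays at the front of the prompt and the DeepBooru tags follow it.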

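Suggested changes to the interrogate settings:

num_beams: increase to 2. score threshold: lower to 0.35; in general, a classification score of 0.35 is accurate enough. The threshold affects the DeepBooru output.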
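The effect of the score threshold can be illustrated with a small filter: a tag is kept only if its score reaches the threshold. The scores below are hypothetical values chosen for illustration, not actual DeepBooru output, and `filter_tags` is an illustrative name.

```python
def filter_tags(scores: dict, threshold: float) -> list:
    """Return the tags whose score is at least `threshold`, sorted alphabetically."""
    return sorted(tag for tag, score in scores.items() if score >= threshold)


# Hypothetical scores, chosen only to show how the threshold changes the result.
example_scores = {"1girl": 0.98, "boat": 0.91, "beach": 0.41, "smile": 0.37, "cat": 0.05}

loose = filter_tags(example_scores, 0.35)   # keeps the lower-confidence tags too
strict = filter_tags(example_scores, 0.5)   # keeps only the confident tags
```

Every tag that survives the stricter threshold also survives the looser one, which matches the two tag lists shown earlier: the 0.5 list is a subset of the 0.35 list.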


2. Environment Setup

2.1 BLIP (Bootstrapping Language-Image Pre-training)

- Paper: BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

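- BLIP: a bootstrapped language-image pre-training method for unified vision-language understanding and generation

BLIP's pre-training architecture and objectives: BLIP proposes a multimodal mixture of encoder-decoder, a unified vision-language model that can operate in one of three modes: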

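- The unimodal encoder is trained with an image-text contrastive (ITC) loss to align the vision and language representations.

- The image-grounded text encoder uses additional cross-attention layers to model vision-language interactions and is trained with an image-text matching (ITM) loss to distinguish positive from negative image-text pairs.

- The image-grounded text decoder replaces the bidirectional self-attention layers with causal self-attention layers and shares the same cross-attention layers and feed-forward networks as the encoder. It is trained with a language modeling (LM) loss to generate captions for given images.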
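To make the first objective concrete, here is a simplified sketch of an image-text contrastive loss in plain Python. It is not BLIP's implementation: it omits the momentum encoder and soft labels used in the paper, and the temperature of 0.07 is an assumed value. Matching image-text pairs sit on the diagonal of the similarity matrix, and the loss is the average of the image-to-text and text-to-image cross-entropies.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy_diagonal(logit_rows):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logit_rows):
        max_logit = max(row)  # subtract the max for numerical stability
        log_sum = max_logit + math.log(sum(math.exp(x - max_logit) for x in row))
        total += log_sum - row[i]
    return total / len(logit_rows)


def itc_loss(image_embeds, text_embeds, temperature=0.07):
    """Symmetric contrastive loss over a batch of paired embeddings."""
    images = [normalize(v) for v in image_embeds]
    texts = [normalize(v) for v in text_embeds]
    # sim[i][j]: cosine similarity of image i and text j, divided by the temperature
    sim = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
           for img in images]
    sim_t = [list(col) for col in zip(*sim)]  # text-to-image direction
    return 0.5 * (cross_entropy_diagonal(sim) + cross_entropy_diagonal(sim_t))
```

The loss is small when each image embedding is closest to its own text embedding and large when the pairs are mismatched, which is what pushes the two representations into alignment.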


Bug encountered during installation: (ReadTimeoutError("HTTPSConnectionPool(host='huggingface.co', port=443): Read timed out. (read timeout=10)")

Specifically:

File "stable_diffusion_webui_docker/repositories/BLIP/models/blip.py", line 187, in init_tokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

The cause is that the bert-base-uncased dependency has to be downloaded. Download commands:

cd repositories/BLIP/models/

bypy downdir /huggingface/bert-base-uncased bert-base-uncased
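Once the files are on disk, the tokenizer can be pointed at the local copy, because `BertTokenizer.from_pretrained` accepts a directory path as well as a hub name. The sketch below picks the local directory when it contains `vocab.txt` and otherwise falls back to the hub name. `resolve_tokenizer_source` is a hypothetical helper, and the directory layout is assumed from the commands above.

```python
from pathlib import Path


def resolve_tokenizer_source(local_dir: str, hub_name: str = "bert-base-uncased") -> str:
    """Return the local directory if it contains vocab.txt, otherwise the hub name."""
    vocab_file = Path(local_dir) / "vocab.txt"
    return str(Path(local_dir)) if vocab_file.is_file() else hub_name


# Possible use inside init_tokenizer (requires the transformers package):
# from transformers import BertTokenizer
# source = resolve_tokenizer_source("repositories/BLIP/models/bert-base-uncased")
# tokenizer = BertTokenizer.from_pretrained(source)
```

Falling back to the hub name keeps the original behavior on machines that can reach huggingface.co.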

Model download:

load checkpoint from stable_diffusion_webui_docker/models/BLIP/model_base_caption_capfilt_large.pth

Manual download:

bypy downfile /stable_diffusion/models/blip_models/model_base_caption_capfilt_large.pth model_base_caption_capfilt_large.pth

Note: the CLIP model (about 890 MB) is also required. It is downloaded to ~/.cache/clip by default; downloading it manually is recommended:

cd ~/.cache/clip

bypy downfile /stable_diffusion/models/blip_models/ViT-L-14_cache_clip.pt ViT-L-14_cache_clip.pt

mv ViT-L-14_cache_clip.pt ViT-L-14.pt
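After a manual download it is worth confirming that the file sits where CLIP looks for it and that it arrived intact. The CLIP loader is understood to compare the cached file's SHA-256 digest with the one embedded in its download URL, and to download again on a mismatch. The sketch below only computes the expected path and the digest; the reference digest is not reproduced here, and both helper names are illustrative.

```python
import hashlib
import os


def clip_cache_path(model_file: str = "ViT-L-14.pt", root: str = "~/.cache/clip") -> str:
    """Return the path where the cached CLIP weights are expected."""
    return os.path.join(os.path.expanduser(root), model_file)


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Reading in chunks keeps memory use flat even for a file of roughly 890 MB.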

The CLIP category files are also required, namely stable_diffusion_webui_docker/interrogate. They are mostly small files, but they download slowly.

Downloading CLIP categories...

Download the interrogate folder from the GitHub issue "Bug interrogate CLIP crash in FileExistsError" and extract it. Both algorithms (BLIP & DeepBooru) need it.

2.2 DeepBooru

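- GitHub: TorchDeepDanbooru, DeepDanbooru

- It is mainly used for automatic image tag recognition and generation, which makes images easier to search and browse and improves their accessibility and usability.

Model download log: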

Downloading: "https://github.com/AUTOMATIC1111/TorchDeepDanbooru/releases/download/v1/model-resnet_custom_v3.pt" to stable_diffusion_webui_docker/models/torch_deepdanbooru/model-resnet_custom_v3.pt

Manual download:

wget https://ghproxy.com/https://github.com/AUTOMATIC1111/TorchDeepDanbooru/releases/download/v1/model-resnet_custom_v3.pt -O model-resnet_custom_v3.pt

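Other

After renaming the project folder, the virtualenv still points to the old location. Before starting it again, update the Python paths in the virtualenv and pip scripts to the new path: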

vim pip

vim venv/bin/activate
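The same edit can be scripted instead of done by hand in vim. The sketch below replaces the old project root with the new one in every text script under `venv/bin`, which covers the `VIRTUAL_ENV` line in `activate` and the shebang line in `pip`. It assumes the old path appears verbatim in those files; `retarget_venv_scripts` is a hypothetical helper.

```python
from pathlib import Path


def retarget_venv_scripts(bin_dir: str, old_root: str, new_root: str) -> list:
    """Replace old_root with new_root in text scripts under bin_dir; return changed names."""
    changed = []
    for script in sorted(Path(bin_dir).iterdir()):
        # Skip directories and symlinks (the python executable is usually a symlink).
        if not script.is_file() or script.is_symlink():
            continue
        try:
            text = script.read_text(encoding="utf-8")
        except UnicodeDecodeError:
            continue  # binary file, leave it untouched
        if old_root in text:
            script.write_text(text.replace(old_root, new_root), encoding="utf-8")
            changed.append(script.name)
    return changed
```

A call might look like `retarget_venv_scripts("venv/bin", "/old/path/project", "/new/path/project")`, with both paths being placeholders for the actual folder names.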

References:

- GitHub - load checkpoint from BLIP/model_base_caption_capfilt_large.pth is so slow

- GitHub - Download URL for ViT-L-14.pt

- StackOverflow - How to modify path where Torch Hub models are downloaded

- StackOverflow - How to change huggingface transformers default cache directory

- GitHub - Default model download path of the CLIP project

- GitHub - Bug interrogate CLIP crash in FileExistsError

Test image: