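C# DlibDotNet: face detection, 68-point facial landmark detection, 5-point facial landmark detection, face alignment, Delaunay triangulation, face feature comparison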

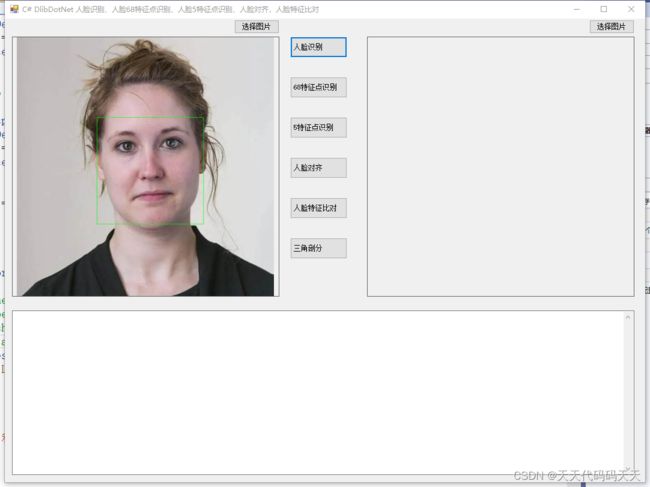

Face detection

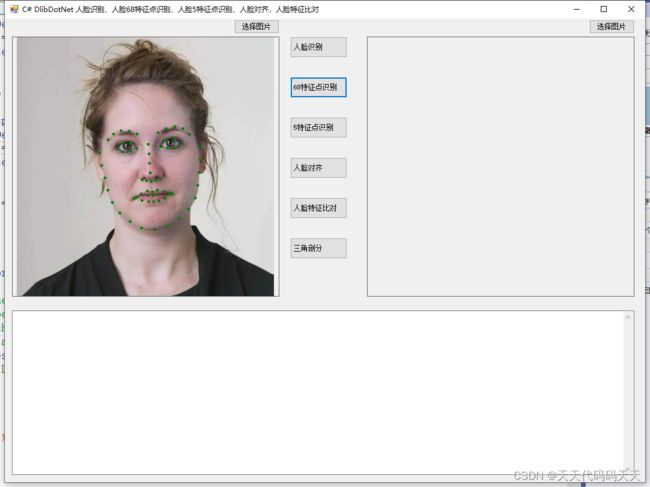

68-point facial landmark detection

5-point facial landmark detection

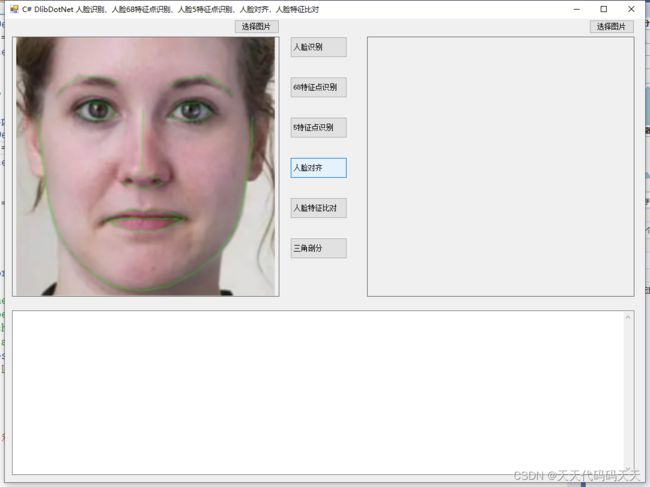

Face alignment

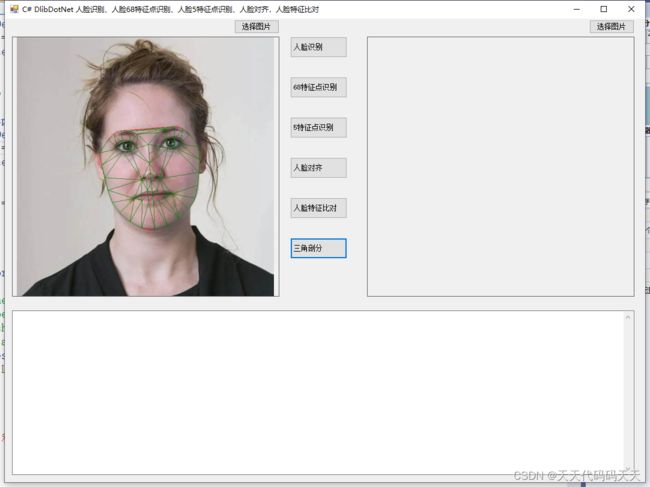

Delaunay triangulation

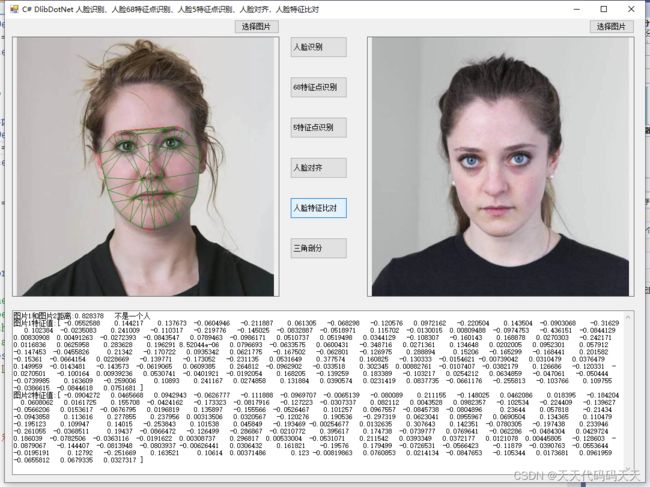

Face feature comparison

Project

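VS2022 + .NET Framework 4.8 + OpenCvSharp4 + DlibDotNet

The model files shape_predictor_5_face_landmarks.dat, shape_predictor_68_face_landmarks.dat and dlib_face_recognition_resnet_model_v1.dat are opened by relative path, so they must be in the program's working directory (normally the folder containing the executable).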

Demo download

Code

```csharp
using DlibDotNet;
using DlibDotNet.Extensions;
using OpenCvSharp;
using System;
using System.Collections.Generic;
using System.Drawing;
using System.Drawing.Imaging;
using System.Globalization;
using System.Linq;
using System.Text;
using System.Windows.Forms;

namespace DlibDotNet_人脸识别_人脸68特征点识别_人脸5特征点识别
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        // Open-file dialog filter in "description|pattern;pattern" form
        string fileFilter = "Image files|*.bmp;*.jpg;*.jpeg;*.tif;*.tiff;*.png";

        string imgPath = "";   // image 1: input for every feature
        string imgPath2 = "";  // image 2: only used by the face comparison
        string startupPath = "";
```

```csharp
        private void button1_Click(object sender, EventArgs e)
        {
            using (OpenFileDialog ofd = new OpenFileDialog())
            {
                ofd.Filter = fileFilter;
                if (ofd.ShowDialog() != DialogResult.OK) return;

                // Release the previously shown bitmap before replacing it
                pictureBox1.Image?.Dispose();
                imgPath = ofd.FileName;
                pictureBox1.Image = new Bitmap(imgPath);
            }
        }

        private void button2_Click(object sender, EventArgs e)
        {
            using (OpenFileDialog ofd = new OpenFileDialog())
            {
                ofd.Filter = fileFilter;
                if (ofd.ShowDialog() != DialogResult.OK) return;

                pictureBox2.Image?.Dispose();
                imgPath2 = ofd.FileName;
                pictureBox2.Image = new Bitmap(imgPath2);
            }
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            // Assign the field (declaring a local here would shadow it)
            startupPath = Application.StartupPath;

            // Encoding DlibDotNet uses for strings such as file paths passed to native dlib:
            // the ANSI code page on Windows, so non-ASCII (e.g. Chinese) paths work; UTF-8 elsewhere
            Dlib.Encoding = Environment.OSVersion.Platform == PlatformID.Win32NT
                ? Encoding.GetEncoding(CultureInfo.CurrentCulture.TextInfo.ANSICodePage)
                : Encoding.UTF8;
        }
```

```csharp
        /// <summary>
        /// Face detection
        /// </summary>
        private void button3_Click(object sender, EventArgs e)
        {
            if (imgPath == "")
            {
                return;
            }

            using (var faceDetector = Dlib.GetFrontalFaceDetector())
            using (var image = Dlib.LoadImage<RgbPixel>(imgPath))
            {
                // One rectangle per detected frontal face, drawn in green
                var dets = faceDetector.Operator(image);
                foreach (var r in dets)
                    Dlib.DrawRectangle(image, r, new RgbPixel { Green = 255 });

                // Already on the UI thread, so the picture box can be updated directly
                var result = image.ToBitmap();
                pictureBox1.Image?.Dispose();
                pictureBox1.Image = result;
            }
        }
```

```csharp
        /// <summary>
        /// 5-point facial landmark detection
        /// </summary>
        private void button5_Click(object sender, EventArgs e)
        {
            if (imgPath == "")
            {
                return;
            }

            string faceDataPath = "shape_predictor_5_face_landmarks.dat";

            // mat: OpenCV copy used for drawing; img: 24bpp copy converted to dlib's image type
            using (Mat mat = new Mat(imgPath))
            using (Bitmap src = new Bitmap(imgPath))
            using (Bitmap bmp = Get24bppRgb(src))
            using (Array2D<RgbPixel> img = DlibDotNet.Extensions.BitmapExtensions.ToArray2D<RgbPixel>(bmp))
            using (var faceDetector = Dlib.GetFrontalFaceDetector())
            using (var shapePredictor = ShapePredictor.Deserialize(faceDataPath))
            {
                // Detect faces
                var faces = faceDetector.Operator(img);
                if (faces.Count() == 0) { return; }

                // Predict the landmarks inside the first face rectangle
                using (var shape = shapePredictor.Detect(img, faces[0]))
                {
                    var bradleyPoints = (from i in Enumerable.Range(0, (int)shape.Parts)
                                         let p = shape.GetPart((uint)i)
                                         select new OpenCvSharp.Point(p.X, p.Y)).ToArray();

                    foreach (var item in bradleyPoints)
                    {
                        Cv2.Circle(mat, item.X, item.Y, 2, Scalar.Green, 2);
                    }
                }

                pictureBox1.Image?.Dispose();
                pictureBox1.Image = OpenCvSharp.Extensions.BitmapConverter.ToBitmap(mat);
            }
        }

        // Convert to Format24bppRgb, the pixel layout passed to ToArray2D<RgbPixel>
        private static Bitmap Get24bppRgb(System.Drawing.Image image)
        {
            var bitmap24 = new Bitmap(image.Width, image.Height, PixelFormat.Format24bppRgb);
            using (var gr = Graphics.FromImage(bitmap24))
            {
                gr.DrawImage(image, new System.Drawing.Rectangle(0, 0, bitmap24.Width, bitmap24.Height));
            }
            return bitmap24;
        }
```

```csharp
        /// <summary>
        /// 68-point facial landmark detection
        /// </summary>
        private void button4_Click(object sender, EventArgs e)
        {
            if (imgPath == "")
            {
                return;
            }

            string faceDataPath = "shape_predictor_68_face_landmarks.dat";

            // mat: OpenCV copy used for drawing; img: 24bpp copy converted to dlib's image type
            using (Mat mat = new Mat(imgPath))
            using (Bitmap src = new Bitmap(imgPath))
            using (Bitmap bmp = Get24bppRgb(src))
            using (Array2D<RgbPixel> img = DlibDotNet.Extensions.BitmapExtensions.ToArray2D<RgbPixel>(bmp))
            using (var faceDetector = Dlib.GetFrontalFaceDetector())
            using (var shapePredictor = ShapePredictor.Deserialize(faceDataPath))
            {
                // Detect faces
                var faces = faceDetector.Operator(img);
                if (faces.Count() == 0) { return; }

                // Predict the landmarks inside the first face rectangle
                using (var shape = shapePredictor.Detect(img, faces[0]))
                {
                    var bradleyPoints = (from i in Enumerable.Range(0, (int)shape.Parts)
                                         let p = shape.GetPart((uint)i)
                                         select new OpenCvSharp.Point(p.X, p.Y)).ToArray();

                    foreach (var item in bradleyPoints)
                    {
                        Cv2.Circle(mat, item.X, item.Y, 2, Scalar.Green, 2);
                    }
                }

                pictureBox1.Image?.Dispose();
                pictureBox1.Image = OpenCvSharp.Extensions.BitmapConverter.ToBitmap(mat);
            }
        }
```
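button4_Click draws all 68 points identically, but each index has a fixed meaning: shape_predictor_68_face_landmarks.dat follows the iBUG 300-W annotation scheme, in which fixed index ranges belong to fixed facial regions. The lookup below is a minimal sketch with hypothetical names (Landmarks68, RegionOf, IndicesOf) for selecting, say, only the eyes from shape.GetPart(i). Left and right follow the usual convention of the subject's point of view, so the "right eye" (36-41) appears on the left side of the image.

```csharp
using System;

// Hypothetical helper (not part of DlibDotNet): region lookup for the 68-point iBUG 300-W layout
public static class Landmarks68
{
    // Half-open index ranges [Start, End) for each facial region
    private static readonly (string Name, int Start, int End)[] Regions =
    {
        ("jaw", 0, 17),
        ("right eyebrow", 17, 22),
        ("left eyebrow", 22, 27),
        ("nose bridge", 27, 31),
        ("lower nose", 31, 36),
        ("right eye", 36, 42),
        ("left eye", 42, 48),
        ("outer lips", 48, 60),
        ("inner lips", 60, 68),
    };

    // Region name for a landmark index in 0..67
    public static string RegionOf(int index)
    {
        foreach (var (name, start, end) in Regions)
        {
            if (index >= start && index < end)
                return name;
        }
        throw new ArgumentOutOfRangeException(nameof(index), "expected an index in 0..67");
    }

    // All landmark indices of one region, e.g. to draw only the eyes
    public static int[] IndicesOf(string region)
    {
        foreach (var (name, start, end) in Regions)
        {
            if (name == region)
            {
                var result = new int[end - start];
                for (int i = 0; i < result.Length; i++)
                    result[i] = start + i;
                return result;
            }
        }
        throw new ArgumentException("unknown region: " + region, nameof(region));
    }
}
```

For example, looping over Landmarks68.IndicesOf("left eye") and calling shape.GetPart((uint)i) for each index yields just the six points of that eye.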

```csharp
        /// <summary>
        /// Face alignment
        /// </summary>
        private void button6_Click(object sender, EventArgs e)
        {
            if (imgPath == "")
            {
                return;
            }

            string faceDataPath = "shape_predictor_68_face_landmarks.dat";

            using (var faceDetector = Dlib.GetFrontalFaceDetector())
            using (var shapePredictor = ShapePredictor.Deserialize(faceDataPath))
            using (var img = Dlib.LoadImage<RgbPixel>(imgPath))
            {
                // Upsample once so that smaller faces can be detected
                Dlib.PyramidUp(img);

                var dets = faceDetector.Operator(img);
                var shapes = new List<FullObjectDetection>();
                foreach (var rect in dets)
                {
                    var shape = shapePredictor.Detect(img, rect);
                    if (shape.Parts <= 2)
                    {
                        // Too few landmarks to align; skip this detection
                        shape.Dispose();
                        continue;
                    }
                    shapes.Add(shape);
                }

                if (shapes.Any())
                {
                    // Draw the landmark outlines; they are drawn before the chips are cut,
                    // so they also appear in the aligned face chips
                    var lines = Dlib.RenderFaceDetections(shapes);
                    foreach (var line in lines)
                        Dlib.DrawLine(img, line.Point1, line.Point2, new RgbPixel { Green = 255 });
                    foreach (var l in lines)
                        l.Dispose();

                    // Cut out one aligned chip per face and tile them into a single image
                    var chipLocations = Dlib.GetFaceChipDetails(shapes);
                    using (var faceChips = Dlib.ExtractImageChips<RgbPixel>(img, chipLocations))
                    using (var tileImage = Dlib.TileImages(faceChips))
                    {
                        // It is NOT necessary to convert the Bitmap back to a Matrix.
                        // This round trip only demonstrates converting a managed image
                        // class to a dlib class and vice versa.
                        using (var tile = tileImage.ToBitmap())
                        using (var mat = tile.ToMatrix<RgbPixel>())
                        {
                            var tile2 = mat.ToBitmap();
                            this.pictureBox1.Image?.Dispose();
                            this.pictureBox1.Image = tile2;
                        }
                    }

                    foreach (var c in chipLocations)
                        c.Dispose();
                }

                foreach (var s in shapes)
                    s.Dispose();
            }
        }
```
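In button6_Click, Dlib.GetFaceChipDetails derives from each landmark set the transform that maps the face onto an upright, fixed-size chip, and ExtractImageChips applies it. dlib fits a similarity transform (rotation, scale, translation) to many landmarks at once; the sketch below only illustrates the rotation part, assuming the two eye centers are already known. AlignSketch, Center and RollDegrees are hypothetical names, and value tuples stand in for DlibDotNet's Point type.

```csharp
using System;

// Hypothetical helper (not part of DlibDotNet): the rotation component of face alignment,
// computed from two eye centers.
public static class AlignSketch
{
    // Mean position of a group of landmarks, e.g. the six points of one eye
    public static (double X, double Y) Center((double X, double Y)[] points)
    {
        if (points == null || points.Length == 0)
            throw new ArgumentException("need at least one point", nameof(points));
        double sx = 0, sy = 0;
        foreach (var p in points)
        {
            sx += p.X;
            sy += p.Y;
        }
        return (sx / points.Length, sy / points.Length);
    }

    // Angle in degrees of the line from the eye on the image's left to the eye on the
    // image's right. Image y grows downward, so a positive angle means the right-hand eye
    // sits lower. Passing this angle to OpenCV's GetRotationMatrix2D (positive means
    // counter-clockwise on screen) rotates the eye line back to horizontal.
    public static double RollDegrees((double X, double Y) imageLeftEye, (double X, double Y) imageRightEye)
    {
        double dx = imageRightEye.X - imageLeftEye.X;
        double dy = imageRightEye.Y - imageLeftEye.Y;
        return Math.Atan2(dy, dx) * 180.0 / Math.PI;
    }
}
```

For an upright frontal face and the 68-point model, the eye on the image's left is landmarks 36-41 and the eye on the image's right is 42-47; their Center values are the two arguments to RollDegrees.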

```csharp
        /// <summary>
        /// Face feature comparison
        /// </summary>
        private void button7_Click(object sender, EventArgs e)
        {
            textBox1.Text = "";
            if (imgPath == "" || imgPath2 == "")
            {
                return;
            }

            using (var detector = Dlib.GetFrontalFaceDetector())
            using (var sp = ShapePredictor.Deserialize("shape_predictor_5_face_landmarks.dat"))
            using (var net = DlibDotNet.Dnn.LossMetric.Deserialize("dlib_face_recognition_resnet_model_v1.dat"))
            using (var img1 = Dlib.LoadImageAsMatrix<RgbPixel>(imgPath))
            using (var img2 = Dlib.LoadImageAsMatrix<RgbPixel>(imgPath2))
            {
                var faces = new List<Matrix<RgbPixel>>();
                try
                {
                    foreach (var img in new[] { img1, img2 })
                    {
                        // Require exactly one face per image, so that faces[0] comes from
                        // image 1 and faces[1] from image 2
                        var dets = detector.Operator(img).ToArray();
                        if (dets.Length != 1)
                        {
                            return;
                        }

                        // Align the face with the 5 landmarks and cut a 150x150 chip (padding 0.25)
                        using (var shape = sp.Detect(img, dets[0]))
                        using (var faceChipDetail = Dlib.GetFaceChipDetails(shape, 150, 0.25))
                        {
                            faces.Add(Dlib.ExtractImageChip<RgbPixel>(img, faceChipDetail));
                        }
                    }

                    // One 128-dimensional descriptor per face chip
                    var faceDescriptors = net.Operator(faces);

                    // The two faces are treated as the same person when the Euclidean distance
                    // between their descriptors is below 0.6, the decision threshold the network
                    // was trained to use. Any other threshold that suits your data works too.
                    using (var diff = faceDescriptors[1] - faceDescriptors[0])
                    {
                        float len = Dlib.Length(diff);
                        string verdict = len < 0.6 ? "same person" : "different people";

                        var sb = new StringBuilder();
                        sb.AppendLine("Distance between image 1 and image 2: " + len + " (" + verdict + ")");
                        sb.AppendLine("Image 1 descriptor: [" + faceDescriptors[0] + "]");
                        sb.AppendLine("Image 2 descriptor: [" + faceDescriptors[1] + "]");
                        textBox1.Text = sb.ToString();
                    }
                }
                finally
                {
                    foreach (var face in faces)
                        face.Dispose();
                }
            }
        }
```
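In button7_Click, Dlib.Length applied to the difference of two descriptors is the Euclidean (L2) distance between two 128-dimensional vectors, compared against 0.6. The sketch below writes that comparison out in plain C#, with float arrays standing in for DlibDotNet's Matrix<float>; FaceMatch, Distance and IsSamePerson are hypothetical names, not library API.

```csharp
using System;

// Hypothetical stand-in for Dlib.Length(a - b) followed by the 0.6 threshold test
public static class FaceMatch
{
    // Decision threshold used in the form code above
    public const double Threshold = 0.6;

    // Euclidean distance between two descriptors of equal length
    public static double Distance(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("descriptors must have the same length");
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
        {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.Sqrt(sum);
    }

    // True when the descriptors are closer than the threshold
    public static bool IsSamePerson(float[] a, float[] b, double threshold = Threshold)
    {
        return Distance(a, b) < threshold;
    }
}
```

Keeping the threshold as a parameter makes it easy to tune on your own image pairs instead of hard-coding 0.6 in the button handler.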

```csharp
        /// <summary>
        /// Convex hull and Delaunay triangulation of the 68 landmarks
        /// </summary>
        private void button8_Click(object sender, EventArgs e)
        {
            if (imgPath == "")
            {
                return;
            }

            string faceDataPath = "shape_predictor_68_face_landmarks.dat";

            // mat: OpenCV copy used for drawing; img: 24bpp copy converted to dlib's image type
            using (Mat mat = new Mat(imgPath))
            using (Bitmap src = new Bitmap(imgPath))
            using (Bitmap bmp = Get24bppRgb(src))
            using (Array2D<RgbPixel> img = DlibDotNet.Extensions.BitmapExtensions.ToArray2D<RgbPixel>(bmp))
            using (var faceDetector = Dlib.GetFrontalFaceDetector())
            using (var shapePredictor = ShapePredictor.Deserialize(faceDataPath))
            {
                // Detect faces
                var faces = faceDetector.Operator(img);
                if (faces.Count() == 0)
                {
                    return;
                }

                // Predict the landmarks inside the first face rectangle
                OpenCvSharp.Point[] bradleyPoints;
                using (var shape = shapePredictor.Detect(img, faces[0]))
                {
                    bradleyPoints = (from i in Enumerable.Range(0, (int)shape.Parts)
                                     let p = shape.GetPart((uint)i)
                                     select new OpenCvSharp.Point(p.X, p.Y)).ToArray();
                }

                // Convex hull of the landmarks, drawn as a closed red polygon
                int[] hull = Cv2.ConvexHullIndices(bradleyPoints);
                var bradleyHull = hull.Select(idx => bradleyPoints[idx]).ToArray();
                for (int k = 0; k < bradleyHull.Length; k++)
                {
                    var next = bradleyHull[(k + 1) % bradleyHull.Length];
                    Cv2.Line(mat, bradleyHull[k], next, Scalar.Red);
                }

                // Delaunay triangulation over the whole image rectangle
                Rect rect = new Rect(0, 0, mat.Width, mat.Height);
                using (Subdiv2D subdiv = new Subdiv2D())
                {
                    subdiv.InitDelaunay(rect);

                    // Insert the landmarks. Subdiv2D.Insert throws for points outside rect,
                    // and dlib can place landmarks outside the image for faces near the border.
                    foreach (var pt in bradleyPoints)
                    {
                        if (rect.Contains(pt))
                            subdiv.Insert(new Point2f(pt.X, pt.Y));
                    }

                    // Each Vec6f holds one triangle as (x0, y0, x1, y1, x2, y2)
                    Vec6f[] triangleList = subdiv.GetTriangleList();
                    foreach (Vec6f t in triangleList)
                    {
                        var pt0 = new OpenCvSharp.Point((int)t[0], (int)t[1]);
                        var pt1 = new OpenCvSharp.Point((int)t[2], (int)t[3]);
                        var pt2 = new OpenCvSharp.Point((int)t[4], (int)t[5]);
                        Cv2.Line(mat, pt0, pt1, Scalar.Green, 1);
                        Cv2.Line(mat, pt1, pt2, Scalar.Green, 1);
                        Cv2.Line(mat, pt2, pt0, Scalar.Green, 1); // closing edge of the triangle
                    }
                }

                pictureBox1.Image?.Dispose();
                pictureBox1.Image = OpenCvSharp.Extensions.BitmapConverter.ToBitmap(mat);
            }
        }
    }
}
```
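Both drawing steps in button8_Click reduce to the edges of a closed polygon: the convex hull joins each vertex to the next and the last vertex back to the first, and every triangle from Subdiv2D.GetTriangleList, laid out as (x0, y0, x1, y1, x2, y2), needs the three edges 0-1, 1-2 and 2-0. The sketch below uses hypothetical names (PolygonEdges, ClosedEdgeIndices, TriangleVertices) and a plain float[6] standing in for Vec6f; it produces the vertex index pairs so that one Cv2.Line call per pair draws either shape.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helpers (not OpenCvSharp API): edge lists for closed polygons
public static class PolygonEdges
{
    // Index pairs for the edges of a closed polygon with n vertices:
    // (0,1), (1,2), ..., (n-2,n-1) and the closing edge (n-1,0)
    public static List<(int From, int To)> ClosedEdgeIndices(int n)
    {
        if (n < 2)
            throw new ArgumentOutOfRangeException(nameof(n), "need at least 2 vertices");
        var edges = new List<(int From, int To)>(n);
        for (int i = 0; i < n; i++)
            edges.Add((i, (i + 1) % n));
        return edges;
    }

    // Splits one triangle in (x0, y0, x1, y1, x2, y2) layout into three vertices,
    // truncating to int the same way the form code casts the Vec6f values
    public static (int X, int Y)[] TriangleVertices(float[] t)
    {
        if (t == null || t.Length != 6)
            throw new ArgumentException("expected x0, y0, x1, y1, x2, y2", nameof(t));
        return new (int X, int Y)[]
        {
            ((int)t[0], (int)t[1]),
            ((int)t[2], (int)t[3]),
            ((int)t[4], (int)t[5]),
        };
    }
}
```

For a triangle, iterating over ClosedEdgeIndices(3) and drawing from vertices[From] to vertices[To] gives exactly the three sides; for the hull, ClosedEdgeIndices(bradleyHull.Length) gives the outline including the closing segment.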