Kafka Client Execution Flow and Source Code Analysis

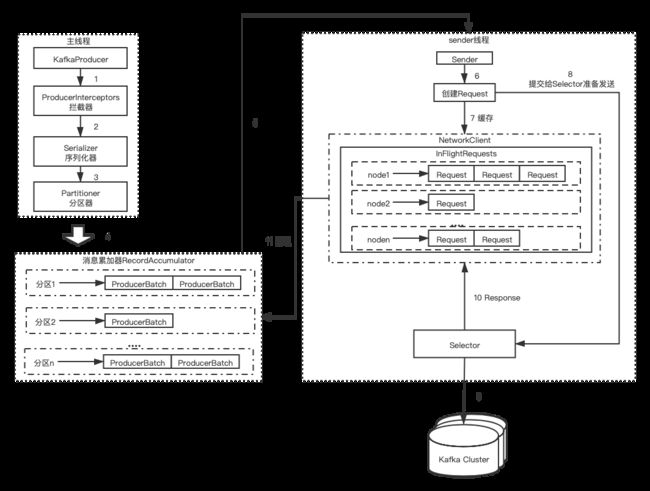

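The producer client as a whole is driven by two cooperating threads: the main thread and the Sender thread. In the main thread, KafkaProducer creates a message, passes it through the interceptors, the serializer, and the partitioner, and then caches it in the record accumulator (also called the message collector). The Sender thread is responsible for fetching messages from the record accumulator and sending them to Kafka.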

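The record accumulator mainly caches messages so that the Sender thread can send them in batches, which reduces network overhead and improves performance. The cache size is configurable through buffer.memory and defaults to 32 MB. If the main thread produces faster than the Sender can send, send() blocks for at most max.block.ms (60 seconds by default) and then throws an exception.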
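As a small illustration of the two settings mentioned above, the sketch below writes the default values out explicitly using plain `java.util.Properties`. The class name `ProducerBufferConfig` and the broker address are placeholders chosen for this example; only the two configuration keys and their defaults come from Kafka.

```java
import java.util.Properties;

// Illustrative helper only; the class name is hypothetical.
final class ProducerBufferConfig {

    // Returns producer properties with the buffering defaults spelled out.
    static Properties bufferingDefaults() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092"); // placeholder address
        props.setProperty("buffer.memory", "33554432"); // 32 MB shared by all batches
        props.setProperty("max.block.ms", "60000");     // send() may block up to 60 s
        return props;
    }

    public static void main(String[] args) {
        Properties props = bufferingDefaults();
        System.out.println("buffer.memory = " + props.getProperty("buffer.memory"));
        System.out.println("max.block.ms  = " + props.getProperty("max.block.ms"));
    }
}
```

Raising buffer.memory gives the main thread more headroom before it blocks, while lowering max.block.ms makes a full buffer surface as an exception sooner.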

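Messages sent by the main thread are appended to a double-ended queue in RecordAccumulator. RecordAccumulator maintains one deque per partition, and the elements of each deque are ProducerBatch objects. New messages are appended at the tail; the Sender reads batches from the head and removes them once they have been processed.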
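The per-partition deque can be pictured with a toy model. The sketch below is not Kafka's code: `ToyAccumulator` and its methods are hypothetical names, a batch is just a list of strings, and a batch counts as full by record count rather than by bytes as in the real client.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model only: names are hypothetical and batches are bounded by record count.
final class ToyAccumulator {
    // One deque of batches per partition.
    private final Map<String, Deque<List<String>>> batches = new HashMap<>();
    private final int maxRecordsPerBatch;

    ToyAccumulator(int maxRecordsPerBatch) {
        this.maxRecordsPerBatch = maxRecordsPerBatch;
    }

    // Main-thread side: append to the newest batch (tail), or open a new batch if it is full.
    void append(String partition, String record) {
        Deque<List<String>> deque = batches.computeIfAbsent(partition, p -> new ArrayDeque<>());
        List<String> last = deque.peekLast();
        if (last == null || last.size() >= maxRecordsPerBatch) {
            last = new ArrayList<>();
            deque.addLast(last);
        }
        last.add(record);
    }

    // Sender side: take the oldest batch (head) and remove it; null if nothing is queued.
    List<String> drainOldest(String partition) {
        Deque<List<String>> deque = batches.get(partition);
        return deque == null ? null : deque.pollFirst();
    }

    public static void main(String[] args) {
        ToyAccumulator acc = new ToyAccumulator(2);
        acc.append("my-topic-0", "a");
        acc.append("my-topic-0", "b");
        acc.append("my-topic-0", "c");
        System.out.println(acc.drainOldest("my-topic-0"));
        System.out.println(acc.drainOldest("my-topic-0"));
    }
}
```

Appending at the tail and draining from the head keeps batches in creation order within a partition.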

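To avoid repeatedly creating and releasing ByteBuffers, RecordAccumulator holds a BufferPool that allows buffers to be reused. Only buffers of one specific size are pooled, namely batch.size (16 KB by default, configurable); buffers of any other size are not returned to the BufferPool. The size of a ProducerBatch is tied to batch.size: if a new message still fits into the current ProducerBatch, it is appended there; otherwise a new ProducerBatch is created. A message larger than batch.size gets a batch of its own, sized to fit that message, and its buffer is not reused.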
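The "only one size is pooled" rule can be sketched as follows. This is a simplified model under stated assumptions: `ToyBufferPool` is a hypothetical name, and it omits the total memory limit and the blocking behavior that the real BufferPool has.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;
import java.util.Deque;

// Toy model only: hypothetical names, no memory cap, no blocking.
final class ToyBufferPool {
    private final int poolableSize;                       // plays the role of batch.size
    private final Deque<ByteBuffer> free = new ArrayDeque<>();

    ToyBufferPool(int poolableSize) {
        this.poolableSize = poolableSize;
    }

    // Reuse a pooled buffer when the request matches the poolable size; otherwise allocate.
    ByteBuffer allocate(int size) {
        if (size == poolableSize && !free.isEmpty()) {
            return free.pollFirst();
        }
        return ByteBuffer.allocate(size);
    }

    // Only buffers of the poolable size go back into the pool; others are left to the GC.
    void deallocate(ByteBuffer buffer) {
        if (buffer.capacity() == poolableSize) {
            buffer.clear();
            free.addLast(buffer);
        }
    }

    int pooledCount() {
        return free.size();
    }

    public static void main(String[] args) {
        ToyBufferPool pool = new ToyBufferPool(16 * 1024);
        ByteBuffer standard = pool.allocate(16 * 1024);
        pool.deallocate(standard);
        System.out.println("pooled buffers: " + pool.pooledCount());
    }
}
```

Under this model, an oversized message costs a fresh allocation every time, which is one reason to keep batch.size at or above the typical record size.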

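Before the Sender sends a request to Kafka, the request is also stored in InFlightRequests, whose main purpose is to cache requests that have been sent but have not yet received a response. InFlightRequests also provides a number of management methods. The key one limits how many requests may be cached per connection, configured by max.in.flight.requests.per.connection (default 5): each connection can hold at most 5 unacknowledged requests, and once that limit is reached no further requests can be sent on that connection until a response arrives.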
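A minimal sketch of the per-connection limit, assuming a much simpler structure than the real InFlightRequests class: `ToyInFlightRequests` and its method names are hypothetical, requests are plain strings, and the check looks only at the queue size.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Toy model only: hypothetical names; the check considers queue size alone.
final class ToyInFlightRequests {
    private final int maxInFlightPerConnection;
    private final Map<String, Deque<String>> requests = new HashMap<>();

    ToyInFlightRequests(int maxInFlightPerConnection) {
        this.maxInFlightPerConnection = maxInFlightPerConnection;
    }

    // True while the connection still has room for another unacknowledged request.
    boolean canSendMore(String node) {
        Deque<String> queue = requests.get(node);
        return queue == null || queue.size() < maxInFlightPerConnection;
    }

    // Record a request as sent; newest requests sit at the head of the deque.
    void add(String node, String requestId) {
        if (!canSendMore(node)) {
            throw new IllegalStateException("too many in-flight requests for " + node);
        }
        requests.computeIfAbsent(node, n -> new ArrayDeque<>()).addFirst(requestId);
    }

    // A response arrived: complete the oldest outstanding request (tail of the deque).
    String completeNext(String node) {
        Deque<String> queue = requests.get(node);
        return queue == null ? null : queue.pollLast();
    }

    public static void main(String[] args) {
        ToyInFlightRequests inFlight = new ToyInFlightRequests(5);
        for (int i = 0; i < 5; i++) {
            inFlight.add("node-1", "request-" + i);
        }
        System.out.println("can send more: " + inFlight.canSendMore("node-1"));
        inFlight.completeNext("node-1");
        System.out.println("can send more: " + inFlight.canSendMore("node-1"));
    }
}
```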

demo:

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("acks", "all");

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

Producer<String, String> producer = new KafkaProducer<>(props);

for (int i = 0; i < 100; i++)

producer.send(new ProducerRecord<>("my-topic", Integer.toString(i), Integer.toString(i)));

producer.close();

Next, look at the source code:

public Future<RecordMetadata> send(ProducerRecord<K, V> record, Callback callback) {

// intercept the record, which can be potentially modified; this method does not throw exceptions

ProducerRecord<K, V> interceptedRecord = this.interceptors.onSend(record);

return doSend(interceptedRecord, callback);

}

private Future<RecordMetadata> doSend(ProducerRecord<K, V> record, Callback callback) {

TopicPartition tp = null;

try {

throwIfProducerClosed();

// first make sure the metadata for the topic is available

long nowMs = time.milliseconds();

ClusterAndWaitTime clusterAndWaitTime;

try {

clusterAndWaitTime = waitOnMetadata(record.topic(), record.partition(), nowMs, maxBlockTimeMs);

} catch (KafkaException e) {

if (metadata.isClosed())

throw new KafkaException("Producer closed while send in progress", e);

throw e;

}

nowMs += clusterAndWaitTime.waitedOnMetadataMs;

long remainingWaitMs = Math.max(0, maxBlockTimeMs - clusterAndWaitTime.waitedOnMetadataMs);

Cluster cluster = clusterAndWaitTime.cluster;

byte[] serializedKey;

try {

serializedKey = keySerializer.serialize(record.topic(), record.headers(), record.key());

} catch (ClassCastException cce) {

throw new SerializationException("Can't convert key of class " + record.key().getClass().getName() +

" to class " + producerConfig.getClass(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG).getName() +

" specified in key.serializer", cce);

}

byte[] serializedValue;

try {

serializedValue = valueSerializer.serialize(record.topic(), record.headers(), record.value());

} catch (ClassCastException cce) {

throw new SerializationException("Can't convert value of class " + record.value().getClass().getName() +

" to class " + producerConfig.getClass(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG).getName() +

" specified in value.serializer", cce);

}

int partition = partition(record, serializedKey, serializedValue, cluster);

tp = new TopicPartition(record.topic(), partition);

setReadOnly(record.headers());

Header[] headers = record.headers().toArray();

int serializedSize = AbstractRecords.estimateSizeInBytesUpperBound(apiVersions.maxUsableProduceMagic(),

compressionType, serializedKey, serializedValue, headers);

ensureValidRecordSize(serializedSize);

long timestamp = record.timestamp() == null ? nowMs : record.timestamp();

if (log.isTraceEnabled()) {

log.trace("Attempting to append record {} with callback {} to topic {} partition {}", record, callback, record.topic(), partition);

}

// producer callback will make sure to call both 'callback' and interceptor callback

Callback interceptCallback = new InterceptorCallback<>(callback, this.interceptors, tp);

if (transactionManager != null && transactionManager.isTransactional()) {

transactionManager.failIfNotReadyForSend();

}

RecordAccumulator.RecordAppendResult result = accumulator.append(tp, timestamp, serializedKey,

serializedValue, headers, interceptCallback, remainingWaitMs, true, nowMs);

if (result.abortForNewBatch) {

int prevPartition = partition;

partitioner.onNewBatch(record.topic(), cluster, prevPartition);

partition = partition(record, serializedKey, serializedValue, cluster);

tp = new TopicPartition(record.topic(), partition);

if (log.isTraceEnabled()) {

log.trace("Retrying append due to new batch creation for topic {} partition {}. The old partition was {}", record.topic(), partition, prevPartition);

}

// producer callback will make sure to call both 'callback' and interceptor callback

interceptCallback = new InterceptorCallback<>(callback, this.interceptors, tp);

result = accumulator.append(tp, timestamp, serializedKey,

serializedValue, headers, interceptCallback, remainingWaitMs, false, nowMs);

}

if (transactionManager != null && transactionManager.isTransactional())

transactionManager.maybeAddPartitionToTransaction(tp);

if (result.batchIsFull || result.newBatchCreated) {

log.trace("Waking up the sender since topic {} partition {} is either full or getting a new batch", record.topic(), partition);

this.sender.wakeup();

}

return result.future;

// handling exceptions and record the errors;

// for API exceptions return them in the future,

// for other exceptions throw directly

} catch (ApiException e) {

log.debug("Exception occurred during message send:", e);

if (callback != null)

callback.onCompletion(null, e);

this.errors.record();

this.interceptors.onSendError(record, tp, e);

return new FutureFailure(e);

} catch (InterruptedException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw new InterruptException(e);

} catch (KafkaException e) {

this.errors.record();

this.interceptors.onSendError(record, tp, e);

throw e;

} catch (Exception e) {

// we notify interceptor about all exceptions, since onSend is called before anything else in this method

this.interceptors.onSendError(record, tp, e);

throw e;

}

}

That is the main-thread part. Now for the Sender part, starting with how the Sender is initialized and started:

KafkaProducer(Map<String, Object> configs,

Serializer<K> keySerializer,

Serializer<V> valueSerializer,

ProducerMetadata metadata,

KafkaClient kafkaClient,

ProducerInterceptors<K, V> interceptors,

Time time) {

ProducerConfig config = new ProducerConfig(ProducerConfig.addSerializerToConfig(configs, keySerializer,

valueSerializer));

try {

Map<String, Object> userProvidedConfigs = config.originals();

this.producerConfig = config;

this.time = time;

String transactionalId = userProvidedConfigs.containsKey(ProducerConfig.TRANSACTIONAL_ID_CONFIG) ?

(String) userProvidedConfigs.get(ProducerConfig.TRANSACTIONAL_ID_CONFIG) : null;

this.clientId = config.getString(ProducerConfig.CLIENT_ID_CONFIG);

LogContext logContext;

if (transactionalId == null)

logContext = new LogContext(String.format("[Producer clientId=%s] ", clientId));

else

logContext = new LogContext(String.format("[Producer clientId=%s, transactionalId=%s] ", clientId, transactionalId));

log = logContext.logger(KafkaProducer.class);

log.trace("Starting the Kafka producer");

Map<String, String> metricTags = Collections.singletonMap("client-id", clientId);

MetricConfig metricConfig = new MetricConfig().samples(config.getInt(ProducerConfig.METRICS_NUM_SAMPLES_CONFIG))

.timeWindow(config.getLong(ProducerConfig.METRICS_SAMPLE_WINDOW_MS_CONFIG), TimeUnit.MILLISECONDS)

.recordLevel(Sensor.RecordingLevel.forName(config.getString(ProducerConfig.METRICS_RECORDING_LEVEL_CONFIG)))

.tags(metricTags);

List<MetricsReporter> reporters = config.getConfiguredInstances(ProducerConfig.METRIC_REPORTER_CLASSES_CONFIG,

MetricsReporter.class,

Collections.singletonMap(ProducerConfig.CLIENT_ID_CONFIG, clientId));

JmxReporter jmxReporter = new JmxReporter();

jmxReporter.configure(userProvidedConfigs);

reporters.add(jmxReporter);

MetricsContext metricsContext = new KafkaMetricsContext(JMX_PREFIX,

config.originalsWithPrefix(CommonClientConfigs.METRICS_CONTEXT_PREFIX));

this.metrics = new Metrics(metricConfig, reporters, time, metricsContext);

this.partitioner = config.getConfiguredInstance(ProducerConfig.PARTITIONER_CLASS_CONFIG, Partitioner.class);

long retryBackoffMs = config.getLong(ProducerConfig.RETRY_BACKOFF_MS_CONFIG);

if (keySerializer == null) {

this.keySerializer = config.getConfiguredInstance(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,

Serializer.class);

this.keySerializer.configure(config.originals(), true);

} else {

config.ignore(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG);

this.keySerializer = keySerializer;

}

if (valueSerializer == null) {

this.valueSerializer = config.getConfiguredInstance(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,

Serializer.class);

this.valueSerializer.configure(config.originals(), false);

} else {

config.ignore(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG);

this.valueSerializer = valueSerializer;

}

// load interceptors and make sure they get clientId

userProvidedConfigs.put(ProducerConfig.CLIENT_ID_CONFIG, clientId);

ProducerConfig configWithClientId = new ProducerConfig(userProvidedConfigs, false);

List<ProducerInterceptor<K, V>> interceptorList = (List) configWithClientId.getConfiguredInstances(

ProducerConfig.INTERCEPTOR_CLASSES_CONFIG, ProducerInterceptor.class);

if (interceptors != null)

this.interceptors = interceptors;

else

this.interceptors = new ProducerInterceptors<>(interceptorList);

ClusterResourceListeners clusterResourceListeners = configureClusterResourceListeners(keySerializer,

valueSerializer, interceptorList, reporters);

this.maxRequestSize = config.getInt(ProducerConfig.MAX_REQUEST_SIZE_CONFIG);

this.totalMemorySize = config.getLong(ProducerConfig.BUFFER_MEMORY_CONFIG);

this.compressionType = CompressionType.forName(config.getString(ProducerConfig.COMPRESSION_TYPE_CONFIG));

this.maxBlockTimeMs = config.getLong(ProducerConfig.MAX_BLOCK_MS_CONFIG);

int deliveryTimeoutMs = configureDeliveryTimeout(config, log);

this.apiVersions = new ApiVersions();

this.transactionManager = configureTransactionState(config, logContext);

this.accumulator = new RecordAccumulator(logContext,

config.getInt(ProducerConfig.BATCH_SIZE_CONFIG),

this.compressionType,

lingerMs(config),

retryBackoffMs,

deliveryTimeoutMs,

metrics,

PRODUCER_METRIC_GROUP_NAME,

time,

apiVersions,

transactionManager,

new BufferPool(this.totalMemorySize, config.getInt(ProducerConfig.BATCH_SIZE_CONFIG), metrics, time, PRODUCER_METRIC_GROUP_NAME));

List<InetSocketAddress> addresses = ClientUtils.parseAndValidateAddresses(

config.getList(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG),

config.getString(ProducerConfig.CLIENT_DNS_LOOKUP_CONFIG));

if (metadata != null) {

this.metadata = metadata;

} else {

this.metadata = new ProducerMetadata(retryBackoffMs,

config.getLong(ProducerConfig.METADATA_MAX_AGE_CONFIG),

config.getLong(ProducerConfig.METADATA_MAX_IDLE_CONFIG),

logContext,

clusterResourceListeners,

Time.SYSTEM);

this.metadata.bootstrap(addresses);

}

this.errors = this.metrics.sensor("errors");

this.sender = newSender(logContext, kafkaClient, this.metadata);

String ioThreadName = NETWORK_THREAD_PREFIX + " | " + clientId;

this.ioThread = new KafkaThread(ioThreadName, this.sender, true);

this.ioThread.start();

config.logUnused();

AppInfoParser.registerAppInfo(JMX_PREFIX, clientId, metrics, time.milliseconds());

log.debug("Kafka producer started");

} catch (Throwable t) {

// call close methods if internal objects are already constructed this is to prevent resource leak. see KAFKA-2121

close(Duration.ofMillis(0), true);

// now propagate the exception

throw new KafkaException("Failed to construct kafka producer", t);

}

}

.......

Sender newSender(LogContext logContext, KafkaClient kafkaClient, ProducerMetadata metadata) {

int maxInflightRequests = configureInflightRequests(producerConfig);

int requestTimeoutMs = producerConfig.getInt(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG);

ChannelBuilder channelBuilder = ClientUtils.createChannelBuilder(producerConfig, time, logContext);

ProducerMetrics metricsRegistry = new ProducerMetrics(this.metrics);

Sensor throttleTimeSensor = Sender.throttleTimeSensor(metricsRegistry.senderMetrics);

KafkaClient client = kafkaClient != null ? kafkaClient : new NetworkClient(

new Selector(producerConfig.getLong(ProducerConfig.CONNECTIONS_MAX_IDLE_MS_CONFIG),

this.metrics, time, "producer", channelBuilder, logContext),

metadata,

clientId,

maxInflightRequests,

producerConfig.getLong(ProducerConfig.RECONNECT_BACKOFF_MS_CONFIG),

producerConfig.getLong(ProducerConfig.RECONNECT_BACKOFF_MAX_MS_CONFIG),

producerConfig.getInt(ProducerConfig.SEND_BUFFER_CONFIG),

producerConfig.getInt(ProducerConfig.RECEIVE_BUFFER_CONFIG),

requestTimeoutMs,

ClientDnsLookup.forConfig(producerConfig.getString(ProducerConfig.CLIENT_DNS_LOOKUP_CONFIG)),

time,

true,

apiVersions,

throttleTimeSensor,

logContext);

short acks = configureAcks(producerConfig, log);

return new Sender(logContext,

client,

metadata,

this.accumulator,

maxInflightRequests == 1,

producerConfig.getInt(ProducerConfig.MAX_REQUEST_SIZE_CONFIG),

acks,

producerConfig.getInt(ProducerConfig.RETRIES_CONFIG),

metricsRegistry.senderMetrics,

time,

requestTimeoutMs,

producerConfig.getLong(ProducerConfig.RETRY_BACKOFF_MS_CONFIG),

this.transactionManager,

apiVersions);

}

Next, look at the Sender's run method:

public void run() {

log.debug("Starting Kafka producer I/O thread.");

// main loop, runs until close is called

while (running) {

try {

runOnce();

} catch (Exception e) {

log.error("Uncaught error in kafka producer I/O thread: ", e);

}

}

log.debug("Beginning shutdown of Kafka producer I/O thread, sending remaining records.");

// okay we stopped accepting requests but there may still be

// requests in the transaction manager, accumulator or waiting for acknowledgment,

// wait until these are completed.

while (!forceClose && ((this.accumulator.hasUndrained() || this.client.inFlightRequestCount() > 0) || hasPendingTransactionalRequests())) {

try {

runOnce();

} catch (Exception e) {

log.error("Uncaught error in kafka producer I/O thread: ", e);

}

}

// Abort the transaction if any commit or abort didn't go through the transaction manager's queue

while (!forceClose && transactionManager != null && transactionManager.hasOngoingTransaction()) {

if (!transactionManager.isCompleting()) {

log.info("Aborting incomplete transaction due to shutdown");

transactionManager.beginAbort();

}

try {

runOnce();

} catch (Exception e) {

log.error("Uncaught error in kafka producer I/O thread: ", e);

}

}

if (forceClose) {

// We need to fail all the incomplete transactional requests and batches and wake up the threads waiting on

// the futures.

if (transactionManager != null) {

log.debug("Aborting incomplete transactional requests due to forced shutdown");

transactionManager.close();

}

log.debug("Aborting incomplete batches due to forced shutdown");

this.accumulator.abortIncompleteBatches();

}

try {

this.client.close();

} catch (Exception e) {

log.error("Failed to close network client", e);

}

log.debug("Shutdown of Kafka producer I/O thread has completed.");

}

void runOnce() {

if (transactionManager != null) {

try {

transactionManager.maybeResolveSequences();

// do not continue sending if the transaction manager is in a failed state

if (transactionManager.hasFatalError()) {

RuntimeException lastError = transactionManager.lastError();

if (lastError != null)

maybeAbortBatches(lastError);

client.poll(retryBackoffMs, time.milliseconds());

return;

}

// Check whether we need a new producerId. If so, we will enqueue an InitProducerId

// request which will be sent below

transactionManager.bumpIdempotentEpochAndResetIdIfNeeded();

if (maybeSendAndPollTransactionalRequest()) {

return;

}

} catch (AuthenticationException e) {

// This is already logged as error, but propagated here to perform any clean ups.

log.trace("Authentication exception while processing transactional request", e);

transactionManager.authenticationFailed(e);

}

}

long currentTimeMs = time.milliseconds();

long pollTimeout = sendProducerData(currentTimeMs);

client.poll(pollTimeout, currentTimeMs);

}

Next, look at sendProducerData:

private long sendProducerData(long now) {

Cluster cluster = metadata.fetch();

// get the list of partitions with data ready to send

RecordAccumulator.ReadyCheckResult result = this.accumulator.ready(cluster, now);

// if there are any partitions whose leaders are not known yet, force metadata update

if (!result.unknownLeaderTopics.isEmpty()) {

// The set of topics with unknown leader contains topics with leader election pending as well as

// topics which may have expired. Add the topic again to metadata to ensure it is included

// and request metadata update, since there are messages to send to the topic.

for (String topic : result.unknownLeaderTopics)

this.metadata.add(topic, now);

log.debug("Requesting metadata update due to unknown leader topics from the batched records: {}",

result.unknownLeaderTopics);

this.metadata.requestUpdate();

}

// remove any nodes we aren't ready to send to

Iterator<Node> iter = result.readyNodes.iterator();

long notReadyTimeout = Long.MAX_VALUE;

while (iter.hasNext()) {

Node node = iter.next();

if (!this.client.ready(node, now)) {

iter.remove();

notReadyTimeout = Math.min(notReadyTimeout, this.client.pollDelayMs(node, now));

}

}

// create produce requests

Map<Integer, List<ProducerBatch>> batches = this.accumulator.drain(cluster, result.readyNodes, this.maxRequestSize, now);

addToInflightBatches(batches);

if (guaranteeMessageOrder) {

// Mute all the partitions drained

for (List<ProducerBatch> batchList : batches.values()) {

for (ProducerBatch batch : batchList)

this.accumulator.mutePartition(batch.topicPartition);

}

}

accumulator.resetNextBatchExpiryTime();

List<ProducerBatch> expiredInflightBatches = getExpiredInflightBatches(now);

List<ProducerBatch> expiredBatches = this.accumulator.expiredBatches(now);

expiredBatches.addAll(expiredInflightBatches);

// Reset the producer id if an expired batch has previously been sent to the broker. Also update the metrics

// for expired batches. see the documentation of @TransactionState.resetIdempotentProducerId to understand why

// we need to reset the producer id here.

if (!expiredBatches.isEmpty())

log.trace("Expired {} batches in accumulator", expiredBatches.size());

for (ProducerBatch expiredBatch : expiredBatches) {

String errorMessage = "Expiring " + expiredBatch.recordCount + " record(s) for " + expiredBatch.topicPartition

+ ":" + (now - expiredBatch.createdMs) + " ms has passed since batch creation";

failBatch(expiredBatch, -1, NO_TIMESTAMP, new TimeoutException(errorMessage), false);

if (transactionManager != null && expiredBatch.inRetry()) {

// This ensures that no new batches are drained until the current in flight batches are fully resolved.

transactionManager.markSequenceUnresolved(expiredBatch);

}

}

sensors.updateProduceRequestMetrics(batches);

// If we have any nodes that are ready to send + have sendable data, poll with 0 timeout so this can immediately

// loop and try sending more data. Otherwise, the timeout will be the smaller value between next batch expiry

// time, and the delay time for checking data availability. Note that the nodes may have data that isn't yet

// sendable due to lingering, backing off, etc. This specifically does not include nodes with sendable data

// that aren't ready to send since they would cause busy looping.

long pollTimeout = Math.min(result.nextReadyCheckDelayMs, notReadyTimeout);

pollTimeout = Math.min(pollTimeout, this.accumulator.nextExpiryTimeMs() - now);

pollTimeout = Math.max(pollTimeout, 0);

if (!result.readyNodes.isEmpty()) {

log.trace("Nodes with data ready to send: {}", result.readyNodes);

// if some partitions are already ready to be sent, the select time would be 0;

// otherwise if some partition already has some data accumulated but not ready yet,

// the select time will be the time difference between now and its linger expiry time;

// otherwise the select time will be the time difference between now and the metadata expiry time;

pollTimeout = 0;

}

sendProduceRequests(batches, now);

return pollTimeout;

}

Now look at the ready method of RecordAccumulator:

public ReadyCheckResult ready(Cluster cluster, long nowMs) {

Set<Node> readyNodes = new HashSet<>();

long nextReadyCheckDelayMs = Long.MAX_VALUE;

Set<String> unknownLeaderTopics = new HashSet<>();

boolean exhausted = this.free.queued() > 0;

for (Map.Entry<TopicPartition, Deque<ProducerBatch>> entry : this.batches.entrySet()) {

Deque<ProducerBatch> deque = entry.getValue();

synchronized (deque) {

// When producing to a large number of partitions, this path is hot and deques are often empty.

// We check whether a batch exists first to avoid the more expensive checks whenever possible.

ProducerBatch batch = deque.peekFirst();

if (batch != null) {

TopicPartition part = entry.getKey();

Node leader = cluster.leaderFor(part);

if (leader == null) {

// This is a partition for which leader is not known, but messages are available to send.

// Note that entries are currently not removed from batches when deque is empty.

unknownLeaderTopics.add(part.topic());

} else if (!readyNodes.contains(leader) && !isMuted(part)) {

long waitedTimeMs = batch.waitedTimeMs(nowMs);

boolean backingOff = batch.attempts() > 0 && waitedTimeMs < retryBackoffMs;

long timeToWaitMs = backingOff ? retryBackoffMs : lingerMs;

boolean full = deque.size() > 1 || batch.isFull();

boolean expired = waitedTimeMs >= timeToWaitMs;

boolean sendable = full || expired || exhausted || closed || flushInProgress();

if (sendable && !backingOff) {

readyNodes.add(leader);

} else {

long timeLeftMs = Math.max(timeToWaitMs - waitedTimeMs, 0);

// Note that this results in a conservative estimate since an un-sendable partition may have

// a leader that will later be found to have sendable data. However, this is good enough

// since we'll just wake up and then sleep again for the remaining time.

nextReadyCheckDelayMs = Math.min(timeLeftMs, nextReadyCheckDelayMs);

}

}

}

}

}

return new ReadyCheckResult(readyNodes, nextReadyCheckDelayMs, unknownLeaderTopics);

}
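The readiness decision inside ready can be isolated as a pure function, which makes the interplay of linger time, retry backoff, and the "full" flag easier to follow. The sketch below mirrors the boolean logic of the listing above; the class name `ReadyCheck`, the method name, and the flattened parameter list are assumptions made for this example.

```java
// Illustrative extraction of the sendable check; names and parameters are hypothetical.
final class ReadyCheck {

    static boolean isSendable(boolean full,
                              long waitedTimeMs,
                              long lingerMs,
                              long retryBackoffMs,
                              int attempts,
                              boolean exhausted,
                              boolean closedOrFlushing) {
        // A batch that has already been tried must first sit out the retry backoff.
        boolean backingOff = attempts > 0 && waitedTimeMs < retryBackoffMs;
        long timeToWaitMs = backingOff ? retryBackoffMs : lingerMs;
        // "Expired" here means the batch has waited long enough, not that it timed out.
        boolean expired = waitedTimeMs >= timeToWaitMs;
        boolean sendable = full || expired || exhausted || closedOrFlushing;
        return sendable && !backingOff;
    }

    public static void main(String[] args) {
        // Fresh, non-full batch that has not lingered long enough yet.
        System.out.println(isSendable(false, 0, 5, 100, 0, false, false));
        // Same batch once the linger time has elapsed.
        System.out.println(isSendable(false, 5, 5, 100, 0, false, false));
    }
}
```

Note that a full batch is still held back while it is backing off after a failed attempt, so retries never bypass retry.backoff.ms.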

That covers the main flow. For the remaining details, read the source yourself.