python_day12_map

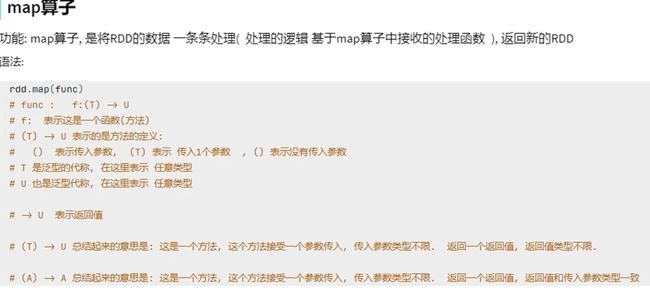

The map method (operator)

from pyspark import SparkConf, SparkContext

import os

# Point PySpark at the Python interpreter

os.environ['PYSPARK_PYTHON'] = "D:\\dev\\python\\python3.10.4\\python.exe"

# Create the SparkContext object

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

# Prepare an RDD

rdd_1 = sc.parallelize([1, 2, 3, 4, 5])

a. Multiply every element by 10 with map

def func(data):

    return data * 10

rdd_2 = rdd_1.map(func)

print(rdd_2.collect())

# Close the connection

sc.stop()

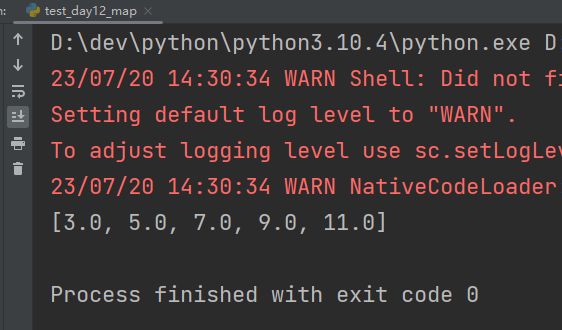
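To check the expected result without starting Spark, the same element-wise idea can be sketched with Python's built-in `map`. This is only a local analogue and an assumption for illustration, not how PySpark runs the job: `numbers`, `lazy_result`, and `local_result` are names invented here, and `list(...)` stands in for `collect()`.

```python
# Local analogue of rdd_1.map(func).collect(), using only the standard library.
def func(data):
    return data * 10

numbers = [1, 2, 3, 4, 5]          # stands in for rdd_1

# Like an RDD transformation, the built-in map is lazy:
# nothing is computed until the result is consumed.
lazy_result = map(func, numbers)

# list(...) plays the role of collect(): it forces the computation
# and gathers the results into an ordinary Python list.
local_result = list(lazy_result)
print(local_result)  # [10, 20, 30, 40, 50]
```

The function passed to `map` receives one element at a time and returns one element, so the output always has the same number of items as the input.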

b. Anonymous function (lambda) form, with chained calls

# Lambda form (chained calls); rdd_1 must be created as in part a, before sc.stop() is called

rdd_2 = rdd_1.map(lambda x: x * 10 + 5).map(lambda x: x / 5)

print(rdd_2.collect())

# Close the connection

sc.stop()
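The chained call applies the two lambdas in order to each element. A minimal plain-Python sketch of that arithmetic follows, assuming the same input `[1, 2, 3, 4, 5]`; the names `data`, `step_1`, `step_2`, `chained`, and `combined` are invented for illustration, and the built-in `map` is used here in place of the RDD method.

```python
# Local analogue of rdd_1.map(lambda x: x * 10 + 5).map(lambda x: x / 5).collect()
data = [1, 2, 3, 4, 5]                      # stands in for rdd_1

step_1 = map(lambda x: x * 10 + 5, data)    # 15, 25, 35, 45, 55
step_2 = map(lambda x: x / 5, step_1)       # "/" is true division, so floats
chained = list(step_2)
print(chained)  # [3.0, 5.0, 7.0, 9.0, 11.0]

# Chaining two maps gives the same values as one map
# that applies both steps in sequence.
combined = list(map(lambda x: (x * 10 + 5) / 5, data))
print(combined == chained)  # True
```

Because `/` always returns a float in Python 3, the result holds `3.0` rather than `3`; `//` would keep integers if that is what is wanted.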