基于OpenCV和KinectSDK实现Azure Kinect DK实时获取RGB、深度图像及红外图像、交互式数据采集将图片数据保存本地

基于OpenCV和KinectSDK实现Azure Kinect DK实时获取RGB、深度图像及红外图像、交互式数据采集将图片数据保存本地

- 基于OpenCV和KinectSDK实现Azure Kinect DK实时获取RGB、深度图像及红外图像、交互式数据采集将图片数据保存本地

- 1.开发环境

- 2.采集效果图

-

- 2.1采集界面

- 2.2RGB图像

- 2.3 深度图像

- 2.4红外图像

- 3.深度图计算原理

- 4.代码主要函数及其功能

-

- 4.1配置并启动Kinect设备

- 4.2声明数据结构

- 4.3创建txt文件流

- 4.4 循环获取每一帧的图像

- 4.5数据格式转换

- 4.6通过捕获键盘进行交互式数据采集

- 5.完整代码

-

- 5.1头文件ShowDep_Color.h

- 5.2ShowDep_Color.cpp

1.开发环境

-Visual Studio2017

-Microsoft.Azure.Kinect.Sensor1.4.1

-OpenCV3.4.1

-关于VS2017配置OpenCV环境的详细过程可以参考我的上一篇文章:

链接: VS2017逐步配置OpenCV教程

2.采集效果图

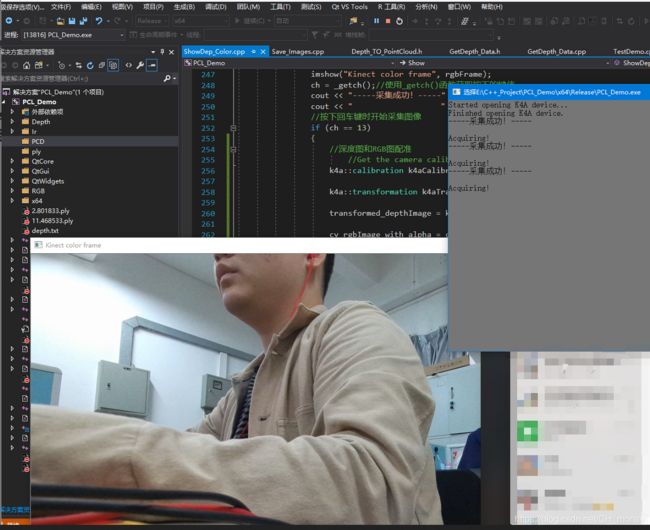

2.1采集界面

先上实际采集效果图,可以通过自定义按键控制其在Kinect视频流中交互式保存某一帧的彩色图、深度图、红外图数据,并将深度图的深度数据保存在ply文件中便于后续将开发。

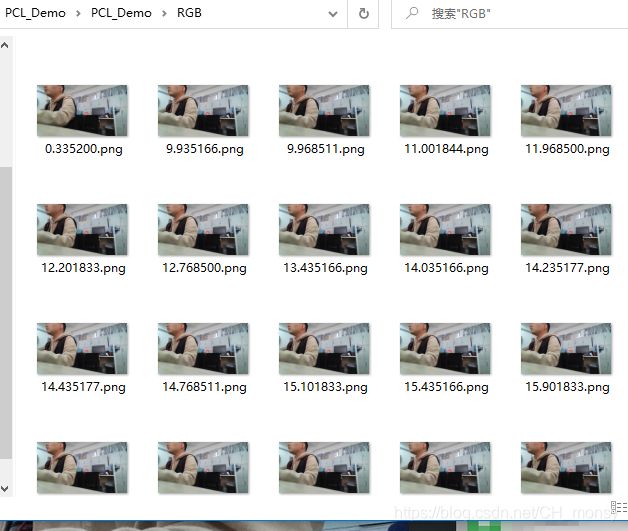

2.2RGB图像

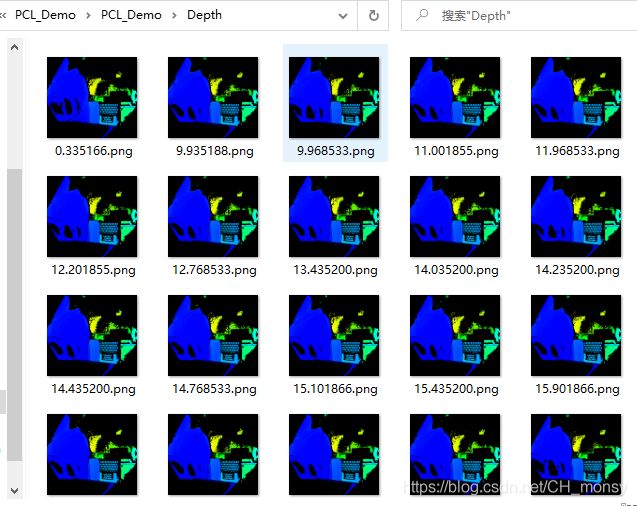

2.3 深度图像

2.4红外图像

3.深度图计算原理

这里列出几位大佬的文章,对于深度图的原理及计算方法讲解的非常详细:

链接1: 深度图像的获取原理

链接2: 有趣的深度图:可见性问题的解法

4.代码主要函数及其功能

4.1配置并启动Kinect设备

//这一代 Kinect 支持在一台电脑上打开多台 Kinect。可以用这句代码通过查询主机已连接的Kinet设备数来判断这台主机是否连接了 Kinect

const uint32_t deviceCount = k4a::device::get_installed_count();

if (deviceCount == 0)

{

cout << "未连接任何Kinect设备!" << endl;

}

//配置并启动设备

k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

//设置Kinect的相机帧率为30FPS

config.camera_fps = K4A_FRAMES_PER_SECOND_30;

//设置Kinect的深度模式为Near FOV unbinned(这一代 Kinect 支持多种深度模式,官方文档推荐使用 K4A_DEPTH_MODE_NFOV_UNBINNED 和 K4A_DEPTH_MODE_WFOV_2X2BINNED 这两种深度模式)

config.depth_mode = K4A_DEPTH_MODE_NFOV_UNBINNED;

//设置Kinect的颜色属性为BGRA32

config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;

//设置color图的分辨率位 720p

config.color_resolution = K4A_COLOR_RESOLUTION_720P;

//为了同时获取depth和color图,保持这两种图像是同步的

config.synchronized_images_only = true;

cout << "Started opening K4A device..." << endl;

k4a::device device = k4a::device::open(K4A_DEVICE_DEFAULT);

device.start_cameras(&config);

cout << "Finished opening K4A device." << endl;

4.2声明数据结构

Kinect 表示图像(RGB / IR / Depth)的内置数据结构 k4a::image。同时使用 cv::Mat数据结构,以便利用 OpenCV 的imshow函数来实时示。

k4a::image depthImage;

k4a::image rgbImage;

k4a::image irImage;

k4a::image transformed_depthImage;

cv::Mat cv_rgbImage_with_alpha;

cv::Mat cv_rgbImage_no_alpha;

cv::Mat cv_rgbImage_8U;

cv::Mat cv_depth;

cv::Mat cv_depth_8U;

cv::Mat cv_irImage;

cv::Mat cv_irImage_8U;

//声明一个Kinect的capture

k4a::capture capture;

cv::Mat depthFrame;

cv::Mat rgbFrame;

cv::Mat irFrame;

4.3创建txt文件流

通过txt文件来存储已获取的图片数据的数量及名称

// An highlighted block

//写入txt文件流

ofstream rgb_out;

ofstream d_out;

ofstream ir_out;

rgb_out.open("./rgb.txt");

d_out.open("./depth.txt");

ir_out.open("./ir.txt");

rgb_out << "# color images" << endl;

rgb_out << "# file: rgbd_dataset" << endl;

rgb_out << "# timestamp" << " " << "filename" << endl;

d_out << "# depth images" << endl;

d_out << "# file: rgbd_dataset" << endl;

d_out << "# timestamp" << " " << "filename" << endl;

ir_out << "# ir images" << endl;

ir_out << "# file: rgbd_dataset" << endl;

ir_out << "# timestamp" << " " << "filename" << endl;

rgb_out << flush;

d_out << flush;

4.4 循环获取每一帧的图像

使用 dev.get_capture 函数得到 capture 对象,并设置一个 blocking 时间,表示每隔一个 blocking 时间就获取一次数据,如果这个时间设置为 0 ,表示不需要间隔时间,来一帧获取一帧,并通过相对应的函数来得到相对应格式的图像数据。

while (1)

{

if (dev.get_capture(&capture, std::chrono::milliseconds(0)))

{

k4a::image depthImage = capture.get_depth_image();

k4a::image colorImage = capture.get_color_image();

k4a::image irImage = capture.get_ir_image();

}

}

4.5数据格式转换

ColorizeDepthImage(depthImage,DepthPixelColorizer::ColorizeBlueToRed,GetDepthModeRange(config.depth_mode), &depthTextureBuffer);

ColorizeDepthImage(irImage,DepthPixelColorizer::ColorizeGreyscale,GetIrLevels(K4A_DEPTH_MODE_PASSIVE_IR), &irTextureBuffer);

colorTextureBuffer = rgbImage.get_buffer();

//从设备中获取每一帧的彩色图、深度图及红外图数据

depthFrame = cv::Mat(depthImage.get_height_pixels(), depthImage.get_width_pixels(), CV_8UC4, depthTextureBuffer.data());

rgbFrame = cv::Mat(rgbImage.get_height_pixels(), rgbImage.get_width_pixels(), CV_8UC4, colorTextureBuffer);

irFrame = cv::Mat(irImage.get_height_pixels(), irImage.get_width_pixels(), CV_8UC4, irTextureBuffer.data());

//将k4A定义的数据格式转换为OpenCV可显示的数据格式

depthFrame.convertTo(cv_depth_8U, CV_8U, 1);

rgbFrame.convertTo(cv_rgbImage_8U, CV_8U, 1);

irFrame.convertTo(cv_irImage_8U, CV_8U, 1);

//根据需求显示相应的输出窗口

//cv::imshow("Kinect depth map", depthFrame);

cv::imshow("Kinect color frame", rgbFrame);

//cv::imshow("Kinect color frame", cv_rgbImage_8U);

//cv::imshow("Kinect ir frame", irFrame);

4.6通过捕获键盘进行交互式数据采集

if (_kbhit()) {//如果有按键按下,则_kbhit()函数返回真

imshow("Kinect color frame", rgbFrame);

ch = _getch();//使用_getch()函数获取按下的键值

cout << "-----采集成功!-----" << endl;

cout << " " << endl;

//按下回车键时开始采集图像

if (ch == 13)

{

//深度图和RGB图配准

//Get the camera calibration for the entire K4A device, which is used for all transformation functions.

k4a::calibration k4aCalibration = device.get_calibration(config.depth_mode, config.color_resolution);

k4a::transformation k4aTransformation = k4a::transformation(k4aCalibration);

transformed_depthImage =k4aTransformation.depth_image_to_color_camera(depthImage);

cv_rgbImage_with_alphacv::Mat(rgbImage.get_height_pixels(),rgbImage.get_width_pixels(), CV_8UC4,(void *)rgbImage.get_buffer());

cv::cvtColor(cv_rgbImage_with_alpha, cv_rgbImage_no_alpha, cv::COLOR_BGRA2BGR);

cv_depth = cv::Mat(transformed_depthImage.get_height_pixels(), transformed_depthImage.get_width_pixels(), CV_16U,(void *)transformed_depthImage.get_buffer(), static_cast<size_t>(transformed_depthImage.get_stride_bytes()));

normalize(cv_depth, cv_depth_8U, 0, 256 * 256, NORM_MINMAX);

cv_depth_8U.convertTo(cv_depth, CV_8U, 1);

cv_irImage = cv::Mat(irImage.get_height_pixels(), irImage.get_width_pixels(), CV_16U,(void *)irImage.get_buffer(), static_cast<size_t>(irImage.get_stride_bytes()));

normalize(cv_irImage, cv_irImage_8U, 0, 256 * 256, NORM_MINMAX);

cv_irImage.convertTo(cv_irImage_8U, CV_8U, 1);

//save image

double time_rgb = static_cast<double(std::chrono::duration_cast<std::chrono::microseconds>(

rgbImage.get_device_timestamp()).count());

std::string filename_rgb = std::to_string(time_rgb / 1000000) + ".png";

double time_d = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

depthImage.get_device_timestamp()).count());

std::string filename_d = std::to_string(time_d / 1000000) + ".png";

double time_ir = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

irImage.get_device_timestamp()).count());

std::string filename_ir = std::to_string(time_ir / 1000000) + ".png";

imwrite("./RGB/" + filename_rgb, cv_rgbImage_8U);

imwrite("./Depth/" + filename_d, cv_depth_8U);

imwrite("./Ir/" + filename_ir, cv_irImage_8U);

k4a_image_t xy_table = NULL;

k4a_image_t point_cloud = NULL;

int point_count = 0;

double time_point = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

rgbImage.get_device_timestamp()).count());

std::string filename_point = "ply/" + std::to_string(time_point / 1000000) + ".ply";

k4a_image_create(K4A_IMAGE_FORMAT_CUSTOM,

k4aCalibration.depth_camera_calibration.resolution_width,

k4aCalibration.depth_camera_calibration.resolution_height,

k4aCalibration.depth_camera_calibration.resolution_width * (int)sizeof(k4a_float2_t),&xy_table);

create_xy_table(&k4aCalibration, xy_table);

k4a_image_create(K4A_IMAGE_FORMAT_CUSTOM,

k4aCalibration.depth_camera_calibration.resolution_width,

k4aCalibration.depth_camera_calibration.resolution_height,

k4aCalibration.depth_camera_calibration.resolution_width*(int)sizeof(k4a_float3_t),&point_cloud);

if (depthImage == 0)

{

printf("Failed to get depth image from capture\n");

}

generate_point_cloud(depthImage, xy_table, point_cloud, &point_count);

write_point_cloud(filename_point.c_str(), point_cloud, point_count);

k4a_image_release(xy_table);

k4a_image_release(point_cloud);

std::cout << "Acquiring!" << endl;

//写入depth.txt, rgb.txt文件

rgb_out << std::to_string(time_rgb / 1000000) << " " << "rgb/" << filename_rgb << endl;

d_out << std::to_string(time_d / 1000000) << " " << "depth/" << filename_d << endl;

ir_out << std::to_string(time_ir / 1000000) << " " << "ir/" << filename_ir << endl;

imshow("Kinect color frame", rgbFrame);

}

}

if (waitKey(30) == 27 || waitKey(30) == 'q')

{

device.close();

std::cout << "----------------------------------" << std::endl;

std::cout << "------------- closed -------------" << std::endl;

std::cout << "----------------------------------" << std::endl;

break;

}

5.完整代码

5.1头文件ShowDep_Color.h

#pragma once

#pragma once

#include <utility>

#include <k4a/k4a.hpp>

#include "Pixel.h"

#include<iostream>

#include<vector>

#include<array>

#include <fstream>

#include <chrono>

#include <string>

#include <math.h>

#include <sstream>

#include<k4a/k4a.hpp>

#include<k4a/k4atypes.h>

#include <opencv2/opencv.hpp>

#include<opencv2/core/core.hpp>

#include<opencv2/highgui/highgui.hpp>

#include<pcl/io/pcd_io.h>

#include<pcl/point_cloud.h>

#include<pcl/point_types.h>

#include<pcl/visualization/pcl_visualizer.h>

#include "Pixel.h"

#include "DepthPixelColorizer.h"

#include "StaticImageProperties.h"

#include "k4a_grabber.h"

#include <conio.h>

#pragma comment(lib, "User32.lib")

#pragma comment(lib, "gdi32.lib")

using namespace std;

using namespace cv;

using namespace sen;

//宏

//方便控制是否 std::cout 信息

#define DEBUG_std_cout 0

namespace Show

{

void ShowDep_Color();

void create_xy_table(const k4a_calibration_t *calibration, k4a_image_t xy_table);

void generate_point_cloud(const k4a::image depth_image, const k4a_image_t xy_table, k4a_image_t point_cloud, int *point_count);

void write_point_cloud(const char *file_name, const k4a_image_t point_cloud, int point_count);

}

5.2ShowDep_Color.cpp

#include "ShowDep_Color.h"

void Show::create_xy_table(const k4a_calibration_t *calibration, k4a_image_t xy_table)

{

k4a_float2_t *table_data = (k4a_float2_t *)(void *)k4a_image_get_buffer(xy_table);

int width = calibration->depth_camera_calibration.resolution_width;

int height = calibration->depth_camera_calibration.resolution_height;

k4a_float2_t p;

k4a_float3_t ray;

int valid;

for (int y = 0, idx = 0; y < height; y++)

{

p.xy.y = (float)y;

for (int x = 0; x < width; x++, idx++)

{

p.xy.x = (float)x;

k4a_calibration_2d_to_3d(

calibration, &p, 1.f, K4A_CALIBRATION_TYPE_DEPTH, K4A_CALIBRATION_TYPE_DEPTH, &ray, &valid);

if (valid)

{

table_data[idx].xy.x = ray.xyz.x;

table_data[idx].xy.y = ray.xyz.y;

}

else

{

table_data[idx].xy.x = nanf("");

table_data[idx].xy.y = nanf("");

}

}

}

}

void Show::generate_point_cloud(const k4a::image depth_image, const k4a_image_t xy_table, k4a_image_t point_cloud, int *point_count)

{

int width = depth_image.get_width_pixels();

int height = depth_image.get_height_pixels();

//int height = k4a_image_get_height_pixels(depth_image);

uint16_t *depth_data = (uint16_t *)(void *)depth_image.get_buffer();

k4a_float2_t *xy_table_data = (k4a_float2_t *)(void *)k4a_image_get_buffer(xy_table);

k4a_float3_t *point_cloud_data = (k4a_float3_t *)(void *)k4a_image_get_buffer(point_cloud);

*point_count = 0;

for (int i = 0; i < width * height; i++)

{

if (depth_data[i] != 0 && !isnan(xy_table_data[i].xy.x) && !isnan(xy_table_data[i].xy.y))

{

point_cloud_data[i].xyz.x = xy_table_data[i].xy.x * (float)depth_data[i];

point_cloud_data[i].xyz.y = xy_table_data[i].xy.y * (float)depth_data[i];

point_cloud_data[i].xyz.z = (float)depth_data[i];

(*point_count)++;

}

else

{

point_cloud_data[i].xyz.x = nanf("");

point_cloud_data[i].xyz.y = nanf("");

point_cloud_data[i].xyz.z = nanf("");

}

}

}

void Show::write_point_cloud(const char *file_name, const k4a_image_t point_cloud, int point_count)

{

int width = k4a_image_get_width_pixels(point_cloud);

int height = k4a_image_get_height_pixels(point_cloud);

k4a_float3_t *point_cloud_data = (k4a_float3_t *)(void *)k4a_image_get_buffer(point_cloud);

//save to the ply file

std::ofstream ofs(file_name); // text mode first

ofs << "ply" << std::endl;

ofs << "format ascii 1.0" << std::endl;

ofs << "element vertex"

<< " " << point_count << std::endl;

ofs << "property float x" << std::endl;

ofs << "property float y" << std::endl;

ofs << "property float z" << std::endl;

ofs << "end_header" << std::endl;

ofs.close();

std::stringstream ss;

for (int i = 0; i < width * height; i++)

{

if (isnan(point_cloud_data[i].xyz.x) || isnan(point_cloud_data[i].xyz.y) || isnan(point_cloud_data[i].xyz.z))

{

continue;

}

ss << (float)point_cloud_data[i].xyz.x << " " << (float)point_cloud_data[i].xyz.y << " "

<< (float)point_cloud_data[i].xyz.z << std::endl;

}

std::ofstream ofs_text(file_name, std::ios::out | std::ios::app);

ofs_text.write(ss.str().c_str(), (std::streamsize)ss.str().length());

}

void Show::ShowDep_Color(){

//这一代 Kinect 支持在一台电脑上打开多台 Kinect。可以用这句代码通过查询主机已连接的Kinet设备数来判断这台主机是否连接了 Kinect

const uint32_t deviceCount = k4a::device::get_installed_count();

if (deviceCount == 0)

{

cout << "未连接任何Kinect设备!" << endl;

}

//配置并启动设备

k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

//设置Kinect的相机帧率为30FPS

config.camera_fps = K4A_FRAMES_PER_SECOND_30;

//设置Kinect的深度模式为Near FOV unbinned(这一代 Kinect 支持多种深度模式,官方文档推荐使用 K4A_DEPTH_MODE_NFOV_UNBINNED 和 K4A_DEPTH_MODE_WFOV_2X2BINNED 这两种深度模式)

config.depth_mode = K4A_DEPTH_MODE_NFOV_UNBINNED;

//设置Kinect的颜色属性为BGRA32

config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;

//设置color图的分辨率位 720p

config.color_resolution = K4A_COLOR_RESOLUTION_720P;

//为了同时获取depth和color图,保持这两种图像是同步的

config.synchronized_images_only = true;

cout << "Started opening K4A device..." << endl;

k4a::device device = k4a::device::open(K4A_DEVICE_DEFAULT);

device.start_cameras(&config);

cout << "Finished opening K4A device." << endl;

std::vector<Pixel> depthTextureBuffer;

std::vector<Pixel> irTextureBuffer;

uint8_t *colorTextureBuffer;

//写入txt文件流

ofstream rgb_out;

ofstream d_out;

ofstream ir_out;

rgb_out.open("./rgb.txt");

d_out.open("./depth.txt");

ir_out.open("./ir.txt");

rgb_out << "# color images" << endl;

rgb_out << "# file: rgbd_dataset" << endl;

rgb_out << "# timestamp" << " " << "filename" << endl;

d_out << "# depth images" << endl;

d_out << "# file: rgbd_dataset" << endl;

d_out << "# timestamp" << " " << "filename" << endl;

ir_out << "# ir images" << endl;

ir_out << "# file: rgbd_dataset" << endl;

ir_out << "# timestamp" << " " << "filename" << endl;

rgb_out << flush;

d_out << flush;

//从设备获取捕获图像

k4a::image depthImage;

k4a::image rgbImage;

k4a::image irImage;

k4a::image transformed_depthImage;

cv::Mat cv_rgbImage_with_alpha;

cv::Mat cv_rgbImage_no_alpha;

cv::Mat cv_rgbImage_8U;

cv::Mat cv_depth;

cv::Mat cv_depth_8U;

cv::Mat cv_irImage;

cv::Mat cv_irImage_8U;

//声明一个Kinect的capture

k4a::capture capture;

cv::Mat depthFrame;

cv::Mat rgbFrame;

cv::Mat irFrame;

int ch;

/*

检索并保存 Azure Kinect 图像数据

*/

k4a_device_t device_Record = NULL;

while (1) {

if (device.get_capture(&capture, std::chrono::milliseconds(0)))

{

//通过对应的函数获得相应格式的图像数据

depthImage = capture.get_depth_image();

rgbImage = capture.get_color_image();

irImage = capture.get_ir_image();

ColorizeDepthImage(depthImage, DepthPixelColorizer::ColorizeBlueToRed, GetDepthModeRange(config.depth_mode), &depthTextureBuffer);

ColorizeDepthImage(irImage, DepthPixelColorizer::ColorizeGreyscale, GetIrLevels(K4A_DEPTH_MODE_PASSIVE_IR), &irTextureBuffer);

colorTextureBuffer = rgbImage.get_buffer();

depthFrame = cv::Mat(depthImage.get_height_pixels(), depthImage.get_width_pixels(), CV_8UC4, depthTextureBuffer.data());

rgbFrame = cv::Mat(rgbImage.get_height_pixels(), rgbImage.get_width_pixels(), CV_8UC4, colorTextureBuffer);

irFrame = cv::Mat(irImage.get_height_pixels(), irImage.get_width_pixels(), CV_8UC4, irTextureBuffer.data());

depthFrame.convertTo(cv_depth_8U, CV_8U, 1);

rgbFrame.convertTo(cv_rgbImage_8U, CV_8U, 1);

irFrame.convertTo(cv_irImage_8U, CV_8U, 1);

//imshow("Kinect depth map", depthFrame);

cv::imshow("Kinect color frame", rgbFrame);

//imshow("Kinect color frame", cv_rgbImage_8U);

//imshow("Kinect ir frame", irFrame);

if (_kbhit()) {//如果有按键按下,则_kbhit()函数返回真

imshow("Kinect color frame", rgbFrame);

ch = _getch();//使用_getch()函数获取按下的键值

cout << "-----采集成功!-----" << endl;

cout << " " << endl;

//按下回车键时开始采集图像

if (ch == 13)

{

//深度图和RGB图配准

//Get the camera calibration for the entire K4A device, which is used for all transformation functions.

k4a::calibration k4aCalibration = device.get_calibration(config.depth_mode, config.color_resolution);

k4a::transformation k4aTransformation = k4a::transformation(k4aCalibration);

transformed_depthImage = k4aTransformation.depth_image_to_color_camera(depthImage);

cv_rgbImage_with_alpha = cv::Mat(rgbImage.get_height_pixels(), rgbImage.get_width_pixels(), CV_8UC4,

(void *)rgbImage.get_buffer());

cv::cvtColor(cv_rgbImage_with_alpha, cv_rgbImage_no_alpha, cv::COLOR_BGRA2BGR);

cv_depth = cv::Mat(transformed_depthImage.get_height_pixels(), transformed_depthImage.get_width_pixels(), CV_16U,

(void *)transformed_depthImage.get_buffer(), static_cast<size_t>(transformed_depthImage.get_stride_bytes()));

normalize(cv_depth, cv_depth_8U, 0, 256 * 256, NORM_MINMAX);

cv_depth_8U.convertTo(cv_depth, CV_8U, 1);

cv_irImage = cv::Mat(irImage.get_height_pixels(), irImage.get_width_pixels(), CV_16U,

(void *)irImage.get_buffer(), static_cast<size_t>(irImage.get_stride_bytes()));

normalize(cv_irImage, cv_irImage_8U, 0, 256 * 256, NORM_MINMAX);

cv_irImage.convertTo(cv_irImage_8U, CV_8U, 1);

//imshow("Kinect color frame", rgbFrame);

//save image

double time_rgb = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

rgbImage.get_device_timestamp()).count());

std::string filename_rgb = std::to_string(time_rgb / 1000000) + ".png";

double time_d = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

depthImage.get_device_timestamp()).count());

std::string filename_d = std::to_string(time_d / 1000000) + ".png";

double time_ir = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

irImage.get_device_timestamp()).count());

std::string filename_ir = std::to_string(time_ir / 1000000) + ".png";

imwrite("./RGB/" + filename_rgb, cv_rgbImage_8U);

imwrite("./Depth/" + filename_d, cv_depth_8U);

imwrite("./Ir/" + filename_ir, cv_irImage_8U);

k4a_image_t xy_table = NULL;

k4a_image_t point_cloud = NULL;

int point_count = 0;

double time_point = static_cast<double>(std::chrono::duration_cast<std::chrono::microseconds>(

rgbImage.get_device_timestamp()).count());

std::string filename_point = "ply/" + std::to_string(time_point / 1000000) + ".ply";

k4a_image_create(K4A_IMAGE_FORMAT_CUSTOM,

k4aCalibration.depth_camera_calibration.resolution_width,

k4aCalibration.depth_camera_calibration.resolution_height,

k4aCalibration.depth_camera_calibration.resolution_width * (int)sizeof(k4a_float2_t),

&xy_table);

create_xy_table(&k4aCalibration, xy_table);

k4a_image_create(K4A_IMAGE_FORMAT_CUSTOM,

k4aCalibration.depth_camera_calibration.resolution_width,

k4aCalibration.depth_camera_calibration.resolution_height,

k4aCalibration.depth_camera_calibration.resolution_width * (int)sizeof(k4a_float3_t),

&point_cloud);

if (depthImage == 0)

{

printf("Failed to get depth image from capture\n");

}

generate_point_cloud(depthImage, xy_table, point_cloud, &point_count);

write_point_cloud(filename_point.c_str(), point_cloud, point_count);

k4a_image_release(xy_table);

k4a_image_release(point_cloud);

std::cout << "Acquiring!" << endl;

//写入depth.txt, rgb.txt文件

rgb_out << std::to_string(time_rgb / 1000000) << " " << "rgb/" << filename_rgb << endl;

d_out << std::to_string(time_d / 1000000) << " " << "depth/" << filename_d << endl;

ir_out << std::to_string(time_ir / 1000000) << " " << "ir/" << filename_ir << endl;

imshow("Kinect color frame", rgbFrame);

}

}

}

if (waitKey(30) == 27 || waitKey(30) == 'q')

{

device.close();

std::cout << "----------------------------------" << std::endl;

std::cout << "------------- closed -------------" << std::endl;

std::cout << "----------------------------------" << std::endl;

break;

}

}

}