2021年Linux技术总结(二):kernel

一、内核简介

简介并没有讲Linux内核的历史故事,只是做了Linux 内核框架的描述,方便从大局来看整个内核部分,这样可以快速了解内核的功能。

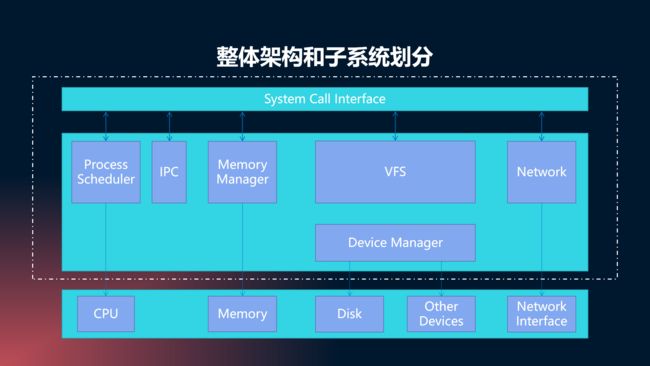

1.1 Linux 内核图

首先对Linux kernel的整体框架有一个大致的了解,方框内是Linux kernel,下方是硬件设备。

接下来,是一个更加详细的图,这个图是makelinux网站提供的一幅非常经典的Linux内核图,涵盖了内核最为核心的方法. Linux除了驱动开发外,还有很多通用子系统,比如CPU, memory, file system等核心模块,即便不做底层驱动开发, 掌握这些模块对于加深理解整个系统运转机制还是很有帮助。下面是源网站,可以对Linux kernel map内容做详细的了解:https://makelinux.github.io/kernel/map/

1.2 Linux kernel五大子系统

现代计算机(无论是PC还是嵌入式系统)的标准组成,就是CPU、Memory(内存和外存)、输入输出设备、网络设备和其它的外围设备。所以为了管理这些设备,Linux内核提出了5个子系统:

- Process Scheduler,也称作进程管理、进程调度,负责管理CPU资源,以便让各个进程可以以尽量公平的方式访问CPU

- Memory Manager,内存管理。负责管理Memory(内存)资源,内存管理会提供虚拟内存的机制

- VFS(Virtual File System),虚拟文件系统,Linux内核将不同功能的外部设备抽象为可以通过统一的文件操作接口(open、close、read、write等)来访问

- Network,网络子系统。负责管理系统的网络设备,并实现多种多样的网络标准

- IPC(Inter-Process Communication),进程间通信。IPC不管理任何的硬件,它主要负责Linux系统中进程之间的通信

1.3 Kernel源码目录结构

对Linux kernel 框架做一个整体了解后,还需要熟悉一个Linux kernel目录结构,这对Linux 是非常重要的,Linux的思想便是一切皆文件。

include/ ---- 内核头文件,需要提供给外部模块(例如用户空间代码)使用。

kernel/ ---- Linux内核的核心代码,包含了3.2小节所描述的进程调度子系统,以及和进程调度相关的模块。

mm/ ---- 内存管理子系统(3.3小节)。

fs/ ---- VFS子系统(3.4小节)。

net/ ---- 不包括网络设备驱动的网络子系统(3.5小节)。

ipc/ ---- IPC(进程间通信)子系统。

arch/ ---- 体系结构相关的代码,例如arm, x86等等。

arch/mach/ ---- 具体的machine/board相关的代码。

arch/include/asm/ ---- 体系结构相关的头文件。

arch/boot/dts ---- 设备树(Device Tree)文件。

init/ ---- Linux系统启动初始化相关的代码。

block/ ---- 提供块设备的层次。

sound/ ---- 音频相关的驱动及子系统,可以看作“音频子系统”。

drivers/ ---- 设备驱动(在Linux kernel 3.10中,设备驱动占了49.4的代码量)。

lib/ ---- 实现需要在内核中使用的库函数,例如CRC、FIFO、list、MD5等。

crypto/ ----- 加密、解密相关的库函数。

security/ ---- 提供安全特性(SELinux)。

virt/ ---- 提供虚拟机技术(KVM等)的支持。

usr/ ---- 用于生成initramfs的代码。

firmware/ ---- 保存用于驱动第三方设备的固件。

samples/ ---- 一些示例代码。

tools/ ---- 一些常用工具,如性能剖析、自测试等。

Kconfig, Kbuild, Makefile, scripts/ ---- 用于内核编译的配置文件、脚本等。

COPYING ---- 版权声明。

MAINTAINERS ----维护者名单。

CREDITS ---- Linux主要的贡献者名单。

REPORTING-BUGS ---- Bug上报的指南。

Documentation, README ---- 帮助、说明文档。

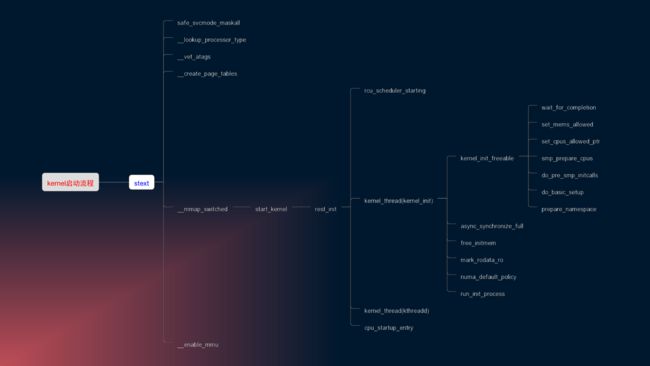

二、kernel启动流程

下面分析一下Linux内核的启动流程,熟悉Linux内核的启动流程,会在一定程度上加深对Linux kernel的结构理解。

Linux kernel 启动流程,大的方向上是:

- 进入入口函数stext(老版本的是kernel_entry);

- 信息验证,如CPU、设备树、

- 初始化内核配置;

- 调用init进程,内核转至用户态;

- 初始化内核、释放内存、调整内存策略;

- 配置设备树、初始化驱动和驱动子系统、挂载根文件系统;

- 使能MMC

2.1 入口函数

通过arch/arm/kernel/vmlinux.lds脚本链接文件查看Linux kernel内核入口时stext,然后我们通过搜索得知,stext定义在arch/arm/kernel/head.S中,函数代码如下:

ENTRY(stext)

ARM_BE8(setend be ) @ ensure we are in BE8 mode

THUMB( adr r9, BSYM(1f) ) @ Kernel is always entered in ARM.

THUMB( bx r9 ) @ If this is a Thumb-2 kernel,

THUMB( .thumb ) @ switch to Thumb now.

THUMB(1: )

#ifdef CONFIG_ARM_VIRT_EXT

bl __hyp_stub_install

#endif

@ ensure svc mode and all interrupts masked

safe_svcmode_maskall r9

mrc p15, 0, r9, c0, c0 @ get processor id

bl __lookup_processor_type @ r5=procinfo r9=cpuid

movs r10, r5 @ invalid processor (r5=0)?

THUMB( it eq ) @ force fixup-able long branch encoding

beq __error_p @ yes, error 'p'

#ifdef CONFIG_ARM_LPAE

mrc p15, 0, r3, c0, c1, 4 @ read ID_MMFR0

and r3, r3, #0xf @ extract VMSA support

cmp r3, #5 @ long-descriptor translation table format?

THUMB( it lo ) @ force fixup-able long branch encoding

blo __error_lpae @ only classic page table format

#endif

#ifndef CONFIG_XIP_KERNEL

adr r3, 2f

ldmia r3, {r4, r8}

sub r4, r3, r4 @ (PHYS_OFFSET - PAGE_OFFSET)

add r8, r8, r4 @ PHYS_OFFSET

#else

ldr r8, =PLAT_PHYS_OFFSET @ always constant in this case

#endif

bl __vet_atags

#ifdef CONFIG_SMP_ON_UP

bl __fixup_smp

#endif

#ifdef CONFIG_ARM_PATCH_PHYS_VIRT

bl __fixup_pv_table

#endif

bl __create_page_tables

/*

* The following calls CPU specific code in a position independent

* manner. See arch/arm/mm/proc-*.S for details. r10 = base of

* xxx_proc_info structure selected by __lookup_processor_type

* above. On return, the CPU will be ready for the MMU to be

* turned on, and r0 will hold the CPU control register value.

*/

ldr r13, =__mmap_switched @ address to jump to after

@ mmu has been enabled

adr lr, BSYM(1f) @ return (PIC) address

mov r8, r4 @ set TTBR1 to swapper_pg_dir

ldr r12, [r10, #PROCINFO_INITFUNC]

add r12, r12, r10

ret r12

1: b __enable_mmu

ENDPROC(stext)

通过注释,我们可以知道,进入内核的前提是:MMU = off, D-cache = off, I-cache = dont care, r0 = 0, r1 = machine nr, r2 = atags or dtb pointer.MMU、D-cache关闭,I-cache随意,r0是0,ri保存正确的机械ID,r2保存设备树首地址(atags不知道啥)。

然后stext流程便是:

- 调用safe_svcmode_maskall ,进入svc模式,关闭所有中断;

- 调用__lookup_processor_type确定该内核与CPU是否兼容,兼容的话获取procinfo信息;

- 调用 __vet_atags 验证设备树;

- 调用__create_page_tables创建页表;

- 调用__mmap_switched函数,保存返回地址;

- 最后调用 __enable_mmu函数使能 MMC。

顺便提下__mmap_switched函数,因为它最终会调用start_kernel函数,代码在arch/arm/kernel/head-common.S中,内容如下:

__mmap_switched:

adr r3, __mmap_switched_data

ldmia r3!, {r4, r5, r6, r7}

cmp r4, r5 @ Copy data segment if needed

1: cmpne r5, r6

ldrne fp, [r4], #4

strne fp, [r5], #4

bne 1b

mov fp, #0 @ Clear BSS (and zero fp)

1: cmp r6, r7

strcc fp, [r6],#4

bcc 1b

ARM( ldmia r3, {r4, r5, r6, r7, sp})

THUMB( ldmia r3, {r4, r5, r6, r7} )

THUMB( ldr sp, [r3, #16] )

str r9, [r4] @ Save processor ID

str r1, [r5] @ Save machine type

str r2, [r6] @ Save atags pointer

cmp r7, #0

strne r0, [r7] @ Save control register values

b start_kernel

ENDPROC(__mmap_switched)

2.2 启动内核

在倒数第二行,调用了start_kernel函数,start_kernel函数定义在文件 init/main.c,它会调用很多函数完成Linux的启动工作,先附上代码,以后再细说:

asmlinkage __visible void __init start_kernel(void)

{

char *command_line;

char *after_dashes;

/*

* Need to run as early as possible, to initialize the

* lockdep hash:

*/

lockdep_init();

set_task_stack_end_magic(&init_task);

smp_setup_processor_id();

debug_objects_early_init();

/*

* Set up the the initial canary ASAP:

*/

boot_init_stack_canary();

cgroup_init_early();

local_irq_disable();

early_boot_irqs_disabled = true;

/*

* Interrupts are still disabled. Do necessary setups, then

* enable them

*/

boot_cpu_init();

page_address_init();

pr_notice("%s", linux_banner);

setup_arch(&command_line);

mm_init_cpumask(&init_mm);

setup_command_line(command_line);

setup_nr_cpu_ids();

setup_per_cpu_areas();

smp_prepare_boot_cpu(); /* arch-specific boot-cpu hooks */

build_all_zonelists(NULL, NULL);

page_alloc_init();

pr_notice("Kernel command line: %s\n", boot_command_line);

parse_early_param();

after_dashes = parse_args("Booting kernel",

static_command_line, __start___param,

__stop___param - __start___param,

-1, -1, &unknown_bootoption);

if (!IS_ERR_OR_NULL(after_dashes))

parse_args("Setting init args", after_dashes, NULL, 0, -1, -1,

set_init_arg);

jump_label_init();

/*

* These use large bootmem allocations and must precede

* kmem_cache_init()

*/

setup_log_buf(0);

pidhash_init();

vfs_caches_init_early();

sort_main_extable();

trap_init();

mm_init();

/*

* Set up the scheduler prior starting any interrupts (such as the

* timer interrupt). Full topology setup happens at smp_init()

* time - but meanwhile we still have a functioning scheduler.

*/

sched_init();

/*

* Disable preemption - early bootup scheduling is extremely

* fragile until we cpu_idle() for the first time.

*/

preempt_disable();

if (WARN(!irqs_disabled(),

"Interrupts were enabled *very* early, fixing it\n"))

local_irq_disable();

idr_init_cache();

rcu_init();

/* trace_printk() and trace points may be used after this */

trace_init();

context_tracking_init();

radix_tree_init();

/* init some links before init_ISA_irqs() */

early_irq_init();

init_IRQ();

tick_init();

rcu_init_nohz();

init_timers();

hrtimers_init();

softirq_init();

timekeeping_init();

time_init();

sched_clock_postinit();

perf_event_init();

profile_init();

call_function_init();

WARN(!irqs_disabled(), "Interrupts were enabled early\n");

early_boot_irqs_disabled = false;

local_irq_enable();

kmem_cache_init_late();

/*

* HACK ALERT! This is early. We're enabling the console before

* we've done PCI setups etc, and console_init() must be aware of

* this. But we do want output early, in case something goes wrong.

*/

console_init();

if (panic_later)

panic("Too many boot %s vars at `%s'", panic_later,

panic_param);

lockdep_info();

/*

* Need to run this when irqs are enabled, because it wants

* to self-test [hard/soft]-irqs on/off lock inversion bugs

* too:

*/

locking_selftest();

#ifdef CONFIG_BLK_DEV_INITRD

if (initrd_start && !initrd_below_start_ok &&

page_to_pfn(virt_to_page((void *)initrd_start)) < min_low_pfn) {

pr_crit("initrd overwritten (0x%08lx < 0x%08lx) - disabling it.\n",

page_to_pfn(virt_to_page((void *)initrd_start)),

min_low_pfn);

initrd_start = 0;

}

#endif

page_ext_init();

debug_objects_mem_init();

kmemleak_init();

setup_per_cpu_pageset();

numa_policy_init();

if (late_time_init)

late_time_init();

sched_clock_init();

calibrate_delay();

pidmap_init();

anon_vma_init();

acpi_early_init();

#ifdef CONFIG_X86

if (efi_enabled(EFI_RUNTIME_SERVICES))

efi_enter_virtual_mode();

#endif

#ifdef CONFIG_X86_ESPFIX64

/* Should be run before the first non-init thread is created */

init_espfix_bsp();

#endif

thread_info_cache_init();

cred_init();

fork_init();

proc_caches_init();

buffer_init();

key_init();

security_init();

dbg_late_init();

vfs_caches_init(totalram_pages);

signals_init();

/* rootfs populating might need page-writeback */

page_writeback_init();

proc_root_init();

nsfs_init();

cpuset_init();

cgroup_init();

taskstats_init_early();

delayacct_init();

check_bugs();

acpi_subsystem_init();

sfi_init_late();

if (efi_enabled(EFI_RUNTIME_SERVICES)) {

efi_late_init();

efi_free_boot_services();

}

ftrace_init();

/* Do the rest non-__init'ed, we're now alive */

rest_init();

}

2.3 初始化init进程

在start_kernel最后,调用了rest_init函数,rest_init函数也定义在文件 init/main.c中,这个不长:

static noinline void __init_refok rest_init(void)

{

int pid;

rcu_scheduler_starting();

smpboot_thread_init();

/*

* We need to spawn init first so that it obtains pid 1, however

* the init task will end up wanting to create kthreads, which, if

* we schedule it before we create kthreadd, will OOPS.

*/

kernel_thread(kernel_init, NULL, CLONE_FS);

numa_default_policy();

pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);

rcu_read_lock();

kthreadd_task = find_task_by_pid_ns(pid, &init_pid_ns);

rcu_read_unlock();

complete(&kthreadd_done);

/*

* The boot idle thread must execute schedule()

* at least once to get things moving:

*/

init_idle_bootup_task(current);

schedule_preempt_disabled();

/* Call into cpu_idle with preempt disabled */

cpu_startup_entry(CPUHP_ONLINE);

}

它的流程是:

- 调用 rcu_scheduler_starting,启动 RCU锁调度器

- 调用 kernel_thread 创建 kernel_init进程,将kernel由内核态转到用户态

- 调用 kernel_thread 创建 kthreadd内核进程,此内核进程的 PID为 2。 kthreadd进程负责所有内核进程的调度和管理。

- 最后调用 cpu_startup_entry 进入 idle进程,idle线程说白了,和待机很像,让cpu一直干愣着,有任务它就把cpu交给其它进程。

kernel_init函数用来管理init进程,代码如下:

static int __ref kernel_init(void *unused)

{

int ret;

kernel_init_freeable();

/* need to finish all async __init code before freeing the memory */

async_synchronize_full();

free_initmem();

mark_rodata_ro();

system_state = SYSTEM_RUNNING;

numa_default_policy();

flush_delayed_fput();

if (ramdisk_execute_command) {

ret = run_init_process(ramdisk_execute_command);

if (!ret)

return 0;

pr_err("Failed to execute %s (error %d)\n",

ramdisk_execute_command, ret);

}

/*

* We try each of these until one succeeds.

*

* The Bourne shell can be used instead of init if we are

* trying to recover a really broken machine.

*/

if (execute_command) {

ret = run_init_process(execute_command);

if (!ret)

return 0;

panic("Requested init %s failed (error %d).",

execute_command, ret);

}

if (!try_to_run_init_process("/sbin/init") ||

!try_to_run_init_process("/etc/init") ||

!try_to_run_init_process("/bin/init") ||

!try_to_run_init_process("/bin/sh"))

return 0;

panic("No working init found. Try passing init= option to kernel. "

"See Linux Documentation/init.txt for guidance.");

}

- 调用 kernel_init_freeable 函数用于完成 init进程的一些其他初始化工作,下面会再说;

- 调用 async_synchronize_full 函数,等待List async_running与async_pending都清空后返回,用来加速Linux启动;

- 调用 free_initmem 函数,释放Linux Kernel有关内存,用于满足多媒体、中断等高需求;

- 调用 mark_rodata_ro 函数,标记内核数据只读;

- 调用 numa_default_policy 函数,恢复当前进程的内存策略为默认状态;

- 如果ramdisk_execute_command、execute_command为真,调用 run_init_process 函数 初始化用户进程,否则通过其它尝试初始化用户层。

最后,看一下kernel_init_freeable()函数:

static noinline void __init kernel_init_freeable(void)

{

/*

* Wait until kthreadd is all set-up.

*/

wait_for_completion(&kthreadd_done);

/* Now the scheduler is fully set up and can do blocking allocations */

gfp_allowed_mask = __GFP_BITS_MASK;

/*

* init can allocate pages on any node

*/

set_mems_allowed(node_states[N_MEMORY]);

/*

* init can run on any cpu.

*/

set_cpus_allowed_ptr(current, cpu_all_mask);

cad_pid = task_pid(current);

smp_prepare_cpus(setup_max_cpus);

do_pre_smp_initcalls();

lockup_detector_init();

smp_init();

sched_init_smp();

do_basic_setup();

/* Open the /dev/console on the rootfs, this should never fail */

if (sys_open((const char __user *) "/dev/console", O_RDWR, 0) < 0)

pr_err("Warning: unable to open an initial console.\n");

(void) sys_dup(0);

(void) sys_dup(0);

/*

* check if there is an early userspace init. If yes, let it do all

* the work

*/

if (!ramdisk_execute_command)

ramdisk_execute_command = "/init";

if (sys_access((const char __user *) ramdisk_execute_command, 0) != 0) {

ramdisk_execute_command = NULL;

prepare_namespace();

}

/*

* Ok, we have completed the initial bootup, and

* we're essentially up and running. Get rid of the

* initmem segments and start the user-mode stuff..

*

* rootfs is available now, try loading the public keys

* and default modules

*/

integrity_load_keys();

load_default_modules();

}

- 调用 wait_for_completion 函数,等待 kthreadd_done 完成;

- 调用 set_mems_allowed 函数,初始化可在任何node分配到内存页;

- 调用 set_cpus_allowed_ptr 函数,设置cpu_bit_mask,限定task只在特定处理器上运行,使init进程能够在任意的cpu上运行;

- 调用 smp_prepare_cpus 函数,设定在编译核心时支援的最大CPU数量;

- 调用 do_pre_smp_initcalls 函数,遍历Symbol中部分函数,并调用do_one_initcall(fn)依次执行;

- 调用 do_basic_setup函数,完成 Linux下设备驱动初始化工作以及Linux下驱动模型子系统的初始化;

- 调用函数 prepare_namespace 函数,挂载根文件系统。

三、kernel移植

在没有大的修改下,kernel移植只需要移植defconfig配置文件以及设备树文件即可,它们分别在arch/arm/configs、arch/arm/boot/dts下。复制完后,再在arch/arm/boot/dts的Makefile中添加设备树,复制的哪个就添加到哪个下面就行。

移植脚本,注移植的NXP的imx6ullevk:

#!/bin/bash

board=Xport_Alientek

BOARD=XPORT_ALIENTEK

# Adding a compilation script

touch ${board}_building.sh

chmod 777 ${board}_building.sh

echo '#!/bin/bash' > ${board}_building.sh

echo '' >> ${board}_building.sh

echo "make ARCH=arm CROSS_COMPILE=arm-linux-gnueabihf- distclean" >> ${board}_building.sh

echo "make ARCH=arm CROSS_COMPILE=arm-linux-gnueabihf- imx_${board}_emmc_defconfig" >> ${board}_building.sh

echo "make ARCH=arm CROSS_COMPILE=arm-linux-gnueabihf- menuconfig" >> ${board}_building.sh

echo "make ARCH=arm CROSS_COMPILE=arm-linux-gnueabihf- all -j8" >> ${board}_building.sh

cd arch/arm/configs

cp imx_alientek_emmc_defconfig imx_${board}_emmc_defconfig

cd ../../../arch/arm/boot/dts

cp imx6ull-alientek-emmc.dts imx6ull-${board}-emmc.dts

getline()

{

cat -n Makefile|grep "imx6ull-${board}-emmc.dtb "|awk '{print $1}'

}

if [ `getline` > 0 ]

then

echo The development board : $board already exists

else

declare -i nline

getline()

{

cat -n Makefile|grep "CONFIG_SOC_IMX6ULL) += "|awk '{print $1}'

}

getlinenum()

{

awk "BEGIN{a=`getline`;b="1";c=(a+b);print c}";

}

nline=`getlinenum`-1

sed -i "${nline}a\ imx6ull-${board}-emmc.dtb "'\\' Makefile

echo The new linux-kernel : $board is added

fi