Python ProcessPoolExecutor in Practice

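ProcessPoolExecutor in Practice

Creating and shutting down a process pool

Prefer the with statement: when the block exits, shutdown() is called automatically and the pool's resources are released.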

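from concurrent.futures import ProcessPoolExecutor

def func_1():
    # Manual lifecycle: shutdown() has to be called explicitly
    executor = ProcessPoolExecutor(5)
    # do something
    executor.shutdown()

def func_2():
    # Context manager: shutdown() is called automatically on exit
    with ProcessPoolExecutor(5) as executor:
        # do something
        pass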
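The effect of the automatic shutdown() can be checked directly. The following is a minimal sketch, not part of the original post: the helper name submit_after_with is hypothetical, and the builtin pow is used as the task only because builtins can always be pickled and sent to a worker process.

```python
from concurrent.futures import ProcessPoolExecutor

def submit_after_with():
    """Use a pool inside a with-block, then try to reuse it afterwards."""
    with ProcessPoolExecutor(2) as executor:
        # pow is a builtin, so it pickles cleanly for the worker process
        inside = executor.submit(pow, 2, 10).result()
    try:
        # The with-block has already called executor.shutdown()
        executor.submit(pow, 2, 10)
        rejected = False
    except RuntimeError:
        # A shut-down pool refuses to schedule new futures
        rejected = True
    return inside, rejected

if __name__ == "__main__":
    print(submit_after_with())  # (1024, True)
```

The same RuntimeError appears after a manual executor.shutdown(), so a pool object should be treated as single-use in both styles.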

Waiting for tasks to finish and collecting their results

Method 1:

import os
import time
from datetime import datetime
from concurrent.futures import ProcessPoolExecutor, wait, as_completed

def sleep(t):
    # Runs in a worker process: report its PID, sleep t seconds, return t
    print(f'[{os.getpid()}] sleeping')
    time.sleep(t)
    return t

def test1():
    print(f'[{os.getpid()}] main proc')
    with ProcessPoolExecutor(5) as executor:
        jobs = []
        time_list = [3, 2, 4, 1]
        for i in time_list:
            jobs.append(executor.submit(sleep, i))
        print(datetime.now())
        # result() blocks until that job is done, so output follows submission order
        for job in jobs:
            print(f'[{os.getpid()}] {datetime.now()} {job.result()}')

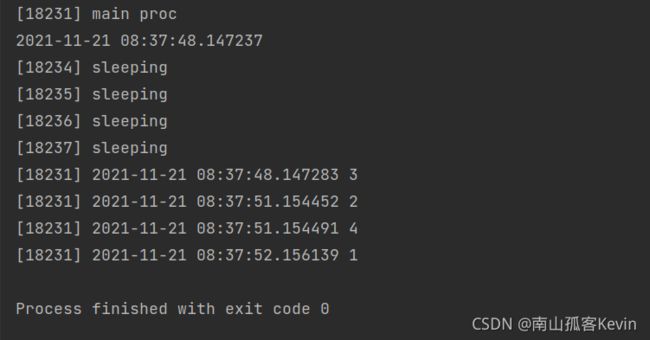

输出:

结论:

不建议使用这种方法。输出是按照任务列表顺序,但是打印的时间很令人迷惑,实际上主进程与3进程打印是有明显时间差的但是打印时间却没有展现出来。
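A likely cause of the confusing timestamps is evaluation order: the expressions inside an f-string are evaluated left to right, so datetime.now() runs before job.result() starts blocking, and the timestamp records when the wait began rather than when the result arrived. The sketch below reproduces this without a pool. The names slow_result, stamp_then_result and result_then_stamp are hypothetical, slow_result stands in for job.result(), and elapsed time is measured with time.perf_counter() instead of datetime.now() to keep the output short.

```python
import time

def slow_result():
    """Stand-in for job.result(): blocks briefly, then returns a value."""
    time.sleep(0.2)
    return 3

def stamp_then_result():
    start = time.perf_counter()
    # Same shape as f'{datetime.now()} {job.result()}':
    # the elapsed time is computed BEFORE slow_result() blocks
    return f"{time.perf_counter() - start:.1f}s {slow_result()}"

def result_then_stamp():
    start = time.perf_counter()
    value = slow_result()  # block first
    # The elapsed time is computed AFTER the result is available
    return f"{time.perf_counter() - start:.1f}s {value}"

if __name__ == "__main__":
    print(stamp_then_result())  # 0.0s 3
    print(result_then_stamp())  # roughly 0.2s, then 3
```

Applied to test1, this suggests assigning job.result() to a variable first and formatting the timestamp afterwards.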

Method 2:

def test2():
    print(f'[{os.getpid()}] main proc')
    with ProcessPoolExecutor(5) as executor:
        jobs = []
        time_list = [3, 2, 4, 1]
        for i in time_list:
            jobs.append(executor.submit(sleep, i))
        print(datetime.now())
        # as_completed() yields each job as soon as it finishes
        for job in as_completed(jobs):
            print(f'[{os.getpid()}] {datetime.now()} {job.result()}')

Output:

Conclusion:

as_completed(jobs) yields the jobs in the order in which they complete, not the order in which they were submitted.
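When the task's return value does not identify its input, a common idiom is to keep a dict that maps each future back to the argument it was submitted with. The sketch below is an illustration under assumptions rather than the author's code: completion_order and future_to_duration are hypothetical names, and time.sleep is submitted directly, so every result is None.

```python
import time
from concurrent.futures import ProcessPoolExecutor, as_completed

def completion_order(durations):
    """Run time.sleep(d) for each duration; return durations in finishing order."""
    with ProcessPoolExecutor(len(durations)) as executor:
        # time.sleep returns None, so remember which input each future belongs to
        future_to_duration = {executor.submit(time.sleep, d): d for d in durations}
        finished = []
        # Iterating the dict passes its keys (the futures) to as_completed()
        for future in as_completed(future_to_duration):
            future.result()  # re-raises here if the task failed
            finished.append(future_to_duration[future])
    return finished

if __name__ == "__main__":
    # With one worker per task, the shortest sleep normally finishes first
    print(completion_order([0.6, 0.2, 0.4]))
```

Because the futures come back only after they are done, a timestamp taken inside this loop reflects the real completion time.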

Method 3:

def test3():
    print(f'[{os.getpid()}] main proc')
    with ProcessPoolExecutor(5) as executor:
        jobs = []
        time_list = [3, 2, 4, 1]
        for i in time_list:
            jobs.append(executor.submit(sleep, i))
        print(datetime.now())
        # Block until every job is done (return_when defaults to ALL_COMPLETED)
        wait(jobs)
        # All results are ready, so result() returns immediately
        for job in jobs:
            print(f'[{os.getpid()}] {datetime.now()} {job.result()}')

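Output:

Conclusion:

wait(fs, timeout, return_when) blocks until the tasks finish and returns two sets of futures: those that are done and those that are not.

The return_when parameter accepts ALL_COMPLETED (the default), FIRST_COMPLETED, or FIRST_EXCEPTION.

P.S. job.done() can be used to check whether a task has already finished.

I will keep adding to this post as further issues come up.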
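To make return_when and done() concrete, here is a minimal sketch using FIRST_COMPLETED. The helper wait_for_first and the mapping future_to_duration are hypothetical names, and time.sleep again stands in for real work.

```python
import time
from concurrent.futures import ProcessPoolExecutor, wait, FIRST_COMPLETED

def wait_for_first(durations):
    """Submit time.sleep(d) per duration and stop waiting once any task is done."""
    with ProcessPoolExecutor(len(durations)) as executor:
        future_to_duration = {executor.submit(time.sleep, d): d for d in durations}
        # wait() returns a named tuple of two sets: (done, not_done)
        done, not_done = wait(list(future_to_duration), return_when=FIRST_COMPLETED)
        finished = sorted(future_to_duration[f] for f in done)
        still_running = sorted(future_to_duration[f] for f in not_done)
        # done() is a non-blocking check on a single future
        flags = [f.done() for f in done]
    # Leaving the with-block still waits for the remaining tasks
    return finished, still_running, flags

if __name__ == "__main__":
    # Normally the 0.2 s task is reported as done while the 0.8 s task is pending
    print(wait_for_first([0.2, 0.8]))
```

FIRST_EXCEPTION behaves like ALL_COMPLETED unless some task raises, in which case wait() returns as soon as that task fails.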