ES Basics: Installing an ES Cluster with Docker

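Contents

- Preface
- Deployment Environment
  - Hardware Environment
  - Software Environment
- Deployment Steps
  - Details
  - Starting the Deployment
  - Best Practices
- Deployment Errors
  - Permission Problem
  - max_map_count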

Preface

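I have previously written two ES installation tutorials, "Installing Single-Node ES with Docker" and "Installing Single-Node ES on Linux". Both cover a single node only. From a pure project-usage standpoint, and especially for people who just use ES, a single node is enough. The Docker install in particular is quick and easy, needs no extra learning, and you simply follow the steps. But if you want to study ES in depth and understand how it works underneath, a single node clearly falls short. So this time I am writing up how to install an ES cluster with Docker for reference. Every step has been tested, so feel free to copy and paste (Ctrl+C, Ctrl+V).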

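Deployment Environment

Hardware Environment

Alibaba Cloud's bare-bones tier: a single core and 2 GB of RAM!!!

Fellow programmers will get it! Swap is my lifeline, trading time for space. 2 GB of memory is not enough, so the only option is to accept lower performance and lean on virtual memory. Such is a programmer's lot.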

```shell
[root@Genterator ~]# cat /proc/cpuinfo | grep name | cut -f2 -d: | uniq -c
      1  Intel(R) Xeon(R) Platinum 8269CY CPU @ 2.50GHz
[root@Genterator ~]# cat /proc/meminfo | grep MemTotal
MemTotal:        1790344 kB
[root@Genterator ~]# free -h
              total        used        free      shared  buff/cache   available
Mem:          1.7Gi       1.4Gi       134Mi       0.0Ki       212Mi       204Mi
Swap:         2.5Gi       114Mi       2.4Gi
```

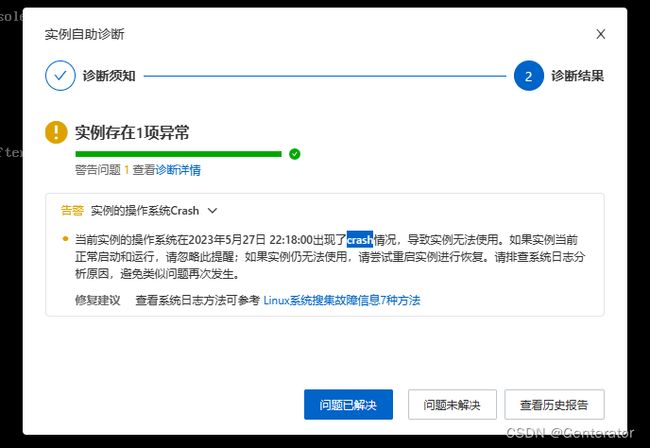

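A small anecdote: when I first deployed, I gave each service 512 MB and also enabled memory locking so that ES memory could not be swapped out to disk. It ended badly. The bargain instance simply gave up: CPU and memory were maxed out, neither SSH nor VNC could connect, and with the machine effectively crashed, the only option was a reboot (screenshot attached).

If you have the budget, or your time is valuable, go for more cores and more memory! If you are a beginner or a fellow programmer, do what I did and use swap!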
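To put numbers on that crash, the sketch below compares the combined JVM heap of all nodes with half of physical RAM. Elastic's heap-sizing guidance is to keep `-Xms`/`-Xmx` at no more than 50% of total memory, because ES also needs memory outside the heap; applying that budget to the sum of all nodes sharing one host is my own assumption, and `heap_vs_ram` is a hypothetical helper, not part of any tool.

```shell
# Compare the combined JVM heap of all nodes with half of physical RAM.
# Assumption: the 50% budget applies to the sum of all nodes on this one host.
# $1 = node count, $2 = heap per node in MB, $3 = meminfo file (default /proc/meminfo)
heap_vs_ram() {
  local nodes="$1" heap_mb="$2" meminfo="${3:-/proc/meminfo}"
  local total_kb budget_mb want_mb
  total_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")   # MemTotal is reported in kB
  budget_mb=$((total_kb / 1024 / 2))                     # 50% of RAM, in MB
  want_mb=$((nodes * heap_mb))                           # heap requested by all nodes
  echo "total heap ${want_mb}MB, 50% of RAM ${budget_mb}MB"
  [ "$want_mb" -le "$budget_mb" ]                        # non-zero exit if over budget
}
```

With the `MemTotal: 1790344 kB` shown above, `heap_vs_ram 3 512` prints `total heap 1536MB, 50% of RAM 874MB` and returns non-zero, which fits with the machine falling over once memory locking kept that heap out of swap.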

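Software Environment

- Elasticsearch

Choose the ES version according to your own needs. I chose 7.17.1. My advice is to match the version your company uses, or go a few versions above it, because every release brings changes. For example, my previous company used version 6, which still had mapping types (the "doc" type in index/type/id paths), and version 7 removed them. In the end it depends on what you need; if you like trying new things, use the latest release.

- Kibana

In "Installing Single-Node ES with Docker" I used Elasticsearch-head, which beginners can try out to get familiar with ES. This time I use Kibana, one of the three pieces of the ELK stack and a popular choice that I strongly recommend. Its version just needs to match ES.

- Docker-compose

I also used this for the single-node Docker deployment. It is very convenient: one file does everything, the configuration is easy to read, and anyone can see at a glance what has been deployed. For a cluster I especially recommend docker-compose, since it deploys everything with a single command.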

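Deployment Steps

Details

This deployment involves a few more files than before, so here is a short overview to make things clear at a glance and save you time on the setup.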

- Location of the deployment source files: `/opt/shell/es/cluster`. I keep every file the deployment needs in this folder (more on its contents later):

```shell
[root@Genterator cluster]# pwd
/opt/shell/es/cluster
[root@Genterator cluster]# ll
total 16
-rw-r--r-- 1 root root 2207 May 27 22:18 docker-compose_es-cluster.yml
```

- Location of the deployed ES files: `/opt/volume/es/cluster/`, which mainly holds the ES configuration, data and logs, as follows:

```shell
[root@Genterator opt]# tree /opt/volume/es/cluster/
/opt/volume/es/cluster/
├── data
│   ├── 01
│   │   └── nodes
│   │       └── 0
│   │           ├── node.lock
│   │           ├── snapshot_cache
│   │           │   ├── segments_1
│   │           │   └── write.lock
│   │           └── _state
│   │               ├── _0.cfe
│   │               ├── _0.cfs
│   │               ├── _0.si
│   │               ├── manifest-0.st
│   │               ├── node-0.st
│   │               ├── segments_1
│   │               └── write.lock
│   ├── 02
│   │   └── nodes
│   │       └── 0
│   │           ├── node.lock
│   │           ├── snapshot_cache
│   │           │   ├── segments_3
│   │           │   └── write.lock
│   │           └── _state
│   │               ├── _16.cfe
│   │               ├── _16.cfs
│   │               ├── _16.si
│   │               ├── manifest-2.st
│   │               ├── node-2.st
│   │               ├── segments_1c
│   │               └── write.lock
│   └── 03
│       └── nodes
│           └── 0
│               ├── node.lock
│               ├── snapshot_cache
│               │   ├── segments_2
│               │   └── write.lock
│               └── _state
│                   ├── _15.cfe
│                   ├── _15.cfs
│                   ├── _15.si
│                   ├── manifest-1.st
│                   ├── node-1.st
│                   ├── segments_1b
│                   └── write.lock
└── logs
    ├── 01
    │   ├── gc.log
    │   ├── gc.log.00
    │   └── gc.log.01
    ├── 02
    │   ├── gc.log
    │   ├── gc.log.00
    │   └── gc.log.01
    └── 03
        ├── gc.log
        ├── gc.log.00
        ├── gc.log.01
        └── gc.log.02

20 directories, 58 files
```

Starting the Deployment

- Create the working directories and set permissions

```shell
# Create the working directories
mkdir -p /opt/volume/es/cluster/data/01 /opt/volume/es/cluster/logs/01
mkdir -p /opt/volume/es/cluster/data/02 /opt/volume/es/cluster/logs/02
mkdir -p /opt/volume/es/cluster/data/03 /opt/volume/es/cluster/logs/03
# Grant permissions
chmod -R 777 /opt/volume/es
```
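If you later change the number of nodes, the three `mkdir -p` lines can be generated in a loop instead. A minimal POSIX shell sketch; the function name `create_es_dirs` and its node-count argument are hypothetical, while the default path and the zero-padded `01`/`02`/`03` suffixes follow this article's layout.

```shell
# Create data/ and logs/ directories for each ES node in one loop.
# $1 = base directory (default: this article's /opt/volume/es/cluster)
# $2 = number of nodes (default 3, giving suffixes 01, 02, 03)
create_es_dirs() {
  local base="${1:-/opt/volume/es/cluster}" count="${2:-3}" i=1 n
  while [ "$i" -le "$count" ]; do
    n=$(printf '%02d' "$i")          # zero-padded suffix to match the compose volumes
    mkdir -p "$base/data/$n" "$base/logs/$n"
    i=$((i + 1))
  done
}
```

`create_es_dirs /opt/volume/es/cluster 3` creates the same six directories as the commands above; the permission step still has to be run afterwards.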

- Write docker-compose_es-cluster.yml

```yaml
version: "3.8"
services:
  es_01:
    image: elasticsearch:7.17.1
    container_name: es_01
    # Enable once testing is done
    # restart: always
    environment:
      - TZ=Asia/Shanghai
      - node.name=es_01
      - cluster.name=es-cluster
      - discovery.seed_hosts=es_02,es_03
      - cluster.initial_master_nodes=es_01,es_02,es_03
      # Setting this to true makes Elasticsearch lock its memory in RAM so it never uses swap (use with care on low-memory machines)
      # - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /opt/volume/es/cluster/data/01:/usr/share/elasticsearch/data
      - /opt/volume/es/cluster/logs/01:/usr/share/elasticsearch/logs
    ports:
      - 9211:9200
      - 9311:9300
    networks:
      es_net:
        ipv4_address: 172.30.10.11
  es_02:
    image: elasticsearch:7.17.1
    container_name: es_02
    # restart: always
    environment:
      - TZ=Asia/Shanghai
      - node.name=es_02
      - cluster.name=es-cluster
      - discovery.seed_hosts=es_01,es_03
      - cluster.initial_master_nodes=es_01,es_02,es_03
      # - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /opt/volume/es/cluster/data/02:/usr/share/elasticsearch/data
      - /opt/volume/es/cluster/logs/02:/usr/share/elasticsearch/logs
    networks:
      es_net:
        ipv4_address: 172.30.10.12
  es_03:
    image: elasticsearch:7.17.1
    container_name: es_03
    # restart: always
    environment:
      - TZ=Asia/Shanghai
      - node.name=es_03
      - cluster.name=es-cluster
      - discovery.seed_hosts=es_01,es_02
      - cluster.initial_master_nodes=es_01,es_02,es_03
      # - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /opt/volume/es/cluster/data/03:/usr/share/elasticsearch/data
      - /opt/volume/es/cluster/logs/03:/usr/share/elasticsearch/logs
    networks:
      es_net:
        ipv4_address: 172.30.10.13
networks:
  es_net:
    driver: bridge
    ipam:
      config:
        - subnet: 172.30.10.0/25
          gateway: 172.30.10.1
```

- Run the docker-compose command (from `/opt/shell/es/cluster`, where the compose file lives)

```shell
docker-compose -f docker-compose_es-cluster.yml up -d
```
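Once `up -d` returns, the containers may still be starting and electing a master. Only es_01 publishes its HTTP port (9211 → 9200), so the cluster health API `GET _cluster/health` can be queried through it; its JSON response includes `status` and `number_of_nodes`. A minimal check, assuming a fully formed cluster should report `green` with 3 nodes; the helper name `es_health_ok` is hypothetical.

```shell
# Return 0 if a _cluster/health response reports status "green" and at least
# the expected number of nodes. Handles compact or ?pretty output; this is a
# deliberately small sed-based extractor for this one response, not a JSON parser.
# $1 = response body, $2 = expected node count (default 3, as in this article)
es_health_ok() {
  local json="$1" expected="${2:-3}" status nodes
  status=$(printf '%s\n' "$json" \
    | sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1)
  nodes=$(printf '%s\n' "$json" \
    | sed -n 's/.*"number_of_nodes"[[:space:]]*:[[:space:]]*\([0-9]*\).*/\1/p' | head -n 1)
  [ "$status" = "green" ] && [ "${nodes:-0}" -ge "$expected" ]
}
```

Usage: `es_health_ok "$(curl -s http://localhost:9211/_cluster/health)" 3 && echo "cluster is ready"`. If it fails right after startup, wait a few seconds and retry, since the nodes need some time to discover each other. Parsing with `sed` avoids a dependency on `jq`, at the cost of only understanding this flat response.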

Best Practices

Here are a few Docker commands for quickly starting and stopping all ES nodes at once:

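Start all ES nodes:

```shell
docker start $(docker ps -a | grep "es_" | awk '{print $1}')
```

Stop all ES nodes:

```shell
docker stop $(docker ps -a | grep "es_" | awk '{print $1}')
```

Remove all ES nodes (stop them first, since `docker rm` refuses to remove running containers):

```shell
docker rm $(docker ps -a | grep "es_" | awk '{print $1}')
```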
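Because the whole cluster is defined in one compose file, docker-compose can also start, stop and remove exactly these containers without grepping `docker ps` output. A small wrapper sketch; `es_cluster`, `COMPOSE_FILE_PATH` and `DOCKER_COMPOSE` are hypothetical names, and the default file path is the one used in this article.

```shell
# Path of the compose file used in this article (override if yours differs).
COMPOSE_FILE_PATH="${COMPOSE_FILE_PATH:-/opt/shell/es/cluster/docker-compose_es-cluster.yml}"
# Compose command; set DOCKER_COMPOSE="docker compose" for the Compose v2 plugin.
DOCKER_COMPOSE="${DOCKER_COMPOSE:-docker-compose}"

# Start, stop, restart, list or remove the ES cluster containers via compose.
es_cluster() {
  case "$1" in
    start|stop|restart|ps)
      # Unquoted on purpose so "docker compose" splits into two words
      $DOCKER_COMPOSE -f "$COMPOSE_FILE_PATH" "$1"
      ;;
    down)
      # Removes the containers and the compose network; bind-mounted data/logs stay
      $DOCKER_COMPOSE -f "$COMPOSE_FILE_PATH" down
      ;;
    *)
      echo "usage: es_cluster {start|stop|restart|ps|down}" >&2
      return 2
      ;;
  esac
}
```

`es_cluster stop` followed by `es_cluster start` replaces the grep/awk pipelines, and `es_cluster down` leaves the data and logs under `/opt/volume/es/cluster` untouched. If you prefer plain `docker`, `docker ps -aq --filter "name=es_"` lists the same container IDs without `grep`/`awk`.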

Deployment Errors

You may still run into two problems during deployment.

Permission Problem

```
[root@Genterator single]# docker-compose up
WARNING: Some networks were defined but are not used by any service: name
Pulling elasticsearch (docker.elastic.co/elasticsearch/elasticsearch:7.17.1)...
7.17.1: Pulling from elasticsearch/elasticsearch
……
elasticsearch | Exception in thread "main" java.lang.RuntimeException: starting java failed with [1]
elasticsearch | output:
elasticsearch | [0.000s][error][logging] Error opening log file 'logs/gc.log': Permission denied
elasticsearch | [0.000s][error][logging] Initialization of output 'file=logs/gc.log' using options 'filecount=32,filesize=64m' failed.
elasticsearch | error:
elasticsearch | Invalid -Xlog option '-Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m', see error log for details.
elasticsearch | Error: Could not create the Java Virtual Machine.
……
```

Fix:

Grant permissions on your ES deployment folder:

```shell
# Give the folders that ES writes to the required permissions
[root@Genterator single]# chmod 777 -R /opt/volume/es
```
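`777` works, but it makes the directories writable by every user on the host. Elastic's Docker documentation states that the container runs Elasticsearch as uid:gid 1000:0 and suggests giving group 0 read/write access to bind-mounted directories instead. A sketch of that approach; `es_fix_perms` is a hypothetical helper, and the group argument exists only so the function can be tried on a scratch directory without root.

```shell
# Give the container's group (gid 0 in the official image, per Elastic's Docker docs)
# read/write access to the bind-mounted tree instead of opening it to everyone with 777.
# $1 = directory (default: this article's volume root), $2 = group id (default 0)
es_fix_perms() {
  local dir="${1:-/opt/volume/es}" gid="${2:-0}"
  chgrp -R "$gid" "$dir"
  # X adds execute (traverse) permission only to directories and already-executable files
  chmod -R g+rwX "$dir"
}
```

Run it as root, since changing the group to 0 normally requires root: `es_fix_perms /opt/volume/es`.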

max_map_count

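The max_map_count file holds the limit on how many VMAs (virtual memory areas) a single process may have. The kernel default is 65530, well below the 262144 that the Elasticsearch bootstrap check requires: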

```
elasticsearch | ERROR: [1] bootstrap checks failed. You must address the points described in the following [1] lines before starting Elasticsearch.
elasticsearch | bootstrap check failure [1] of [1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
elasticsearch | ERROR: Elasticsearch did not exit normally - check the logs at /usr/share/elasticsearch/logs/es-cluster.log
```

Fix:

```shell
[root@Genterator single]# cat /proc/sys/vm/max_map_count
65530
[root@Genterator single]# sysctl -w vm.max_map_count=262144
vm.max_map_count = 262144
[root@Genterator single]# cat /proc/sys/vm/max_map_count
262144
```
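`sysctl -w` only changes the running kernel, so after a reboot the value falls back to 65530 and ES fails the same bootstrap check again. A sketch that checks the current value and writes a persistent sysctl drop-in; the helper names and the file name `99-elasticsearch.conf` are assumptions, while `vm.max_map_count` and the 262144 minimum come from the error above.

```shell
# Minimum required by the Elasticsearch bootstrap check (from the error above).
MIN_MAP_COUNT=262144

# Print the current value and return non-zero if it is below the minimum.
# $1 = file to read (default: the live kernel setting)
check_max_map_count() {
  local file="${1:-/proc/sys/vm/max_map_count}" current
  current=$(cat "$file")
  if [ "$current" -ge "$MIN_MAP_COUNT" ]; then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current (need >= $MIN_MAP_COUNT)"
    return 1
  fi
}

# Write a sysctl drop-in so the setting survives reboots (needs root for /etc).
# $1 = target file (hypothetical default name)
persist_max_map_count() {
  local conf="${1:-/etc/sysctl.d/99-elasticsearch.conf}"
  echo "vm.max_map_count=$MIN_MAP_COUNT" > "$conf"
}
```

As root, `persist_max_map_count && sysctl -p /etc/sysctl.d/99-elasticsearch.conf` applies the setting now and on every boot; afterwards `check_max_map_count` should print `ok: vm.max_map_count=262144`.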