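Importing and Exporting Data with DataX

- 1. Installing DataX
- 2. Exporting data from MySQL
  - 2.1. Importing the student table of the MySQL student database into Hive
    - 2.1.1. Prerequisites
    - 2.1.2. Writing the script
    - 2.1.3. Running the script
  - 2.2. Importing MySQL data into HBase
    - 2.2.1. Prerequisites
    - 2.2.2. Writing the script
    - 2.2.3. Running the script
- 3. Exporting data from Hive
  - 3.1. Importing Hive data into MySQL
    - 3.1.1. Prerequisites
    - 3.1.2. Writing the script
    - 3.1.3. Running the script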
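1. Installing DataX

DataX does not depend on any other service. Upload the DataX package to the machine, extract it, and configure the environment variables.

Official installation package download link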
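As a quick sanity check after extracting the package, the sketch below looks for the launcher script under the installation directory. It assumes the usual layout in which `datax.py` sits in the `bin` directory; the `DATAX_HOME` environment variable name and the function name are illustrative choices for this sketch, not something DataX itself requires.

```python
import os
from pathlib import Path
from typing import Optional


def find_datax_launcher(datax_home: Optional[str] = None) -> Path:
    """Return the path to bin/datax.py under the DataX install directory.

    DATAX_HOME is a hypothetical variable name used only in this sketch.
    """
    home = datax_home or os.environ.get("DATAX_HOME")
    if not home:
        raise RuntimeError("DataX home not given and DATAX_HOME is not set")
    launcher = Path(home) / "bin" / "datax.py"
    if not launcher.is_file():
        raise FileNotFoundError(f"launcher not found: {launcher}")
    return launcher
```

Calling `find_datax_launcher("/path/to/datax")` either returns the launcher path or raises, which makes a broken extraction visible before any job is run.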

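2. Exporting data from MySQL

2.1. Importing the student table of the MySQL student database into Hive

2.1.1. Prerequisites

- MySQL must contain the student database and the student table, and the table must contain data.

- Hive must contain the student database and the student table. The Hive table only needs to exist; it does not need any data.

2.1.2. Writing the script

Write the script and save it as MySQLToHive.json.

Note: the field delimiter of the Hive table must match the "fieldDelimiter": "," setting in the script.

The script is as follows: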

    {
      "job": {
        "setting": {
          "speed": {
            "channel": 3
          }
        },
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "username": "root",
                "password": "123456",
                "column": ["id", "name", "age", "gender", "clazz", "last_mod"],
                "splitPk": "id",
                "connection": [
                  {
                    "table": ["student"],
                    "jdbcUrl": [
                      "jdbc:mysql://master:3306/student?useUnicode=true&characterEncoding=utf8"
                    ]
                  }
                ]
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "defaultFS": "hdfs://master:9000",
                "fileType": "text",
                "path": "/user/hive/warehouse/student.db/student",
                "fileName": "test",
                "column": [
                  {"name": "id", "type": "int"},
                  {"name": "name", "type": "string"},
                  {"name": "age", "type": "int"},
                  {"name": "gender", "type": "string"},
                  {"name": "clazz", "type": "string"},
                  {"name": "last_mod", "type": "TIMESTAMP"}
                ],
                "writeMode": "append",
                "fieldDelimiter": ","
              }
            }
          }
        ]
      }
    }
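The delimiter rule noted above can be checked mechanically before the job is run. The following is a minimal sketch: it reads `fieldDelimiter` from the writer parameters and compares it with the delimiter declared in the Hive table's DDL. The DDL string and the helper names are assumptions made for illustration; the original does not show how the Hive table was created.

```python
import json
import re


def writer_delimiter(job: dict) -> str:
    """Return fieldDelimiter from the writer of the first content entry."""
    return job["job"]["content"][0]["writer"]["parameter"]["fieldDelimiter"]


def ddl_delimiter(ddl: str) -> str:
    """Extract the delimiter from a FIELDS TERMINATED BY '<x>' clause."""
    match = re.search(r"FIELDS\s+TERMINATED\s+BY\s+'([^']*)'", ddl, re.IGNORECASE)
    if match is None:
        raise ValueError("DDL has no FIELDS TERMINATED BY clause")
    return match.group(1)


# Hypothetical DDL for the Hive student table (an assumption of this sketch).
HIVE_DDL = """
CREATE TABLE student.student (
  id INT, name STRING, age INT, gender STRING, clazz STRING, last_mod TIMESTAMP
)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS TEXTFILE
"""

# Only the part of MySQLToHive.json that this check needs.
job = json.loads(
    '{"job": {"content": [{"writer": {"parameter": {"fieldDelimiter": ","}}}]}}'
)

if writer_delimiter(job) != ddl_delimiter(HIVE_DDL):
    raise SystemExit("fieldDelimiter does not match the Hive table delimiter")
print("delimiters match")
```

This sketch compares literal characters only. A delimiter written as an escape sequence in the DDL (for example a tab) would need to be unescaped before the comparison.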

2.1.3. Running the script

datax.py MySQLToHive.json
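When the job is launched from another program rather than typed by hand, the same command can be built and run with `subprocess`. This is a sketch only: it assumes `datax.py` is reachable on `PATH` (as the environment-variable setup in section 1 implies), and `build_datax_command` and `run_job` are names introduced here for illustration.

```python
import subprocess
from typing import List


def build_datax_command(job_file: str, launcher: str = "datax.py") -> List[str]:
    """Build the argument list equivalent to `datax.py <job_file>`."""
    return [launcher, job_file]


def run_job(job_file: str, launcher: str = "datax.py") -> int:
    """Run the job and return the launcher's exit code (0 is taken as success)."""
    completed = subprocess.run(build_datax_command(job_file, launcher))
    return completed.returncode


# Example call (commented out so the sketch has no side effects):
# exit_code = run_job("MySQLToHive.json")
```

Passing the arguments as a list avoids shell quoting problems if the job file path contains spaces.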

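After the job finishes, query the student table in Hive to check the imported rows.

2.2. Importing MySQL data into HBase

2.2.1. Prerequisites

- MySQL must contain the student database and the student table, and the table must contain data.

- HBase must contain the corresponding student table with the corresponding column family.

2.2.2. Writing the script

The hbaseConfig parameter specifies the ZooKeeper address:

    "hbaseConfig": {
      "hbase.zookeeper.quorum": "master:2181,node1:2181,node2:2181"
    }

The complete script, saved as MySQLToHbase.json: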

    {
      "job": {
        "setting": {
          "speed": {
            "channel": 3
          }
        },
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "username": "root",
                "password": "123456",
                "column": ["id", "name", "age", "gender", "clazz", "last_mod"],
                "splitPk": "id",
                "connection": [
                  {
                    "table": ["student"],
                    "jdbcUrl": [
                      "jdbc:mysql://master:3306/student?useUnicode=true&characterEncoding=utf8"
                    ]
                  }
                ]
              }
            },
            "writer": {
              "name": "hbase11xwriter",
              "parameter": {
                "hbaseConfig": {
                  "hbase.zookeeper.quorum": "master:2181,node1:2181,node2:2181"
                },
                "table": "student",
                "mode": "normal",
                "rowkeyColumn": [
                  {"index": 0, "type": "string"}
                ],
                "column": [
                  {"index": 1, "name": "cf1:name", "type": "string"},
                  {"index": 2, "name": "cf1:age", "type": "int"},
                  {"index": 3, "name": "cf1:gender", "type": "string"},
                  {"index": 4, "name": "cf1:clazz", "type": "string"},
                  {"index": 5, "name": "cf1:last_mod", "type": "string"}
                ],
                "encoding": "utf-8"
              }
            }
          }
        ]
      }
    }

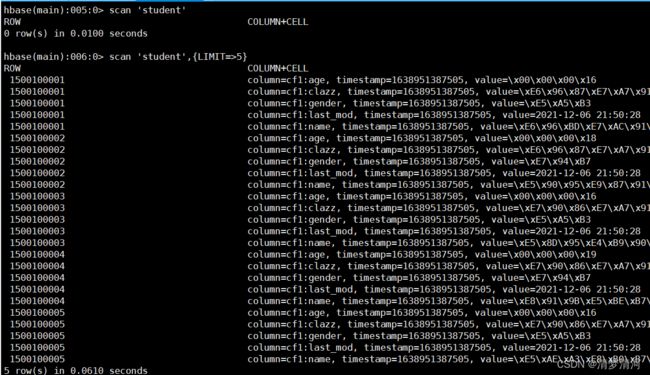
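The prerequisite that HBase already has the matching column family can be read off the job file itself. A minimal sketch, assuming the writer columns use the `family:qualifier` naming shown in the script; the function name is hypothetical.

```python
import json
from typing import Set


def required_column_families(job: dict) -> Set[str]:
    """Collect the column families named in the HBase writer's column list."""
    columns = job["job"]["content"][0]["writer"]["parameter"]["column"]
    return {col["name"].split(":", 1)[0] for col in columns}


# Trimmed-down copy of the writer section of MySQLToHbase.json.
job = json.loads("""
{"job": {"content": [{"writer": {"name": "hbase11xwriter", "parameter": {
  "table": "student",
  "column": [
    {"index": 1, "name": "cf1:name", "type": "string"},
    {"index": 2, "name": "cf1:age", "type": "int"}
  ]}}}]}}
""")

print(sorted(required_column_families(job)))  # ['cf1']
```

Every family in the returned set needs to exist on the student table in HBase before the job is started.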

2.2.3. Running the script

datax.py MySQLToHbase.json

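3. Exporting data from Hive

3.1. Importing Hive data into MySQL

3.1.1. Prerequisites

- The student table in Hive must contain data.

- MySQL must contain the corresponding database and table.

3.1.2. Writing the script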

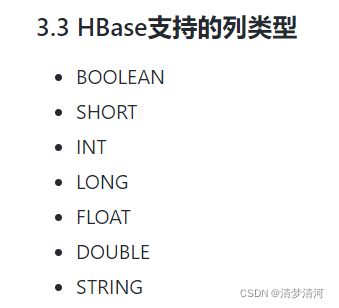

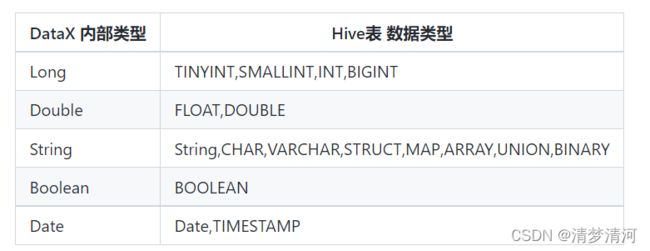

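Pay attention to the data types shown above.

For example, a column whose type in Hive is timestamp must be declared with the date type in the script; otherwise its records are reported as dirty data.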

    {
      "job": {
        "setting": {
          "speed": {
            "channel": 3
          }
        },
        "content": [
          {
            "reader": {
              "name": "hdfsreader",
              "parameter": {
                "path": "/user/hive/warehouse/student.db/student/*",
                "defaultFS": "hdfs://master:9000",
                "column": [
                  {"index": 0, "type": "long"},
                  {"index": 1, "type": "string"},
                  {"index": 2, "type": "long"},
                  {"index": 3, "type": "string"},
                  {"index": 4, "type": "string"},
                  {"index": 5, "type": "date"}
                ],
                "fileType": "text",
                "encoding": "UTF-8",
                "fieldDelimiter": ","
              }
            },
            "writer": {
              "name": "mysqlwriter",
              "parameter": {
                "writeMode": "insert",
                "username": "root",
                "password": "123456",
                "column": ["id", "name", "age", "gender", "clazz", "last_mod"],
                "connection": [
                  {
                    "jdbcUrl": "jdbc:mysql://master:3306/student?useUnicode=true&characterEncoding=utf8",
                    "table": ["student"]
                  }
                ]
              }
            }
          }
        ]
      }
    }
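The type note above generalizes: each Hive column has to be declared as one of the reader's own types (long, double, string, boolean, date). The sketch below generates the reader's `column` list from the Hive column types. The mapping covers only common Hive types and follows the hdfsreader type table as understood here, so it should be verified against the DataX version in use; the function and variable names are illustrative.

```python
from typing import Dict, List

# Hive type -> hdfsreader type. Only a subset of Hive types is covered.
HIVE_TO_READER_TYPE: Dict[str, str] = {
    "tinyint": "long", "smallint": "long", "int": "long", "bigint": "long",
    "float": "double", "double": "double",
    "string": "string", "varchar": "string", "char": "string",
    "boolean": "boolean",
    "date": "date", "timestamp": "date",
}


def reader_columns(hive_types: List[str]) -> List[dict]:
    """Build the hdfsreader column list, one entry per Hive column in order."""
    columns = []
    for index, hive_type in enumerate(hive_types):
        key = hive_type.strip().lower()
        if key not in HIVE_TO_READER_TYPE:
            raise ValueError(f"no mapping for Hive type: {hive_type}")
        columns.append({"index": index, "type": HIVE_TO_READER_TYPE[key]})
    return columns


# Column types of the student table: id, name, age, gender, clazz, last_mod.
student_types = ["int", "string", "int", "string", "string", "timestamp"]
for column in reader_columns(student_types):
    print(column)
```

For the student table this reproduces the six `column` entries used in the script, with the timestamp column mapped to date. Parameterized types such as `varchar(20)` are not handled and raise an error in this sketch.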

3.1.3. Running the script

datax.py HiveToMySQL.json

Check the student table in MySQL. If the data is complete, the import has succeeded.