*A Beginner's Path in Academic Research, Part 09*: Choosing the Optimal Number of LDA Topics with Perplexity and Cosine Similarity

1. Method One: Based on Perplexity

1.1 Loading the text

```python
from gensim import corpora, models

def ldamodel(num_topics):
    # Read the raw text; each line is one document
    train = []
    with open(r'C:\Users\N\Desktop\senti_data (负) .csv', encoding='gb18030') as cop:
        for line in cop:
            line = [word.strip() for word in line.split(' ')]
            train.append(line)  # list of lists: one token list per document

    dictionary = corpora.Dictionary(train)
    # Each document becomes (word id, count) pairs, e.g. (0, 1), (1, 1), (2, 1), ...
    corpus = [dictionary.doc2bow(text) for text in train]
    corpora.MmCorpus.serialize('corpus.mm', corpus)  # reused by the perplexity step

    # random_state works like random.seed(): it fixes the seed so that
    # every run produces the same topics
    lda = models.LdaModel(corpus=corpus, id2word=dictionary, random_state=1,
                          num_topics=num_topics)

    # Optional: print the top 10 words of each topic
    # for topic in lda.print_topics(num_topics, 10):
    #     print(topic)
    return lda, dictionary
```
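To make the `(word id, count)` format of `corpus` concrete, here is a minimal pure-Python sketch of the bag-of-words step. It is only an illustration: `build_vocab` and `to_bow` are hypothetical helpers, not gensim's implementation, and gensim's `Dictionary` may number words in a different order.

```python
from collections import Counter

def build_vocab(docs):
    """Hypothetical helper: give each distinct token an id in order of first appearance."""
    vocab = {}
    for doc in docs:
        for token in doc:
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def to_bow(doc, vocab):
    """Hypothetical helper: sorted (word_id, count) pairs; unknown tokens are skipped."""
    counts = Counter(vocab[token] for token in doc if token in vocab)
    return sorted(counts.items())

docs = [["price", "too", "high"], ["too", "slow", "too", "slow"]]
vocab = build_vocab(docs)
print([to_bow(d, vocab) for d in docs])
# [[(0, 1), (1, 1), (2, 1)], [(1, 2), (3, 2)]]
```

Repeated words collapse into a single pair with a higher count, which is why the perplexity step later has to weight each word by its count.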

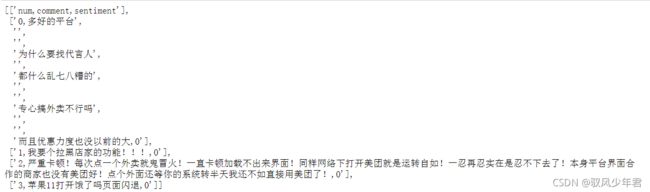

The output of `train` above: the text has not yet gone through word segmentation or stopword removal.

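So we modify the function to segment the text and remove stopwords first, and then compute perplexity on the cleaned words: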

```python
import jieba
from gensim import corpora, models

# Stopword list
stopwords_path = r'C:\Users\N\Desktop\2021-09-情感分析研究\stopwords-master\userstopwords.txt'

print('start read stopwords data.')
stopwords = set()  # a set makes the "not in" lookups fast
with open(stopwords_path, 'r', encoding='utf-8') as f:
    for line in f:
        word = line.strip()
        if word:  # skip blank lines (len(line) > 0 before strip() never skips them)
            stopwords.add(word)

def tokenizer(s):
    # Segment with jieba and drop stopwords
    return [word for word in jieba.cut(s) if word not in stopwords]

def ldamodel(num_topics):
    train = []
    with open(r'C:\Users\N\Desktop\senti_data (负) .csv', encoding='gb18030') as cop:
        for line in cop:
            # Remove the newline and all spaces, then segment and filter
            train.append(tokenizer(line.strip().replace(' ', '')))

    dictionary = corpora.Dictionary(train)
    # Each document becomes (word id, count) pairs, e.g. (0, 1), (1, 1), (2, 1), ...
    corpus = [dictionary.doc2bow(text) for text in train]
    corpora.MmCorpus.serialize('corpus.mm', corpus)

    # random_state fixes the seed so that every run produces the same topics
    lda = models.LdaModel(corpus=corpus, id2word=dictionary, random_state=1,
                          num_topics=num_topics)
    return lda, dictionary
```
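The tokenizer is just "segment, then filter". A minimal sketch of the same idea with the segmenter passed in as a parameter, so it can be tried without jieba; `make_tokenizer` is a hypothetical helper, and dropping whitespace-only tokens is an extra precaution not present in the original.

```python
def make_tokenizer(segment, stopwords):
    """Hypothetical helper: wrap any segmenter (for example jieba.cut) with stopword filtering."""
    stop = set(stopwords)

    def tokenize(text):
        # keep tokens that are not blank and not stopwords
        return [w for w in segment(text) if w.strip() and w not in stop]

    return tokenize

# str.split stands in for jieba.cut so the example runs without jieba
tokenize = make_tokenizer(str.split, ["the", "is"])
print(tokenize("the delivery is very slow"))  # ['delivery', 'very', 'slow']
```

With jieba installed, `make_tokenizer(jieba.cut, stopwords)` would behave like the `tokenizer` function above.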

1.2 Defining the perplexity function

```python
import math

def perplexity(ldamodel, testset, dictionary, size_dictionary, num_topics):
    print('the info of this ldamodel: \n')
    print('num of topics: %s' % num_topics)

    # p(w|z): word distribution of each topic, stored as {word: probability}
    topic_word_list = []
    for topic_id in range(num_topics):
        topic_word = ldamodel.show_topic(topic_id, size_dictionary)
        topic_word_list.append(dict(topic_word))

    # p(z|d): topic distribution of each test document, stored as {topic_id: probability}
    # (gensim can still drop topics with a tiny probability, so use .get(..., 0.0) below)
    doc_topics_list = []
    for doc in testset:
        doc_topics_list.append(dict(ldamodel.get_document_topics(doc, minimum_probability=0)))

    prob_doc_sum = 0.0
    testset_word_num = 0
    for i, doc in enumerate(testset):
        prob_doc = 0.0  # log-probability of the document
        doc_word_num = 0
        for word_id, num in dict(doc).items():
            word = dictionary[word_id]
            # p(w) = sum_z p(z) * p(w|z)
            prob_word = sum(doc_topics_list[i].get(topic_id, 0.0) * topic_word_list[topic_id][word]
                            for topic_id in range(num_topics))
            # log p(d) = sum over tokens of log p(w); a word seen num times counts num times
            prob_doc += num * math.log(prob_word)
            doc_word_num += num
        prob_doc_sum += prob_doc
        testset_word_num += doc_word_num

    prep = math.exp(-prob_doc_sum / testset_word_num)  # perplexity = exp(-sum(log p(d)) / sum(N_d))
    print("Model perplexity: %s" % prep)
    return prep
```
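The quantity being computed is the standard perplexity $\exp\left(-\frac{\sum_d \sum_{w \in d} n_{dw} \log p(w \mid d)}{\sum_d N_d}\right)$ with $p(w \mid d) = \sum_z p(z \mid d)\,p(w \mid z)$. As a sanity check, here is a gensim-free sketch on hand-made matrices; `toy_perplexity`, `theta` (document-topic) and `phi` (topic-word) are hypothetical toy values, not the output of a trained model. If every topic spreads its probability evenly over $V$ words, the perplexity should be exactly $V$.

```python
import math

def toy_perplexity(docs, theta, phi):
    """docs: bag-of-words lists [(word_id, count), ...];
    theta[d][z] = p(z|d); phi[z][w] = p(w|z)."""
    log_likelihood = 0.0
    total_words = 0
    for d, doc in enumerate(docs):
        for word_id, count in doc:
            # p(w|d) = sum_z p(z|d) * p(w|z)
            p_w = sum(theta[d][z] * phi[z][word_id] for z in range(len(phi)))
            log_likelihood += count * math.log(p_w)
            total_words += count
    return math.exp(-log_likelihood / total_words)

docs = [[(0, 2), (3, 1)], [(1, 1), (2, 4)]]

# Two topics that are both uniform over a 4-word vocabulary: perplexity is about 4
uniform_phi = [[0.25] * 4, [0.25] * 4]
theta = [[0.7, 0.3], [0.5, 0.5]]
print(toy_perplexity(docs, theta, uniform_phi))

# Sharper topics that match the documents: every word gets p = 0.41, perplexity = 1 / 0.41
sharp_phi = [[0.45, 0.05, 0.05, 0.45], [0.05, 0.45, 0.45, 0.05]]
theta_sharp = [[0.9, 0.1], [0.1, 0.9]]
print(toy_perplexity(docs, theta_sharp, sharp_phi))
```

Lower perplexity means the model assigns higher probability to the test words, which is why the curve in the next step is read for low values.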

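1.3 Main function

The main script trains a model for each number of topics from 1 to 19, samples a test set from the serialized corpus, computes perplexity, and plots perplexity against the number of topics: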

```python
from gensim import corpora, models
import matplotlib.pyplot as plt

# Assumes ldamodel() and perplexity() above are defined in the same script

def graph_draw(topics, perplexities):  # line chart of number of topics vs. perplexity
    plt.plot(topics, perplexities, color="red", linewidth=2)
    plt.xlabel("Number of topics")
    plt.ylabel("Perplexity")
    plt.show()

if __name__ == '__main__':
    # Use one document out of every i as the test set. Looping over i is only
    # for tuning the sampling step; a single fixed value works without the loop.
    for i in range(20, 300, 1):
        print("perplexity with sampling step " + str(i))
        a = range(1, 20, 1)  # numbers of topics to try
        p = []
        for num_topics in a:
            lda, dictionary = ldamodel(num_topics)
            corpus = corpora.MmCorpus('corpus.mm')
            testset = [corpus[c * i] for c in range(corpus.num_docs // i)]
            prep = perplexity(lda, testset, dictionary, len(dictionary), num_topics)
            p.append(prep)
        graph_draw(a, p)
```
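The test set above takes every i-th document starting from document 0, and those documents are also part of the corpus that `ldamodel` trains on. If you want perplexity on unseen text instead, one option (a swapped-in variant, not the author's procedure) is to hold the same documents out of training. The helpers below are hypothetical, and the perplexity values in the example are made up; picking the lowest value is one simple selection rule, the alternative being to read the plotted curve by eye.

```python
def split_every_nth(docs, step):
    """Hypothetical helper: hold out every step-th document starting at index 0
    (the same indices the main function samples) and keep the rest for training."""
    n_test = len(docs) // step
    test_idx = set(range(0, n_test * step, step))
    test = [docs[j] for j in sorted(test_idx)]
    train = [doc for j, doc in enumerate(docs) if j not in test_idx]
    return train, test

def lowest_perplexity_k(ks, perplexities):
    """Hypothetical helper: return the number of topics with the lowest perplexity."""
    return min(zip(perplexities, ks))[1]

train, test = split_every_nth(list(range(10)), 3)
print(test)   # [0, 3, 6]
print(train)  # [1, 2, 4, 5, 7, 8, 9]
print(lowest_perplexity_k([1, 2, 3], [120.0, 95.5, 101.2]))  # 2
```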

2. Method Two: Based on Cosine Similarity

2.1 Loading the data

```python
import re
import itertools

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from gensim import corpora, models

# Load the data produced by the sentiment-analysis step
posdata = pd.read_csv("./posdata.csv", encoding='utf-8')
negdata = pd.read_csv("./negdata.csv", encoding='utf-8')

# Build the dictionaries; each entry of the 'word' column becomes a one-token document
pos_dict = corpora.Dictionary([[w] for w in posdata['word']])  # positive
neg_dict = corpora.Dictionary([[w] for w in negdata['word']])  # negative

# Build the corpora
pos_corpus = [pos_dict.doc2bow([w]) for w in posdata['word']]  # positive
neg_corpus = [neg_dict.doc2bow([w]) for w in negdata['word']]  # negative
```

2.2 Finding the optimal number of topics

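Here we use a similarity-based adaptive method for selecting the optimal LDA model to fix the number of topics, and then carry out the topic analysis. Experiments reported for this method indicate that it can find the optimal topic structure in relatively few iterations, without tuning the number of topics by hand.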

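The procedure is as follows:

- Choose an initial number of topics k, train an initial model, and compute the similarity between its topics (the mean cosine similarity over all pairs of topics).

- Increase or decrease k, retrain the model, and compute the similarity between topics again.

- Repeat the previous step until the optimal k is found, i.e. the k at which the topics are least similar to one another.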
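One way to turn these steps into code is a local search that keeps moving k in whichever direction lowers the mean topic similarity and stops when neither neighbour improves. This is a sketch under the assumption that "optimal" means such a local minimum; `adaptive_k_search` and the toy score are hypothetical. In the real workflow `score(k)` would train an LDA model with k topics and return its mean cosine similarity, as `lda_k` does below.

```python
def adaptive_k_search(score, k_start, k_min=2, k_max=20):
    """Hypothetical sketch: move k up or down while score(k) keeps decreasing;
    return the local minimum and every score that was computed."""
    cache = {}

    def s(k):
        if k not in cache:
            cache[k] = score(k)  # in practice: train an LDA model with k topics
        return cache[k]

    k = k_start
    while True:
        neighbours = [n for n in (k - 1, k + 1) if k_min <= n <= k_max]
        best = min(neighbours, key=s, default=k)
        if s(best) >= s(k):
            return k, cache
        k = best

# Toy score with its minimum at k = 5 (stands in for the mean cosine similarity)
k, evaluated = adaptive_k_search(lambda k: (k - 5) ** 2 + 0.1, k_start=2)
print(k)                  # 5
print(sorted(evaluated))  # [2, 3, 4, 5, 6]
```

Caching matters because each evaluation is a full model training. A local search can stop at a local minimum, which is one reason to scan a fixed range of k exhaustively, as the code below does for k = 2 to 10.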

```python
# Cosine similarity of two vectors
def cos(vector1, vector2):
    dot_product = 0.0
    normA = 0.0
    normB = 0.0
    for a, b in zip(vector1, vector2):
        dot_product += a * b
        normA += a ** 2
        normB += b ** 2
    if normA == 0.0 or normB == 0.0:
        return None  # undefined for a zero vector
    return dot_product / ((normA * normB) ** 0.5)
```

```python
# Search for the number of topics
def lda_k(x_corpus, x_dict):
    # Mean cosine similarity for each k; k = 1 has no topic pairs, so 1 is a placeholder
    mean_similarity = [1]

    # Train a model for k = 2..10 and compute the similarity between its topics
    for k in range(2, 11):
        # Train the LDA model (random_state=1 added for reproducible runs, as in method one)
        lda = models.LdaModel(x_corpus, num_topics=k, id2word=x_dict, random_state=1)

        # Top 50 words of each topic (same words the original regex extracted from show_topics)
        top_word = [[word for word, _ in lda.show_topic(t, topn=50)] for t in range(k)]

        # All topic words without duplicates, in a fixed order
        unique_word = sorted(set(itertools.chain.from_iterable(top_word)))

        # Word-count matrix: one row per topic, one column per word
        mat = [tuple(words.count(w) for w in unique_word) for words in top_word]

        # Cosine similarity of every pair of topics; cos is symmetric, so
        # combinations gives the same mean as iterating over permutations
        top_similarity = [cos(mat[a], mat[b]) for a, b in itertools.combinations(range(k), 2)]
        top_similarity = [s for s in top_similarity if s is not None]

        # Mean cosine similarity between topics for this k
        mean_similarity.append(sum(top_similarity) / len(top_similarity))

    return mean_similarity
```
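The core of `lda_k` is "turn each topic's top words into a count vector, then average the cosine similarity over all pairs of topics". The sketch below restates just that part without gensim or NumPy so it can be checked by hand. `mean_topic_similarity` is a hypothetical helper that takes each topic's top-word list (for example the words returned by gensim's `LdaModel.show_topic`); lower values mean the topics share fewer words and are more distinct.

```python
import itertools
import math
from collections import Counter

def mean_topic_similarity(top_words):
    """Hypothetical helper: mean pairwise cosine similarity of word-count vectors,
    one vector per topic; returns None if no pair can be compared."""
    vectors = [Counter(words) for words in top_words]
    sims = []
    for u, v in itertools.combinations(vectors, 2):
        dot = sum(count * v[w] for w, count in u.items())
        norm = math.sqrt(sum(c * c for c in u.values()) * sum(c * c for c in v.values()))
        if norm > 0:
            sims.append(dot / norm)
    return sum(sims) / len(sims) if sims else None

print(mean_topic_similarity([["price", "slow"], ["price", "service"]]))  # 0.5
print(mean_topic_similarity([["a", "b"], ["c", "d"], ["e", "f"]]))       # 0.0
```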

```python
# Mean cosine similarity between topics for each k
pos_k = lda_k(pos_corpus, pos_dict)
neg_k = lda_k(neg_corpus, neg_dict)
```

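```python
# Plot the mean cosine similarity between topics against the number of topics
import matplotlib.pyplot as plt
from matplotlib.font_manager import FontProperties

font = FontProperties(size=14)
fig = plt.figure(figsize=(10, 8))

# Index 0 of pos_k / neg_k corresponds to k = 1, so put k on the x-axis
ax1 = fig.add_subplot(211)
ax1.plot(range(1, len(pos_k) + 1), pos_k)
ax1.set_xlabel('Number of topics (positive reviews)', fontproperties=font)
ax1.set_ylabel('Mean cosine similarity', fontproperties=font)

ax2 = fig.add_subplot(212)
ax2.plot(range(1, len(neg_k) + 1), neg_k)
ax2.set_xlabel('Number of topics (negative reviews)', fontproperties=font)
ax2.set_ylabel('Mean cosine similarity', fontproperties=font)

plt.tight_layout()
plt.show()
```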
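The optimum can also be read off programmatically instead of from the plot. A minimal sketch, assuming the rule is "pick the k with the smallest mean cosine similarity"; `k_with_lowest_similarity` is a hypothetical helper and the numbers in the example are made up.

```python
def k_with_lowest_similarity(mean_similarity):
    """Hypothetical helper: index j of the list returned by lda_k corresponds to
    k = j + 1 (index 0 is the placeholder 1 for k = 1); return the k with the
    smallest mean similarity."""
    j = min(range(len(mean_similarity)), key=mean_similarity.__getitem__)
    return j + 1

print(k_with_lowest_similarity([1, 0.42, 0.31, 0.18, 0.25]))  # 4
```

With the real results this would be called as `k_with_lowest_similarity(pos_k)` and `k_with_lowest_similarity(neg_k)`.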