Generative models in Hugging Face

GPT2

Training

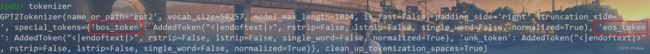

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")
    # Passing labels makes the model return the language-modeling loss
    outputs = model(**inputs, labels=inputs["input_ids"])
    loss = outputs.loss
    print(loss)
    logits = outputs.logits
    print(logits)

As shown, the tokenizer defines both a BOS and an EOS token (for GPT-2 both are <|endoftext|>).
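When labels are set to the input ids themselves, the model shifts them internally so that the logits at position t are scored against the token at position t + 1. The following is a minimal pure-Python sketch of that next-token cross-entropy, using made-up toy logits rather than real GPT-2 outputs; the function name causal_lm_loss is hypothetical.

```python
import math

def causal_lm_loss(logits, labels):
    """Mean next-token cross-entropy: logits[t] is scored against labels[t + 1]."""
    total = 0.0
    count = 0
    for t in range(len(labels) - 1):
        row = logits[t]
        # log-sum-exp with max subtraction for numerical stability
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[labels[t + 1]]
        count += 1
    return total / count

# Toy example (assumed values): vocabulary of 4 tokens, sequence of 3 tokens.
toy_logits = [[0.0, 0.0, 0.0, 0.0] for _ in range(3)]
toy_labels = [2, 0, 3]
toy_loss = causal_lm_loss(toy_logits, toy_labels)
print(toy_loss)  # uniform logits give log(4)
```

The last position has no next token to predict, which is why only len(labels) - 1 terms enter the average.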

inference / generate

The default is greedy search.

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    inputs = tokenizer("Hello, my dog is cute and ", return_tensors="pt")
    # ipdb> inputs.keys()
    # dict_keys(['input_ids', 'attention_mask'])

    generation_output = model.generate(
        # **inputs,
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        return_dict_in_generate=True,
        output_scores=True,
    )
    gen_texts = tokenizer.batch_decode(
        generation_output["sequences"],
        skip_special_tokens=True,
    )
    print(gen_texts)

Note that the generated result includes the input prompt.
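To make the idea concrete, here is a small sketch of greedy decoding over a toy scoring function. The function toy_next_token_scores is a hypothetical stand-in for a language model, not part of transformers; the loop simply appends the argmax token until EOS or max_length is reached, and the returned sequence starts with the prompt.

```python
def toy_next_token_scores(sequence):
    """Hypothetical stand-in for a language model over a 5-token vocabulary."""
    vocab_size = 5
    last = sequence[-1]
    # Prefer the token that cyclically follows the last one; token 4 plays the role of EOS.
    return [1.0 if tok == (last + 1) % vocab_size else 0.0 for tok in range(vocab_size)]

def greedy_decode(prompt, max_length, eos_token_id):
    sequence = list(prompt)  # the output starts with the prompt itself
    while len(sequence) < max_length:
        scores = toy_next_token_scores(sequence)
        next_token = max(range(len(scores)), key=scores.__getitem__)  # argmax
        sequence.append(next_token)
        if next_token == eos_token_id:
            break
    return sequence

greedy_result = greedy_decode(prompt=[0, 1], max_length=10, eos_token_id=4)
print(greedy_result)
```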

beam_search

You only need to pass the num_beams argument.

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    inputs = tokenizer("Hello, my dog is cute and ", return_tensors="pt")
    # ipdb> inputs.keys()
    # dict_keys(['input_ids', 'attention_mask'])

    generation_output = model.generate(
        # **inputs,
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        return_dict_in_generate=True,
        output_scores=True,
        num_beams=5,
        max_length=50,
        early_stopping=True,
    )
    gen_texts = tokenizer.batch_decode(
        generation_output["sequences"],
        skip_special_tokens=True,
    )
    print(gen_texts)

With early_stopping=True, generation stops once all beam hypotheses have reached the EOS token.

The generated output still contains repetitions.
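For intuition, beam search can be sketched on a toy model whose next-token probabilities depend only on the last token. The table TOY_PROBS and all values in it are assumptions made for illustration. The sketch keeps the num_beams best partial sequences by cumulative log-probability and stops once num_beams hypotheses have finished, which loosely mirrors early stopping; it omits the length normalization that real implementations usually apply.

```python
import math

# Hypothetical toy "model": next-token probabilities given the last token.
# Token 2 acts as EOS.
TOY_PROBS = {
    0: [0.1, 0.6, 0.3],
    1: [0.1, 0.1, 0.8],
}
EOS = 2

def beam_search(prompt, num_beams, max_length):
    beams = [(0.0, list(prompt))]  # (cumulative log-probability, tokens)
    finished = []
    while beams and len(finished) < num_beams:
        # Expand every live beam by every possible next token.
        candidates = []
        for score, tokens in beams:
            for tok, p in enumerate(TOY_PROBS[tokens[-1]]):
                candidates.append((score + math.log(p), tokens + [tok]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        # Keep the best num_beams candidates; finished ones leave the beam.
        beams = []
        for score, tokens in candidates[:num_beams]:
            if tokens[-1] == EOS or len(tokens) >= max_length:
                finished.append((score, tokens))
            else:
                beams.append((score, tokens))
    return sorted(finished, key=lambda c: c[0], reverse=True)

beam_results = beam_search(prompt=[0], num_beams=2, max_length=4)
for score, tokens in beam_results:
    print(tokens, round(math.exp(score), 2))
```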

Introducing an n-gram penalty

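A simple remedy is to introduce the n-gram (that is, a sequence of n words) penalty described by Paulus et al. (2017) and Klein et al. (2017). The most common n-gram penalty ensures that no n-gram appears twice by manually setting to 0 the probability of any next word that would create an already seen n-gram.

However, n-gram penalties must be used with care. An article about New York City should not use a 2-gram penalty; otherwise the name of the city would appear only once in the whole text!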

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    inputs = tokenizer("Hello, my dog is cute and ", return_tensors="pt")
    # ipdb> inputs.keys()
    # dict_keys(['input_ids', 'attention_mask'])

    generation_output = model.generate(
        # **inputs,
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        return_dict_in_generate=True,
        output_scores=True,
        num_beams=5,
        max_length=50,
        early_stopping=True,
        # Use n-gram penalties with care: an article about New York City should not
        # use a 2-gram penalty, or the city's name would appear only once in the text!
        no_repeat_ngram_size=2,
    )
    gen_texts = tokenizer.batch_decode(
        generation_output["sequences"],
        skip_special_tokens=True,
    )
    print(gen_texts)

Returning multiple beam search results

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    inputs = tokenizer("Hello, my dog is cute and ", return_tensors="pt")
    # ipdb> inputs.keys()
    # dict_keys(['input_ids', 'attention_mask'])

    generation_output = model.generate(
        # **inputs,
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        return_dict_in_generate=True,
        output_scores=True,
        num_beams=5,
        max_length=50,
        early_stopping=True,
        num_return_sequences=5,
    )
    for i, beam_output in enumerate(generation_output["sequences"]):
        print("{}: {}".format(i, tokenizer.decode(beam_output, skip_special_tokens=True)))

As seen above, beam search output can still be repetitive, while the n-gram penalty is too blunt and cannot be used in some settings. A better alternative is sampling.

As Ari Holtzman et al. (2019) argue, high-quality human language does not follow a distribution of high-probability next words. In other words, as humans we want generated text to surprise us rather than be boring or predictable. The authors show this nicely by plotting the probability a model assigns to human text against what beam search does.

This is beam-search multinomial sampling:

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    inputs = tokenizer("Hello, my dog is cute and ", return_tensors="pt")
    # ipdb> inputs.keys()
    # dict_keys(['input_ids', 'attention_mask'])

    generation_output = model.generate(
        # **inputs,
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        return_dict_in_generate=True,
        output_scores=True,
        num_beams=5,
        max_length=50,
        early_stopping=True,
        do_sample=True,
    )
    gen_texts = tokenizer.batch_decode(
        generation_output["sequences"],
        skip_special_tokens=True,
    )
    print(gen_texts)

Setting do_sample=True enables strategies such as multinomial sampling, beam-search multinomial sampling, top-k sampling, and top-p sampling. All of these strategies pick the next token from the probability distribution over the entire vocabulary, with strategy-specific adjustments applied to that distribution.
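The strategy-specific adjustments can be illustrated with a short sketch of top-k and top-p (nucleus) filtering followed by multinomial sampling. The function names and the toy distribution are assumptions made for this example; real implementations operate on logits tensors rather than Python lists.

```python
import random

def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}

def top_p_filter(probs, p):
    """Keep the smallest set of most probable tokens whose cumulative mass reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, mass = [], 0.0
    for i in order:
        keep.append(i)
        mass += probs[i]
        if mass >= p:
            break
    return {i: probs[i] / mass for i in keep}

def sample(filtered, rng):
    """Draw one token id from the filtered, renormalized distribution."""
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

toy_probs = [0.5, 0.3, 0.15, 0.05]          # assumed next-token distribution
top2 = top_k_filter(toy_probs, k=2)         # keeps tokens 0 and 1
nucleus = top_p_filter(toy_probs, p=0.9)    # keeps tokens 0, 1 and 2
rng = random.Random(0)
drawn = sample(nucleus, rng)
print(top2, nucleus, drawn)
```

Top-k fixes the number of candidates, while top-p lets the candidate set grow or shrink with how peaked the distribution is.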

How to generate text: using different decoding methods for language generation with Transformers

Inspecting a model's generation-related config parameters

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")
    print(model.generation_config)

The printed model.generation_config shows only the values that differ from the default generation configuration; it does not list any of the defaults.

The default max_length is 20.

The default decoding strategy is greedy search.

Saving a (frequently used) generation configuration

    from transformers import GPT2Tokenizer, GPT2LMHeadModel, GenerationConfig

    model = GPT2LMHeadModel.from_pretrained("gpt2")
    generation_config = GenerationConfig(
        max_new_tokens=50,
        do_sample=True,
        top_k=50,
        eos_token_id=model.config.eos_token_id,
    )
    generation_config.save_pretrained("my_generation_config")

Another way to write generation

greedy search

    from transformers import (
        GPT2Tokenizer,
        GPT2LMHeadModel,
        LogitsProcessorList,
        MinLengthLogitsProcessor,
        StoppingCriteriaList,
        MaxLengthCriteria,
    )

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    # set pad_token_id to eos_token_id because GPT2 does not have a PAD token
    model.generation_config.pad_token_id = model.generation_config.eos_token_id

    inputs = tokenizer("Hello, my dog is cute and ", return_tensors="pt")
    # ipdb> inputs.keys()
    # dict_keys(['input_ids', 'attention_mask'])

    # instantiate logits processors
    logits_processor = LogitsProcessorList(
        [
            MinLengthLogitsProcessor(10, eos_token_id=model.generation_config.eos_token_id),
        ]
    )
    stopping_criteria = StoppingCriteriaList([MaxLengthCriteria(max_length=20)])

    # Note: greedy_search is a legacy entry point; newer transformers releases
    # deprecate and remove it in favor of generate().
    generation_output = model.greedy_search(
        inputs["input_ids"],
        logits_processor=logits_processor,
        stopping_criteria=stopping_criteria,
    )
    gen_texts = tokenizer.batch_decode(
        generation_output,
        skip_special_tokens=True,
    )
    print(gen_texts)

beam search

You can even pass encoder-related information!

    from transformers import (
        AutoTokenizer,
        AutoModelForSeq2SeqLM,
        LogitsProcessorList,
        MinLengthLogitsProcessor,
        BeamSearchScorer,
    )
    import torch

    tokenizer = AutoTokenizer.from_pretrained("t5-base")
    model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

    encoder_input_str = "translate English to German: How old are you?"
    encoder_input_ids = tokenizer(encoder_input_str, return_tensors="pt").input_ids

    # lets run beam search using 3 beams
    num_beams = 3
    # define decoder start token ids
    input_ids = torch.ones((num_beams, 1), device=model.device, dtype=torch.long)
    input_ids = input_ids * model.config.decoder_start_token_id

    # add encoder_outputs to model keyword arguments
    model_kwargs = {
        "encoder_outputs": model.get_encoder()(
            encoder_input_ids.repeat_interleave(num_beams, dim=0), return_dict=True
        )
    }

    # instantiate beam scorer
    beam_scorer = BeamSearchScorer(
        batch_size=1,
        num_beams=num_beams,
        device=model.device,
    )

    # instantiate logits processors
    logits_processor = LogitsProcessorList(
        [
            MinLengthLogitsProcessor(5, eos_token_id=model.config.eos_token_id),
        ]
    )

    # Note: beam_search is a legacy entry point; newer transformers releases
    # deprecate and remove it in favor of generate().
    outputs = model.beam_search(input_ids, beam_scorer, logits_processor=logits_processor, **model_kwargs)
    gen_texts = tokenizer.batch_decode(outputs, skip_special_tokens=True)
    print(gen_texts)

Differences between the GPT and BERT tokenizers

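In the transformers library, the GPT tokenizer needs to read two files, vocab_file and merges_file, unlike BertTokenizer, which only needs to read a single vocabulary file, vocab_file. The main reason is that the two models use different encodings: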

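- BERT uses WordPiece, a character-based subword scheme similar to BPE, and ships the vocabulary file directly. The official vocabulary contains about 30k entries, and each entry's position in the vocabulary is its index in the embedding matrix. BERT reserves [unused] slots (roughly 100 at the start of the vocabulary) so that users can manually add important tokens from their own data.
- GPT uses byte-level BPE; the official vocabulary contains a little over 50k byte-level tokens.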

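merges.txt stores the BPE merge rules in priority order, while vocab.json maps each byte-level token to its index; tokens that are merged earlier, which are generally the more frequent ones, tend to have smaller indices. The conversion therefore has two steps: first split the input text into byte-level tokens by applying the merges in merges.txt, then convert those tokens to indices with the dictionary in vocab.json.

For GPT, suppose the input text is

    What's up with the tokenizer?

First, merges.txt is used to convert it into the corresponding byte-level tokens (similar to a normalization step):

    ['What', "'s", 'Ġup', 'Ġwith', 'Ġthe', 'Ġtoken', 'izer', '?']

Then the mapping stored in vocab.json converts them into the corresponding indices:

    ['What', "'s", 'Ġup', 'Ġwith', 'Ġthe', 'Ġtoken', 'izer', '?']
    ---- becomes ----
    [2061, 338, 510, 351, 262, 11241, 7509, 30]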
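The two-step conversion can be sketched with a toy BPE encoder. The merge table toy_merges and the vocabulary toy_vocab below are made up for illustration and are not the real GPT-2 files; the real tokenizer also pre-splits text with a regular expression and works on bytes mapped to printable characters, which this sketch leaves out.

```python
def apply_bpe(word, merges):
    """Greedily apply merge rules (earlier rule = higher priority) to one word."""
    ranks = {pair: rank for rank, pair in enumerate(merges)}
    symbols = list(word)
    while len(symbols) > 1:
        pairs = [(symbols[i], symbols[i + 1]) for i in range(len(symbols) - 1)]
        best = min(pairs, key=lambda pair: ranks.get(pair, float("inf")))
        if best not in ranks:
            break  # no applicable merge rule is left
        merged, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == best:
                merged.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols

# Hypothetical merge table (plays the role of merges.txt)
toy_merges = [("t", "o"), ("k", "e"), ("to", "ke"), ("toke", "n"), ("e", "r")]
# Hypothetical token-to-index mapping (plays the role of vocab.json)
toy_vocab = {"token": 0, "i": 1, "z": 2, "er": 3}

toy_tokens = apply_bpe("tokenizer", toy_merges)   # step 1: merges
toy_ids = [toy_vocab[t] for t in toy_tokens]      # step 2: vocabulary lookup
print(toy_tokens, toy_ids)
```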

BERT

Training

    from transformers import BertModel, BertTokenizer

    # Load the pretrained model and tokenizer
    model = BertModel.from_pretrained('bert-large-uncased')
    tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')

    # Process the input text and encode it
    text = "This is a test sentence."
    input_ids = tokenizer.encode(text, add_special_tokens=True, return_tensors='pt')  # input_ids is [bs, l]

    # Encode the input with BertModel; hidden_states is only returned
    # when output_hidden_states=True is requested
    outputs = model(input_ids, output_hidden_states=True)
    last_hidden_state = outputs.last_hidden_state  # [bs, l, dim]
    all_hidden_states = outputs.hidden_states

The tokenizer is

BERT as encoder and decoder

    from transformers import BertGenerationEncoder, BertGenerationDecoder

    encoder = BertGenerationEncoder.from_pretrained(
        "bert-large-uncased", bos_token_id=101, eos_token_id=102
    )
    # add cross attention layers and use BERT's cls token as BOS token and sep token as EOS token
    decoder = BertGenerationDecoder.from_pretrained(
        "bert-large-uncased",
        add_cross_attention=True,
        is_decoder=True,
        bos_token_id=101,
        eos_token_id=102,
    )

Verifying that it is a decoder

    # verify the decoder (inside a wrapper class that holds it as self.decoder)
    self.decoder.config.is_decoder
    self.decoder.config.add_cross_attention

Differences between BertModel and BertGenerationEncoder

Common operations

Passing inputs_embeds to generate()

This requires a recent transformers release (4.30.0 was the latest at the time of writing).

    from transformers import AutoModelForCausalLM, AutoTokenizer

    model = AutoModelForCausalLM.from_pretrained("gpt2")
    tokenizer = AutoTokenizer.from_pretrained("gpt2")

    text = "Hello world"
    input_ids = tokenizer.encode(text, return_tensors="pt")

    # Traditional way of generating text
    outputs = model.generate(input_ids)
    print("\ngenerate + input_ids:", tokenizer.decode(outputs[0], skip_special_tokens=True))

    # From inputs_embeds -- exact same output if you also pass `input_ids`. If you don't
    # pass `input_ids`, you will get the same generated content but without the prompt
    inputs_embeds = model.transformer.wte(input_ids)
    outputs = model.generate(input_ids, inputs_embeds=inputs_embeds)
    print("\ngenerate + inputs_embeds:", tokenizer.decode(outputs[0], skip_special_tokens=True))

Passing inputs_embeds into GenerationMixin.generate() · Issue #6535 · huggingface/transformers · GitHub