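A Kernel Module Implementation of Fine-Grained Resource Locks

The kernel code below is modeled on the core of the Linux kernel's fine-grained lock synchronization. It creates one lock for each function of each resource, with one bit of the resource's `flags` word per lock. It can be used to protect and synchronize all kinds of resources.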
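Outside the kernel, a per-bit lock comes down to two atomic operations on one word. The following is a minimal userspace sketch that assumes C11 `<stdatomic.h>`:

- `atomic_fetch_or` with acquire ordering stands in for `test_and_set_bit_lock()`.
- `atomic_fetch_and` with release ordering stands in for `clear_bit_unlock()`.

`struct bit_res`, `bit_trylock()` and `bit_unlock()` are hypothetical names. The sketch covers only the non-blocking part, not the sleeping wait.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical resource: every bit of `flags` is an independent lock. */
struct bit_res {
	atomic_ulong flags;
};

/* Try to take lock `bit`; returns true if it was free and is now held.
 * Acquire ordering keeps the critical section from moving above it. */
static bool bit_trylock(struct bit_res *r, int bit)
{
	unsigned long mask = 1UL << bit;

	return !(atomic_fetch_or_explicit(&r->flags, mask,
					  memory_order_acquire) & mask);
}

/* Release lock `bit`; release ordering publishes the critical section. */
static void bit_unlock(struct bit_res *r, int bit)
{
	atomic_fetch_and_explicit(&r->flags, ~(1UL << bit),
				  memory_order_release);
}
```

Taking bit 2 does not affect bit 1. This is exactly why one word can carry many independent locks.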

```c
#include <linux/module.h>
#include <linux/kernel.h>
#include <linux/init.h>
#include <linux/slab.h>
#include <linux/kthread.h>
#include <linux/completion.h>
#include <linux/wait.h>
#include <linux/sched.h>
#include <linux/sched/signal.h>
#include <linux/hash.h>
#include <linux/bitops.h>
#include <linux/atomic.h>

#define RES_WAIT_TABLE_BITS 5
#define RES_WAIT_TABLE_SIZE (1 << RES_WAIT_TABLE_BITS)

struct res_def {
	unsigned long flags;	/* each bit is an independent lock */
	void *private;
	atomic_t refcount;	/* non-zero: someone may be waiting */
};

enum behavior {
	EXCLUSIVE,	/* wait for the bit to clear, then take it */
	SHARED,		/* only wait for the bit to clear */
};

struct wait_res_queue {
	struct res_def *res;
	int bit_nr;
	wait_queue_entry_t wait;
};

struct wait_res_key {
	struct res_def *res;
	int bit_nr;
	int res_match;
};

/* All resources share this small hashed table of wait queues. */
static wait_queue_head_t res_wait_table[RES_WAIT_TABLE_SIZE] __cacheline_aligned;

static wait_queue_head_t *res_waitqueue(struct res_def *res)
{
	/* hash_ptr(): val * GOLDEN_RATIO_64 >> (64 - bits) on 64-bit */
	return &res_wait_table[hash_ptr(res, RES_WAIT_TABLE_BITS)];
}

int res_lock_init(void)
{
	int i;

	for (i = 0; i < RES_WAIT_TABLE_SIZE; i++)
		init_waitqueue_head(&res_wait_table[i]);

	return 0;
}

static int wake_res_function(wait_queue_entry_t *wait, unsigned int mode,
			     int sync, void *arg)
{
	struct wait_res_key *key = arg;
	struct wait_res_queue *wait_res =
		container_of(wait, struct wait_res_queue, wait);

	/* A different resource that hashed to the same queue. */
	if (wait_res->res != key->res)
		return 0;

	key->res_match = 1;

	/* Same resource, but waiting on a different bit. */
	if (wait_res->bit_nr != key->bit_nr)
		return 0;

	/* The bit was taken again in the meantime: stop the wake-up walk. */
	if (test_bit(key->bit_nr, &key->res->flags))
		return -1;

	return autoremove_wake_function(wait, mode, sync, key);
}

static int wait_on_res_bit_common(wait_queue_head_t *q, struct res_def *res,
				  int bit_nr, int state, enum behavior behavior)
{
	struct wait_res_queue wait_res;
	wait_queue_entry_t *wait = &wait_res.wait;
	bool bit_is_set;
	int ret = 0;

	init_wait(wait);
	wait->flags = behavior == EXCLUSIVE ? WQ_FLAG_EXCLUSIVE : 0;
	wait->func = wake_res_function;
	wait_res.res = res;
	wait_res.bit_nr = bit_nr;

	for (;;) {
		spin_lock_irq(&q->lock);
		if (likely(list_empty(&wait->entry))) {
			__add_wait_queue_entry_tail(q, wait);
			atomic_set(&res->refcount, 1);	/* tell unlock_res() to wake us */
		}
		set_current_state(state);	/* implies a full barrier */
		spin_unlock_irq(&q->lock);

		bit_is_set = test_bit(bit_nr, &res->flags);
		if (likely(bit_is_set))
			schedule();

		if (behavior == EXCLUSIVE) {
			if (!test_and_set_bit_lock(bit_nr, &res->flags))
				break;
		} else if (behavior == SHARED) {
			if (!test_bit(bit_nr, &res->flags))
				break;
		}

		if (signal_pending_state(state, current)) {
			ret = -EINTR;
			break;
		}
	}

	finish_wait(q, wait);
	return ret;
}

void lock_res(struct res_def *res, int bit_nr)
{
	/* Uncontended fast path, as lock_page() does with trylock_page(). */
	if (!test_and_set_bit_lock(bit_nr, &res->flags))
		return;

	wait_on_res_bit_common(res_waitqueue(res), res, bit_nr,
			       TASK_UNINTERRUPTIBLE, EXCLUSIVE);
}

static void wake_up_res_bit(struct res_def *res, int bit_nr)
{
	wait_queue_head_t *q = res_waitqueue(res);
	struct wait_res_key key;
	wait_queue_entry_t bookmark;
	unsigned long flags;

	key.res = res;
	key.bit_nr = bit_nr;
	key.res_match = 0;

	bookmark.flags = 0;
	bookmark.private = NULL;
	bookmark.func = NULL;
	INIT_LIST_HEAD(&bookmark.entry);

	spin_lock_irqsave(&q->lock, flags);
	__wake_up_locked_key_bookmark(q, TASK_NORMAL, &key, &bookmark);

	/* Long queues are walked in batches; drop the lock between batches. */
	while (bookmark.flags & WQ_FLAG_BOOKMARK) {
		spin_unlock_irqrestore(&q->lock, flags);
		cpu_relax();
		spin_lock_irqsave(&q->lock, flags);
		__wake_up_locked_key_bookmark(q, TASK_NORMAL, &key, &bookmark);
	}

	/*
	 * No waiter for this resource is left (other resources may still
	 * share the hashed queue): clear the hint so that later unlocks
	 * skip the wake-up, as wake_up_page_bit() does with PG_waiters.
	 */
	if (!waitqueue_active(q) || !key.res_match)
		atomic_set(&res->refcount, 0);

	spin_unlock_irqrestore(&q->lock, flags);
}

void unlock_res(struct res_def *res, int bit_nr)
{
	clear_bit_unlock(bit_nr, &res->flags);
	/* Order the bit clear before the waiter-hint read. */
	smp_mb__after_atomic();
	if (atomic_read(&res->refcount))
		wake_up_res_bit(res, bit_nr);
}

#define LOCK_RES_NUM 100

struct lock_res_args {
	struct completion *sync;
	struct res_def *res;
};

static int locker_res_task1(void *data)
{
	struct lock_res_args *args = data;
	struct completion *done = args->sync;
	struct res_def *res = args->res;
	int i, j;

	/* One lock per bit of flags: 64 per resource on a 64-bit kernel. */
	for (i = 0; i < LOCK_RES_NUM; i++) {
		for (j = 0; j < BITS_PER_LONG; j++) {
			lock_res(&res[i], j);
			pr_err("%s line %d, lock res %d, bit %d.\n",
			       __func__, __LINE__, i, j);
		}
	}

	for (i = 0; i < LOCK_RES_NUM; i++) {
		for (j = 0; j < BITS_PER_LONG; j++) {
			unlock_res(&res[i], j);
			pr_err("%s line %d, unlock res %d, bit %d.\n",
			       __func__, __LINE__, i, j);
		}
	}

	pr_info("%s line %d, test success.\n", __func__, __LINE__);
	complete(done);	/* args and res may be freed after this */
	return 0;
}

static int locker_res_task2(void *data)
{
	struct lock_res_args *args = data;
	struct completion *done = args->sync;
	struct res_def *res = args->res;
	int i, j;

	for (i = 0; i < LOCK_RES_NUM; i++) {
		for (j = 0; j < BITS_PER_LONG; j++) {
			lock_res(&res[i], j);
			pr_err("%s line %d, lock res %d, bit %d.\n",
			       __func__, __LINE__, i, j);
		}
	}

	for (i = 0; i < LOCK_RES_NUM; i++) {
		for (j = 0; j < BITS_PER_LONG; j++) {
			unlock_res(&res[i], j);
			pr_err("%s line %d, unlock res %d, bit %d.\n",
			       __func__, __LINE__, i, j);
		}
	}

	pr_info("%s line %d, test success.\n", __func__, __LINE__);
	complete(done);	/* args and res may be freed after this */
	return 0;
}

int lock_res_test(void)
{
	struct task_struct *task1, *task2;
	struct lock_res_args args1, args2;
	DECLARE_COMPLETION_ONSTACK(done1);
	DECLARE_COMPLETION_ONSTACK(done2);
	struct res_def *res;

	res = kcalloc(LOCK_RES_NUM, sizeof(*res), GFP_KERNEL);
	if (!res) {
		pr_err("%s line %d, kcalloc res obj failure.\n", __func__, __LINE__);
		return -ENOMEM;
	}

	res_lock_init();

	args1.res = args2.res = res;
	args1.sync = &done1;
	args2.sync = &done2;

	/* Single-threaded smoke test: two independent bits of one resource. */
	lock_res(&res[0], 2);
	pr_info("%s line %d, lock res bit 2.\n", __func__, __LINE__);
	lock_res(&res[0], 1);
	pr_info("%s line %d, lock res bit 1.\n", __func__, __LINE__);
	unlock_res(&res[0], 2);
	pr_info("%s line %d, unlock res bit 2.\n", __func__, __LINE__);
	unlock_res(&res[0], 1);
	pr_info("%s line %d, unlock res bit 1.\n", __func__, __LINE__);

	task1 = kthread_run(locker_res_task1, &args1, "locker1");
	if (IS_ERR(task1)) {
		pr_err("lockres: unable to create kernel thread: %ld\n",
		       PTR_ERR(task1));
		kfree(res);
		return PTR_ERR(task1);
	}

	task2 = kthread_run(locker_res_task2, &args2, "locker2");
	if (IS_ERR(task2)) {
		pr_err("lockres: unable to create kernel thread: %ld\n",
		       PTR_ERR(task2));
		/* locker1 still uses res and args1: let it finish first. */
		wait_for_completion(&done1);
		kfree(res);
		return PTR_ERR(task2);
	}

	wait_for_completion(&done1);
	wait_for_completion(&done2);
	kfree(res);
	return 0;
}

static int run_work(void *data)
{
	lock_res_test();
	return 0;
}

static int __init lock_init(void)
{
	struct task_struct *task;

	task = kthread_run(run_work, NULL, "test_work");
	if (IS_ERR(task)) {
		pr_err("unable to create kernel thread: %ld\n", PTR_ERR(task));
		return PTR_ERR(task);
	}

	return 0;
}

static void __exit lock_exit(void)
{
	pr_info("%s line %d, module unloading!\n", __func__, __LINE__);
}

module_init(lock_init);
module_exit(lock_exit);

MODULE_AUTHOR("zlcao");
MODULE_LICENSE("GPL");
```

Makefile:

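```makefile
ifneq ($(KERNELRELEASE),)
obj-m := lockres.o
else
KERNELDIR := /lib/modules/$(shell uname -r)/build
PWD := $(shell pwd)

all:
	$(MAKE) -C $(KERNELDIR) M=$(PWD) modules

clean:
	rm -rf *.o *.mod.c *.mod.o *.ko *.symvers *.mod .*.cmd *.order

format:
	astyle --options=linux.astyle *.[ch]
endif
```

The module allocates 100 resources with 64 locks each (one per bit of the `flags` word on a 64-bit kernel), 6,400 locks in total. These locks can be used to synchronize the various states in the implementation logic. The module then creates two kernel threads, and each thread acquires and then releases all 6,400 locks.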

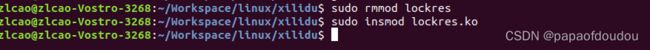

Test results
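All 100 resources share the 32 entries of `res_wait_table` (`RES_WAIT_TABLE_BITS` is 5), so several resources necessarily hash to the same wait queue. That is why `wake_res_function()` compares both `res` and `bit_nr` before waking a task, and why `wake_up_res_bit()` tracks `res_match`.

The userspace sketch below reproduces the multiplicative hash quoted in the comment in `res_waitqueue()`: `val * GOLDEN_RATIO_64 >> (64 - bits)`. This is what `hash_ptr()` computes on 64-bit kernels that use the generic hash. The sketch counts how many elements of an array of 100 `struct res_def`-like objects fall into each bucket. It assumes a 64-bit platform, and `bucket_of()` and `count_buckets()` are hypothetical helpers. The exact bucket counts depend on the addresses involved, so only the pigeonhole guarantee (at least one shared bucket) is certain.

```c
#include <stddef.h>
#include <stdint.h>

#define TABLE_BITS 5
#define TABLE_SIZE (1u << TABLE_BITS)
/* Same value as GOLDEN_RATIO_64 in the kernel's <linux/hash.h>. */
#define GOLDEN_RATIO_64 0x61C8864680B583EBull

/* Multiplicative hash: keep the top TABLE_BITS bits of the 64-bit product. */
static unsigned int bucket_of(const void *p)
{
	uint64_t val = (uint64_t)(uintptr_t)p;

	return (unsigned int)((val * GOLDEN_RATIO_64) >> (64 - TABLE_BITS));
}

/* Userspace stand-in for struct res_def (plain int instead of atomic_t). */
struct res_def {
	unsigned long flags;
	void *private;
	int refcount;
};

/* Fill counts[] with the number of resources per bucket and return how
 * many buckets are shared by more than one resource. */
static unsigned int count_buckets(const struct res_def *res, size_t n,
				  unsigned int counts[TABLE_SIZE])
{
	unsigned int shared = 0;
	size_t i;

	for (i = 0; i < TABLE_SIZE; i++)
		counts[i] = 0;
	for (i = 0; i < n; i++)
		counts[bucket_of(&res[i])]++;
	for (i = 0; i < TABLE_SIZE; i++)
		if (counts[i] > 1)
			shared++;
	return shared;
}
```

With 100 resources and 32 buckets, at least one bucket holds four or more resources. A wake-up on that queue therefore has to skip waiters that belong to other resources.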

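This is a general-purpose pattern. Many kernel objects are protected at this fine granularity, for example page objects, disk I/O requests (BIO), and `buffer_head`.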
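The same pattern can be reproduced in userspace, which makes it easier to experiment with. The sketch below is an analogue, not a port. It assumes POSIX threads and C11 atomics, and replaces each kernel wait queue with a hypothetical mutex/condition-variable pair in a hashed table. It also simplifies the kernel scheme in two ways:

- It wakes every waiter in a bucket with `pthread_cond_broadcast()` instead of the keyed, exclusive wake-up that `wake_res_function()` performs. Waiters for other (resource, bit) pairs simply re-check their own bit and sleep again.
- `ures_unlock()` always takes the bucket mutex, whereas the kernel version skips the wake-up when no waiter has set `refcount`.

All names here (`struct ures`, `ures_lock()`, `demo_worker()` and so on) are hypothetical.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define TABLE_BITS 5
#define TABLE_SIZE (1u << TABLE_BITS)

struct ures {			/* each bit of flags is one lock */
	atomic_ulong flags;
};

struct bucket {			/* replaces one kernel wait_queue_head_t */
	pthread_mutex_t lock;
	pthread_cond_t cond;
};

static struct bucket table[TABLE_SIZE];
static pthread_once_t table_once = PTHREAD_ONCE_INIT;

static void table_init(void)
{
	for (unsigned int i = 0; i < TABLE_SIZE; i++) {
		pthread_mutex_init(&table[i].lock, NULL);
		pthread_cond_init(&table[i].cond, NULL);
	}
}

/* Hash the resource address to a bucket, like res_waitqueue(). */
static struct bucket *bucket_for(const struct ures *r)
{
	uint64_t val = (uint64_t)(uintptr_t)r;

	pthread_once(&table_once, table_init);
	return &table[(val * 0x61C8864680B583EBull) >> (64 - TABLE_BITS)];
}

static bool ures_trylock(struct ures *r, int bit)
{
	unsigned long mask = 1UL << bit;

	return !(atomic_fetch_or_explicit(&r->flags, mask,
					  memory_order_acquire) & mask);
}

static void ures_lock(struct ures *r, int bit)
{
	struct bucket *b;

	if (ures_trylock(r, bit))	/* uncontended fast path */
		return;

	b = bucket_for(r);
	pthread_mutex_lock(&b->lock);
	/* Bits are only cleared under b->lock, so a wake-up cannot slip in
	 * between a failed trylock and pthread_cond_wait(). */
	while (!ures_trylock(r, bit))
		pthread_cond_wait(&b->cond, &b->lock);
	pthread_mutex_unlock(&b->lock);
}

static void ures_unlock(struct ures *r, int bit)
{
	struct bucket *b = bucket_for(r);

	pthread_mutex_lock(&b->lock);
	atomic_fetch_and_explicit(&r->flags, ~(1UL << bit),
				  memory_order_release);
	pthread_cond_broadcast(&b->cond);	/* everyone re-checks */
	pthread_mutex_unlock(&b->lock);
}

/* Demo helper: increment *counter `iters` times under lock bit 3. */
struct demo {
	struct ures *res;
	long *counter;
	int iters;
};

static void *demo_worker(void *arg)
{
	struct demo *d = arg;

	for (int i = 0; i < d->iters; i++) {
		ures_lock(d->res, 3);
		(*d->counter)++;
		ures_unlock(d->res, 3);
	}
	return NULL;
}
```

Two threads running `demo_worker()` on the same `struct demo` should always end with the counter at exactly twice `iters`. Broadcasting is the simplest correct choice here. The kernel avoids it because a hashed queue can hold many unrelated waiters.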

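The two test thread functions above are identical, so after refactoring they can be merged into one: both kernel threads run `locker_res_task1`, each with its own `struct lock_res_args`. The complete refactored module: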

```c
#include <linux/module.h>
#include <linux/kernel.h>
#include <linux/init.h>
#include <linux/slab.h>
#include <linux/kthread.h>
#include <linux/completion.h>
#include <linux/wait.h>
#include <linux/sched.h>
#include <linux/sched/signal.h>
#include <linux/hash.h>
#include <linux/bitops.h>
#include <linux/atomic.h>

#define RES_WAIT_TABLE_BITS 5
#define RES_WAIT_TABLE_SIZE (1 << RES_WAIT_TABLE_BITS)

struct res_def {
	unsigned long flags;	/* each bit is an independent lock */
	void *private;
	atomic_t refcount;	/* non-zero: someone may be waiting */
};

enum behavior {
	EXCLUSIVE,	/* wait for the bit to clear, then take it */
	SHARED,		/* only wait for the bit to clear */
};

struct wait_res_queue {
	struct res_def *res;
	int bit_nr;
	wait_queue_entry_t wait;
};

struct wait_res_key {
	struct res_def *res;
	int bit_nr;
	int res_match;
};

/* All resources share this small hashed table of wait queues. */
static wait_queue_head_t res_wait_table[RES_WAIT_TABLE_SIZE] __cacheline_aligned;

static wait_queue_head_t *res_waitqueue(struct res_def *res)
{
	/* hash_ptr(): val * GOLDEN_RATIO_64 >> (64 - bits) on 64-bit */
	return &res_wait_table[hash_ptr(res, RES_WAIT_TABLE_BITS)];
}

int res_lock_init(void)
{
	int i;

	for (i = 0; i < RES_WAIT_TABLE_SIZE; i++)
		init_waitqueue_head(&res_wait_table[i]);

	return 0;
}

static int wake_res_function(wait_queue_entry_t *wait, unsigned int mode,
			     int sync, void *arg)
{
	struct wait_res_key *key = arg;
	struct wait_res_queue *wait_res =
		container_of(wait, struct wait_res_queue, wait);

	/* A different resource that hashed to the same queue. */
	if (wait_res->res != key->res)
		return 0;

	key->res_match = 1;

	/* Same resource, but waiting on a different bit. */
	if (wait_res->bit_nr != key->bit_nr)
		return 0;

	/* The bit was taken again in the meantime: stop the wake-up walk. */
	if (test_bit(key->bit_nr, &key->res->flags))
		return -1;

	return autoremove_wake_function(wait, mode, sync, key);
}

static int wait_on_res_bit_common(wait_queue_head_t *q, struct res_def *res,
				  int bit_nr, int state, enum behavior behavior)
{
	struct wait_res_queue wait_res;
	wait_queue_entry_t *wait = &wait_res.wait;
	bool bit_is_set;
	int ret = 0;

	init_wait(wait);
	wait->flags = behavior == EXCLUSIVE ? WQ_FLAG_EXCLUSIVE : 0;
	wait->func = wake_res_function;
	wait_res.res = res;
	wait_res.bit_nr = bit_nr;

	for (;;) {
		spin_lock_irq(&q->lock);
		if (likely(list_empty(&wait->entry))) {
			__add_wait_queue_entry_tail(q, wait);
			atomic_set(&res->refcount, 1);	/* tell unlock_res() to wake us */
		}
		set_current_state(state);	/* implies a full barrier */
		spin_unlock_irq(&q->lock);

		bit_is_set = test_bit(bit_nr, &res->flags);
		if (likely(bit_is_set))
			schedule();

		if (behavior == EXCLUSIVE) {
			if (!test_and_set_bit_lock(bit_nr, &res->flags))
				break;
		} else if (behavior == SHARED) {
			if (!test_bit(bit_nr, &res->flags))
				break;
		}

		if (signal_pending_state(state, current)) {
			ret = -EINTR;
			break;
		}
	}

	finish_wait(q, wait);
	return ret;
}

void lock_res(struct res_def *res, int bit_nr)
{
	/* Uncontended fast path, as lock_page() does with trylock_page(). */
	if (!test_and_set_bit_lock(bit_nr, &res->flags))
		return;

	wait_on_res_bit_common(res_waitqueue(res), res, bit_nr,
			       TASK_UNINTERRUPTIBLE, EXCLUSIVE);
}

static void wake_up_res_bit(struct res_def *res, int bit_nr)
{
	wait_queue_head_t *q = res_waitqueue(res);
	struct wait_res_key key;
	wait_queue_entry_t bookmark;
	unsigned long flags;

	key.res = res;
	key.bit_nr = bit_nr;
	key.res_match = 0;

	bookmark.flags = 0;
	bookmark.private = NULL;
	bookmark.func = NULL;
	INIT_LIST_HEAD(&bookmark.entry);

	spin_lock_irqsave(&q->lock, flags);
	__wake_up_locked_key_bookmark(q, TASK_NORMAL, &key, &bookmark);

	/* Long queues are walked in batches; drop the lock between batches. */
	while (bookmark.flags & WQ_FLAG_BOOKMARK) {
		spin_unlock_irqrestore(&q->lock, flags);
		cpu_relax();
		spin_lock_irqsave(&q->lock, flags);
		__wake_up_locked_key_bookmark(q, TASK_NORMAL, &key, &bookmark);
	}

	/*
	 * No waiter for this resource is left (other resources may still
	 * share the hashed queue): clear the hint so that later unlocks
	 * skip the wake-up, as wake_up_page_bit() does with PG_waiters.
	 */
	if (!waitqueue_active(q) || !key.res_match)
		atomic_set(&res->refcount, 0);

	spin_unlock_irqrestore(&q->lock, flags);
}

void unlock_res(struct res_def *res, int bit_nr)
{
	clear_bit_unlock(bit_nr, &res->flags);
	/* Order the bit clear before the waiter-hint read. */
	smp_mb__after_atomic();
	if (atomic_read(&res->refcount))
		wake_up_res_bit(res, bit_nr);
}

#define LOCK_RES_NUM 100

struct lock_res_args {
	struct completion *sync;
	struct res_def *res;
};

/* Shared by both test threads after the refactor. */
static int locker_res_task1(void *data)
{
	struct lock_res_args *args = data;
	struct completion *done = args->sync;
	struct res_def *res = args->res;
	int i, j;

	/* One lock per bit of flags: 64 per resource on a 64-bit kernel. */
	for (i = 0; i < LOCK_RES_NUM; i++) {
		for (j = 0; j < BITS_PER_LONG; j++) {
			lock_res(&res[i], j);
			pr_err("%s line %d, lock res %d, bit %d.\n",
			       __func__, __LINE__, i, j);
		}
	}

	for (i = 0; i < LOCK_RES_NUM; i++) {
		for (j = 0; j < BITS_PER_LONG; j++) {
			unlock_res(&res[i], j);
			pr_err("%s line %d, unlock res %d, bit %d.\n",
			       __func__, __LINE__, i, j);
		}
	}

	pr_info("%s line %d, test success.\n", __func__, __LINE__);
	complete(done);	/* args and res may be freed after this */
	return 0;
}

int lock_res_test(void)
{
	struct task_struct *task1, *task2;
	struct lock_res_args args1, args2;
	DECLARE_COMPLETION_ONSTACK(done1);
	DECLARE_COMPLETION_ONSTACK(done2);
	struct res_def *res;

	res = kcalloc(LOCK_RES_NUM, sizeof(*res), GFP_KERNEL);
	if (!res) {
		pr_err("%s line %d, kcalloc res obj failure.\n", __func__, __LINE__);
		return -ENOMEM;
	}

	res_lock_init();

	args1.res = args2.res = res;
	args1.sync = &done1;
	args2.sync = &done2;

	/* Single-threaded smoke test: two independent bits of one resource. */
	lock_res(&res[0], 2);
	pr_info("%s line %d, lock res bit 2.\n", __func__, __LINE__);
	lock_res(&res[0], 1);
	pr_info("%s line %d, lock res bit 1.\n", __func__, __LINE__);
	unlock_res(&res[0], 2);
	pr_info("%s line %d, unlock res bit 2.\n", __func__, __LINE__);
	unlock_res(&res[0], 1);
	pr_info("%s line %d, unlock res bit 1.\n", __func__, __LINE__);

	task1 = kthread_run(locker_res_task1, &args1, "locker1");
	if (IS_ERR(task1)) {
		pr_err("lockres: unable to create kernel thread: %ld\n",
		       PTR_ERR(task1));
		kfree(res);
		return PTR_ERR(task1);
	}

	/* The second thread runs the same function with its own args. */
	task2 = kthread_run(locker_res_task1, &args2, "locker2");
	if (IS_ERR(task2)) {
		pr_err("lockres: unable to create kernel thread: %ld\n",
		       PTR_ERR(task2));
		/* locker1 still uses res and args1: let it finish first. */
		wait_for_completion(&done1);
		kfree(res);
		return PTR_ERR(task2);
	}

	wait_for_completion(&done1);
	wait_for_completion(&done2);
	kfree(res);
	return 0;
}

static int run_work(void *data)
{
	lock_res_test();
	return 0;
}

static int __init lock_init(void)
{
	struct task_struct *task;

	task = kthread_run(run_work, NULL, "test_work");
	if (IS_ERR(task)) {
		pr_err("unable to create kernel thread: %ld\n", PTR_ERR(task));
		return PTR_ERR(task);
	}

	return 0;
}

static void __exit lock_exit(void)
{
	pr_info("%s line %d, module unloading!\n", __func__, __LINE__);
}

module_init(lock_init);
module_exit(lock_exit);

MODULE_AUTHOR("zlcao");
MODULE_LICENSE("GPL");
```

Summary:

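This implementation is modeled on the kernel's `lock_page`/`unlock_page` and `wait_bit` code. Take the page lock as an example. The `flags` member of `struct page` defines a flag bit, `PG_locked`, which the kernel normally uses as a page lock. `lock_page` acquires the page lock; if another process already holds it, the caller sleeps until the lock is released.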
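The module has a counterpart to the page's `PG_waiters` bit, which sits next to `PG_locked`. A task about to sleep sets `res->refcount` to 1 under the wait-queue lock, and `unlock_res()` only calls `wake_up_res_bit()` when that value is non-zero. As a result, an uncontended unlock never touches the shared, hashed wait queue.

The single-threaded userspace sketch below assumes C11 atomics and counts how often the slow path would run. `struct hres`, `slow_wake()` and the `hint_*` functions are hypothetical names, and `slow_wake()` only counts calls instead of waking anyone.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical resource: lock bits plus a "may have waiters" hint,
 * mirroring the flags/refcount pair in struct res_def. */
struct hres {
	atomic_ulong flags;
	atomic_int waiters;
	int slow_wakeups;	/* times the slow wake-up path ran */
};

/* Stand-in for wake_up_res_bit(): only counts calls here. A real version
 * would walk the hashed wait queue and reset the hint once no waiter for
 * this resource remains. */
static void slow_wake(struct hres *r, int bit)
{
	(void)bit;
	r->slow_wakeups++;
}

static bool hint_trylock(struct hres *r, int bit)
{
	unsigned long mask = 1UL << bit;

	return !(atomic_fetch_or(&r->flags, mask) & mask);
}

/* A task about to sleep sets the hint (seq_cst store). The caller is
 * expected to re-check the lock bit afterwards, before really sleeping. */
static void hint_announce_waiter(struct hres *r)
{
	atomic_store(&r->waiters, 1);
}

static void hint_unlock(struct hres *r, int bit)
{
	/* seq_cst RMW followed by a seq_cst load: the clear cannot be
	 * reordered after the hint check. */
	atomic_fetch_and(&r->flags, ~(1UL << bit));
	if (atomic_load(&r->waiters))
		slow_wake(r, bit);
}
```

A thousand uncontended lock/unlock pairs leave `slow_wakeups` at zero. Only after `hint_announce_waiter()` does the next unlock take the slow path.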