Reading the Source Code of gh-ost, a DDL Tool for Large Tables


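- Goal

- Development environment and test database setup

- A simple DDL example

- Debugging the program's execution

  - VS Code debug configuration

  - Variables

  - Core processing logic

- Analyzing my requirements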

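Goal

Get familiar with gh-ost's features by reading its source code and running plenty of tests, with the eventual aim of adapting it into an archiving tool for large tables.

Development environment and test database setup

Testing is done on a 4-core, 8 GB CentOS 7 machine with Go 1.17 installed; MySQL 5.7 runs on the same machine.

First, use Go to create a table and insert some data: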

package main

import (
	"fmt"
	"runtime"
	"strconv"
	"sync/atomic"
	"time"

	"gorm.io/driver/mysql"
	"gorm.io/gorm"
	"gorm.io/gorm/logger"
)

// UserDetail is the user detail table.
type UserDetail struct {
	gorm.Model
	Id int `json:"id" gorm:"type:varchar(255);unique_index;not null;comment:ID"`
	Name string `json:"name" gorm:"type:varchar(300);not null;comment:姓名"`
	Age int `json:"age" gorm:"type:varchar(10);not null;comment:年龄"`
	Birthday time.Time `json:"birthday" gorm:"type:date;not null;comment:出生日期"`
	Gender string `json:"gender" gorm:"type:tinyint(1);not null;comment:性别"`
	Mobile string `json:"mobile" gorm:"type:varchar(20);not null;comment:手机号"`
	Email string `json:"email" gorm:"type:varchar(255);not null;comment:邮箱"`
	Attr1 string `json:"attr1" gorm:"type:varchar(255);comment:个性化字段"`
	Attr2 string `json:"attr2" gorm:"type:varchar(255);comment:个性化字段"`
	Attr3 string `json:"attr3" gorm:"type:varchar(255);comment:个性化字段"`
	Attr4 string `json:"attr4" gorm:"type:varchar(255);comment:个性化字段"`
	Attr5 string `json:"attr5" gorm:"type:varchar(255);comment:个性化字段"`
	Attr6 string `json:"attr6" gorm:"type:varchar(255);comment:个性化字段"`
	Attr7 string `json:"attr7" gorm:"type:varchar(255);comment:个性化字段"`
	Attr8 string `json:"attr8" gorm:"type:varchar(255);comment:个性化字段"`
	Attr9 string `json:"attr9" gorm:"type:varchar(255);comment:个性化字段"`
	Attr10 string `json:"attr10" gorm:"type:varchar(255);comment:个性化字段"`
	Attr11 string `json:"attr11" gorm:"type:varchar(255);comment:个性化字段"`
	Attr12 string `json:"attr12" gorm:"type:varchar(255);comment:个性化字段"`
	Attr13 string `json:"attr13" gorm:"type:varchar(255);comment:个性化字段"`
	Attr14 string `json:"attr14" gorm:"type:varchar(255);comment:个性化字段"`
	Attr15 string `json:"attr15" gorm:"type:varchar(255);comment:个性化字段"`
	Attr16 string `json:"attr16" gorm:"type:varchar(255);comment:个性化字段"`
	Attr17 string `json:"attr17" gorm:"type:varchar(255);comment:个性化字段"`
	Attr18 string `json:"attr18" gorm:"type:varchar(255);comment:个性化字段"`
	Attr19 string `json:"attr19" gorm:"type:varchar(255);comment:个性化字段"`
	Attr20 string `json:"attr20" gorm:"type:varchar(255);comment:个性化字段"`
	Attr21 string `json:"attr21" gorm:"type:varchar(255);comment:个性化字段"`
	Attr22 string `json:"attr22" gorm:"type:varchar(255);comment:个性化字段"`
	Attr23 string `json:"attr23" gorm:"type:varchar(255);comment:个性化字段"`
	Attr24 string `json:"attr24" gorm:"type:varchar(255);comment:个性化字段"`
	Attr25 string `json:"attr25" gorm:"type:varchar(255);comment:个性化字段"`
	Attr26 string `json:"attr26" gorm:"type:varchar(255);comment:个性化字段"`
	Attr27 string `json:"attr27" gorm:"type:varchar(255);comment:个性化字段"`
	Attr28 string `json:"attr28" gorm:"type:varchar(255);comment:个性化字段"`
	Attr29 string `json:"attr29" gorm:"type:varchar(255);comment:个性化字段"`
}

var db *gorm.DB
var err error

func init() {
	// See https://github.com/go-sql-driver/mysql#dsn-data-source-name for details
	db, err = gorm.Open(mysql.New(mysql.Config{
		DSN: "user:password@tcp(host:3306)/dbname?charset=utf8&parseTime=True&loc=Local", // DSN (data source name); replace the placeholders
		DefaultStringSize: 256, // default length for string columns
		DisableDatetimePrecision: true, // disable datetime precision, which MySQL before 5.6 does not support
		DontSupportRenameIndex: true, // rename indexes by drop and re-create; MySQL before 5.7 and MariaDB cannot rename indexes
		DontSupportRenameColumn: true, // rename columns with `change`; MySQL before 8 and MariaDB cannot rename columns
		SkipInitializeWithVersion: false, // auto-configure based on the current MySQL version
	}), &gorm.Config{
		Logger: logger.Default.LogMode(logger.Silent),
	})
	if err != nil {
		fmt.Println("error:", err)
		panic(err) // db is unusable without a connection
	}
}

// benchInsertUserDetail writes the test rows from 10 concurrent goroutines.
func benchInsertUserDetail() {
	fmt.Println("开始写入: ", time.Now().Unix()) // "write started"
	// Rows attempted so far. The goroutines share this counter, so it is
	// only read and written through sync/atomic.
	var intertnumber int64
	for i := 0; i < 10; i++ {
		value := i
		go func() {
			execstring := "INSERT INTO `peppa`.`user_details` (`id`, `created_at`, `updated_at`, `name`, `age`, `birthday`, `gender`, `mobile`, `email`, `attr1`, `attr2`, `attr3`, `attr4`, `attr5`, `attr6`, `attr7`, `attr8`, `attr9`, `attr10`, `attr11`, `attr12`, `attr13`, `attr14`, `attr15`, `attr17`, `attr19`, `attr22`, `attr23`, `attr25`, `attr26`, `attr28`) VALUES "
			// Goroutine `value` handles batches value, value+10, value+20, ...;
			// each batch is a single INSERT of 10000 rows.
			for k := value; k < 1000; k = k + 10 {
				data := " "
				for j := k * 10000; j < k*10000+10000; j++ {
					id := strconv.Itoa(j)
					onedata := "('" + id + "', '" + time.Now().Format("2006-01-02 15:04:05") + "', '" + time.Now().Format("2006-01-02 15:04:05") + "', 'test" + id + "', '" + id + "', '" + time.Now().AddDate(0, 0, -j).Format("2006-01-02 15:04:05") + "', '1'" + ", '15003456789', '" + id + "@outlook.com'" + ", '1', '1', '1', '1', '1', '23', '4', '5', '6', '7', '8', '9', '0', '-', '7', '435', '5', '4', '2', '25', '67', '687')"
					if j < k*10000+9999 {
						onedata += ", " // every row except the last of the batch is followed by a comma
					}
					data = data + onedata
				}
				// Report failed batches instead of silently discarding the error.
				if err := db.Exec(execstring + data).Error; err != nil {
					fmt.Println("error:", err)
				}
				atomic.AddInt64(&intertnumber, 10000)
			}
		}()
	}
	// Wait until all 1000 batches have been attempted.
	for atomic.LoadInt64(&intertnumber) < 9999999 {
		time.Sleep(1 * time.Second)
	}
	fmt.Println("完成写入: ", time.Now().Unix()) // "write finished"
}

// cue prints the current Unix timestamp.
func cue() {
	fmt.Println("当前时间戳: ", time.Now().Unix()) // "current timestamp"
}

func main() {
	runtime.GOMAXPROCS(runtime.NumCPU())
	// db.AutoMigrate(&UserDetail{}) // schema migration
	go func() {
		// timer
		fmt.Println("计时...") // "timing..."
		for range time.Tick(20 * time.Second) {
			cue()
		}
	}()
	benchInsertUserDetail() // generate the test data
}

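Run go run -gcflags="-m -l" .\main.go. The program prints a timestamp every 20 seconds; after a few of them, press Ctrl+C to stop it. Because of -gcflags="-m -l", the compiler's escape-analysis report is printed before the program's own output: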

# command-line-arguments

.\main.go:60:10: ... argument escapes to heap

.\main.go:67:6: &gorm.Config{...} escapes to heap

.\main.go:71:14: ... argument does not escape

.\main.go:71:15: "error:" escapes to heap

.\main.go:78:2: moved to heap: intertnumber

.\main.go:77:13: ... argument does not escape

.\main.go:77:14: "开始写入: " escapes to heap

.\main.go:77:32: +time.Now().Unix() escapes to heap

.\main.go:81:6: func literal escapes to heap

.\main.go:89: "('" + id + "', '" + time.Now().Format("2006-01-02 15:04:05") + "', '" + time.Now().Format("2006-01-02 15:04:05") + "', 'test" + id + "', '" +

id + "', '" + time.Now().AddDate(0, 0, -int(j)).Format("2006-01-02 15:04:05") + "', '1', '15003456789', '" + id + "@outlook.com', '1', '1', '1', '1', '1', '23', '4', '5', '6', '7', '8... does not escape

.\main.go:90:19: data + onedata escapes to heap

.\main.go:93: "('" + id + "', '" + time.Now().Format("2006-01-02 15:04:05") + "', '" + time.Now().Format("2006-01-02 15:04:05") + "', 'test" + id + "', '" +

id + "', '" + time.Now().AddDate(0, 0, -int(j)).Format("2006-01-02 15:04:05") + "', '1', '15003456789', '" + id + "@outlook.com', '1', '1', '1', '1', '1', '23', '4', '5', '6', '7', '8... does not escape

.\main.go:94:19: data + onedata escapes to heap

.\main.go:97:28: execstring + data does not escape

.\main.go:105:13: ... argument does not escape

.\main.go:105:14: "完成写入: " escapes to heap

.\main.go:105:32: +time.Now().Unix() escapes to heap

.\main.go:109:13: ... argument does not escape

.\main.go:109:14: "当前时间戳: " escapes to heap

.\main.go:109:35: +time.Now().Unix() escapes to heap

.\main.go:115:5: func literal escapes to heap

.\main.go:117:14: ... argument does not escape

.\main.go:117:15: "计时..." escapes to heap

<autogenerated>:1: leaking param content: .this

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:18:12: leaking param: writer

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:18:34: leaking param: stmt

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:18:51: leaking param: v

<autogenerated>:1: leaking param content: .this

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:16:13: leaking param: .anon0

<autogenerated>:1: leaking param content: .this

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:17:17: leaking param: .anon0

<autogenerated>:1: leaking param content: .this

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:20:10: leaking param: sql

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:20:22: leaking param: vars

<autogenerated>:1: leaking param content: .this

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:14:13: leaking param: .anon0

<autogenerated>:1: leaking param content: .this

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:15:11: leaking param: db

<autogenerated>:1: leaking param content: .this

<autogenerated>:1: leaking param content: .this

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:19:10: leaking param: .anon0

D:\Go\pkg\mod\gorm.io\gorm@v1.21.13\interfaces.go:19:25: leaking param: .anon1

开始写入: 1631000374

计时...

当前时间戳: 1631000394

当前时间戳: 1631000414

当前时间戳: 1631000434

当前时间戳: 1631000454

当前时间戳: 1631000474

当前时间戳: 1631000494

当前时间戳: 1631000514

当前时间戳: 1631000534

exit status 0xc000013a

That is 730,000 rows in 160 seconds, only about 4,500 rows per second.

Next, pull the source of the latest gh-ost release (tag 1.1.2) onto the CentOS 7 server and open it in VS Code through the already configured Remote-SSH connection:

A simple DDL example

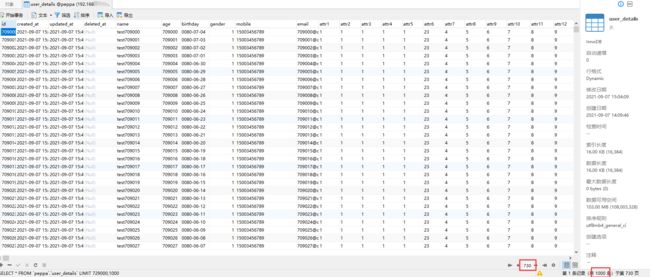

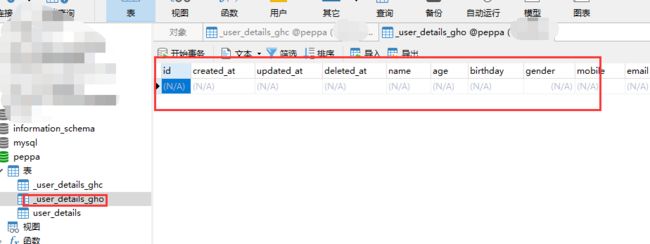

The table's columns are as follows:

Let's lengthen the email column to 300:

go run main.go --initially-drop-ghost-table \

--initially-drop-socket-file \

--host="127.0.0.1" \

--port=3306 \

--user="root" \

--password="<database password>" \

--database="<database name>" \

--table="user_details" \

--verbose \

--alter="alter table user_details modify email varchar(300)" \

--panic-flag-file=/tmp/ghost.panic.flag \

--chunk-size 10000 \

--dml-batch-size 50 \

--max-lag-millis 15000 \

--default-retries 1000 \

--allow-on-master \

--throttle-flag-file=/tmp/ghost.throttle.flag \

--exact-rowcount \

--ok-to-drop-table \

--replica-server-id=$RANDOM \

--execute >> ./user_details.out &

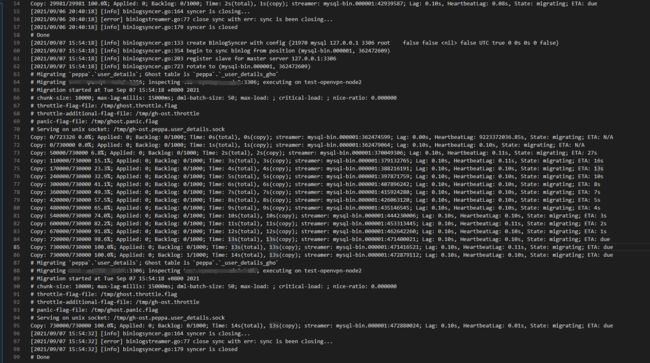

The log output:

After the change succeeds:

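Debugging the program's execution

VS Code debug configuration

With a little configuration, connect VS Code to the server over SSH and install the Go extension on the remote machine (from within VS Code), then edit the debug configuration in launch.json:

In other words, after creating launch.json, change the two parts boxed in the screenshot above; for each new run, only the length of the varchar in the --alter argument needs to change: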

{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Launch Package",
            "type": "go",
            "request": "launch",
            "mode": "auto",
            "program": "go/cmd/gh-ost/main.go",
            "args": ["--initially-drop-ghost-table",
                "--initially-drop-socket-file",
                "--host","127.0.0.1",
                "--port","3306",
                "--user","root",
                "--password","<database password>",
                "--database","peppa",
                "--table","user_details",
                "--verbose",
                "--alter","alter table user_details modify email varchar(301)",
                "--panic-flag-file","/tmp/ghost.panic.flag",
                "--chunk-size", "10000",
                "--dml-batch-size", "50",
                "--max-lag-millis", "15000",
                "--default-retries", "1000",
                "--allow-on-master",
                "--throttle-flag-file","/tmp/ghost.throttle.flag",
                "--exact-rowcount",
                "--ok-to-drop-table",
                "--execute"]
        }
    ]
}

Then step through a run in the debugger to get familiar with the execution flow.

Variables

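- Inspector reads data from the read-MySQL-server (typically a replica, but it can be the master). It is used to get the initial status and structure, and later to follow up on progress and the changelog.

- Applier connects and writes to the applier-server, which is the server where the migration happens. This is typically the master, but it could be a replica when --test-on-replica or --execute-on-replica is given. Applier is the one that actually writes row data and applies binlog events onto the ghost table. It is where the ghost and changelog tables get created, and where the cut-over phase happens.

- EventsStreamer reads data from the binary logs and streams it on. It acts as a publisher, and interested parties may subscribe to per-table events.

- Throttler collects metrics related to throttling and makes informed decisions on whether throttling should take place.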

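Core processing logic

The first part of the entry point main.go is entirely validation and processing of the input arguments. Its final statement, migrator.Migrate(), calls the Migrate method in migrator.go of the logic package, which executes the complete migration logic. The rest of this section walks through everything Migrate does.

- this.validateStatement() checks that the alter statement is acceptable: (1) renaming columns is allowed; (2) renaming the table is not.

- defer this.teardown() shuts down everything that was started: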

func (this *Migrator) teardown() {

atomic.StoreInt64(&this.finishedMigrating, 1)

if this.inspector != nil {

this.migrationContext.Log.Infof("Tearing down inspector")

this.inspector.Teardown()

}

if this.applier != nil {

this.migrationContext.Log.Infof("Tearing down applier")

this.applier.Teardown()

}

if this.eventsStreamer != nil {

this.migrationContext.Log.Infof("Tearing down streamer")

this.eventsStreamer.Teardown()

}

if this.throttler != nil {

this.migrationContext.Log.Infof("Tearing down throttler")

this.throttler.Teardown()

}

}

this.initiateInspector() connects to, validates, and inspects the "inspector" server. This is where the table's row count, the schema, and the heartbeat are queried.

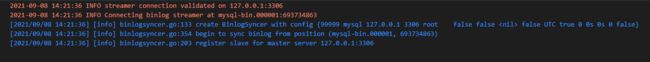

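this.initiateStreaming() starts streaming binary log events and registers listeners for those events.

this.initiateApplier() initializes the Applier. It creates the changelog table and the ghost table in turn, and then alters the ghost table:

- this.applier.CreateChangelogTable() creates the changelog table.

- this.applier.CreateGhostTable() creates the ghost table.

- this.applier.AlterGhost() alters the ghost table. The change applied is essentially the statement that was parsed earlier from the --alter argument and assigned to this.migrationContext.AlterStatementOptions.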

// AlterGhost applies `alter` statement on ghost table

func (this *Applier) AlterGhost() error {

query := fmt.Sprintf(`alter /* gh-ost */ table %s.%s %s`,

sql.EscapeName(this.migrationContext.DatabaseName),

sql.EscapeName(this.migrationContext.GetGhostTableName()),

this.migrationContext.AlterStatementOptions,

)

this.migrationContext.Log.Infof("Altering ghost table %s.%s",

sql.EscapeName(this.migrationContext.DatabaseName),

sql.EscapeName(this.migrationContext.GetGhostTableName()),

)

this.migrationContext.Log.Debugf("ALTER statement: %s", query)

if _, err := sqlutils.ExecNoPrepare(this.db, query); err != nil {

return err

}

this.migrationContext.Log.Infof("Ghost table altered")

return nil

}
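As a rough illustration of what AlterGhost ends up sending for the example in this post, the sketch below rebuilds the statement from the --alter value. The helpers escapeName, ghostTableName and alterOptions are simplified stand-ins written for this example (gh-ost has its own parser and naming code); the `_gho` suffix is taken from the log output shown later.

```go
package main

import (
	"fmt"
	"strings"
)

// escapeName quotes an identifier with backticks (simplified: assumes the
// name itself contains no backtick).
func escapeName(name string) string {
	return "`" + name + "`"
}

// ghostTableName derives the ghost table name that appears in the logs.
func ghostTableName(table string) string {
	return "_" + table + "_gho"
}

// alterOptions drops a leading "alter table <name>" and keeps the rest.
// Hypothetical helper; whitespace is normalized as a side effect.
func alterOptions(alter string) string {
	fields := strings.Fields(alter)
	if len(fields) > 3 && strings.EqualFold(fields[0], "alter") && strings.EqualFold(fields[1], "table") {
		return strings.Join(fields[3:], " ")
	}
	return alter
}

// buildAlterGhost formats the statement the same way AlterGhost does.
func buildAlterGhost(database, table, alter string) string {
	return fmt.Sprintf("alter /* gh-ost */ table %s.%s %s",
		escapeName(database), escapeName(ghostTableName(table)), alterOptions(alter))
}

func main() {
	fmt.Println(buildAlterGhost("peppa", "user_details",
		"alter table user_details modify email varchar(300)"))
}
```

The point is that the alter is never run against user_details itself; only the options part is replayed on the ghost table.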

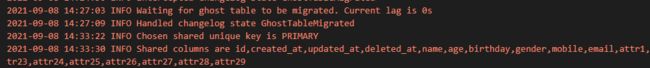

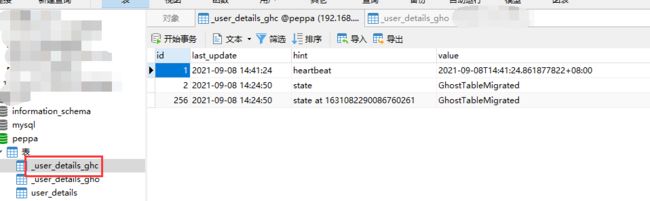

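this.applier.WriteChangelogState(string(GhostTableMigrated)) writes the state to the changelog table.

go this.applier.InitiateHeartbeat() starts a heartbeat loop that writes to the changelog table. This is done asynchronously.

this.inspector.inspectOriginalAndGhostTables() compares the original and ghost tables to see whether the migration makes sense and is valid. It extracts the list of shared columns and the chosen migration unique key.

this.initiateServer() starts listening on a unix socket/TCP for incoming interactive commands.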

2021-09-08 14:36:04 INFO Listening on unix socket file: /tmp/gh-ost.peppa.user_details.sock

2021-09-08 14:36:15 INFO As instructed, counting rows in the background; meanwhile I will use an estimated count, and will update it later on

this.addDMLEventsListener() begins listening for binlog events on the original table, and creates and enqueues a write task for each such event.

2021-09-08 14:37:57 INFO As instructed, I'm issuing a SELECT COUNT(*) on the table. This may take a while

this.applier.ReadMigrationRangeValues() reads the min/max values that will be used for the row copy.

2021-09-08 14:40:17 INFO Exact number of rows via COUNT: 730000

2021-09-08 14:40:17 INFO Migration min values: [0]

2021-09-08 14:40:17 INFO Migration max values: [99999]

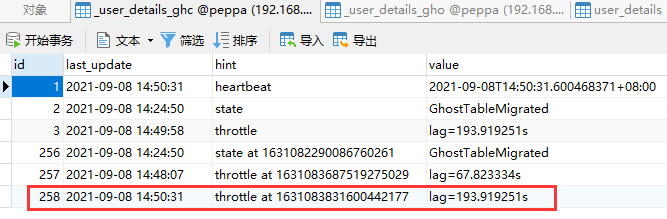

this.initiateThrottler() kicks off throttle metric collection and the throttling checks.

2021-09-08 14:41:24 INFO Waiting for first throttle metrics to be collected

2021-09-08 14:41:24 INFO First throttle metrics collected

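go this.executeWriteFuncs() writes the row-copy and backlog data via the applier. This is where the ghost table gets its data. The function fills the data in a single thread. Both the event backlog and the row-copy tasks are polled; backlog events take precedence.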

func (this *Migrator) executeWriteFuncs() error {

if this.migrationContext.Noop {

this.migrationContext.Log.Debugf("Noop operation; not really executing write funcs")

return nil

}

for {

if atomic.LoadInt64(&this.finishedMigrating) > 0 {

return nil

}

this.throttler.throttle(nil)

// We give higher priority to event processing, then secondary priority to

// rowcopy

select {

case eventStruct := <-this.applyEventsQueue:

{

if err := this.onApplyEventStruct(eventStruct); err != nil {

return err

}

}

default:

{

select {

case copyRowsFunc := <-this.copyRowsQueue:

{

copyRowsStartTime := time.Now()

// Retries are handled within the copyRowsFunc

if err := copyRowsFunc(); err != nil {

return this.migrationContext.Log.Errore(err)

}

if niceRatio := this.migrationContext.GetNiceRatio(); niceRatio > 0 {

copyRowsDuration := time.Since(copyRowsStartTime)

sleepTimeNanosecondFloat64 := niceRatio * float64(copyRowsDuration.Nanoseconds())

sleepTime := time.Duration(time.Duration(int64(sleepTimeNanosecondFloat64)) * time.Nanosecond)

time.Sleep(sleepTime)

}

}

default:

{

// Hmmmmm... nothing in the queue; no events, but also no row copy.

// This is possible upon load. Let's just sleep it over.

this.migrationContext.Log.Debugf("Getting nothing in the write queue. Sleeping...")

time.Sleep(time.Second)

}

}

}

}

}

return nil

}

go this.iterateChunks() iterates over the existing table rows and generates copy tasks, each covering multiple rows, onto the ghost table.

func (this *Migrator) iterateChunks() error {

terminateRowIteration := func(err error) error {

this.rowCopyComplete <- err

return this.migrationContext.Log.Errore(err)

}

if this.migrationContext.Noop {

this.migrationContext.Log.Debugf("Noop operation; not really copying data")

return terminateRowIteration(nil)

}

if this.migrationContext.MigrationRangeMinValues == nil {

this.migrationContext.Log.Debugf("No rows found in table. Rowcopy will be implicitly empty")

return terminateRowIteration(nil)

}

var hasNoFurtherRangeFlag int64

// Iterate per chunk:

for {

if atomic.LoadInt64(&this.rowCopyCompleteFlag) == 1 || atomic.LoadInt64(&hasNoFurtherRangeFlag) == 1 {

// Done

// There's another such check down the line

return nil

}

copyRowsFunc := func() error {

if atomic.LoadInt64(&this.rowCopyCompleteFlag) == 1 || atomic.LoadInt64(&hasNoFurtherRangeFlag) == 1 {

// Done.

// There's another such check down the line

return nil

}

// When hasFurtherRange is false, original table might be write locked and CalculateNextIterationRangeEndValues would hangs forever

hasFurtherRange := false

if err := this.retryOperation(func() (e error) {

hasFurtherRange, e = this.applier.CalculateNextIterationRangeEndValues()

return e

}); err != nil {

return terminateRowIteration(err)

}

if !hasFurtherRange {

atomic.StoreInt64(&hasNoFurtherRangeFlag, 1)

return terminateRowIteration(nil)

}

// Copy task:

applyCopyRowsFunc := func() error {

if atomic.LoadInt64(&this.rowCopyCompleteFlag) == 1 {

// No need for more writes.

// This is the de-facto place where we avoid writing in the event of completed cut-over.

// There could _still_ be a race condition, but that's as close as we can get.

// What about the race condition? Well, there's actually no data integrity issue.

// when rowCopyCompleteFlag==1 that means **guaranteed** all necessary rows have been copied.

// But some are still then collected at the binary log, and these are the ones we're trying to

// not apply here. If the race condition wins over us, then we just attempt to apply onto the

// _ghost_ table, which no longer exists. So, bothering error messages and all, but no damage.

return nil

}

_, rowsAffected, _, err := this.applier.ApplyIterationInsertQuery()

if err != nil {

return err // wrapping call will retry

}

atomic.AddInt64(&this.migrationContext.TotalRowsCopied, rowsAffected)

atomic.AddInt64(&this.migrationContext.Iteration, 1)

return nil

}

if err := this.retryOperation(applyCopyRowsFunc); err != nil {

return terminateRowIteration(err)

}

return nil

}

// Enqueue copy operation; to be executed by executeWriteFuncs()

this.copyRowsQueue <- copyRowsFunc

}

return nil

}

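this.migrationContext.MarkRowCopyStartTime() records the time at which the row copy starts.

This information is stored in the gho table:

go this.initiateStatus() sets up and activates the printStatus() ticker.

At this point the log shows: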

# Migrating `peppa`.`user_details`; Ghost table is `peppa`.`_user_details_gho`

this.consumeRowCopyComplete() blocks once on the rowCopyComplete channel, then consumes and discards any further events that may still arrive on it.

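By now the ghost table contains data:

The ghost table's structure:

retrier(this.cutOver) performs the final step of the migration, depending on the migration type (on a replica? atomic? safe?).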

func (this *Migrator) cutOver() (err error) {

if this.migrationContext.Noop {

this.migrationContext.Log.Debugf("Noop operation; not really swapping tables")

return nil

}

this.migrationContext.MarkPointOfInterest()

this.throttler.throttle(func() {

this.migrationContext.Log.Debugf("throttling before swapping tables")

})

this.migrationContext.MarkPointOfInterest()

this.migrationContext.Log.Debugf("checking for cut-over postpone")

this.sleepWhileTrue(

func() (bool, error) {

heartbeatLag := this.migrationContext.TimeSinceLastHeartbeatOnChangelog()

maxLagMillisecondsThrottle := time.Duration(atomic.LoadInt64(&this.migrationContext.MaxLagMillisecondsThrottleThreshold)) * time.Millisecond

cutOverLockTimeout := time.Duration(this.migrationContext.CutOverLockTimeoutSeconds) * time.Second

if heartbeatLag > maxLagMillisecondsThrottle || heartbeatLag > cutOverLockTimeout {

this.migrationContext.Log.Debugf("current HeartbeatLag (%.2fs) is too high, it needs to be less than both --max-lag-millis (%.2fs) and --cut-over-lock-timeout-seconds (%.2fs) to continue", heartbeatLag.Seconds(), maxLagMillisecondsThrottle.Seconds(), cutOverLockTimeout.Seconds())

return true, nil

}

if this.migrationContext.PostponeCutOverFlagFile == "" {

return false, nil

}

if atomic.LoadInt64(&this.migrationContext.UserCommandedUnpostponeFlag) > 0 {

atomic.StoreInt64(&this.migrationContext.UserCommandedUnpostponeFlag, 0)

return false, nil

}

if base.FileExists(this.migrationContext.PostponeCutOverFlagFile) {

// Postpone file defined and exists!

if atomic.LoadInt64(&this.migrationContext.IsPostponingCutOver) == 0 {

if err := this.hooksExecutor.onBeginPostponed(); err != nil {

return true, err

}

}

atomic.StoreInt64(&this.migrationContext.IsPostponingCutOver, 1)

return true, nil

}

return false, nil

},

)

atomic.StoreInt64(&this.migrationContext.IsPostponingCutOver, 0)

this.migrationContext.MarkPointOfInterest()

this.migrationContext.Log.Debugf("checking for cut-over postpone: complete")

if this.migrationContext.TestOnReplica {

// With `--test-on-replica` we stop replication thread, and then proceed to use

// the same cut-over phase as the master would use. That means we take locks

// and swap the tables.

// The difference is that we will later swap the tables back.

if err := this.hooksExecutor.onStopReplication(); err != nil {

return err

}

if this.migrationContext.TestOnReplicaSkipReplicaStop {

this.migrationContext.Log.Warningf("--test-on-replica-skip-replica-stop enabled, we are not stopping replication.")

} else {

this.migrationContext.Log.Debugf("testing on replica. Stopping replication IO thread")

if err := this.retryOperation(this.applier.StopReplication); err != nil {

return err

}

}

}

if this.migrationContext.CutOverType == base.CutOverAtomic {

// Atomic solution: we use low timeout and multiple attempts. But for

// each failed attempt, we throttle until replication lag is back to normal

err := this.atomicCutOver()

this.handleCutOverResult(err)

return err

}

if this.migrationContext.CutOverType == base.CutOverTwoStep {

err := this.cutOverTwoStep()

this.handleCutOverResult(err)

return err

}

return this.migrationContext.Log.Fatalf("Unknown cut-over type: %d; should never get here!", this.migrationContext.CutOverType)

}

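At this point the original table has been renamed to _user_details_del (the table pending deletion), and _user_details_gho has been renamed to user_details:

The table that records the steps:

this.finalCleanup() takes action at the end of the migration: dropping tables and so on.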

[2021/09/08 15:10:05] [info] binlogsyncer.go:164 syncer is closing...

2021-09-08 15:10:05 INFO Closed streamer connection. err=

[2021/09/08 15:10:05] [error] binlogstreamer.go:77 close sync with err: sync is been closing...

[2021/09/08 15:10:05] [info] binlogsyncer.go:179 syncer is closed

2021-09-08 15:10:05 INFO Dropping table `peppa`.`_user_details_ghc`

Copy: 730000/730000 100.0%; Applied: 0; Backlog: 0/1000; Time: 53m30s(total), 3m42s(copy); streamer: mysql-bin.000001:804167442; Lag: 27.72s, HeartbeatLag: 663.80s, State: migrating; ETA: due

2021-09-08 15:10:05 INFO Table dropped

2021-09-08 15:10:09 ERROR Error 1146: Table 'peppa._user_details_ghc' doesn't exist

2021-09-08 15:10:10 ERROR Error 1146: Table 'peppa._user_details_ghc' doesn't exist

2021-09-08 15:10:10 ERROR Error 1146: Table 'peppa._user_details_ghc' doesn't exist

2021-09-08 15:10:10 ERROR Error 1146: Table 'peppa._user_details_ghc' doesn't exist

2021-09-08 15:10:10 INFO Dropping table `peppa`.`_user_details_del`

2021-09-08 15:10:10 ERROR Error 1146: Table 'peppa._user_details_ghc' doesn't exist

2021-09-08 15:10:10 INFO Table dropped

2021-09-08 15:10:10 ERROR Error 1146: Table 'peppa._user_details_ghc' doesn't exist

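That completes the whole process.

Analyzing my requirements

What I can reuse here is the logic for creating the ghost table, migrating the data, and swapping the tables. Before the row copy, two changes are needed: skip the alter DDL step, and copy only the rows that satisfy the date condition into the ghost table.

To implement this, the logic that copies rows into the ghost table needs to be pulled out on its own so that it can be debugged and tested, and so that the data involved can be pinned down.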