Training RepVGG on the CIFAR-10 dataset

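Contents

- 1. Overall training code
  - 1.1. RepVGG code
  - 1.2 CIFAR-10 data
- 2. Learning-rate schedule
- 3. RepVGG principles in detail
- 4. Weight conversion process
  - 4.1 Inference steps
  - 4.2 Weight conversion steps
- 5. Exporting to ONNX
  - 5.1 ONNX before model conversion
  - 5.2 ONNX after weight conversion, for comparison
- 6. Inference time before and after conversion
- 7. RepVGG quantization (post-training quantization)
- 8. TensorRT deployment
- 9. RepVGG as a backbone network
- 10. Related resource links

Sections 6 to 9 are still to be completed.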

1. Overall training code

import os

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms
from torchvision.datasets import CIFAR10

from repvgg import RepVGG

cifar_path = r"F:\Code\deep learning\object detection\datasets\cifar-10"
device = torch.device("cuda:0")
batch_size = 256

# shuffle=True so that every epoch sees the training samples in a new order
train_loader = torch.utils.data.DataLoader(
    CIFAR10(cifar_path, train=True, transform=transforms.Compose([
        transforms.RandomCrop(32, padding=4),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))
    ]), download=False), batch_size=batch_size, shuffle=True, num_workers=0)

test_loader = torch.utils.data.DataLoader(
    CIFAR10(cifar_path, train=False, transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))
    ]), download=False), batch_size=batch_size, num_workers=0)

# Build RepVGG-A0 in training form (deploy=False) and load the 1000-class pretrained weights
model = RepVGG(num_blocks=[2, 4, 14, 1], num_classes=1000,
               width_multiplier=[0.75, 0.75, 0.75, 2.5], override_groups_map=None, deploy=False)
state_dict = torch.load("weights/RepVGG-A0-train.pth", map_location='cpu')
# print(weights)
model.load_state_dict(state_dict)
# Replace the last layer so that the network outputs the 10 CIFAR-10 classes
model.linear = nn.Linear(model.linear.in_features, out_features=10)
model.to(device)
# print(model)

optimizer = optim.SGD(model.parameters(), lr=0.1, momentum=0.9, weight_decay=1e-4)
# Adjust the learning rate every few epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, 5, 0.1)
criteration = nn.CrossEntropyLoss()

# Best accuracy seen so far on the test set
best_acc = 0
total_epochs = 100
os.makedirs("save_weights", exist_ok=True)  # make sure the output directory exists

for epoch in range(total_epochs):
    model.train()
    for i, (x, y) in enumerate(train_loader):
        x, y = x.to(device), y.to(device)
        optimizer.zero_grad()
        output = model(x)
        # pred = output.max(1, keepdim=True)[1]
        # correct += pred.eq(y.view_as(pred)).sum().item()
        loss = criteration(output, y)
        loss.backward()
        optimizer.step()
        if i % 100 == 0:
            print("Epoch %d/%d, iter %d/%d, loss=%.4f" % (
                epoch, total_epochs, i, len(train_loader), loss.item()))

    # Evaluate on the test set after every epoch
    model.eval()
    total = 0
    correct = 0
    with torch.no_grad():
        for i, (img, target) in enumerate(test_loader):
            img = img.to(device)
            out = model(img)
            pred = out.max(1)[1].detach().cpu().numpy()
            target = target.cpu().numpy()
            correct += (pred == target).sum()
            total += len(target)
    acc = correct / total
    print("\tValidation : Acc=%.4f" % acc, "({}/{})".format(correct, len(test_loader.dataset)))

    if acc > best_acc:
        best_acc = acc
        # Saves the whole model object, not only the state_dict
        torch.save(model, "save_weights/best_cifar10.pt")

    scheduler.step()
    # print("Learning rate at epoch %d: %f" % (epoch, optimizer.param_groups[0]['lr']))

print("Best Acc=%.4f" % best_acc)

1.1. RepVGG code

The RepVGG code comes from GitHub.

The pretrained weights are RepVGG-A0-train.pth, also downloaded from GitHub.

model = RepVGG(num_blocks=[2, 4, 14, 1], num_classes=1000,
               width_multiplier=[0.75, 0.75, 0.75, 2.5], override_groups_map=None, deploy=False)
state_dict = torch.load("weights/RepVGG-A0-train.pth", map_location='cpu')
# print(weights)
model.load_state_dict(state_dict)
# Replace the last layer of the network so that it outputs 10 classes
model.linear = nn.Linear(model.linear.in_features, out_features=10)

1.2 CIFAR-10 data

Link: https://pan.baidu.com/s/1--BuNaMF38O07TVGbP-UYw

Extraction code: 7gqn


cifar_path = r"F:\Code\deep learning\object detection\datasets\cifar-10"

# shuffle=True so that every epoch sees the training samples in a new order
train_loader = torch.utils.data.DataLoader(
    CIFAR10(cifar_path, train=True, transform=transforms.Compose([
        transforms.RandomCrop(32, padding=4),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))
    ]), download=False), batch_size=batch_size, shuffle=True, num_workers=0)

test_loader = torch.utils.data.DataLoader(
    CIFAR10(cifar_path, train=False, transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))
    ]), download=False), batch_size=batch_size, num_workers=0)

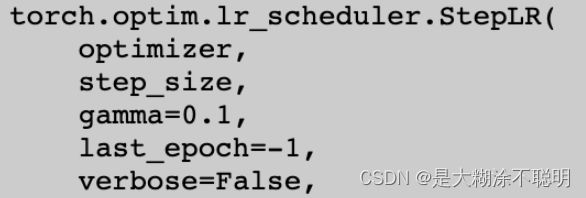

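2. Learning-rate schedule

Note: the step_size used here is not necessarily a good choice; it only serves as an example.

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, 5, 0.1)
...
...
for epoch in range(total_epochs):
    loss = criteration(output, y)
    loss.backward()
    optimizer.step()
    ...
    scheduler.step()

The learning rate is adjusted once every step_size epochs.

For StepLR, each adjustment multiplies the learning rate by gamma: lr = lr * gamma. In StepLR(optimizer, 5, 0.1), step_size is 5 and gamma is 0.1.

Reference link on learning-rate updates

Common ways of updating the learning rate

Note: I have not verified the links above myself; they are given for reference only, so verify them yourself before relying on them.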
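To make the schedule concrete, here is a minimal sketch of the closed form behind a step schedule, assuming scheduler.step() is called once per epoch. The helper name step_lr is hypothetical and is not part of PyTorch; it only reproduces the arithmetic.

```python
def step_lr(base_lr: float, epoch: int, step_size: int = 5, gamma: float = 0.1) -> float:
    """Learning rate after `epoch` scheduler steps: multiply by gamma once every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


# Print the learning rate at a few epochs for lr=0.1, step_size=5, gamma=0.1
for epoch in (0, 4, 5, 9, 10):
    print("epoch %2d: lr = %.6f" % (epoch, step_lr(0.1, epoch)))
```

With these settings the learning rate drops by a factor of 10 every 5 epochs, so over 100 epochs it becomes vanishingly small. This is one reason the step_size above should be treated as an example only.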

3. RepVGG principles in detail

Omitted. There are already many explanations of the principles online, so they are not repeated here.

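4. Weight conversion process

4.1 Inference steps

- Train a network, then call the convert function to convert the weights. This also deletes rbr_dense and the other attributes of each module that are not needed for inference.

- Define a new model with the deploy attribute set to True.

- Load the converted weights into the new model. Note that the load_checkpoint used here differs from the earlier one and was written by the RepVGG author. I still need to verify this myself (after some debugging, it seems to make no difference whether the author's version is used).

- Only the call model(img) triggers the forward-inference function.

The following lines convert the weights.

Note: the file at weight_path stores both the model and the original weights.

import torch

from repvgg import *

weight_path = "save_weights/best_cifar10.pt"
# The file holds a fully pickled model; recent PyTorch versions may need weights_only=False here
model = torch.load(weight_path)
state_dict = model.state_dict()
save_path = weight_path.replace("save", "convert")
# What gets saved here is the state_dict of the model after conversion
# (the convert_weights directory must already exist)
repvgg_model_convert(model, save_path=save_path)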

The repvgg_model_convert function is as follows:

import copy

import torch


def repvgg_model_convert(model: torch.nn.Module, save_path=None, do_copy=True):
    if do_copy:
        # A deep copy is made by default, so the original model stays untouched
        model = copy.deepcopy(model)
    for module in model.modules():
        if hasattr(module, 'switch_to_deploy'):
            module.switch_to_deploy()
    # If a save path is given, save the converted weights directly
    if save_path is not None:
        torch.save(model.state_dict(), save_path)
    return model

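Every module that defines it has its module.switch_to_deploy() method called.

When the weights of a trained network are converted, the first return in that method is never reached: a network in training form has deploy set to False and does not yet have an rbr_reparam attribute.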

def switch_to_deploy(self):
    """
    When the weights of a trained network are converted, the first return below is never reached:
    a network in training form has deploy set to False and has no rbr_reparam yet.
    """
    if hasattr(self, 'rbr_reparam'):
        return
    kernel, bias = self.get_equivalent_kernel_bias()
    self.rbr_reparam = nn.Conv2d(in_channels=self.rbr_dense.conv.in_channels,
                                 out_channels=self.rbr_dense.conv.out_channels,
                                 kernel_size=self.rbr_dense.conv.kernel_size, stride=self.rbr_dense.conv.stride,
                                 padding=self.rbr_dense.conv.padding, dilation=self.rbr_dense.conv.dilation,
                                 groups=self.rbr_dense.conv.groups, bias=True)
    self.rbr_reparam.weight.data = kernel
    self.rbr_reparam.bias.data = bias
    for para in self.parameters():
        para.detach_()
    # Delete the attributes that are irrelevant for deployment, so that the converted model loads correctly
    self.__delattr__('rbr_dense')
    self.__delattr__('rbr_1x1')
    if hasattr(self, 'rbr_identity'):
        self.__delattr__('rbr_identity')
    if hasattr(self, 'id_tensor'):
        self.__delattr__('id_tensor')
    # Set self.deploy to True
    self.deploy = True

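For the converted model, the model's forward runs first, and then each block's forward performs the inference.

# forward of the RepVGG block
def forward(self, inputs):
    # If the block has the rbr_reparam attribute, call self.rbr_reparam;
    # the two lines below produce the block's output in that case
    # (the training-time path is not shown in this excerpt)
    if hasattr(self, 'rbr_reparam'):
        return self.nonlinearity(self.se(self.rbr_reparam(inputs)))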

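4.2 Weight conversion steps

- conv_bn 3x3 --> fused into conv 3x3

- conv_bn 1x1 --> fused into conv 1x1 --> padded to conv 3x3

- bn --> first add an identity mapping, implemented as a conv 3x3 whose value is 1 at one specific position of one specific channel and 0 elsewhere --> fuse conv3x3_bn --> conv 3x3. If there is no bn branch, its kernel and bias are simply 0, 0.

- The conv 3x3 kernels of the three branches are added up into a single conv 3x3.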
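The steps above can be written out explicitly. The notation below is my own and not from the original post: $W$ is a branch kernel, $\mu$ and $\sigma^2$ are the BN running mean and variance, $\gamma$ and $\beta$ are the BN scale and shift, and $\epsilon$ is the BN epsilon. For output channel $i$ in inference mode:

$$
\mathrm{BN}(W * x)_i
= \gamma_i \,\frac{(W * x)_i - \mu_i}{\sqrt{\sigma_i^2 + \epsilon}} + \beta_i
= (W' * x)_i + b'_i,
\qquad
W'_i = \frac{\gamma_i}{\sqrt{\sigma_i^2 + \epsilon}}\, W_i,
\quad
b'_i = \beta_i - \frac{\mu_i \gamma_i}{\sqrt{\sigma_i^2 + \epsilon}}
$$

Because convolution is linear in the kernel, the three fused branches can then be summed into one kernel and one bias:

$$
W_{\text{deploy}} = W'_{3\times 3} + \operatorname{pad}\!\left(W'_{1\times 1}\right) + W'_{\text{id}},
\qquad
b_{\text{deploy}} = b'_{3\times 3} + b'_{1\times 1} + b'_{\text{id}}
$$

Here $\operatorname{pad}$ surrounds the 1x1 kernel with zeros so that it becomes a 3x3 kernel with the original value at the center.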

# The three methods below belong to the RepVGG block class and rely on
# numpy (np), torch and torch.nn (nn) being imported in repvgg.py

def get_equivalent_kernel_bias(self):
    # First fuse conv and bn for each branch, covering both the 3x3 and the 1x1 branch
    kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
    kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
    kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
    # Then pad the 1x1 kernel to 3x3 and add the three branches together
    return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

def _pad_1x1_to_3x3_tensor(self, kernel1x1):
    if kernel1x1 is None:
        return 0
    else:
        return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

def _fuse_bn_tensor(self, branch):
    if branch is None:
        return 0, 0
    if isinstance(branch, nn.Sequential):
        kernel = branch.conv.weight
        running_mean = branch.bn.running_mean
        running_var = branch.bn.running_var
        gamma = branch.bn.weight
        beta = branch.bn.bias
        eps = branch.bn.eps
    else:
        # A branch can only be one of three things: a conv_bn (itself an nn.Sequential),
        # a bare BatchNorm2d (the identity branch), or None
        assert isinstance(branch, nn.BatchNorm2d)
        if not hasattr(self, 'id_tensor'):
            # With grouped convolution, each kernel only sees in_channels // groups input channels
            input_dim = self.in_channels // self.groups
            kernel_value = np.zeros((self.in_channels, input_dim, 3, 3), dtype=np.float32)
            # Each kernel is responsible for one channel of the output feature map.
            # The first index i of kernel_value is the output channel.
            # The second index is the input channel of that kernel which is non-zero.
            # The 3x3 kernel starts as all zeros; only the center position is assigned.
            for i in range(self.in_channels):
                kernel_value[i, i % input_dim, 1, 1] = 1
            self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
        kernel = self.id_tensor
        running_mean = branch.running_mean
        running_var = branch.running_var
        gamma = branch.weight
        beta = branch.bias
        eps = branch.eps
    std = (running_var + eps).sqrt()
    t = (gamma / std).reshape(-1, 1, 1, 1)
    return kernel * t, beta - running_mean * gamma / std
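As a sanity check on the fusion logic, the following NumPy sketch compares the output of three branches against the output of the single fused 3x3 convolution. It is an illustration under stated assumptions, not the repository's code: the helpers conv2d, bn, fuse and rand_bn are hypothetical names, the convolution is a naive stride-1 implementation, groups is 1, and all values are random.

```python
import numpy as np


def conv2d(x, w, pad):
    """Naive stride-1 convolution. x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    k = w.shape[2]
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, wd = x.shape[1], x.shape[2]
    out = np.zeros((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            # Contract (C_in, k, k) of the kernel with the matching input patch
            out[:, i, j] = np.tensordot(w, xp[:, i:i + k, j:j + k], axes=3)
    return out


def bn(y, mean, var, gamma, beta, eps=1e-5):
    """Inference-mode batch norm on a (C, H, W) tensor using running statistics."""
    scale = gamma / np.sqrt(var + eps)
    return y * scale[:, None, None] + (beta - mean * scale)[:, None, None]


def fuse(kernel, mean, var, gamma, beta, eps=1e-5):
    """Fold BN into the preceding kernel, mirroring the arithmetic of _fuse_bn_tensor."""
    std = np.sqrt(var + eps)
    return kernel * (gamma / std).reshape(-1, 1, 1, 1), beta - mean * gamma / std


rng = np.random.default_rng(0)
c = 4  # number of channels (in == out, so the identity branch exists)


def rand_bn():
    """Random (mean, var, gamma, beta) for one BN layer."""
    return rng.normal(size=c), rng.uniform(0.5, 2.0, size=c), rng.normal(size=c), rng.normal(size=c)


x = rng.normal(size=(c, 6, 6))
k3 = rng.normal(size=(c, c, 3, 3))
k1 = rng.normal(size=(c, c, 1, 1))
kid = np.zeros((c, c, 3, 3))
for i in range(c):
    kid[i, i, 1, 1] = 1.0  # identity mapping written as a 3x3 kernel
bn3, bn1, bnid = rand_bn(), rand_bn(), rand_bn()

# Training-time structure: three branches, each followed by its own BN
y_train = bn(conv2d(x, k3, 1), *bn3) + bn(conv2d(x, k1, 0), *bn1) + bn(x, *bnid)

# Deploy-time structure: one 3x3 convolution with bias
f3, b3 = fuse(k3, *bn3)
f1, b1 = fuse(k1, *bn1)
fid, bid = fuse(kid, *bnid)
kernel = f3 + np.pad(f1, ((0, 0), (0, 0), (1, 1), (1, 1))) + fid
bias = b3 + b1 + bid
y_deploy = conv2d(x, kernel, 1) + bias[:, None, None]

max_diff = np.abs(y_train - y_deploy).max()
print("max abs difference:", max_diff)
```

The difference should be at the level of floating-point rounding. The same kind of comparison can be run on the real model by feeding one input to the model before and after repvgg_model_convert, both in eval mode.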

5. Exporting to ONNX

5.1 ONNX before model conversion

import torch

from repvgg import *

device = torch.device("cpu")
# The size here is the image size the model expects
img = torch.rand((1, 3, 32, 32)).to(device)

# RepVGG model before conversion
weight_path = 'save_weights/best_cifar10.pt'
model = torch.load(weight_path, map_location=device)
model.to(device)

# model = RepVGG(num_blocks=[2, 4, 14, 1], num_classes=10,
#                width_multiplier=[0.75, 0.75, 0.75, 2.5], override_groups_map=None, deploy=True)
# weight_path = 'convert_weights/best_cifar10.pt'
# state_dict = torch.load(weight_path)
# model.load_state_dict(state_dict)

# Put BN and Dropout into inference mode
model.eval()

# No gradients are needed for the export
with torch.no_grad():
    # Run one forward pass as a dry run
    y = model(img)

    try:
        import onnx
        from onnxsim import simplify

        print('\nStarting ONNX export with onnx %s...' % onnx.__version__)
        f = weight_path.replace('.pt', '.onnx')  # filename
        torch.onnx.export(model, img, f, verbose=False,
                          opset_version=11,
                          input_names=['images'],
                          output_names=['output'])

        # Checks
        onnx_model = onnx.load(f)  # load onnx model
        model_simp, check = simplify(onnx_model)
        assert check, "Simplified ONNX model could not be validated"
        onnx.save(model_simp, f)
        # print(onnx.helper.printable_graph(onnx_model.graph))  # print a human readable model
        print('ONNX export success, saved as %s' % f)
    except Exception as e:
        print('ONNX export failure: %s' % e)

5.2 ONNX after weight conversion, for comparison

import torch

from repvgg import *

device = torch.device("cpu")
# The size here is the image size the model expects
img = torch.rand((1, 3, 32, 32)).to(device)

# RepVGG model before conversion
# weight_path = 'save_weights/best_cifar10.pt'
#
# model = torch.load(weight_path)
# model.to(device)

# The converted model: built with deploy=True, then loaded with the converted weights
model = RepVGG(num_blocks=[2, 4, 14, 1], num_classes=10,
               width_multiplier=[0.75, 0.75, 0.75, 2.5], override_groups_map=None, deploy=True)
weight_path = 'convert_weights/best_cifar10.pt'
state_dict = torch.load(weight_path, map_location='cpu')
model.load_state_dict(state_dict)
model.to(device)

# Put BN and Dropout into inference mode
model.eval()

# No gradients are needed for the export
with torch.no_grad():
    # Run one forward pass as a dry run
    y = model(img)

    try:
        import onnx
        from onnxsim import simplify

        print('\nStarting ONNX export with onnx %s...' % onnx.__version__)
        f = weight_path.replace('.pt', '.onnx')  # filename
        torch.onnx.export(model, img, f, verbose=False,
                          opset_version=11,
                          input_names=['images'],
                          output_names=['output'])

        # Checks
        onnx_model = onnx.load(f)  # load onnx model
        model_simp, check = simplify(onnx_model)
        assert check, "Simplified ONNX model could not be validated"
        onnx.save(model_simp, f)
        # print(onnx.helper.printable_graph(onnx_model.graph))  # print a human readable model
        print('ONNX export success, saved as %s' % f)
    except Exception as e:
        print('ONNX export failure: %s' % e)

After the conversion, the exported graph consists only of plain convs, with no branches.
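One way to check this without opening a graph viewer is to count the operator types in each exported file. The sketch below is an assumption-laden illustration: count_ops and onnx_op_types are hypothetical helper names, and the two short op lists are made up for demonstration rather than taken from a real export.

```python
from collections import Counter


def count_ops(op_types):
    """Count how often each operator type appears in a sequence of op-type strings."""
    return Counter(op_types)


def onnx_op_types(path):
    """Return the op_type of every node in an ONNX file (needs the onnx package)."""
    import onnx  # imported lazily so that count_ops also works without onnx installed
    return [node.op_type for node in onnx.load(path).graph.node]


# Hypothetical op lists for a single block, for illustration only
branched = ["Conv", "Conv", "Add", "Relu"]
plain = ["Conv", "Relu"]
print(count_ops(branched))
print(count_ops(plain))
```

On the real files this would be called as count_ops(onnx_op_types('save_weights/best_cifar10.onnx')) and count_ops(onnx_op_types('convert_weights/best_cifar10.onnx')). If the conversion worked, the second result should contain no Add nodes that merge branches.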

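6. Inference time before and after conversion

7. RepVGG quantization (post-training quantization)

8. TensorRT deployment

9. RepVGG as a backbone network

10. Related resource links

Mainly the related code, with my own comments added.

Link: Baidu link

Extraction code: ooyu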
