YOLOv5、YOLOv8改进:SEAttention 通道注意力机制

基于通道的注意力机制 源自于 CVPR2018: Squeeze-and-Excitation Networks

官方代码:GitHub - hujie-frank/SENet: Squeeze-and-Excitation Networks

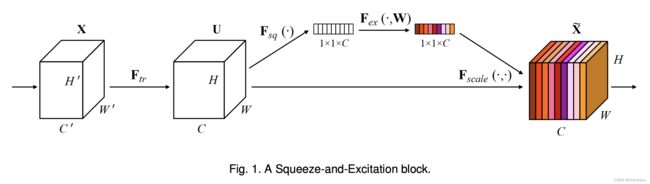

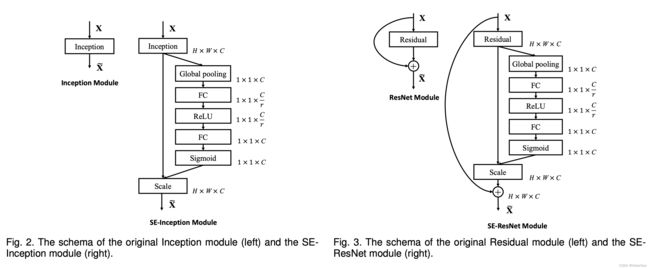

如图所示,其实就是将不同的通道赋予相关的权重。Attention机制用到这里用朴素的话说就是,把重要的通道赋予大的权重,然后将这些通道以及权重去线性组合。

至于这个权重是自己"学习"的。具体这样做,把每一个通道先下采样为一个实数,然后再通过两层全连接层,就会得到每个通道的权重。在训练构成中,这两个全连接层的参数也会和模型其他可训练参数一样一起更新。

下面是完整的改法

首先common加入以下代码

class SEAttention(nn.Module):

def __init__(self, channel=512,reduction=16):

super().__init__()

self.avg_pool = nn.AdaptiveAvgPool2d(1)

self.fc = nn.Sequential(

nn.Linear(channel, channel // reduction, bias=False),

nn.ReLU(inplace=True),

nn.Linear(channel // reduction, channel, bias=False),

nn.Sigmoid()

)

def init_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

init.kaiming_normal_(m.weight, mode='fan_out')

if m.bias is not None:

init.constant_(m.bias, 0)

elif isinstance(m, nn.BatchNorm2d):

init.constant_(m.weight, 1)

init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

init.normal_(m.weight, std=0.001)

if m.bias is not None:

init.constant_(m.bias, 0)

def forward(self, x):

b, c, _, _ = x.size()

y = self.avg_pool(x).view(b, c)

y = self.fc(y).view(b, c, 1, 1)

return x * y.expand_as(x)接下来实在yolo.py中进行注册

你准备的注意力机制都可以放在这里面

elif m in [S2Attention, SimSPPF, ACmix, CrissCrossAttention, SOCA, ShuffleAttention, SEAttention, SimAM, SKAttention]:yaml文件的配置,以yolov5s为参照

# YOLOv5 by YOLOAir, GPL-3.0 license

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[-1, 1, SEAttention, [1024]],

[[17, 20, 24], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]以上就是完整的改法

YOLOv8和YOLOv5都是一个作者,common变成了conv yolo变成了task 其他都一样