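ID3 builds a decision tree by repeatedly splitting on the attribute that reduces information entropy the most (that is, the attribute that removes the most uncertainty). Training proceeds as follows: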

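1) Take the data set S as input. For each attribute, split S by that attribute's values and compute both the entropy of the current data set and the expected entropy after the split; the information gain is the current entropy minus the expected entropy;

2) Among all attributes, pick the one with the largest information gain and use it to partition the data set into subsets;

3) If a subset contains only a single class, it becomes a leaf node and the function returns;

4) Otherwise, recursively apply steps 1 and 2 to that subset.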
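Steps 1 and 2 can be sketched in a few lines of C++. This is only an illustrative sketch, not the code from `decision_tree.hpp`: the names `Row`, `sample_rows`, `entropy`, `info_gain` and `best_attribute` are hypothetical, and it assumes discrete integer attributes with the class label stored in the last column. `sample_rows` copies the ten samples used in the main function of this post.

```cpp
#include <cmath>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical row layout: attribute values first, class label last.
using Row = std::vector<int>;

// The ten training samples from this post (three attributes + label).
std::vector<Row> sample_rows() {
    return {{-1, 1, -1, -1}, {1, -1, 1, 1},  {1, -1, -1, 1}, {-1, -1, -1, -1},
            {-1, -1, 1, 1},  {-1, 1, 1, -1}, {1, 1, 1, -1},  {1, -1, -1, 1},
            {-1, 1, -1, -1}, {1, -1, 1, 1}};
}

// Shannon entropy (in bits) of the class labels in `rows`.
double entropy(const std::vector<Row>& rows) {
    if (rows.empty()) return 0.0;
    std::map<int, int> counts;
    for (const Row& r : rows) ++counts[r.back()];
    double h = 0.0;
    for (const auto& kv : counts) {
        double p = static_cast<double>(kv.second) / rows.size();
        h -= p * std::log2(p);
    }
    return h;
}

// Information gain of splitting `rows` on attribute column `attr`:
// current entropy minus the size-weighted entropy of the subsets.
double info_gain(const std::vector<Row>& rows, std::size_t attr) {
    std::map<int, std::vector<Row>> subsets;
    for (const Row& r : rows) subsets[r[attr]].push_back(r);
    double expected = 0.0;
    for (const auto& kv : subsets) {
        double weight = static_cast<double>(kv.second.size()) / rows.size();
        expected += weight * entropy(kv.second);
    }
    return entropy(rows) - expected;
}

// Index of the attribute with the largest information gain.
// Assumes `rows` is non-empty and has at least one attribute column.
std::size_t best_attribute(const std::vector<Row>& rows) {
    std::size_t n_attr = rows.front().size() - 1;
    std::size_t best = 0;
    double best_gain = -1.0;
    for (std::size_t a = 0; a < n_attr; ++a) {
        double g = info_gain(rows, a);
        if (g > best_gain) {
            best_gain = g;
            best = a;
        }
    }
    return best;
}
```

On these ten samples the labels are split 5/5, so the starting entropy is 1 bit, and the gains for columns 0, 1 and 2 come out to roughly 0.28, 0.61 and 0.03. Under these assumptions the first split would therefore be on column 1.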

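C4.5 follows almost the same steps as ID3. The main difference is that it selects attributes by gain ratio instead of information gain, which counters information gain's bias toward attributes with many distinct values. The gain ratio is the information gain divided by the attribute's intrinsic information: the entropy computed from the distribution of the attribute's own values, ignoring the samples' actual class labels, denoted IV.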
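To make the effect of dividing by IV concrete, here is a small self-contained sketch. It is not taken from `decision_tree.hpp`; the function names `entropy_of`, `information_gain` and `gain_ratio` are hypothetical, and attributes and labels are assumed to be discrete integers stored as parallel columns.

```cpp
#include <cmath>
#include <cstddef>
#include <map>
#include <vector>

// Shannon entropy (in bits) of the empirical distribution of `values`.
double entropy_of(const std::vector<int>& values) {
    if (values.empty()) return 0.0;
    std::map<int, int> counts;
    for (int v : values) ++counts[v];
    double h = 0.0;
    for (const auto& kv : counts) {
        double p = static_cast<double>(kv.second) / values.size();
        h -= p * std::log2(p);
    }
    return h;
}

// Information gain of splitting `labels` by the parallel column `attr`.
double information_gain(const std::vector<int>& attr,
                        const std::vector<int>& labels) {
    std::map<int, std::vector<int>> groups;
    for (std::size_t i = 0; i < attr.size(); ++i)
        groups[attr[i]].push_back(labels[i]);
    double expected = 0.0;
    for (const auto& kv : groups) {
        double weight = static_cast<double>(kv.second.size()) / labels.size();
        expected += weight * entropy_of(kv.second);
    }
    return entropy_of(labels) - expected;
}

// Gain ratio = information gain / IV, where IV is the entropy of the
// attribute's own value distribution (class labels are ignored).
double gain_ratio(const std::vector<int>& attr,
                  const std::vector<int>& labels) {
    double iv = entropy_of(attr);
    if (iv <= 0.0) return 0.0;  // single-valued attribute: nothing to split on
    return information_gain(attr, labels) / iv;
}
```

As a made-up example, take eight samples with labels `{0,0,0,0,1,1,1,0}`, an ID-like attribute `{0,1,2,3,4,5,6,7}` and a binary attribute `{0,0,0,0,1,1,1,1}`. The ID-like attribute has the higher information gain (about 0.95 versus 0.55) because every subset it produces is trivially pure, but its IV is 3 bits, so its gain ratio drops to about 0.32, while the binary attribute keeps about 0.55 and would be preferred.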

The C++ implementation is as follows:

#ifndef _DECISION_TREE_HPP_

#define _DECISION_TREE_HPP_

#include

#include

The main function is as follows:

#include

#include "mat.hpp"

#include "decision_tree.hpp"

int cc(const double& d)

{

return d;

}

int pc(const int& idx, const double& d)

{

return d;

}

int main(int argc, char** argv)

{

std::vector > vec_dat;

vec_dat.push_back({ -1, 1, -1, -1 });

vec_dat.push_back({ 1, -1, 1, 1 });

vec_dat.push_back({ 1, -1, -1, 1 });

vec_dat.push_back({ -1, -1, -1, -1 });

vec_dat.push_back({ -1, -1, 1, 1 });

vec_dat.push_back({ -1, 1, 1, -1 });

vec_dat.push_back({ 1, 1, 1, -1 });

vec_dat.push_back({ 1, -1, -1, 1 });

vec_dat.push_back({ -1, 1, -1, -1 });

vec_dat.push_back({ 1, -1, 1, 1 });

struct dt_node* p_id3_tree = gen_id3_tree(vec_dat, pc, cc);

struct dt_node* p_c45_tree = gen_c45_tree(vec_dat, pc, cc);

for (auto itr = vec_dat.begin(); itr != vec_dat.end(); ++itr)

{

int i_id3_class = judge_id3(p_id3_tree, *itr, pc, -2);

int i_c45_class = judge_c45(p_c45_tree, *itr, pc, -2);

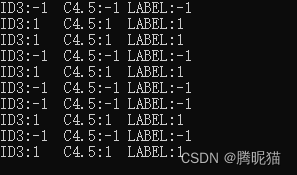

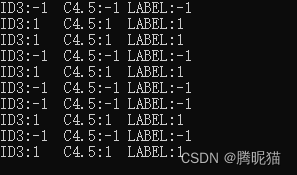

printf("ID3:%d\tC4.5:%d\tLABEL:%d\r\n", i_id3_class, i_c45_class, cc((*itr)[3]));

}

return 0;

}

The results show that both trees classify at least the training set perfectly. Since there is no test set, no pruning was performed.