自回归模型的建模与参数估计-Python

AR§模型的参数估计

设{ X t X_t Xt}适合 X t = a 1 X t − 1 + ⋯ + a p X t − p + ϵ t X_t=a_1X_{t-1}+\cdots+a_pX_{t-p}+\epsilon_t Xt=a1Xt−1+⋯+apXt−p+ϵt,式中{ ϵ t \epsilon_t ϵt}为独立同分布白噪声序列; E ϵ t 2 = σ 2 E\epsilon^2_t=\sigma^2 Eϵt2=σ2,来自{ X t X_t Xt}的样本为 X 1 , X 2 , ⋯ , X n X_1,X_2,\cdots,X_n X1,X2,⋯,Xn,要估计 a 1 , a 2 , ⋯ , a p , σ 2 a_1,a_2,\cdots,a_p,\sigma^2 a1,a2,⋯,ap,σ2。

创建一个AR(2)过程

x t = 0.6 x t − 1 − 0.75 x t − 2 + ϵ t x_t = 0.6 x_{t-1} - 0.75 x_{t-2} + \epsilon_t xt=0.6xt−1−0.75xt−2+ϵt

import matplotlib.pyplot as plt

import numpy as np

from statsmodels.tsa.stattools import acf

from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

n = 5000

mean = 0

std = 1

lag = 20

np.random.seed(0)

x_t = list(np.random.normal(mean, std, size=2))

epsilon_t = np.random.normal(mean, std, size=n) # 创建白噪声

for i in range(2, n+2):

x_t.append(0.6 * x_t[i-1] - 0.75 * x_t[i-2] + epsilon_t[i-2])

# 绘制白噪声

plt.plot(x_t)

plt.title("AR2 process")

plt.show()

# 计算自相关函数(ACF)

plot_acf(x=x_t, lags=lag, title="ACF x_t")

plot_pacf(x=x_t, lags=lag, title="PACF x_t")

plt.show()

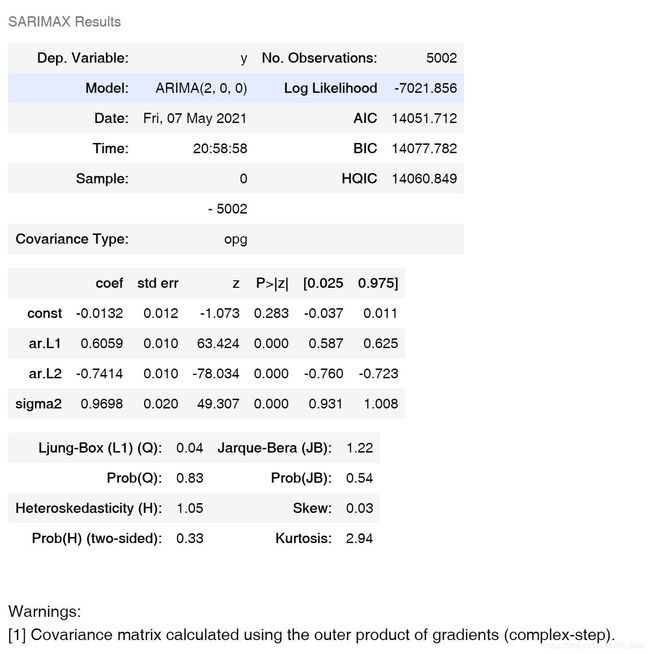

- 使用statsmodels模块估计参数和下文对比

# use statsmodel to fit this series

import statsmodels as sm

arma_mod20 = sm.tsa.arima.model.ARIMA(x_t, order=(2,0,0)).fit()

arma_mod20.summary()

np.mean(x_t)

-0.01320250123146827

其他参数可以使用下面三种方法进行估计。

- Yule-Walker 估计法

由Yule-Walker方程: b ⃗ p = Γ ⃗ p α ⃗ \vec b_p=\vec\Gamma_p\vec\alpha bp=Γpα

式中:

b ⃗ p = [ γ 1 γ 2 ⋮ γ p ] , Γ ⃗ p = [ γ 0 γ 1 ⋯ γ p − 1 γ 1 γ 0 ⋯ γ p − 2 ⋮ ⋮ ⋱ ⋮ γ p − 1 γ p − 2 ⋮ γ 0 ] , \vec b_p=\left[ \begin{matrix} \gamma_1 \\ \gamma_2 \\ \vdots \\ \gamma_p \end{matrix}\right], \vec \Gamma_p=\left[ \begin{matrix} \gamma_0 & \gamma_1 & \cdots & \gamma_{p-1} \\ \gamma_1 & \gamma_0 & \cdots & \gamma_{p-2}\\ \vdots & \vdots& \ddots & \vdots \\ \gamma_{p-1} & \gamma_{p-2}& \vdots & \gamma_{0} \end{matrix}\right], bp=⎣⎢⎢⎢⎡γ1γ2⋮γp⎦⎥⎥⎥⎤,Γp=⎣⎢⎢⎢⎢⎡γ0γ1⋮γp−1γ1γ0⋮γp−2⋯⋯⋱⋮γp−1γp−2⋮γ0⎦⎥⎥⎥⎥⎤,

α ⃗ = [ a 1 a 2 ⋮ a p ] \vec \alpha = \left[ \begin{matrix} a_1 \\ a_2 \\ \vdots \\ a_p \end{matrix}\right] α=⎣⎢⎢⎢⎡a1a2⋮ap⎦⎥⎥⎥⎤

γ k \gamma_k γk为自协方差函数可以用样本的自协方差函数 γ ^ k \hat{\gamma}_k γ^k代替,然后可以计算参数 a 1 , a 2 , ⋯ , a p a_1,a_2,\cdots,a_p a1,a2,⋯,ap, σ 2 \sigma^2 σ2的Yule-Walker估计为

σ ^ 2 = γ ^ 0 − a ^ 1 γ ^ 1 − ⋯ − a ^ p γ ^ p \hat{\sigma}^2=\hat{\gamma}_0-\hat a_1 \hat \gamma_1-\cdots -\hat a_p \hat \gamma_p σ^2=γ^0−a^1γ^1−⋯−a^pγ^p [ a ^ 1 a ^ 2 ⋮ a ^ p ] = [ γ ^ 0 γ ^ 1 ⋯ γ ^ p − 1 γ ^ 1 γ ^ 0 ⋯ γ ^ p − 2 ⋮ ⋮ ⋱ ⋮ γ ^ p − 1 γ ^ p − 2 ⋮ γ ^ 0 ] − 1 [ γ ^ 1 γ ^ 2 ⋮ γ ^ p ] , \left[ \begin{matrix} \hat{a}_1 \\ \hat{a}_2 \\ \vdots \\ \hat{a}_p \end{matrix}\right] = \left[ \begin{matrix} \hat \gamma_0 & \hat \gamma_1 & \cdots & \hat \gamma_{p-1} \\ \hat \gamma_1 & \hat \gamma_0 & \cdots & \hat \gamma_{p-2}\\ \vdots & \vdots& \ddots & \vdots \\ \hat \gamma_{p-1} & \hat \gamma_{p-2}& \vdots & \hat \gamma_{0} \end{matrix}\right]^{-1} \left[ \begin{matrix} \hat \gamma_1 \\ \hat \gamma_2 \\ \vdots \\ \hat \gamma_p \end{matrix}\right] , ⎣⎢⎢⎢⎡a^1a^2⋮a^p⎦⎥⎥⎥⎤=⎣⎢⎢⎢⎢⎡γ^0γ^1⋮γ^p−1γ^1γ^0⋮γ^p−2⋯⋯⋱⋮γ^p−1γ^p−2⋮γ^0⎦⎥⎥⎥⎥⎤−1⎣⎢⎢⎢⎡γ^1γ^2⋮γ^p⎦⎥⎥⎥⎤,

自协方差函数

γ ( h ) = cov ( X ( t ) , X ( t − h ) ) = E [ X ( t ) − μ t ] [ X ( t − h ) − μ t − h ] = E [ X ( t ) X ( t − h ) ] − μ t μ t − h \gamma(h)=\text{cov}(X(t),X(t-h))=E[X(t)-\mu_t][X(t-h)-\mu_{t-h}]=E[X(t)X(t-h)]-\mu_t\mu_{t-h} γ(h)=cov(X(t),X(t−h))=E[X(t)−μt][X(t−h)−μt−h]=E[X(t)X(t−h)]−μtμt−h

def inverse_yule_walker_ar(x, p):

"""

Implementation for a direct inverse solution on Yule-Walker equations

@param x: the dataset, a numpy 1D array

@param p: lags, p in AR(p)

@return: a 1-D numpy array phi of shape p. phi[i] = parameter for x_{t-i} in a AR(p) model

"""

# compute sample autovariance function

x_bar = np.mean(x)

covp = []

for i in range(p+1):

lenx = len(x)-i

xt = x[i:]

xtp = x[:lenx]

covp.append(np.sum((xt-x_bar)*(xtp-x_bar))/len(x))

# compute the p * p R_p matrix

R_p = np.zeros([p,p])

for j in range(p):

for k in range(p):

R_p[j,k] = covp[np.abs(j-k)]

# compute phi = R_p^{-1} covp

phi = np.linalg.inv(R_p).dot(np.array(covp[1:]))

return phi

- 最小二乘法

X t = a 1 X t − 1 + ⋯ + a p X t − p + ϵ t X_t=a_1X_{t-1}+\cdots+a_pX_{t-p}+\epsilon_t Xt=a1Xt−1+⋯+apXt−p+ϵt取 t = p + 1 , p + 2 , ⋯ , n t=p+1,p+2,\cdots,n t=p+1,p+2,⋯,n

[ X p + 1 X p + 2 ⋮ X n ] = [ X p X p − 1 ⋯ X 1 X p + 1 X p + 2 ⋯ X 2 ⋮ ⋮ ⋱ ⋮ X n − 1 X n − 2 ⋮ X n − p ] [ a 1 a 2 ⋮ a p ] + [ ϵ p + 1 ϵ p + 2 ⋮ ϵ n ] , \left[ \begin{matrix} X_{p+1} \\ X_{p+2} \\ \vdots \\ X_{n} \end{matrix}\right] = \left[ \begin{matrix} X_p & X_{p-1} & \cdots & X_{1} \\ X_{p+1} & X_{p+2} & \cdots & X_{2}\\ \vdots & \vdots& \ddots & \vdots \\ X_{n-1} & X_{n-2} & \vdots & X_{n-p} \end{matrix}\right] \left[ \begin{matrix} a_1 \\ a_2 \\ \vdots \\ a_p \end{matrix}\right] + \left[ \begin{matrix} \epsilon_{p+1} \\ \epsilon_{p+2} \\ \vdots \\ \epsilon_{n} \end{matrix}\right] , ⎣⎢⎢⎢⎡Xp+1Xp+2⋮Xn⎦⎥⎥⎥⎤=⎣⎢⎢⎢⎢⎡XpXp+1⋮Xn−1Xp−1Xp+2⋮Xn−2⋯⋯⋱⋮X1X2⋮Xn−p⎦⎥⎥⎥⎥⎤⎣⎢⎢⎢⎡a1a2⋮ap⎦⎥⎥⎥⎤+⎣⎢⎢⎢⎡ϵp+1ϵp+2⋮ϵn⎦⎥⎥⎥⎤,

用矩阵形式表示: X ⃗ = X ⃗ α ⃗ + ϵ ⃗ \vec X=\vec X \vec\alpha+\vec \epsilon X=Xα+ϵ

应用回归分析中的最小二乘法:

α ^ ⃗ = ( X ⃗ T X ⃗ ) − 1 X ⃗ T Y ⃗ \vec {\hat \alpha}=(\vec X^T\vec X)^{-1}\vec X^T\vec Y α^=(XTX)−1XTY

σ ^ 2 ⃗ = 1 n − p Y ⃗ T [ I ⃗ − X ⃗ ( X ⃗ T X ⃗ ) − 1 X ⃗ T ] Y ⃗ \vec {\hat \sigma^2}=\frac{1}{n-p}\vec Y^T[\vec I -\vec X(\vec X^T\vec X)^{-1}\vec X^T]\vec Y σ^2=n−p1YT[I−X(XTX)−1XT]Y

def OLS_ar(x, p):

"""

@param x: the dataset, a numpy 1D array

@param p: lags, p in AR(p)

@return: a 1-D numpy array phi of shape p. phi[i] = parameter for x_{t-i} in a AR(p) model

"""

# construct Y

lenx = len(x)-p

Y = x[p:]

X = np.zeros((lenx,p))

for i in range(lenx):

for j in range(p):

X[i,j] = x[np.abs(p-j-1+i)]

return np.dot(np.dot(np.linalg.inv(np.dot(X.T,X)),X.T),Y)

- 极大似然法

设 { ϵ t } \{\epsilon_t\} {ϵt}为正态白噪声序列,则 X T = ( X 1 , X 2 , ⋯ , X n ) T ∼ N n ( 0 , Σ ) X^T=(X_1,X_2,\cdots,X_n)^T \sim N_n(\boldsymbol 0,\boldsymbol \Sigma) XT=(X1,X2,⋯,Xn)T∼Nn(0,Σ),似然函数

ln L ( α , σ 2 ) = ln 1 ( 2 π ) n / 2 ∣ Σ ∣ 1 / 2 e − 1 2 x T Σ − 1 x = ln 1 ( 2 π ) n / 2 − 1 2 ln ∣ Σ ∣ − 1 2 x T Σ − 1 x \begin{aligned} \text{ln}L(\boldsymbol \alpha,\sigma^2)&=\text{ln}\frac{1}{(2\pi)^{n/2}|\boldsymbol \Sigma|^{1/2}}\text{e}^{-\frac{1}{2}\boldsymbol x^T\boldsymbol \Sigma^{-1}\boldsymbol x} \\ &=\text{ln}\frac{1}{(2\pi)^{n/2}}-\frac{1}{2}\text{ln}|\boldsymbol \Sigma|-\frac{1}{2}\boldsymbol x^T\boldsymbol \Sigma^{-1}\boldsymbol x \end{aligned} lnL(α,σ2)=ln(2π)n/2∣Σ∣1/21e−21xTΣ−1x=ln(2π)n/21−21ln∣Σ∣−21xTΣ−1x

Σ \boldsymbol \Sigma Σ是 X T X^T XT的协方差矩阵,是一个 n n n阶正定实对称方阵, ∣ Σ ∣ |\boldsymbol \Sigma| ∣Σ∣是 Σ \boldsymbol \Sigma Σ的行列式

证明详情不再赘述:https://blog.csdn.net/sunbobosun56801/article/details/99753664

最后得到的结论即是极大似然估计和最小二乘估计和最小二乘估计结果是一致的。

参考文献

[1] 时间序列与多元统计分析(2016). 孙祝岭 编.

[2] https://github.com/charlieblue17/timeseries2018