分布式搜索引擎——elasticsearch(三)

目录

1、数据聚合

1、Bucket聚合

2、Metric聚合

3、RestClient操作

2、自动补全

completion suggester查询

3、数据同步

4、es集群

ES集群的脑裂

ES集群的分布式存储

ES集群的故障转移

1、数据聚合

聚合可以实现对文档数据的统计、分析、运算。聚合常见的有三类:

- 桶(Bucket)聚合:用来对文档做分组

- TermAggregation:按照文档字段值分组

- Date Histogram:按照日期阶梯分组,例如一周为一组,或者一月为一组

- 度量(Metric)聚合:用以计算一些值,比如:最大值、最小值、平均值等

- Avg:求平均值

- Max:求最大值

- Min:求最小值

- Stats:同时求max、min、avg、sum等

- 管道(pipeline)聚合:其它聚合的结果为基础做聚合

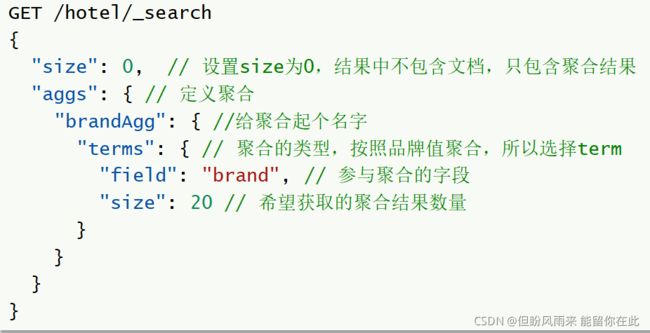

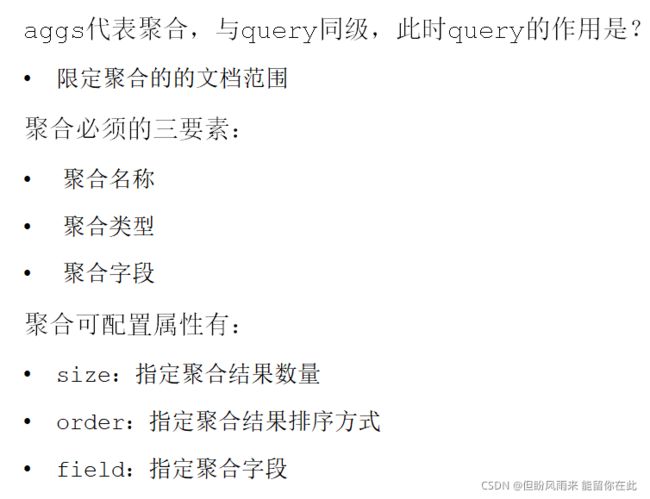

1、Bucket聚合

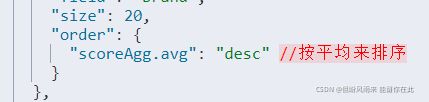

默认情况下,Bucket聚合会统计Bucket内的文档数量,记为_count,并且按照_count降序排序。

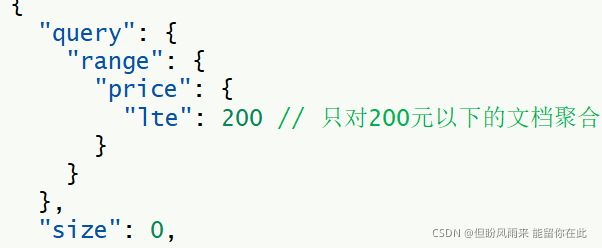

默认情况下,Bucket聚合是对索引库的所有文档做聚合,我们可以限定要聚合的文档范围,只要添加query条件即可

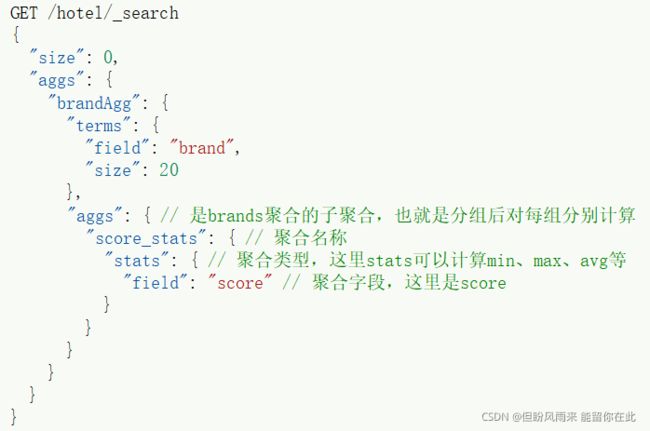

2、Metric聚合

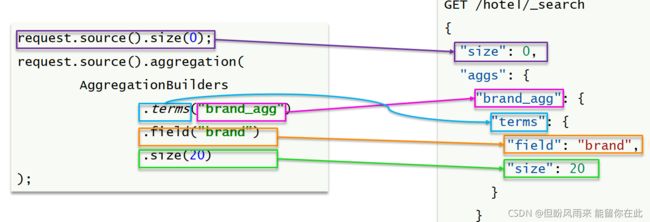

3、RestClient操作

request.source().size(0);

request.source().aggregation(

AggregationBuilders.terms("brandAgg").field("brand").size(20)

);

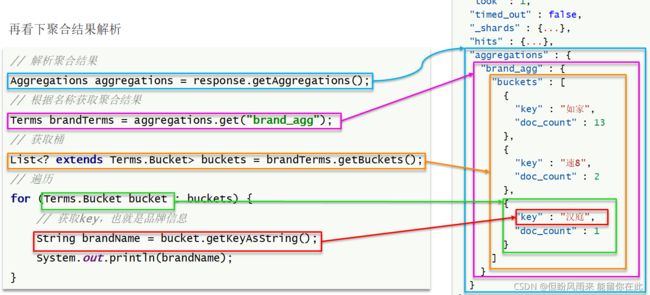

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

Aggregations aggregations = search.getAggregations();

Terms brandAgg = aggregations.get("brandAgg");

List list = brandAgg.getBuckets();

for (Terms.Bucket bucket : list) {

String key = bucket.getKeyAsString();

System.out.println(key);

}2、自动补全

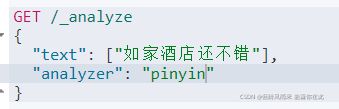

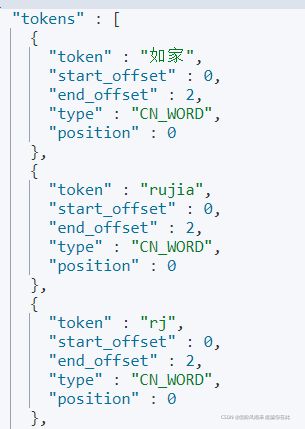

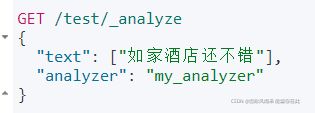

拼音分词器

我们可以在创建索引库时,通过settings来配置自定义的analyzer(分词器):

PUT /test

{

"settings": {

"analysis": {

"analyzer": {

"my_analyzer": {

"tokenizer": "ik_max_word",

"filter": "py"

}

},

"filter": {

"py": {

"type": "pinyin",

"keep_full_pinyin": false,

"keep_joined_full_pinyin": true,

"keep_original": true,

"limit_first_letter_length": 16,

"remove_duplicated_term": true,

"none_chinese_pinyin_tokenize": false

}

}

}

},

"mappings": {

"properties": {

"name":{

"type": "text",

"analyzer": "my_analyzer"

}

}

}

}拼音分词器适合在创建倒排索引的时候使用,但不能在搜索的时候使用。

因此字段在创建倒排索引时应该用my_analyzer分词器;字段在搜索时应该使用ik_smart分词器;

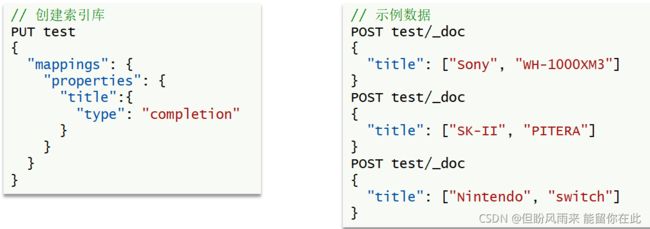

completion suggester查询

elasticsearch提供了Completion Suggester查询来实现自动补全功能。这个查询会匹配以用户输入内容开头的词条并返回。为了提高补全查询的效率,对于文档中字段的类型有一些约束:

- 参与补全查询的字段必须是completion类型。

- 字段的内容一般是用来补全的多个词条形成的数组。

PUT /hotel

{

"settings": {

"analysis": {

"analyzer": {

"text_anlyzer": {

"tokenizer": "ik_max_word",

"filter": "py"

},

"completion_analyzer": {

"tokenizer": "keyword",

"filter": "py"

}

},

"filter": {

"py": {

"type": "pinyin",

"keep_full_pinyin": false,

"keep_joined_full_pinyin": true,

"keep_original": true,

"limit_first_letter_length": 16,

"remove_duplicated_term": true,

"none_chinese_pinyin_tokenize": false

}

}

}

},

"mappings": {

"properties": {

"id":{

"type": "keyword"

},

"name":{

"type": "text",

"analyzer": "text_anlyzer",

"search_analyzer": "ik_smart",

"copy_to": "all"

},

"address":{

"type": "keyword",

"index": false

},

"price":{

"type": "integer"

},

"score":{

"type": "integer"

},

"brand":{

"type": "keyword",

"copy_to": "all"

},

"city":{

"type": "keyword"

},

"starName":{

"type": "keyword"

},

"business":{

"type": "keyword",

"copy_to": "all"

},

"location":{

"type": "geo_point"

},

"pic":{

"type": "keyword",

"index": false

},

"all":{

"type": "text",

"analyzer": "text_anlyzer",

"search_analyzer": "ik_smart"

},

"suggestion":{

"type": "completion",

"analyzer": "completion_analyzer"

}

}

}

} @Test

void test6() throws IOException {

SearchRequest request = new SearchRequest("hotel");

request.source().suggest(new SuggestBuilder().addSuggestion(

"suggestions",

SuggestBuilders.completionSuggestion("suggestion")

.prefix("h")

.skipDuplicates(true)

.size(10)

));

SearchResponse search = client.search(request, RequestOptions.DEFAULT);

Suggest suggest = search.getSuggest();

CompletionSuggestion suggestion = suggest.getSuggestion("suggestions");

List options = suggestion.getOptions();

for (CompletionSuggestion.Entry.Option option : options) {

String string = option.getText().toString();

System.out.println(string);

}

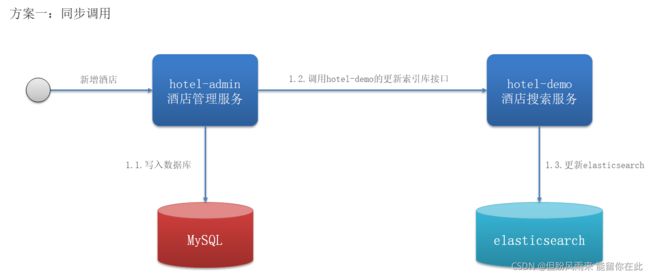

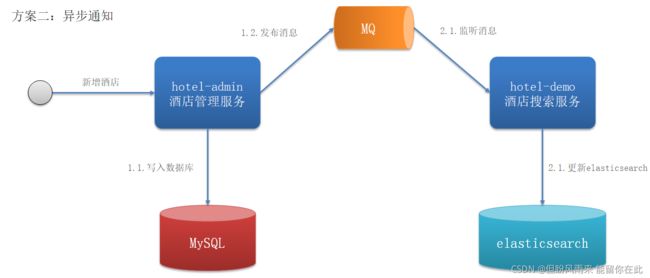

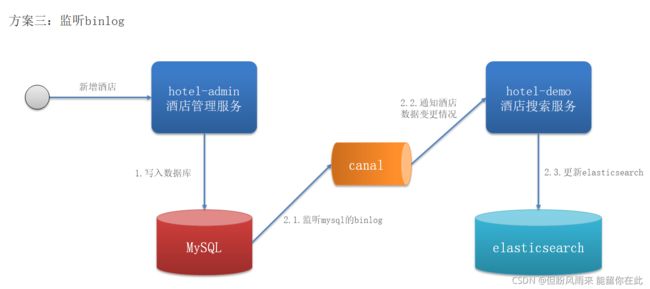

} 3、数据同步

elasticsearch中的酒店数据来自于mysql数据库,因此mysql数据发生改变时,elasticsearch也必须跟着改变,这个就是elasticsearch与mysql之间的数据同步。

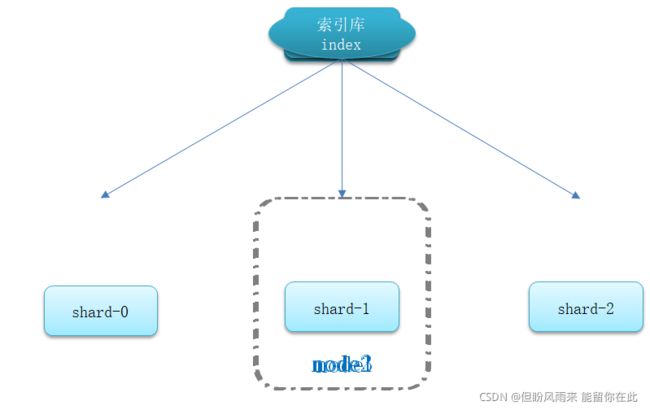

4、es集群

单机的elasticsearch做数据存储,必然面临两个问题:海量数据存储问题、单点故障问题。

- 海量数据存储问题:将索引库从逻辑上拆分为N个分片(shard),存储到多个节点

- 单点故障问题:将分片数据在不同节点备份(replica )

es运行需要修改一些linux系统权限,修改`/etc/sysctl.conf`文件

vi /etc/sysctl.conf添加下面的内容:

vm.max_map_count=262144然后执行命令,让配置生效:

sysctl -p编写一个docker-compose文件

version: '2.2'

services:

es01:

image: elasticsearch:7.12.1

container_name: es01

environment:

- node.name=es01

- cluster.name=es-docker-cluster //集群名称一样,会自动形成集群

- discovery.seed_hosts=es02,es03

- cluster.initial_master_nodes=es01,es02,es03

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

volumes:

- data01:/usr/share/elasticsearch/data

ports:

- 9200:9200

networks:

- elastic

es02:

image: elasticsearch:7.12.1

container_name: es02

environment:

- node.name=es02

- cluster.name=es-docker-cluster

- discovery.seed_hosts=es01,es03

- cluster.initial_master_nodes=es01,es02,es03

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

volumes:

- data02:/usr/share/elasticsearch/data

ports:

- 9201:9200

networks:

- elastic

es03:

image: elasticsearch:7.12.1

container_name: es03

environment:

- node.name=es03

- cluster.name=es-docker-cluster

- discovery.seed_hosts=es01,es02

- cluster.initial_master_nodes=es01,es02,es03

- "ES_JAVA_OPTS=-Xms512m -Xmx512m"

volumes:

- data03:/usr/share/elasticsearch/data

networks:

- elastic

ports:

- 9202:9200

volumes:

data01:

driver: local

data02:

driver: local

data03:

driver: local

networks:

elastic:

driver: bridge启动集群

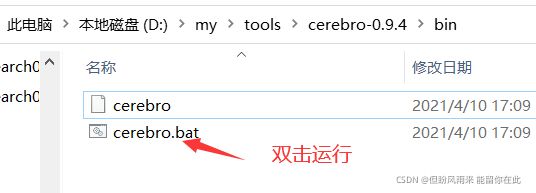

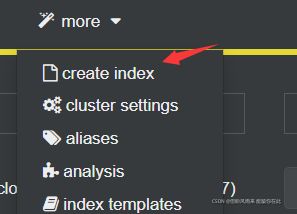

docker-compose up -d集群状态监控

使用cerebro来监控es集群状态,官方网址:https://github.com/lmenezes/cerebro

localhost:9000

或

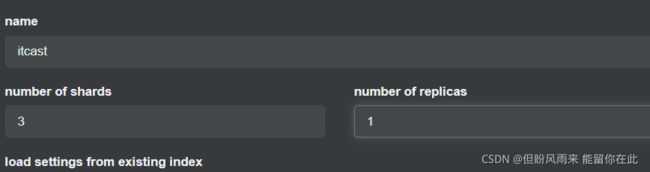

PUT /itcast

{

"settings": {

"number_of_shards": 3, // 分片数量

"number_of_replicas": 1 // 副本数量,给每个片加一个副本

},

"mappings": {

"properties": {

// mapping映射定义 ...

}

}

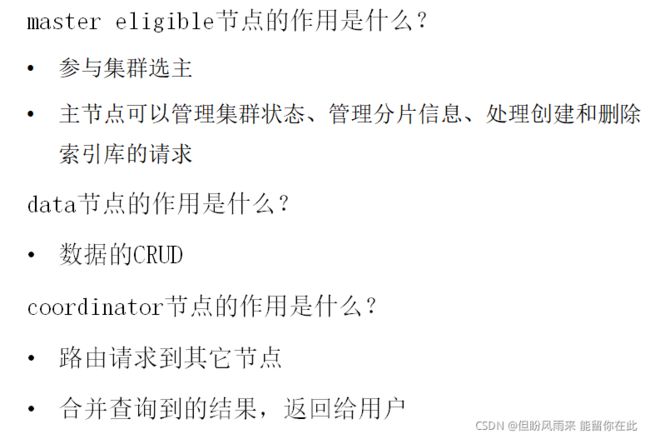

}elasticsearch中的每个节点角色都有自己不同的职责,因此建议集群部署时,每个节点都有独立的角色。

ES集群的脑裂

默认情况下,每个节点都是master eligible节点,因此一旦master节点宕机,其它候选节点会选举一个成为主节点。当主节点与其他节点网络故障时,可能发生脑裂问题。

为了避免脑裂,需要要求选票超过 ( eligible节点数量 + 1 )/ 2 才能当选为主,因此eligible节点数量最好是奇数。对应配置项是discovery.zen.minimum_master_nodes,在es7.0以后,已经成为默认配置,因此一般不会发生脑裂问题

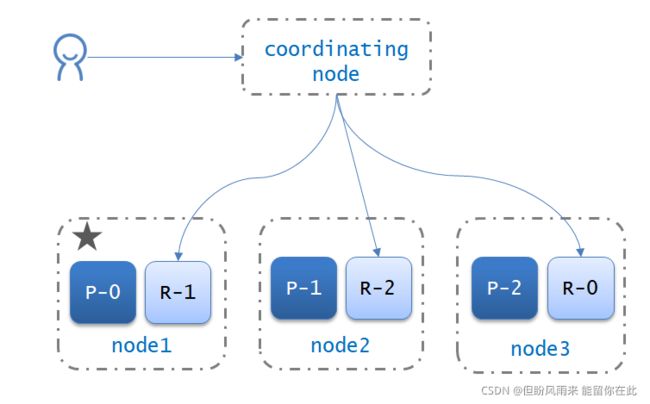

ES集群的分布式存储

当新增文档时,应该保存到不同分片,保证数据均衡,那么coordinating node如何确定数据该存储到哪个分片呢?

elasticsearch会通过hash算法来计算文档应该存储到哪个分片:

- _routing默认是文档的id

- 算法与分片数量有关,因此索引库一旦创建,分片数量不能修改!

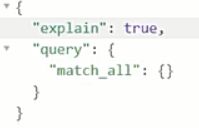

elasticsearch的查询分成两个阶段:

- scatter phase:分散阶段,coordinating node会把请求分发到每一个分片

- gather phase:聚集阶段,coordinating node汇总data node的搜索结果,并处理为最终结果集返回给用户

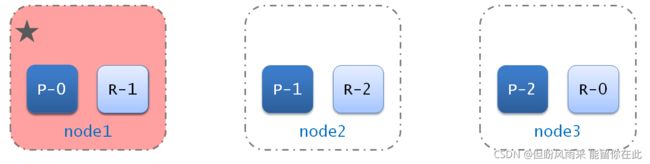

ES集群的故障转移

集群的master节点会监控集群中的节点状态,如果发现有节点宕机,会立即将宕机节点的分片数据迁移到其它节点,确保数据安全,这个叫做故障转移。