11. Qt: Implementing an Audio Player with ffmpeg + QAudioOutput

1. Preface

QAudioOutput only accepts raw PCM data as input, so files in formats such as MP3, WAV, and AAC must be demuxed and decoded before it can play them.
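To make "raw data" concrete, the sketch below works out how many bytes interleaved PCM occupies and how much playback time a buffer of a given size holds. The 16-bit, 2-channel, 44100 Hz format and the 100000-byte buffer are the values used later in this article; the function names `pcmBytes` and `pcmDurationMs` are hypothetical helpers, not part of Qt or ffmpeg.

```cpp
#include <cstdint>

// Bytes occupied by `samples` sample frames of interleaved PCM
// (one frame = one sample for every channel).
constexpr std::int64_t pcmBytes(std::int64_t samples, int channels, int bytesPerSample)
{
    return samples * channels * bytesPerSample;
}

// Playback time in milliseconds covered by `bytes` of PCM in the given format
// (integer division, so the result is rounded down).
constexpr std::int64_t pcmDurationMs(std::int64_t bytes, int sampleRate,
                                     int channels, int bytesPerSample)
{
    return bytes * 1000 / pcmBytes(sampleRate, channels, bytesPerSample);
}
```

With these helpers, `pcmBytes(1024, 2, 2)` gives 4096 bytes for a 1024-sample stereo frame, and `pcmDurationMs(100000, 44100, 2, 2)` gives 566, so a 100000-byte buffer holds a little over half a second of audio.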

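Qt does provide the QMediaPlayer class, which handles demuxing and decoding itself, but its codec support comes from the platform: if the platform cannot play a file, neither can QMediaPlayer. Feel free to try it yourself.

So in this article we use ffmpeg + QAudioOutput to implement a simple audio player.

Before reading on, you should be familiar with the following: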

- 2. AVFormatContext and AVInputFormat

- 3. Using AVPacket

- 4. FFMPEG: AVFrame

- 5. AVStream and AVCodecParameters

- 6. AVCodecContext and AVCodec

- 7. Audio resampling with SwrContext

- 8. ffmpeg: commonly used basics

- 9. Downloading ffmpeg and building both 32-bit and 64-bit targets with Qt

- 10. Qt: Using the QAudioOutput class

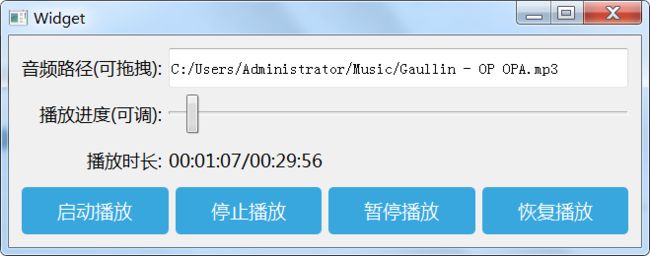

2.界面展示

3.效果展示

下载链接(已经把ffmpeg移植好了,可以直接编译): 10.QT-QAudioOutput类使用

没积分可以加入Qml / Qt / C++技术交流群获取源码 : 779866667

4.代码流程

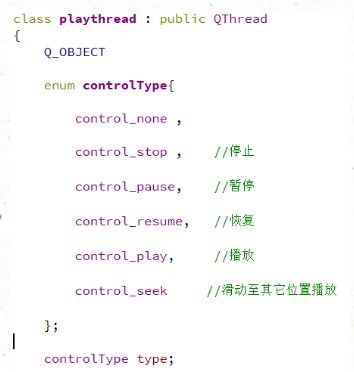

首先创建一个playthread线程类,然后在线程中,不断解数据,重采样,并输入到QAudioOutput的缓冲区进行播放.以及处理界面发来的命令
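The command hand-off between the UI and the playback thread can be sketched as a single shared flag. The article's playthread stores the command in a member named `type`; playthread.h is not shown, so whether that member is atomic is unknown. The version below assumes `std::atomic` so that a write from the UI thread and a read from the playback thread are well defined. `Control`, `ControlFlag`, and `shouldBreak` are hypothetical names used only for illustration.

```cpp
#include <atomic>

// Commands the UI can send to the playback thread.
enum class Control { None, Play, Pause, Resume, Seek, Stop };

class ControlFlag
{
public:
    // Called from the UI thread: publish a new command.
    void post(Control c) { m_cmd.store(c, std::memory_order_release); }

    // Called from the playback thread: look at the pending command without consuming it.
    Control peek() const { return m_cmd.load(std::memory_order_acquire); }

    // Called from the playback thread: fetch the pending command and reset it to None.
    Control take() { return m_cmd.exchange(Control::None, std::memory_order_acq_rel); }

private:
    std::atomic<Control> m_cmd{Control::None};
};

// A new Play request (restart) or a Stop request both end the current decode loop.
inline bool shouldBreak(Control c)
{
    return c == Control::Play || c == Control::Stop;
}
```

The decode loop would call `shouldBreak(flag.peek())` at each iteration, mirroring how the article's loop polls for play and stop requests.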

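4.1 The playthread thread class

The core function of the playthread class is runPlay(), which loops continuously: decode, resample, and write the result into the QAudioOutput buffer for playback.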
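runPlay() converts between milliseconds and stream timestamps in two places: when it seeks, and when it reports the current position. ffmpeg expresses timestamps in units of the stream's `time_base`, a fraction of a second. The sketch below shows the arithmetic with integers only; `Rational` is a local stand-in for ffmpeg's `AVRational` so the example compiles without ffmpeg, and `ticksToMs` / `msToTicks` are hypothetical names. In real code, ffmpeg's own `av_rescale_q()` does this kind of conversion.

```cpp
#include <cstdint>

// Local stand-in for ffmpeg's AVRational: one tick lasts num/den seconds.
struct Rational { int num; int den; };

// Stream timestamp (in time_base ticks) -> milliseconds, rounded down.
inline std::int64_t ticksToMs(std::int64_t ticks, Rational tb)
{
    return ticks * 1000 * tb.num / tb.den;
}

// Milliseconds -> stream timestamp (in time_base ticks), rounded down.
inline std::int64_t msToTicks(std::int64_t ms, Rational tb)
{
    return ms * tb.den / (1000LL * tb.num);
}
```

For a stream whose time_base is 1/44100, `msToTicks(1000, {1, 44100})` is 44100 and `ticksToMs(44100, {1, 44100})` is 1000. Integer arithmetic avoids the rounding surprises of going through `double`, at the cost of truncating toward zero.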

playthread.cpp is shown below:

#include "playthread.h"

playthread::playthread()
{
    audio = NULL;
    type = control_none;
}

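bool playthread::initAudio(int SampleRate)
{
    QAudioFormat format;

    if (audio != NULL)
        return true;

    format.setSampleRate(SampleRate);                 // sample rate
    format.setChannelCount(2);                        // number of channels
    format.setSampleSize(16);                         // 16-bit samples
    format.setCodec("audio/pcm");                     // output is raw PCM
    format.setByteOrder(QAudioFormat::LittleEndian);  // little-endian
    format.setSampleType(QAudioFormat::SignedInt);    // signed integers, matching AV_SAMPLE_FMT_S16

    QAudioDeviceInfo info(QAudioDeviceInfo::defaultOutputDevice()); // use the default output device

    // foreach (int count, info.supportedChannelCounts())
    // {
    //     qDebug() << "Channel counts supported by the output device:" << count;
    // }

    // The lines between the debug loop above and setBufferSize() were lost from
    // this copy of the article. The format check and the QAudioOutput construction
    // below are a reconstruction, not the author's exact code.
    if (!info.isFormatSupported(format))
        return false;

    audio = new QAudioOutput(info, format);
    audio->setBufferSize(100000);

    return true;
}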

void playthread::play(QString filePath)
{
    this->filePath = filePath;
    type = control_play;

    if (!this->isRunning())
    {
        this->start();
    }
}

void playthread::stop()
{
    if (this->isRunning())
    {
        type = control_stop;
    }
}

void playthread::pause()
{
    if (this->isRunning())
    {
        type = control_pause;
    }
}

void playthread::resume()
{
    if (this->isRunning())
    {
        type = control_resume;
    }
}

void playthread::seek(int value)
{
    if (this->isRunning())
    {
        seekMs = value;
        type = control_seek;
    }
}

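void playthread::debugErr(QString prefix, int err) // look up the message for an error code and print it
{
    char errbuf[512] = {0};

    av_strerror(err, errbuf, sizeof(errbuf));
    qDebug() << prefix << ":" << errbuf;
}

// The end of debugErr() and the start of runIsBreak() were fused together in this
// copy of the article; the signature and the first lines below are reconstructed
// from how the function is called and from the surviving braces.
bool playthread::runIsBreak() // handle control commands; returns true when runPlay() should stop
{
    bool ret = false;

    if (type == control_pause) // pause
    {
        while (type == control_pause)
        {
            audio->suspend();
            msleep(500);
        }

        if (type == control_resume)
        {
            audio->resume();
        }
    }

    if (type == control_play) // restart playback
    {
        ret = true;
        if (audio->state() == QAudio::ActiveState)
            audio->stop();
    }

    if (type == control_stop) // stop
    {
        ret = true;
        if (audio->state() == QAudio::ActiveState)
            audio->stop();
    }

    return ret;
}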

void playthread::runPlay()
{
    int ret;
    int destMs, currentMs;

    if (audio == NULL)
    {
        emit ERROR("The output device does not support this format; cannot play audio");
        return;
    }

    // Initialize the network library (allows opening rtsp, rtmp, and http streams)
    avformat_network_init();

    AVFormatContext *pFmtCtx = NULL;
    ret = avformat_open_input(&pFmtCtx, this->filePath.toLocal8Bit().data(), NULL, NULL); // open the file and allocate/initialize the AVFormatContext
    if (ret != 0)
    {
        debugErr("avformat_open_input", ret);
        return;
    }

    ret = avformat_find_stream_info(pFmtCtx, NULL); // read the stream information
    if (ret < 0)
    {
        debugErr("avformat_find_stream_info", ret);
        avformat_close_input(&pFmtCtx);
        return;
    }

    int audioindex = -1;
    audioindex = av_find_best_stream(pFmtCtx, AVMEDIA_TYPE_AUDIO, -1, -1, NULL, 0);
    qDebug() << "audioindex:" << audioindex;
    if (audioindex < 0) // no audio stream found
    {
        debugErr("av_find_best_stream", audioindex);
        avformat_close_input(&pFmtCtx);
        return;
    }

    const AVCodec *acodec = avcodec_find_decoder(pFmtCtx->streams[audioindex]->codecpar->codec_id); // look up the decoder
    AVCodecContext *acodecCtx = avcodec_alloc_context3(acodec); // allocate an AVCodecContext bound to acodec
    avcodec_parameters_to_context(acodecCtx, pFmtCtx->streams[audioindex]->codecpar); // copy the stream parameters into the AVCodecContext

    ret = avcodec_open2(acodecCtx, NULL, NULL); // open the decoder; avcodec_alloc_context3(acodec) already bound the codec, so the second argument may be NULL
    if (ret != 0)
    {
        debugErr("avcodec_open2", ret);
        avcodec_free_context(&acodecCtx);
        avformat_close_input(&pFmtCtx);
        return;
    }

    SwrContext *swrctx = NULL;
    swrctx = swr_alloc_set_opts(swrctx, av_get_default_channel_layout(2), AV_SAMPLE_FMT_S16, 44100,
                                acodecCtx->channel_layout, acodecCtx->sample_fmt, acodecCtx->sample_rate, 0, NULL);
    swr_init(swrctx);

    destMs = av_q2d(pFmtCtx->streams[audioindex]->time_base) * 1000 * pFmtCtx->streams[audioindex]->duration;

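    qDebug() << "Bit rate:" << acodecCtx->bit_rate;
    qDebug() << "Sample format:" << acodecCtx->sample_fmt;
    qDebug() << "Channels:" << acodecCtx->channels;
    qDebug() << "Sample rate:" << acodecCtx->sample_rate;
    qDebug() << "Duration (ms):" << destMs;
    qDebug() << "Decoder:" << acodec->name; // label and owner of `name` reconstructed; only "name" survived in this copy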

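    AVPacket *packet = av_packet_alloc();
    AVFrame *frame = av_frame_alloc();

    audio->stop();
    QIODevice *io = audio->start();

    while (1)
    {
        if (runIsBreak())
            break;

        if (type == control_seek)
        {
            av_seek_frame(pFmtCtx, audioindex, seekMs / (double)1000 / av_q2d(pFmtCtx->streams[audioindex]->time_base), AVSEEK_FLAG_BACKWARD);
            type = control_none;
            emit seekOk();
        }

        ret = av_read_frame(pFmtCtx, packet);
        if (ret != 0)
        {
            debugErr("av_read_frame", ret);
            emit duration(destMs, destMs);
            break;
        }

        if (packet->stream_index == audioindex)
        {
            // Decode one packet
            ret = avcodec_send_packet(acodecCtx, packet);
            av_packet_unref(packet);
            if (ret != 0)
            {
                debugErr("avcodec_send_packet", ret);
                continue;
            }

            while (avcodec_receive_frame(acodecCtx, frame) == 0)
            {
                if (runIsBreak())
                    break;

                uint8_t *data[2] = { 0 };
                int byteCnt = frame->nb_samples * 2 * 2;    // samples * 2 channels * 2 bytes per sample
                unsigned char *pcm = new uint8_t[byteCnt];

                data[0] = pcm; // the output format AV_SAMPLE_FMT_S16 is packed, so the L and R samples are interleaved in data[0]

                ret = swr_convert(swrctx,
                                  data, frame->nb_samples,                            // output
                                  (const uint8_t **)frame->data, frame->nb_samples);  // input
                if (ret <= 0) // nothing was produced, or an error occurred
                {
                    delete[] pcm;
                    continue;
                }
                byteCnt = ret * 2 * 2; // swr_convert() returns the number of samples actually written per channel

                // Send the resampled data to the output device for playback
                while (audio->bytesFree() < byteCnt)
                {
                    if (runIsBreak())
                        break;
                    msleep(10);
                }

                if (!runIsBreak())
                    io->write((const char *)pcm, byteCnt);

                currentMs = av_q2d(pFmtCtx->streams[audioindex]->time_base) * 1000 * frame->pts;

                // The article text is cut off after the line above. Everything from here
                // to the end of runPlay() is a reconstruction (report progress, free the
                // buffer, close the loops, release the ffmpeg objects), not the author's
                // original code. run(), which would call initAudio() and runPlay(), is
                // also missing from this copy.
                emit duration(currentMs, destMs);
                delete[] pcm;
            }
        }
        else
        {
            av_packet_unref(packet); // not an audio packet: release it
        }
    }

    av_frame_free(&frame);
    av_packet_free(&packet);
    swr_free(&swrctx);
    avcodec_free_context(&acodecCtx);
    avformat_close_input(&pFmtCtx);
}

4.2 The widget UI class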

The UI side has very little to do. widget.cpp is shown below:

#include "widget.h"
#include "ui_widget.h"
#include <QDebug>
#include <QDragEnterEvent>
#include <QDropEvent>
#include <QMimeData>
#include <QUrl>

Widget::Widget(QWidget *parent)
    : QWidget(parent)
    , ui(new Ui::Widget)
{
    ui->setupUi(this);

    this->setAcceptDrops(true);

    thread = new playthread();
    connect(thread, SIGNAL(duration(int,int)), this, SLOT(onDuration(int,int)));
    connect(thread, SIGNAL(seekOk()), this, SLOT(onSeekOk()));
    thread->start();

    sliderSeeking = false;
}

Widget::~Widget()
{
    delete ui;
    thread->stop();
}

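void Widget::onSeekOk()
{
    sliderSeeking = false;
}

void Widget::onDuration(int currentMs, int destMs) // playback position and total duration
{
    static int currentMs1 = -1, destMs1 = -1;

    if (currentMs1 == currentMs && destMs1 == destMs)
    {
        return;
    }

    currentMs1 = currentMs;
    destMs1 = destMs;

    qDebug() << "onDuration:" << currentMs << destMs;

    // The lines that built currentTime and destTime were lost from this copy of
    // the article; the "mm:ss" format below is an assumption.
    QString currentTime = QString("%1:%2").arg(currentMs / 60000, 2, 10, QChar('0'))
                                          .arg(currentMs / 1000 % 60, 2, 10, QChar('0'));
    QString destTime = QString("%1:%2").arg(destMs / 60000, 2, 10, QChar('0'))
                                       .arg(destMs / 1000 % 60, 2, 10, QChar('0'));

    ui->label_duration->setText(currentTime + "/" + destTime);

    if (!sliderSeeking) // the user is not dragging the slider
    {
        ui->slider->setMaximum(destMs);
        ui->slider->setValue(currentMs);
    }
}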

void Widget::dragEnterEvent(QDragEnterEvent *event)
{
    if (event->mimeData()->hasUrls()) // check the type of the data being dragged
    {
        event->acceptProposedAction();
    }
    else
    {
        event->ignore();
    }
}

void Widget::dropEvent(QDropEvent *event)
{
    if (event->mimeData()->hasUrls()) // check the type of the data being dropped
    {
        QList<QUrl> List = event->mimeData()->urls();

        if (List.length() != 0)
        {
            ui->line_audioPath->setText(List[0].toLocalFile());
        }
    }
    else
    {
        event->ignore();
    }
}

void Widget::on_btn_start_clicked()
{
    sliderSeeking = false;
    thread->play(ui->line_audioPath->text());
}

void Widget::on_btn_stop_clicked()
{
    thread->stop();
}

void Widget::on_btn_pause_clicked()
{
    thread->pause();
}

void Widget::on_btn_resume_clicked()
{
    thread->resume();
}

void Widget::on_slider_sliderPressed()
{
    sliderSeeking = true;
}

void Widget::on_slider_sliderReleased()
{
    thread->seek(ui->slider->value());
}
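onDuration() receives the position and the total length in milliseconds and shows them in a label as `currentTime + "/" + destTime`. The display format the author used is not recoverable from this copy, so the sketch below assumes a plain "mm:ss" format and uses only the C++ standard library. `formatMs` is a hypothetical helper name; in the widget the result would be wrapped with `QString::fromStdString()`.

```cpp
#include <cstdio>
#include <string>

// Format a millisecond count as "mm:ss". Negative inputs are clamped to zero.
// Minutes are not wrapped at 60, so a 75-minute file shows as "75:00".
inline std::string formatMs(long long ms)
{
    if (ms < 0)
        ms = 0;

    const long long totalSec = ms / 1000;
    char buf[32];
    std::snprintf(buf, sizeof(buf), "%02lld:%02lld", totalSec / 60, totalSec % 60);
    return buf;
}
```

For example, `formatMs(61000)` yields "01:01", so a label could be built as `formatMs(currentMs) + "/" + formatMs(destMs)`.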

Next chapter: 12. Qt: Displaying YUV frames with QOpenGLWidget and rendering YUV through QOpenGLTexture