Neutron Software Architecture: Design and Implementation

Neutron's Software Architecture Design

-

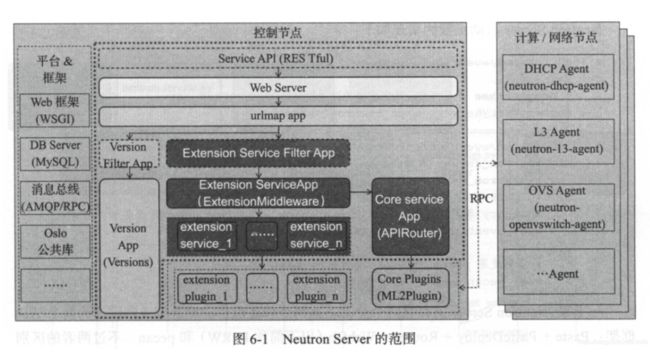

Neutron 作为一个分布式系统,采用了主从分布式架构设计,具有负责在中央控制的 Neutron Server 组件(接收北向 API 请求,控制逻辑,下达任务),也有负责在地方执行的 Agents 组件(执行任务,反馈结果)。Neutron Server 和 Agents 之间采用 MQ/RPC 异步通讯模型。

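- As an open-source Network as a Service project, Neutron must first be compatible with many different Network Providers (underlying network technologies), for example Linux Bridge, Open vSwitch, and SDN Controllers. Neutron Server therefore also adopts a Multi-Plugins architecture, in which each plugin is paired with its corresponding Agents.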

Neutron API

The Neutron API consists of two parts, a Web Server and a WSGI Application:

- Web Server: Neutron Server is first of all a Web Server that exposes a RESTful API over HTTP.

- WSGI Application: WSGI is the standard interface for integrating a Python application with a Web Server.
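To make the split between Web Server and WSGI Application concrete, here is a minimal sketch that uses only the Python standard library. The names `simple_app` and `call_app` are made up for illustration and are not Neutron code; `call_app` merely plays the role of the Web Server by building the `environ` dict and invoking the application.

```python
from wsgiref.util import setup_testing_defaults


def simple_app(environ, start_response):
    """A WSGI application: any callable taking (environ, start_response)."""
    body = b'{"versions": []}'
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]


def call_app(app, path="/"):
    """Play the Web Server side: build an environ and invoke the app."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the mandatory WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app(environ, start_response))
    return captured["status"], captured["headers"], body
```

Calling `call_app(simple_app)` returns the status line, the headers, and the body that a real server would send back to the HTTP client.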

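At the Neutron API level, to keep Services (service types) extensible, the API is further divided into two categories:

- Core API: provides the standard network services and maps to Core Plugins, which include the community's ML2 Plugin and third-party Vendor Plugins. It exposes the standard Network, Subnet, and Port API resource types.

- Resource and Attribute Extension API: provides extended network services and maps to Service Plugins, including the community's Routing, LB, VPN, and FW plugins. It exposes API resource types such as Router, LBaaS, VPNaaS, and FWaaS.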

Web Server and WSGI Application

Neutron Server runs as the neutron-server.service process, which contains the Web Server, WSGI Application, Plugins, RPC, and DB ORM modules.

The entry point of neutron-server.service:

# /opt/stack/neutron/neutron/cmd/eventlet/server/__init__.py
def main():
    server.boot_server(wsgi_eventlet.eventlet_wsgi_server)

# /opt/stack/neutron/neutron/server/wsgi_eventlet.py
def eventlet_wsgi_server():
    # Get the Neutron Server API WSGI Application
    neutron_api = service.serve_wsgi(service.NeutronApiService)
    # Start the API and RPC services
    start_api_and_rpc_workers(neutron_api)

By loading a WSGI module, the Web Server is split into a WSGI Server, WSGI Middleware, and a WSGI Application. Here we focus on Neutron Server's WSGI Application. The key code is:

# /opt/stack/neutron/neutron/common/config.py
def load_paste_app(app_name):
    """Builds and returns a WSGI app from a paste config file.

    :param app_name: Name of the application to load
    """
    # oslo_service wrapper: parses api-paste.ini and loads the app instances it defines
    loader = wsgi.Loader(cfg.CONF)

    # Log the values of registered opts
    if cfg.CONF.debug:
        cfg.CONF.log_opt_values(LOG, logging.DEBUG)
    # The app_name argument is `neutron`
    app = loader.load_app(app_name)
    return app

After a series of steps inside the Paste library (a set of Python web development tools), control reaches the pipeline_factory function and, from there, the application factory below.

# /opt/stack/neutron/neutron/pecan_wsgi/app.py
def v2_factory(global_config, **local_config):
    # The root "/" controller of the REST API is root.V2Controller
    app = pecan.make_app(root.V2Controller(),
                         debug=False,
                         force_canonical=False,
                         hooks=app_hooks,
                         guess_content_type_from_ext=True)
    # Initialize Neutron Server
    startup.initialize_all()
    return app

At this point we have a complete Neutron Server process, and we have also found the "/" entry point for API requests.

Core API and Extension API

Once Neutron Server is running, we can use curl to list the Core APIs and Extension APIs it currently supports.
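The same query can be prepared from Python. The sketch below only builds the request object, without sending it. The endpoint `http://controller:9696` (9696 being Neutron's usual API port) and the token value are assumptions, and the helper name `build_extensions_request` is made up for illustration; `/v2.0/extensions` is the Networking API v2.0 path that lists extensions.

```python
import urllib.request


def build_extensions_request(endpoint, token):
    """Build (but do not send) a GET request for the extension list."""
    url = endpoint.rstrip("/") + "/v2.0/extensions"
    headers = {
        "X-Auth-Token": token,          # Keystone token, assumed to be obtained elsewhere
        "Accept": "application/json",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


# Assumed endpoint and placeholder token, for illustration only.
req = build_extensions_request("http://controller:9696", "TOKEN")
# To actually send it against a live deployment:
#     with urllib.request.urlopen(req) as resp:
#         print(resp.read().decode())
```

Replacing `extensions` with `networks`, `subnets`, or `ports` in the path queries the Core API resources in the same way.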

Neutron Multi-Plugins

Matching the Neutron API, Neutron Multi-Plugins also come in two types, Core Plugins and Service Plugins. Edit neutron.conf to choose which Core and Service Plugins to enable.

Typically ML2 is chosen as the Core Plugin, while several Service Plugins can be specified at once, such as L3RouterPlugin, FWaaSPlugin, LBaaSPlugin, and VPNaaSPlugin.

[DEFAULT]
...
# The core plugin Neutron will use (string value)
core_plugin = ml2

# The service plugins Neutron will use (list value)
service_plugins = neutron.services.l3_router.l3_router_plugin.L3RouterPlugin

Neutron Server loads the configured Plugins with the following code:

# /opt/stack/neutron/neutron/manager.py
class NeutronManager(object):
    """Neutron's Manager class.

    Neutron's Manager class is responsible for parsing a config file and
    instantiating the correct plugin that concretely implements
    neutron_plugin_base class.
    """
    ...
    def __init__(self, options=None, config_file=None):
        ...
        # Read the Core Plugin from the config file, e.g. ml2
        plugin_provider = cfg.CONF.core_plugin
        LOG.info("Loading core plugin: %s", plugin_provider)
        # NOTE(armax): keep hold of the actual plugin object
        # Instantiate the Core Plugin class, e.g. the Ml2Plugin class
        plugin = self._get_plugin_instance(CORE_PLUGINS_NAMESPACE,
                                           plugin_provider)
        # Register the Core Plugin in the Plugins Directory
        directory.add_plugin(lib_const.CORE, plugin)
        ...
        # load services from the core plugin first
        # First load the Extension Plugins that the Core Plugin supports by default
        self._load_services_from_core_plugin(plugin)
        # Then load the Extension Plugins specified in the config file
        self._load_service_plugins()
        ...

    def _load_services_from_core_plugin(self, plugin):
        """Puts core plugin in service_plugins for supported services."""
        LOG.debug("Loading services supported by the core plugin")

        # supported service types are derived from supported extensions
        # These come from the Extension Plugins the Core Plugin supports by default (e.g. lbaas, fwaas, vpnaas, router, qos)
        for ext_alias in getattr(plugin, "supported_extension_aliases", []):
            if ext_alias in constants.EXT_TO_SERVICE_MAPPING:
                service_type = constants.EXT_TO_SERVICE_MAPPING[ext_alias]
                # Register the Extension Plugin in the Plugins Directory
                directory.add_plugin(service_type, plugin)
                LOG.info("Service %s is supported by the core plugin",
                         service_type)

    def _load_service_plugins(self):
        """Loads service plugins.

        Starts from the core plugin and checks if it supports
        advanced services then loads classes provided in configuration.
        """
        # Read the Extension Plugins from the config file
        plugin_providers = cfg.CONF.service_plugins
        # If the Core Plugin uses Neutron's native datastore, some default Extension Plugins are added as well (e.g. tag, timestamp, flavors, revisions)
        plugin_providers.extend(self._get_default_service_plugins())
        LOG.debug("Loading service plugins: %s", plugin_providers)
        for provider in plugin_providers:
            if provider == '':
                continue

            LOG.info("Loading Plugin: %s", provider)
            # Instantiate the Extension Plugin class
            plugin_inst = self._get_plugin_instance('neutron.service_plugins',
                                                    provider)

            # only one implementation of svc_type allowed
            # specifying more than one plugin
            # for the same type is a fatal exception
            # TODO(armax): simplify this by moving the conditional into the
            # directory itself.
            plugin_type = plugin_inst.get_plugin_type()
            if directory.get_plugin(plugin_type):
                raise ValueError(_("Multiple plugins for service "
                                   "%s were configured") % plugin_type)

            # Register the Extension Plugin in the Plugins Directory
            directory.add_plugin(plugin_type, plugin_inst)

            # search for possible agent notifiers declared in service plugin
            # (needed by agent management extension)
            # Get the Core Plugin instance
            plugin = directory.get_plugin()
            if (hasattr(plugin, 'agent_notifiers') and
                    hasattr(plugin_inst, 'agent_notifiers')):
                # Merge the Extension Plugin's agent notifiers into the Core Plugin's agent_notifiers dict
                plugin.agent_notifiers.update(plugin_inst.agent_notifiers)

            # disable incompatible extensions in core plugin if any
            utils.disable_extension_by_service_plugin(plugin, plugin_inst)

At this point Neutron has instantiated the selected Core Plugin and Extension Plugins and registered them in the Plugins Directory.
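The registration rule enforced above, at most one plugin per service type, can be reproduced in a few lines. The sketch below is a simplified, hypothetical stand-in: `PluginDirectory`, `load_service_plugins`, and `FakeL3Plugin` are illustrative names, and the real Plugins Directory carries more state than a single dict.

```python
class PluginDirectory:
    """Hypothetical, simplified stand-in for the Plugins Directory."""

    CORE = "CORE"

    def __init__(self):
        self._plugins = {}

    def add_plugin(self, alias, plugin):
        self._plugins[alias] = plugin

    def get_plugin(self, alias=CORE):
        # Without an alias, return the Core Plugin (or None if unset).
        return self._plugins.get(alias)


def load_service_plugins(directory, plugin_instances):
    """Register each plugin, refusing a second plugin of the same type."""
    for plugin_inst in plugin_instances:
        plugin_type = plugin_inst.get_plugin_type()
        if directory.get_plugin(plugin_type):
            raise ValueError(
                "Multiple plugins for service %s were configured" % plugin_type)
        directory.add_plugin(plugin_type, plugin_inst)


class FakeL3Plugin:
    """Illustrative service plugin; the type string is a made-up label."""

    def get_plugin_type(self):
        return "L3_ROUTER_NAT"
```

Registering one `FakeL3Plugin` succeeds, while registering a second one raises `ValueError`, which mirrors the fatal "Multiple plugins for service" error in the listing.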

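Once the Plugins are registered, the corresponding Plugin Worker processes or coroutines are started as part of starting neutron-server.service. As mentioned earlier, Neutron Plugins and their Neutron Agents communicate over MQ/RPC, so there are two types of Plugin Workers:

- RpcWorker: the RPC Worker for the core business logic. The number of RPC Workers is set with the rpc_workers option in neutron.conf.

- RpcReportsWorker: only handles RPC state reports. The rpc_state_report_workers option in neutron.conf controls both whether dedicated state-report workers are started (any value greater than 0) and how many of them there are.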

# /opt/stack/neutron/neutron/server/wsgi_eventlet.py
# Runs when neutron-server.service starts
def start_api_and_rpc_workers(neutron_api):
    try:
        # Get the Launcher instance for all RPC and Plugin workers
        worker_launcher = service.start_all_workers()

        # Create a green thread pool
        pool = eventlet.GreenPool()
        # Run the WSGI Application in a green thread
        api_thread = pool.spawn(neutron_api.wait)
        # Run the RPC and Plugin workers in a green thread
        plugin_workers_thread = pool.spawn(worker_launcher.wait)

        # api and other workers should die together. When one dies,
        # kill the other.
        api_thread.link(lambda gt: plugin_workers_thread.kill())
        plugin_workers_thread.link(lambda gt: api_thread.kill())

        pool.waitall()
    except NotImplementedError:
        LOG.info("RPC was already started in parent process by "
                 "plugin.")

        neutron_api.wait()

# /opt/stack/neutron/neutron/service.py
def _get_rpc_workers():
    # Get the Core Plugin instance from the Plugins Directory
    plugin = directory.get_plugin()
    # Get the list of Service Plugins (including the Core Plugin) from the Plugins Directory
    service_plugins = directory.get_plugins().values()
    ...
    # passing service plugins only, because core plugin is among them
    # Create the RpcWorker instance for all Plugins (core + service)
    rpc_workers = [RpcWorker(service_plugins,
                             worker_process_count=cfg.CONF.rpc_workers)]

    if (cfg.CONF.rpc_state_report_workers > 0 and
            plugin.rpc_state_report_workers_supported()):
        rpc_workers.append(
            RpcReportsWorker(
                [plugin],
                worker_process_count=cfg.CONF.rpc_state_report_workers
            )
        )
    return rpc_workers

class RpcWorker(neutron_worker.BaseWorker):
    """Wraps a worker to be handled by ProcessLauncher"""
    start_listeners_method = 'start_rpc_listeners'

    def __init__(self, plugins, worker_process_count=1):
        super(RpcWorker, self).__init__(
            worker_process_count=worker_process_count
        )

        self._plugins = plugins
        self._servers = []

    def start(self):
        super(RpcWorker, self).start()
        for plugin in self._plugins:
            if hasattr(plugin, self.start_listeners_method):
                try:
                    # Call each Plugin's RPC listener startup method
                    servers = getattr(plugin, self.start_listeners_method)()
                except NotImplementedError:
                    continue
                self._servers.extend(servers)
    ...

class RpcReportsWorker(RpcWorker):
    start_listeners_method = 'start_rpc_state_reports_listener'
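The only difference between the two worker classes is the name stored in start_listeners_method, which is resolved on each plugin with getattr at start time. The stripped-down sketch below shows that dispatch in isolation; `Worker`, `ReportsWorker`, and the two fake plugins are illustrative names with no process launching behind them.

```python
class Worker:
    """Simplified stand-in for RpcWorker, without the process handling."""

    start_listeners_method = "start_rpc_listeners"

    def __init__(self, plugins):
        self._plugins = plugins
        self._servers = []

    def start(self):
        for plugin in self._plugins:
            method = getattr(plugin, self.start_listeners_method, None)
            if method is None:
                continue  # plugin does not offer this kind of listener
            try:
                servers = method()
            except NotImplementedError:
                continue  # plugin declares the method but opts out
            self._servers.extend(servers)
        return self._servers


class ReportsWorker(Worker):
    # Same start() logic, different plugin method looked up by name.
    start_listeners_method = "start_rpc_state_reports_listener"


class FakeCorePlugin:
    def start_rpc_listeners(self):
        return ["plugin-server"]

    def start_rpc_state_reports_listener(self):
        return ["reports-server"]


class FakeServicePlugin:
    def start_rpc_listeners(self):
        raise NotImplementedError()
```

With both fake plugins passed in, `Worker(...).start()` collects only the core plugin's servers, and `ReportsWorker(...).start()` collects only the state-report listener.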

Linking APIs and Plugins through API Controllers

In the Pecan framework, a Controller is the concrete implementation of an API. It wraps what a WSGI Application requires: the URL routes, the view functions, the HTTP methods, and the mapper that ties the three together. Here we focus on how Controllers are implemented.

Besides creating and returning the WSGI Application object, the neutronapiapp_v2_0 factory function also calls startup.initialize_all(), which prepares everything neutron-server.service needs before it can start: loading the Plugins, instantiating the API Controllers, and building the mapping among APIs, Controllers, and Plugins.

In the code below, initialize_all() first loads all selected Plugins, then wraps each Core Plugin & Core API pair and each Extension Plugin & Extension API pair in a CollectionsController instance, and registers them in the NeutronManager instance attributes self.resource_plugin_mappings and self.resource_controller_mappings.

# /opt/stack/neutron/neutron/pecan_wsgi/startup.py

# The list of Core Resources
RESOURCES = {'network': 'networks',
             'subnet': 'subnets',
             'subnetpool': 'subnetpools',
             'port': 'ports'}

def initialize_all():
    # Load the Plugins, as described above
    manager.init()
    # PluginAwareExtensionManager loads extensions from the configured extension path and provides common management functions for Extension Plugins, e.g. add_extension, extend_resources, get_resources
    ext_mgr = extensions.PluginAwareExtensionManager.get_instance()
    # Add the Core Resources to the Extension Resources list
    ext_mgr.extend_resources("2.0", attributes.RESOURCES)
    # At this stage we have a fully populated resource attribute map;
    # build Pecan controllers and routes for all core resources
    # Get the Core Plugin instance
    plugin = directory.get_plugin()
    # Loop over the Core Resources
    for resource, collection in RESOURCES.items():
        # Keeps track of Neutron resources for which quota limits are enforced.
        resource_registry.register_resource_by_name(resource)
        # Wrap the Core Resource and the Core Plugin in a new_controller instance
        new_controller = res_ctrl.CollectionsController(collection, resource,
                                                        plugin=plugin)
        # Store new_controller in the NeutronManager instance attribute as resource_name:new_controller
        manager.NeutronManager.set_controller_for_resource(
            collection, new_controller)
        # Store plugin in the NeutronManager instance attribute as resource_name:plugin
        manager.NeutronManager.set_plugin_for_resource(collection, plugin)

    pecanized_resources = ext_mgr.get_pecan_resources()
    for pec_res in pecanized_resources:
        manager.NeutronManager.set_controller_for_resource(
            pec_res.collection, pec_res.controller)
        manager.NeutronManager.set_plugin_for_resource(
            pec_res.collection, pec_res.plugin)

    # Now build Pecan Controllers and routes for all extensions
    # Get the ResourceExtension objects of the Extension Resources
    resources = ext_mgr.get_resources()
    # Extensions controller is already defined, we don't need it.
    resources.pop(0)
    # Loop over the ResourceExtension objects
    for ext_res in resources:
        path_prefix = ext_res.path_prefix.strip('/')
        collection = ext_res.collection
        # Retrieving the parent resource. It is expected the format of
        # the parent resource to be:
        # {'collection_name': 'name-of-collection',
        #  'member_name': 'name-of-resource'}
        # collection_name does not appear to be used in the legacy code
        # inside the controller logic, so we can assume we do not need it.
        parent = ext_res.parent or {}
        parent_resource = parent.get('member_name')
        collection_key = collection
        if parent_resource:
            collection_key = '/'.join([parent_resource, collection])
        collection_actions = ext_res.collection_actions
        member_actions = ext_res.member_actions
        if manager.NeutronManager.get_controller_for_resource(collection_key):
            # This is a collection that already has a pecan controller, we
            # do not need to do anything else
            continue
        legacy_controller = getattr(ext_res.controller, 'controller',
                                    ext_res.controller)
        new_controller = None
        if isinstance(legacy_controller, base.Controller):
            resource = legacy_controller.resource
            plugin = legacy_controller.plugin
            attr_info = legacy_controller.attr_info
            member_actions = legacy_controller.member_actions
            pagination = legacy_controller.allow_pagination
            sorting = legacy_controller.allow_sorting
            # NOTE(blogan): legacy_controller and ext_res both can both have
            # member_actions. the member_actions for ext_res are strictly for
            # routing, while member_actions for legacy_controller are used for
            # handling the request once the routing has found the controller.
            # They're always the same so we will just use the ext_res
            # member_action.
            # Re-wrap the Extension Resource, the Extension Plugin, and some attributes of the original Extension Controller in a new_controller instance
            new_controller = res_ctrl.CollectionsController(
                collection, resource, resource_info=attr_info,
                parent_resource=parent_resource, member_actions=member_actions,
                plugin=plugin, allow_pagination=pagination,
                allow_sorting=sorting, collection_actions=collection_actions)
            # new_controller.collection has replaced hyphens with underscores
            manager.NeutronManager.set_plugin_for_resource(
                new_controller.collection, plugin)
            if path_prefix:
                manager.NeutronManager.add_resource_for_path_prefix(
                    collection, path_prefix)
        else:
            new_controller = utils.ShimCollectionsController(
                collection, None, legacy_controller,
                collection_actions=collection_actions,
                member_actions=member_actions,
                action_status=ext_res.controller.action_status,
                collection_methods=ext_res.collection_methods)

        # Store new_controller in the NeutronManager instance attribute as resource_name:new_controller
        manager.NeutronManager.set_controller_for_resource(
            collection_key, new_controller)

    policy.reset()

Core API Request Handling

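The root controller V2Controller does not explicitly declare any Core Controllers. Instead, all Core API requests are handled in the method def _lookup(self, collection, *remainder): because every URL path that is not explicitly defined is routed to _lookup.

During initialize_all() the Core Controllers were already registered in the NeutronManager instance attribute self.resource_controller_mappings. Here they are fetched from that attribute according to the type of the API request (e.g. networks, subnets).

The instance attributes of the Network Controller show that it is bound to the Core Plugin ML2, and that the plugin class implements the "view functions" for the network resource. For example, the view function for the API request GET /v2.0/networks is Ml2Plugin.get_networks. In fact all Core Resources are bound to the same Core Plugin, whereas Extension Resources are bound to different Service Plugins according to their type.

This is how Neutron wraps calls from the Neutron API layer into the Neutron Plugin layer.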
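The chain from URL segment to controller to plugin method can be sketched without Pecan. Everything below is a hypothetical simplification: the module-level dict stands in for resource_controller_mappings, `lookup` mimics the (controller, remainder) return convention of a Pecan `_lookup` method, and `index` resolves the plugin's `get_<collection>` method by name.

```python
RESOURCE_CONTROLLER_MAPPINGS = {}  # stand-in for the NeutronManager attribute


class CollectionsController:
    """Binds one collection name (e.g. 'networks') to one plugin."""

    def __init__(self, collection, plugin):
        self.collection = collection
        self.plugin = plugin

    def index(self, context=None):
        # GET /v2.0/<collection> ends up in plugin.get_<collection>()
        getter = getattr(self.plugin, "get_%s" % self.collection)
        return getter(context)


class FakeCorePlugin:
    def get_networks(self, context):
        return [{"id": "net-1"}]


def set_controller_for_resource(collection, controller):
    RESOURCE_CONTROLLER_MAPPINGS[collection] = controller


def lookup(collection, *remainder):
    """Resolve the first URL segment to its registered controller."""
    controller = RESOURCE_CONTROLLER_MAPPINGS.get(collection)
    if controller is None:
        raise LookupError("no controller for %r" % collection)
    return controller, remainder


set_controller_for_resource(
    "networks", CollectionsController("networks", FakeCorePlugin()))
```

Here `lookup("networks")` returns the registered controller, and calling its `index()` reaches `FakeCorePlugin.get_networks`, just as GET /v2.0/networks reaches Ml2Plugin.get_networks.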

# /opt/stack/neutron/neutron/plugins/ml2/plugin.py
@db_api.retry_if_session_inactive()
def get_networks(self, context, filters=None, fields=None,
                 sorts=None, limit=None, marker=None, page_reverse=False):
    # NOTE(ihrachys) use writer manager to be able to update mtu
    # TODO(ihrachys) remove in Queens when mtu is not nullable
    with db_api.CONTEXT_WRITER.using(context):
        nets_db = super(Ml2Plugin, self)._get_networks(
            context, filters, None, sorts, limit, marker, page_reverse)

        # NOTE(ihrachys) pre Pike networks may have null mtus; update them
        # in database if needed
        # TODO(ihrachys) remove in Queens+ when mtu is not nullable
        net_data = []
        for net in nets_db:
            if net.mtu is None:
                net.mtu = self._get_network_mtu(net, validate=False)
            net_data.append(self._make_network_dict(net, context=context))

        self.type_manager.extend_networks_dict_provider(context, net_data)
        nets = self._filter_nets_provider(context, net_data, filters)
    return [db_utils.resource_fields(net, fields) for net in nets]

Extension API Request Handling

The Extensions API is essentially a WSGI Middleware rather than a WSGI Application.

# /opt/stack/neutron/neutron/api/extensions.py
import routes

def plugin_aware_extension_middleware_factory(global_config, **local_config):
    """Paste factory."""
    def _factory(app):
        ext_mgr = PluginAwareExtensionManager.get_instance()
        # ExtensionMiddleware wraps the extensions middleware for WSGI (route mapping, view functions); it receives and handles requests for Extension Resources
        return ExtensionMiddleware(app, ext_mgr=ext_mgr)
    return _factory

class ExtensionMiddleware(base.ConfigurableMiddleware):
    """Extensions middleware for WSGI."""

    def __init__(self, application,
        ...
        # extended resources
        for resource in self.ext_mgr.get_resources():
            ...
            # Custom actions
            for action, method in resource.collection_actions.items():
                conditions = dict(method=[method])
                path = "/%s/%s" % (resource.collection, action)
                with mapper.submapper(controller=resource.controller,
                                      action=action,
                                      path_prefix=path_prefix,
                                      conditions=conditions) as submap:
                    submap.connect(path_prefix + path, path)
                    submap.connect(path_prefix + path + "_format",
                                   "%s.:(format)" % path)

            # Custom methods
            for action, method in resource.collection_methods.items():
                conditions = dict(method=[method])
                path = "/%s" % resource.collection
                with mapper.submapper(controller=resource.controller,
                                      action=action,
                                      path_prefix=path_prefix,
                                      conditions=conditions) as submap:
                    submap.connect(path_prefix + path, path)
                    submap.connect(path_prefix + path + "_format",
                                   "%s.:(format)" % path)

            # Map the resource collection, its controller, and its member actions together
            mapper.resource(resource.collection, resource.collection,
                            controller=resource.controller,
                            member=resource.member_actions,
                            parent_resource=resource.parent,
                            path_prefix=path_prefix)
        ...

As the code above shows, although the Core API uses the Pecan framework, the Extension API still uses the routes library to maintain its Mapper.
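The difference between a WSGI Middleware and a WSGI Application is easiest to see in a toy version. The sketch below swaps the routes Mapper for a plain dict keyed by (method, path), so it illustrates the wrapping pattern only, not Neutron's actual routing. `ExtensionMiddlewareSketch`, `core_app`, `list_routers`, and `request` are made-up names.

```python
from wsgiref.util import setup_testing_defaults


def core_app(environ, start_response):
    """Stand-in for the wrapped WSGI Application (the Core API)."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"core: " + environ["PATH_INFO"].encode()]


class ExtensionMiddlewareSketch:
    """Handles the paths it knows about, passes everything else through."""

    def __init__(self, application, routes_table):
        self.application = application
        self.routes_table = routes_table  # {(method, path): handler}

    def __call__(self, environ, start_response):
        key = (environ["REQUEST_METHOD"], environ["PATH_INFO"])
        handler = self.routes_table.get(key)
        if handler is None:
            # Not an extension resource: delegate to the wrapped app.
            return self.application(environ, start_response)
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [handler(environ)]


def list_routers(environ):
    return b"extension: routers"


def request(app, method, path):
    """Tiny test client: build an environ and return the response body."""
    environ = {}
    setup_testing_defaults(environ)
    environ["REQUEST_METHOD"] = method
    environ["PATH_INFO"] = path
    return b"".join(app(environ, lambda status, headers, exc_info=None: None))


app = ExtensionMiddlewareSketch(
    core_app, {("GET", "/v2.0/routers"): list_routers})
```

A request for /v2.0/routers is answered by the middleware itself, while /v2.0/networks falls through to `core_app`, which is the same layering that a Paste filter factory such as plugin_aware_extension_middleware_factory sets up.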

Neutron Agents

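Neutron has a large number of Networking Agent processes deployed across the various kinds of nodes. They provide the management and execution services for the different network element functions.

The software architecture of a Neutron Agent can be divided into three layers:

- Northbound interface layer: exposes RPC interfaces for Neutron Server to call.

- Data model conversion layer: converts the RPC data model into the CLI model of the underlying network element.

- CLI protocol layer: configures the underlying network element southbound through the CLI protocol stack.

For example, when Neutron creates a Port and binds it to a VM, the Linux Bridge Agent and the OvS Agent each execute a series of commands.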
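The three layers can be pictured as three small functions chained together. This is a hypothetical sketch rather than agent code: the field names `device_name` and `local_vlan` and the function names are assumptions, and the command is only built as an argument list, never executed. `ovs-vsctl set Port <name> tag=<vlan>` is standard Open vSwitch syntax for assigning a VLAN tag to a port.

```python
def to_cli_model(port_details):
    """Data model conversion layer: RPC payload -> CLI-oriented model."""
    return {
        "port_name": port_details["device_name"],   # assumed field name
        "vlan_tag": int(port_details["local_vlan"]),  # assumed field name
    }


def build_cli_command(model):
    """CLI protocol layer: render the model as an argv list (not executed)."""
    return ["ovs-vsctl", "set", "Port", model["port_name"],
            "tag=%d" % model["vlan_tag"]]


def rpc_port_update(port_details):
    """Northbound interface layer: the entry point an RPC call would hit."""
    model = to_cli_model(port_details)
    return build_cli_command(model)


command = rpc_port_update({"device_name": "tap0", "local_vlan": 5})
```

Keeping the conversion step separate means the RPC payload format and the command syntax can change independently, which is the point of the middle layer.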

OvS Agents

The entry point of a Neutron Agent is likewise defined in setup.cfg. Taking the OvS Agent as the example, we focus on the startup flow of the neutron-openvswitch-agent.service process.

# /opt/stack/neutron/setup.cfg
neutron-openvswitch-agent = neutron.cmd.eventlet.plugins.ovs_neutron_agent:main

# /opt/stack/neutron/neutron/plugins/ml2/drivers/openvswitch/agent/main.py
_main_modules = {
    'ovs-ofctl': 'neutron.plugins.ml2.drivers.openvswitch.agent.openflow.'
                 'ovs_ofctl.main',
    'native': 'neutron.plugins.ml2.drivers.openvswitch.agent.openflow.'
              'native.main',
}

def main():
    common_config.init(sys.argv[1:])
    driver_name = cfg.CONF.OVS.of_interface
    mod_name = _main_modules[driver_name]
    mod = importutils.import_module(mod_name)
    mod.init_config()
    common_config.setup_logging()
    profiler.setup("neutron-ovs-agent", cfg.CONF.host)
    mod.main()

Here we can see that the OvS Agent has two startup modes, ovs-ofctl and native, selected with the of_interface option. The value is usually ovs-ofctl, which means the flow tables are manipulated with Open vSwitch's ovs-ofctl command.

Connecting Plugins and Agents through RPC

Plugins and Agents communicate over RPC/MQ, and each side acts as both an RPC Producer and an RPC Consumer.
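The "both producer and consumer" relationship can be mimicked in memory with two topics. This is a toy model only: `InMemoryBus` is not oslo.messaging, and the topic names "plugin" and "agent", the endpoint classes, and the payloads are illustrative placeholders.

```python
import queue
from collections import defaultdict


class InMemoryBus:
    """Toy message bus: one FIFO queue per topic."""

    def __init__(self):
        self._queues = defaultdict(queue.Queue)

    def cast(self, topic, method, **kwargs):
        # Producer side: fire and forget, no return value.
        self._queues[topic].put((method, kwargs))

    def consume(self, topic, endpoint):
        # Consumer side: dispatch each message to the endpoint method
        # named in the message.
        handled = 0
        q = self._queues[topic]
        while not q.empty():
            method, kwargs = q.get()
            getattr(endpoint, method)(**kwargs)
            handled += 1
        return handled


class PluginEndpoint:
    def __init__(self):
        self.reports = []

    def report_state(self, agent):
        self.reports.append(agent)


class AgentEndpoint:
    def __init__(self):
        self.updated_ports = set()

    def port_update(self, port):
        self.updated_ports.add(port["id"])


bus = InMemoryBus()
plugin, agent = PluginEndpoint(), AgentEndpoint()
bus.cast("agent", "port_update", port={"id": "port-1"})    # plugin produces
bus.cast("plugin", "report_state", agent="ovs-agent@host-1")  # agent produces
bus.consume("agent", agent)     # agent consumes
bus.consume("plugin", plugin)   # plugin consumes
```

Each side sends on the other's topic and listens on its own, so neither needs a direct reference to the other process.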

Plugin RPC Consumer

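To become a Consumer, a Plugin must register with the RPC Server, a step known as registering endpoints. Once that is done, the Plugin has registered the Endpoints (callable interfaces) through which it communicates with the corresponding Agents over RPC.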

# /opt/stack/neutron/neutron/plugins/ml2/plugin.py
# Taking the Core Plugin's RPC listener startup methods as the example
class Ml2Plugin(...):
    ...
    @log_helpers.log_method_call
    def start_rpc_listeners(self):
        """Start the RPC loop to let the plugin communicate with agents."""
        # Set up the endpoints through which Ml2Plugin communicates with Agents
        self._setup_rpc()
        self.topic = topics.PLUGIN
        self.conn = n_rpc.Connection()
        # Register the endpoints with the RPC Consumer and create the RPC Consumer instances
        self.conn.create_consumer(self.topic, self.endpoints, fanout=False)
        self.conn.create_consumer(
            topics.SERVER_RESOURCE_VERSIONS,
            [resources_rpc.ResourcesPushToServerRpcCallback()],
            fanout=True)
        # process state reports despite dedicated rpc workers
        self.conn.create_consumer(topics.REPORTS,
                                  [agents_db.AgentExtRpcCallback()],
                                  fanout=False)
        # Start the RPC servers for the endpoints in threads
        return self.conn.consume_in_threads()

    def start_rpc_state_reports_listener(self):
        self.conn_reports = n_rpc.Connection()
        self.conn_reports.create_consumer(topics.REPORTS,
                                          [agents_db.AgentExtRpcCallback()],
                                          fanout=False)
        return self.conn_reports.consume_in_threads()

    def _setup_rpc(self):
        """Initialize components to support agent communication."""
        # The list of Agent endpoints
        self.endpoints = [
            rpc.RpcCallbacks(self.notifier, self.type_manager),
            securitygroups_rpc.SecurityGroupServerRpcCallback(),
            dvr_rpc.DVRServerRpcCallback(),
            dhcp_rpc.DhcpRpcCallback(),
            agents_db.AgentExtRpcCallback(),
            metadata_rpc.MetadataRpcCallback(),
            resources_rpc.ResourcesPullRpcCallback()
        ]

The most important functions here, start_rpc_listeners and start_rpc_state_reports_listener, are called by the RpcWorker and RpcReportsWorker classes described above, which is how the RPC Workers are loaded and run.

OvS Agent RPC Consumer

While the neutron-openvswitch-agent.service process starts, instantiating the OVSNeutronAgent class requires the OvS Agent RPC Consumers to be set up first.

class OVSNeutronAgent(...):
    ...
    def __init__(self, bridge_classes, ext_manager, conf=None):
        ...
        # Create the RPC Consumers
        self.setup_rpc()
        ...

    def setup_rpc(self):
        self.plugin_rpc = OVSPluginApi(topics.PLUGIN)
        # allow us to receive port_update/delete callbacks from the cache
        self.plugin_rpc.register_legacy_notification_callbacks(self)
        self.sg_plugin_rpc = sg_rpc.SecurityGroupServerAPIShim(
            self.plugin_rpc.remote_resource_cache)
        self.dvr_plugin_rpc = dvr_rpc.DVRServerRpcApi(topics.PLUGIN)
        self.state_rpc = agent_rpc.PluginReportStateAPI(topics.REPORTS)

        # RPC network init
        self.context = context.get_admin_context_without_session()
        # Made a simple RPC call to Neutron Server.
        while True:
            try:
                self.state_rpc.has_alive_neutron_server(self.context)
            except oslo_messaging.MessagingTimeout as e:
                LOG.warning('l2-agent cannot contact neutron server. '
                            'Check connectivity to neutron server. '
                            'Retrying... '
                            'Detailed message: %(msg)s.', {'msg': e})
                continue
            break

        # Define the listening consumers for the agent
        consumers = [[constants.TUNNEL, topics.UPDATE],
                     [constants.TUNNEL, topics.DELETE],
                     [topics.DVR, topics.UPDATE]]
        if self.l2_pop:
            consumers.append([topics.L2POPULATION, topics.UPDATE])
        self.connection = agent_rpc.create_consumers([self],
                                                     topics.AGENT,
                                                     consumers,
                                                     start_listening=False)

Once the RPC Consumers are set up, the RPC Consumer functions defined by the OvS Agent can receive and consume the messages that the Plugin sends through the MQ, as implemented in the code below.

Note that the OvS Agent only listens for UPDATE and DELETE RPC messages and not for CREATE, because creating a Neutron Port is not done by the OvS Agent but by nova-compute.service.

def port_update(self, context, **kwargs):
    port = kwargs.get('port')
    # Put the port identifier in the updated_ports set.
    # Even if full port details might be provided to this call,
    # they are not used since there is no guarantee the notifications
    # are processed in the same order as the relevant API requests
    self.updated_ports.add(port['id'])

def port_delete(self, context, **kwargs):
    port_id = kwargs.get('port_id')
    self.deleted_ports.add(port_id)
    self.updated_ports.discard(port_id)

def network_update(self, context, **kwargs):
    network_id = kwargs['network']['id']
    for port_id in self.network_ports[network_id]:
        # notifications could arrive out of order, if the port is deleted
        # we don't want to update it anymore
        if port_id not in self.deleted_ports:
            self.updated_ports.add(port_id)
    LOG.debug("network_update message processed for network "
              "%(network_id)s, with ports: %(ports)s",
              {'network_id': network_id,
               'ports': self.network_ports[network_id]})

Example: a Plugin Calling the OvS Agent over RPC

The following example shows how the Plugin calls the OvS Agent over RPC during the Create Port workflow:

# /opt/stack/neutron/neutron/plugins/ml2/plugin.py
class Ml2Plugin(...):
    ...
    def create_port(self, context, port):
        ...
        return self._after_create_port(context, result, mech_context)

    def _after_create_port(self, context, result, mech_context):
        ...
        try:
            bound_context = self._bind_port_if_needed(mech_context)
        except ml2_exc.MechanismDriverError:
            ...
        return bound_context.current

    @db_api.retry_db_errors
    def _bind_port_if_needed(self, context, allow_notify=False,
                             need_notify=False, allow_commit=True):
        ...
        if not try_again:
            if allow_notify and need_notify:
                self._notify_port_updated(context)
            return context
        ...
        return context

    def _notify_port_updated(self, mech_context):
        port = mech_context.current
        segment = mech_context.bottom_bound_segment
        if not segment:
            # REVISIT(rkukura): This should notify agent to unplug port
            network = mech_context.network.current
            LOG.debug("In _notify_port_updated(), no bound segment for "
                      "port %(port_id)s on network %(network_id)s",
                      {'port_id': port['id'], 'network_id': network['id']})
            return
        self.notifier.port_update(mech_context._plugin_context, port,
                                  segment[api.NETWORK_TYPE],
                                  segment[api.SEGMENTATION_ID],
                                  segment[api.PHYSICAL_NETWORK])

# /opt/stack/neutron/neutron/plugins/ml2/rpc.py
class AgentNotifierApi(...):
    ...
    def port_update(self, context, port, network_type, segmentation_id,
                    physical_network):
        # Build the RPC client
        cctxt = self.client.prepare(topic=self.topic_port_update,
                                    fanout=True)
        # Send the RPC message
        cctxt.cast(context, 'port_update', port=port,
                   network_type=network_type, segmentation_id=segmentation_id,
                   physical_network=physical_network)

The RPC message sent by the Plugin is ultimately received by the Agent Consumers subscribed to that Target.

For example, the OvS Agent receives the message and carries out the follow-up actions.

# /opt/stack/neutron/neutron/plugins/ml2/drivers/openvswitch/agent/ovs_neutron_agent.py
def port_update(self, context, **kwargs):
    port = kwargs.get('port')
    # Put the port identifier in the updated_ports set.
    # Even if full port details might be provided to this call,
    # they are not used since there is no guarantee the notifications
    # are processed in the same order as the relevant API requests
    self.updated_ports.add(port['id'])

Plugin Callback

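Besides calling the RPC functions (call, cast) directly as in the example above, Plugins also implement a Callback System.

Like RPC, the Callback System exists for communication. The differences are:

- RPC passes task messages between different service processes, namely neutron-server and the agents.

- The Callback System handles communication between Core and Service components inside the same process.

The Callback System delivers Resource lifecycle events (e.g. before creation, before deletion, etc.), so that Core and Service components, as well as different Services, can be notified of state changes of a specific Resource.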
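The idea fits in a few lines of in-process publish/subscribe. The sketch below is a simplified, hypothetical registry and not Neutron's implementation: the class name, the resource string "port", and the event string "before_delete" are illustrative.

```python
from collections import defaultdict


class CallbackRegistry:
    """Minimal in-process registry keyed by (resource, event)."""

    def __init__(self):
        self._callbacks = defaultdict(list)

    def subscribe(self, callback, resource, event):
        self._callbacks[(resource, event)].append(callback)

    def notify(self, resource, event, trigger, **kwargs):
        # Synchronous fan-out: every subscriber runs in the caller's process.
        for callback in self._callbacks[(resource, event)]:
            callback(resource, event, trigger, **kwargs)


registry = CallbackRegistry()
seen = []


def l3_on_port_before_delete(resource, event, trigger, **kwargs):
    """A service-side subscriber reacting to a core resource event."""
    seen.append((resource, event, kwargs["port_id"]))


registry.subscribe(l3_on_port_before_delete, "port", "before_delete")
# The core side announces the lifecycle event before acting on it.
registry.notify("port", "before_delete", trigger="core-plugin", port_id="port-1")
```

Because the call is a plain function invocation, no message queue is involved, which is what separates this mechanism from the RPC path between neutron-server and the agents.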