Ubuntu安装hadoop(3.2.4),hbase(2.4.0),hive(3.1.0),phoenix(5.1.2)集群

集群安装

1. 环境准备

1.1 服务器的准备

192.168.12.253 ds1

192.168.12.38 ds2

192.168.12.39 ds3

1.2 修改hostname(所有节点)

在192.168.12.253 节点上 hostnamectl set-hostname ds1

在192.168.12.38 节点上 hostnamectl set-hostname ds2

在192.168.12.39 节点上 hostnamectl set-hostname ds3

1.3 配置节点的IP-主机名映射信息(所有节点)

vi /etc/hosts

新增下面内容

192.168.12.253 ds1

192.168.12.38 ds2

192.168.12.39 ds3

1.4 关闭防火墙(所有节点)

sudo systemctl stop ufw

sudo systemctl disable ufw

1.5 修改SSH配置(所有节点)

vim /etc/ssh/sshd_config

将 PermitEmptyPasswords no 改为 PermitEmptyPasswords yes

1.6 配置免密登录(所有节点)

生成ssh key:(每个节点执行)

ssh-keygen -t rsa

ds1、ds2、ds3上操作互信配置:(每个节点执行)

ssh-copy-id -i ~/.ssh/id_rsa.pub ds1

ssh-copy-id -i ~/.ssh/id_rsa.pub ds2

ssh-copy-id -i ~/.ssh/id_rsa.pub ds3

上同上面操作类似,完成互信配置

1.7 安装jdk(所有节点)

安装命令:

sudo apt-get install openjdk-8-jre

查看jdk版本:

java -version

安装路径:

/usr/lib/jvm/java-8-openjdk-amd64

1.8 安装mysql(ds1)

安装命令:

sudo apt-get update

sudo apt-get install mysql-server

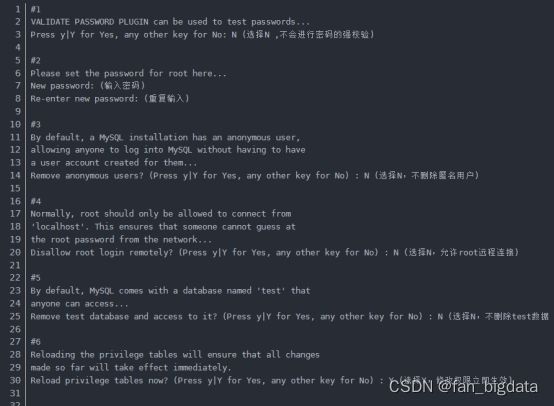

初始化配置:

sudo mysql_secure_installation

查看mysql的服务状态:

systemctl status mysql.service

修改配置文件 mysqld.cnf

cd /etc/mysql/mysql.conf.d

vim mysqld.cnf

将 bind-address = 127.0.0.1注释掉

配置mysql的环境变量

vim /etc/profile

添加

export MYSQL_HOME=/usr/share/mysql

export PATH=$MYSQL_HOME/bin:$PATH

刷新环境变量

source /etc/profile

启动和停止mysql服务

停止:

sudo service mysql stop

启动:

sudo service mysql start

进入mysql数据库

mysql -u root -p introcks1234

2. 安装zookeeper集群

2.1 下载zookeeper安装包

下载地址: https://www.apache.org/dyn/closer.lua/zookeeper/zookeeper-3.8.0/apache-zookeeper-3.8.0-bin.tar.gz

2.2 上传与解压

上传到 /home/intellif中

解压 :

tar -zxvf apache-zookeeper-3.8.0-bin.tar.gz -C /opt

修改文件权限:

chmod -R 755 apache-zookeeper-3.8.0-bin

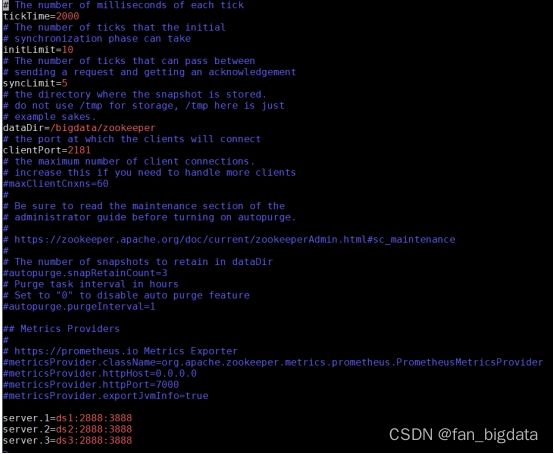

2.3 修改配置文件

cd apache-zookeeper-3.8.0-bin/conf

cp zoo_sample.cfg zoo.cfg

vim zoo.cfg

新增下面内容:

server.1=ds1:2888:3888

server.2=ds2:2888:3888

server.3=ds3:2888:3888

注意3888后面不能有空格,否则后面启动时会报错: Address unresolved: ds1:3888

2.4 分发到ds2,ds3中

将zookeeper分发到ds2,ds3中

scp -r apache-zookeeper-3.8.0-bin ds2:/opt

scp -r apache-zookeeper-3.8.0-bin ds3:/opt

2.5 配置环境变量(所有节点)

vim ~/.profile

添加下面两行:

export ZOOKEEPER_HOME=/opt/apache-zookeeper-3.8.0-bin

export PATH=$ZOOKEEPER_HOME/bin:$PATH

source /etc/profile

2.6 创建myid文件

先创建目录/bigdata/zookeeper (所有节点)

mkdir -p /bigdata/zookeeper

cd /bigdata/zookeeper

在ds1上执行

echo 1 > myid

在ds2上执行

echo 2 > myid

在ds3上执行

echo 3 > myid

2.7 启动与停止、查看状态命令(所有节点)

启动:

zkServer.sh start

停止:

zkServer.sh stop

查看状态:

zkServer.sh status

3. 安装hadoop高可用集群

3.1集群规划

| ds1 | ds2 | ds3 | |

|---|---|---|---|

| NameNode | yes | yes | no |

| DataNode | yes | yes | yes |

| JournalNode | yes | yes | yes |

| NodeManager | yes | yes | yes |

| ResourceManager | yes | no | no |

| Zookeeper | yes | yes | yes |

| ZKFC | yes | yes | no |

3.2下载安装包

hadoop版本: 3.2.4

下载地址:https://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-3.2.4/hadoop-3.2.4.tar.gz

3.3上传与解压

上传到服务器/home/intellif下

解压 :

tar -zxvf hadoop-3.2.4.tar.gz

mv hadoop-3.2.4/ /opt

3.4修改配置文件

Hadoop核心配置文件介绍:

| 文件名称 | 描述 |

|---|---|

| hadoop-env.sh | 脚本中要用到的环境变量,以运行hadoop |

| mapred-env.sh | 脚本中要用到的环境变量,以运行mapreduce(覆盖hadoop-env.sh中设置的变量) |

| yarn-env.sh | 脚本中要用到的环境变量,以运行YARN(覆盖hadoop-env.sh中设置的变量) |

| core-site.xml | Hadoop Core的配置项,例如HDFS,MAPREDUCE,YARN中常用的I/O设置等 |

| hdfs-site.xml | Hadoop守护进程的配置项,包括namenode和datanode等 |

| mapred-site.xml | MapReduce守护进程的配置项,包括job历史服务器 |

| yarn-site.xml | Yarn守护进程的配置项,包括资源管理器和节点管理器 |

| workers | 具体运行datanode和节点管理器的主机名称 |

cd /opt/hadoop-3.2.4/etc/hadoop

3.4.1 hadoop-env.sh配置修改

vim hadoop-env.sh

在最后添加:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HDFS_NAMENODE_USER=hdfs

export HDFS_DATANODE_USER=hdfs

export HDFS_ZKFC_USER=hdfs

export HDFS_JOURNALNODE_USER=hdfs

3.4.2 yarn-env.sh 配置修改

vim yarn-env.sh

在最后添加(jdk的路径):

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

3.4.3 core-site.xml 配置修改

vim core-site.xml

将替换为如下内容

<configuration>

<property>

<name>fs.defaultFSname>

<value>hdfs://myclustervalue>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/bigdata/hadoop/tmpdirvalue>

property>

<property>

<name>ha.zookeeper.quorumname>

<value>ds1:2181,ds2:2181,ds3:2181value>

property>

<property>

<name>hadoop.proxyuser.hdfs.hostsname>

<value>*value>

property>

<property>

<name>hadoop.proxyuser.hdfs.groupsname>

<value>*value>

property>

configuration>

3.4.4 hdfs-site.xml配置修改

vim hdfs-site.xml

<configuration>

<property>

<name>dfs.nameservicesname>

<value>myclustervalue>

property>

<property>

<name>dfs.ha.namenodes.myclustername>

<value>nn1,nn2value>

property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1name>

<value>ds1:8020value>

property>

<property>

<name>dfs.namenode.http-address.mycluster.nn1name>

<value>ds1:50070value>

property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2name>

<value>ds2:8020value>

property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2name>

<value>ds2:50070value>

property>

<property>

<name>dfs.namenode.shared.edits.dirname>

<value>qjournal://ds1:8485;ds2:8485;ds3:8485/myclustervalue>

property>

<property>

<name>dfs.journalnode.edits.dirname>

<value>/bigdata/hadoop/journalvalue>

property>

<property>

<name>dfs.ha.automatic-failover.enabledname>

<value>truevalue>

property>

<property>

<name>dfs.client.failover.proxy.provider.myclustername>

<value> org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvidervalue>

property>

<property>

<name>dfs.ha.fencing.methodsname>

<value>sshfencevalue>

property>

<property>

<name>dfs.ha.fencing.ssh.private-key-filesname>

<value>/home/hdfs/.ssh/id_rsavalue>

property>

<property>

<name>dfs.namenode.name.dirname>

<value>file:///bigdata/hadoop/namenode value>

property>

<property>

<name>dfs.datanode.data.dirname>

<value>file:///bigdata/hadoop/datanode value>

property>

<property>

<name>dfs.replicationname>

<value>3value>

property>

<property>

<name>dfs.permissions.enabledname>

<value>falsevalue>

property>

configuration>

3.4.5 mapred-site.xml配置修改

vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

<property>

<name>mapreduce.application.classpathname>

<value>

/opt/hadoop-3.2.4/share/hadoop/common/*,

/opt/hadoop-3.2.4/share/hadoop/common/lib/*,

/opt/hadoop-3.2.4/share/hadoop/hdfs/*,

/opt/hadoop-3.2.4/share/hadoop/hdfs/lib/*,

/opt/hadoop-3.2.4/share/hadoop/mapreduce/*,

/opt/hadoop-3.2.4/share/hadoop/mapreduce/lib/*,

/opt/hadoop-3.2.4/share/hadoop/yarn/*,

/opt/hadoop-3.2.4/share/hadoop/yarn/lib/*

value>

property>

configuration>

3.4.6 yarn-site.xml配置修改

vim yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.scheduler.classname>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairSchedulervalue>

property>

<property>

<name>yarn.resourcemanager.ha.enabledname>

<value>truevalue>

property>

<property>

<name>yarn.resourcemanager.cluster-idname>

<value>cluster1value>

property>

<property>

<name>yarn.resourcemanager.ha.idname>

<value>rm1value>

property>

<property>

<name>yarn.resourcemanager.ha.rm-idsname>

<value>rm1,rm2value>

property>

<property>

<name>yarn.resourcemanager.hostname.rm1name>

<value>ds1value>

property>

<property>

<name>yarn.resourcemanager.hostname.rm2name>

<value>ds2value>

property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1name>

<value>ds1:8088value>

property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2name>

<value>ds2:8088value>

property>

<property>

<name>hadoop.zk.addressname>

<value>ds1:2181,ds2:2181,ds3:2181value>

property>

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>

<property>

<name>yarn.nodemanager.pmem-check-enabledname>

<value>falsevalue>

property>

<property>

<name>yarn.nodemanager.vmem-check-enabledname>

<value>falsevalue>

property>

<property>

<name>yarn.nodemanager.resource.memory-mbname>

<value>204800value>

property>

<property>

<name>yarn.scheduler.minimum-allocation-mbname>

<value>8192value>

property>

<property>

<name>yarn.scheduler.maximum-allocation-mbname>

<value>614400value>

property>

<property>

<name>yarn.app.mapreduce.am.resource.mbname>

<value>8192value>

property>

<property>

<name>yarn.app.mapreduce.am.command-optsname>

<value>-Xmx6553mvalue>

property>

<property>

<name>yarn.nodemanager.resource.cpu-vcoresname>

<value>32value>

property>

<property>

<name>yarn.scheduler.maximum-allocation-vcoresname>

<value>96value>

property>

configuration>

3.4.7 workers配置修改

vim workers

删除原来的,改为:

ds1

ds2

ds3

3.5 分发hadoop到ds2,ds3

scp -r hadoop-3.2.4 ds2:/opt

scp -r hadoop-3.2.4 ds3:/opt

3.6 配置hadoop环境变量(所有节点)

vim ~/.profile

新增下面内容

export HADOOP_HOME=/opt/hadoop-3.2.4

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

重刷环境变量:

source ~/.profile

3.7 格式化与启动

3.7.1 确保zookeeper启动(所有节点)

查看zookeeper是否启动

zkServer.sh status

如果未启动则启动zookeeper

zkServer.sh start

3.7.2 启动journalnode(所有节点)

hdfs --daemon start journalnode

3.7.3 namenode格式化(ds1)

hdfs namenode -format

3.7.4 启动namenode(ds1)

hdfs --daemon start namenode

3.7.5 设置备用namenode(ds2)

hdfs namenode -bootstrapStandby

3.7.6 启动备用namenode(ds2)

hdfs --daemon start namenode

3.7.7 启动datanode(所用节点)

hdfs --daemon start datanode

3.7.8 格式化ZKFC(ds1)

hdfs zkfc -formatZK

3.7.9 启动ZKFC(ds1,ds2)

hdfs --daemon start zkfc

此时HA已经搭建完成了

3.7.10 停止与启动

先停止Hadoop集群:

stop-all.sh

再重新启动:

start-all.sh

通过jps查看进程

ds1:

ds2:

ds3:

3.7.11 页面访问

192.168.12.253:50070

192.168.12.38:50070

3.7.12 启动historyserver服务

mr-jobhistory-daemon.sh start historyserver

4. hbase集群的安装

4.1 下载安装包

下载地址:https://hbase.apache.org/downloads.html

因后续还需要安装phoenix,故在选择hbase版本时需要注意与phoenix的版本不可以选太新的hbase版本。

此采用hbase-2.4.0版本

4.2 上传与解压

上传到服务器/home/intellif下

解压:

tar -zxvf hbase-2.4.0-bin.tar.gz

mv hbase-2.4.0/ /opt

4.3 修改配置文件

cd /opt/hbase-2.4.0/conf

4.3.1 hbase-env.sh配置修改

vim hbase-env.sh

添加:

export HBASE_MANAGES_ZK=false

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

4.3.2 hbase-site.xml配置修改

vim hbase-site.xml

<configuration>

<property>

<name>hbase.rootdirname>

<value>hdfs://mycluster/hbasevalue>

property>

<property>

<name>hbase.cluster.distributedname>

<value>truevalue>

property>

<property>

<name>hbase.zookeeper.quorumname>

<value>ds1,ds2,ds3value>

property>

<property>

<name>hbase.zookeeper.property.dataDirname>

<value>/bigdata/hbase/zkDatavalue>

property>

<property>

<name>hbase.tmp.dirname>

<value>/bigdata/hbase/tmpvalue>

property>

<property>

<name>hbase.unsafe.stream.capability.enforcename>

<value>falsevalue>

property>

<property>

<name>hbase.rpc.timeoutname>

<value>900000value>

property>

<property>

<name>hbase.client.scanner.timeout.periodname>

<value>900000value>

property>

<property>

<name>index.builder.threads.maxname>

<value>40value>

property>

<property>

<name>index.writer.threads.maxname>

<value>40value>

property>

<property>

<name>index.tablefactory.cache.sizename>

<value>20value>

property>

<property>

<name>hbase.zookeeper.property.clientPortname>

<value>2181value>

property>

<property>

<name>hbase.hstore.compactionThresholdname>

<value>6value>

property>

<property>

<name>hbase.hstore.compaction.maxname>

<value>12value>

property>

<property>

<name>hbase.hstore.blockingStoreFilesname>

<value>16value>

property>

configuration>

4.3.3 regionservers配置修改

删除原来内容

新增:

ds1

ds2

ds3

4.3.4 复制core-site.xml与hdfs-site.xml文件到conf下

cp /opt/hadoop-3.2.4/etc/hadoop/core-site.xml /opt/hbase-2.4.0/conf

cp /opt/hadoop-3.2.4/etc/hadoop/hdfs-site.xml /opt/hbase-2.4.0/conf

4.4 分发hbase到ds2,ds3

scp -r hbase-2.4.0 ds2:/opt

scp -r hbase-2.4.0 ds3:/opt

4.5 配置hbase环境变量(所有节点)

vim ~/.profile

新增下面内容

export HBASE_HOME=/opt/hbase-2.4.0

export PATH=$PATH:$HBASE_HOME/bin

重刷环境变量

source ~/.profile

4.6 启动hbase(ds1)

start-hbase.sh

4.7 查看hbase进程jps

通过jps查看进程

4.8 页面访问

5. phoenxi集群安装

5.1下载

官网点击下载:

选择历史版本:

选择 phoenix-hbase-2.4.0-5.1.2-bin.tar.gz

5.2 上传与解压

上传到服务器/home/hdfs下

解压 :

tar -zxvf phoenix-hbase-2.4.0-5.1.2-bin.tar.gz

mv phoenix-hbase-2.4.0-5.1.2-bin/ /opt

5.3 拷贝hbase配置文件hbase-site.xml到phoenix bin目录

cp /opt/hbase-2.4.0/conf/hbase-site.xml /opt/phoenix-hbase-2.4.0-5.1.2-bin/bin/

5.4 分发phoenix到ds2,ds3中

scp -r phoenix-hbase-2.4.0-5.1.2-bin ds2:/opt

scp -rphoenix-hbase-2.4.0-5.1.2-bin ds3:/opt

5.5 拷贝phoenix jar包到hbase lib目录下(所有节点)

cp /opt/phoenix-hbase-2.4.0-5.1.2-bin/phoenix-server-hbase-2.4.0-5.1.2.jar /opt/hbase-2.4.0/lib/

5.6 配置phoenix环境变量(所有节点)

vim ~/.profile

新增下面内容

export PHOENIX_HOME=/opt/phoenix-hbase-2.4.0-5.1.2-bin

export PATH=$PATH:$PHOENIX_HOME/bin

重刷环境变量

source ~/.profile

5.7 phoenix连接hbase

首先确保hbase正常启动

连接命令:

sqlline.py ds1,ds2,ds3:2181

6. 安装hive(ds1)

6.1 下载

地址: Apache Downloads

6.2上传与解压

上传到 /home/intellif中

解压 :

tar -zxvf apache-hive-3.1.3-bin.tar.gz -C /opt

修改文件权限:

chmod -R 755 apache-hive-3.1.3-bin

mv apache-hive-3.1.3-bin hive

mv hive /opt

6.3 修改配置文件

cd /opt/hive/conf

cp hive-env.sh.template hive-env.sh

cp hive-default.xml.template hive-site.xml

6.3.1 修改hive-env.sh配置

vim hive-env.sh

新增下面三行

export HADOOP_HOME=//opt/hadoop-3.2.4

export HIVE_CONF_DIR=/opt/hive/conf

export HIVE_AUX_JARS_PATH=/opt/hive/lib

6.3.2 修改hive-site.xml配置

vim hive-site.xml

将 …替换为下面的内容

<configuration>

<property>

<name>javax.jdo.option.ConnectionURLname>

<value>jdbc:mysql://192.168.12.253:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=Asia/Shanghai&allowMultiQueries=true&zeroDateTimeBehavior=CONVERT_TO_NULLvalue>

<description>mysql链接地址description>

property>

<property>

<name>javax.jdo.option.ConnectionDriverNamename>

<value>com.mysql.cj.jdbc.Drivervalue>

<description>mysql驱动description>

property>

<property>

<name>javax.jdo.option.ConnectionUserNamename>

<value>rootvalue>

<description>mysql用户名description>

property>

<property>

<name>javax.jdo.option.ConnectionPasswordname>

<value>introcks1234value>

<description>mysql密码description>

property>

<property>

<name>system:java.io.tmpdirname>

<value>/user/hive/tmpvalue>

<description>在hdfs创建的地址description>

property>

<property>

<name>system:user.namename>

<value>hdfsvalue>

<description>这个随便取得namedescription>

property>

<property>

<name>hive.cli.print.headername>

<value>truevalue>

<description>Whether to print the names of the columns in query output.description>

property>

<property>

<name>hive.cli.print.current.dbname>

<value>truevalue>

<description>Whether to include the current database in the Hive prompt.description>

property>

<property>

<name>hive.server2.thrift.bind.hostname>

<value>ds1value>

property>

<property>

<name>hive.server2.thrift.portname>

<value>10000value>

property>

<property>

<name>hive.exec.dynamic.partition.modename>

<value>nonstrictvalue>

property>

<property>

<name>hive.security.authorization.enabledname>

<value>truevalue>

<description>enableordisable the hive clientauthorizationdescription>

property>

<property>

<name>hive.security.authorization.createtable.owner.grantsname>

<value>ALLvalue>

<description>theprivileges automatically granted to theownerwhenever a table gets created. Anexample like "select,drop"willgrant select and drop privilege to theowner of thetabledescription>

property>

configuration>

6.4 创建hdfs目录

在hdfs中创建目录

hdfs dfs -mkdir -p /user

hdfs dfs -mkdir -p /user/hive

hdfs dfs -mkdir -p /user/hive/warehouse

hdfs dfs -mkdir -p /user/hive/tmp

hdfs dfs -mkdir -p /user/hive/log

hdfs dfs -chmod -R 777 /user

6.5 上传mysql驱动包,替换guava包

将jar包mysql-connector-java-8.0.28.jar上传到hive的lib目录下

删除hive中的guava-19.0.jar包将hadoop下的guava-27.0-jre.jar复制到hive lib下

rm -rf /opt/hive/lib/guava-19.0.jar

cp /opt/hadoop-3.2.4/share/hadoop/common/lib/guava-27.0-jre.jar /opt/hive/lib

6.6 配置hive环境变量

vim ~/.profile

新增下面内容

export HIVE_HOME=/opt/hive

export PATH=$PATH:$HIVE_HOME/bin

source ~/.profile

6.7 初始化hive元数据

schematool -dbType mysql -initSchema

当查询mysql中hive数据库有如下表。表示初始化成功

6.8 启动hive服务

后台启动

nohup hive --service metastore & (启动hive元数据服务)

nohup hive --service hiveserver2 & (启动jdbc连接服务)

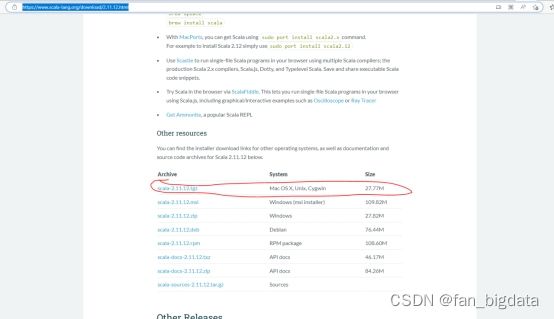

7. *安装scala*

7.1 下载

下载地址: Scala 2.11.12 | The Scala Programming Language (scala-lang.org)

7.2 上传与解压

分发到ds2、ds3中

scp scala-2.11.12.tgz ds2:/home/intellif/

scp scala-2.11.12.tgz ds3:/home/intellif/

解压:

sudo tar -zxf scala-2.11.12.tgz -C /usr/local

7.3 配置环境变量(所有节点)

vim ~/.profile

export SCALA_HOME=/usr/local/scala-2.11.12

export PATH=$PATH:$SCALA_HOME/bin

source ~/.profile

7.4 查看scala版本

scala -version

8. *安装spark*

8.1 下载

下载地址:Index of /dist/spark/spark-2.4.6 (apache.org)

8.2 上传与解压

tar -zxvf spark-2.4.6-bin-hadoop2.7.tgz -C /opt/

8.3 修改配置文件

8.3.1 修改slaves配置

cd /opt/spark-2.4.6-bin-hadoop2.7/conf

cp slaves.template slaves

vim slaves

将localhost改为

ds1

ds2

ds3

8.3.2 修改spark-env.sh配置

cp spark-env.sh.template spark-env.sh

vim spark-env.sh

新增下面内容

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export YARN_CONF_DIR=/opt/hadoop-3.2.4/etc/hadoop

8.3.3 复制hive-site.xml和hbase-site.xml到conf下

cp /opt/hive/conf/hive-site.xml /opt/spark-2.4.6-bin-hadoop2.7/conf

cp /opt/hbase-2.4.0/conf/hbase-site.xl /opt/spark-2.4.6-bin-hadoop2.7/conf

8.4 分发到ds2,ds3中

scp -r spark-2.4.6-bin-hadoop2.7/ ds2:/opt/

scp -r spark-2.4.6-bin-hadoop2.7/ ds3:/opt/

8.5 配置环境变量(所有节点)

vim ~/.profile

export SPARK_HOME=/opt/spark-2.4.6-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin

source ~/.profile

8.6 验证

spark-submit \

--class org.apache.spark.examples.SparkPi \

--master yarn \

--deploy-mode cluster \

/opt/spark-2.4.6-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.4.6.jar 10