在树莓派中写入科大讯飞语音转文字识别程序

在树莓派桌面里新建一个xunfei_zhuan.py文件,然后打开文件,然后使用默认软件编程

点击terminal,在里面使用以下命令安装cffi==1.12.3库

pip3 install cffi==1.12.3

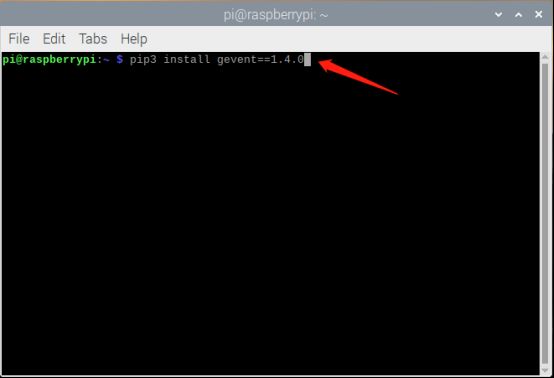

使用以下命令安装gevent==1.4.0库

pip3 install gevent==1.4.0

使用以下命令安装greenlet==0.4.15库

pip3 install greenlet==0.4.15

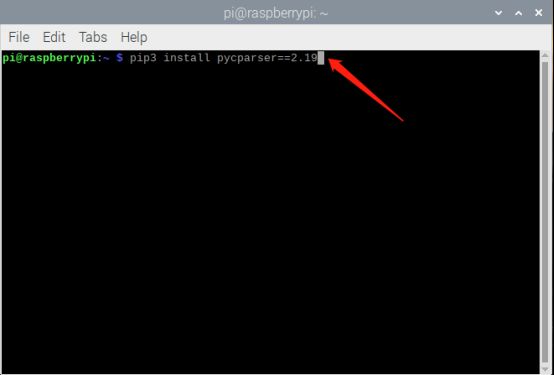

使用以下命令安装pycparser==2.19库

pip3 install pycparser==2.19

使用以下命令安装six==1.12.0库

pip3 install six==1.12.0

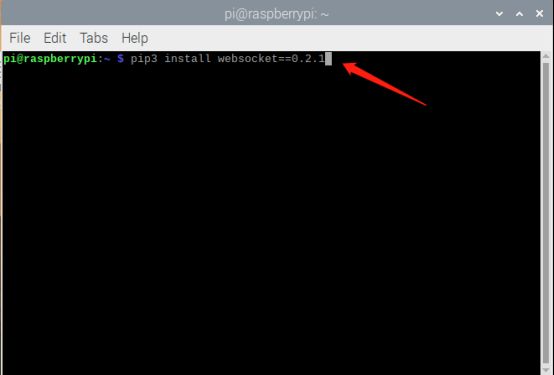

使用以下命令安装websocket==0.2.1库

pip3 install websocket==0.2.1

使用以下命令安装websocket-client==0.56.0库

pip3 install websocket-client==0.56.0

导入下面python库

import wave

import pyaudio

import websocket

import datetime

import hashlib

import base64

import hmac

import json

from urllib.parse import urlencode

import time

import ssl

from wsgiref.handlers import format_date_time

from datetime import datetime

from time import mktime

import _thread as thread

定义音频帧的标识

STATUS_FIRST_FRAME = 0 # 第一帧的标识

STATUS_CONTINUE_FRAME = 1 # 中间帧标识

STATUS_LAST_FRAME = 2 # 最后一帧的标识

定义一个类叫Ws_Param

class Ws_Param(object):

初始化这个类

def __init__(self, APPID, APIKey, APISecret, AudioFile):

self.APPID = APPID

self.APIKey = APIKey

self.APISecret = APISecret

self.AudioFile = AudioFile

# 公共参数(common)

self.CommonArgs = {"app_id": self.APPID}

# 业务参数(business),更多个性化参数可在官网查看

self.BusinessArgs = {"domain": "iat", "language": "zh_cn", "accent": "mandarin", "vinfo":1,"vad_eos":10000}

在类里面定义一个函数create_url,生成url

def create_url(self):

url = 'wss://ws-api.xfyun.cn/v2/iat'

# 生成RFC1123格式的时间戳

now = datetime.now()

date = format_date_time(mktime(now.timetuple()))

# 拼接字符串

signature_origin = "host: " + "ws-api.xfyun.cn" + "\n"

signature_origin += "date: " + date + "\n"

signature_origin += "GET " + "/v2/iat " + "HTTP/1.1"

# 进行hmac-sha256进行加密

signature_sha = hmac.new(self.APISecret.encode('utf-8'), signature_origin.encode('utf-8'),

digestmod=hashlib.sha256).digest()

signature_sha = base64.b64encode(signature_sha).decode(encoding='utf-8')

authorization_origin = "api_key=\"%s\", algorithm=\"%s\", headers=\"%s\", signature=\"%s\"" % (

self.APIKey, "hmac-sha256", "host date request-line", signature_sha)

authorization = base64.b64encode(authorization_origin.encode('utf-8')).decode(encoding='utf-8')

# 将请求的鉴权参数组合为字典

v = {

"authorization": authorization,

"date": date,

"host": "ws-api.xfyun.cn"

}

# 拼接鉴权参数,生成url

url = url + '?' + urlencode(v)

# print("date: ",date)

# print("v: ",v)

# 此处打印出建立连接时候的url,参考本demo的时候可取消上方打印的注释,比对相同参数时生成的url与自己代码生成的url是否一致

# print('websocket url :', url)

return url

定义一个函数on_message,收到websocket消息的处理

def on_message(ws, message):

try:

code = json.loads(message)["code"]

sid = json.loads(message)["sid"]

if code != 0:

errMsg = json.loads(message)["message"]

print("sid:%s call error:%s code is:%s" % (sid, errMsg, code))

else:

data = json.loads(message)["data"]["result"]["ws"]

# print(json.loads(message))

result = ""

for i in data:

for w in i["cw"]:

result += w["w"]

print(result)

# print(json.dumps(data, ensure_ascii=False))

except Exception as e:

print("receive msg,but parse exception:", e)

收到websocket错误的处理

def on_error(ws, error):

pass

收到websocket关闭的处理

def on_close(ws):

print("### closed ###")

定义一个函数on_open, 收到websocket连接建立的处理

def on_open(ws):

def run(*args):

frameSize = 8000 # 每一帧的音频大小

intervel = 0.04 # 发送音频间隔(单位:s)

status = STATUS_FIRST_FRAME # 音频的状态信息,标识音频是第一帧,还是中间帧、最后一帧

with open(wsParam.AudioFile, "rb") as fp:

while True:

buf = fp.read(frameSize)

# 文件结束

if not buf:

status = STATUS_LAST_FRAME

# 第一帧处理

# 发送第一帧音频,带business 参数

# appid 必须带上,只需第一帧发送

if status == STATUS_FIRST_FRAME:

d = {"common": wsParam.CommonArgs,

"business": wsParam.BusinessArgs,

"data": {"status": 0, "format": "audio/L16;rate=16000",

"audio": str(base64.b64encode(buf), 'utf-8'),

"encoding": "raw"}}

d = json.dumps(d)

ws.send(d)

status = STATUS_CONTINUE_FRAME

# 中间帧处理

elif status == STATUS_CONTINUE_FRAME:

d = {"data": {"status": 1, "format": "audio/L16;rate=16000",

"audio": str(base64.b64encode(buf), 'utf-8'),

"encoding": "raw"}}

ws.send(json.dumps(d))

# 最后一帧处理

elif status == STATUS_LAST_FRAME:

d = {"data": {"status": 2, "format": "audio/L16;rate=16000",

"audio": str(base64.b64encode(buf), 'utf-8'),

"encoding": "raw"}}

ws.send(json.dumps(d))

time.sleep(1)

break

# 模拟音频采样间隔

time.sleep(intervel)

ws.close()

thread.start_new_thread(run, ())

定义一个录音函数record,能够将实时说话的语音保存

def record(time): #录音程序

CHUNK = 1024

FORMAT = pyaudio.paInt16

CHANNELS = 1

RATE = 16000

RECORD_SECONDS =time

WAVE_OUTPUT_FILENAME = "./output.pcm"

p = pyaudio.PyAudio()

stream = p.open(format=FORMAT,

channels=CHANNELS,

rate=RATE,

input=True,

frames_per_buffer=CHUNK)

print("* recording")

frames = []

for i in range(0,int(RATE / CHUNK * RECORD_SECONDS)):

data = stream.read(CHUNK)

frames.append(data)

print("* done recording")

stream.stop_stream()

stream.close()

p.terminate()

wf = wave.open(WAVE_OUTPUT_FILENAME, 'wb')

wf.setnchannels(CHANNELS)

wf.setsampwidth(p.get_sample_size(FORMAT))

wf.setframerate(RATE)

wf.writeframes(b''.join(frames))

wf.close()

程序入口,开始执行程序

if __name__ == "__main__":

record(3)

time1 = datetime.now()

wsParam = Ws_Param(APPID='d69356bc', APIKey='c3f938a4da84f7449bd2f958d461e7e1',

APISecret='ZjE4ZGE4ZGU4YzViZmFhNTI0ZmYyNTE0',

AudioFile=r'./output.pcm')

websocket.enableTrace(False)

wsUrl = wsParam.create_url()

ws = websocket.WebSocketApp(wsUrl, on_message=on_message, on_error=on_error, on_close=on_close)

ws.on_open = on_open

ws.run_forever(sslopt={"cert_reqs": ssl.CERT_NONE})

time2 = datetime.now()

完整代码呈现

import wave

import pyaudio

import websocket

import datetime

import hashlib

import base64

import hmac

import json

from urllib.parse import urlencode

import time

import ssl

from wsgiref.handlers import format_date_time

from datetime import datetime

from time import mktime

import _thread as thread

STATUS_FIRST_FRAME = 0 # 第一帧的标识

STATUS_CONTINUE_FRAME = 1 # 中间帧标识

STATUS_LAST_FRAME = 2 # 最后一帧的标识

class Ws_Param(object):

# 初始化

def __init__(self, APPID, APIKey, APISecret, AudioFile):

self.APPID = APPID

self.APIKey = APIKey

self.APISecret = APISecret

self.AudioFile = AudioFile

# 公共参数(common)

self.CommonArgs = {"app_id": self.APPID}

# 业务参数(business),更多个性化参数可在官网查看

self.BusinessArgs = {"domain": "iat", "language": "zh_cn", "accent": "mandarin", "vinfo":1,"vad_eos":10000}

# 生成url

def create_url(self):

url = 'wss://ws-api.xfyun.cn/v2/iat'

# 生成RFC1123格式的时间戳

now = datetime.now()

date = format_date_time(mktime(now.timetuple()))

# 拼接字符串

signature_origin = "host: " + "ws-api.xfyun.cn" + "\n"

signature_origin += "date: " + date + "\n"

signature_origin += "GET " + "/v2/iat " + "HTTP/1.1"

# 进行hmac-sha256进行加密

signature_sha = hmac.new(self.APISecret.encode('utf-8'), signature_origin.encode('utf-8'),

digestmod=hashlib.sha256).digest()

signature_sha = base64.b64encode(signature_sha).decode(encoding='utf-8')

authorization_origin = "api_key=\"%s\", algorithm=\"%s\", headers=\"%s\", signature=\"%s\"" % (

self.APIKey, "hmac-sha256", "host date request-line", signature_sha)

authorization = base64.b64encode(authorization_origin.encode('utf-8')).decode(encoding='utf-8')

# 将请求的鉴权参数组合为字典

v = {

"authorization": authorization,

"date": date,

"host": "ws-api.xfyun.cn"

}

# 拼接鉴权参数,生成url

url = url + '?' + urlencode(v)

# print("date: ",date)

# print("v: ",v)

# 此处打印出建立连接时候的url,参考本demo的时候可取消上方打印的注释,比对相同参数时生成的url与自己代码生成的url是否一致

# print('websocket url :', url)

return url

# 收到websocket消息的处理

def on_message(ws, message):

try:

code = json.loads(message)["code"]

sid = json.loads(message)["sid"]

if code != 0:

errMsg = json.loads(message)["message"]

print("sid:%s call error:%s code is:%s" % (sid, errMsg, code))

else:

data = json.loads(message)["data"]["result"]["ws"]

# print(json.loads(message))

result = ""

for i in data:

for w in i["cw"]:

result += w["w"]

print(result)

# print(json.dumps(data, ensure_ascii=False))

except Exception as e:

print("receive msg,but parse exception:", e)

def on_error(ws, error):

pass

def on_close(ws):

print("### closed ###")

def on_open(ws):

def run(*args):

frameSize = 8000 # 每一帧的音频大小

intervel = 0.04 # 发送音频间隔(单位:s)

status = STATUS_FIRST_FRAME

with open(wsParam.AudioFile, "rb") as fp:

while True:

buf = fp.read(frameSize)

# 文件结束

if not buf:

status = STATUS_LAST_FRAME

# 第一帧处理

# 发送第一帧音频,带business 参数

# appid 必须带上,只需第一帧发送

if status == STATUS_FIRST_FRAME:

d = {"common": wsParam.CommonArgs,

"business": wsParam.BusinessArgs,

"data": {"status": 0, "format": "audio/L16;rate=16000",

"audio": str(base64.b64encode(buf), 'utf-8'),

"encoding": "raw"}}

d = json.dumps(d)

ws.send(d)

status = STATUS_CONTINUE_FRAME

# 中间帧处理

elif status == STATUS_CONTINUE_FRAME:

d = {"data": {"status": 1, "format": "audio/L16;rate=16000",

"audio": str(base64.b64encode(buf), 'utf-8'),

"encoding": "raw"}}

ws.send(json.dumps(d))

# 最后一帧处理

elif status == STATUS_LAST_FRAME:

d = {"data": {"status": 2, "format": "audio/L16;rate=16000",

"audio": str(base64.b64encode(buf), 'utf-8'),

"encoding": "raw"}}

ws.send(json.dumps(d))

time.sleep(1)

break

# 模拟音频采样间隔

time.sleep(intervel)

ws.close()

thread.start_new_thread(run, ())

def record(time): #录音程序

CHUNK = 1024

FORMAT = pyaudio.paInt16

CHANNELS = 1

RATE = 16000

RECORD_SECONDS =time

WAVE_OUTPUT_FILENAME = "./output.pcm"

p = pyaudio.PyAudio()

stream = p.open(format=FORMAT,

channels=CHANNELS,

rate=RATE,

input=True,

frames_per_buffer=CHUNK)

print("* recording")

frames = []

for i in range(0,int(RATE / CHUNK * RECORD_SECONDS)):

data = stream.read(CHUNK)

frames.append(data)

print("* done recording")

stream.stop_stream()

stream.close()

p.terminate()

wf = wave.open(WAVE_OUTPUT_FILENAME, 'wb')

wf.setnchannels(CHANNELS)

wf.setsampwidth(p.get_sample_size(FORMAT))

wf.setframerate(RATE)

wf.writeframes(b''.join(frames))

wf.close()

if __name__ == "__main__":

record(3)

time1 = datetime.now()

wsParam = Ws_Param(APPID='d69356bc', APIKey='c3f938a4da84f7449bd2f958d461e7e1',

APISecret='ZjE4ZGE4ZGU4YzViZmFhNTI0ZmYyNTE0',

AudioFile=r'./output.pcm')

websocket.enableTrace(False)

wsUrl = wsParam.create_url()

ws = websocket.WebSocketApp(wsUrl, on_message=on_message, on_error=on_error, on_close=on_close)

ws.on_open = on_open

ws.run_forever(sslopt={"cert_reqs": ssl.CERT_NONE})

time2 = datetime.now()

然后使用命令进入桌面路径

cd /home/pi/Desktop

最后使用以下命令运行

python3 xunfei_zhuan.py

最后运行通之后,可以实时讲话,同时程序会将你说的话转成文字输出出来。