[Big Data] Hadoop Is Calling for Hive (with a One-Click Hive Deployment Script)

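I. Preparation

1. Download the Hive package

Hive download page

This article deploys apache-hive-3.1.2-bin.tar.gz; pick a different version if your environment requires it.

Hive documentation

2. Download the MySQL RPM packages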

01_mysql-community-common-5.7.29-1.el7.x86_64.rpm

02_mysql-community-libs-5.7.29-1.el7.x86_64.rpm

03_mysql-community-libs-compat-5.7.29-1.el7.x86_64.rpm

04_mysql-community-client-5.7.29-1.el7.x86_64.rpm

05_mysql-community-server-5.7.29-1.el7.x86_64.rpm

mysql-connector-java-5.1.48.jar

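3. Download the Tez package (the Hive execution engine)

tez-0.10.1-SNAPSHOT.tar.gz

4. Put the shell script in /usr/bin

Make the script executable:

cd /usr/bin

chmod +x hive-install.sh

5. Start the Hadoop cluster

Run the command:

one 10

To get the installation packages, follow the WeChat official account 【纯码农(purecodefarmer)】 and send "hive" to receive the download links.

For one-click deployment of a Hadoop cluster, see "[Big Data] Setting Up a Hadoop Cluster (with a One-Click Deployment Script)".

Note: put the downloaded packages in the /opt/software/hive directory on the Hadoop cluster host.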
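Every later step assumes the files listed above are already in place, so it can help to check for them before running anything. The following is a minimal sketch, not part of hive-install.sh; check_packages is a hypothetical helper, and the file list simply repeats the names given in this section.

```shell
#!/bin/sh
# Hypothetical helper: print every required file that is missing from the
# directory passed as $1 and return non-zero if at least one is missing.
check_packages() {
    dir=$1
    missing=0
    for f in \
        apache-hive-3.1.2-bin.tar.gz \
        01_mysql-community-common-5.7.29-1.el7.x86_64.rpm \
        02_mysql-community-libs-5.7.29-1.el7.x86_64.rpm \
        03_mysql-community-libs-compat-5.7.29-1.el7.x86_64.rpm \
        04_mysql-community-client-5.7.29-1.el7.x86_64.rpm \
        05_mysql-community-server-5.7.29-1.el7.x86_64.rpm \
        mysql-connector-java-5.1.48.jar \
        tez-0.10.1-SNAPSHOT.tar.gz
    do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}
```

A typical call would be `check_packages /opt/software/hive || exit 1` at the top of the deployment script.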

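II. Setting up Hive (script walkthrough)

1. Install MySQL

Before installing the MySQL packages, remove the mysql-libs or MariaDB packages that ship with the system.

Check whether any are installed:

rpm -qa | grep -i -E 'mysql|mariadb'

1. Uninstall the existing MySQL

Script content: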

echo "-------Removing the existing MySQL-------"

systemctl stop mysqld

service mysql stop 2>/dev/null

service mysqld stop 2>/dev/null

rpm -qa | grep -i mysql | xargs -n1 rpm -e --nodeps 2>/dev/null

rpm -qa | grep -i mariadb | xargs -n1 rpm -e --nodeps 2>/dev/null

rm -rf /var/lib/mysql

rm -rf /usr/lib64/mysql

rm -rf /etc/my.cnf

rm -rf /usr/my.cnf

rm -rf /var/log/mysqld.log

Run the script (option 1 runs this uninstall step and then the install step described next):

sh hive-install.sh 1

2. Install the MySQL packages

Script content:

cd /opt/software/hive

echo "-------Installing the MySQL dependencies-------"

rpm -ivh 01_mysql-community-common-5.7.29-1.el7.x86_64.rpm

rpm -ivh 02_mysql-community-libs-5.7.29-1.el7.x86_64.rpm

rpm -ivh 03_mysql-community-libs-compat-5.7.29-1.el7.x86_64.rpm

echo "-------Installing mysql-client-------"

rpm -ivh 04_mysql-community-client-5.7.29-1.el7.x86_64.rpm

echo "-------Installing mysql-server-------"

rpm -ivh 05_mysql-community-server-5.7.29-1.el7.x86_64.rpm

echo "-------Starting MySQL-------"

systemctl start mysqld

# On first start MySQL writes a temporary root password to its log

pass=$(grep 'temporary password' /var/log/mysqld.log)

pass1=${pass#*localhost: }

echo "-------MySQL initial password-------" "$pass1"

echo "-------Logging in to MySQL-------"

mysql -uroot -p"$pass1"

Run the script:

sh hive-install.sh 1

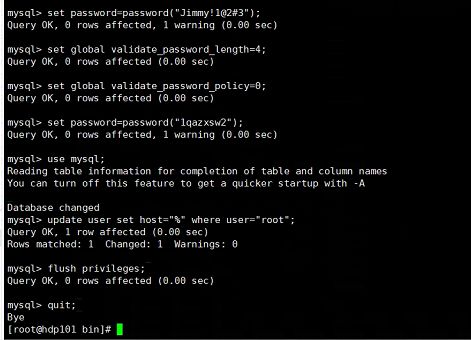
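The password extraction above can be isolated into a small function, which also makes it easy to try against a sample log. A minimal sketch, assuming the MySQL 5.7 log line ends with "root@localhost: " followed by the password; extract_temp_password is a hypothetical name, and taking the last match is my own choice for the case where the log contains several such lines.

```shell
#!/bin/sh
# Hypothetical helper: print the most recent temporary root password
# recorded in the MySQL log file given as $1.
extract_temp_password() {
    grep 'temporary password' "$1" | tail -n 1 | sed 's/.*root@localhost: //'
}
```

In the script it would be used as `pass1=$(extract_temp_password /var/log/mysqld.log)`.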

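3. Change the default MySQL password

# Set a complex password first; the default password policy rejects simple ones

mysql> set password=password("Jimmy!1@2#3");

# Relax the password policy

mysql> set global validate_password_length=4;

mysql> set global validate_password_policy=0;

# Set a simple password

mysql> set password=password("1qazxsw2");

# Switch to the mysql database and change Host so that root can connect from any host

mysql> use mysql;

mysql> update user set host="%" where user="root";

# Flush privileges and quit

mysql> flush privileges;

mysql> quit;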
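The interactive session above could also be scripted. The sketch below only prints the SQL in the same order as the manual steps; it swaps `set password=password(...)` for `ALTER USER`, which MySQL 5.7 also accepts. password_reset_sql is a hypothetical helper, and it assumes neither password contains a single quote.

```shell
#!/bin/sh
# Hypothetical helper: print the password-reset statements.
#   $1 = interim password that satisfies the default policy
#   $2 = final, simpler password
password_reset_sql() {
    strong=$1
    final=$2
    cat <<EOF
ALTER USER 'root'@'localhost' IDENTIFIED BY '$strong';
SET GLOBAL validate_password_length=4;
SET GLOBAL validate_password_policy=0;
ALTER USER 'root'@'localhost' IDENTIFIED BY '$final';
UPDATE mysql.user SET host='%' WHERE user='root';
FLUSH PRIVILEGES;
EOF
}
```

One way to apply it would be `password_reset_sql 'Jimmy!1@2#3' '1qazxsw2' | mysql --connect-expired-password -uroot -p"$pass1"`, where `$pass1` is the temporary password read from the log. Treat this as untested against your own server and try it on a throwaway instance first.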

2. Install Hive

With MySQL installed, install the Hive package.

1. Install Hive and configure the environment variables

Script content:

echo "-------Extracting the Hive package-------"

tar -zxvf /opt/software/hive/apache-hive-3.1.2-bin.tar.gz -C /opt/module/

echo "-------Renaming the extracted Hive directory-------"

mv /opt/module/apache-hive-3.1.2-bin/ /opt/module/hive312

echo "-------Configuring the Hive environment variables-------"

echo "" >> /etc/profile.d/my_env.sh

echo "#Hive environment" >> /etc/profile.d/my_env.sh

echo "export HIVE_HOME=/opt/module/hive312" >> /etc/profile.d/my_env.sh

echo "export PATH=\$PATH:\${HIVE_HOME}/bin" >> /etc/profile.d/my_env.sh

echo "-------Resolving the logging JAR conflict-------"

mv /opt/module/hive312/lib/log4j-slf4j-impl-2.10.0.jar /opt/module/hive312/lib/log4j-slf4j-impl-2.10.0.bak

echo "-------Copying the MySQL driver into Hive-------"

cp /opt/software/hive/mysql-connector-java-5.1.48.jar /opt/module/hive312/lib

Run the script:

sh hive-install.sh 2

Note: the new environment variables only take effect in a fresh login shell, so log in again (or run source /etc/profile) before continuing.
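Because the step above appends to /etc/profile.d/my_env.sh with `>>`, running it a second time writes the same export lines again. A minimal sketch of one way to avoid that; append_once is a hypothetical helper, not part of hive-install.sh.

```shell
#!/bin/sh
# Hypothetical helper: append line $1 to file $2 only if the file does not
# already contain exactly that line.
append_once() {
    line=$1
    file=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_once 'export HIVE_HOME=/opt/module/hive312' /etc/profile.d/my_env.sh` can be repeated safely.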

2. Configure the Metastore to use MySQL

Script content:

echo "-------Configuring the Hive Metastore-------"

HIVE_HOME=/opt/module/hive312

cd $HIVE_HOME/conf/

rm -rf hive-site.xml

sudo touch hive-site.xml

echo '<?xml version="1.0"?>' >> $HIVE_HOME/conf/hive-site.xml

echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>' >> $HIVE_HOME/conf/hive-site.xml

echo '<configuration>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionURL</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>jdbc:mysql://hdp101:3306/metastore?useSSL=false</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionDriverName</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>com.mysql.jdbc.Driver</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionUserName</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>root</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionPassword</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>1qazxsw2</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.warehouse.dir</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>/user/hive/warehouse</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.schema.verification</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>false</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.uris</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>thrift://hdp101:9083</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.server2.thrift.port</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>10000</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.server2.thrift.bind.host</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>hdp101</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.event.db.notification.api.auth</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>false</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

# Switch the Hive execution engine to Tez

echo "-------Switching the Hive execution engine-------"

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.execution.engine</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>tez</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.tez.container.size</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>1024</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '</configuration>' >> $HIVE_HOME/conf/hive-site.xml

Run the script:

sh hive-install.sh 3

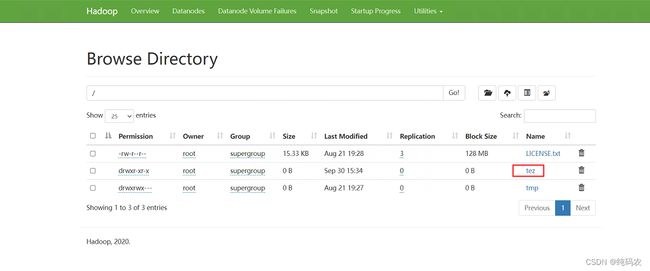
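Every setting in hive-site.xml is a property element holding a name and a value, so the long run of echo lines could be produced by a small generator instead. A minimal sketch, assuming names and values contain no XML special characters (&, <, >); write_property and generate_site are hypothetical helpers, not part of hive-install.sh.

```shell
#!/bin/sh
# Hypothetical helper: print one <property> element for name $1 and value $2.
write_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical helper: print a complete site file from name/value argument pairs.
generate_site() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    while [ "$#" -ge 2 ]; do
        write_property "$1" "$2"
        shift 2
    done
    printf '</configuration>\n'
}
```

A call such as `generate_site hive.execution.engine tez hive.tez.container.size 1024 > "$HIVE_HOME/conf/hive-site.xml"` would write a two-property file; the remaining pairs from the script can be appended to the argument list in the same way.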

3. Install and configure Tez

Note: start the Hadoop cluster before installing Tez.

Script content:

echo "-------Installing Tez-------"

mkdir -p /opt/module/tez

tar -zxvf /opt/software/hive/tez-0.10.1-SNAPSHOT.tar.gz -C /opt/module/tez

echo "-------Uploading the Tez package to HDFS-------"

hadoop fs -mkdir -p /tez

hadoop fs -put /opt/software/hive/tez-0.10.1-SNAPSHOT.tar.gz /tez

echo "-------Writing tez-site.xml-------"

cd $HADOOP_HOME/etc/hadoop

rm -rf tez-site.xml

sudo touch tez-site.xml

echo '<?xml version="1.0"?>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<configuration>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.lib.uris</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>${fs.defaultFS}/tez/tez-0.10.1-SNAPSHOT.tar.gz</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.use.cluster.hadoop-libs</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>true</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.am.resource.memory.mb</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1024</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.am.resource.cpu.vcores</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.container.max.java.heap.fraction</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>0.4</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.task.resource.memory.mb</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1024</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.task.resource.cpu.vcores</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</configuration>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo "-------Updating the Hadoop environment variables-------"

echo "export TEZ_CONF_DIR=\$HADOOP_HOME/etc/hadoop" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

echo "export TEZ_JARS=/opt/module/tez" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

echo "export HADOOP_CLASSPATH=\$HADOOP_CLASSPATH:\${TEZ_CONF_DIR}:\${TEZ_JARS}/*:\${TEZ_JARS}/lib/*" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

echo "-------Resolving the JAR conflict: removing the Tez logging JAR-------"

rm /opt/module/tez/lib/slf4j-log4j12-1.7.10.jar

Run the script:

sh hive-install.sh 4
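The memory settings above interact: as I understand the Tez option, tez.container.max.java.heap.fraction is the share of a container's memory that is given to the JVM heap, so a 1024 MB container with a fraction of 0.4 leaves roughly 409 MB of heap. The arithmetic can be sketched as follows; heap_mb is a hypothetical helper, and the interpretation of the setting is my assumption rather than something this article verifies.

```shell
#!/bin/sh
# Hypothetical helper: approximate JVM heap in MB for a container of $1 MB
# and a heap fraction of $2 (the result is truncated to a whole number).
heap_mb() {
    awk -v size="$1" -v frac="$2" 'BEGIN { printf "%d\n", size * frac }'
}

heap_mb 1024 0.4   # prints 409
```

If tasks run out of heap with these values, raising the container size or the fraction are the two knobs to try.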

4. Start and stop Hive

1. Create the Hive metastore database

# Log in to MySQL

mysql -uroot -p1qazxsw2

# Create the Hive metastore database; the name metastore is the one configured in hive-site.xml

mysql> create database metastore;

# Quit

mysql> quit;

2. Initialize the metastore schema

cd /opt/module/hive312/bin/

schematool -initSchema -dbType mysql -verbose

3. Start Hive

Script content:

echo "-------Starting Hive-------"

HIVE_LOG_DIR=$HIVE_HOME/logs

mkdir -p $HIVE_LOG_DIR

# check_process is defined in the full script in section III

metapid=$(check_process HiveMetastore 9083)

cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"

cmd=$cmd" sleep 4; hdfs dfsadmin -safemode wait >/dev/null 2>&1"

[ -z "$metapid" ] && eval "$cmd" || echo "-------Metastore service is already running-------"

server2pid=$(check_process HiveServer2 10000)

cmd="nohup hive --service hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"

[ -z "$server2pid" ] && eval "$cmd" || echo "-------HiveServer2 service is already running-------"

Run the script:

sh hive-install.sh 5

4. Stop Hive

Script content:

echo "-------Stopping Hive-------"

metapid=$(check_process HiveMetastore 9083)

[ "$metapid" ] && kill $metapid || echo "-------Metastore service is already stopped-------"

server2pid=$(check_process HiveServer2 10000)

[ "$server2pid" ] && kill $server2pid || echo "-------HiveServer2 service is already stopped-------"

Run the script:

sh hive-install.sh 6

5. Restart Hive

Run the script:

sh hive-install.sh 7
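The start and stop steps rely on check_process, which finds the listener with a plain `grep` on the port number. That pattern also matches longer numbers that contain it (for instance 19083 when looking for 9083). A minimal sketch of a stricter lookup; listener_pid is a hypothetical helper that assumes the usual `netstat -nltp` column layout, with the local address in column 4, the state in column 6, and PID/program in column 7.

```shell
#!/bin/sh
# Hypothetical helper: read `netstat -nltp` output on stdin and print the PID
# of the process listening on exactly port $1.
listener_pid() {
    awk -v port="$1" '
        $6 == "LISTEN" {
            n = split($4, addr, ":")      # local address, e.g. 0.0.0.0:9083 or :::9083
            if (addr[n] == port) {
                split($7, proc, "/")      # PID/program, e.g. 12345/java
                print proc[1]
            }
        }'
}
```

It would be used as `netstat -nltp 2>/dev/null | listener_pid 9083`.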

6. Change the Hive log path

cd /opt/module/hive312/conf

mv hive-log4j2.properties.template hive-log4j2.properties

vim hive-log4j2.properties

# Set the following property, then restart Hive

property.hive.log.dir = /opt/module/hive312/logs
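If you would rather not open an editor, the same change can be scripted. A minimal sketch; set_property is a hypothetical helper, and it assumes the value contains no `|` or `&` characters (both are special in the sed replacement used here). Dots in the key are treated as regex wildcards, which is harmless for this file but worth knowing.

```shell
#!/bin/sh
# Hypothetical helper: set "key = value" in properties file $1, replacing an
# existing line for key $2 or appending a new one. $3 is the value.
set_property() {
    file=$1
    key=$2
    value=$3
    if grep -q "^$key[[:space:]]*=" "$file"; then
        # Rewrite through a temporary file instead of relying on sed -i
        sed "s|^$key[[:space:]]*=.*|$key = $value|" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}
```

For the step above the call would be `set_property /opt/module/hive312/conf/hive-log4j2.properties property.hive.log.dir /opt/module/hive312/logs`.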

III. One-click Hive deployment script

Script content:

#!/bin/bash

# Uninstall the existing MySQL

uninstall_mysql() {

echo "-------Removing the existing MySQL-------"

systemctl stop mysqld

service mysql stop 2>/dev/null

service mysqld stop 2>/dev/null

rpm -qa | grep -i mysql | xargs -n1 rpm -e --nodeps 2>/dev/null

rpm -qa | grep -i mariadb | xargs -n1 rpm -e --nodeps 2>/dev/null

rm -rf /var/lib/mysql

rm -rf /usr/lib64/mysql

rm -rf /etc/my.cnf

rm -rf /usr/my.cnf

rm -rf /var/log/mysqld.log

}

# Install the MySQL packages

install_mysql() {

cd /opt/software/hive

echo "-------Installing the MySQL dependencies-------"

rpm -ivh 01_mysql-community-common-5.7.29-1.el7.x86_64.rpm

rpm -ivh 02_mysql-community-libs-5.7.29-1.el7.x86_64.rpm

rpm -ivh 03_mysql-community-libs-compat-5.7.29-1.el7.x86_64.rpm

echo "-------Installing mysql-client-------"

rpm -ivh 04_mysql-community-client-5.7.29-1.el7.x86_64.rpm

echo "-------Installing mysql-server-------"

rpm -ivh 05_mysql-community-server-5.7.29-1.el7.x86_64.rpm

echo "-------Starting MySQL-------"

systemctl start mysqld

# On first start MySQL writes a temporary root password to its log

password=$(grep 'temporary password' /var/log/mysqld.log)

password1=${password#*localhost: }

echo "-------MySQL initial password-------" "$password1"

echo "-------Logging in to MySQL-------"

mysql -uroot -p"$password1"

}

# Install Hive

install_hive() {

echo "-------Extracting the Hive package-------"

tar -zxvf /opt/software/hive/apache-hive-3.1.2-bin.tar.gz -C /opt/module/

echo "-------Renaming the extracted Hive directory-------"

mv /opt/module/apache-hive-3.1.2-bin/ /opt/module/hive312

echo "-------Configuring the Hive environment variables-------"

echo "" >> /etc/profile.d/my_env.sh

echo "#Hive environment" >> /etc/profile.d/my_env.sh

echo "export HIVE_HOME=/opt/module/hive312" >> /etc/profile.d/my_env.sh

echo "export PATH=\$PATH:\${HIVE_HOME}/bin" >> /etc/profile.d/my_env.sh

echo "-------Resolving the logging JAR conflict-------"

mv /opt/module/hive312/lib/log4j-slf4j-impl-2.10.0.jar /opt/module/hive312/lib/log4j-slf4j-impl-2.10.0.bak

echo "-------Copying the MySQL driver into Hive-------"

cp /opt/software/hive/mysql-connector-java-5.1.48.jar /opt/module/hive312/lib

}

# Configure the Hive Metastore

hive_config() {

echo "-------Configuring the Hive Metastore-------"

HIVE_HOME=/opt/module/hive312

cd $HIVE_HOME/conf/

rm -rf hive-site.xml

sudo touch hive-site.xml

echo '<?xml version="1.0"?>' >> $HIVE_HOME/conf/hive-site.xml

echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>' >> $HIVE_HOME/conf/hive-site.xml

echo '<configuration>' >> $HIVE_HOME/conf/hive-site.xml

# The Hive metastore database is named metastore

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionURL</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>jdbc:mysql://hdp101:3306/metastore?useSSL=false</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionDriverName</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>com.mysql.jdbc.Driver</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionUserName</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>root</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>javax.jdo.option.ConnectionPassword</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>1qazxsw2</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.warehouse.dir</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>/user/hive/warehouse</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.schema.verification</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>false</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.uris</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>thrift://hdp101:9083</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.server2.thrift.port</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>10000</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.server2.thrift.bind.host</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>hdp101</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.metastore.event.db.notification.api.auth</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>false</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

# Switch the Hive execution engine to Tez

echo "-------Switching the Hive execution engine-------"

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.execution.engine</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>tez</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<property>' >> $HIVE_HOME/conf/hive-site.xml

echo '<name>hive.tez.container.size</name>' >> $HIVE_HOME/conf/hive-site.xml

echo '<value>1024</value>' >> $HIVE_HOME/conf/hive-site.xml

echo '</property>' >> $HIVE_HOME/conf/hive-site.xml

echo '</configuration>' >> $HIVE_HOME/conf/hive-site.xml

}

# Install and configure Tez

install_tez() {

echo "-------Installing Tez-------"

mkdir -p /opt/module/tez

tar -zxvf /opt/software/hive/tez-0.10.1-SNAPSHOT.tar.gz -C /opt/module/tez

echo "-------Uploading the Tez package to HDFS-------"

hadoop fs -mkdir -p /tez

hadoop fs -put /opt/software/hive/tez-0.10.1-SNAPSHOT.tar.gz /tez

echo "-------Writing tez-site.xml-------"

cd $HADOOP_HOME/etc/hadoop

rm -rf tez-site.xml

sudo touch tez-site.xml

echo '<?xml version="1.0"?>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<configuration>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.lib.uris</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>${fs.defaultFS}/tez/tez-0.10.1-SNAPSHOT.tar.gz</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.use.cluster.hadoop-libs</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>true</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.am.resource.memory.mb</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1024</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.am.resource.cpu.vcores</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.container.max.java.heap.fraction</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>0.4</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.task.resource.memory.mb</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1024</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<name>tez.task.resource.cpu.vcores</name>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '<value>1</value>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</property>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo '</configuration>' >> $HADOOP_HOME/etc/hadoop/tez-site.xml

echo "-------Updating the Hadoop environment variables-------"

echo "export TEZ_CONF_DIR=\$HADOOP_HOME/etc/hadoop" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

echo "export TEZ_JARS=/opt/module/tez" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

echo "export HADOOP_CLASSPATH=\$HADOOP_CLASSPATH:\${TEZ_CONF_DIR}:\${TEZ_JARS}/*:\${TEZ_JARS}/lib/*" >> $HADOOP_HOME/etc/hadoop/hadoop-env.sh

echo "-------Resolving the JAR conflict: removing the Tez logging JAR-------"

rm -rf /opt/module/tez/lib/slf4j-log4j12-1.7.10.jar

}

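# Check whether a process is running: $1 is the process name, $2 is its port

function check_process()

{

# PIDs of the processes whose command line matches the name

pid=$(ps -ef 2>/dev/null | grep -v grep | grep -i "$1" | awk '{print $2}')

# PID of the process listening on the port

ppid=$(netstat -nltp 2>/dev/null | grep "$2" | awk '{print $7}' | cut -d '/' -f 1)

echo $pid

# Succeed only if a listener exists and its PID is among the matches

[[ "$pid" =~ "$ppid" ]] && [ "$ppid" ] && return 0 || return 1

}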

# Start Hive

hive_start() {

echo "-------Starting Hive-------"

HIVE_LOG_DIR=$HIVE_HOME/logs

mkdir -p $HIVE_LOG_DIR

metapid=$(check_process HiveMetastore 9083)

cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"

cmd=$cmd" sleep 4; hdfs dfsadmin -safemode wait >/dev/null 2>&1"

[ -z "$metapid" ] && eval "$cmd" || echo "-------Metastore service is already running-------"

server2pid=$(check_process HiveServer2 10000)

cmd="nohup hive --service hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"

[ -z "$server2pid" ] && eval "$cmd" || echo "-------HiveServer2 service is already running-------"

}

# Stop Hive

hive_stop() {

echo "-------Stopping Hive-------"

metapid=$(check_process HiveMetastore 9083)

[ "$metapid" ] && kill $metapid || echo "-------Metastore service is already stopped-------"

server2pid=$(check_process HiveServer2 10000)

[ "$server2pid" ] && kill $server2pid || echo "-------HiveServer2 service is already stopped-------"

}

# Run the step selected by the user

custom_option() {

case $1 in

1)

uninstall_mysql

install_mysql

;;

2)

install_hive

;;

3)

hive_config

;;

4)

install_tez

;;

5)

hive_start

;;

6)

hive_stop

;;

7)

hive_stop

sleep 2

hive_start

;;

*)

echo "Please choose an option from 1 to 7"

;;

esac

}

# $1 is the option chosen by the user

custom_option "$1"

Run the script:

# Available options

1: install MySQL

2: install Hive

3: configure Hive

4: install the Tez engine

5: start Hive

6: stop Hive

7: restart Hive

sh hive-install.sh [1~7]