ELK Advanced Search (Part 3)

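Table of Contents

- 11. Getting Started with Indexes

- 11.1 Index Management

- 11.2 Custom Analyzers

- 11.3 Underlying Structure of type

- 11.4 Customizing Dynamic Mapping

- 11.5 Zero-Downtime Reindexing

- 12. Chinese Analyzer: IK Analyzer

- 12.1 Installing and Using the IK Analyzer

- 12.2 IK Configuration Files

- 12.3 Hot Reloading from MySQL

- 13. Index Management with the Java API

- 14. Getting Started with Search

- 14.1 Search Syntax Basics

- 14.2 Multi-Index Search

- 14.3 Paginated Search

- 14.4 Query String Basic Syntax

- 14.5 Query DSL Basics

- 14.6 Full-Text Search

- 14.7 DSL Syntax Exercises

- 14.8 Filter

- 14.9 Locating Syntax Errors

- 14.10 Custom Sort Rules

- 14.11 Sorting on text Fields

- 14.12 Batch Retrieval with Scroll

- 15. Search with the Java API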

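11. Getting Started with Indexes

11.1 Index Management

If you PUT a document directly (PUT index/_doc/1), ES creates the index automatically and builds its mapping through dynamic mapping.

In production, you should create indexes and mappings explicitly so that they can be managed properly. This is much like writing the CREATE TABLE statements for a database.

11.1.1 Creating an Index

Syntax for creating an index: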

PUT /index

{

"settings": { ... any settings ... },

"mappings": {

"properties" : {

"field1" : { "type" : "text" }

}

},

"aliases": {

"default_index": {}

}

}

Example:

PUT /my_index

{

"settings": {

"number_of_shards": 1,

"number_of_replicas": 1

},

"mappings": {

"properties": {

"field1":{

"type": "text"

},

"field2":{

"type": "text"

}

}

},

"aliases": {

"default_index": {}

}

}

The aliases section above also creates the alias default_index for my_index.

Insert data:

POST /my_index/_doc/1

{

"field1":"java",

"field2":"js"

}

Query the document. It can be retrieved through both the index name and the alias:

GET /my_index/_doc/1

GET /default_index/_doc/1

11.1.2 Viewing an Index

GET /my_index/_mapping

GET /my_index/_settings

11.1.3 Modifying an Index

Change the number of replicas:

PUT /my_index/_settings

{

"index" : {

"number_of_replicas" : 2

}

}

11.1.4 Deleting an Index

DELETE /my_index

DELETE /index_one,index_two

DELETE /index_*

DELETE /_all

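For safety, and to prevent indexes from being deleted maliciously (for example through wildcards or _all), require an explicit index name for every delete: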

elasticsearch.yml

action.destructive_requires_name: true

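11.2 Custom Analyzers

11.2.1 The Default Analyzer

standard

An analyzer has three kinds of components: character filters, a tokenizer, and token filters. The standard analyzer is made up of:

standard tokenizer: splits text on word boundaries

standard token filter: does nothing

lowercase token filter: converts all letters to lowercase

stop token filter (disabled by default): removes stop words such as a, the, it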

11.2.2 Modifying Analyzer Settings

Enable the English stop-word token filter:

PUT /my_index

{

"settings": {

"analysis": {

"analyzer": {

"es_std": {

"type": "standard",

"stopwords": "_english_"

}

}

}

}

}

Test the analysis:

GET /my_index/_analyze

{

"analyzer": "standard",

"text": "a dog is in the house"

}

GET /my_index/_analyze

{

"analyzer": "es_std",

"text":"a dog is in the house"

}

11.2.3 Building Your Own Analyzer

PUT /my_index

{

"settings": {

"analysis": {

"char_filter": {

"&_to_and": {

"type": "mapping",

"mappings": ["&=> and"]

}

},

"filter": {

"my_stopwords": {

"type": "stop",

"stopwords": ["the", "a"]

}

},

"analyzer": {

"my_analyzer": {

"type": "custom",

"char_filter": ["html_strip", "&_to_and"],

"tokenizer": "standard",

"filter": ["lowercase", "my_stopwords"]

}

}

}

}

}

Test:

GET /my_index/_analyze

{

"analyzer": "my_analyzer",

"text": "tom&jerry are a friend in the house, <a>, HAHA!!"

}
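The sketch below shows the order in which my_analyzer applies its parts: character filters first, then the tokenizer, then the token filters. It is plain Java and only approximates the real thing. The regex tag removal stands in for html_strip, and the split on non-letter/non-digit characters stands in for the standard tokenizer. Neither is Lucene's actual implementation, so the _analyze output from ES may differ in edge cases.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;

public class AnalyzerPipelineSketch {
    // Char filter stand-in for html_strip: replace anything that looks like a tag with a space
    static String stripHtml(String text) {
        return text.replaceAll("<[^>]*>", " ");
    }

    // Char filter stand-in for the "&_to_and" mapping rule "&=> and"
    static String ampersandToAnd(String text) {
        return text.replace("&", "and");
    }

    // Tokenizer stand-in: split on runs of characters that are neither letters nor digits
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : text.split("[^\\p{L}\\p{Nd}]+")) {
            if (!t.isEmpty()) {
                tokens.add(t);
            }
        }
        return tokens;
    }

    // char filters -> tokenizer -> token filters (lowercase, then stop words)
    static List<String> analyze(String text, Set<String> stopwords) {
        String filtered = ampersandToAnd(stripHtml(text));
        List<String> out = new ArrayList<>();
        for (String token : tokenize(filtered)) {
            String lower = token.toLowerCase(Locale.ROOT);
            if (!stopwords.contains(lower)) {
                out.add(lower);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // prints [tomandjerry, are, friend, in, house, haha]
        System.out.println(analyze("tom&jerry are a friend in the house, <a>, HAHA!!", Set.of("the", "a")));
    }
}
```

Because the mapping char filter runs before tokenization, "tom&jerry" becomes the single token "tomandjerry" in this sketch, not three separate tokens.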

Configure a field to use the custom analyzer:

PUT /my_index/_mapping/

{

"properties": {

"content": {

"type": "text",

"analyzer": "my_analyzer"

}

}

}

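11.3 Underlying Structure of type

11.3.1 What Is a type

A type was used within an index to separate similar data: documents that are alike but may have different fields, and different properties that control indexing and analysis.

When Lucene indexes field values at the lowest level, everything is stored as opaque bytes. Lucene does not distinguish data types.

Lucene has no concept of type. ES stores the type as a field of each document, _type, and filters by type through _type.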

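11.3.2 How Different types Are Stored in ES

All types in one index are actually stored together. For this reason, fields with the same name in different types of one index cannot have different data types or other settings, because ES has no way to handle that. For example: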

{

"goods": {

"mappings": {

"electronic_goods": {

"properties": {

"name": {

"type": "string"

},

"price": {

"type": "double"

},

"service_period": {

"type": "string"

}

}

},

"fresh_goods": {

"properties": {

"name": {

"type": "string"

},

"price": {

"type": "double"

},

"eat_period": {

"type": "string"

}

}

}

}

}

}

PUT /goods/electronic_goods/1

{

"name": "小米空调",

"price": 1999.0,

"service_period": "one year"

}

PUT /goods/fresh_goods/1

{

"name": "澳洲龙虾",

"price": 199.0,

"eat_period": "one week"

}

The merged mapping as it is stored at the lower level, with the fields of both types side by side:

{

"goods": {

"mappings": {

"_type": {

"type": "text",

"index": "false"

},

"name": {

"type": "text"

},

"price": {

"type": "double"

},

"service_period": {

"type": "text"

},

"eat_period": {

"type": "text"

}

}

}

}

The documents as they are stored at the lower level:

{

"_type": "electronic_goods",

"name": "小米空调",

"price": 1999.0,

"service_period": "one year",

"eat_period": ""

}

{

"_type": "fresh_goods",

"name": "澳洲龙虾",

"price": 199.0,

"service_period": "",

"eat_period": "one week"

}

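11.3.3 Deprecation of type

Within one index, the documents of each type carry empty values for the fields that belong to the other types. These large numbers of empty values waste resources.

Therefore, different kinds of data should go into different indexes.

Types were deprecated in ES 7.0 and removed completely in ES 8.0.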
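To put a number on the waste described above, the sketch below pads every document to the union of all fields, which is what a shared mapping implies, and counts the empty slots. It uses the two goods documents from 11.3.2. It is only a counting illustration of the idea, not a model of how Lucene actually lays out data on disk.

```java
import java.util.List;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeSet;

public class MergedTypeStorageSketch {
    // Union of field names over all documents, i.e. the merged mapping of every type in the index
    static SortedSet<String> mergedFields(List<Map<String, Object>> docs) {
        SortedSet<String> fields = new TreeSet<>();
        for (Map<String, Object> doc : docs) {
            fields.addAll(doc.keySet());
        }
        return fields;
    }

    // Number of (document, field) slots left empty when every document is stored against the merged field set
    static int emptySlots(List<Map<String, Object>> docs) {
        SortedSet<String> fields = mergedFields(docs);
        int empty = 0;
        for (Map<String, Object> doc : docs) {
            for (String field : fields) {
                if (!doc.containsKey(field)) {
                    empty++;
                }
            }
        }
        return empty;
    }

    public static void main(String[] args) {
        Map<String, Object> electronic = Map.of("_type", "electronic_goods", "name", "小米空调",
                "price", 1999.0, "service_period", "one year");
        Map<String, Object> fresh = Map.of("_type", "fresh_goods", "name", "澳洲龙虾",
                "price", 199.0, "eat_period", "one week");
        List<Map<String, Object>> docs = List.of(electronic, fresh);
        System.out.println(mergedFields(docs)); // prints [_type, eat_period, name, price, service_period]
        System.out.println(emptySlots(docs));   // prints 2
    }
}
```

With only two types the waste is small. It grows with every type-specific field multiplied by the number of documents of the other types.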

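11.4 Customizing Dynamic Mapping

11.4.1 Customizing the dynamic Policy

- true: when an unknown field is encountered, map it dynamically

- false: newly detected fields are ignored. They are not indexed and therefore cannot be searched, but they still appear in the _source of the returned hits. They are not added to the mapping; new fields must be added explicitly

- strict: when an unknown field is encountered, throw an error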
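The three policies can be summarized as a decision per unknown field. The plain-Java model below returns the mapping's field set after a document is indexed. It is a hypothetical simplification: it looks only at top-level fields and ignores object fields that set their own "dynamic" value, such as address in the example that follows.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

public class DynamicPolicySketch {
    enum Dynamic { TRUE, FALSE, STRICT }

    // Returns the mapped field names after indexing doc; throws the way strict mode rejects a document
    static Set<String> index(Set<String> mappedFields, Map<String, Object> doc, Dynamic policy) {
        Set<String> mapping = new TreeSet<>(mappedFields);
        for (String field : doc.keySet()) {
            if (mapping.contains(field)) {
                continue;
            }
            switch (policy) {
                case TRUE:
                    mapping.add(field);   // new field is mapped, indexed and searchable
                    break;
                case FALSE:
                    break;                // kept only in _source; mapping unchanged, not searchable
                case STRICT:
                    throw new IllegalArgumentException(
                            "mapping set to strict, dynamic introduction of [" + field + "] is not allowed");
            }
        }
        return mapping;
    }

    public static void main(String[] args) {
        Set<String> mapped = Set.of("title", "address");
        Map<String, Object> doc = Map.of("title", "my article", "content", "this is my article");
        System.out.println(index(mapped, doc, Dynamic.TRUE));   // prints [address, content, title]
        System.out.println(index(mapped, doc, Dynamic.FALSE));  // prints [address, title]
        try {
            index(mapped, doc, Dynamic.STRICT);
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage());
        }
    }
}
```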

Create the mapping:

PUT /my_index

{

"mappings": {

"dynamic": "strict",

"properties": {

"title": {

"type": "text"

},

"address": {

"type": "object",

"dynamic": "true"

}

}

}

}

Insert data:

PUT /my_index/_doc/1

{

"title": "my article",

"content": "this is my article",

"address": {

"province": "guangdong",

"city": "guangzhou"

}

}

Error:

{

"error": {

"root_cause": [

{

"type": "strict_dynamic_mapping_exception",

"reason": "mapping set to strict, dynamic introduction of [content] within [_doc] is not allowed"

}

],

"type": "strict_dynamic_mapping_exception",

"reason": "mapping set to strict, dynamic introduction of [content] within [_doc] is not allowed"

},

"status": 400

}

11.4.2 自定义dynamic mapping策略

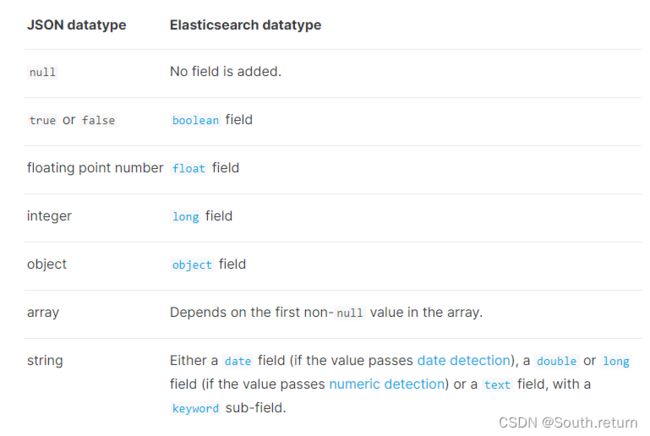

es会根据传入的值,推断类型。

date_detection 日期探测

默认会按照一定格式识别date,比如yyyy-MM-dd。但是如果某个field先过来一个2017-01-01的值,就会被自动dynamic mapping成date,后面如果再来一个"hello world"之类的值,就会报错。可以手动关闭某个type的date_detection,如果有需要,自己手动指定某个field为date类型。

PUT /my_index

{

"mappings": {

"date_detection": false,

"properties": {

"title": {

"type": "text"

},

"address": {

"type": "object",

"dynamic": "true"

}

}

}

}

Test:

PUT /my_index/_doc/1

{

"title": "my article",

"content": "this is my article",

"address": {

"province": "guangdong",

"city": "guangzhou"

},

"post_date":"2019-09-10"

}

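View the mapping. Because date_detection is off, post_date is mapped as text (with a keyword sub-field) rather than date: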

GET /my_index/_mapping

Custom date formats:

PUT my_index

{

"mappings": {

"dynamic_date_formats": ["MM/dd/yyyy"]

}

}

Insert data:

PUT my_index/_doc/1

{

"create_date": "09/25/2019"

}

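numeric_detection: numeric detection

Although JSON supports native floating-point and integer data types, some applications or languages may render numbers as strings. The right solution is usually to map these fields explicitly, but numeric detection (disabled by default) can be enabled to do this automatically.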

PUT my_index

{

"mappings": {

"numeric_detection": true

}

}

PUT my_index/_doc/1

{

"my_float": "1.0",

"my_integer": "1"

}
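The sketch below shows how date_detection, dynamic_date_formats and numeric_detection interact for a string value. It is a rough model under assumptions. The patterns are passed in explicitly; ES's real default formats are different and are not reproduced here. Checking dates before numbers is also an assumption of the sketch.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.util.List;

public class DynamicTypeInferenceSketch {
    // Classify a JSON string value the way dynamic mapping might (simplified model)
    static String inferStringType(String value, boolean dateDetection, List<String> dateFormats,
                                  boolean numericDetection) {
        if (dateDetection) {
            for (String pattern : dateFormats) {
                try {
                    LocalDate.parse(value, DateTimeFormatter.ofPattern(pattern));
                    return "date";
                } catch (DateTimeParseException ignored) {
                    // not this format; try the next one
                }
            }
        }
        if (numericDetection) {
            try {
                Long.parseLong(value);
                return "long";
            } catch (NumberFormatException ignored) {
                // not an integer
            }
            try {
                Double.parseDouble(value);
                return "float";
            } catch (NumberFormatException ignored) {
                // not a number at all
            }
        }
        return "text"; // ES would map this as text with a keyword sub-field
    }

    public static void main(String[] args) {
        List<String> formats = List.of("yyyy-MM-dd"); // assumption: a single simplified format
        System.out.println(inferStringType("2019-09-10", true, formats, false));                 // date
        System.out.println(inferStringType("2019-09-10", false, formats, false));                // text
        System.out.println(inferStringType("09/25/2019", true, List.of("MM/dd/yyyy"), false));   // date
        System.out.println(inferStringType("1.0", true, formats, true));                         // float
        System.out.println(inferStringType("1", true, formats, true));                           // long
    }
}
```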

11.4.3 Defining Your Own Dynamic Templates

PUT /my_index

{

"mappings": {

"dynamic_templates": [

{

"en": {

"match": "*_en",

"match_mapping_type": "string",

"mapping": {

"type": "text",

"analyzer": "english"

}

}

}

]

}

}

Insert data:

PUT /my_index/_doc/1

{

"title": "this is my first article"

}

PUT /my_index/_doc/2

{

"title_en": "this is my first article"

}

Search:

GET my_index/_search?q=first

GET my_index/_search?q=is

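title does not match any dynamic template, so it uses the default standard analyzer, which does not remove stop words. "is" therefore goes into the inverted index, and searching for is finds the document.

title_en matches the dynamic template, so it uses the english analyzer, which removes stop words. "is" is filtered out, so searching for is does not find the document through title_en.

Another template example: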

PUT my_index

{

"mappings": {

"dynamic_templates": [

{

"integers": {

"match_mapping_type": "long",

"mapping": {

"type": "integer"

}

}

},

{

"strings": {

"match_mapping_type": "string",

"mapping": {

"type": "text",

"fields": {

"raw": {

"type": "keyword",

"ignore_above": 256

}

}

}

}

}

]

}

}

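Template parameters (a list of the available keys, not one valid template; with "match_pattern": "regex", match is treated as a regular expression):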

"match": "long_*",

"unmatch": "*_text",

"match_mapping_type": "string",

"path_match": "name.*",

"path_unmatch": "*.middle",

"match_pattern": "regex",

"match": "^profit_\\d+$"
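The sketch below shows how match, unmatch and match_pattern decide whether a template applies to a field name. It is a hypothetical stand-in: "*" wildcards are turned into regular expressions, path_match/path_unmatch and match_mapping_type are not modeled, and ES's own matching code is not reproduced.

```java
import java.util.regex.Pattern;

public class TemplateMatchSketch {
    // "*" matches any run of characters; everything else must match literally; the whole name must match
    static boolean wildcardMatches(String pattern, String name) {
        StringBuilder regex = new StringBuilder();
        for (char c : pattern.toCharArray()) {
            regex.append(c == '*' ? ".*" : Pattern.quote(String.valueOf(c)));
        }
        return name.matches(regex.toString());
    }

    static boolean patternMatches(String pattern, String name, boolean regexMode) {
        return regexMode ? Pattern.matches(pattern, name) : wildcardMatches(pattern, name);
    }

    // A template applies if "match" accepts the field name and "unmatch" (when present) does not
    static boolean applies(String match, String unmatch, boolean regexMode, String fieldName) {
        if (!patternMatches(match, fieldName, regexMode)) {
            return false;
        }
        return unmatch == null || !patternMatches(unmatch, fieldName, regexMode);
    }

    public static void main(String[] args) {
        System.out.println(applies("*_en", null, false, "title_en"));          // true
        System.out.println(applies("*_en", null, false, "title"));             // false
        System.out.println(applies("long_*", "*_text", false, "long_text"));   // false
        System.out.println(applies("^profit_\\d+$", null, true, "profit_2019")); // true
    }
}
```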

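Use cases

1 Structured search

By default, Elasticsearch maps string fields as text fields with a keyword sub-field. However, if you only index structured content and are not interested in full-text search, you can map the fields only as keyword. Note that this means that to search those fields, you must search for exactly the same value that was indexed.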

{

"strings_as_keywords": {

"match_mapping_type": "string",

"mapping": {

"type": "keyword"

}

}

}

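2 Search only

In contrast to the previous example, if you only care about full-text search on string fields and do not plan to run aggregations, sorting, or exact searches on them, you can tell Elasticsearch to map them only as text fields (this was the default behavior before 5.0):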

{

"strings_as_text": {

"match_mapping_type": "string",

"mapping": {

"type": "text"

}

}

}

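3 norms: when scoring does not matter

norms are index-time scoring factors. If you do not care about scoring, for example if you never sort documents by score, you can disable the storage of these scoring factors in the index and save some space: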

{

"strings_as_keywords": {

"match_mapping_type": "string",

"mapping": {

"type": "text",

"norms": false,

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

}

}

}

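11.5 Zero-Downtime Reindexing

11.5.1 Zero-Downtime Reindexing

Scenario:

The settings of an existing field cannot be modified. To change a field, create a new index with the new mapping, query the data out in batches, and write it into the new index with the bulk API.

The scroll API is recommended for the batch queries, and the reindexing can run in several threads concurrently: each scroll fetches the data for one date range and hands it to one thread.

(1) At first, data is inserted relying on dynamic mapping. Some values happen to be dates such as 2019-09-10, so the title field is automatically mapped as date when it should really be a string.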

PUT /my_index/_doc/1

{

"title": "2019-09-10"

}

PUT /my_index/_doc/2

{

"title": "2019-09-11"

}

(2) Later, indexing a string value for title fails:

PUT /my_index/_doc/3

{

"title": "my first article"

}

Error:

{

"error": {

"root_cause": [

{

"type": "mapper_parsing_exception",

"reason": "failed to parse [title]"

}

],

"type": "mapper_parsing_exception",

"reason": "failed to parse [title]",

"caused_by": {

"type": "illegal_argument_exception",

"reason": "Invalid format: \"my first article\""

}

},

"status": 400

}

(3) At this point, changing the type of title is impossible:

PUT /my_index/_mapping

{

"properties": {

"title": {

"type": "text"

}

}

}

Error:

{

"error": {

"root_cause": [

{

"type": "illegal_argument_exception",

"reason": "mapper [title] of different type, current_type [date], merged_type [text]"

}

],

"type": "illegal_argument_exception",

"reason": "mapper [title] of different type, current_type [date], merged_type [text]"

},

"status": 400

}

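(4) The only option is to reindex: create a new index, query the data out of the old index, and import it into the new one.

(5) Suppose the old index is old_index, the new one is new_index, and a client Java application already works against old_index. Do we have to stop the Java application, switch it to new_index, and restart it? That means downtime and lower availability for the application.

(6) Instead, give the Java application an alias that points to the old index. The application operates through the prod_index alias, which for now actually points to the old my_index:

PUT /my_index/_alias/prod_index

(7) Create a new index in which title is mapped as text (a string type):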

PUT /my_index_new

{

"mappings": {

"properties": {

"title": {

"type": "text"

}

}

}

}

(8) Use the scroll API to query the data in batches:

GET /my_index/_search?scroll=1m

{

"query": {

"match_all": {}

},

"size": 1

}

Response:

{

"_scroll_id": "DnF1ZXJ5VGhlbkZldGNoBQAAAAAAADpAFjRvbnNUWVZaVGpHdklqOV9zcFd6MncAAAAAAAA6QRY0b25zVFlWWlRqR3ZJajlfc3BXejJ3AAAAAAAAOkIWNG9uc1RZVlpUakd2SWo5X3NwV3oydwAAAAAAADpDFjRvbnNUWVZaVGpHdklqOV9zcFd6MncAAAAAAAA6RBY0b25zVFlWWlRqR3ZJajlfc3BXejJ3",

"took": 1,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"failed": 0

},

"hits": {

"total": 3,

"max_score": null,

"hits": [

{

"_index": "my_index",

"_type": "my_type",

"_id": "1",

"_score": null,

"_source": {

"title": "2019-01-02"

},

"sort": [

0

]

}

]

}

}

(9) Use the bulk API to write each batch returned by scroll into the new index:

POST /_bulk

{ "index": { "_index": "my_index_new", "_id": "1" }}

{ "title": "2019-09-10" }

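(10) Repeat steps 8 and 9, fetching batch after batch and writing each one into the new index with the bulk API. Each following batch is fetched with POST /_search/scroll, passing the scroll timeout and the _scroll_id returned by the previous call.

(11) Switch the prod_index alias to my_index_new. The Java application now uses the data in the new index directly through the alias, without downtime, so it stays highly available: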

POST /_aliases

{

"actions": [

{ "remove": { "index": "my_index", "alias": "prod_index" }},

{ "add": { "index": "my_index_new", "alias": "prod_index" }}

]

}

(12) Query through the prod_index alias directly to verify that everything works:

GET /prod_index/_search
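The procedure above comes down to two mechanisms: copy the data in pages (scroll + bulk), then repoint the alias in one step. The in-memory sketch below models both with plain maps. The maps are hypothetical stand-ins for indexes and the alias table, not ES client calls. Clients that read only through the alias never need to learn the new index name.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ZeroDowntimeReindexSketch {
    // Copy all documents from source into target in batches of batchSize, like a
    // scroll (read one page) + bulk (write that page) loop; returns the number of batches
    static int reindex(Map<String, String> source, Map<String, String> target, int batchSize) {
        List<Map.Entry<String, String>> docs = new ArrayList<>(source.entrySet());
        int batches = 0;
        for (int from = 0; from < docs.size(); from += batchSize) {
            List<Map.Entry<String, String>> page = docs.subList(from, Math.min(from + batchSize, docs.size()));
            for (Map.Entry<String, String> doc : page) {
                target.put(doc.getKey(), doc.getValue());
            }
            batches++;
        }
        return batches;
    }

    // Repoint the alias in a single step, mirroring POST /_aliases with a remove + add pair
    static void switchAlias(Map<String, String> aliases, String alias, String fromIndex, String toIndex) {
        if (!fromIndex.equals(aliases.get(alias))) {
            throw new IllegalStateException(alias + " does not point to " + fromIndex);
        }
        aliases.put(alias, toIndex);
    }

    public static void main(String[] args) {
        Map<String, Map<String, String>> indices = new HashMap<>();
        indices.put("my_index", new TreeMap<>(Map.of("1", "2019-09-10", "2", "2019-09-11")));
        indices.put("my_index_new", new TreeMap<>());
        Map<String, String> aliases = new HashMap<>(Map.of("prod_index", "my_index"));

        int batches = reindex(indices.get("my_index"), indices.get("my_index_new"), 1);
        switchAlias(aliases, "prod_index", "my_index", "my_index_new");

        // The client keeps using prod_index and now reads the new index
        // prints 2 batches, prod_index -> my_index_new, docs = {1=2019-09-10, 2=2019-09-11}
        System.out.println(batches + " batches, prod_index -> " + aliases.get("prod_index")
                + ", docs = " + indices.get(aliases.get("prod_index")));
    }
}
```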

11.5.2 Production Practice: Switching Indexes Transparently for Clients with an Alias

PUT /my_index_v1/_alias/my_index

The client operates on my_index.

After reindexing finishes, switch the alias from v1 to v2:

POST /_aliases

{

"actions": [

{ "remove": { "index": "my_index_v1", "alias": "my_index" }},

{ "add": { "index": "my_index_v2", "alias": "my_index" }}

]

}

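12. Chinese Analyzer: IK Analyzer

12.1 Installing and Using the IK Analyzer

12.1.1 Chinese Analyzers

The standard analyzer is only suitable for English. For Chinese text it produces one token per character: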

GET /_analyze

{

"analyzer": "standard",

"text": "中华人民共和国人民大会堂"

}

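The desired result: 中华人民共和国 (People's Republic of China) and 人民大会堂 (Great Hall of the People).

The IK analyzer is currently the most popular Chinese analyzer for ES.

12.1.2 Installation

Project page: https://github.com/medcl/elasticsearch-analysis-ik

Releases: https://github.com/medcl/elasticsearch-analysis-ik/releases

Download the package whose version matches your ES version.

Unzip it into es/plugins/ik.

Restart ES.

12.1.3 IK Analyzer Basics

ik_max_word: splits text at the finest granularity and exhausts all possible combinations. For example, "中华人民共和国人民大会堂" is split into "中华人民共和国, 中华人民, 中华, 华人, 人民共和国, 人民大会堂, 人民大会, 大会堂".

ik_smart: splits text at the coarsest granularity. For example, "中华人民共和国人民大会堂" is split into "中华人民共和国, 人民大会堂".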
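The difference between the two modes can be illustrated with a toy dictionary. The sketch below is not IK's actual algorithm. "maxWord" emits every dictionary word found at every position, and "smart" uses classic forward maximum matching. The dictionary holds only the words from the example above; IK's main.dic is far larger.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

public class SegmentationSketch {
    // Toy dictionary: only the words from the example
    static final Set<String> DICT = Set.of("中华人民共和国", "中华人民", "中华", "华人",
            "人民共和国", "人民大会堂", "人民大会", "大会堂");
    static final int MAX_WORD_LEN = 7;

    // max_word-style: at every start position, emit every dictionary word, longest first
    static List<String> maxWord(String text) {
        List<String> out = new ArrayList<>();
        for (int start = 0; start < text.length(); start++) {
            for (int end = Math.min(text.length(), start + MAX_WORD_LEN); end > start; end--) {
                String candidate = text.substring(start, end);
                if (DICT.contains(candidate)) {
                    out.add(candidate);
                }
            }
        }
        return out;
    }

    // smart-style: forward maximum matching; take the longest word and continue after it,
    // falling back to a single character when nothing matches
    static List<String> smart(String text) {
        List<String> out = new ArrayList<>();
        int start = 0;
        while (start < text.length()) {
            int matchedEnd = start + 1;
            for (int end = Math.min(text.length(), start + MAX_WORD_LEN); end > start; end--) {
                if (DICT.contains(text.substring(start, end))) {
                    matchedEnd = end;
                    break;
                }
            }
            out.add(text.substring(start, matchedEnd));
            start = matchedEnd;
        }
        return out;
    }

    public static void main(String[] args) {
        String text = "中华人民共和国人民大会堂";
        System.out.println(maxWord(text)); // prints [中华人民共和国, 中华人民, 中华, 华人, 人民共和国, 人民大会堂, 人民大会, 大会堂]
        System.out.println(smart(text));   // prints [中华人民共和国, 人民大会堂]
    }
}
```

The fine-grained output yields more terms to match at index time. The coarse output keeps the query terms precise, which is why the next section pairs ik_max_word for indexing with ik_smart for searching.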

12.1.4 Using the IK Analyzer

Use ik_max_word at index time and ik_smart at search time:

PUT /my_index

{

"mappings": {

"properties": {

"text": {

"type": "text",

"analyzer": "ik_max_word",

"search_analyzer": "ik_smart"

}

}

}

}

Search:

GET /my_index/_search?q=中华人民共和国人民大会堂

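12.2 IK Configuration Files

12.2.1 IK Configuration Files

The IK configuration files are in the es/plugins/ik/config directory:

- IKAnalyzer.cfg.xml: configures custom dictionaries

- main.dic: IK's built-in Chinese dictionary, with more than 270,000 entries; each of these words is kept together as one token

- preposition.dic: prepositions

- quantifier.dic: units and measure words (quantifiers)

- suffix.dic: suffixes

- surname.dic: Chinese surnames

- stopword.dic: English stop words

The two most important built-in files:

- main.dic: the built-in Chinese words; text is segmented according to these words

- stopword.dic: English stop words

Stop words

Stop words such as a, the, and, at, but are dropped during analysis and never enter the inverted index.

12.2.2 Custom Dictionaries

(1) Build your own dictionary: new buzzwords appear every year, such as 网红, 蓝瘦香菇, 喊麦, 鬼畜, and they are usually not in IK's built-in dictionary.

- Add your own new words to IK's dictionaries

- In IKAnalyzer.cfg.xml, register the file under ext_dict, for example a newly created mydict.dic

- After adding the words, restart ES for them to take effect

(2) Build your own stop-word dictionary: words such as 了, 的, 啥, 么 may not be worth indexing or letting users search for.

- custom/ext_stopword.dic already contains common Chinese stop words; add your own, then restart ES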
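IKAnalyzer.cfg.xml uses the java.util.Properties XML format, so its entries can be read with Properties.loadFromXML. The sketch below parses a hypothetical config that registers mydict.dic under ext_dict and ext_stopword.dic under ext_stopwords. Splitting multiple files on ";" is an assumption based on how the default config lists them; check the file shipped with your IK version.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

public class IkConfigSketch {
    // Hypothetical IKAnalyzer.cfg.xml content
    static final String CONFIG =
            "<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n"
            + "<!DOCTYPE properties SYSTEM \"http://java.sun.com/dtd/properties.dtd\">\n"
            + "<properties>\n"
            + "  <entry key=\"ext_dict\">custom/mydict.dic</entry>\n"
            + "  <entry key=\"ext_stopwords\">custom/ext_stopword.dic</entry>\n"
            + "</properties>\n";

    // Return the dictionary files listed under one key (empty list if the key is absent)
    static List<String> dictionaryFiles(String xml, String key) {
        Properties props = new Properties();
        try {
            props.loadFromXML(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> files = new ArrayList<>();
        String value = props.getProperty(key);
        if (value != null) {
            for (String f : value.split(";")) {
                if (!f.trim().isEmpty()) {
                    files.add(f.trim());
                }
            }
        }
        return files;
    }

    public static void main(String[] args) {
        System.out.println(dictionaryFiles(CONFIG, "ext_dict"));
        System.out.println(dictionaryFiles(CONFIG, "ext_stopwords"));
    }
}
```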

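12.3 Hot Reloading from MySQL

12.3.1 Hot Reloading

Adding new words to the ES extension dictionaries by hand every time is painful:

(1) ES must be restarted after every addition before the words take effect, which is very cumbersome.

(2) ES is distributed and may have hundreds of nodes; you cannot edit the files node by node every time.

The goal is to add new words somewhere outside ES, without stopping it, and have ES hot-load them immediately.

Hot-reload approaches:

(1) IK's built-in hot-reload support: deploy a web server that exposes an HTTP endpoint serving the words, and signal updates through the Last-Modified and ETag HTTP response headers.

(2) Modify the IK source code so that it automatically loads new words from MySQL at a fixed interval.

We use the second approach. The IK community on GitHub does not recommend the first one, because it is considered not stable enough.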
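At its core, approach (2) is a background thread that periodically re-reads the word list and swaps it in. The sketch below models that pattern with a ScheduledExecutorService and an AtomicReference. The Supplier is a hypothetical stand-in for the MySQL query; the sketch does not use IK's Dictionary class or any JDBC code.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

public class HotReloadSketch {
    // Dictionary used by the analyzer; replaced as a whole on every reload
    private final AtomicReference<Set<String>> words = new AtomicReference<>(Set.of());
    // Hypothetical word source standing in for a periodic "SELECT word FROM ..." against MySQL
    private final Supplier<Set<String>> source;

    HotReloadSketch(Supplier<Set<String>> source) {
        this.source = source;
    }

    // One reload cycle: fetch the full word list and publish an immutable copy atomically
    void reload() {
        words.set(Set.copyOf(source.get()));
    }

    boolean contains(String word) {
        return words.get().contains(word);
    }

    // Run reload() at a fixed interval on a daemon thread
    ScheduledExecutorService start(long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "hot-dict-reload");
            t.setDaemon(true);
            return t;
        });
        scheduler.scheduleAtFixedRate(() -> {
            try {
                reload();
            } catch (RuntimeException e) {
                // keep the previous dictionary; an uncaught exception would cancel all future runs
            }
        }, 0, periodMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }

    public static void main(String[] args) throws InterruptedException {
        Set<String> table = ConcurrentHashMap.newKeySet();   // pretend this is the MySQL table
        HotReloadSketch dict = new HotReloadSketch(() -> new HashSet<>(table));
        ScheduledExecutorService scheduler = dict.start(100);
        table.add("传智播客");                                  // a new word is inserted
        Thread.sleep(300);                                    // give at least one reload a chance to run
        System.out.println(dict.contains("传智播客"));
        scheduler.shutdownNow();
    }
}
```

Swapping in a whole new set means readers never see a half-loaded dictionary. The try/catch keeps one failed database round-trip from stopping all future reloads.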

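12.3.2 Steps

1. Download the source code

https://github.com/medcl/elasticsearch-analysis-ik/releases

The IK analyzer is a standard Java Maven project; import it into Eclipse to browse the source.

2. Modify the source code

- org.wltea.analyzer.dic.Dictionary: in the initialization method of the Dictionary singleton (around line 160), create our custom thread and start it

- org.wltea.analyzer.dic.HotDictReloadThread (our own class): an infinite loop that keeps calling Dictionary.getSingleton().reLoadMainDict() to reload the dictionary

- Dictionary, line 399: this.loadMySQLExtDict(); loads the dictionary from MySQL

- Dictionary, line 609: this.loadMySQLStopwordDict(); loads the stop words from MySQL

- jdbc-reload.properties under config: the MySQL configuration file

3. Package the code with mvn package, which produces

target\releases\elasticsearch-analysis-ik-7.3.0.zip

4. Unzip the IK package

Put the MySQL driver jar into the IK directory.

5. Edit the JDBC configuration

6. Restart ES

Watch the log. It shows what we print, such as which configuration, words, and stop words were loaded.

7. Add dictionary words and stop words in MySQL

8. Run an analysis test to verify that hot reloading works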

GET /_analyze

{

"analyzer": "ik_smart",

"text": "传智播客"

}

13. Index Management with the Java API

package com.itheima.es;

import org.elasticsearch.action.ActionListener;

import org.elasticsearch.action.admin.indices.alias.Alias;

import org.elasticsearch.action.admin.indices.close.CloseIndexRequest;

import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest;

import org.elasticsearch.action.admin.indices.open.OpenIndexRequest;

import org.elasticsearch.action.admin.indices.open.OpenIndexResponse;

import org.elasticsearch.action.support.ActiveShardCount;

import org.elasticsearch.action.support.master.AcknowledgedResponse;

import org.elasticsearch.client.IndicesClient;

import org.elasticsearch.client.RequestOptions;

import org.elasticsearch.client.RestHighLevelClient;

import org.elasticsearch.client.indices.CreateIndexRequest;

import org.elasticsearch.client.indices.CreateIndexResponse;

import org.elasticsearch.client.indices.GetIndexRequest;

import org.elasticsearch.common.settings.Settings;

import org.elasticsearch.common.unit.TimeValue;

import org.elasticsearch.common.xcontent.XContentType;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import java.io.IOException;

/**
 * @author Administrator
 * @version 1.0
 */

@SpringBootTest

@RunWith(SpringRunner.class)

public class TestIndex {

@Autowired

RestHighLevelClient client;

// @Autowired

// RestClient restClient;


// Create an index

@Test

public void testCreateIndex() throws IOException {

// Build the create-index request

CreateIndexRequest createIndexRequest = new CreateIndexRequest("itheima_book");

// Index settings

createIndexRequest.settings(Settings.builder().put("number_of_shards", "1").put("number_of_replicas", "0"));

// Mapping, option 1: a JSON string

createIndexRequest.mapping(" {\n" +

" \t\"properties\": {\n" +

" \"name\":{\n" +

" \"type\":\"keyword\"\n" +

" },\n" +

" \"description\": {\n" +

" \"type\": \"text\"\n" +

" },\n" +

" \"price\":{\n" +

" \"type\":\"long\"\n" +

" },\n" +

" \"pic\":{\n" +

" \"type\":\"text\",\n" +

" \"index\":false\n" +

" }\n" +

" \t}\n" +

"}", XContentType.JSON);

// Mapping, option 2: a Map


// Map message = new HashMap<>();

// message.put("type", "text");

// Map properties = new HashMap<>();

// properties.put("message", message);

// Map mapping = new HashMap<>();

// mapping.put("properties", properties);

// createIndexRequest.mapping(mapping);


// Mapping, option 3: an XContentBuilder


// XContentBuilder builder = XContentFactory.jsonBuilder();

// builder.startObject();

// {

// builder.startObject("properties");

// {

// builder.startObject("message");

// {

// builder.field("type", "text");

// }

// builder.endObject();

// }

// builder.endObject();

// }

// builder.endObject();

// createIndexRequest.mapping(builder);


// Alias

createIndexRequest.alias(new Alias("itheima_index_new"));

// Optional parameters

// Timeout for all nodes to acknowledge the index creation

createIndexRequest.setTimeout(TimeValue.timeValueMinutes(2));

// Timeout for connecting to the master node

createIndexRequest.setMasterTimeout(TimeValue.timeValueMinutes(1));

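// Number of active shard copies to wait for before the create-index call returns.
// ActiveShardCount.from(2) would need the primary plus one replica, which this 0-replica index never has;
// the original code set it and then immediately overrode it with DEFAULT, so only DEFAULT is kept.

// createIndexRequest.waitForActiveShards(ActiveShardCount.from(2));

createIndexRequest.waitForActiveShards(ActiveShardCount.DEFAULT);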

// Client for index operations

IndicesClient indices = client.indices();

// Execute the create-index request

CreateIndexResponse createIndexResponse = indices.create(createIndexRequest, RequestOptions.DEFAULT);

// Whether all nodes acknowledged the request

boolean acknowledged = createIndexResponse.isAcknowledged();

// Whether the required number of shard copies was started for each shard before the timeout

boolean shardsAcknowledged = createIndexResponse.isShardsAcknowledged();

System.out.println("!!!!!!!!!!!!!!!!!!!!!!!!!!!" + acknowledged);

System.out.println(shardsAcknowledged);

}

// Create an index asynchronously

@Test

public void testCreateIndexAsync() throws IOException {

// Build the create-index request

CreateIndexRequest createIndexRequest = new CreateIndexRequest("itheima_book2");

// Index settings

createIndexRequest.settings(Settings.builder().put("number_of_shards", "1").put("number_of_replicas", "0"));

// Mapping as a JSON string

createIndexRequest.mapping(" {\n" +

" \t\"properties\": {\n" +

" \"name\":{\n" +

" \"type\":\"keyword\"\n" +

" },\n" +

" \"description\": {\n" +

" \"type\": \"text\"\n" +

" },\n" +

" \"price\":{\n" +

" \"type\":\"long\"\n" +

" },\n" +

" \"pic\":{\n" +

" \"type\":\"text\",\n" +

" \"index\":false\n" +

" }\n" +

" \t}\n" +

"}", XContentType.JSON);

// Callback listener

ActionListener<CreateIndexResponse> listener =

new ActionListener<CreateIndexResponse>() {

@Override

public void onResponse(CreateIndexResponse createIndexResponse) {

System.out.println("Index created successfully");

System.out.println(createIndexResponse.toString());

}

@Override

public void onFailure(Exception e) {

System.out.println("Failed to create index");

e.printStackTrace();

}

};

// Client for index operations

IndicesClient indices = client.indices();

// Execute the create-index request asynchronously; the listener receives the result

indices.createAsync(createIndexRequest, RequestOptions.DEFAULT, listener);

try {

Thread.sleep(5000);

} catch (InterruptedException e) {

e.printStackTrace();

}


}


// Delete an index

@Test

public void testDeleteIndex() throws IOException {

// Build the delete-index request

DeleteIndexRequest deleteIndexRequest = new DeleteIndexRequest("itheima_book2");

// Client for index operations

IndicesClient indices = client.indices();

// Execute the delete request

AcknowledgedResponse delete = indices.delete(deleteIndexRequest, RequestOptions.DEFAULT);

// Whether the deletion was acknowledged

boolean acknowledged = delete.isAcknowledged();

System.out.println(acknowledged);

}

// Delete an index asynchronously

@Test

public void testDeleteIndexAsync() throws IOException {

// Build the delete-index request

DeleteIndexRequest deleteIndexRequest = new DeleteIndexRequest("itheima_book2");

// Client for index operations

IndicesClient indices = client.indices();

// Callback listener

ActionListener<AcknowledgedResponse> listener =

new ActionListener<AcknowledgedResponse>() {

@Override

public void onResponse(AcknowledgedResponse deleteIndexResponse) {

System.out.println("Index deleted successfully");

System.out.println(deleteIndexResponse.toString());

}

@Override

public void onFailure(Exception e) {

System.out.println("Failed to delete index");

e.printStackTrace();

}

};

// Execute the delete request asynchronously

indices.deleteAsync(deleteIndexRequest, RequestOptions.DEFAULT, listener);

try {

Thread.sleep(5000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

// Indices Exists API

@Test

public void testExistIndex() throws IOException {

GetIndexRequest request = new GetIndexRequest("itheima_book");

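request.local(false); // false: get the information from the master node instead of only the local node's cluster state

request.humanReadable(true); // return results in a human-readable format

request.includeDefaults(false); // whether to include all default settings of each index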

boolean exists = client.indices().exists(request, RequestOptions.DEFAULT);

System.out.println(exists);

}


// Indices Open API

@Test

public void testOpenIndex() throws IOException {

OpenIndexRequest request = new OpenIndexRequest("itheima_book");

OpenIndexResponse openIndexResponse = client.indices().open(request, RequestOptions.DEFAULT);

boolean acknowledged = openIndexResponse.isAcknowledged();

System.out.println("!!!!!!!!!"+acknowledged);

}

// Indices Close API

@Test

public void testCloseIndex() throws IOException {

CloseIndexRequest request = new CloseIndexRequest("itheima_book");

AcknowledgedResponse closeIndexResponse = client.indices().close(request, RequestOptions.DEFAULT);

boolean acknowledged = closeIndexResponse.isAcknowledged();

System.out.println("!!!!!!!!!"+acknowledged);

}

}

14. Getting Started with Search

14.1 Search Syntax Basics

14.1.1 query string search

Search all documents without any condition:

GET /book/_search

{

"took" : 969,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 3,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

{

"_index" : "book",

"_type" : "_doc",

"_id" : "1",

"_score" : 1.0,

"_source" : {

"name" : "Bootstrap开发",

"description" : "Bootstrap是由Twitter推出的一个前台页面开发css框架,是一个非常流行的开发框架,此框架集成了多种页面效果。此开发框架包含了大量的CSS、JS程序代码,可以帮助开发者(尤其是不擅长css页面开发的程序人员)轻松的实现一个css,不受浏览器限制的精美界面css效果。",

"studymodel" : "201002",

"price" : 38.6,

"timestamp" : "2019-08-25 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"bootstrap",

"dev"

]

}

},

{

"_index" : "book",

"_type" : "_doc",

"_id" : "2",

"_score" : 1.0,

"_source" : {

"name" : "java编程思想",

"description" : "java语言是世界第一编程语言,在软件开发领域使用人数最多。",

"studymodel" : "201001",

"price" : 68.6,

"timestamp" : "2019-08-25 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"java",

"dev"

]

}

},

{

"_index" : "book",

"_type" : "_doc",

"_id" : "3",

"_score" : 1.0,

"_source" : {

"name" : "spring开发基础",

"description" : "spring 在java领域非常流行,java程序员都在用。",

"studymodel" : "201001",

"price" : 88.6,

"timestamp" : "2019-08-24 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"spring",

"java"

]

}

}

]

}

}

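Explanation:

- took: how many milliseconds the search took

- timed_out: whether the search timed out (here it did not)

- _shards: how many shards were searched, and how many succeeded, were skipped, or failed

- hits.total: the number of matching documents, 3 here

- hits.max_score: the highest _score. A document's score is its relevance to the search: the better it matches, the higher the score

- hits.hits: the full details of the documents that matched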

14.1.2 Passing Parameters

Parameters are passed like ordinary HTTP query parameters:

GET /book/_search?q=name:java&sort=price:desc

SQL analogy: select * from book where name like '%java%' order by price desc

{

"took" : 2,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : null,

"hits" : [

{

"_index" : "book",

"_type" : "_doc",

"_id" : "2",

"_score" : null,

"_source" : {

"name" : "java编程思想",

"description" : "java语言是世界第一编程语言,在软件开发领域使用人数最多。",

"studymodel" : "201001",

"price" : 68.6,

"timestamp" : "2019-08-25 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"java",

"dev"

]

},

"sort" : [

68.6

]

}

]

}

}

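14.1.3 timeout

GET /book/_search?timeout=10ms

With a timeout, each shard returns the hits it has collected when the time runs out, and the response reports "timed_out": true. To set a global default, add search.default_search_timeout: 100ms to the configuration file. By default there is no timeout.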

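14.2 Multi-Index Search

14.2.1 Multi-Index Search Patterns

How to search the data in several indexes (and, in older versions, several types) at once:

/_search: searches all data in all indexes

/index1/_search: searches all data in one index

/index1,index2/_search: searches two indexes at the same time

/index*/_search: matches several indexes with a wildcard

Use case: in production, log indexes can be split by date: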

log_to_es_20190910

log_to_es_20190911

log_to_es_20180910

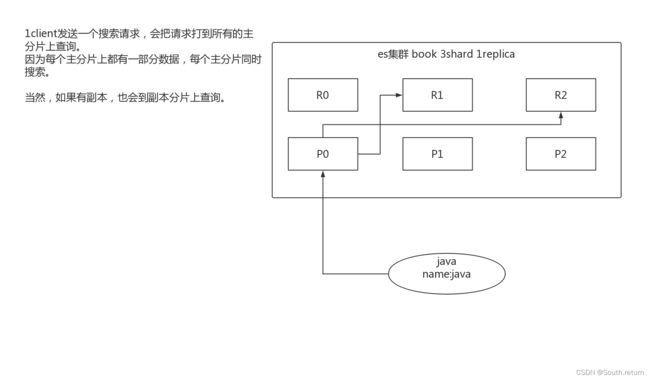
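The sketch below shows how an index expression such as "index1,index2" or "index*" picks indexes from a name list, using the log indexes above. It is a simplified stand-in: it handles only comma lists, "*" wildcards and _all, and it ignores exclusions and the other options that ES's own index-name resolution supports.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

public class IndexPatternSketch {
    // "*" matches any run of characters; the whole name must match
    static boolean wildcardMatches(String pattern, String name) {
        StringBuilder regex = new StringBuilder();
        for (char c : pattern.toCharArray()) {
            regex.append(c == '*' ? ".*" : Pattern.quote(String.valueOf(c)));
        }
        return name.matches(regex.toString());
    }

    // Resolve "a,b", "prefix*" or "_all" against the existing index names, without duplicates
    static List<String> resolve(String expression, List<String> indices) {
        if (expression.isEmpty() || expression.equals("_all")) {
            return new ArrayList<>(indices);
        }
        List<String> result = new ArrayList<>();
        for (String part : expression.split(",")) {
            for (String index : indices) {
                if (wildcardMatches(part.trim(), index) && !result.contains(index)) {
                    result.add(index);
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> indices = List.of("log_to_es_20190910", "log_to_es_20190911", "log_to_es_20180910");
        System.out.println(resolve("log_to_es_2019*", indices)); // prints [log_to_es_20190910, log_to_es_20190911]
        System.out.println(resolve("_all", indices));
    }
}
```

Date-suffixed names make patterns like log_to_es_201909* a cheap way to restrict a search to one month.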

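14.2.2 How a Simple Search Works

Diagram: a first look at how search works.

14.3 Paginated Search

14.3.1 Pagination Syntax

SQL: select * from book limit 1,5 (skip 1 row, return 5 rows)

ES uses size (how many hits to return, 10 by default) and from (the offset of the first hit, 0 by default); the SQL above corresponds to from=1&size=5: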

GET /book/_search?size=10

GET /book/_search?size=10&from=0

GET /book/_search?size=10&from=20

GET /book/_search?from=0&size=3

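14.3.2 deep paging

What is deep paging

Results are sorted by relevance score in descending order. To serve the page starting at from, every shard must send its top from + size hits to the coordinating node, which merges and sorts all of them and then discards everything before from. The deeper the page, the more data the coordinating node has to gather and process.

Performance problems of deep paging

1 Network bandwidth: when paging deep, every shard sends a large amount of data to the coordinating node.

2 Memory: the data returned by the shards is held in the coordinating node's memory, which consumes a lot of memory.

3 CPU: the coordinating node has to sort all of the returned data, which is CPU-intensive.

Because of these problems, deep paging should be avoided as much as possible. ES also rejects requests where from + size exceeds index.max_result_window, which is 10,000 by default.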
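The cost described above can be made concrete with a small simulation. Each hypothetical shard holds a list of scores. The "coordinating node" collects every shard's top from + size, sorts the merged list and keeps one page. The transferred count grows with from even though the page size stays the same. This is only a model of the query phase, not ES code.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class DeepPagingSketch {
    static final class Result {
        final List<Double> page;
        final int transferred; // hits sent from all shards to the coordinating node

        Result(List<Double> page, int transferred) {
            this.page = page;
            this.transferred = transferred;
        }
    }

    static Result search(List<List<Double>> shards, int from, int size) {
        List<Double> merged = new ArrayList<>();
        for (List<Double> shardScores : shards) {
            List<Double> sorted = new ArrayList<>(shardScores);
            sorted.sort(Comparator.reverseOrder());
            // every shard must return its top (from + size): any of them could land on the requested page
            merged.addAll(sorted.subList(0, Math.min(from + size, sorted.size())));
        }
        int transferred = merged.size();
        merged.sort(Comparator.reverseOrder()); // global sort on the coordinating node
        int start = Math.min(from, merged.size());
        int end = Math.min(from + size, merged.size());
        return new Result(new ArrayList<>(merged.subList(start, end)), transferred);
    }

    public static void main(String[] args) {
        List<List<Double>> shards = new ArrayList<>();
        for (int s = 0; s < 3; s++) {
            List<Double> scores = new ArrayList<>();
            for (int i = 0; i < 100; i++) {
                scores.add((double) (3 * i + s));
            }
            shards.add(scores);
        }
        for (int from : new int[] {0, 20, 250}) {
            Result r = search(shards, from, 10);
            System.out.println("from=" + from + " transferred=" + r.transferred + " page=" + r.page);
        }
    }
}
```

With 3 shards and size 10, page 1 moves 30 hits, while from=250 moves every one of the 300 hits to return the same 10.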

14.4 Query String Basic Syntax

14.4.1 Query String Basic Syntax

GET /book/_search?q=name:java

GET /book/_search?q=+name:java

GET /book/_search?q=-name:java

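Two things to learn here: the q=field:search content syntax, and the meaning of + and -. + means the condition must match, and - means it must not match. When typing the URL by hand, note that a literal + is decoded as a space, so encode it as %2B.

14.4.2 How the _all Metadata Field Works

GET /book/_search?q=java

This searches every field: a document is returned if any of its fields contains the keyword. Does ES really run a separate search against every field of every document? No.

ES used an _all metadata field for this. When a document is indexed, ES concatenates the values of all its fields, analyzes them, and puts the resulting terms into _all. A search that specifies no field searches _all. Note that _all was deprecated in 6.0 and removed in 7.0. Since then, a query without a field searches the fields listed in index.query.default_field, which defaults to * (all eligible fields).

Example: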

{

name:jack

email:[email protected]

address:beijing

}

_all : jack,[email protected],beijing

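14.5 Query DSL Basics

14.5.1 DSL

As the query string gets more parameters and the conditions become more complex, it can no longer meet the requirements:

GET /book/_search?q=name:java&size=10&from=0&sort=price:desc

DSL: Domain Specific Language

ES's own search language. Search conditions are carried in the request body, which makes it much more powerful.

Query everything (equivalent to GET /book/_search):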

GET /book/_search

{

"query": { "match_all": {} }

}

Sort (equivalent to GET /book/_search?q=name:java&sort=price:desc):

GET /book/_search

{

"query" : {

"match" : {

"name" : "java"

}

},

"sort": [

{ "price": "desc" }

]

}

Pagination (the URL form is GET /book/_search?size=10&from=0):

GET /book/_search

{

"query": { "match_all": {} },

"from": 0,

"size": 1

}

Return only specific fields (equivalent to GET /book/_search?_source=name,studymodel):

GET /book/_search

{

"query": { "match_all": {} },

"_source": ["name", "studymodel"]

}

Combine these kinds of queries to build complex searches.

14.5.2 Query DSL Syntax

{

QUERY_NAME: {

ARGUMENT: VALUE,

ARGUMENT: VALUE,...

}

}

{

QUERY_NAME: {

FIELD_NAME: {

ARGUMENT: VALUE,

ARGUMENT: VALUE,...

}

}

}

GET /test_index/_search

{

"query": {

"match": {

"test_field": "test"

}

}

}

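14.5.3 Combining Multiple Search Conditions

Requirement: title must contain elasticsearch, content may or may not contain elasticsearch, and author_id must not be 111.

In SQL terms: WHERE with AND, OR, and !=.

Initial data: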

POST /website/_doc/1

{

"title": "my hadoop article",

"content": "hadoop is very bad",

"author_id": 111

}

POST /website/_doc/2

{

"title": "my elasticsearch article",

"content": "es is very bad",

"author_id": 112

}

POST /website/_doc/3

{

"title": "my elasticsearch article",

"content": "es is very goods",

"author_id": 111

}

Search:

GET /website/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"title": "elasticsearch"

}

}

],

"should": [

{

"match": {

"content": "elasticsearch"

}

}

],

"must_not": [

{

"match": {

"author_id": 111

}

}

]

}

}

}

Response:

{

"took" : 488,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 0.47000363,

"hits" : [

{

"_index" : "website",

"_type" : "_doc",

"_id" : "2",

"_score" : 0.47000363,

"_source" : {

"title" : "my elasticsearch article",

"content" : "es is very bad",

"author_id" : 112

}

}

]

}

}

A more complex search requirement:

select * from test_index where name='tom' and (hired = true or (personality = 'good' and rude != true))

GET /test_index/_search

{

"query": {

"bool": {

"must": { "match":{ "name": "tom" }},

"should": [

{ "match":{ "hired": true }},

{ "bool": {

"must":{ "match": { "personality": "good" }},

"must_not": { "match": { "rude": true }}

}}

],

"minimum_should_match": 1

}

}

}
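The sketch below evaluates bool clauses over in-memory documents. It models only matching, not scoring: every must clause has to match, no must_not clause may match, and at least minimum_should_match should clauses have to match. When minimum_should_match is not given, it defaults here to 1 if there are should clauses but no must clauses, and 0 otherwise, which is ES's documented default when no filter clauses are involved. textContains is a crude, hypothetical stand-in for a match query.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Objects;
import java.util.function.Predicate;

public class BoolQuerySketch {
    static boolean matches(Map<String, Object> doc,
                           List<Predicate<Map<String, Object>>> must,
                           List<Predicate<Map<String, Object>>> should,
                           List<Predicate<Map<String, Object>>> mustNot,
                           Integer minimumShouldMatch) {
        for (Predicate<Map<String, Object>> p : must) {
            if (!p.test(doc)) return false;
        }
        for (Predicate<Map<String, Object>> p : mustNot) {
            if (p.test(doc)) return false;
        }
        int required = minimumShouldMatch != null ? minimumShouldMatch
                : (must.isEmpty() && !should.isEmpty() ? 1 : 0);
        int matched = 0;
        for (Predicate<Map<String, Object>> p : should) {
            if (p.test(doc)) matched++;
        }
        return matched >= required;
    }

    // Crude match-query stand-in: case-insensitive whole-word test on whitespace-separated words
    static Predicate<Map<String, Object>> textContains(String field, String word) {
        return doc -> Arrays.asList(String.valueOf(doc.get(field)).toLowerCase(Locale.ROOT).split("\\s+"))
                .contains(word.toLowerCase(Locale.ROOT));
    }

    static Predicate<Map<String, Object>> equalsValue(String field, Object value) {
        return doc -> Objects.equals(doc.get(field), value);
    }

    public static void main(String[] args) {
        List<Map<String, Object>> docs = List.of(
                Map.of("id", 1, "title", "my hadoop article", "content", "hadoop is very bad", "author_id", 111),
                Map.of("id", 2, "title", "my elasticsearch article", "content", "es is very bad", "author_id", 112),
                Map.of("id", 3, "title", "my elasticsearch article", "content", "es is very goods", "author_id", 111));
        for (Map<String, Object> doc : docs) {
            boolean hit = matches(doc,
                    List.of(textContains("title", "elasticsearch")),
                    List.of(textContains("content", "elasticsearch")),
                    List.of(equalsValue("author_id", 111)),
                    null);
            if (hit) {
                System.out.println("hit: " + doc.get("id")); // prints hit: 2
            }
        }
    }
}
```

The result matches the response above: only document 2 survives. Its should clause does not match, but with a must clause present, should is optional and only affects the score.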

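14.6 Full-Text Search

14.6.1 Full-Text Search

Re-create the book index with IK analyzers. Delete the existing index first with DELETE /book; otherwise the PUT fails because the index already exists: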

PUT /book/

{

"settings": {

"number_of_shards": 1,

"number_of_replicas": 0

},

"mappings": {

"properties": {

"name":{

"type": "text",

"analyzer": "ik_max_word",

"search_analyzer": "ik_smart"

},

"description":{

"type": "text",

"analyzer": "ik_max_word",

"search_analyzer": "ik_smart"

},

"studymodel":{

"type": "keyword"

},

"price":{

"type": "double"

},

"timestamp": {

"type": "date",

"format": "yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis"

},

"pic":{

"type":"text",

"index":false

}

}

}

}

Insert data:

PUT /book/_doc/1

{

"name": "Bootstrap开发",

"description": "Bootstrap是由Twitter推出的一个前台页面开发css框架,是一个非常流行的开发框架,此框架集成了多种页面效果。此开发框架包含了大量的CSS、JS程序代码,可以帮助开发者(尤其是不擅长css页面开发的程序人员)轻松的实现一个css,不受浏览器限制的精美界面css效果。",

"studymodel": "201002",

"price":38.6,

"timestamp":"2019-08-25 19:11:35",

"pic":"group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags": [ "bootstrap", "dev"]

}

PUT /book/_doc/2

{

"name": "java编程思想",

"description": "java语言是世界第一编程语言,在软件开发领域使用人数最多。",

"studymodel": "201001",

"price":68.6,

"timestamp":"2019-08-25 19:11:35",

"pic":"group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags": [ "java", "dev"]

}

PUT /book/_doc/3

{

"name": "spring开发基础",

"description": "spring 在java领域非常流行,java程序员都在用。",

"studymodel": "201001",

"price":88.6,

"timestamp":"2019-08-24 19:11:35",

"pic":"group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags": [ "spring", "java"]

}

Search:

GET /book/_search

{

"query" : {

"match" : {

"description" : "java程序员"

}

}

}

14.6.2 A First Look at _score

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 2,

"relation" : "eq"

},

"max_score" : 2.137549,

"hits" : [

{

"_index" : "book",

"_type" : "_doc",

"_id" : "3",

"_score" : 2.137549,

"_source" : {

"name" : "spring开发基础",

"description" : "spring 在java领域非常流行,java程序员都在用。",

"studymodel" : "201001",

"price" : 88.6,

"timestamp" : "2019-08-24 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"spring",

"java"

]

}

},

{

"_index" : "book",

"_type" : "_doc",

"_id" : "2",

"_score" : 0.57961315,

"_source" : {

"name" : "java编程思想",

"description" : "java语言是世界第一编程语言,在软件开发领域使用人数最多。",

"studymodel" : "201001",

"price" : 68.6,

"timestamp" : "2019-08-25 19:11:35",

"pic" : "group1/M00/00/00/wKhlQFs6RCeAY0pHAAJx5ZjNDEM428.jpg",

"tags" : [

"java",

"dev"

]

}

}

]

}

}

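Analysis of the results

1. At index time, the inverted index of the description field contains, among others, these terms:

- java: documents 2, 3

- 程序员: document 3

2. At search time, "java程序员" is analyzed into java and 程序员, and the documents containing either term, 2 and 3, are found. Document 3 contains java twice and 程序员 once, so it scores higher and ranks first. Document 2 contains java only once and ranks second.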
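The sketch below reproduces this reasoning with a tiny inverted index (term to document to term frequency). Two assumptions apply. The token lists are hand-written approximations, not actual ik_max_word output. The score is simply the sum of query-term frequencies, a crude stand-in for Lucene's BM25, which also weighs term rarity and field length.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class InvertedIndexSketch {
    // term -> (docId -> term frequency)
    static Map<String, Map<Integer, Integer>> build(Map<Integer, List<String>> docs) {
        Map<String, Map<Integer, Integer>> index = new TreeMap<>();
        docs.forEach((id, tokens) -> {
            for (String t : tokens) {
                index.computeIfAbsent(t, k -> new TreeMap<>()).merge(id, 1, Integer::sum);
            }
        });
        return index;
    }

    // Rank documents by the total frequency of the query terms; ties broken by document id
    static List<Integer> search(Map<String, Map<Integer, Integer>> index, List<String> queryTerms) {
        Map<Integer, Integer> scores = new HashMap<>();
        for (String term : queryTerms) {
            index.getOrDefault(term, Map.of()).forEach((id, tf) -> scores.merge(id, tf, Integer::sum));
        }
        List<Integer> ids = new ArrayList<>(scores.keySet());
        ids.sort((a, b) -> {
            int byScore = Integer.compare(scores.get(b), scores.get(a));
            return byScore != 0 ? byScore : Integer.compare(a, b);
        });
        return ids;
    }

    public static void main(String[] args) {
        Map<Integer, List<String>> docs = new TreeMap<>();
        // Assumed tokens for the three book descriptions (illustration only)
        docs.put(1, List.of("bootstrap", "twitter", "css", "框架"));
        docs.put(2, List.of("java", "语言", "世界", "第一", "编程", "语言", "软件开发"));
        docs.put(3, List.of("spring", "java", "领域", "流行", "java", "程序员"));
        Map<String, Map<Integer, Integer>> index = build(docs);
        System.out.println(index.get("java"));                          // prints {2=1, 3=2}
        System.out.println(search(index, List.of("java", "程序员")));   // prints [3, 2]
    }
}
```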

14.7 DSL Syntax Exercises

14.7.1 match_all

GET /book/_search

{

"query": {

"match_all": {}

}

}

14.7.2 match

GET /book/_search

{

"query": {

"match": {

"description": "java程序员"

}

}

}

14.7.3 multi_match

GET /book/_search

{

"query": {

"multi_match": {

"query": "java程序员",

"fields": ["name", "description"]

}

}

}

14.7.4 range query

GET /book/_search

{

"query": {

"range": {

"price": {

"gte": 80,

"lte": 90

}

}

}

}

14.7.5 term query

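term query does not analyze the search value, so it is meant for keyword fields, which are not analyzed at index or search time either. Run against the analyzed text field description, as below, it looks for the exact term java程序员, which is not in the inverted index, so nothing is returned.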

GET /book/_search

{

"query": {

"term": {

"description": "java程序员"

}

}

}

14.7.6 terms query

GET /book/_search

{

"query": { "terms": { "tags": [ "search", "full_text", "nosql" ] }}

}

14.7.7 exists query: find documents that have a value for a field

GET /_search

{

"query": {

"exists": {

"field": "join_date"

}

}

}

14.7.8 Fuzzy query

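Returns documents that contain terms similar to the search term, as measured by the Levenshtein edit distance. An edit is one of the following:

- Changing a character (box → fox)

- Removing a character (apple → aple)

- Inserting a character (sic → sick)

- Transposing two adjacent characters (act → cat)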

GET /book/_search

{

"query": {

"fuzzy": {

"description": {

"value": "jave"

}

}

}

}
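The four kinds of edits correspond to the optimal string alignment distance, a restricted form of Damerau-Levenshtein in which an adjacent transposition counts as one edit. The sketch below computes that distance and applies the edit budget of fuzziness AUTO: 0 edits for terms of 1-2 characters, 1 edit for 3-5, and 2 edits for longer terms. It checks a single pair of terms. Lucene actually matches with automata over the term dictionary, which this sketch does not model.

```java
public class FuzzySketch {
    // Optimal string alignment distance: insert, delete, substitute, or swap two adjacent chars = 1 edit each
    static int distance(String a, String b) {
        int[][] d = new int[a.length() + 1][b.length() + 1];
        for (int i = 0; i <= a.length(); i++) d[i][0] = i;
        for (int j = 0; j <= b.length(); j++) d[0][j] = j;
        for (int i = 1; i <= a.length(); i++) {
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                d[i][j] = Math.min(Math.min(d[i - 1][j] + 1, d[i][j - 1] + 1), d[i - 1][j - 1] + cost);
                if (i > 1 && j > 1 && a.charAt(i - 1) == b.charAt(j - 2) && a.charAt(i - 2) == b.charAt(j - 1)) {
                    d[i][j] = Math.min(d[i][j], d[i - 2][j - 2] + 1); // adjacent transposition
                }
            }
        }
        return d[a.length()][b.length()];
    }

    // Edits allowed by fuzziness AUTO with its default thresholds (3 and 6)
    static int autoMaxEdits(String term) {
        int len = term.length();
        if (len < 3) return 0;
        if (len < 6) return 1;
        return 2;
    }

    static boolean fuzzyMatches(String queryTerm, String indexedTerm) {
        return distance(queryTerm, indexedTerm) <= autoMaxEdits(queryTerm);
    }

    public static void main(String[] args) {
        for (String term : new String[] {"java", "javascript", "spring"}) {
            System.out.println("jave ~ " + term + ": distance=" + distance("jave", term)
                    + ", match=" + fuzzyMatches("jave", term));
        }
    }
}
```

This is why the query for "jave" above can find documents containing "java": the two differ by one substitution, and a 4-character term is allowed one edit.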

14.7.9 IDs

GET /book/_search

{

"query": {

"ids" : {

"values" : ["1", "4", "100"]

}

}

}

14.7.10 prefix query

GET /book/_search

{

"query": {

"prefix": {

"description": {

"value": "spring"

}

}

}

}

14.7.11 regexp query

GET /book/_search

{

"query": {

"regexp": {

"description": {

"value": "j.*a",

"flags" : "ALL",

"max_determinized_states": 10000,

"rewrite": "constant_score"

}

}

}

}

14.8 Filter

14.8.1 filter vs. query: An Example

Requirement: find documents whose description matches "java程序员" and whose price is between 80 and 90 (inclusive).

GET /book/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"description": "java程序员"

}

},

{

"range": {

"price": {

"gte": 80,

"lte": 90

}

}

}

]

}

}

}

Using filter:

GET /book/_search

{

"query": {

"bool": {

"must": [

{

"match": {

"description": "java程序员"

}

}

],

"filter": {

"range": {

"price": {

"gte": 80,

"lte": 90

}

}

}

}

}

}

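14.8.2 filter vs. query: Comparison

filter only selects the documents that meet the conditions. It computes no relevance score and has no effect on relevance.

query computes the relevance of each document to the search conditions and sorts by relevance.

When to use which:

- Generally, if you are searching and want the documents that best match the conditions returned first, use query

- If you only need to select a subset of the data by some conditions and do not care about ordering, use filter

14.8.3 filter vs. query: Performance

filter does not compute relevance scores or sort by them, and frequently used filters are cached automatically.

query, by contrast, computes relevance scores and sorts by them, and its results are not cached.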

14.9 Locating syntax errors

Validate a query that contains an error (mach instead of match):

GET /book/_validate/query?explain

{

"query": {

"mach": {

"description": "java程序员"

}

}

}

Response:

{

"valid" : false,

"error" : "org.elasticsearch.common.ParsingException: no [query] registered for [mach]"

}

The corrected query:

GET /book/_validate/query?explain

{

"query": {

"match": {

"description": "java程序员"

}

}

}

Response:

{

"_shards" : {

"total" : 1,

"successful" : 1,

"failed" : 0

},

"valid" : true,

"explanations" : [

{

"index" : "book",

"valid" : true,

"explanation" : "description:java description:程序员"

}

]

}

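This is mainly useful for very large, complex searches, for example one that runs to hundreds of lines. Check it with the validate API first to confirm the search is valid.

Once it is valid, explain works somewhat like an execution plan in MySQL: it shows what the search actually targets, such as which terms are looked up in which fields.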

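14.10 Custom sorting

14.10.1 Default sort order

By default, results are sorted by _score in descending order.

However, in some cases _score carries no useful information. For example, a bool query that has only filter clauses gives every hit a _score of 0.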

GET book/_search

{

"query": {

"bool": {

"filter": [

{

"match": {

"description": "java程序员"

}

}

]

}

}

}

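Likewise, with constant_score every hit gets the same score, so _score is no use for ordering.

14.10.2 Custom sort order

This is equivalent to ORDER BY in SQL, or to ?sort=price:desc in a query-string search. The example below sorts by price in ascending order: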

GET /book/_search

{

"query": {

"constant_score": {

"filter" : {

"term" : {

"studymodel" : "201001"

}

}

}

},

"sort": [

{

"price": {

"order": "asc"

}

}

]

}

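14.11 Sorting on text fields

Sorting on a text field usually gives wrong results. The value is analyzed into several terms, so the sort works on individual terms instead of the whole string. (By default, ES rejects sorting on a text field altogether, because fielddata is disabled on text fields.)

Common solutions:

Option 1: set "fielddata": true on the text field. This makes sorting possible, but the sort is still based on individual terms, and the data is loaded into heap memory.

Option 2: index the text field twice, once analyzed for searching and once unanalyzed as a keyword sub-field for sorting: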

PUT /website

{

"mappings": {

"properties": {

"title": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword"

}

}

},

"content": {

"type": "text"

},

"post_date": {

"type": "date"

},

"author_id": {

"type": "long"

}

}

}

}

Insert data:

PUT /website/_doc/1

{

"title": "first article",

"content": "this is my first article",

"post_date": "2019-01-01",

"author_id": 110

}

PUT /website/_doc/2

{

"title": "second article",

"content": "this is my second article",

"post_date": "2019-01-01",

"author_id": 110

}

PUT /website/_doc/3

{

"title": "third article",

"content": "this is my third article",

"post_date": "2019-01-02",

"author_id": 110

}

Search, sorting by the keyword sub-field title.keyword:

GET /website/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"title.keyword": {

"order": "desc"

}

}

]

}
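The three documents above show the problem directly. When a field has several values per document, an ascending sort uses each document's smallest value by default (sort mode min). After analysis, every title contains the token "article", which sorts before "first", "second" and "third". All three documents therefore get the same sort key, and the sort cannot tell them apart. The keyword sub-field keeps the whole string and orders them properly. The sketch below assumes a simple lowercase-and-split-on-whitespace analyzer as a hypothetical stand-in for the standard analyzer.

```java
import java.util.*;
import java.util.function.Function;

final class TextSortSketch {
    // Hypothetical stand-in for the standard analyzer: lowercase, split on whitespace.
    static List<String> analyze(String text) {
        return Arrays.asList(text.toLowerCase().trim().split("\\s+"));
    }

    // Ascending sort on an analyzed (multi-valued) field uses the smallest term: mode "min".
    static String analyzedKey(String title) {
        return Collections.min(analyze(title));
    }

    // title.keyword: the whole, unanalyzed string is the sort key.
    static String keywordKey(String title) {
        return title;
    }

    // List.sort is stable, so documents with equal keys keep their input order.
    static List<String> sortAscending(List<String> titles, Function<String, String> key) {
        List<String> sorted = new ArrayList<>(titles);
        sorted.sort(Comparator.comparing(key));
        return sorted;
    }

    public static void main(String[] args) {
        List<String> titles = Arrays.asList("third article", "first article", "second article");
        // every analyzed key is "article", so the input order comes back unchanged
        System.out.println(sortAscending(titles, TextSortSketch::analyzedKey));
        // [first article, second article, third article]
        System.out.println(sortAscending(titles, TextSortSketch::keywordKey));
    }
}
```

In descending order (mode max) these particular titles happen to come out right, because their largest tokens are first, second and third. That is luck, not a fix.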

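14.12 Scroll: fetching results in batches

Scenario: export all 100 million documents of an index to a file or a database. Fetching them in one go would run out of memory, so use a scroll search to fetch them batch by batch.

A scroll search takes a snapshot of the data at the time of the first request and serves all later batches from that snapshot. Changes made in the meantime are not visible to it.

Each scroll request must also pass a scroll parameter: a time window that keeps the search context alive. It only needs to be long enough to process one batch before the next request arrives, not the whole export.

First request: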

GET /book/_search?scroll=1m

{

"query": {

"match_all": {}

},

"size": 3

}

Response (the hits are omitted here):

{

"_scroll_id" : "DXF1ZXJ5QW5kRmV0Y2gBAAAAAAAAMOkWTURBNDUtcjZTVUdKMFp5cXloVElOQQ==",

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 3,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

]

}

}

The response contains a _scroll_id. Every subsequent scroll request must send that _scroll_id back:

GET /_search/scroll

{

"scroll": "1m",

"scroll_id" : "DXF1ZXJ5QW5kRmV0Y2gBAAAAAAAAMOkWTURBNDUtcjZTVUdKMFp5cXloVElOQQ=="

}
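To make the snapshot behaviour concrete, here is an in-memory simulation. open() stands in for the first GET /book/_search?scroll=1m and copies the current data into a context registered under an id. next() stands in for GET /_search/scroll and returns the following batch from that copy. Documents indexed after open() never appear. This is a simplification, not how Elasticsearch stores search contexts: the class and its id format are hypothetical, the real first request also returns the first batch, and the keep-alive timeout is not modelled.

```java
import java.util.*;

// In-memory model of scroll semantics: a snapshot taken on the first request,
// served in batches under a scroll id until cleared.
final class ScrollSketch {
    private static final class Context {
        final List<String> snapshot;
        int cursor = 0;
        Context(List<String> snapshot) { this.snapshot = snapshot; }
    }

    private final List<String> liveIndex = new ArrayList<>();
    private final Map<String, Context> contexts = new HashMap<>();
    private int nextId = 0;

    void index(String doc) { liveIndex.add(doc); }

    // First request: freeze a copy of the current data and hand back its id.
    String open() {
        String scrollId = "scroll-" + nextId++;
        contexts.put(scrollId, new Context(new ArrayList<>(liveIndex)));
        return scrollId;
    }

    // Follow-up request: next batch from the snapshot; an empty list means done.
    List<String> next(String scrollId, int size) {
        Context ctx = contexts.get(scrollId);
        if (ctx == null) throw new IllegalArgumentException("unknown or cleared scroll id: " + scrollId);
        int end = Math.min(ctx.cursor + size, ctx.snapshot.size());
        List<String> batch = new ArrayList<>(ctx.snapshot.subList(ctx.cursor, end));
        ctx.cursor = end;
        return batch;
    }

    // Like DELETE /_search/scroll: free the context once the export is finished.
    void clear(String scrollId) { contexts.remove(scrollId); }

    public static void main(String[] args) {
        ScrollSketch es = new ScrollSketch();
        for (int i = 1; i <= 5; i++) es.index("doc" + i);
        String id = es.open();
        es.index("doc6");              // written after the snapshot: never returned
        List<String> batch;
        while (!(batch = es.next(id, 3)).isEmpty()) {
            System.out.println(batch); // [doc1, doc2, doc3] then [doc4, doc5]
        }
        es.clear(id);
    }
}
```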

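Difference from pagination:

Pagination (from/size) is for showing pages of results to users, and it does not scale to deep paging.

Scroll is for internal, system-side work such as bulk exports, data migration, or reindexing with zero downtime after a mapping change.

15. Implementing search with the Java API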

package com.itheima.es;

import org.elasticsearch.action.search.SearchRequest;

import org.elasticsearch.action.search.SearchResponse;

import org.elasticsearch.client.RequestOptions;

import org.elasticsearch.client.RestHighLevelClient;

import org.elasticsearch.index.query.*;

import org.elasticsearch.search.SearchHit;

import org.elasticsearch.search.SearchHits;

import org.elasticsearch.search.builder.SearchSourceBuilder;

import org.elasticsearch.search.sort.SortOrder;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import java.io.IOException;

import java.util.Map;

/**

* created by itheima.itcast

*/

@SpringBootTest

@RunWith(SpringRunner.class)

public class TestSearch {

@Autowired

RestHighLevelClient client;

// search all documents

@Test

public void testSearchAll() throws IOException {

// GET book/_search

// {

// "query": {

// "match_all": {}

// }

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

// return only the "name" field of _source; description and price below will therefore print null

searchSourceBuilder.fetchSource(new String[]{"name"}, new String[]{});

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// paginated search

@Test

public void testSearchPage() throws IOException {

// GET book/_search

// {

// "query": {

// "match_all": {}

// },

// "from": 0,

// "size": 2

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

// page number (1-based)

int page=1;

// hits per page

int size=2;

// offset of the first hit on this page

int from=(page-1)*size;

searchSourceBuilder.from(from);

searchSourceBuilder.size(size);

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// ids search

@Test

public void testSearchIds() throws IOException {

// GET /book/_search

// {

// "query": {

// "ids" : {

// "values" : ["1", "4", "100"]

// }

// }

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.idsQuery().addIds("1","4","100"));

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// match search

@Test

public void testSearchMatch() throws IOException {

//

// GET /book/_search

// {

// "query": {

// "match": {

// "description": "java程序员"

// }

// }

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchQuery("description", "java程序员"));

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// term search: "java程序员" is not analyzed, so on the text field description this usually matches nothing

@Test

public void testSearchTerm() throws IOException {

//

// GET /book/_search

// {

// "query": {

// "term": {

// "description": "java程序员"

// }

// }

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.termQuery("description", "java程序员"));

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// multi_match search

@Test

public void testSearchMultiMatch() throws IOException {

// GET /book/_search

// {

// "query": {

// "multi_match": {

// "query": "java程序员",

// "fields": ["name", "description"]

// }

// }

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.multiMatchQuery("java程序员","name","description"));

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// bool search (must + should)

@Test

public void testSearchBool() throws IOException {

// GET /book/_search

// {

// "query": {

// "bool": {

// "must": [

// {

// "multi_match": {

// "query": "java程序员",

// "fields": ["name","description"]

// }

// }

// ],

// "should": [

// {

// "match": {

// "studymodel": "201001"

// }

// }

// ]

// }

// }

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

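// build the multi_match query on name and description

MultiMatchQueryBuilder multiMatchQueryBuilder = QueryBuilders.multiMatchQuery("java程序员", "name", "description");

// build the match query on studymodel (a should clause next to must: it only raises the score)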

MatchQueryBuilder matchQueryBuilder = QueryBuilders.matchQuery("studymodel", "201001");

BoolQueryBuilder boolQueryBuilder=QueryBuilders.boolQuery();

boolQueryBuilder.must(multiMatchQueryBuilder);

boolQueryBuilder.should(matchQueryBuilder);

searchSourceBuilder.query(boolQueryBuilder);

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// filter search (bool with must, should and a price range filter)

@Test

public void testSearchFilter() throws IOException {

// GET /book/_search

// {

// "query": {

// "bool": {

// "must": [

// {

// "multi_match": {

// "query": "java程序员",

// "fields": ["name","description"]

// }

// }

// ],

// "should": [

// {

// "match": {

// "studymodel": "201001"

// }

// }

// ],

// "filter": {

// "range": {

// "price": {

// "gte": 50,

// "lte": 90

// }

// }

//

// }

// }

// }

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

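// build the multi_match query on name and description

MultiMatchQueryBuilder multiMatchQueryBuilder = QueryBuilders.multiMatchQuery("java程序员", "name", "description");

// build the match query on studymodel (a should clause next to must: it only raises the score)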

MatchQueryBuilder matchQueryBuilder = QueryBuilders.matchQuery("studymodel", "201001");

BoolQueryBuilder boolQueryBuilder=QueryBuilders.boolQuery();

boolQueryBuilder.must(multiMatchQueryBuilder);

boolQueryBuilder.should(matchQueryBuilder);

boolQueryBuilder.filter(QueryBuilders.rangeQuery("price").gte(50).lte(90));

searchSourceBuilder.query(boolQueryBuilder);

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

// sort search

@Test

public void testSearchSort() throws IOException {

// GET /book/_search

// {

// "query": {

// "bool": {

// "must": [

// {

// "multi_match": {

// "query": "java程序员",

// "fields": ["name","description"]

// }

// }

// ],

// "should": [

// {

// "match": {

// "studymodel": "201001"

// }

// }

// ],

// "filter": {

// "range": {

// "price": {

// "gte": 50,

// "lte": 90

// }

// }

//

// }

// }

// },

// "sort": [

// {

// "price": {

// "order": "asc"

// }

// }

// ]

// }

// 1. build the search request

SearchRequest searchRequest = new SearchRequest("book");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

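// build the multi_match query on name and description

MultiMatchQueryBuilder multiMatchQueryBuilder = QueryBuilders.multiMatchQuery("java程序员", "name", "description");

// build the match query on studymodel (a should clause next to must: it only raises the score)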

MatchQueryBuilder matchQueryBuilder = QueryBuilders.matchQuery("studymodel", "201001");

BoolQueryBuilder boolQueryBuilder=QueryBuilders.boolQuery();

boolQueryBuilder.must(multiMatchQueryBuilder);

boolQueryBuilder.should(matchQueryBuilder);

boolQueryBuilder.filter(QueryBuilders.rangeQuery("price").gte(50).lte(90));

searchSourceBuilder.query(boolQueryBuilder);

// sort by price, ascending

searchSourceBuilder.sort("price", SortOrder.ASC);

searchRequest.source(searchSourceBuilder);

// 2. execute the search

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// 3. read the results

SearchHits hits = searchResponse.getHits();

// the hits returned in this response

SearchHit[] searchHits = hits.getHits();

System.out.println("--------------------------");

for (SearchHit hit : searchHits) {

String id = hit.getId();

float score = hit.getScore();

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

String description = (String) sourceAsMap.get("description");

Double price = (Double) sourceAsMap.get("price");

System.out.println("id:" + id);

System.out.println("name:" + name);

System.out.println("description:" + description);

System.out.println("price:" + price);

System.out.println("==========================");

}

}

}
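testSearchPage computes from = (page - 1) * size. That is fine for the first pages but gets expensive for deep ones. To serve a single page, every shard returns its top from + size hits, and the coordinating node merges shards × (from + size) of them only to keep size. By default, Elasticsearch also rejects requests where from + size exceeds 10,000 (the index.max_result_window setting). The small calculation sketch below takes the shard count as a hypothetical input.

```java
// Cost of from/size pagination: each shard returns its top (from + size) hits and the
// coordinating node merges all of them to keep just one page.
final class DeepPagingSketch {
    static final int DEFAULT_MAX_RESULT_WINDOW = 10_000; // default index.max_result_window

    // Same formula as testSearchPage: offset of the first hit on a 1-based page.
    static int from(int page, int size) {
        return (page - 1) * size;
    }

    // Hits the coordinating node has to collect and sort for one page.
    static long hitsToMerge(int shards, int page, int size) {
        return (long) shards * (from(page, size) + size);
    }

    // Requests with from + size above the window are rejected by default.
    static boolean withinDefaultWindow(int page, int size) {
        return from(page, size) + size <= DEFAULT_MAX_RESULT_WINDOW;
    }

    public static void main(String[] args) {
        System.out.println(hitsToMerge(5, 1, 10));         // 50 hits merged for page 1
        System.out.println(hitsToMerge(5, 1000, 10));      // 50000 hits merged for page 1000
        System.out.println(withinDefaultWindow(1000, 10)); // true: from + size = 10000
        System.out.println(withinDefaultWindow(1001, 10)); // false: from + size = 10010
    }
}
```

Because the cost grows with the page number, from/size pagination is kept for user-facing pages. Bulk reads go through scroll (section 14.12).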