PyTorch Classification Network Implementation

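Below is a set of training code for image classification networks written in PyTorch. Besides training, it plots the confusion matrix, the learning-rate curve, the loss curves and the accuracy curves. Run train_for_3.py to start training.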
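The learning-rate curve comes from `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, epochs, 0.000005)` in train_for_3.py, stepped once per epoch from Adam's initial learning rate of 1e-4. Without restarts, this scheduler follows the closed form lr(t) = eta_min + (base_lr - eta_min) * (1 + cos(pi * t / T_max)) / 2. The sketch below evaluates that formula with the script's values (base_lr=1e-4, eta_min=5e-6, T_max=epochs=10). `cosine_lr` is a hypothetical helper for illustration, not a PyTorch function. The curve PyTorch records should match it only approximately, because PyTorch uses a recursive update with floating-point rounding.

```python
import math


def cosine_lr(epoch, base_lr=1e-4, eta_min=5e-6, t_max=10):
    """Closed-form CosineAnnealingLR value at a given epoch (no restarts)."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max))


# Learning rate in effect during each of the 10 training epochs (epoch index 0..9)
lrs = [cosine_lr(e) for e in range(10)]
for epoch, value in enumerate(lrs):
    print("epoch {}: lr = {:.2e}".format(epoch + 1, value))
```

The rate starts at 1e-4, falls to 5.25e-5 at epoch index 5, and would reach eta_min only after the last `scheduler.step()`.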

The code folder is organized as shown below:

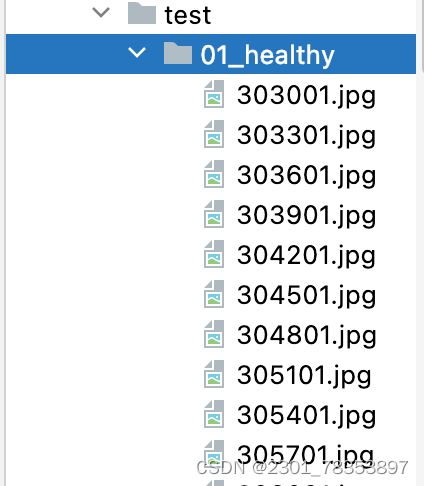

The data inside the folders is organized as follows:

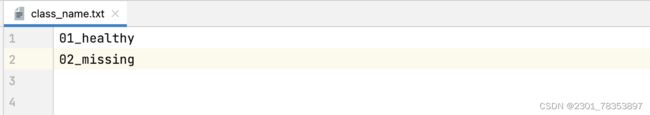

The contents of class_name.txt are:

The code for each file is given below.

dataset.py

```python
import glob
import os

import torch
import torchvision
from PIL import Image
from torch.utils.data import Dataset


class mydataset(Dataset):
    def __init__(self, root, class_txt):
        super(mydataset, self).__init__()
        self.root = root
        # One class name per line, e.g. ["0-other garbage-fast food box", "1-other garbage-soiled plastic", ...]
        with open(class_txt, "r", encoding="utf-8") as f:
            self.class_names = [line.strip() for line in f if line.strip()]
        # Every image is stored as <root>/<class folder>/<image>.jpg, e.g. ./train/0-other garbage-fast food box/1.jpg
        self.pictures = sorted(glob.glob(os.path.join(self.root, "*", "*.jpg")))
        self.transform = torchvision.transforms.Compose([
            torchvision.transforms.Resize((224, 224)),  # resize the PIL image to 224x224
            torchvision.transforms.ToTensor(),  # PIL -> float tensor in [0, 1] (divides by 255)
            torchvision.transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),  # ImageNet mean/std
        ])

    def __getitem__(self, index):
        image_path = self.pictures[index]
        # Force 3 channels: grayscale or RGBA images would break the 3-channel Normalize
        image = Image.open(image_path).convert("RGB")
        image = self.transform(image)  # 3, H, W (the DataLoader adds the batch dimension)
        # The parent folder name is the class name: ./train/0-xxx/1.jpg -> "0-xxx" (works on Windows too)
        folder_name = os.path.basename(os.path.dirname(image_path))
        label = None
        for i, name in enumerate(self.class_names):
            # Exact match: a substring test would confuse e.g. "1-xxx" with "11-xxx"
            if folder_name == name:
                label = i
                break
        if label is None:
            raise ValueError("folder '{}' is not listed in the class file".format(folder_name))
        return image, torch.tensor(label, dtype=torch.long)

    def __len__(self):
        return len(self.pictures)
```
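The label of each image should come from its class folder. Here is a minimal sketch of a strict way to do that. It assumes, as the comments in dataset.py suggest, that every folder under ./train is named exactly like one line of class_name.txt. The idea is to compare the parent folder name for equality instead of searching for the class name anywhere in the path. A plain substring test such as `name in image_path` returns the first class whose name merely appears in the path, for example "1-cat" inside ".../11-cat/1.jpg". The helpers `load_class_names` and `label_from_path` and the class names "1-cat" and "11-cat" are hypothetical. The example builds a throwaway folder tree so that it runs with the standard library only.

```python
import glob
import os
import tempfile


def load_class_names(class_txt):
    """Read one class name per line, dropping newlines and blank lines."""
    with open(class_txt, "r", encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def label_from_path(image_path, class_names):
    """Map <root>/<class folder>/<image>.jpg to the index of its folder name."""
    folder = os.path.basename(os.path.dirname(image_path))
    if folder not in class_names:
        raise ValueError("folder '{}' is not listed in the class file".format(folder))
    return class_names.index(folder)


# Build a tiny temporary tree with hypothetical class folders and check the mapping.
with tempfile.TemporaryDirectory() as root:
    names = ["1-cat", "11-cat"]  # hypothetical: "1-cat" is a substring of "11-cat"
    class_txt = os.path.join(root, "class_name.txt")
    with open(class_txt, "w", encoding="utf-8") as f:
        f.write("\n".join(names) + "\n")
    for name in names:
        os.makedirs(os.path.join(root, "train", name))
        open(os.path.join(root, "train", name, "1.jpg"), "wb").close()  # empty placeholder file
    class_names = load_class_names(class_txt)
    pictures = sorted(glob.glob(os.path.join(root, "train", "*", "*.jpg")))
    labels = [label_from_path(p, class_names) for p in pictures]
    print(labels)  # [0, 1]
```

With the substring test, the "11-cat" image would be labeled 0, because "1-cat" is checked first and appears inside its path.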

models.py

```python
import torch
import torchvision
from torch import nn
from torchvision import models
from torchvision.models.alexnet import alexnet
from torchvision.models.mobilenet import mobilenet_v3_small
from torchvision.models.resnet import resnet18
from torchvision.models.vgg import vgg16

# Res2Net.py and SCnet.py are the local files listed below
from Res2Net import res2net101_26w_4s
from SCnet import scnet101, scnet50

# Note: torchvision >= 0.13 deprecates `pretrained=` in favour of `weights=` and emits a warning.
# Every wrapper below replaces the ImageNet head (1000 classes) with a Linear layer for class_num classes.


class Efficientnet_v2_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Efficientnet_v2_model, self).__init__()
        self.efficientnet_v2 = models.efficientnet_v2_s(pretrained=pretrained)
        num_ftrs = self.efficientnet_v2.classifier[1].in_features
        self.efficientnet_v2.classifier[1] = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.efficientnet_v2(x)
        return x


class Resnet18_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Resnet18_model, self).__init__()
        self.resnet18 = resnet18(pretrained=pretrained)  # ImageNet head: 1000 classes
        num_ftrs = self.resnet18.fc.in_features  # 512
        self.resnet18.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.resnet18(x)
        return x


class Resnet34_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Resnet34_model, self).__init__()
        self.resnet34 = models.resnet34(pretrained=pretrained)
        num_ftrs = self.resnet34.fc.in_features
        self.resnet34.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        # The backbone already ends with the new fc layer; there are no extra layers to apply
        x = self.resnet34(x)
        return x


class Resnet50_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Resnet50_model, self).__init__()
        self.resnet50 = models.resnet50(pretrained=pretrained)
        num_ftrs = self.resnet50.fc.in_features
        self.resnet50.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.resnet50(x)
        return x


class Resnet101_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Resnet101_model, self).__init__()
        self.resnet101 = models.resnet101(pretrained=pretrained)
        num_ftrs = self.resnet101.fc.in_features
        self.resnet101.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.resnet101(x)
        return x


class ViT_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(ViT_model, self).__init__()
        self.vit = models.vit_b_16(pretrained=pretrained)
        num_ftrs = self.vit.heads.head.in_features
        self.vit.heads.head = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.vit(x)
        return x


class Swin_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Swin_model, self).__init__()
        self.swin = models.swin_b(pretrained=pretrained)
        num_ftrs = self.swin.head.in_features
        self.swin.head = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.swin(x)
        return x


class Mobilenet_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Mobilenet_model, self).__init__()
        self.mobilenet = models.mobilenet_v3_small(pretrained=pretrained)
        num_ftrs = self.mobilenet.classifier[-1].in_features
        self.mobilenet.classifier[-1] = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.mobilenet(x)
        return x


class Densenet_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Densenet_model, self).__init__()
        self.densenet = models.densenet121(pretrained=pretrained)
        num_ftrs = self.densenet.classifier.in_features
        self.densenet.classifier = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.densenet(x)
        return x


class Shufflenet_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Shufflenet_model, self).__init__()
        self.shufflenet = models.shufflenet_v2_x1_0(pretrained=pretrained)
        num_ftrs = self.shufflenet.fc.in_features
        self.shufflenet.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.shufflenet(x)
        return x


class Res2Net101_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(Res2Net101_model, self).__init__()
        self.res2net101 = res2net101_26w_4s(pretrained=pretrained)
        num_ftrs = self.res2net101.fc.in_features
        self.res2net101.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.res2net101(x)
        return x


class SCnet101_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(SCnet101_model, self).__init__()
        self.scnet101 = scnet101(pretrained=pretrained)
        num_ftrs = self.scnet101.fc.in_features
        self.scnet101.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.scnet101(x)
        return x


class SCnet50_model(nn.Module):
    def __init__(self, class_num, pretrained=False):
        super(SCnet50_model, self).__init__()
        self.scnet50 = scnet50(pretrained=pretrained)
        num_ftrs = self.scnet50.fc.in_features
        self.scnet50.fc = nn.Linear(num_ftrs, class_num)

    def forward(self, x):
        x = self.scnet50(x)
        return x


# from resnest.torch import resnest101
#
# class Resnest101_model(nn.Module):
#     def __init__(self, class_num, pretrained=False):
#         super(Resnest101_model, self).__init__()
#         self.resnest101 = resnest101(pretrained=pretrained)
#         num_ftrs = self.resnest101.fc.in_features
#         self.resnest101.fc = nn.Linear(num_ftrs, class_num)
#
#     def forward(self, x):
#         x = self.resnest101(x)
#         return x
```
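train_for_3.py chooses among these wrapper classes with a long if/elif chain on `model_name`. A dictionary that maps names to classes is a common alternative: adding a model becomes one line, and a typo produces an error that lists the valid names. This is a minimal sketch, assuming every wrapper keeps the `(class_num, pretrained)` constructor used above. `Resnet18Stub` and `MobilenetStub` are hypothetical stand-ins so that the example runs without torch. In the real script the dictionary would list the real classes (`Resnet18_model`, `Mobilenet_model`, ...).

```python
class Resnet18Stub:
    """Hypothetical stand-in for Resnet18_model (same constructor signature)."""

    def __init__(self, class_num, pretrained=False):
        self.class_num = class_num
        self.pretrained = pretrained


class MobilenetStub(Resnet18Stub):
    """Hypothetical stand-in for Mobilenet_model."""


# In train_for_3.py this would map to the real wrappers, e.g. "Resnet18": Resnet18_model.
MODEL_REGISTRY = {
    "Resnet18": Resnet18Stub,
    "Mobilenet": MobilenetStub,
}


def build_model(model_name, class_num, pretrained=False):
    """Instantiate the wrapper registered under model_name."""
    if model_name not in MODEL_REGISTRY:
        raise NotImplementedError(
            "unknown model_name {!r}, choose one of {}".format(model_name, sorted(MODEL_REGISTRY))
        )
    return MODEL_REGISTRY[model_name](class_num=class_num, pretrained=pretrained)


model = build_model("Resnet18", class_num=2)
print(type(model).__name__, model.class_num)  # Resnet18Stub 2
```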

train_for_3.py

```python
import os
import random

import numpy as np
import pandas as pd
import seaborn as sns
import torch
from matplotlib import pyplot as plt
from sklearn.metrics import confusion_matrix
from torch import nn, optim
from torch.utils.data import DataLoader
from tqdm import tqdm

from dataset import mydataset
from models import *
# from torchsummary import summary  # only needed by the commented-out summary() call below


def set_seed(seed=7):
    os.environ["PYTHONHASHSEED"] = str(seed)
    np.random.seed(seed)
    random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False


if __name__ == '__main__':
    # Fix the random seeds
    set_seed()
    # Directory for the saved model and figures
    log_dir = "./log"
    os.makedirs(log_dir, exist_ok=True)
    # Batch size
    batch_size = 64
    # Learning rate
    lr = 1e-4
    # Number of training epochs
    epochs = 10
    # Dataset folders and class-name file
    class_txt = "./class_name.txt"
    train_root = "./train"
    val_root = "./test"
    test_root = "./test"
    # Number of classes
    class_num = 2
    # Whether to start from ImageNet-pretrained classification weights
    pretrained = False
    # Which model to train
    model_name = "Resnet18"

    ########## Do not change anything below this line!!!
    if model_name == "Efficientnet":
        model = Efficientnet_v2_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Resnet18":
        model = Resnet18_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Resnet34":
        model = Resnet34_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Resnet50":
        model = Resnet50_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Resnet101":
        model = Resnet101_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "ViT":
        model = ViT_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Swin":
        model = Swin_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Mobilenet":
        model = Mobilenet_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Densenet":
        model = Densenet_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Shufflenet":
        model = Shufflenet_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "Res2Net101":
        model = Res2Net101_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "SCnet50":
        model = SCnet50_model(class_num=class_num, pretrained=pretrained)
    elif model_name == "SCnet101":
        model = SCnet101_model(class_num=class_num, pretrained=pretrained)
    # elif model_name == "Resnest101":
    #     model = Resnest101_model(class_num=class_num, pretrained=pretrained)
    else:
        raise NotImplementedError("unknown model_name: {}".format(model_name))

    # Inspect the model (uncomment for a forward pass or a torchsummary parameter count)
    # print(model(torch.randn(1, 3, 224, 224)))
    # try:
    #     print(summary(model, (3, 224, 224), 1, "cpu"))
    # except:
    #     print(model)
    print(model)

    # Choose the device
    device = "cuda" if torch.cuda.is_available() else "cpu"
    print("using {}".format(device))
    model = model.to(device)

    train_dataset = mydataset(train_root, class_txt)
    train_datasetloader = DataLoader(train_dataset, batch_size, shuffle=True)
    val_dataset = mydataset(val_root, class_txt)
    val_datasetloader = DataLoader(val_dataset, batch_size, shuffle=False)
    test_dataset = mydataset(test_root, class_txt)
    # batch_size=1 so that one annotated figure is saved per test image
    test_datasetloader = DataLoader(test_dataset, 1, shuffle=False)
    print('Train on {} samples, validate on {} samples.'.format(len(train_dataset), len(val_dataset)))

    # Cross-entropy loss, Adam, and a cosine-annealed learning rate (down to 5e-6 after `epochs` epochs)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr)
    scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=epochs, eta_min=0.000005)

    best_acc = -1e3
    train_acc_list = []
    val_acc_list = []
    train_loss_list = []
    val_loss_list = []
    lr_list = []
    for epoch in range(epochs):
        print("epoch {}/{}".format(epoch + 1, epochs))
        train_loss = 0
        val_loss = 0

        # Training
        train_predict = []
        train_truth_label = []
        model.train()
        for i, (x, label) in tqdm(enumerate(train_datasetloader), total=len(train_datasetloader)):
            x = x.to(device)
            label = label.to(device)
            predict = model(x)  # (batch, class_num) logits
            loss = criterion(predict, label)
            optimizer.zero_grad()  # clear gradients from the previous step
            loss.backward()
            optimizer.step()
            train_loss = train_loss + loss.item()
            predict_index = torch.argmax(predict, dim=-1)  # (batch,), e.g. [1, 1, 0]
            train_predict.append(predict_index.cpu().numpy())
            train_truth_label.append(label.cpu().numpy())
        # Record the learning rate used in this epoch (get_lr() is not meant to be called outside step())
        lr_list.append(scheduler.get_last_lr()[0])
        # Update the learning rate
        scheduler.step()
        train_predict = np.concatenate(train_predict)
        train_truth_label = np.concatenate(train_truth_label)
        acc = (train_predict == train_truth_label).sum() / len(train_predict)
        train_acc_list.append(acc * 100)
        train_loss /= (i + 1)
        train_loss_list.append(train_loss)

        # Validation
        val_predict = []
        val_truth_label = []
        model.eval()
        with torch.no_grad():
            for i, (x, label) in tqdm(enumerate(val_datasetloader), total=len(val_datasetloader)):
                x = x.to(device)
                label = label.to(device)
                predict = model(x)
                loss = criterion(predict, label)
                val_loss = val_loss + loss.item()
                predict_index = torch.argmax(predict, dim=-1)
                val_predict.append(predict_index.cpu().numpy())
                val_truth_label.append(label.cpu().numpy())
        val_loss /= (i + 1)
        val_loss_list.append(val_loss)
        val_predict = np.concatenate(val_predict)
        val_truth_label = np.concatenate(val_truth_label)
        acc = (val_predict == val_truth_label).sum() / len(val_predict)
        val_acc_list.append(acc * 100)
        print("val acc: {}, best acc so far: {}".format(acc, best_acc))
        # Keep the weights with the best validation accuracy
        if acc > best_acc:
            print("save model")
            best_acc = acc
            torch.save(model.state_dict(), os.path.join(log_dir, "{}.pth".format(model_name)))

    # Plot the accuracy, loss and learning-rate curves
    plt.close()
    # plt.plot(np.linspace(1, int(epochs + 1), num=epochs), train_acc_list)
    # plt.plot(np.linspace(1, int(epochs + 1), num=epochs), val_acc_list)
    # plt.xticks(range(1, epochs + 2))
    plt.plot(train_acc_list)
    plt.plot(val_acc_list)
    plt.title('accuracy of train and val')
    plt.xlabel("epochs")
    plt.ylabel("accuracy")
    plt.legend(["accuracy of train", "accuracy of val"])
    plt.savefig(os.path.join(log_dir, "acc_{}.jpg".format(model_name)), dpi=400)

    plt.close()
    # plt.plot(np.linspace(1, int(epochs + 1), num=epochs), train_loss_list)
    # plt.plot(np.linspace(1, int(epochs + 1), num=epochs), val_loss_list)
    # plt.xticks(range(1, epochs + 2))
    plt.plot(train_loss_list)
    plt.plot(val_loss_list)
    plt.title('loss of train and val')
    plt.xlabel("epochs")
    plt.ylabel("CrossEntropyLoss")
    plt.legend(["loss of train", "loss of val"])
    plt.savefig(os.path.join(log_dir, "loss_{}.jpg".format(model_name)), dpi=400)

    plt.close()
    # plt.plot(np.linspace(1, int(epochs + 1), num=epochs), lr_list)
    # plt.xticks(range(1, epochs + 2))
    plt.plot(lr_list)
    plt.ticklabel_format(axis='y', scilimits=[-3, 3])
    plt.title('learning rate')
    plt.xlabel("epochs")
    plt.ylabel("learning rate")
    plt.savefig(os.path.join(log_dir, "lr_{}.jpg".format(model_name)), dpi=400)
    plt.close()

    ### Test with the best checkpoint
    model = model.to("cpu")
    ckp = torch.load(os.path.join(log_dir, "{}.pth".format(model_name)), map_location="cpu")
    model.load_state_dict(ckp)
    model = model.to(device)
    model.eval()
    test_predict = []
    test_truth_label = []
    IMAGENET_DEFAULT_MEAN = (0.485, 0.456, 0.406)
    IMAGENET_DEFAULT_STD = (0.229, 0.224, 0.225)
    mean = torch.as_tensor(IMAGENET_DEFAULT_MEAN).to(device)[None, :, None, None]
    std = torch.as_tensor(IMAGENET_DEFAULT_STD).to(device)[None, :, None, None]
    with torch.no_grad():
        for i, (x, label) in tqdm(enumerate(test_datasetloader), total=len(test_datasetloader)):
            x = x.to(device)
            label = label.to(device)
            predict = model(x)
            predict_index = torch.argmax(predict, dim=-1)
            test_predict.append(predict_index.cpu().numpy())
            test_truth_label.append(label.cpu().numpy())
            predict = torch.softmax(predict, dim=-1)
            # Undo the ImageNet normalization to get back an image in [0, 1]
            ori_img = x * std + mean
            true_cls = label[0].item()
            pred_cls = predict_index[0].item()
            prob = predict[0, pred_cls].item()
            image = np.clip(ori_img[0].cpu().permute(1, 2, 0).numpy(), 0, 1)
            plt.imshow((255 * image).astype(np.uint8))
            plt.axis('off')
            plt.title("label_{}_predict_{}_possibility_{:.2f}".format(true_cls, pred_cls, prob), fontsize='large',
                      color=("green" if true_cls == pred_cls else "red"))
            plt.savefig(os.path.join(log_dir, "index_{}_label_{}_predict_{}_prob_{:.2f}.jpg".format(i, true_cls, pred_cls, prob)))
            plt.close()  # one figure per image; otherwise images pile up on the same axes
    test_predict = np.concatenate(test_predict)
    test_truth_label = np.concatenate(test_truth_label)
    acc = (test_predict == test_truth_label).sum() / len(test_predict)
    print("now test acc: {}".format(acc))

    # Row-normalized confusion matrix (rows: ground truth, columns: prediction)
    matrix = confusion_matrix(test_truth_label, test_predict, labels=list(range(class_num)), normalize='true')
    dataframe = pd.DataFrame(matrix)
    plt.close()
    sns.heatmap(dataframe, annot=True, cmap="OrRd")
    plt.title("confusion_matrix")
    plt.ylabel("ground truth")
    plt.xlabel("predict label")
    plt.savefig(os.path.join(log_dir, "confusion_matrix_{}.jpg".format(model_name)), dpi=400)
```
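The script finishes by plotting scikit-learn's `confusion_matrix(..., normalize='true')`. With `normalize='true'`, each row (one ground-truth class) is divided by that class's sample count. The diagonal then holds the per-class recall, and every non-empty row sums to 1. The standard-library sketch below recomputes the same quantity on toy labels. The labels are made up for illustration and are not results from this project. `confusion_matrix_normalized` is a hypothetical helper, not the scikit-learn function.

```python
def confusion_matrix_normalized(y_true, y_pred, num_classes):
    """Rows = ground truth, columns = prediction, each row divided by its total."""
    counts = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        counts[t][p] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # A class with no samples gets an all-zero row instead of a division by zero
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


y_true = [0, 0, 0, 0, 1, 1]  # toy labels
y_pred = [0, 0, 0, 1, 1, 1]
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
matrix = confusion_matrix_normalized(y_true, y_pred, num_classes=2)
print(accuracy)  # 0.8333333333333334
print(matrix)    # [[0.75, 0.25], [0.0, 1.0]]
```

For these toy labels, the overall accuracy (5/6) differs from the mean of the diagonal (0.875). The per-class view is therefore useful when the classes are imbalanced.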

SCnet.py (taken from the authors' open-source repository, code unmodified)

```python
##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++
## Created by: Jiang-Jiang Liu
## Copyright (c) 2020
##
## LICENSE file in the root directory of this source tree
##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++
"""SCNet variants"""
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.utils.model_zoo as model_zoo

__all__ = ['SCNet', 'scnet50', 'scnet101', 'scnet50_v1d', 'scnet101_v1d']

model_urls = {
    'scnet50': 'https://backseason.oss-cn-beijing.aliyuncs.com/scnet/scnet50-dc6a7e87.pth',
    'scnet50_v1d': 'https://backseason.oss-cn-beijing.aliyuncs.com/scnet/scnet50_v1d-4109d1e1.pth',
    'scnet101': 'https://backseason.oss-cn-beijing.aliyuncs.com/scnet/scnet101-44c5b751.pth',
    # 'scnet101_v1d': coming soon...
}


class SCConv(nn.Module):
    def __init__(self, inplanes, planes, stride, padding, dilation, groups, pooling_r, norm_layer):
        super(SCConv, self).__init__()
        self.k2 = nn.Sequential(
            nn.AvgPool2d(kernel_size=pooling_r, stride=pooling_r),
            nn.Conv2d(inplanes, planes, kernel_size=3, stride=1,
                      padding=padding, dilation=dilation,
                      groups=groups, bias=False),
            norm_layer(planes),
        )
        self.k3 = nn.Sequential(
            nn.Conv2d(inplanes, planes, kernel_size=3, stride=1,
                      padding=padding, dilation=dilation,
                      groups=groups, bias=False),
            norm_layer(planes),
        )
        self.k4 = nn.Sequential(
            nn.Conv2d(inplanes, planes, kernel_size=3, stride=stride,
                      padding=padding, dilation=dilation,
                      groups=groups, bias=False),
            norm_layer(planes),
        )

    def forward(self, x):
        identity = x

        out = torch.sigmoid(torch.add(identity, F.interpolate(self.k2(x), identity.size()[2:])))  # sigmoid(identity + k2)
        out = torch.mul(self.k3(x), out)  # k3 * sigmoid(identity + k2)
        out = self.k4(out)  # k4

        return out


class SCBottleneck(nn.Module):
    """SCNet SCBottleneck
    """
    expansion = 4
    pooling_r = 4  # down-sampling rate of the avg pooling layer in the K2 path of SC-Conv.

    def __init__(self, inplanes, planes, stride=1, downsample=None,
                 cardinality=1, bottleneck_width=32,
                 avd=False, dilation=1, is_first=False,
                 norm_layer=None):
        super(SCBottleneck, self).__init__()
        group_width = int(planes * (bottleneck_width / 64.)) * cardinality
        self.conv1_a = nn.Conv2d(inplanes, group_width, kernel_size=1, bias=False)
        self.bn1_a = norm_layer(group_width)
        self.conv1_b = nn.Conv2d(inplanes, group_width, kernel_size=1, bias=False)
        self.bn1_b = norm_layer(group_width)
        self.avd = avd and (stride > 1 or is_first)

        if self.avd:
            self.avd_layer = nn.AvgPool2d(3, stride, padding=1)
            stride = 1

        self.k1 = nn.Sequential(
            nn.Conv2d(
                group_width, group_width, kernel_size=3, stride=stride,
                padding=dilation, dilation=dilation,
                groups=cardinality, bias=False),
            norm_layer(group_width),
        )

        self.scconv = SCConv(
            group_width, group_width, stride=stride,
            padding=dilation, dilation=dilation,
            groups=cardinality, pooling_r=self.pooling_r, norm_layer=norm_layer)

        self.conv3 = nn.Conv2d(
            group_width * 2, planes * 4, kernel_size=1, bias=False)
        self.bn3 = norm_layer(planes * 4)

        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.dilation = dilation
        self.stride = stride

    def forward(self, x):
        residual = x

        out_a = self.conv1_a(x)
        out_a = self.bn1_a(out_a)
        out_b = self.conv1_b(x)
        out_b = self.bn1_b(out_b)
        out_a = self.relu(out_a)
        out_b = self.relu(out_b)

        out_a = self.k1(out_a)
        out_b = self.scconv(out_b)
        out_a = self.relu(out_a)
        out_b = self.relu(out_b)

        if self.avd:
            out_a = self.avd_layer(out_a)
            out_b = self.avd_layer(out_b)

        out = self.conv3(torch.cat([out_a, out_b], dim=1))
        out = self.bn3(out)

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)

        return out


class SCNet(nn.Module):
    """ SCNet Variants Definitions
    Parameters
    ----------
    block : Block
        Class for the residual block.
    layers : list of int
        Numbers of layers in each block.
    classes : int, default 1000
        Number of classification classes.
    dilated : bool, default False
        Applying dilation strategy to pretrained SCNet yielding a stride-8 model.
    deep_stem : bool, default False
        Replace 7x7 conv in input stem with 3 3x3 conv.
    avg_down : bool, default False
        Use AvgPool instead of stride conv when
        downsampling in the bottleneck.
    norm_layer : object
        Normalization layer used (default: :class:`torch.nn.BatchNorm2d`).
    Reference:
        - He, Kaiming, et al. "Deep residual learning for image recognition."
        Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.
        - Yu, Fisher, and Vladlen Koltun. "Multi-scale context aggregation by dilated convolutions."
    """
    def __init__(self, block, layers, groups=1, bottleneck_width=32,
                 num_classes=1000, dilated=False, dilation=1,
                 deep_stem=False, stem_width=64, avg_down=False,
                 avd=False, norm_layer=nn.BatchNorm2d):
        self.cardinality = groups
        self.bottleneck_width = bottleneck_width
        # ResNet-D params
        self.inplanes = stem_width * 2 if deep_stem else 64
        self.avg_down = avg_down
        self.avd = avd

        super(SCNet, self).__init__()
        conv_layer = nn.Conv2d
        if deep_stem:
            self.conv1 = nn.Sequential(
                conv_layer(3, stem_width, kernel_size=3, stride=2, padding=1, bias=False),
                norm_layer(stem_width),
                nn.ReLU(inplace=True),
                conv_layer(stem_width, stem_width, kernel_size=3, stride=1, padding=1, bias=False),
                norm_layer(stem_width),
                nn.ReLU(inplace=True),
                conv_layer(stem_width, stem_width * 2, kernel_size=3, stride=1, padding=1, bias=False),
            )
        else:
            self.conv1 = conv_layer(3, 64, kernel_size=7, stride=2, padding=3,
                                    bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0], norm_layer=norm_layer, is_first=False)
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2, norm_layer=norm_layer)
        if dilated or dilation == 4:
            self.layer3 = self._make_layer(block, 256, layers[2], stride=1,
                                           dilation=2, norm_layer=norm_layer)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=1,
                                           dilation=4, norm_layer=norm_layer)
        elif dilation == 2:
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2,
                                           dilation=1, norm_layer=norm_layer)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=1,
                                           dilation=2, norm_layer=norm_layer)
        else:
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2,
                                           norm_layer=norm_layer)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2,
                                           norm_layer=norm_layer)
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, norm_layer):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def _make_layer(self, block, planes, blocks, stride=1, dilation=1, norm_layer=None,
                    is_first=True):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            down_layers = []
            if self.avg_down:
                if dilation == 1:
                    down_layers.append(nn.AvgPool2d(kernel_size=stride, stride=stride,
                                                    ceil_mode=True, count_include_pad=False))
                else:
                    down_layers.append(nn.AvgPool2d(kernel_size=1, stride=1,
                                                    ceil_mode=True, count_include_pad=False))
                down_layers.append(nn.Conv2d(self.inplanes, planes * block.expansion,
                                             kernel_size=1, stride=1, bias=False))
            else:
                down_layers.append(nn.Conv2d(self.inplanes, planes * block.expansion,
                                             kernel_size=1, stride=stride, bias=False))
            down_layers.append(norm_layer(planes * block.expansion))
            downsample = nn.Sequential(*down_layers)

        layers = []
        if dilation == 1 or dilation == 2:
            layers.append(block(self.inplanes, planes, stride, downsample=downsample,
                                cardinality=self.cardinality,
                                bottleneck_width=self.bottleneck_width,
                                avd=self.avd, dilation=1, is_first=is_first,
                                norm_layer=norm_layer))
        elif dilation == 4:
            layers.append(block(self.inplanes, planes, stride, downsample=downsample,
                                cardinality=self.cardinality,
                                bottleneck_width=self.bottleneck_width,
                                avd=self.avd, dilation=2, is_first=is_first,
                                norm_layer=norm_layer))
        else:
            raise RuntimeError("=> unknown dilation size: {}".format(dilation))

        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes,
                                cardinality=self.cardinality,
                                bottleneck_width=self.bottleneck_width,
                                avd=self.avd, dilation=dilation,
                                norm_layer=norm_layer))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)

        return x


def scnet50(pretrained=False, **kwargs):
    """Constructs a SCNet-50 model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = SCNet(SCBottleneck, [3, 4, 6, 3],
                  deep_stem=False, stem_width=32, avg_down=False,
                  avd=False, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['scnet50']))
    return model


def scnet50_v1d(pretrained=False, **kwargs):
    """Constructs a SCNet-50_v1d model described in
    `Bag of Tricks `_.
    `ResNeSt: Split-Attention Networks `_.
    Compared with default SCNet(SCNetv1b), SCNetv1d replaces the 7x7 conv
    in the input stem with three 3x3 convs. And in the downsampling block,
    a 3x3 avg_pool with stride 2 is added before conv, whose stride is
    changed to 1.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = SCNet(SCBottleneck, [3, 4, 6, 3],
                  deep_stem=True, stem_width=32, avg_down=True,
                  avd=True, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['scnet50_v1d']))
    return model


def scnet101(pretrained=False, **kwargs):
    """Constructs a SCNet-101 model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = SCNet(SCBottleneck, [3, 4, 23, 3],
                  deep_stem=False, stem_width=64, avg_down=False,
                  avd=False, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['scnet101']))
    return model


def scnet101_v1d(pretrained=False, **kwargs):
    """Constructs a SCNet-101_v1d model described in
    `Bag of Tricks `_.
    `ResNeSt: Split-Attention Networks `_.
    Compared with default SCNet(SCNetv1b), SCNetv1d replaces the 7x7 conv
    in the input stem with three 3x3 convs. And in the downsampling block,
    a 3x3 avg_pool with stride 2 is added before conv, whose stride is
    changed to 1.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = SCNet(SCBottleneck, [3, 4, 23, 3],
                  deep_stem=True, stem_width=64, avg_down=True,
                  avd=True, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['scnet101_v1d']))
    return model
```
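Reading `SCConv.forward` and `SCBottleneck.forward` above, the self-calibrated block can be summarized as follows. This is our reading of the code, not a formula quoted from the paper. The notation is our own: $K_1, K_2, K_3, K_4$ are `self.k1`, `self.k2`, `self.k3`, `self.k4`; $\mathrm{Up}$ is the `F.interpolate` call back to the input's spatial size (nearest-neighbour by default); $r$ is `pooling_r` $=4$; $\sigma$ is the sigmoid; $\odot$ is element-wise multiplication; $\Vert$ is channel concatenation. $A$ and $B$ are the two `group_width`-channel branches produced by `conv1_a`/`conv1_b` (each followed by BN and ReLU). Only $K_4$ (and $K_1$) carry the block's stride, and the optional `avd` pooling is ignored here.

$$
\begin{aligned}
K_2(B) &= \mathrm{BN}\big(\mathrm{Conv}_{3\times 3}(\mathrm{AvgPool}_{r}(B))\big) \\
\mathrm{SCConv}(B) &= K_4\Big(K_3(B) \odot \sigma\big(B + \mathrm{Up}(K_2(B))\big)\Big) \\
\mathrm{out} &= \mathrm{ReLU}\Big(\mathrm{BN}\big(\mathrm{Conv}_{1\times 1}\big[\mathrm{ReLU}(K_1(A)) \,\Vert\, \mathrm{ReLU}(\mathrm{SCConv}(B))\big]\big) + \mathrm{shortcut}(X)\Big)
\end{aligned}
$$

The pooled path $K_2$ sees a 4x down-sampled map. Through the sigmoid gate, each output position is therefore calibrated by a wider context than the plain $3\times 3$ path $K_3$ alone provides.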

Res2Net.py (taken from the authors' open-source repository, code unmodified)

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.utils.model_zoo as model_zoo

__all__ = ['Res2Net', 'res2net50']

model_urls = {
    'res2net50_26w_4s': 'https://shanghuagao.oss-cn-beijing.aliyuncs.com/res2net/res2net50_26w_4s-06e79181.pth',
    'res2net50_48w_2s': 'https://shanghuagao.oss-cn-beijing.aliyuncs.com/res2net/res2net50_48w_2s-afed724a.pth',
    'res2net50_14w_8s': 'https://shanghuagao.oss-cn-beijing.aliyuncs.com/res2net/res2net50_14w_8s-6527dddc.pth',
    'res2net50_26w_6s': 'https://shanghuagao.oss-cn-beijing.aliyuncs.com/res2net/res2net50_26w_6s-19041792.pth',
    'res2net50_26w_8s': 'https://shanghuagao.oss-cn-beijing.aliyuncs.com/res2net/res2net50_26w_8s-2c7c9f12.pth',
    'res2net101_26w_4s': 'https://shanghuagao.oss-cn-beijing.aliyuncs.com/res2net/res2net101_26w_4s-02a759a1.pth',
}


class Bottle2neck(nn.Module):
    expansion = 4

    def __init__(self, inplanes, planes, stride=1, downsample=None, baseWidth=26, scale=4, stype='normal'):
        """ Constructor
        Args:
            inplanes: input channel dimensionality
            planes: output channel dimensionality
            stride: conv stride. Replaces pooling layer.
            downsample: None when stride = 1
            baseWidth: basic width of conv3x3
            scale: number of scale.
            stype: 'normal': normal set. 'stage': first block of a new stage.
        """
        super(Bottle2neck, self).__init__()

        width = int(math.floor(planes * (baseWidth / 64.0)))
        self.conv1 = nn.Conv2d(inplanes, width * scale, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(width * scale)

        if scale == 1:
            self.nums = 1
        else:
            self.nums = scale - 1
        if stype == 'stage':
            self.pool = nn.AvgPool2d(kernel_size=3, stride=stride, padding=1)
        convs = []
        bns = []
        for i in range(self.nums):
            convs.append(nn.Conv2d(width, width, kernel_size=3, stride=stride, padding=1, bias=False))
            bns.append(nn.BatchNorm2d(width))
        self.convs = nn.ModuleList(convs)
        self.bns = nn.ModuleList(bns)

        self.conv3 = nn.Conv2d(width * scale, planes * self.expansion, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(planes * self.expansion)

        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stype = stype
        self.scale = scale
        self.width = width

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        spx = torch.split(out, self.width, 1)
        for i in range(self.nums):
            if i == 0 or self.stype == 'stage':
                sp = spx[i]
            else:
                sp = sp + spx[i]
            sp = self.convs[i](sp)
            sp = self.relu(self.bns[i](sp))
            if i == 0:
                out = sp
            else:
                out = torch.cat((out, sp), 1)
        if self.scale != 1 and self.stype == 'normal':
            out = torch.cat((out, spx[self.nums]), 1)
        elif self.scale != 1 and self.stype == 'stage':
            out = torch.cat((out, self.pool(spx[self.nums])), 1)

        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)

        return out


class Res2Net(nn.Module):

    def __init__(self, block, layers, baseWidth=26, scale=4, num_classes=1000):
        self.inplanes = 64
        super(Res2Net, self).__init__()
        self.baseWidth = baseWidth
        self.scale = scale
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,
                               bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        self.avgpool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample=downsample,
                            stype='stage', baseWidth=self.baseWidth, scale=self.scale))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes, baseWidth=self.baseWidth, scale=self.scale))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)

        return x


def res2net50(pretrained=False, **kwargs):
    """Constructs a Res2Net-50 model.
    Res2Net-50 refers to the Res2Net-50_26w_4s.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = Res2Net(Bottle2neck, [3, 4, 6, 3], baseWidth=26, scale=4, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['res2net50_26w_4s']))
    return model


def res2net50_26w_4s(pretrained=False, **kwargs):
    """Constructs a Res2Net-50_26w_4s model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = Res2Net(Bottle2neck, [3, 4, 6, 3], baseWidth=26, scale=4, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['res2net50_26w_4s']))
    return model


def res2net101_26w_4s(pretrained=False, **kwargs):
    """Constructs a Res2Net-101_26w_4s model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = Res2Net(Bottle2neck, [3, 4, 23, 3], baseWidth=26, scale=4, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['res2net101_26w_4s']))
    return model


def res2net50_26w_6s(pretrained=False, **kwargs):
    """Constructs a Res2Net-50_26w_6s model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = Res2Net(Bottle2neck, [3, 4, 6, 3], baseWidth=26, scale=6, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['res2net50_26w_6s']))
    return model


def res2net50_26w_8s(pretrained=False, **kwargs):
    """Constructs a Res2Net-50_26w_8s model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = Res2Net(Bottle2neck, [3, 4, 6, 3], baseWidth=26, scale=8, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['res2net50_26w_8s']))
    return model


def res2net50_48w_2s(pretrained=False, **kwargs):
    """Constructs a Res2Net-50_48w_2s model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = Res2Net(Bottle2neck, [3, 4, 6, 3], baseWidth=48, scale=2, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['res2net50_48w_2s']))
    return model


def res2net50_14w_8s(pretrained=False, **kwargs):
    """Constructs a Res2Net-50_14w_8s model.
    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = Res2Net(Bottle2neck, [3, 4, 6, 3], baseWidth=14, scale=8, **kwargs)
    if pretrained:
        model.load_state_dict(model_zoo.load_url(model_urls['res2net50_14w_8s']))
    return model
```
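In `Bottle2neck.forward`, the conv1 output is split into `scale` groups of `width` channels, where width = floor(planes * baseWidth / 64). That gives 26 channels per group for planes=64 and baseWidth=26. In a 'normal' block, each 3x3 conv after the first receives the previous conv's output plus its own split, and the last split passes through unchanged. In the first block of a stage ('stage'), the splits are convolved independently and the last split is average-pooled to match the stride. The hypothetical helpers below replay that loop with strings instead of tensors, so the growing receptive field is visible without torch. K1, K2, ... stand for the convs in `self.convs`, which in the real code are each followed by BN and ReLU.

```python
import math


def bottle2neck_width(planes, base_width=26):
    """Channels per split, as computed in Bottle2neck.__init__."""
    return int(math.floor(planes * (base_width / 64.0)))


def res2net_split_trace(scale, stype="normal"):
    """Replay Bottle2neck's split loop symbolically; returns one expression per output split."""
    spx = ["x{}".format(i + 1) for i in range(scale)]  # the `scale` channel groups
    nums = 1 if scale == 1 else scale - 1  # number of 3x3 convs
    outs = []
    sp = None
    for i in range(nums):
        if i == 0 or stype == "stage":
            sp = spx[i]  # first split, or every split in a 'stage' block
        else:
            sp = "{} + {}".format(sp, spx[i])  # previous conv output plus the current split
        sp = "K{}({})".format(i + 1, sp)
        outs.append(sp)
    if scale != 1:
        # The last split skips the 3x3 convs (pooled in 'stage' blocks to match the stride)
        outs.append(spx[nums] if stype == "normal" else "AvgPool({})".format(spx[nums]))
    return outs


print(bottle2neck_width(64))  # 26
for expr in res2net_split_trace(4):
    print(expr)
# K1(x1)
# K2(K1(x1) + x2)
# K3(K2(K1(x1) + x2) + x3)
# x4
```

The concatenated outputs then go through conv3. Because K3 sees x1 through three stacked 3x3 convs, a single block mixes several receptive-field sizes.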