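Load-Balanced, Highly Available Web Cluster Project Based on nginx + keepalived

Contents

Project name:

Load-Balanced, Highly Available Web Cluster Project Based on nginx + keepalived

Project topology diagram:

Project environment:

Project IP address plan:

Project description:

Project steps:

I. Prepare 10 fresh virtual machines, configure static IPs according to the IP plan, set up passwordless SSH, and use Ansible to deploy the software environment in batch

1. Configure static IP addresses on the 10 machines according to the IP plan

2. Change the hostname of each machine

3. Generate a key pair

4. Upload the public key to the other remote hosts

5. Install Ansible

6. Write the host inventory (hosts): the IPs of the hosts to be controlled remotely

7. Write a playbook to deploy nginx, keepalived, node_exporter, DNS and other software in batch

8. Manually install Prometheus, Grafana and ab

9. Run the software_install.yaml playbook with Ansible to build the base environment

II. Simulate a middle-tier forwarding system: nginx on two load balancers provides layer-7 load balancing, and the backend nginx cluster serves identical content from an NFS share

1. Implementing load balancing

2. Serving identical content from an NFS server

Installing the NFS server

Installing NFS on the web cluster

Editing the exports file on the NFS server

Creating the shared directory

Exporting the shared directory

Stopping the firewall so the other machines can connect

Mounting the shared directory on the other web servers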

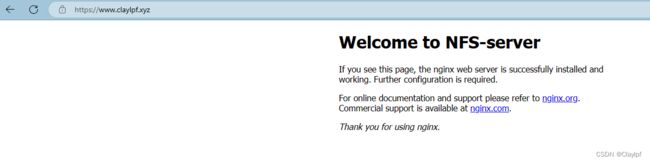

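Test:

III. Use nginx's HTTPS, version hiding, authentication, realip and other modules

Setting up HTTP and HTTPS with nginx

Buy a domain on Alibaba Cloud and apply for a free SSL certificate, then upload the certificate bound to the domain to /usr/local/claynginx66/conf/

Configuring nginx:

Editing the hosts file on Windows

Test:

Hiding the version:

Implementing realip

Status statistics

IV. Build a dual-VIP, dual-master high-availability setup with keepalived on the load balancers, and monitor the local nginx process with a health check and a notify script

Enabling two VRRP instances on both load balancers

keepalived configuration on nginx-lb1

keepalived configuration on nginx-lb2

Test:

Stopping nginx on LB1 to test whether the VIP fails over

V. Set up a primary DNS server with two load-balancing records for DNS-based load balancing, so that the same URL resolves to both VIPs

Installing the DNS server

Editing the DNS configuration to listen on port 53 on every address and to answer queries from any IP

Setting up the primary DNS server

Test

VI. Deploy Prometheus + Grafana to monitor and chart the backend servers and the load balancers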

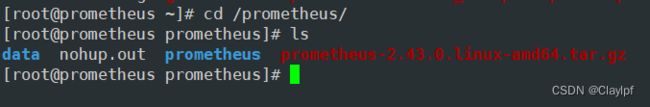

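Installing Prometheus as a service (download the package from the official site, upload it to /prometheus, and unpack it)

Adding the Prometheus directory to PATH, temporarily and permanently

Checking the result and running it in the background with nohup

Managing Prometheus as a service

Accessing Prometheus after installation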

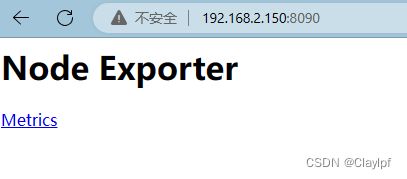

Installing node_exporter on the web and LB clusters to collect metrics

Adding the web and LB hosts that run node_exporter to the Prometheus configuration (prometheus.yml)

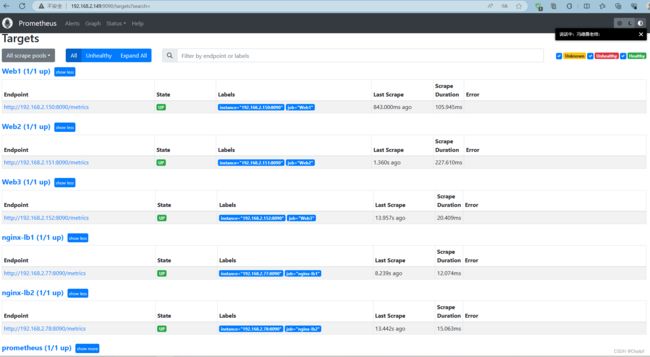

Checking the result in Prometheus

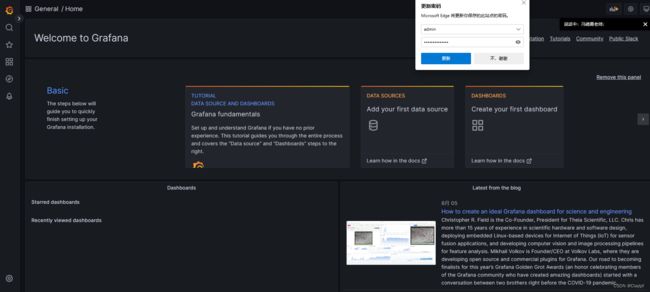

Grafana: installing and deploying Grafana

Logging in to Grafana to check the result

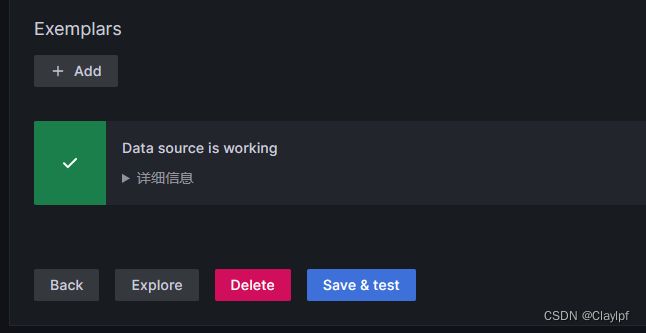

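Configuring the Prometheus data source and importing Grafana dashboard templates

Configuring the data source and importing templates:

Configuring the Prometheus data source

Using dashboards

Checking the result

Viewing the folder of saved dashboards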

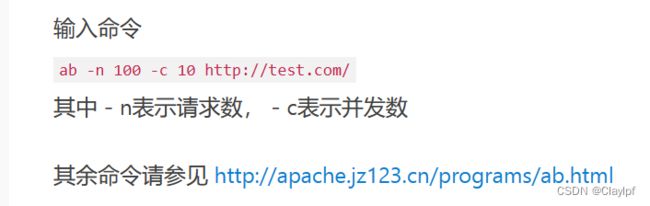

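VII. Load-test the web cluster and the load balancers from the ab machine to find the system's performance bottlenecks

Installing ab (yum install -y httpd-tools) for load testing

ab test:

VIII. Tune system resources (such as kernel and nginx parameters) to improve performance

Kernel parameter tuning (optimizing the Linux kernel)

1. Improving concurrency and throughput

2. Swap tuning: use swap only when physical memory runs out, to make better use of RAM

3. Tuning nginx parameters

Problems encountered:

Lessons learned

Project name:

Load-Balanced, Highly Available Web Cluster Project Based on nginx + keepalived

Project topology diagram: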

Project environment:

CentOS: CentOS Linux release 7.9.2009 (Core)

Nginx: nginx/1.23.3

DNS: BIND 9.11.4-P2-RedHat-9.11.4-26.P2.el7_9.13

Ansible: ansible 2.9.27

Keepalived: Keepalived v1.3.5 (03/19,2017)

Prometheus: prometheus, version 2.43.0

Grafana: grafana 9.4.7

NFS: nfs v4

ab: ApacheBench, Version 2.3

Project IP address plan:

Ansible: 192.168.2.230

Windows client: 192.168.2.43

Nginx-lb1 (load balancer): 192.168.2.77

Nginx-lb2 (load balancer): 192.168.2.78

Prometheus: 192.168.2.149

Web1: 192.168.2.150

Web2: 192.168.2.151

Web3: 192.168.2.152

DNS: 192.168.2.155

NFS: 192.168.2.157

AB: 192.168.2.161

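Project description:

This project simulates an enterprise building a highly available, high-performance web cluster that can handle highly concurrent web traffic. Ansible deploys the software environment; nginx provides layer-7 load balancing and serves the web tier; keepalived provides high availability with a dual-VIP setup; Prometheus + Grafana monitor the system resources of the web cluster and the load balancers; NFS gives the web cluster a single source of content; and a primary DNS server resolves the site name to the VIPs.

Project steps:

I. Prepare 10 fresh virtual machines, configure static IPs according to the IP plan, set up passwordless SSH, and use Ansible to deploy the software environment in batch

Reference: Claylpf's CSDN blog post "ansible 的学习" (learning Ansible)

1. Configure static IP addresses on the 10 machines according to the IP plan

For configuring a static IP on CentOS 7.9, see Claylpf's CSDN blog post "计算机网络 day6 arp病毒 - ICMP协议 - ping命令 - Linux手工配置IP地址" (computer networks day 6: ARP viruses, ICMP, ping, configuring Linux IP addresses manually)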

2. Change the hostname of each machine

[root@localhost ~]# hostnamectl set-hostname ansible

[root@localhost ~]# su - root

Last login: Wed Aug 2 15:00:27 CST 2023 from 192.168.2.43 on pts/0

[root@ansible ~]#

3. Generate a key pair

[root@ansible ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:kdNejwuLwHMHo3NpOKXMMBVobee+PRGrs5WghEtNO4M root@ansible

The key's randomart image is:

+---[RSA 2048]----+

| oo. |

| o.o .o |

| .o..oB . . |

| @ *.B.. o |

| E ^.S +o. . |

| . o %.+o+ . |

| . . .++.. |

| +.o |

| .o . |

+----[SHA256]-----+

[root@ansible ~]# ls

anaconda-ks.cfg

[root@ansible ~]# ls -a

. .. anaconda-ks.cfg .bash_logout .bash_profile .bashrc .cshrc .ssh .tcshrc

[root@ansible ~]# cd .ssh

[root@ansible .ssh]# ls

id_rsa id_rsa.pub

[root@ansible .ssh]#

4. Upload the public key to the other remote hosts

[root@ansible ~]# ls -a

. .. anaconda-ks.cfg .bash_logout .bash_profile .bashrc .cshrc .ssh .tcshrc

[root@ansible ~]# cd .ssh

[root@ansible .ssh]# ls

id_rsa id_rsa.pub

[root@ansible .ssh]# ssh-copy-id -i id_rsa.pub 192.168.2.77

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_rsa.pub"

The authenticity of host '192.168.2.77 (192.168.2.77)' can't be established.

ECDSA key fingerprint is SHA256:pwqygWUqVKBQy3ENsB8coZCtFFYJcZYyyxONd+J7268.

ECDSA key fingerprint is MD5:6b:4a:4b:3e:07:ae:f3:40:a1:6d:88:ed:f4:2c:7f:7b.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.2.77's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.2.77'"

and check to make sure that only the key(s) you wanted were added.

Repeat this for every remote host that needs software deployed: ssh-copy-id -i ~/.ssh/id_rsa.pub REMOTE_HOST_IP
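Since the same ssh-copy-id command is repeated for every host, a small loop can push the key to all of them. This is a minimal sketch, assuming the key pair already exists at ~/.ssh/id_rsa.pub and root password login is still allowed; MANAGED_HOSTS and push_keys are illustrative names, and the host list is copied from the inventory written in step 6. With DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: copy the Ansible node's public key to every managed host.
# Assumes ~/.ssh/id_rsa.pub exists and root password login is still allowed.
# Host list copied from the inventory (/etc/ansible/hosts) in step 6.
MANAGED_HOSTS=(192.168.2.150 192.168.2.151 192.168.2.152 192.168.2.155
               192.168.2.77 192.168.2.78 192.168.2.157)

push_keys() {
  local ip
  for ip in "$@"; do
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      # Only show what would be run.
      echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@${ip}"
    else
      # Asks once for the host's root password, then installs the key.
      ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@${ip}"
    fi
  done
}
```

Run `DRY_RUN=1 push_keys "${MANAGED_HOSTS[@]}"` first to review the list, then `push_keys "${MANAGED_HOSTS[@]}"`.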

5. Install Ansible

[root@ansible ~]# yum install -y epel-release #install the EPEL repository

[root@ansible ~]# yum install -y ansible #install Ansible

[root@ansible ~]# ansible --version #check the Ansible version

[root@ansible .ssh]# ansible --version

ansible 2.9.27

6. Write the host inventory (hosts): the IPs of the hosts to be controlled remotely

[root@ansible /]# cd /etc/ansible/

[root@ansible ansible]# ls

ansible.cfg hosts roles

[root@ansible ansible]# vim hosts

[root@ansible ansible]# cat hosts

[web_servers]

192.168.2.150 #web1

192.168.2.151 #web2

192.168.2.152 #web3

[dns_server]

192.168.2.155 #dns

[lb_servers]

192.168.2.77 #lb1

192.168.2.78 #lb2

[nfs_server]

192.168.2.157 #NFS_server

[root@ansible ansible]#
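To double-check which machines a group name reaches, the INI inventory can be read with a few lines of awk. This is a simplified sketch (list_group is a hypothetical helper): it only understands plain [group] sections with one host per line and strips trailing # comments. Ansible's own `ansible web_servers --list-hosts` remains the authoritative check.

```shell
#!/usr/bin/env bash
# Sketch: print the hosts listed under one [group] of an INI inventory.
# Handles only plain "[group]" sections with one host per line; trailing
# "#comments" are stripped. No support for :children, :vars or host ranges.
list_group() {
  local file=$1 group=$2
  awk -v g="[$group]" '
    /^[[:space:]]*\[/ { in_group = ($1 == g); next }   # a section header
    in_group {
      sub(/#.*/, "")                                   # drop inline comment
      if (NF) print $1                                 # first field = host
    }
  ' "$file"
}
```

For example, `list_group /etc/ansible/hosts lb_servers` should print 192.168.2.77 and 192.168.2.78.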

Try running a shell command on the nfs_server group:

[root@ansible ansible]# ansible nfs_server -m shell -a "ip add"

192.168.2.157 | CHANGED | rc=0 >>

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c9:1d:8f brd ff:ff:ff:ff:ff:ff

inet 192.168.2.157/24 brd 192.168.2.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fec9:1d8f/64 scope link

valid_lft forever preferred_lft forever

[root@ansible ansible]#

7. Write a playbook to deploy nginx, keepalived, node_exporter, DNS and other software in batch

[root@ansible /]# mkdir playbook #create the playbook directory

[root@ansible /]# cd playbook/

[root@ansible playbook]# vim software_install.yaml

[root@ansible playbook]# cat software_install.yaml

- hosts: web_servers   # web cluster
  remote_user: root
  tasks:
    # compile and install nginx on the web group
    - name: install nginx
      script: /etc/ansible/nginx/one_key_install_nginx.sh   # run the local one-key nginx script on the remote hosts
    # install nfs-utils on the web group so it can mount the NFS share (same content on every server)
    - name: install nfs
      yum: name=nfs-utils state=installed

- hosts: dns_server   # DNS server
  remote_user: root
  tasks:
    - name: install dns
      yum: name=bind.* state=installed

- hosts: lb_servers   # load balancers
  remote_user: root
  tasks:
    # compile and install nginx on the LB group
    - name: install nginx
      script: /etc/ansible/nginx/one_key_install_nginx.sh
    # install keepalived on the LB group for high availability
    - name: install keepalived
      yum: name=keepalived state=installed

- hosts: nfs_server   # NFS server
  remote_user: root
  tasks:
    - name: install nfs
      yum: name=nfs-utils state=installed

# run the local onekey_install_node_exporter.sh script to install node_exporter, which exposes metrics for Prometheus
- hosts: web_servers:lb_servers   # ":" combines the two groups; a space-separated list is not a valid host pattern
  remote_user: root
  tasks:
    - name: install node_exporters
      script: /etc/ansible/node_exporter/onekey_install_node_exporter.sh
      tags: install_exporter
    - name: start node_exporters   # run node_exporter in the background
      # full path, because Ansible's non-interactive shell does not read ~/.bashrc; output is redirected so the task does not hang
      shell: nohup /node_exporter/node_exporter --web.listen-address 0.0.0.0:8090 >/dev/null 2>&1 &
      tags: start_exporters   # tag so the exporters can later be started on their own with --tags start_exporters

[root@ansible playbook]#

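The playbook calls two scripts: one_key_install_nginx.sh and onekey_install_node_exporter.sh.

one_key_install_nginx.sh

Reference: Claylpf's CSDN blog post "计算机网络day15 HTTP协议 - 工作原理 - URI - 状态码 - nginx实现HTTP和HTTPS协议 - 连接方式和有无状态 - Cookie和Session和token - 报文结构" (computer networks day 15: HTTP, including HTTP and HTTPS with nginx)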

[root@ansible playbook]# cd /etc/ansible/

[root@ansible ansible]# ls

ansible.cfg hosts roles

[root@ansible ansible]# mkdir nginx

[root@ansible ansible]# cd nginx/

[root@ansible nginx]# vim one_key_install_nginx.sh

[root@ansible nginx]# cat one_key_install_nginx.sh

#!/bin/bash

#create a directory to hold the downloaded nginx source package

mkdir -p /nginx

cd /nginx

#create an unprivileged user for nginx, with login disabled

useradd -s /sbin/nologin clay

#download nginx

#wget http://nginx.org/download/nginx-1.23.2.tar.gz

curl -O http://nginx.org/download/nginx-1.23.2.tar.gz

#unpack the nginx source package

tar xf nginx-1.23.2.tar.gz

#install the packages nginx needs to build

yum install epel-release -y

yum install gcc gcc-c++ openssl openssl-devel pcre pcre-devel automake make psmisc net-tools lsof vim geoip geoip-devel wget zlib zlib-devel -y

#enter the unpacked nginx source directory

cd nginx-1.23.2

#configure the build

./configure --prefix=/usr/local/claynginx66 --user=clay --with-http_ssl_module --with-http_v2_module --with-stream --with-http_stub_status_module --with-threads

#compile with a single job (raise -j to use more CPU cores)

make -j 1

#install

make install

#start nginx

/usr/local/claynginx66/sbin/nginx

#add the nginx sbin directory to PATH now and for future logins (single quotes keep $PATH unexpanded in .bashrc)

PATH=$PATH:/usr/local/claynginx66/sbin

echo 'PATH=$PATH:/usr/local/claynginx66/sbin' >>/root/.bashrc

#start nginx at boot (added manually)

#add the start command to /etc/rc.local

#/usr/local/claynginx66/sbin/nginx

echo "/usr/local/claynginx66/sbin/nginx" >>/etc/rc.local

#make rc.local executable so it runs at boot

chmod +x /etc/rc.d/rc.local

#both SELinux and firewalld need to be turned off

service firewalld stop

systemctl disable firewalld

#disable SELinux for the current boot

setenforce 0

#disable SELinux permanently (takes effect after a reboot)

#vim /etc/selinux/config

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

[root@ansible nginx]#

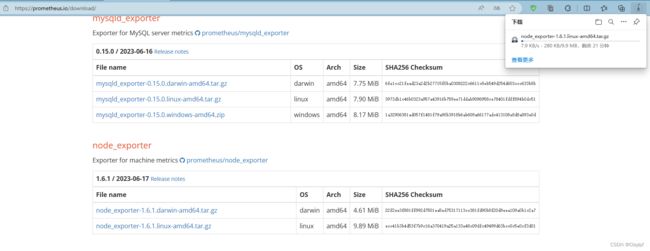
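Because the playbook may be run more than once, the install script benefits from being idempotent: as written, every run appends another line to /etc/rc.local and /root/.bashrc and rebuilds nginx. A minimal sketch of two helpers that could be placed at the top of one_key_install_nginx.sh; append_once and nginx_installed are hypothetical names, not part of the original script.

```shell
#!/usr/bin/env bash
# Sketch: helpers that make one_key_install_nginx.sh safe to re-run.

# append_once FILE LINE: append LINE to FILE only if that exact line is absent.
append_once() {
  local file=$1 line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# nginx_installed PREFIX: succeed if PREFIX/sbin/nginx exists and is executable.
nginx_installed() {
  [[ -x "$1/sbin/nginx" ]]
}
```

For example, `nginx_installed /usr/local/claynginx66 && exit 0` near the top skips the build on hosts that already have nginx, and `append_once /etc/rc.local /usr/local/claynginx66/sbin/nginx` replaces the plain `echo ... >>/etc/rc.local` line.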

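onekey_install_node_exporter.sh (download node_exporter-1.5.0.linux-amd64.tar.gz yourself and copy it to the /root home directory of every machine in web_servers and lb_servers)

Reference: Claylpf's CSDN blog post "Prometheus监控软件的学习" (learning the Prometheus monitoring software)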

[root@ansible nginx]# cd ..

[root@ansible ansible]# mkdir node_exporter

[root@ansible ansible]# cd node_exporter/

[root@ansible node_exporter]# vim onekey_install_node_exporter.sh

[root@ansible node_exporter]# cat onekey_install_node_exporter.sh

#!/bin/bash

cd ~

tar xf node_exporter-1.5.0.linux-amd64.tar.gz #unpack the node_exporter tarball

mv node_exporter-1.5.0.linux-amd64 /node_exporter

cd /node_exporter

PATH=/node_exporter:$PATH #add to PATH for the current shell

echo 'PATH=/node_exporter:$PATH' >>/root/.bashrc #keep the PATH change for future login shells

nohup node_exporter --web.listen-address 0.0.0.0:8090 & #run in the background, listening on port 8090

[root@ansible node_exporter]#

I had already downloaded the tarball earlier.
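The nohup approach does not survive a reboot. As an alternative (not what the playbook above does), node_exporter could run under systemd, the same way Prometheus is managed later in this article. A minimal sketch that prints a unit file; node_exporter_unit is a hypothetical helper, and its default binary path and port are taken from the script above.

```shell
#!/usr/bin/env bash
# Sketch: print a systemd unit for node_exporter (alternative to nohup).
# node_exporter_unit [BINARY] [LISTEN_ADDRESS]; defaults follow the script above.
node_exporter_unit() {
  local bin=${1:-/node_exporter/node_exporter}
  local listen=${2:-0.0.0.0:8090}
  cat <<EOF
[Unit]
Description=Prometheus node_exporter
After=network.target

[Service]
ExecStart=${bin} --web.listen-address=${listen}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

On each host: `node_exporter_unit > /usr/lib/systemd/system/node_exporter.service`, then `systemctl daemon-reload`, `systemctl enable node_exporter` and `systemctl start node_exporter`.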

8. Manually install Prometheus, Grafana and ab

References: Claylpf's CSDN blog posts "Prometheus监控软件的学习" (learning Prometheus),

"Grafana展示工具的学习" (learning the Grafana visualization tool),

"nginx-Web集群-压力测试(ab工具)" (load-testing an nginx web cluster with ab)

Prometheus

Prometheus configuration file: prometheus.yml

[root@prometheus prometheus]# cat prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "Web1"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.150:8090"]

  - job_name: "Web2"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.151:8090"]

  - job_name: "Web3"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.152:8090"]

  - job_name: "nginx-lb1"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.77:8090"]

  - job_name: "nginx-lb2"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.78:8090"]

[root@prometheus prometheus]#
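The five node_exporter jobs above differ only in name and address, so they can be generated instead of typed by hand. A minimal sketch (scrape_jobs is a hypothetical helper) that prints entries indented to sit under scrape_configs: in prometheus.yml; the edited file can then be checked with Prometheus's `promtool check config prometheus.yml` before restarting.

```shell
#!/usr/bin/env bash
# Sketch: generate scrape_configs entries from "job=host:port" arguments.
# Output is indented to sit under the "scrape_configs:" key of prometheus.yml.
scrape_jobs() {
  local pair
  for pair in "$@"; do
    printf '  - job_name: "%s"\n' "${pair%%=*}"     # text before the first "="
    printf '    static_configs:\n'
    printf '      - targets: ["%s"]\n' "${pair#*=}"  # text after the first "="
  done
}
```

Because scrape_configs is the last section of this file, the output can be appended directly, e.g. `scrape_jobs "Web1=192.168.2.150:8090" "nginx-lb1=192.168.2.77:8090" >> prometheus.yml`.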

9. Run the software_install.yaml playbook with Ansible to build the base environment

[root@ansible playbook]# ansible-playbook software_install.yaml

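II. Simulate a middle-tier forwarding system: nginx on two load balancers provides layer-7 load balancing, and the backend nginx cluster serves identical content from an NFS share

1. Implementing load balancing

Reference: Claylpf's CSDN blog post "nginx负载均衡器的部署(5层\7层)" (deploying nginx load balancers)

Edit the nginx configuration on both load balancers (nginx-lb1 and nginx-lb2) to add load balancing: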

[root@nginx-lb1 ~]# vim /usr/local/claynginx66/conf/nginx.conf

#gzip on;

#change this part

upstream clayweb { #backend pool for load balancing, named clayweb

server 192.168.2.150; #web1

server 192.168.2.151; #web2

server 192.168.2.152; #web3

}

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

#forward to the backend servers

location / {

proxy_pass http://clayweb; #change this line

}

#error_page 404 /404.html;

nginx-lb2 uses the same load-balancing configuration.
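With no weight or balancing method set, nginx distributes upstream requests by weighted round-robin with every server at weight 1, so requests rotate web1, web2, web3. The sketch below mirrors the "smooth" weighted round-robin selection nginx uses, for illustration only (swrr is a hypothetical function, not nginx code); it prints the index of the backend chosen in each round.

```shell
#!/usr/bin/env bash
# Illustration of smooth weighted round-robin, the selection nginx uses for
# an upstream without another balancing method. Not nginx code.
# swrr ROUNDS W1 W2 ...: prints the index of the server picked in each round.
swrr() {
  local rounds=$1; shift
  local -a w=("$@") cur=() picks=()
  local n=${#w[@]} total=0 i r best
  for ((i = 0; i < n; i++)); do cur[i]=0; total=$((total + w[i])); done
  for ((r = 0; r < rounds; r++)); do
    best=-1
    for ((i = 0; i < n; i++)); do
      cur[i]=$((cur[i] + w[i]))                    # every server gains its weight
      if ((best < 0 || cur[i] > cur[best])); then best=$i; fi
    done
    cur[best]=$((cur[best] - total))               # the winner pays back the total
    picks+=("$best")
  done
  echo "${picks[*]}"
}
```

`swrr 6 1 1 1` gives `0 1 2 0 1 2`, the plain rotation of this setup. With `server 192.168.2.150 weight=5;` and the other two at weight 1, `swrr 7 5 1 1` gives `0 0 1 0 2 0 0`: web1 takes 5 of every 7 requests, but the lighter servers are interleaved rather than bunched together.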

Test:

2. Serving identical content from an NFS server

Reference: Claylpf's CSDN blog post "搭建NFS服务器" (setting up an NFS server)

Installing the NFS server

[root@nfs ~]# yum install -y nfs-utils # install the NFS service

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.163.com

* extras: mirrors.huaweicloud.com

* updates: mirrors.163.com

Package 1:nfs-utils-1.3.0-0.68.el7.2.x86_64 already installed and latest version

Nothing to do

[root@nfs ~]# service nfs start #start the NFS service

Redirecting to /bin/systemctl start nfs.service

[root@nfs ~]#

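Installing NFS on the web cluster

[root@web1 ~]# yum install -y nfs-utils #install NFS

[root@web1 ~]# service nfs start #start the NFS service

Do the same on web2 and web3. (A client only needs nfs-utils installed to mount the share; starting the nfs server service on the web servers is not strictly required.)

Editing the exports file on the NFS server

Hosts in the 192.168.2.0/24 subnet may mount /web: rw allows writes, all_squash maps every client user to the anonymous user, and sync makes the server commit writes to disk before replying.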

[root@nfs ~]# vim /etc/exports

[root@nfs ~]# cat /etc/exports

/web 192.168.2.0/24(rw,all_squash,sync)

[root@nfs ~]#

Creating the shared directory

[root@nfs ~]# mkdir /web

[root@nfs ~]# cd /web/

[root@nfs web]# vim index.html

[root@nfs web]# cat index.html

Welcome to nginx!

Welcome to NFS-server

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

[root@nfs web]#

Exporting the shared directory

[root@nfs web]# exportfs -rv

exporting 192.168.2.0/24:/web

[root@nfs web]#

Stopping the firewall so the other machines can connect

[root@nfs web]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@nfs web]#

Mounting the shared directory on the other web servers

[root@web-1 html]# mount 192.168.2.157:/web /usr/local/claynginx66/html

Do the same on web2 and web3.
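A mount made with the mount command alone is lost after a reboot. One common way to make it persistent, sketched here as an assumption rather than part of the original steps, is an /etc/fstab entry with the _netdev option so the mount waits for the network. add_nfs_fstab is a hypothetical helper; the FSTAB variable only exists so it can be tried on a scratch file.

```shell
#!/usr/bin/env bash
# Sketch: add a persistent NFS mount to /etc/fstab once.
# add_nfs_fstab SOURCE TARGET; set FSTAB to try it on a scratch file.
add_nfs_fstab() {
  local src=$1 dst=$2 fstab=${FSTAB:-/etc/fstab}
  touch "$fstab"
  # Do nothing if SOURCE is already listed for TARGET.
  if awk -v s="$src" -v d="$dst" '$1 == s && $2 == d { found = 1 } END { exit !found }' "$fstab"; then
    return 0
  fi
  # _netdev: treat as a network filesystem, mounted only once networking is up.
  printf '%s %s nfs defaults,_netdev 0 0\n' "$src" "$dst" >> "$fstab"
}
```

On each web server: `add_nfs_fstab 192.168.2.157:/web /usr/local/claynginx66/html && mount -a`.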

Test:

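III. Use nginx's HTTPS, version hiding, authentication, realip and other modules

Setting up HTTP and HTTPS with nginx

References: Claylpf's CSDN blog posts "使用nginx搭建http和https环境" (setting up HTTP and HTTPS with nginx) and "计算机网络day15 HTTP协议 - 工作原理 - URI - 状态码 - nginx实现HTTP和HTTPS协议 - 连接方式和有无状态 - Cookie和Session和token - 报文结构" (computer networks day 15: HTTP)

On domain names: Claylpf's CSDN blog post "计算机网络 day13 TCP拥塞控制 - tcpdump - DHCP协议 - DHCP服务器搭建 - DNS域名解析系统 - DNS域名解析过程" (computer networks day 13: TCP congestion control, tcpdump, DHCP, DNS)

Buy a domain on Alibaba Cloud and apply for a free SSL certificate, then upload the certificate bound to the domain to /usr/local/claynginx66/conf/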

[root@nginx-lb2 conf]# ls

9581058_claylpf.xyz_nginx.zip

Install the unzip tool:

[root@nginx-lb2 conf]# yum install unzip -y

Unzip 9581058_claylpf.xyz_nginx.zip:

[root@nginx-lb2 conf]# unzip 9581058_claylpf.xyz_nginx.zip

Archive: 9581058_claylpf.xyz_nginx.zip

Aliyun Certificate Download

inflating: claylpf.xyz.pem

inflating: claylpf.xyz.key

Configure nginx:

[root@nginx-lb1 conf]# cat nginx.conf

#user nobody;

worker_processes 1;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

#HTTP configuration

http {

include mime.types;

default_type application/octet-stream;

#(access log format; the log is written to /usr/local/claynginx66/logs/access.log)

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log logs/access.log main; #--> where the access log is saved

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65; #--> idle keep-alive connections between clients and nginx are closed after 65 seconds

#gzip on;

#change this part

upstream clayweb { #backend pool for load balancing, named clayweb

server 192.168.2.150; #web1

server 192.168.2.151; #web2

server 192.168.2.152; #web3

}

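server_tokens off; #hide the nginx version

limit_conn_zone $binary_remote_addr zone=addr:10m; #10 MB shared memory zone named addr, keyed by client IP (like a dictionary), for connection limits

limit_req_zone $binary_remote_addr zone=one:10m rate=1r/s; #10 MB shared memory zone named one, keyed by client IP, for request limits: 1 request per second per IP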

server {

listen 80;

server_name www.claylpf.xyz; #redirect plain HTTP straight to HTTPS

return 301 https://www.claylpf.xyz$request_uri; #permanent redirect, keeping the requested path

}

server {

listen 80;

server_name www.feng.com; #any domain name you like

access_log logs/feng.com.access.log main;

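limit_rate_after 100k; #start throttling once the first 100 KB of a response has been sent

limit_rate 10k; #then limit the response to 10 KB per second per connection

limit_req zone=one burst=5; #per client IP: 1 request/s via zone one; up to 5 excess requests are queued and anything beyond that is rejected

#forward to the backend servers

location / {

proxy_pass http://clayweb; #change this line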

}

error_page 404 /404.html; #page not found

error_page 500 502 503 504 /50x.html; #usually shown when nginx or a backend hits an internal error

location = /50x.html {

root html;

}

# configuration used on the web cluster

location = /info {

stub_status on; #return nginx status statistics

#access_log off; #do not write requests for this page to the access log

auth_basic "sanchuang website";

auth_basic_user_file htpasswd;

#allow 172.20.10.2; #allow access from 172.20.10.2

#deny all; #deny everyone else

}

}

# HTTPS server

server {

listen 443 ssl;

server_name www.claylpf.xyz; #domain name on the certificate

ssl_certificate claylpf.xyz.pem; #free certificate obtained through Alibaba Cloud

ssl_certificate_key claylpf.xyz.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 5m;

ssl_ciphers HIGH:!aNULL:!MD5;

ssl_prefer_server_ciphers on;

location / {

#forward all requests to the backend pool named clayweb

proxy_pass http://clayweb;

#pass the client's real IP address to the backend servers

proxy_set_header X-Real-IP $remote_addr;

}

}

}

[root@nginx-lb1 conf]#
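The limit_req zone=one burst=5 line (with rate=1r/s) is easy to misread. As a simplified model of nginx's leaky-bucket accounting, assuming N requests from one IP arrive at the same instant and nodelay is not set: one is served at once, up to burst more are queued and released at the configured rate, and the rest are rejected (503 by default, changeable with limit_req_status). limit_req_burst is a hypothetical helper that prints those three counts.

```shell
#!/usr/bin/env bash
# Simplified model of "limit_req zone=one burst=5" with rate=1r/s and no
# nodelay, when N requests from one client IP arrive at the same instant.
# limit_req_burst N BURST prints: served_now delayed rejected
limit_req_burst() {
  local n=$1 burst=$2
  local now=$(( n > 0 ? 1 : 0 ))                   # first request passes at once
  local rest=$(( n - now ))
  local delayed=$(( rest < burst ? rest : burst ))  # queued, released at 1 r/s
  echo "$now $delayed $(( rest - delayed ))"        # the remainder gets 503
}
```

`limit_req_burst 10 5` prints `1 5 4`. Note also that the addr zone created by limit_conn_zone only takes effect once a `limit_conn addr N;` directive refers to it.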

Reload nginx:

[root@nginx-lb2 conf]# cd ..

[root@nginx-lb2 claynginx66]# cd sbin/

[root@nginx-lb2 sbin]# ./nginx -s reload

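Edit the hosts file on Windows (C:\Windows\System32\drivers\etc\hosts). Each entry is an IP address followed by the host name:

192.168.2.77 www.claylpf.xyz

192.168.2.78 www.claylpf.xyz

Test: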

其中我添加了如下代码,实现了http自动跳转到https

server {

listen 80;

server_name www.claylpf.xyz; #目的是直接从http协议跳转到https协议去

return 301 https://www.claylpf.xyz; #永久重定向

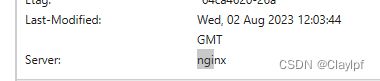

}隐藏版本:

server_tokens off; #nginx.conf配置文件中的http{}中加入server由server: nginx 1.23/2 变成了 nginx

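Implementing realip

How does a backend server learn the real client's IP address instead of only seeing the load balancer's IP?

In the HTTPS server{} block on the load balancer, add proxy_set_header X-Real-IP $remote_addr;. This copies $remote_addr (the client IP as seen by the balancer) into an X-Real-IP request header sent to the backend.

Load balancer nginx configuration: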

location / {

proxy_pass http://clayweb;

proxy_set_header X-Real-IP $remote_addr;

}

Web cluster nginx configuration:

(add $http_x_real_ip to the log format, which is defined in the http{} block, so the real client IP is written to the access log)

#(access log format; the log is written to /usr/local/claynginx66/logs/access.log)

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for" "$http_x_real_ip"';

access_log logs/access.log main; #--> where the access log is saved

Test:

[root@nginx-lb1 logs]# cat access.log

192.168.2.43 - - [02/Aug/2023:19:46:53 +0800] "GET / HTTP/1.1" 200 615 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.188"

192.168.2.43 - - [02/Aug/2023:20:00:43 +0800] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.188"

192.168.2.43 - - [02/Aug/2023:20:00:46 +0800] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.188"

Here 192.168.2.43 is the IP address of the Windows client.

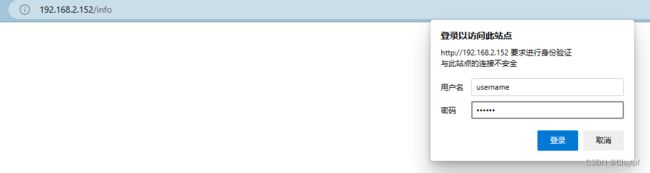

Status statistics

Web cluster nginx configuration

Define a location inside server{} and enable stub_status on; in it:

location = /info {

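# enable status statistics

stub_status on;

# do not log requests to the status page

access_log off;

# enable HTTP basic authentication

auth_basic "clay_website";

# password file; a relative path is looked up in the conf directory

auth_basic_user_file htpasswd;

#allow 172.20.10.2; #allow access from 172.20.10.2

#deny all; #deny everyone else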

}
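stub_status returns a short plain-text page (active connections; accepted, handled and total requests; reading/writing/waiting connections). A minimal sketch for turning it into key=value pairs, e.g. for a cron job; parse_stub_status is a hypothetical helper, and the curl line in its comment assumes the /info location above and the username/123456 account created in the next step.

```shell
#!/usr/bin/env bash
# Sketch: convert the stub_status page (read on stdin) into key=value lines.
# Example (assumes the /info location and account from this section):
#   curl -s -u username:123456 http://192.168.2.150/info | parse_stub_status
parse_stub_status() {
  awk '
    /^Active connections:/ { print "active=" $3 }
    /^ *[0-9]+ +[0-9]+ +[0-9]+ *$/ {
      print "accepts=" $1; print "handled=" $2; print "requests=" $3
    }
    /^Reading:/ { print "reading=" $2; print "writing=" $4; print "waiting=" $6 }
  '
}
```

If accepts and handled ever differ, nginx dropped connections (for example because worker_connections was exhausted).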

Create the account and password in the htpasswd file

#install the tool (httpd-tools provides htpasswd)

yum install -y httpd-tools

[root@web-1 conf]# htpasswd -c /usr/local/claynginx66/conf/htpasswd username #prompts for the password

New password: #the password I entered is 123456

Re-type new password:

Adding password for user username

[root@web-1 conf]#

#username: username

#password: 123456
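If httpd-tools is not installed, an equivalent entry can be produced with OpenSSL, whose `openssl passwd -apr1` prints an Apache-style MD5 hash that nginx's auth_basic_user_file accepts. A minimal sketch (htpasswd_line is a hypothetical helper); note that a password typed on the command line can end up in the shell history.

```shell
#!/usr/bin/env bash
# Sketch: build an htpasswd line with OpenSSL instead of httpd-tools.
# "openssl passwd -apr1" prints an Apache MD5 ($apr1$...) hash, a format
# nginx's auth_basic_user_file understands.
htpasswd_line() {
  local user=$1 pass=$2
  printf '%s:%s\n' "$user" "$(openssl passwd -apr1 "$pass")"
}
```

For example: `htpasswd_line username 123456 >> /usr/local/claynginx66/conf/htpasswd`.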

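If the password file already exists, add or update a user with the command below; leave out -c, which would recreate (overwrite) the file:

htpasswd /usr/local/claynginx66/conf/htpasswd username

Test: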

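IV. Build a dual-VIP, dual-master high-availability setup with keepalived on the load balancers, and monitor the local nginx process with a health check and a notify script

notify scripts

keepalived's notify_master, notify_backup and notify_fault options (and the generic notify option) name scripts that keepalived runs when a VRRP instance changes to the MASTER, BACKUP or FAULT state. They are typically used to send alerts or, as in this project, to react to a local failure.

Installing keepalived (already done in batch by the Ansible playbook)

Enabling two VRRP instances on both load balancers

References: Claylpf's CSDN blog posts "搭建 通过nginx负载均衡器 实现的高可用web服务" (building a highly available web service with nginx load balancers) and "关于高可用-keepalived的健康检测-监控脚本的使用" (keepalived health checks and monitoring scripts)

keepalived configuration on nginx-lb1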

[root@nginx-lb1 ~]# cd /etc/keepalived/

[root@nginx-lb1 keepalived]# ls

keepalived.conf

[root@nginx-lb1 keepalived]# vim keepalived.conf

[root@nginx-lb1 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict #remember to keep this commented out, otherwise VRRP advertisements may not be received

vrrp_garp_interval 0

vrrp_gna_interval 0

}

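#define the check script chk_nginx

vrrp_script chk_nginx {

#check whether nginx is running; if it has died the script exits with 1 and the priority drops by 80 (150 - 80 = 70), below the BACKUP's 100, so this node becomes BACKUP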

script "/etc/keepalived/check_nginx.sh"

interval 1

weight -80

}

vrrp_instance VI_1 {

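state MASTER #start in the MASTER role

interface ens33 #interface that sends and receives VRRP advertisements

virtual_router_id 88 #virtual router ID (must match on both nodes)

priority 150 #priority; higher than the BACKUP's 100

advert_int 1 #VRRP advertisement interval in seconds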

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.2.188 #VIP address

}

#call the check script

track_script {

chk_nginx

}

#notify_backup: if nginx on this balancer (lb1) dies and the instance drops to BACKUP, stop keepalived

notify_backup "/etc/keepalived/stop_keepalived.sh"

}

vrrp_instance VI_2 {

state BACKUP

interface ens33

virtual_router_id 99

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.2.199

}

}

[root@nginx-lb1 keepalived]#

keepalived configuration on nginx-lb2

[root@nginx-lb2 ~]# cd /etc/keepalived/

[root@nginx-lb2 keepalived]# ls

keepalived.conf

[root@nginx-lb2 keepalived]# vim keepalived.conf

[root@nginx-lb2 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

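#define the check script chk_nginx

vrrp_script chk_nginx {

#check whether nginx is running; if it has died the script exits with 1 and the priority drops by 80 (150 - 80 = 70), below the BACKUP's 100, so this node becomes BACKUP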

script "/etc/keepalived/check_nginx.sh"

interval 1

weight -80

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 88

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.2.188

}

}

vrrp_instance VI_2 {

state MASTER

interface ens33

virtual_router_id 99

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.2.199

}

#call the check script

track_script {

chk_nginx

}

#notify_backup: if nginx on this balancer (lb2) dies and the instance drops to BACKUP, stop keepalived

notify_backup "/etc/keepalived/stop_keepalived.sh"

}

[root@nginx-lb2 keepalived]#

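The health check and notify_backup work together: if the local nginx dies, the instance drops from MASTER to BACKUP, and keepalived is then stopped.

The check script lowers the priority by 80 when nginx is down:

#define the check script chk_nginx

vrrp_script chk_nginx {

#check whether nginx is running; if it has died the script exits with 1 and the priority drops by 80, below the BACKUP's priority, so this node becomes BACKUP

script "/etc/keepalived/check_nginx.sh"

interval 1

weight -80

}

The external check_nginx.sh script that decides whether nginx has died: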

[root@nginx-lb2 keepalived]# cat check_nginx.sh

#!/bin/bash

if /usr/sbin/pidof nginx &>/dev/null;then

exit 0

else

exit 1

fi

[root@nginx-lb2 keepalived]#
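The numbers in vrrp_script decide whether failover happens at all. A small sketch of the arithmetic, for illustration only (effective_priority and vip_owner are hypothetical helpers, not keepalived code): while the check fails, keepalived adds the negative weight to the configured priority, and the node with the higher priority holds the VIP.

```shell
#!/usr/bin/env bash
# Illustration of the vrrp_script arithmetic (not keepalived code).

# effective_priority BASE WEIGHT CHECK_OK: while the check fails (CHECK_OK=0),
# keepalived adds WEIGHT (negative here) to the configured priority.
effective_priority() {
  local base=$1 weight=$2 ok=$3
  if [[ $ok == 1 ]]; then
    echo "$base"
  else
    echo $(( base + weight ))
  fi
}

# vip_owner LB1_PRIORITY LB2_PRIORITY: the higher priority holds the VIP.
# On a tie VRRP prefers the higher primary IP, which is lb2 (.78) here.
vip_owner() {
  if (( $1 > $2 )); then echo lb1; else echo lb2; fi
}
```

For VI_1, lb1 drops from 150 to 70 when nginx dies, which is below lb2's 100, so 192.168.2.188 moves to lb2. A weight of -30 would not be enough (150 - 30 = 120 > 100): the absolute weight must exceed the gap between the two priorities.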

The MASTER instance calls this check script through track_script, so remember to make check_nginx.sh executable:

[root@nginx-lb2 keepalived]# chmod +x check_nginx.sh

In the MASTER instance, notify_backup stops the keepalived process when the local nginx dies. The external script that stops keepalived:

[root@nginx-lb2 keepalived]# cat stop_keepalived.sh

#!/bin/bash

service keepalived stop

[root@nginx-lb2 keepalived]#

Remember to make stop_keepalived.sh executable as well:

[root@nginx-lb2 keepalived]# chmod +x stop_keepalived.sh

[root@nginx-lb2 keepalived]# ll

total 12

-rwxr-xr-x. 1 root root 78 Aug 2 22:26 check_nginx.sh

-rw-r--r--. 1 root root 2014 Aug 2 22:27 keepalived.conf

-rwxr-xr-x. 1 root root 36 Aug 2 22:31 stop_keepalived.sh

[root@nginx-lb2 keepalived]#

Start keepalived:

[root@nginx-lb1 keepalived]# service keepalived restart

Redirecting to /bin/systemctl restart keepalived.service

Test:

nginx-lb1 (the VIP 192.168.2.188 is bound on lb1)

[root@nginx-lb1 keepalived]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:90:56:0d brd ff:ff:ff:ff:ff:ff

inet 192.168.2.77/24 brd 192.168.2.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.2.188/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe90:560d/64 scope link

valid_lft forever preferred_lft forever

[root@nginx-lb1 keepalived]#

nginx-lb2 (the VIP 192.168.2.199 is bound on lb2)

[root@nginx-lb2 keepalived]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:bc:78:0b brd ff:ff:ff:ff:ff:ff

inet 192.168.2.78/24 brd 192.168.2.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.2.199/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:febc:780b/64 scope link

valid_lft forever preferred_lft forever

[root@nginx-lb2 keepalived]#

Stopping nginx on LB1 to test whether the VIP fails over

[root@nginx-lb1 keepalived]# nginx -s stop

Checking the addresses on LB2 shows that LB1's VIP, 192.168.2.188, has moved to LB2

[root@nginx-lb2 keepalived]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:bc:78:0b brd ff:ff:ff:ff:ff:ff

inet 192.168.2.78/24 brd 192.168.2.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.2.199/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.2.188/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:febc:780b/64 scope link

valid_lft forever preferred_lft forever

[root@nginx-lb2 keepalived]#

Check whether keepalived is still running on LB1

[root@nginx-lb1 keepalived]# ps aux|grep keepalived

root 2742 0.0 0.0 112824 992 pts/0 R+ 16:17 0:00 grep --color=auto keepalived

[root@nginx-lb1 keepalived]#

keepalived has stopped as well (ps only lists the grep command itself), so the test succeeded.
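The failover check can also be scripted. A minimal sketch (has_vip is a hypothetical helper) that reads ip addr output on stdin and succeeds only if the given VIP is bound; running it on both balancers before and after stopping nginx shows which node holds 192.168.2.188.

```shell
#!/usr/bin/env bash
# Sketch: succeed if VIP appears as an "inet" address in "ip addr" output
# read on stdin. Example on a balancer:
#   ip -4 addr show ens33 | has_vip 192.168.2.188 && echo "VIP is here"
has_vip() {
  local vip=$1
  awk -v v="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == v) found = 1 }  # strip /prefix
    END { exit !found }
  '
}
```

The address is compared exactly after removing the prefix length, so 192.168.2.18 does not match 192.168.2.188.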

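V. Set up a primary DNS server with two load-balancing records for DNS-based load balancing, so that the same URL resolves to both VIPs

Reference: Claylpf's CSDN blog post "计算机网络 day14 DNS域名劫持、DNS域名污染 - CDN的工作流程 - DNS的记录类型 - 搭建DNS缓存/主域名服务器 - DNAT-SNAT实验项目" (computer networks day 14: DNS hijacking and pollution, CDN, DNS record types, caching and primary DNS servers, DNAT/SNAT lab)

Installing the DNS server

[root@dns ~]# systemctl disable firewalld #disable the firewall at boot (also run systemctl stop firewalld to stop it now) so the Windows client can reach the DNS server

[root@dns ~]# systemctl disable NetworkManager #disable NetworkManager at boot

[root@dns ~]# yum install bind* -y #install the DNS server packages

[root@dns ~]# service named start #start the DNS service

[root@dns ~]# systemctl enable named #start the DNS service at boot

Editing the DNS configuration to listen on port 53 on every address and to answer queries from any IP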

[root@dns ~]# vim /etc/named.conf

options {

listen-on port 53 { any; }; # changed: listen on port 53 on every IPv4 address of this machine

listen-on-v6 port 53 { any; }; # changed: the same for IPv6

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

recursing-file "/var/named/data/named.recursing";

secroots-file "/var/named/data/named.secroots";

allow-query { any; }; # changed: allow any host to query this server

Setting up the primary DNS server

Edit named.rfc1912.zones to tell named to serve the claylpf.xyz zone

[root@dns ~]# vim /etc/named.rfc1912.zones

[root@dns ~]# cat /etc/named.rfc1912.zones

// named.rfc1912.zones:

//

// Provided by Red Hat caching-nameserver package

//

// ISC BIND named zone configuration for zones recommended by

// RFC 1912 section 4.1 : localhost TLDs and address zones

// and http://www.ietf.org/internet-drafts/draft-ietf-dnsop-default-local-zones-02.txt

// (c)2007 R W Franks

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

zone "localhost.localdomain" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "localhost" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "claylpf.xyz" IN {

type master;

file "claylpf.xyz.zone";

allow-update { none; };

};

#add the zone above; placing it after the localhost zones is recommended

zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "1.0.0.127.in-addr.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "0.in-addr.arpa" IN {

type master;

file "named.empty";

allow-update { none; };

};

[root@dns ~]#

Create the zone data file for claylpf.xyz

[root@dns ~]# cd /var/named/

[root@dns named]# ls

chroot chroot_sdb data dynamic dyndb-ldap named.ca named.empty named.localhost named.loopback slaves

[root@dns named]# cp -a named.localhost claylpf.xyz.zone

[root@dns named]# ls

chroot chroot_sdb claylpf.xyz.zone data dynamic dyndb-ldap named.ca named.empty named.localhost named.loopback slaves

[root@dns named]#

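Edit claylpf.xyz.zone (record lines that start with whitespace inherit the owner name @, and comments in a zone file start with ;):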

[root@dns named]# cat claylpf.xyz.zone

$TTL 1D
@       IN SOA  @ rname.invalid. (
                                0       ; serial
                                1D      ; refresh
                                1H      ; retry
                                1W      ; expire
                                3H )    ; minimum
        NS      @
        A       192.168.2.155
www     IN A    192.168.2.188   ; load-balancing record pointing to LB1's VIP
www     IN A    192.168.2.199   ; load-balancing record pointing to LB2's VIP

[root@dns named]#
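Because zone files are sensitive to leading whitespace and use ; for comments, generating the file can avoid typos. A sketch under stated assumptions: make_zone is a hypothetical helper, and its serial follows the common YYYYMMDDnn convention (the original uses 0, which also works for a single primary with no secondaries). The result can be checked with BIND's named-checkzone before restarting named.

```shell
#!/usr/bin/env bash
# Sketch: print a claylpf.xyz-style zone with one "www" A record per VIP.
# make_zone DNS_SERVER_IP VIP...
# Record lines that start with whitespace inherit the owner "@";
# zone-file comments start with ";" ("#" is not a comment there).
make_zone() {
  local ns_ip=$1; shift
  local serial vip
  serial="$(date +%Y%m%d)01"     # YYYYMMDDnn convention (assumption)
  printf '$TTL 1D\n'
  printf '@\tIN SOA\t@ rname.invalid. (\n'
  printf '\t\t\t%s\t; serial\n' "$serial"
  printf '\t\t\t1D\t; refresh\n\t\t\t1H\t; retry\n\t\t\t1W\t; expire\n'
  printf '\t\t\t3H )\t; minimum\n'
  printf '\tNS\t@\n'
  printf '\tA\t%s\n' "$ns_ip"
  for vip in "$@"; do
    printf 'www\tIN A\t%s\t; load-balancing record -> VIP\n' "$vip"
  done
}
```

For example: `make_zone 192.168.2.155 192.168.2.188 192.168.2.199 > /var/named/claylpf.xyz.zone && named-checkzone claylpf.xyz /var/named/claylpf.xyz.zone`.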

Restart the DNS service

[root@dns named]# service named restart

Redirecting to /bin/systemctl restart named.service

Test

Point the Linux client at the new DNS server, 192.168.2.155:

[root@claylpf network-scripts]# vim /etc/resolv.conf

[root@claylpf network-scripts]# cat /etc/resolv.conf

# Generated by NetworkManager

#nameserver 114.114.114.114

nameserver 192.168.2.155

[root@claylpf network-scripts]#

Check the result

[root@claylpf named]# nslookup www.claylpf.xyz

Server: 192.168.2.155

Address: 192.168.2.155#53

Name: www.claylpf.xyz

Address: 192.168.2.199

Name: www.claylpf.xyz

Address: 192.168.2.188

[root@dns named]#

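VI. Deploy Prometheus + Grafana to monitor and chart the backend servers and the load balancers

Backend servers:

Backend servers handle the actual requests and run the application logic. They are the core components that provide the service: they process client requests, generate responses and send them back to the client. A backend server can be a web server, an application server, a database server and so on; backends are usually tied closely to the business logic and are responsible for storing and retrieving data.

Main functions:

- Handle client requests.

- Run business logic and process data.

- Interact with databases and other backend services.

- Generate responses and return them to the client.

Load balancer servers:

A load balancer sits between the clients and the backend servers. Its main goal is to spread load across multiple backends to achieve high availability, scalability and better performance. A load balancer can be a hardware appliance, a software application or a cloud service.

Main functions:

- Balance client requests by distributing traffic across different backend servers.

- Monitor backend health and avoid sending requests to failed servers.

- Apply a distribution strategy such as round robin, least connections or IP hash.

- Provide high availability and failure recovery by rerouting requests to healthy servers.

In a typical web application, the load balancer distributes client requests evenly across several backend web servers to balance load and improve performance. The backend web servers handle the requests, run the application logic, fetch data from the database and generate responses. If a backend server fails, the load balancer can automatically route traffic to the remaining healthy servers, keeping the application available.

Install Prometheus on the Prometheus server and node_exporter on the monitored machines to collect metrics (node_exporter was already installed by the Ansible playbook).

Reference: Claylpf's CSDN blog post "Prometheus监控软件的学习" (learning the Prometheus monitoring software)

Installing Prometheus as a service (download the package from the official site, upload it to /prometheus, and unpack it)

Official site: Prometheus - Monitoring system & time series database

Adding the Prometheus directory to PATH, temporarily and permanently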

# .bashrc

# User specific aliases and functions
alias rm='rm -i'
alias cp='cp -i'
alias mv='mv -i'

# Source global definitions
if [ -f /etc/bashrc ]; then
    . /etc/bashrc
fi

PATH=/usr/local/mysql/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin
PATH=/prometheus/prometheus:$PATH    # <-- add this line; it must come after any line that resets PATH
export PATH
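Only the permanent change is shown above. The sketch below covers both cases. It uses a hypothetical helper, path_prepend, that adds a directory to the front of PATH only if it is not already listed, so re-sourcing ~/.bashrc does not keep growing PATH.

```shell
#!/usr/bin/env bash
# path_prepend DIR (hypothetical helper): put DIR at the front of PATH unless it is already listed.
path_prepend() {
    case ":$PATH:" in
        *":$1:"*) ;;                      # already present: leave PATH unchanged
        *) PATH="$1${PATH:+:$PATH}" ;;    # prepend, avoiding a trailing ":" if PATH was empty
    esac
    export PATH
}
```

- **Temporary** (current shell and its children only): run path_prepend /prometheus/prometheus, or simply export PATH=/prometheus/prometheus:$PATH.
- **Permanent:** define the function in ~/.bashrc, call it after any line that reassigns PATH, then run source ~/.bashrc.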

Check the result, then run Prometheus in the background with nohup:

[root@prometheus prometheus]# which prometheus

/prometheus/prometheus/prometheus

[root@prometheus prometheus]# nohup prometheus --config.file=/prometheus/prometheus/prometheus.yml &

[1] 5338

[root@prometheus prometheus]# nohup: ignoring input and appending output to 'nohup.out'

Starting Prometheus as nohup prometheus --config.file=/prometheus/prometheus/prometheus.yml & runs it in the background and makes it ignore the SIGHUP signal. As a result, it keeps running after the terminal is closed.

[root@prometheus prometheus]# ps aux|grep prometheus #check the Prometheus process ID

root 5338 0.4 4.2 798956 42304 pts/0 Sl 06:53 0:00 prometheus --config.file=/prometheus/prometheus/prometheus.yml

root 5345 0.0 0.0 112824 988 pts/0 R+ 06:54 0:00 grep --color=auto prometheus

[root@prometheus prometheus]#

[root@prometheus prometheus]# netstat -anpult|grep prometheus #check the port Prometheus listens on

tcp6 0 0 :::9090 :::* LISTEN 5338/prometheus

tcp6 0 0 ::1:60070 ::1:9090 ESTABLISHED 5338/prometheus

tcp6 0 0 ::1:9090 ::1:60070 ESTABLISHED 5338/prometheus

[root@prometheus prometheus]#

Manage Prometheus as a systemd service

Register the binary-installed Prometheus as a systemd service.

Building on the steps above, create a unit file under /usr/lib/systemd/system/:

[root@mysql prometheus]# vim /usr/lib/systemd/system/sshd.service #an existing unit file to use as a template

[root@mysql prometheus]# vim /usr/lib/systemd/system/prometheus.service

#create the prometheus.service file

[root@mysql prometheus]# cat /usr/lib/systemd/system/prometheus.service

#copy this if you need it

[Unit]

Description=prometheus

[Service]

ExecStart=/prometheus/prometheus/prometheus --config.file=/prometheus/prometheus/prometheus.yml

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@mysql prometheus]#

[root@mysql prometheus]# systemctl daemon-reload

#tell systemd to reload its unit files so it picks up the new prometheus.service

[root@mysql prometheus]# service prometheus restart #(re)start the prometheus service

Redirecting to /bin/systemctl restart prometheus.service

[root@mysql prometheus]#

[root@mysql prometheus]# ps aux|grep prometheus #check whether the Prometheus process exists

root 5338 0.1 5.3 930420 52784 pts/0 Sl 19:39 0:02 prometheus --config.file=/prometheus/prometheus/prometheus.yml

root 5506 0.0 0.0 112824 992 pts/0 R+ 20:22 0:00 grep --color=auto prometheus

[root@mysql prometheus]# service prometheus stop

Redirecting to /bin/systemctl stop prometheus.service

[root@mysql prometheus]# ps aux|grep prometheus

root 5338 0.1 5.3 930420 52784 pts/0 Sl 19:39 0:02 prometheus --config.file=/prometheus/prometheus/prometheus.yml

root 5524 0.0 0.0 112824 988 pts/0 R+ 20:22 0:00 grep --color=auto prometheus

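Prometheus was first started with nohup, so that process is not managed by systemd. service prometheus stop therefore leaves it running (PID 5338 above), and it has to be killed manually.

From then on, Prometheus can be started and stopped with service.

[root@mysql prometheus]# kill -9 5338 #kill the old Prometheus process that was not started by systemd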

[root@mysql prometheus]# service prometheus restart

Redirecting to /bin/systemctl restart prometheus.service

[1]+ Killed nohup prometheus --config.file=/prometheus/prometheus/prometheus.yml

[root@mysql prometheus]# ps aux|grep prometheus

root 5541 1.0 3.9 798700 39084 ? Ssl 20:23 0:00 /prometheus/prometheus/prometheus --config.file=/prometheus/prometheus/prometheus.yml

root 5548 0.0 0.0 112824 992 pts/0 R+ 20:23 0:00 grep --color=auto prometheus

[root@mysql prometheus]# service prometheus stop #stop Prometheus; this time it stops correctly

Redirecting to /bin/systemctl stop prometheus.service

[root@mysql prometheus]# ps aux|grep prometheus

root 5567 0.0 0.0 112824 992 pts/0 R+ 20:23 0:00 grep --color=auto prometheus

[root@mysql prometheus]#
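The unit file has a WantedBy=multi-user.target line, but the log never runs systemctl enable, so Prometheus would not start at boot. Below is a sketch of a hypothetical helper that writes the same unit into a directory of your choice. The After=/Wants=network-online.target lines are an assumption added here; they are not in the original unit.

```shell
#!/usr/bin/env bash
# write_prometheus_unit DIR (hypothetical helper)
# Write prometheus.service into DIR (normally /usr/lib/systemd/system).
# The quoted 'EOF' keeps $MAINPID literal so systemd, not the shell, expands it.
write_prometheus_unit() {
    local dir=$1
    [ -d "$dir" ] || return 1
    cat > "$dir/prometheus.service" <<'EOF'
[Unit]
Description=prometheus
After=network-online.target
Wants=network-online.target

[Service]
ExecStart=/prometheus/prometheus/prometheus --config.file=/prometheus/prometheus/prometheus.yml
ExecReload=/bin/kill -HUP $MAINPID
KillMode=process
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

Usage, as root:

1. Run write_prometheus_unit /usr/lib/systemd/system.
2. Run systemctl daemon-reload.
3. Run systemctl enable --now prometheus.

After later edits to prometheus.yml, the promtool binary from the same tarball can validate the file before a restart: /prometheus/prometheus/promtool check config /prometheus/prometheus/prometheus.yml.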

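After confirming that the service starts and stops correctly, open Prometheus in a browser on port 9090.

Install node_exporter on the web cluster and the LB cluster to collect metrics

Download the node_exporter tarball from the official site onto the Ansible host, then push it to the web and LB clusters with the copy module: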

[root@ansible ~]# ls

anaconda-ks.cfg node_exporter-1.5.0.linux-amd64.tar.gz

[root@ansible ~]# ansible web_servers -m copy -a "src=~/node_exporter-1.5.0.linux-amd64.tar.gz dest=~"

192.168.2.152 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"checksum": "fb794123ae5c901db4a77e911f300d1db1b3c5ed",

"dest": "/root/node_exporter-1.5.0.linux-amd64.tar.gz",

"gid": 0,

"group": "root",

"mode": "0644",

"owner": "root",

"path": "/root/node_exporter-1.5.0.linux-amd64.tar.gz",

"size": 10181045,

"state": "file",

"uid": 0

}

192.168.2.150 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"checksum": "fb794123ae5c901db4a77e911f300d1db1b3c5ed",

"dest": "/root/node_exporter-1.5.0.linux-amd64.tar.gz",

"gid": 0,

"group": "root",

"mode": "0644",

"owner": "root",

"path": "/root/node_exporter-1.5.0.linux-amd64.tar.gz",

"size": 10181045,

"state": "file",

"uid": 0

}

192.168.2.151 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"checksum": "fb794123ae5c901db4a77e911f300d1db1b3c5ed",

"dest": "/root/node_exporter-1.5.0.linux-amd64.tar.gz",

"gid": 0,

"group": "root",

"mode": "0644",

"owner": "root",

"path": "/root/node_exporter-1.5.0.linux-amd64.tar.gz",

"size": 10181045,

"state": "file",

"uid": 0

}

[root@ansible playbook]# ansible lb_servers -m copy -a "src=~/node_exporter-1.5.0.linux-amd64.tar.gz dest=~"

On the Ansible host, write a playbook (node_exporter_install.yaml) that installs node_exporter:

[root@ansible playbook]# cat node_exporter_install.yaml

#Run the local onekey_install_node_exporter script to install node_exporter in bulk so Prometheus can collect data
- hosts: web_servers lb_servers
  remote_user: root
  tasks:
  - name: install node_exporters
    script: /etc/ansible/node_exporter/onekey_install_node_exporter.sh
    tags: install_exporter
  - name: start node_exporters #run node_exporter in the background
    shell: nohup node_exporter --web.listen-address 0.0.0.0:8090 &
    tags: start_exporters #tag the task so node_exporter can later be started in bulk on its own with --tags start_exporters

[root@ansible playbook]#

Run the playbook:

[root@ansible playbook]# ansible-playbook node_exporter_install.yaml

PLAY [web_servers lb_servers] ***************************************************************************************************************************************************************

TASK [Gathering Facts] **********************************************************************************************************************************************************************

ok: [192.168.2.77]

ok: [192.168.2.152]

ok: [192.168.2.78]

ok: [192.168.2.151]

ok: [192.168.2.150]

TASK [install node_exporters] ***************************************************************************************************************************************************************

changed: [192.168.2.151]

changed: [192.168.2.77]

changed: [192.168.2.150]

changed: [192.168.2.152]

changed: [192.168.2.78]

TASK [start node_exporters] *****************************************************************************************************************************************************************

changed: [192.168.2.150]

changed: [192.168.2.151]

changed: [192.168.2.78]

changed: [192.168.2.152]

changed: [192.168.2.77]

PLAY RECAP **********************************************************************************************************************************************************************************

192.168.2.150 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.2.151 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.2.152 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.2.77 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.2.78 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
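The second task starts node_exporter with nohup ... &, so a "changed" result does not prove that the exporters are actually listening. Below is a sketch of a quick check that you could run from the Prometheus host. It assumes curl is installed; check_exporters is a hypothetical helper, and the targets are the five hosts and port 8090 used above.

```shell
#!/usr/bin/env bash
# check_exporters HOST:PORT ... (hypothetical helper)
# Print UP or DOWN for each node_exporter /metrics endpoint.
check_exporters() {
    local target code
    for target in "$@"; do
        # -w prints only the HTTP status code; 000 (or nothing) means no HTTP response at all
        code=$(curl -s -o /dev/null --max-time 3 -w '%{http_code}' "http://$target/metrics")
        if [ "$code" = 200 ]; then
            echo "UP   $target"
        else
            echo "DOWN $target (http_code=${code:-none})"
        fi
    done
}
```

Usage: check_exporters 192.168.2.150:8090 192.168.2.151:8090 192.168.2.152:8090 192.168.2.77:8090 192.168.2.78:8090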

Test access:

Add the web-cluster and LB-cluster hosts that run node_exporter to the Prometheus configuration file (prometheus.yml):

[root@prometheus prometheus]# pwd

/prometheus/prometheus

[root@prometheus prometheus]# vim prometheus.yml

[root@prometheus prometheus]# cat prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "Web1"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.150:8090"]

  - job_name: "Web2"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.151:8090"]

  - job_name: "Web3"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.152:8090"]

  - job_name: "nginx-lb1"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.77:8090"]

  - job_name: "nginx-lb2"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["192.168.2.78:8090"]

[root@prometheus prometheus]#
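The five node_exporter jobs above differ only in their name and target. Below is a sketch of a hypothetical bash helper that prints the same job entries, indented to sit under scrape_configs:.

```shell
#!/usr/bin/env bash
# gen_scrape_jobs NAME=HOST:PORT ... (hypothetical helper)
# Print one Prometheus scrape job per argument, indented to sit under "scrape_configs:".
gen_scrape_jobs() {
    local pair name target
    for pair in "$@"; do
        name=${pair%%=*}        # text before the first "="
        target=${pair#*=}       # text after the first "="
        printf '  - job_name: "%s"\n' "$name"
        printf '    static_configs:\n'
        printf '      - targets: ["%s"]\n' "$target"
    done
}
```

Example: gen_scrape_jobs Web1=192.168.2.150:8090 nginx-lb1=192.168.2.77:8090. Appending the output with >> works only while scrape_configs is the last section of the file, as it is here. Afterwards, check the file with /prometheus/prometheus/promtool check config /prometheus/prometheus/prometheus.yml.

A single job that lists all five targets would also work. Separate jobs, as in the original, give each host its own job label.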

Restart Prometheus:

[root@prometheus prometheus]# service prometheus restart

Redirecting to /bin/systemctl restart prometheus.service

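Disable firewalld and SELinux

#both SELinux and firewalld need to be turned off

service firewalld stop

systemctl disable firewalld

#disable SELinux temporarily

setenforce 0

#disable SELinux permanently (takes effect after a reboot)

#vim /etc/selinux/config

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

Open Prometheus and check the result

Grafana: install and deploy Grafana

Reference: "Learning the Grafana visualization tool", Claylpf's blog (CSDN)

Install Grafana on the same server as Prometheus so that Grafana queries the monitoring data locally and quickly.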

[root@localhost /]# mkdir grafana

[root@localhost /]# cd grafana/

[root@localhost grafana]# ls

[root@localhost grafana]# mv ~/grafana-enterprise-9.4.7-1.x86_64.rpm grafana-enterprise-9.4.7-1.x86_64.rpm #move the package into /grafana

[root@localhost grafana]# ls

grafana-enterprise-9.4.7-1.x86_64.rpm

Install the Grafana package

[root@prometheus grafana]# yum install grafana-enterprise-9.4.7-1.x86_64.rpm -y #install the local RPM with yum

Loaded plugins: fastestmirror

Examining grafana-enterprise-9.4.7-1.x86_64.rpm: grafana-enterprise-9.4.7-1.x86_64

Start Grafana

[root@localhost grafana]# service grafana-server start

Starting grafana-server (via systemctl): [ OK ]

[root@localhost grafana]#

[root@localhost grafana]# ps aux|grep grafana

grafana 2627 5.7 10.1 1160788 101016 ? Ssl 10:23 0:02 /usr/share/grafana/bin/grafana server --config=/etc/grafana/grafana.ini --pidfile=/var/run/grafana/grafana-server.pid --packaging=rpm cfg:default.paths.logs=/var/log/grafana cfg:default.paths.data=/var/lib/grafana cfg:default.paths.plugins=/var/lib/grafana/plugins cfg:default.paths.provisioning=/etc/grafana/provisioning

root 2637 0.0 0.0 112824 976 pts/2 S+ 10:23 0:00 grep --color=auto grafana

[root@localhost grafana]# netstat -anpult|grep grafana

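tcp6 0 0 :::3000 :::* LISTEN 2627/grafana

Log in to Grafana and check the result

The default username and password are:

Username: admin

Password: admin

Log in to Grafana

Configure the Prometheus data source and import Grafana dashboard templates

Dashboards: like the dashboard in a car, a Grafana dashboard shows data through panels such as bar charts and graphs.

Official site: Dashboards | Grafana Labs

Configure the data source and import a dashboard:

To find dashboards you can use, browse the official dashboard library or search online.

Click Data sources, then add a data source

Click Add data source

Configure the Prometheus data source

Enter the address of your Prometheus server (for example http://192.168.2.149:9090)

Save the configuration: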
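The data source can also be created from a file instead of through the web UI. Grafana reads a provisioning directory, which is /etc/grafana/provisioning according to the grafana-server command line shown above.

Below is a sketch that assumes the Prometheus address http://192.168.2.149:9090 used in this step. write_grafana_datasource is a hypothetical helper.

```shell
#!/usr/bin/env bash
# write_grafana_datasource DIR URL (hypothetical helper)
# Write a Grafana data source provisioning file for Prometheus into DIR,
# normally /etc/grafana/provisioning/datasources.
write_grafana_datasource() {
    local dir=$1 url=$2
    [ -d "$dir" ] || return 1
    cat > "$dir/prometheus.yaml" <<EOF
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: $url
    isDefault: true
EOF
}
```

Usage:

1. Run write_grafana_datasource /etc/grafana/provisioning/datasources http://192.168.2.149:9090.
2. Run service grafana-server restart.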

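Use Dashboards

Click Import

Enter dashboard ID 1860 (Node Exporter Full)

Check the result

Besides dashboard 1860, you can also use dashboard 8919, a Chinese-language dashboard

View the saved dashboards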

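7. Stress-test the web cluster and the load balancers from the ab test host to find performance bottlenecks

Reference: "nginx Web cluster stress testing (ab tool)", Claylpf's blog (CSDN)

Install ab for stress testing with yum install -y httpd-tools (ab ships in the httpd-tools package)

[root@localhost ~]# yum install -y httpd-tools

ab test: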

[root@ab ~]# ab -c 150 -n 10000 http://www.claylpf.xyz/

Result:

Concurrency Level: 150

Time taken for tests: 1.613 seconds

Complete requests: 10000

Failed requests: 0

Write errors: 0

Non-2xx responses: 10000

Total transferred: 3480000 bytes

HTML transferred: 1620000 bytes

Requests per second: 6201.34 [#/sec] (mean)

Time per request: 24.188 [ms] (mean)

Time per request: 0.161 [ms] (mean, across all concurrent requests)

Transfer rate: 2107.49 [Kbytes/sec] received

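Requests per second is the throughput: the average number of requests completed per second.

- While the cluster still has headroom, raising the concurrency (-c) increases this number.
- Once it stops rising and starts to fall (for example when the concurrency is raised to 1000), the requests exceed what the nginx cluster can handle.

After several runs with different concurrency levels and request counts, the maximum was about 6,200 requests per second.

Note that ab also reports Non-2xx responses: 10000. Every response in this run had a non-2xx status code (such as a redirect or an error page), so the figure measures how fast the cluster returns those responses rather than the normal page.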
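ab derives its two "Time per request" lines from the throughput:

- The mean per request is concurrency × 1000 / (requests per second) ms.
- The figure "across all concurrent requests" is 1000 / (requests per second) ms.

For the first run, 150 × 1000 / 6201.34 ≈ 24.188 ms and 1000 / 6201.34 ≈ 0.161 ms, which match the output.

Below is a sketch of a hypothetical helper that pulls these values out of ab output and flags non-2xx responses.

```shell
#!/usr/bin/env bash
# ab_summary (hypothetical helper): read ab output on stdin, print the throughput,
# recompute both "Time per request" figures from it, and warn about non-2xx responses.
ab_summary() {
    awk '
        /^Concurrency Level:/   { c = $3 }
        /^Requests per second:/ { rps = $4 }
        /^Non-2xx responses:/   { non2xx = $3 }
        END {
            if (rps == 0) { print "no \"Requests per second\" line found"; exit 1 }
            printf "rps=%.2f\n", rps
            printf "mean_ms=%.3f\n", c * 1000 / rps     # concurrency * 1000 / rps
            printf "across_ms=%.3f\n", 1000 / rps       # 1000 / rps
            if (non2xx > 0) printf "warning: %d non-2xx responses\n", non2xx
        }
    '
}
```

Example: ab -c 150 -n 10000 http://www.claylpf.xyz/ | ab_summary.

Applied to the second run below, 1000 / 6503.15 ≈ 0.154 ms rather than the 0.852 ms reported. Those result lines therefore may not all come from the same run.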

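8. Tune system resources (kernel parameters, nginx parameters) to improve performance

Kernel and system limit tuning (optimizing the Linux hosts)

1. Raise concurrency and throughput

ulimit -n 65535 raises the limit on open file descriptors per process, so a process can keep more files open at once.

On Linux, the number of file descriptors each process can open is limited, and under heavy load this limit can become a bottleneck. Every client connection is a socket, and every socket uses a file descriptor.

Setting ulimit -n to a larger value such as 65535 lets a process keep more descriptors open at the same time. The application can then handle more connections and file operations, which improves concurrency and throughput. Note that ulimit -n affects only the current shell and the processes started from it.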

[root@nginx-lb1 ~]# ulimit -n 65535

[root@nginx-lb1 ~]# ulimit -a #show the current shell's resource limits

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 7190

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 65535

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 7190

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited
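ulimit -n 65535 lasts only for the current shell session. Below is a sketch of making the limit persistent through /etc/security/limits.conf, which pam_limits reads at login; set_nofile_limit is a hypothetical helper.

Three points apply to this file:

- Wildcard ("*") entries in limits.conf do not apply to root, so root needs its own entry.
- Services started by systemd do not read this file; they use LimitNOFILE= in the unit instead.
- nginx can raise its own workers' limit with the worker_rlimit_nofile directive.

```shell
#!/usr/bin/env bash
# set_nofile_limit FILE DOMAIN VALUE (hypothetical helper)
# Make FILE (normally /etc/security/limits.conf) set both the soft and the hard
# open-file limit for DOMAIN (a user name such as root, or "*") to VALUE.
set_nofile_limit() {
    local file=$1 domain=$2 value=$3 tmp
    tmp=$(mktemp) || return 1
    # keep every line except existing "<domain> soft|hard nofile ..." entries
    awk -v d="$domain" '!($1 == d && ($2 == "soft" || $2 == "hard") && $3 == "nofile")' "$file" > "$tmp"
    printf '%s\tsoft\tnofile\t%s\n%s\thard\tnofile\t%s\n' "$domain" "$value" "$domain" "$value" >> "$tmp"
    cat "$tmp" > "$file"      # rewrite in place, keeping the file's permissions
    rm -f "$tmp"
}
```

Usage: set_nofile_limit /etc/security/limits.conf root 65535 and set_nofile_limit /etc/security/limits.conf '*' 65535. The new limits apply from the next login.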

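2. Swap tuning: set swappiness to 0 so that the kernel avoids swapping and uses swap only when physical memory is nearly exhausted, keeping data in RAM.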
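Writing to /proc/sys/vm/swappiness, as in the commands that follow, only lasts until the next reboot. Below is a sketch of persisting the value in a sysctl configuration file. set_sysctl is a hypothetical helper that replaces any existing line for the same key instead of appending a duplicate.

```shell
#!/usr/bin/env bash
# set_sysctl FILE KEY VALUE (hypothetical helper)
# Set "KEY = VALUE" in FILE (e.g. /etc/sysctl.conf), dropping older lines for KEY.
set_sysctl() {
    local file=$1 key=$2 value=$3 tmp
    [ -f "$file" ] || : > "$file"             # create the file if it does not exist yet
    tmp=$(mktemp) || return 1
    # keep every line whose key (text before "=", whitespace removed) differs from KEY
    awk -F= -v key="$key" '{ k = $1; gsub(/[[:space:]]/, "", k) } k != key' "$file" > "$tmp"
    printf '%s = %s\n' "$key" "$value" >> "$tmp"
    cat "$tmp" > "$file"                      # rewrite in place, keeping the file's permissions
    rm -f "$tmp"
}
```

Usage, as root: set_sysctl /etc/sysctl.conf vm.swappiness 0 && sysctl -p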

[root@nginx-lb1 ~]# cat /proc/sys/vm/swappiness

30

[root@nginx-lb1 ~]# echo 0 > /proc/sys/vm/swappiness

[root@nginx-lb1 ~]# cat /proc/sys/vm/swappiness

0

3. Tune nginx parameters

#user  nobody;
worker_processes  2;    #more worker processes

#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

#pid        logs/nginx.pid;

events {
    worker_connections  2048;    #more concurrent connections per worker process
}
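According to the nginx documentation, worker_connections counts every connection a worker opens, including connections to proxied servers. It also cannot exceed the open-file limit. A rough upper bound on simultaneous clients is therefore worker_processes × worker_connections, and about half of that on a load balancer that proxies each request upstream.

Below is a small arithmetic sketch; max_clients is a hypothetical helper.

```shell
#!/usr/bin/env bash
# max_clients WORKERS CONNECTIONS [proxy] (hypothetical helper)
# Rough upper bound on simultaneous clients for one nginx instance.
# In "proxy" mode each client also holds an upstream connection, so the bound is halved.
max_clients() {
    local workers=$1 conns=$2 mode=${3:-direct}
    local total=$(( workers * conns ))
    if [ "$mode" = proxy ]; then
        total=$(( total / 2 ))
    fi
    echo "$total"
}
```

With the values above, max_clients 2 2048 proxy gives 2048, which is below the -c 3000 used in the next test. It may be worth checking the nginx error log for "worker_connections are not enough" messages after such a run.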

Then run several more stress tests:

[root@ab ~]# ab -c 3000 -n 30000 http://www.claylpf.xyz/

Result:

Requests per second: 6503.15 [#/sec] (mean)

Time per request: 852.403 [ms] (mean)

Time per request: 0.852 [ms] (mean, across all concurrent requests)

Transfer rate: 400.98 [Kbytes/sec] received

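Problems encountered in the project:

1. While configuring static IPs, several VMs were not set to bridged networking, so they could not reach the Internet.

2. firewalld, NetworkManager, and SELinux were left running on the DNS server, so the Windows client could not resolve the domain name.

3. keepalived's notify_backup called an external script, but the script's file name did not match the name in the configuration, so it never took effect.

4. Split brain occurred while building the dual-VIP high-availability setup; the cause was a wrong virtual router ID.

5. I misunderstood Ansible's script module. I thought the script had to be uploaded to the remote host before it could run, but the script module takes a script written on the control node and runs it on the remote hosts.

6. When using realip to obtain the real client IP, the directives were placed in the wrong location, so they had no effect.

7. nginx was originally compiled without the realip module; a hot upgrade (rebuilding nginx with the module and swapping the binary without stopping service) is one way to add it.

8. In the one-click nginx install script, PATH was modified inside a child process. Even with export, the change reaches only that process and its children, never the parent shell, so after nginx was installed the nginx command could not be found through PATH. There are two simple fixes. The first is to reboot (or simply log in again). The second is to avoid the script module in the Ansible playbook: copy the script over first, then, through the shell module, use …

9. Installation was slow. Configuring a faster yum mirror (Aliyun, Tsinghua, etc.) speeds up downloads.

10. The Prometheus server's clock must match the Windows machine's clock; otherwise Prometheus shows a warning when accessed.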

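Takeaways

1. This project gave me hands-on experience building a small cluster. For example, planning the whole architecture in advance makes the work much more efficient.

2. I gained a deeper understanding of nginx-based web clusters, high availability, and high performance, and a much better feel for split brain and VIP failover.

3. I strengthened my understanding of layer-7 load balancing and DNS load balancing, and also learned about nginx's layer-4 load balancing.

4. I came to appreciate the importance of system resources and got an overall picture of where the cluster's bottlenecks lie under stress testing.

5. I understand monitoring better: it surfaces many problems early, so we can set up alerts in advance.

6. I understand better how different pieces of software work together, such as Grafana, Prometheus, Ansible, nginx, NFS, and DNS.

7. My troubleshooting skills improved a lot, which will help me complete tasks reliably at work.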