Hadoop fully distributed cluster + ZooKeeper cluster + NameNode HA + YARN HA


1. CentOS 7 base environment

| OS | IP | Hostname | User | Password |
|---|---|---|---|---|
| CentOS 7 | 10.1.1.101 | master | root | password |
| CentOS 7 | 10.1.1.102 | slave1 | root | password |
| CentOS 7 | 10.1.1.103 | slave2 | root | password |

| Component | Version | Download URL (Linux) |
|---|---|---|
| java | 8 | https://www.oracle.com/java/technologies/javase/javase-jdk8-downloads.html |
| hadoop | 2.7.1 | https://archive.apache.org/dist/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz |
| zookeeper | 3.4.8 | https://archive.apache.org/dist/zookeeper/zookeeper-3.4.8/zookeeper-3.4.8.tar.gz |

1.1 Disable the firewall

master

[root@master ~]# systemctl stop firewalld
[root@master ~]# systemctl disable firewalld
Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

slave1

[root@slave1 ~]# systemctl stop firewalld
[root@slave1 ~]# systemctl disable firewalld
Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

slave2

[root@slave2 ~]# systemctl stop firewalld
[root@slave2 ~]# systemctl disable firewalld
Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

1.2 Configure the hosts file

master

[root@master ~]# vi /etc/hosts
10.1.1.101 master
10.1.1.102 slave1
10.1.1.103 slave2
[root@master ~]# scp /etc/hosts slave1:/etc/
[root@master ~]# scp /etc/hosts slave2:/etc/
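If this step is repeated, editing /etc/hosts by hand easily leaves duplicate entries. The sketch below appends each cluster entry only when that exact line is missing. The function name add_cluster_hosts and the CLUSTER_HOSTS variable are illustrative; the target file defaults to /etc/hosts but can be any path for a dry test.

```shell
#!/bin/sh
# Cluster members as "IP hostname" pairs, one per line (from the table in section 1).
CLUSTER_HOSTS='10.1.1.101 master
10.1.1.102 slave1
10.1.1.103 slave2'

# Append each entry to the hosts file unless the identical line already exists.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  touch "$hosts_file"
  printf '%s\n' "$CLUSTER_HOSTS" | while IFS= read -r entry; do
    # -x: match the whole line, -F: treat the dots in the IP literally
    grep -qxF -- "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
  done
}
```

Run `add_cluster_hosts` on master, then copy the file to slave1 and slave2 with scp as above.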

1.3 Configure SSH

Generate a key pair on all three hosts

master

[root@master ~]# ssh-keygen -t rsa -P ''
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
94:ad:26:3c:7d:2f:1e:88:be:43:2c:39:50:f0:91:84 root@master

slave1

[root@slave1 ~]# ssh-keygen -t rsa -P ''
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
14:36:0b:e8:e3:59:76:f0:26:1d:a8:e7:53:f2:59:27 root@slave1

slave2

[root@slave2 ~]# ssh-keygen -t rsa -P ''
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
ba:8f:b0:86:a3:c9:70:50:cc:bf:db:16:3c:7e:49:65 root@slave2

Distribute the public key from master

master

[root@master ~]# ssh-copy-id -i master
[root@master ~]# ssh-copy-id -i slave1
[root@master ~]# ssh-copy-id -i slave2
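The three ssh-copy-id calls can be written as one loop. This is a sketch: copy_keys and run are illustrative names, the key path assumes the default ~/.ssh/id_rsa.pub created by ssh-keygen above, and setting DRY_RUN=1 only prints the commands. Because hdfs-site.xml later sets dfs.ha.fencing.methods to sshfence and the second NameNode runs on slave1, the ZKFC on slave1 has to SSH to master during a failover, so running the same loop on slave1 is most likely needed as well.

```shell
#!/bin/sh
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Push the local public key to every host given as an argument.
copy_keys() {
  local host
  for host in "$@"; do
    # Explicit key file: the one generated by `ssh-keygen -t rsa` above
    run ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "$host"
  done
}
```

For example, `DRY_RUN=1 copy_keys master slave1 slave2` previews the three commands; drop DRY_RUN=1 to run them.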

1.4 Configure the NTP service

For this lab you need to set up a yum repository yourself.

| Hostname | IP | NTP role | User | Password |
|---|---|---|---|---|
| master | 10.1.1.101 | Server | root | password |
| slave1 | 10.1.1.102 | Client | root | password |
| slave2 | 10.1.1.103 | Client | root | password |

Install the NTP service online with yum

master

[root@master ~]# yum install -y vim ntp
[root@master ~]# systemctl start ntpd
[root@master ~]# systemctl enable ntpd
Created symlink from /etc/systemd/system/multi-user.target.wants/ntpd.service to /usr/lib/systemd/system/ntpd.service.
[root@master ~]# systemctl status ntpd
● ntpd.service - Network Time Service
Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2021-06-20 09:14:48 CST; 1 day 9h ago
Main PID: 12909 (ntpd)
CGroup: /system.slice/ntpd.service
└─12909 /usr/sbin/ntpd -u ntp:ntp -g
Jun 20 09:14:48 master ntpd[12909]: Listen normally on 3 eno16777736 10.1.1.101 UDP 123
Jun 20 09:14:48 master ntpd[12909]: Listen normally on 4 lo ::1 UDP 123
Jun 20 09:14:48 master ntpd[12909]: Listen normally on 5 eno16777736 fe80::20c:29ff:fe5a:51a2 UDP 123
Jun 20 09:14:48 master ntpd[12909]: Listening on routing socket on fd #22 for interface updates
Jun 20 09:14:48 master ntpd[12909]: 0.0.0.0 c016 06 restart
Jun 20 09:14:48 master ntpd[12909]: 0.0.0.0 c012 02 freq_set kernel 0.000 PPM
Jun 20 09:14:48 master ntpd[12909]: 0.0.0.0 c011 01 freq_not_set
Jun 20 09:14:55 master ntpd[12909]: 0.0.0.0 c61c 0c clock_step +120960.270610 s
Jun 21 18:50:56 master ntpd[12909]: 0.0.0.0 c614 04 freq_mode
Jun 21 18:50:57 master ntpd[12909]: 0.0.0.0 c618 08 no_sys_peer

slave1

[root@slave1 ~]# yum install -y vim ntp
[root@slave1 ~]# systemctl start ntpd
[root@slave1 ~]# systemctl enable ntpd
Created symlink from /etc/systemd/system/multi-user.target.wants/ntpd.service to /usr/lib/systemd/system/ntpd.service.
[root@slave1 ~]# systemctl status ntpd
● ntpd.service - Network Time Service
Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2021-06-20 04:47:27 CST; 1 day 14h ago
Main PID: 11069 (ntpd)
CGroup: /system.slice/ntpd.service
└─11069 /usr/sbin/ntpd -u ntp:ntp -g
Jun 20 04:47:27 slave1 ntpd[11069]: Listen normally on 3 eno16777736 10.1.1.102 UDP 123
Jun 20 04:47:27 slave1 ntpd[11069]: Listen normally on 4 lo ::1 UDP 123
Jun 20 04:47:27 slave1 ntpd[11069]: Listen normally on 5 eno16777736 fe80::20c:29ff:fec8:8c15 UDP 123
Jun 20 04:47:27 slave1 ntpd[11069]: Listening on routing socket on fd #22 for interface updates
Jun 20 04:47:27 slave1 ntpd[11069]: 0.0.0.0 c016 06 restart
Jun 20 04:47:27 slave1 ntpd[11069]: 0.0.0.0 c012 02 freq_set kernel 0.000 PPM
Jun 20 04:47:27 slave1 ntpd[11069]: 0.0.0.0 c011 01 freq_not_set
Jun 20 04:47:34 slave1 ntpd[11069]: 0.0.0.0 c61c 0c clock_step +137292.879993 s
Jun 21 18:55:47 slave1 ntpd[11069]: 0.0.0.0 c614 04 freq_mode
Jun 21 18:55:48 slave1 ntpd[11069]: 0.0.0.0 c618 08 no_sys_peer

slave2

[root@slave2 ~]# yum install -y vim ntp
[root@slave2 ~]# systemctl start ntpd
[root@slave2 ~]# systemctl enable ntpd
Created symlink from /etc/systemd/system/multi-user.target.wants/ntpd.service to /usr/lib/systemd/system/ntpd.service.
[root@slave2 ~]# systemctl status ntpd
● ntpd.service - Network Time Service
Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2021-06-20 02:21:35 CST; 1 day 16h ago
Main PID: 10686 (ntpd)
CGroup: /system.slice/ntpd.service
└─10686 /usr/sbin/ntpd -u ntp:ntp -g
Jun 20 02:21:35 slave2 ntpd[10686]: Listen normally on 3 eno16777736 10.1.1.103 UDP 123
Jun 20 02:21:35 slave2 ntpd[10686]: Listen normally on 4 lo ::1 UDP 123
Jun 20 02:21:35 slave2 ntpd[10686]: Listen normally on 5 eno16777736 fe80::20c:29ff:fe43:d407 UDP 123
Jun 20 02:21:35 slave2 ntpd[10686]: Listening on routing socket on fd #22 for interface updates
Jun 20 02:21:35 slave2 ntpd[10686]: 0.0.0.0 c016 06 restart
Jun 20 02:21:35 slave2 ntpd[10686]: 0.0.0.0 c012 02 freq_set kernel 0.000 PPM
Jun 20 02:21:35 slave2 ntpd[10686]: 0.0.0.0 c011 01 freq_not_set
Jun 20 02:21:44 slave2 ntpd[10686]: 0.0.0.0 c61c 0c clock_step +146100.650069 s
Jun 21 18:56:44 slave2 ntpd[10686]: 0.0.0.0 c614 04 freq_mode
Jun 21 18:56:45 slave2 ntpd[10686]: 0.0.0.0 c618 08 no_sys_peer

Configure the server

master

[root@master ~]# echo "driftfile /var/lib/ntp/drift" > /etc/ntp.conf
[root@master ~]# echo "restrict default nomodify notrap nopeer noquery" >> /etc/ntp.conf
[root@master ~]# echo "restrict 127.0.0.1" >> /etc/ntp.conf
[root@master ~]# echo "restrict ::1" >> /etc/ntp.conf
[root@master ~]# echo "server 127.127.1.0" >> /etc/ntp.conf
[root@master ~]# echo "fudge 127.127.1.0 stratum 10" >> /etc/ntp.conf
[root@master ~]# echo "includefile /etc/ntp/crypto/pw" >> /etc/ntp.conf
[root@master ~]# echo "keys /etc/ntp/keys" >> /etc/ntp.conf
[root@master ~]# echo "disable monitor" >> /etc/ntp.conf
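The nine echo commands can be collapsed into one heredoc, which is easier to review and rerun. A minimal sketch: write_ntp_server_conf is an illustrative name, and it takes the target path as an optional argument (default /etc/ntp.conf) so it can be tried on a scratch file first. The directives are the same ones used in this step.

```shell
#!/bin/sh
# Write master's NTP server configuration in a single step.
write_ntp_server_conf() {
  local conf="${1:-/etc/ntp.conf}"
  # 127.127.1.0 is ntpd's local-clock driver, so master serves its own clock;
  # "fudge ... stratum 10" marks that local clock as a low-priority source.
  cat > "$conf" <<'EOF'
driftfile /var/lib/ntp/drift
restrict default nomodify notrap nopeer noquery
restrict 127.0.0.1
restrict ::1
server 127.127.1.0
fudge 127.127.1.0 stratum 10
includefile /etc/ntp/crypto/pw
keys /etc/ntp/keys
disable monitor
EOF
}
```

Run `write_ntp_server_conf` on master; ntpd picks up the file when it is restarted at the end of this section.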

Configure the client

slave1

[root@slave1 ~]# echo "driftfile /var/lib/ntp/drift" > /etc/ntp.conf
[root@slave1 ~]# echo "server master" >> /etc/ntp.conf
[root@slave1 ~]# echo "includefile /etc/ntp/crypto/pw" >> /etc/ntp.conf
[root@slave1 ~]# echo "keys /etc/ntp/keys" >> /etc/ntp.conf
[root@slave1 ~]# echo "disable monitor" >> /etc/ntp.conf
[root@slave1 ~]# scp /etc/ntp.conf slave2:/etc/
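The client file only needs to point at master. A heredoc sketch with each directive written once; the function name and its optional arguments (target path, default /etc/ntp.conf; upstream server, default master) are illustrative. The fudge directive applies only to reference-clock addresses such as 127.127.1.0, so it is left out of the client configuration.

```shell
#!/bin/sh
# Write an NTP client configuration that syncs from a single upstream server.
write_ntp_client_conf() {
  local conf="${1:-/etc/ntp.conf}"
  local server="${2:-master}"
  # Unquoted EOF so that ${server} is expanded inside the heredoc
  cat > "$conf" <<EOF
driftfile /var/lib/ntp/drift
server ${server}
includefile /etc/ntp/crypto/pw
keys /etc/ntp/keys
disable monitor
EOF
}
```

Run `write_ntp_client_conf` on slave1 and slave2 (or on slave1 followed by the scp above).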

Set up a background time-sync job on the clients

slave1

[root@slave1 ~]# echo "*/1 * * * * /usr/sbin/ntpdate -u master > /dev/null 2>&1" > /var/spool/cron/update.cron
[root@slave1 ~]# crontab /var/spool/cron/update.cron
[root@slave1 ~]# systemctl restart crond
[root@slave1 ~]# systemctl enable crond

slave2

[root@slave2 ~]# echo "*/1 * * * * /usr/sbin/ntpdate -u master > /dev/null 2>&1" > /var/spool/cron/update.cron
[root@slave2 ~]# crontab /var/spool/cron/update.cron
[root@slave2 ~]# systemctl restart crond
[root@slave2 ~]# systemctl enable crond

Restart ntpd on all three machines

[root@master ~]# systemctl restart ntpd
[root@slave1 ~]# systemctl restart ntpd
[root@slave2 ~]# systemctl restart ntpd

2. Install Java

2.1 Uninstall OpenJDK

[root@master ~]# rpm -qa | grep openjdk

Remove each package listed with rpm -e --nodeps <package name>.
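Instead of copying each package name from the rpm -qa output by hand, the list can be piped into a removal loop. A sketch: remove_openjdk is an illustrative name, it reads package names on standard input, and DRY_RUN=1 only prints the rpm commands so the list can be checked first.

```shell
#!/bin/sh
# Read package names on stdin and remove every OpenJDK package among them.
remove_openjdk() {
  local pkg
  grep -i 'openjdk' | while read -r pkg; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "rpm -e --nodeps $pkg"
    else
      # --nodeps, as in the step above: skip dependency checks
      rpm -e --nodeps "$pkg"
    fi
  done
}
```

Preview with `rpm -qa | DRY_RUN=1 remove_openjdk`, then remove with `rpm -qa | remove_openjdk`.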

2.2 Install Java

Extract the JDK from /h3cu into /usr/local/src

[root@master ~]# tar -xzvf /h3cu/jdk-8u144-linux-x64.tar.gz -C /usr/local/src/

Rename the extracted directory to java

[root@master ~]# mv /usr/local/src/jdk1.8.0_144 /usr/local/src/java

Configure the Java environment variables (for the current user only)

[root@master ~]# vi /root/.bash_profile
export JAVA_HOME=/usr/local/src/java
export PATH=$PATH:$JAVA_HOME/bin
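Appending the export lines in vi works once, but repeating the step leaves duplicate PATH entries. A minimal sketch that appends a line only if it is not already present; add_profile_line and setup_java_env are illustrative names, and the same helper fits the ZOOKEEPER_HOME and HADOOP_HOME lines later on.

```shell
#!/bin/sh
# Append a line to a profile file unless that exact line is already there.
add_profile_line() {
  local profile="$1" line="$2"
  touch "$profile"
  grep -qxF -- "$line" "$profile" || printf '%s\n' "$line" >> "$profile"
}

# The Java settings from this step; the profile path defaults to /root/.bash_profile.
setup_java_env() {
  local profile="${1:-/root/.bash_profile}"
  # Single quotes keep $PATH and $JAVA_HOME literal, as in a hand-edited file
  add_profile_line "$profile" 'export JAVA_HOME=/usr/local/src/java'
  add_profile_line "$profile" 'export PATH=$PATH:$JAVA_HOME/bin'
}
```

After `setup_java_env`, run `source /root/.bash_profile` as in the next step.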

Load the environment variables and check the Java version

[root@master ~]# source /root/.bash_profile
[root@master ~]# java -version
java version "1.8.0_144"
Java(TM) SE Runtime Environment (build 1.8.0_144-b01)
Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)

Distribute Java to slave1 and slave2

[root@master ~]# scp -r /usr/local/src/java slave1:/usr/local/src/
[root@master ~]# scp -r /usr/local/src/java slave2:/usr/local/src/
[root@master ~]# scp /root/.bash_profile slave1:/root/
[root@master ~]# scp /root/.bash_profile slave2:/root/

3. Install the ZooKeeper cluster

Extract ZooKeeper from /h3cu into /usr/local/src

[root@master ~]# tar -xvzf /h3cu/zookeeper-3.4.8.tar.gz -C /usr/local/src/

Rename the extracted directory to zookeeper

[root@master ~]# mv /usr/local/src/zookeeper-3.4.8 /usr/local/src/zookeeper

Configure the ZooKeeper environment variables (for the current user only) and load them

[root@master ~]# vi /root/.bash_profile
export ZOOKEEPER_HOME=/usr/local/src/zookeeper
export PATH=$PATH:$ZOOKEEPER_HOME/bin
[root@master ~]# source /root/.bash_profile

Configure zoo.cfg

Change dataDir and add the three server lines:

[root@master ~]# cp /usr/local/src/zookeeper/conf/zoo_sample.cfg /usr/local/src/zookeeper/conf/zoo.cfg
[root@master ~]# vi /usr/local/src/zookeeper/conf/zoo.cfg
dataDir=/usr/local/src/zookeeper/data
server.1=master:2888:3888
server.2=slave1:2888:3888
server.3=slave2:2888:3888
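The zoo.cfg edit can also be scripted: drop any existing dataDir and server.N lines, then append new ones, numbering servers in the order given. The N in server.N must match that node's myid; in host:2888:3888 the first port is used by followers to talk to the leader and the second for leader election. A sketch; configure_zoo_cfg is an illustrative name.

```shell
#!/bin/sh
# Usage: configure_zoo_cfg <zoo.cfg> <dataDir> <host1> [host2 ...]
configure_zoo_cfg() {
  local cfg="$1" data_dir="$2"
  shift 2
  local tmp="${cfg}.tmp" id=1 host
  # Remove old dataDir= and server.N= lines so the step can be repeated safely
  grep -v -e '^dataDir=' -e '^server\.[0-9]*=' "$cfg" > "$tmp"
  echo "dataDir=${data_dir}" >> "$tmp"
  for host in "$@"; do
    # server.<myid>=<host>:<peer port>:<election port>
    echo "server.${id}=${host}:2888:3888" >> "$tmp"
    id=$((id + 1))
  done
  mv "$tmp" "$cfg"
}
```

For this cluster: `configure_zoo_cfg /usr/local/src/zookeeper/conf/zoo.cfg /usr/local/src/zookeeper/data master slave1 slave2`.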

Create the myid file

[root@master ~]# mkdir /usr/local/src/zookeeper/data
[root@master ~]# echo "1" > /usr/local/src/zookeeper/data/myid

Distribute the files to slave1 and slave2

[root@master ~]# scp -r /usr/local/src/zookeeper slave1:/usr/local/src/
[root@master ~]# scp -r /usr/local/src/zookeeper slave2:/usr/local/src/
[root@master ~]# scp /root/.bash_profile slave1:/root/
[root@master ~]# scp /root/.bash_profile slave2:/root/

Change the myid file on slave1 and slave2

slave1

[root@slave1 ~]# echo 2 > /usr/local/src/zookeeper/data/myid

slave2

[root@slave2 ~]# echo 3 > /usr/local/src/zookeeper/data/myid
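Because server.N in zoo.cfg and each node's myid must agree, the id can be derived from the host's position in the same host list instead of being typed by hand. A sketch assuming `hostname` returns master, slave1, or slave2 on the respective machine (if it returns something else, such as localhost.localdomain, pass the name explicitly); myid_for is an illustrative name.

```shell
#!/bin/sh
# Usage: myid_for <this host> <host1> [host2 ...]
# Prints the 1-based position of <this host> in the list; fails if absent.
myid_for() {
  local me="$1"
  shift
  local id=1 host
  for host in "$@"; do
    if [ "$host" = "$me" ]; then
      echo "$id"
      return 0
    fi
    id=$((id + 1))
  done
  return 1
}
```

On each node: `id=$(myid_for "$(hostname)" master slave1 slave2) && echo "$id" > /usr/local/src/zookeeper/data/myid`. The `&&` keeps an unknown host from writing an empty myid.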

Start ZooKeeper on each node

master

[root@master ~]# source /root/.bash_profile
[root@master ~]# zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

slave1

[root@slave1 ~]# source /root/.bash_profile
[root@slave1 ~]# zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

slave2

[root@slave2 ~]# source /root/.bash_profile
[root@slave2 ~]# zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

Check the status of each ZooKeeper node

Note: the leader and followers are chosen by election; the leader is not tied to a particular machine.

[root@master ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
Mode: follower
[root@master ~]# jps
17120 QuorumPeerMain
17230 Jps
[root@slave1 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
Mode: leader
[root@slave1 ~]# jps
15721 Jps
15050 QuorumPeerMain
[root@slave2 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
Mode: follower
[root@slave2 ~]# jps
14965 QuorumPeerMain
15647 Jps
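To check the election result from one machine, the status output of all three nodes can be combined and counted; a healthy three-node ensemble should report one leader and two followers. A sketch; count_zk_modes is an illustrative name.

```shell
#!/bin/sh
# Read combined `zkServer.sh status` output on stdin and print
# "<number of leaders> <number of followers>".
count_zk_modes() {
  awk '/^Mode: leader/ { l++ } /^Mode: follower/ { f++ } END { print l + 0, f + 0 }'
}
```

For example, from master: `for h in master slave1 slave2; do ssh "$h" 'source /root/.bash_profile; zkServer.sh status'; done | count_zk_modes` should print `1 2`. The profile is sourced because a non-interactive ssh session does not read it, and zkServer.sh needs java on the PATH.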

4. Configure Hadoop HA

Extract Hadoop from /h3cu into /usr/local/src (on master)

[root@master ~]# tar -xzf /h3cu/hadoop-2.7.1.tar.gz -C /usr/local/src/

Rename the extracted directory to hadoop

[root@master ~]# mv /usr/local/src/hadoop-2.7.1 /usr/local/src/hadoop

Configure the Hadoop environment variables (for the current user only)

[root@master ~]# vi /root/.bash_profile
export HADOOP_HOME=/usr/local/src/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Load the environment variables and check the Hadoop version

[root@master ~]# source /root/.bash_profile
[root@master ~]# hadoop version
Hadoop 2.7.1
Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 15ecc87ccf4a0228f35af08fc56de536e6ce657a
Compiled by jenkins on 2015-06-29T06:04Z
Compiled with protoc 2.5.0
From source with checksum fc0a1a23fc1868e4d5ee7fa2b28a58a
This command was run using /usr/local/src/hadoop/share/hadoop/common/hadoop-common-2.7.1.jar

Configure slaves

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/slaves
master
slave1
slave2

4.1 Configuration files

hadoop-env.sh

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/local/src/java

yarn-env.sh

[root@master ~]# vim /usr/local/src/hadoop/etc/hadoop/yarn-env.sh

export JAVA_HOME=/usr/local/src/java

hdfs-site.xml

Command:

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml

Configuration (add these properties inside the <configuration> element):

<property>
  <name>dfs.permissions.enabled</name>
  <value>false</value>
</property>
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>/usr/local/src/hadoop/dfs/name/data</value>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>/usr/local/src/hadoop/dfs/data/data</value>
</property>
<property>
  <name>dfs.nameservices</name>
  <value>mycluster</value>
</property>
<property>
  <name>dfs.ha.namenodes.mycluster</name>
  <value>nn1,nn2</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.mycluster.nn1</name>
  <value>master:8020</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.mycluster.nn2</name>
  <value>slave1:8020</value>
</property>
<property>
  <name>dfs.namenode.http-address.mycluster.nn1</name>
  <value>master:50070</value>
</property>
<property>
  <name>dfs.namenode.http-address.mycluster.nn2</name>
  <value>slave1:50070</value>
</property>
<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://master:8485;slave1:8485;slave2:8485/mycluster</value>
</property>
<property>
  <name>dfs.client.failover.proxy.provider.mycluster</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<property>
  <name>dfs.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>
<property>
  <name>ha.zookeeper.quorum</name>
  <value>master:2181,slave1:2181,slave2:2181</value>
</property>
<property>
  <name>dfs.ha.fencing.methods</name>
  <value>sshfence</value>
</property>
<property>
  <name>dfs.ha.fencing.ssh.private-key-files</name>
  <value>/root/.ssh/id_rsa</value>
</property>
<property>
  <name>dfs.ha.fencing.ssh.connect-timeout</name>
  <value>30000</value>
</property>
<property>
  <name>dfs.namenode.handler.count</name>
  <value>100</value>
</property>
<property>
  <name>dfs.webhdfs.enabled</name>
  <value>true</value>
</property>
<property>
  <name>dfs.blocksize</name>
  <value>268435456</value>
</property>
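Every Hadoop *-site.xml file wraps its properties in a single <configuration> root element, and each property needs matching <name>...</name> and <value>...</value> tags. If you prefer generating these files over editing them in vi, a small helper like the one below writes a well-formed file from key=value pairs. The function names are illustrative; the helper overwrites the target file and does not escape XML special characters such as & or <, which none of the values in this guide contain.

```shell
#!/bin/sh
# Print one <property> element.
xml_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Usage: write_site_xml <file> key=value [key=value ...]
write_site_xml() {
  local file="$1"
  shift
  local kv
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    for kv in "$@"; do
      # Split on the first "=" only, so values may themselves contain "="
      xml_property "${kv%%=*}" "${kv#*=}"
    done
    echo '</configuration>'
  } > "$file"
}
```

For example, `write_site_xml /usr/local/src/hadoop/etc/hadoop/mapred-site.xml mapreduce.framework.name=yarn`. Quote values that contain shell metacharacters, such as the semicolons in the qjournal URI.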

core-site.xml

Command:

[root@master ~]# vim /usr/local/src/hadoop/etc/hadoop/core-site.xml

Configuration:

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://mycluster</value>
</property>
<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/usr/local/src/hadoop/journalnode</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>/usr/local/src/hadoop/dfs/tmp</value>
</property>
<property>
  <name>io.file.buffer.size</name>
  <value>4096</value>
</property>
<property>
  <name>hadoop.proxyuser.hduser.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hduser.groups</name>
  <value>*</value>
</property>

yarn-site.xml

Command:

[root@master ~]# vim /usr/local/src/hadoop/etc/hadoop/yarn-site.xml

Configuration:

<property>
  <name>yarn.resourcemanager.ha.enabled</name>
  <value>true</value>
</property>
<property>
  <name>yarn.resourcemanager.cluster-id</name>
  <value>RMcluster</value>
</property>
<property>
  <name>yarn.resourcemanager.ha.rm-ids</name>
  <value>rm1,rm2</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname.rm1</name>
  <value>master</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname.rm2</name>
  <value>slave1</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.address.rm1</name>
  <value>master:8088</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.address.rm2</name>
  <value>slave1:8088</value>
</property>
<property>
  <name>yarn.resourcemanager.zk-address</name>
  <value>master:2181,slave1:2181,slave2:2181</value>
</property>
<property>
  <name>yarn.resourcemanager.connect.retry-interval.ms</name>
  <value>2000</value>
</property>
<property>
  <name>yarn.resourcemanager.store.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>master</value>
</property>
<property>
  <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>
<property>
  <name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>
  <value>5000</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
  <value>org.apache.hadoop.mapred.ShuffleHandler</value>
</property>
<property>
  <name>yarn.resourcemanager.recovery.enabled</name>
  <value>true</value>
</property>

mapred-site.xml

Command:

[root@master ~]# cp /usr/local/src/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/src/hadoop/etc/hadoop/mapred-site.xml

[root@master ~]# vim /usr/local/src/hadoop/etc/hadoop/mapred-site.xml

Configuration:

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>

4.2 Formatting

Distribute the files

[root@master ~]# scp -r /usr/local/src/hadoop slave1:/usr/local/src/ &

[root@master ~]# scp -r /usr/local/src/hadoop slave2:/usr/local/src/ &

[root@master ~]# scp -r /root/.bash_profile slave1:/root/ &

[root@master ~]# scp -r /root/.bash_profile slave2:/root/ &
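The four scp commands above end with &, so they run in the background and the prompt returns before the copies finish. Starting JournalNodes or formatting before Hadoop has fully arrived on slave1 and slave2 can fail, so it is safer to wait for every copy. A minimal sketch; distribute is an illustrative name and DRY_RUN=1 only prints the commands.

```shell
#!/bin/sh
# Usage: distribute <source path> <destination dir> <host1> [host2 ...]
distribute() {
  local src="$1" dest_dir="$2"
  shift 2
  local host
  for host in "$@"; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "scp -r $src $host:$dest_dir"
    else
      # Copy to all hosts in parallel
      scp -r "$src" "$host:$dest_dir" &
    fi
  done
  # Block until every background copy has finished
  wait
}
```

For example, `distribute /usr/local/src/hadoop /usr/local/src/ slave1 slave2` followed by `distribute /root/.bash_profile /root/ slave1 slave2`.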

Format ZKFC (initialize the HA state in ZooKeeper)

[root@master ~]# hdfs zkfc -formatZK

21/06/23 07:56:01 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at master/10.1.1.101:8020

21/06/23 07:56:02 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

21/06/23 07:56:02 INFO zookeeper.ZooKeeper: Client environment:host.name=master

21/06/23 07:56:02 INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_144

...

21/06/23 07:56:07 INFO zookeeper.ZooKeeper: Session: 0x17a34723b95000b closed

21/06/23 07:56:07 INFO zookeeper.ClientCnxn: EventThread shut down

Start the JournalNodes

[root@master ~]# hadoop-daemons.sh start journalnode

slave1: starting journalnode, logging to /usr/local/src/hadoop/logs/hadoop-root-journalnode-slave1.out

slave2: starting journalnode, logging to /usr/local/src/hadoop/logs/hadoop-root-journalnode-slave2.out

master: starting journalnode, logging to /usr/local/src/hadoop/logs/hadoop-root-journalnode-master.out

[root@master ~]# jps

21266 QuorumPeerMain

28184 JournalNode

28233 Jps

[root@slave1 ~]# jps

37478 Jps

37406 JournalNode

33215 QuorumPeerMain

[root@slave2 ~]# jps

36816 Jps

36744 JournalNode

33183 QuorumPeerMain

Format the NameNode

[root@master ~]# hdfs namenode -format

21/06/23 08:01:31 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/10.1.1.101

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.1

....

21/06/23 08:01:33 INFO namenode.FSImage: Allocated new BlockPoolId: BP-298621373-10.1.1.101-1624406493315

21/06/23 08:01:33 INFO common.Storage: Storage directory /usr/local/src/hadoop/dfs/name/data has been successfully formatted.

21/06/23 08:01:33 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

21/06/23 08:01:33 INFO util.ExitUtil: Exiting with status 0

21/06/23 08:01:33 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/10.1.1.101

************************************************************/

Start the cluster, then back up the NameNode metadata to the standby NameNode

[root@master ~]# start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master slave1]

slave1: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-root-namenode-slave1.out

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-root-namenode-master.out

slave1: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-root-datanode-slave1.out

slave2: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-root-datanode-slave2.out

master: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-root-datanode-master.out

Starting journal nodes [master slave1 slave2]

slave2: journalnode running as process 36744. Stop it first.

slave1: journalnode running as process 37406. Stop it first.

master: journalnode running as process 28184. Stop it first.

Starting ZK Failover Controllers on NN hosts [master slave1]

slave1: starting zkfc, logging to /usr/local/src/hadoop/logs/hadoop-root-zkfc-slave1.out

master: starting zkfc, logging to /usr/local/src/hadoop/logs/hadoop-root-zkfc-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-root-resourcemanager-localhost.localdomain.out

slave2: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-root-nodemanager-slave2.out

slave1: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-root-nodemanager-slave1.out

master: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-root-nodemanager-master.out

[root@master ~]# jps

29136 NodeManager

28929 DFSZKFailoverController

21266 QuorumPeerMain

28184 JournalNode

28648 DataNode

29032 ResourceManager

29421 Jps

28431 NameNode

[root@slave1 ~]# jps

37697 DFSZKFailoverController

37768 NodeManager

37580 DataNode

37406 JournalNode

33215 QuorumPeerMain

37871 Jps

[root@slave2 ~]# jps

36976 NodeManager

36854 DataNode

37079 Jps

36744 JournalNode

33183 QuorumPeerMain

On slave1, sync the standby NameNode with the active NameNode's metadata, then start it

[root@slave1 ~]# hdfs namenode -bootstrapStandby

21/06/23 08:05:33 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = slave1/10.1.1.102

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.7.1

=====================================================

About to bootstrap Standby ID nn2 from:

Nameservice ID: mycluster

Other Namenode ID: nn1

Other NN's HTTP address: http://master:50070

Other NN's IPC address: master/10.1.1.101:8020

Namespace ID: 321931140

Block pool ID: BP-298621373-10.1.1.101-1624406493315

Cluster ID: CID-d443340a-5baa-411e-9dee-81ab0b4b08b8

Layout version: -63

isUpgradeFinalized: true

=====================================================

21/06/23 08:05:34 INFO common.Storage: Storage directory /usr/local/src/hadoop/dfs/name/data has been successfully formatted.

21/06/23 08:05:35 INFO namenode.TransferFsImage: Opening connection to http://master:50070/imagetransfer?getimage=1&txid=0&storageInfo=-63:321931140:0:CID-d443340a-5baa-411e-9dee-81ab0b4b08b8

21/06/23 08:05:35 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds

21/06/23 08:05:35 INFO namenode.TransferFsImage: Transfer took 0.00s at 0.00 KB/s

21/06/23 08:05:35 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 351 bytes.

21/06/23 08:05:35 INFO util.ExitUtil: Exiting with status 0

21/06/23 08:05:35 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at slave1/10.1.1.102

************************************************************/

[root@slave1 ~]# hadoop-daemon.sh start namenode

starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-root-namenode-localhost.localdomain.out

[root@slave1 ~]# jps

38128 Jps

37697 DFSZKFailoverController

37959 NameNode

37768 NodeManager

37580 DataNode

37406 JournalNode

33215 QuorumPeerMain
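In the jps listing above, slave1 runs a NameNode but no ResourceManager. In Hadoop 2.x, start-yarn.sh (called by start-all.sh) starts a ResourceManager only on the host where it runs, so rm2 from yarn-site.xml most likely has to be started by hand on slave1. The sketch below does that over SSH (assuming passwordless SSH from master, as set up in section 1.3) and then queries the HA state of nn1/nn2 and rm1/rm2 with hdfs haadmin -getServiceState and yarn rmadmin -getServiceState; each pair should show one active and one standby. Function names are illustrative and DRY_RUN=1 only prints the commands.

```shell
#!/bin/sh
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Full path because a non-interactive ssh session does not read .bash_profile
start_standby_rm() {
  run ssh slave1 /usr/local/src/hadoop/sbin/yarn-daemon.sh start resourcemanager
}

# Print "<id>: <state>" for both NameNodes and both ResourceManagers.
check_ha_state() {
  local id
  for id in nn1 nn2; do
    printf '%s: ' "$id"
    run hdfs haadmin -getServiceState "$id"
  done
  for id in rm1 rm2; do
    printf '%s: ' "$id"
    run yarn rmadmin -getServiceState "$id"
  done
}
```

Run `start_standby_rm` once (or `yarn-daemon.sh start resourcemanager` directly on slave1), then `check_ha_state`.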

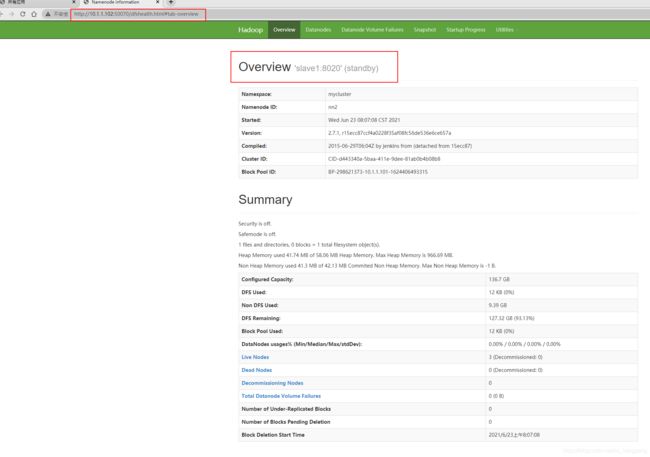

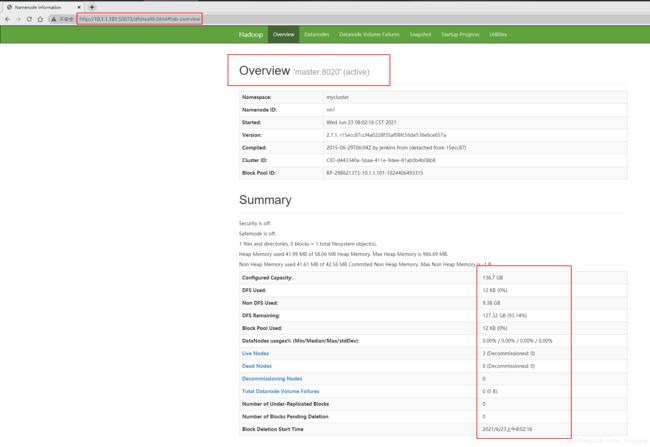

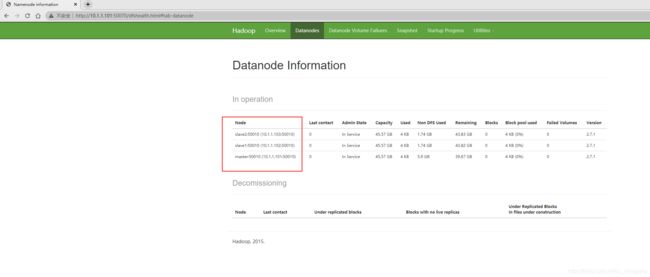

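4.3 Test the cluster

4.3.1 Check the web UIs

The NameNode web UIs are at http://master:50070 and http://slave1:50070, and the ResourceManager web UI is at http://master:8088 (http://slave1:8088 for rm2), as configured in hdfs-site.xml and yarn-site.xml.

4.3.2 Run a MapReduce job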

[root@master ~]# vim wordcount.txt

The lives of most men are determined by their environment

They accept the circumstancesamid which fate has thrown them

not only with resignation but even with good will

They arelike streetcars running contentedly on their rails

and they despise the sprightly flivver thatdashes in and

out of the traffic and speeds so jauntily across the open country

I respectthem they are good citizens good husbands and good fathers

and of course somebody hasto pay the taxes but I do not find them exciting

I am fascinated by the men few enough in allconscience

who take life in their own hands and seem to mould it to their own liking

It may bethat we have no such thing as free will

but at all events we have the illusion ofit At acrossroad it does seem

to us that we might go either to the right or the left and the choiceonce made

it is difficult to see that the whole course of the world history obliged us

to takethe turning we didI never met a more interesting man than Mayhew He was

a lawyerin Detroit He was an able anda successful one By the time he was thirty-five

he had a large and a lucrative praaice he hadamassed a competence and he stood on

the threshold of a distinguished career He had ana cute brain anattractive personality

and uprightness There was no reason why he shouldnot become financially or politically

a power in the land One evening he was sitting in his clubwith a group of friends and

they were perhaps a little worse (or the better) for liquor One ofthem had recently come

from Italy and he told them of a house he had seen at Capri a houseon the hill overlooking

the Bay of Naples with a large and shady garden He described to themthe beauty of the

most beautifulisland in the Mediterranean

[root@master ~]# hdfs dfs -put wordcount.txt /

[root@master ~]# hadoop jar /usr/local/src/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.1.jar wordcount /wordcount.txt /output

21/06/23 08:28:08 INFO input.FileInputFormat: Total input paths to process : 1

21/06/23 08:28:08 INFO mapreduce.JobSubmitter: number of splits:1

21/06/23 08:28:08 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1624407510976_0001

21/06/23 08:28:08 INFO impl.YarnClientImpl: Submitted application application_1624407510976_0001

21/06/23 08:28:08 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1624407510976_0001/

21/06/23 08:28:08 INFO mapreduce.Job: Running job: job_1624407510976_0001

21/06/23 08:28:18 INFO mapreduce.Job: Job job_1624407510976_0001 running in uber mode : false

21/06/23 08:28:18 INFO mapreduce.Job: map 0% reduce 0%

21/06/23 08:28:26 INFO mapreduce.Job: map 100% reduce 0%

21/06/23 08:28:34 INFO mapreduce.Job: map 100% reduce 100%

21/06/23 08:28:34 INFO mapreduce.Job: Job job_1624407510976_0001 completed successfully

21/06/23 08:28:34 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=2463

FILE: Number of bytes written=242401

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1829

HDFS: Number of bytes written=1676

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=6030

Total time spent by all reduces in occupied slots (ms)=4895

Total time spent by all map tasks (ms)=6030

Total time spent by all reduce tasks (ms)=4895

Total vcore-seconds taken by all map tasks=6030

Total vcore-seconds taken by all reduce tasks=4895

Total megabyte-seconds taken by all map tasks=6174720

Total megabyte-seconds taken by all reduce tasks=5012480

Map-Reduce Framework

Map input records=24

Map output records=314

Map output bytes=2969

Map output materialized bytes=2463

Input split bytes=95

Combine input records=314

Combine output records=196

Reduce input groups=196

Reduce shuffle bytes=2463

Reduce input records=196

Reduce output records=196

Spilled Records=392

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=172

CPU time spent (ms)=2110

Physical memory (bytes) snapshot=324399104

Virtual memory (bytes) snapshot=4162691072

Total committed heap usage (bytes)=219676672

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1734

File Output Format Counters

Bytes Written=1676

[root@master ~]# hdfs dfs -cat /output/* | head -5

(or 1

At 1

Bay 1

By 1

Capri 1