The Most Detailed Guide to Compiling the PaddleOCR GPU C++ Version (Including Fixes for the Pitfalls I Hit)

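Preface:

I had previously compiled the C++ version of PaddleOCR that runs on the CPU. The CPU and GPU versions are actually not very different: you link against a different build of the library and change a few related parameters in the original project. However, I could not find a detailed tutorial on compiling the PaddleOCR GPU C++ version and stumbled into several pitfalls along the way, so I am recording the whole process and the fixes here in detail.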

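Preparing the environment

First, check which versions of CUDA, cuDNN, and TensorRT the official prebuilt library requires. The official library download page is here: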

https://paddleinference.paddlepaddle.org.cn/user_guides/download_lib.html#windows

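I chose the last version in the list. As shown there, it requires CUDA 11.6, cuDNN 8.4, and TensorRT 8.4.1.5, so install exactly those versions. I followed this post, which is very well written: https://blog.csdn.net/weixin_42408280/article/details/125933323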

- CUDA download link

https://developer.nvidia.com/cuda-toolkit-archive

Here, "Version 10" means Windows 10.

- cuDNN download link

https://developer.nvidia.com/rdp/cudnn-archive

- TensorRT download link (GA means the stable release)

https://developer.nvidia.com/nvidia-tensorrt-8x-download

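Setting up the environment

Setting up the environment is actually simple. For CUDA, just click through the installer; it installs to the C: drive by default. In my test, even when I changed the install path to the D: drive, it still installed to C:, so make sure the C: drive has enough free space.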

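- Configure cuDNN and TensorRT

Extract the downloaded cuDNN and TensorRT archives, then copy the bin, include, and lib\ directories from each extracted folder into the CUDA installation path. The default CUDA installation path is: C:\Program Files\NVIDIA GPU Computing Toolkit\CUD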
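As a quick sanity check of the copy step, the following CMake fragment is a minimal sketch that could be placed after project() in the CMakeLists.txt. It is not part of the original project. It assumes CMake 3.17 or newer (for the FindCUDAToolkit module) and assumes that the headers cudnn.h and NvInfer.h ended up in the CUDA include directory after the copy. The variable names cuda_major_minor, CUDNN_HEADER, and TENSORRT_HEADER are made up for illustration.

```cmake
# Sketch only: requires CMake >= 3.17 for the FindCUDAToolkit module.
find_package(CUDAToolkit REQUIRED)
message(STATUS "Found CUDA ${CUDAToolkit_VERSION} in ${CUDAToolkit_BIN_DIR}")

# The prebuilt Paddle library chosen in this post expects CUDA 11.6.
set(cuda_major_minor "${CUDAToolkit_VERSION_MAJOR}.${CUDAToolkit_VERSION_MINOR}")
if(NOT cuda_major_minor VERSION_EQUAL "11.6")
  message(WARNING "CUDA ${cuda_major_minor} found, but the Paddle library expects 11.6")
endif()

# Assumption: the cuDNN and TensorRT headers were copied into the CUDA include directory.
find_file(CUDNN_HEADER cudnn.h PATHS ${CUDAToolkit_INCLUDE_DIRS} NO_DEFAULT_PATH)
find_file(TENSORRT_HEADER NvInfer.h PATHS ${CUDAToolkit_INCLUDE_DIRS} NO_DEFAULT_PATH)
if(NOT CUDNN_HEADER)
  message(WARNING "cudnn.h not found next to the CUDA headers")
endif()
if(NOT TENSORRT_HEADER)
  message(WARNING "NvInfer.h not found next to the CUDA headers")
endif()
```

The warnings appear during configuration (when running cmake ..), so a missing or mismatched install would show up before the build starts.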

Download the Paddle library

Link: https://paddleinference.paddlepaddle.org.cn/user_guides/download_lib.html#windows

The extracted files

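Modifying the code

If you have deployed the CPU version before, this is very simple: just change the static libraries (.lib) referenced in CMakeLists to the GPU versions.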
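The switch described above can be sketched as a small CMake fragment. This is only an illustration, not the author's exact setup: WITH_GPU and the Ds_inference/paddle_gpu11.6 folder come from the CMakeLists.txt in this post, while the variable PADDLE_DIR and the paddle_cpu folder name are hypothetical.

```cmake
# WITH_GPU is the option declared in the CMakeLists.txt of this post.
if(WITH_GPU)
  set(PADDLE_DIR "${PROJECT_SOURCE_DIR}/Ds_inference/paddle_gpu11.6")
else()
  # Hypothetical folder name for the CPU build of the library.
  set(PADDLE_DIR "${PROJECT_SOURCE_DIR}/Ds_inference/paddle_cpu")
endif()

# Headers and .lib files are then taken from whichever build was selected.
include_directories("${PADDLE_DIR}/include")
link_directories("${PADDLE_DIR}/lib")
```

Keeping both builds in separate folders and selecting one through a single variable makes it harder to mix CPU and GPU files by accident.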

This is my CMakeLists.txt

# cmake_minimum_required(VERSION 3.5)

# # Set the C++ standard

# set(CMAKE_CXX_STANDARD 17)

# project(PaddleOcr)

# # Default: arm

# set(path lib/arm)

# set(opencvVersion opencv410) # set the OpenCV version

# # Header files

# include_directories(./include)

# include_directories(/home/nvidia/paddleOCR/PaddleOCR-release-2.6/deploy/cpp_infer)

# include_directories(/usr/include)

# include_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/paddle/include)

# include_directories(/home/nvidia/opencv-4.1.0/include/opencv2)

# include_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/install/glog/include)

# include_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/AutoLog-main)

# include_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/install/gflags/include)

# include_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/install/protobuf/include)

# include_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/threadpool)

# # Library files

# link_directories(/usr/lib)

# link_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/install/glog/lib)

# link_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/paddle/lib)

# link_directories(/home/nvidia/opencv-4.1.0/build/lib)

# link_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/install/protobuf/lib)

# link_directories(/home/nvidia/paddleOCR/paddle_inference_install_dir/third_party/install/gflags/lib)

# aux_source_directory (src SRC_LIST)

# add_executable (test ${SRC_LIST})

# # c++17

# target_link_libraries(test opencv_highgui opencv_core opencv_imgproc opencv_imgcodecs opencv_calib3d opencv_features2d opencv_videoio protobuf glog gflags paddle_inference pthread)

# # Note: for testing

# set (EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin/arm)

##################################################################################################

set(CMAKE_BUILD_TYPE "Release") #option: Debug / Release

if (CMAKE_BUILD_TYPE MATCHES "Debug"

OR CMAKE_BUILD_TYPE MATCHES "None")

message(STATUS "CMAKE_BUILD_TYPE is Debug")

elseif (CMAKE_BUILD_TYPE MATCHES "Release")

message(STATUS "CMAKE_BUILD_TYPE is Release")

endif()

cmake_minimum_required(VERSION 3.5)

# Set the C++ standard

set(CMAKE_CXX_STANDARD 17)

project(DsOcr)

set(CMAKE_WINDOWS_EXPORT_ALL_SYMBOLS ON)

option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)

option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." ON)

option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." ON)

option(WITH_TENSORRT "Compile demo with TensorRT." OFF)

macro(safe_set_static_flag)

foreach(flag_var

CMAKE_CXX_FLAGS CMAKE_CXX_FLAGS_DEBUG CMAKE_CXX_FLAGS_RELEASE

CMAKE_CXX_FLAGS_MINSIZEREL CMAKE_CXX_FLAGS_RELWITHDEBINFO)

if(${flag_var} MATCHES "/MD")

string(REGEX REPLACE "/MD" "/MT" ${flag_var} "${${flag_var}}")

endif(${flag_var} MATCHES "/MD")

endforeach(flag_var)

endmacro()

if (WITH_MKL)

ADD_DEFINITIONS(-DUSE_MKL)

endif()

if (MSVC)

add_definitions(-w)

#add_definitions(-W0)

endif()

if (WIN32)

add_definitions("/DGOOGLE_GLOG_DLL_DECL=")

set(CMAKE_C_FLAGS /source-charset:utf-8)

add_definitions(-D_CRT_SECURE_NO_WARNINGS)

add_definitions(-D_CRT_NONSTDC_NO_DEPRECATE)

if(WITH_MKL)

set(FLAG_OPENMP "/openmp")

endif()

set(CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG} /bigobj /MTd ${FLAG_OPENMP}")

set(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} /bigobj /MT ${FLAG_OPENMP}")

set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} /bigobj /MTd ${FLAG_OPENMP}")

set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /bigobj /MT ${FLAG_OPENMP}")

if (WITH_STATIC_LIB)

safe_set_static_flag()

add_definitions(-DSTATIC_LIB)

endif()

message("cmake c debug flags " ${CMAKE_C_FLAGS_DEBUG})

message("cmake c release flags " ${CMAKE_C_FLAGS_RELEASE})

message("cmake cxx debug flags " ${CMAKE_CXX_FLAGS_DEBUG})

message("cmake cxx release flags " ${CMAKE_CXX_FLAGS_RELEASE})

endif()

set(opencvVersion opencv410) # set the OpenCV version

# Header files

include_directories(${PROJECT_SOURCE_DIR}/include)

#include_directories(/home/nvidia/paddleOCR/PaddleOCR-release-2.6/deploy/cpp_infer/include)

#include_directories(/home/nvidia/paddleOCR/PaddleOCR-release-2.6/deploy/cpp_infer)

#include_directories(/usr/include)

include_directories(./)

include_directories(./include)

include_directories(./Ds_inference/opencv410/include/opencv4)

include_directories(./Ds_inference/opencv410/include/opencv4/opencv2)

include_directories(./Ds_inference/third_party/install/mklml/include)

include_directories(./Ds_inference/third_party/install/mkldnn/include)

include_directories(./Ds_inference/third_party/install/glog/include)

include_directories(./Ds_inference/third_party/AutoLog-main)

include_directories(./Ds_inference/third_party/install/gflags/include)

include_directories(./Ds_inference/third_party/install/protobuf/include)

include_directories(./Ds_inference/third_party/threadpool)

include_directories(./Ds_inference/paddle_gpu11.6/include)

# Library files

#link_directories(/usr/lib)

link_directories(./Ds_inference/third_party/install/mklml/lib)

link_directories(./Ds_inference/third_party/install/mkldnn/lib)

link_directories(./Ds_inference/third_party/install/glog/lib)

link_directories(./Ds_inference/opencv410/lib)

link_directories(./Ds_inference/third_party/install/protobuf/lib)

link_directories(./Ds_inference/third_party/install/gflags/lib)

link_directories(./Ds_inference/paddle_gpu11.6/lib)

aux_source_directory (src SRC_LIST)

add_executable (test ${SRC_LIST})

set(LIBRARY_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin/win)

set(output)

target_link_libraries(test

opencv_world410

#opencv_highgui opencv_core opencv_imgproc opencv_imgcodecs opencv_calib3d opencv_features2d opencv_videoio

paddle_inference mklml libiomp5md mkldnn glog gflags_static libprotobuf libcmt shlwapi

)

# Note: for testing

set (EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin)

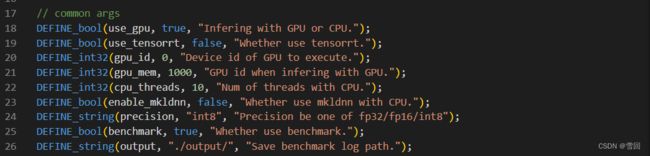

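Besides the CMakeLists file, you only need to change the parameters in args.cpp:

- Turn on use_gpu

- I set use_tensorrt to true, but then it reports this error at runtime. I don't know how to fix it, so for now I am not using this feature

- gpu_id is the index of the GPU to use; by default a single GPU is used

- Turn on benchmark to see details such as inference speed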

Building the exe executable

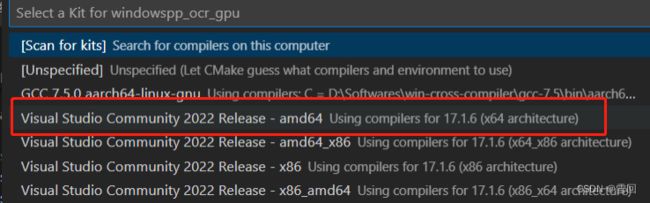

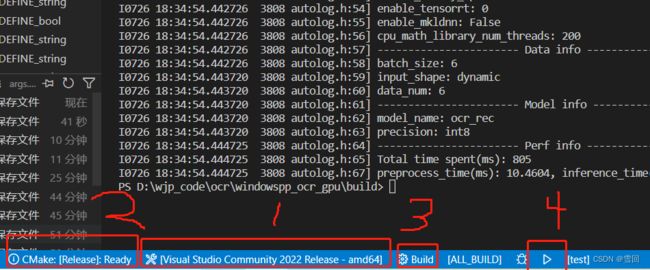

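Building with VS Code

If your system is x64, choose this one

Be especially careful to pick the right build type, Release or Debug. I did not notice this at first, and the default was Debug, which produced a lot of "library not found" errors. So I fell back to generating the exe with Visual Studio, but VS is so heavyweight and slow that this was a hassle. Only later did I realize that I simply had not selected Release mode.

- Building the executable with VS Code is simple and smooth. First run cmake .. in the build folder to generate the .sln file

- Then click build to generate the exe file

- Finally, click the arrow marked 4 to run the generated exe directly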
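One possible reason the Debug/Release choice is easy to miss: generators that produce a .sln file are multi-config, and with them CMake ignores CMAKE_BUILD_TYPE, so the configuration is chosen at build time instead. The fragment below is a minimal sketch of how a CMakeLists.txt could report this. It assumes CMake 3.9 or newer (for the GENERATOR_IS_MULTI_CONFIG property), and the variable name is_multi_config is made up for illustration.

```cmake
# GENERATOR_IS_MULTI_CONFIG requires CMake >= 3.9.
get_property(is_multi_config GLOBAL PROPERTY GENERATOR_IS_MULTI_CONFIG)

if(is_multi_config)
  # Visual Studio (.sln) generators ignore CMAKE_BUILD_TYPE;
  # the configuration is picked when building, not when configuring.
  message(STATUS "Multi-config generator, build with: cmake --build . --config Release")
else()
  # Single-config generators (e.g. Ninja, Makefiles) do use CMAKE_BUILD_TYPE.
  if(NOT CMAKE_BUILD_TYPE)
    set(CMAKE_BUILD_TYPE "Release" CACHE STRING "Debug or Release" FORCE)
  endif()
  message(STATUS "Single-config build type: ${CMAKE_BUILD_TYPE}")
endif()
```

With a multi-config generator, the Release selection has to be made in the editor's build variant picker or passed as --config Release on the command line.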

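The big pitfall

Because I had compiled the CPU version before, I simply copied the earlier project and changed a few parameters, and then got an error at runtime

I spent a long time troubleshooting and could not find a fix for the same error anywhere online. Eventually I learned that this kind of error comes from a version mismatch between the DLL and the .lib. It suddenly occurred to me that the exe folder still contained the CPU-version DLLs, while I was linking against the GPU-version .lib. After I replaced the DLLs with the GPU versions, the error went away. This also gave me a general troubleshooting approach: if you have no idea where to look, clear out the whole project and rebuild it from scratch, so that leftover files cannot interfere. That may well resolve the error.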
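To reduce the chance of stale DLLs being left next to the exe, the copy can be automated as a post-build step. The fragment below is a sketch under assumptions, not something from the original project: it assumes the GPU-build DLLs sit in the same Ds_inference folders that the CMakeLists.txt above uses as link directories, and the DLL file names and the variable GPU_RUNTIME_DLLS are illustrative and should be adjusted to whatever your downloaded package actually contains. It would go after the add_executable (test ...) line.

```cmake
# Assumed locations and names of the GPU-build DLLs; adjust to your package layout.
set(GPU_RUNTIME_DLLS
  "${PROJECT_SOURCE_DIR}/Ds_inference/paddle_gpu11.6/lib/paddle_inference.dll"
  "${PROJECT_SOURCE_DIR}/Ds_inference/third_party/install/mklml/lib/mklml.dll"
  "${PROJECT_SOURCE_DIR}/Ds_inference/third_party/install/mklml/lib/libiomp5md.dll"
  "${PROJECT_SOURCE_DIR}/Ds_inference/third_party/install/mkldnn/lib/mkldnn.dll"
)

# After every build of the `test` target, copy each DLL next to the exe.
# copy_if_different overwrites a DLL whose contents differ (e.g. a leftover CPU build).
foreach(dll ${GPU_RUNTIME_DLLS})
  add_custom_command(TARGET test POST_BUILD
    COMMAND ${CMAKE_COMMAND} -E copy_if_different "${dll}" "$<TARGET_FILE_DIR:test>"
    VERBATIM)
endforeach()
```

Because the DLLs are copied from the same folders the .lib files are linked from, the exe directory should stay in step with whichever library build the project links against.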