Qt/C++ Audio and Video Development 53: Pushing Local Camera, Desktop, File, and Surveillance Camera Streams

I. Preface

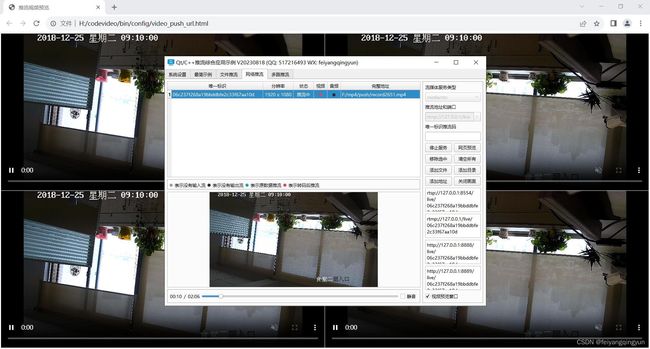

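This stream-pushing program was originally designed to push video files only. Support was added later for surveillance cameras (which are simply RTSP streams), internet radio and video streams (typically addresses that start with rtmp, or that start with http and end in m3u8), local cameras (USB cameras, built-in laptop cameras, and so on), and the desktop (capturing the screen of the machine the program runs on). These sources fall into three categories. The first is video files, both local and network files; what sets them apart is that they have a duration. The second is live streams: RTSP surveillance cameras, RTMP internet radio, live m3u8 video, and so on. The third is local capture devices: the local camera and the local desktop. Each category is handled by its own shared code path. The categories differ mainly in the open stage; the capture, decode, and push stages that follow use exactly the same code.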
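
The three categories can be told apart from the source address before anything is opened. The following is a minimal sketch of that idea, not the program's actual logic: `SourceKind` and `classifySource` are names invented here, and the `camera:` and `desktop:` prefixes are hypothetical placeholders for however the real program names local devices.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>

// The three source categories described in the text.
enum class SourceKind { File, LiveStream, LocalDevice };

// True if text starts with prefix.
static bool startsWith(const std::string &text, const std::string &prefix)
{
    return text.compare(0, prefix.size(), prefix) == 0;
}

// True if text ends with suffix.
static bool endsWith(const std::string &text, const std::string &suffix)
{
    return text.size() >= suffix.size() &&
           text.compare(text.size() - suffix.size(), suffix.size(), suffix) == 0;
}

// Classify a source address by its spelling alone.
// "camera:" and "desktop:" are hypothetical prefixes used only in this sketch.
SourceKind classifySource(std::string url)
{
    // Compare case-insensitively by lowering the whole address first.
    std::transform(url.begin(), url.end(), url.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });

    if (startsWith(url, "camera:") || startsWith(url, "desktop:")) {
        return SourceKind::LocalDevice;
    }
    if (startsWith(url, "rtsp://") || startsWith(url, "rtmp://")) {
        return SourceKind::LiveStream;
    }
    bool isHttp = startsWith(url, "http://") || startsWith(url, "https://");
    if (isHttp && endsWith(url, ".m3u8")) {
        return SourceKind::LiveStream;
    }
    // Everything else, including http links to mp4 files, is treated as a file.
    return SourceKind::File;
}
```

A real implementation would probably confirm the guess after opening the source, for example by checking whether the container reports a duration, since the text defines files as the sources that have one.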

II. Screenshots

III. Downloads

- China mirror: https://gitee.com/feiyangqingyun

- International site: https://github.com/feiyangqingyun

- Author's portfolio: https://blog.csdn.net/feiyangqingyun/article/details/97565652

- Demo download: https://pan.baidu.com/s/1d7TH_GEYl5nOecuNlWJJ7g (extraction code: 01jf, file name: bin_video_push).

IV. Features

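- Supports all kinds of local and network video files.

- Supports network video streams and IP cameras over RTSP, RTMP, and HTTP.

- Pushes local camera devices, with configurable resolution and frame rate.

- Pushes the local desktop, with configurable screen region and frame rate.

- Starts a media server automatically. The default is mediamtx (formerly rtsp-simple-server); srs, EasyDarwin, LiveQing, ZLMediaKit, and others can be used instead.

- The previewed video file can be switched in real time and its playback position changed; the push follows the playback position.

- The clarity and quality of the pushed stream are adjustable.

- Files, directories, and addresses can be added dynamically.

- Video files are pushed in a loop automatically; if the source is a live stream, it is reconnected automatically after it drops.

- Network streams reconnect automatically and resume pushing once the connection is back.

- Network streams are pushed with very low latency, roughly 100 ms.

- Very low CPU usage: the author reports that pushing 4 main-stream channels takes only about 0.2% CPU. In theory an ordinary PC can push 100 channels comfortably; the main bottleneck is the network.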

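- Pushes to either RTSP or RTMP. The pushed stream can then be accessed over RTSP, RTMP, HLS, or WebRTC, so the live picture can be opened directly in a browser.

- Can push to a public server, so that phones, computers, tablets, and other devices can play the stream.

- Each push can be given a unique identifier by hand (convenient for pulling, and users need not remember complex addresses); if none is given, a hash value is generated randomly according to a policy.

- Generates a test web page automatically. Opening it shows the live result, laid out in a grid that matches the number of streams.

- While pushing, you can switch between push items in the table to preview the video being pushed in real time, and change the playback position of video files.

- Audio and video are pushed in sync. Streams that are already H264/H265/AAC are pushed as original data; anything else is transcoded automatically before pushing (which uses some CPU).

- Three transcoding policies are supported: automatic (original data when it qualifies, transcoding when it does not), files only (video from file sources is transcoded), and transcode everything.

- The table shows each push's resolution and audio/video data state in real time: gray means no input stream, black means no output stream, green means original data is being pushed, and red means transcoded data is being pushed.

- Reconnects automatically to both the video source and the media server. Once started, the push address and the source address are both retried continuously, and capture and pushing resume as soon as they recover.

- Includes a loop-push example in which one video source is pushed to several media servers at once, for example opening one video and pushing it to Douyin, Kuaishou, and Bilibili simultaneously. It can be used for pushing recorded broadcasts with playlist looping.

- Generates the matching RTSP/RTMP/HLS/FLV/WS-FLV/WebRTC addresses for the media server type in use; users can copy an address straight into a player or a web page to preview it.

- The video encoding format can be automatic (an H264 source stays H264, an H265 source stays H265), convert to H264 (forced), or convert to H265 (forced).

- Supports any version of Qt4/Qt5/Qt6 and any system (Windows, Linux, macOS, Android, embedded Linux, and so on).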
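
The transcoding policies and the table colors in the list above amount to two small decision functions. The sketch below is one way to write them under stated assumptions: all names (`TranscodePolicy`, `needsTranscode`, `statusColor`) are invented here, codecs are identified by plain strings, and the "files only" policy is assumed to fall back to the automatic rule for sources that are not files.

```cpp
#include <cassert>
#include <string>

// The three transcoding policies from the feature list.
enum class TranscodePolicy { Auto, FilesOnly, All };

// True for the codecs that the text says are pushed without re-encoding.
bool isPassThroughCodec(const std::string &codec)
{
    return codec == "h264" || codec == "hevc" || codec == "aac";
}

// Decide whether one stream has to be transcoded before pushing.
// Assumption: under FilesOnly, non-file sources fall back to the Auto rule.
bool needsTranscode(TranscodePolicy policy, bool sourceIsFile, const std::string &codec)
{
    switch (policy) {
    case TranscodePolicy::All:
        return true;
    case TranscodePolicy::FilesOnly:
        return sourceIsFile || !isPassThroughCodec(codec);
    case TranscodePolicy::Auto:
        break;
    }
    return !isPassThroughCodec(codec);
}

// Table color for one push entry, following the color scheme in the list.
std::string statusColor(bool hasInput, bool hasOutput, bool transcoded)
{
    if (!hasInput) {
        return "gray";
    }
    if (!hasOutput) {
        return "black";
    }
    return transcoded ? "red" : "green";
}
```

For example, `needsTranscode(TranscodePolicy::Auto, false, "mpeg4")` is true, and an entry with input, output, and transcoding active is shown in red.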

V. Code

    bool FFmpegSave::initVideoCtx()
    {
        //Nothing to do if video encoding is disabled or video is not needed
        if (!encodeVideo || !needVideo) {
            return true;
        }

        //Find the video encoder (in automatic mode an H265 source is encoded with HEVC)
        //Start from NULL so that an unexpected videoFormat value is caught by the check below
        AVCodecx *videoCodec = NULL;
        if (videoFormat == 0) {
            AVCodecID codecID = FFmpegHelper::getCodecId(videoStreamIn);
            if (codecID == AV_CODEC_ID_HEVC) {
                videoCodec = avcodec_find_encoder(AV_CODEC_ID_HEVC);
            } else {
                videoCodec = avcodec_find_encoder(AV_CODEC_ID_H264);
            }
        } else if (videoFormat == 1) {
            videoCodec = avcodec_find_encoder(AV_CODEC_ID_H264);
        } else if (videoFormat == 2) {
            videoCodec = avcodec_find_encoder(AV_CODEC_ID_HEVC);
        }

        //RTMP pushing currently supports H264 only
        if (fileName.startsWith("rtmp://")) {
            videoCodec = avcodec_find_encoder(AV_CODEC_ID_H264);
        }

        if (!videoCodec) {
            debug(0, "video encode", "avcodec_find_encoder");
            return false;
        }

        //Create the video encoder context
        videoCodecCtx = avcodec_alloc_context3(videoCodec);
        if (!videoCodecCtx) {
            debug(0, "video encode", "avcodec_alloc_context3");
            return false;
        }

        //AVCodecContext fields: https://blog.csdn.net/weixin_44517656/article/details/109707539
        //The scale factor lets a fractional frame rate survive the conversion to the integer num/den fields
        int ratio = 1000000;
        videoCodecCtx->time_base.num = 1 * ratio;
        videoCodecCtx->time_base.den = frameRate * ratio;
        videoCodecCtx->framerate.num = frameRate * ratio;
        videoCodecCtx->framerate.den = 1 * ratio;
        //The form below requires a C++11 compiler
        //videoCodecCtx->time_base = {1, frameRate};
        //videoCodecCtx->framerate = {frameRate, 1};

        //Parameter notes: https://blog.csdn.net/qq_40179458/article/details/110449653
        //Large resolutions need the settings below (otherwise 32-bit builds of the library fail to encode with: Generic error in an external library)
        if (videoWidth >= 3840 || videoHeight >= 2160) {
            videoCodecCtx->qmin = 10;
            videoCodecCtx->qmax = 51;
            videoCodecCtx->me_range = 16;
            videoCodecCtx->max_qdiff = 4;
            videoCodecCtx->qcompress = 0.6;
        }

        //Use the target size when scaling is requested
        int width = videoWidth;
        int height = videoHeight;
        if (encodeVideoScale != "1") {
            QStringList sizes = WidgetHelper::getSizes(encodeVideoScale);
            if (sizes.count() == 2) {
                width = sizes.at(0).toInt();
                height = sizes.at(1).toInt();
            } else {
                float scale = encodeVideoScale.toFloat();
                width = videoWidth * scale;
                height = videoHeight * scale;
            }

            //Keep both dimensions even, since YUV420P subsamples chroma by two in each direction
            width = width / 2 * 2;
            height = height / 2 * 2;
        }

        //Set the encoder parameters (lower the bit rate for smaller files at lower quality)
        videoCodecCtx->bit_rate = FFmpegHelper::getBitRate(width, height) * encodeVideoRatio;
        videoCodecCtx->codec_type = AVMEDIA_TYPE_VIDEO;
        videoCodecCtx->width = width;
        videoCodecCtx->height = height;
        videoCodecCtx->level = 50;
        //Number of frames between I-frames (key frames); here one per second
        videoCodecCtx->gop_size = frameRate;
        //Disable B-frames, leaving only I-frames and P-frames
        videoCodecCtx->max_b_frames = 0;
        //videoCodecCtx->bit_rate_tolerance = 1;
        videoCodecCtx->pix_fmt = AV_PIX_FMT_YUV420P;
        videoCodecCtx->profile = FF_PROFILE_H264_MAIN;
        if (saveVideoType == SaveVideoType_Mp4) {
            videoCodecCtx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
            //videoCodecCtx->flags |= (AV_CODEC_FLAG_GLOBAL_HEADER | AV_CODEC_FLAG_LOW_DELAY);
        }

        //Speed-up options: https://www.jianshu.com/p/034f5b3e7f94
        //Loading presets: https://blog.csdn.net/JineD/article/details/125304570
        //Presets (speed): ultrafast/superfast/veryfast/faster/fast/medium/slow/slower/veryslow/placebo
        //Tunes: film/animation/grain/stillimage/psnr/ssim/fastdecode/zerolatency
        //Enable zero latency (without it, recordings from local capture devices get blurrier and blurrier on playback)
        //In testing, some files need this to avoid gradual blurring, while on some systems it causes occasional stutter (under WebRTC); enable it according to actual needs
        av_opt_set(videoCodecCtx->priv_data, "tune", "zerolatency", 0);

        //Skipped for file sources (to preserve their quality)
        if (videoType > 2) {
            av_opt_set(videoCodecCtx->priv_data, "preset", "ultrafast", 0);
            //av_opt_set(videoCodecCtx->priv_data, "x265-params", "qp=20", 0);
        }

        //Open the video encoder
        int result = avcodec_open2(videoCodecCtx, videoCodec, NULL);
        if (result < 0) {
            debug(result, "video encode", "avcodec_open2");
            return false;
        }

        //Create the temporary packet used for encoding
        videoPacket = FFmpegHelper::creatPacket(NULL);

        //Set up the conversion if video scaling is enabled
        if (encodeVideoScale != "1") {
            videoFrame = av_frame_alloc();
            videoFrame->format = AV_PIX_FMT_YUV420P;
            videoFrame->width = width;
            videoFrame->height = height;

            int align = 1;
            int flags = SWS_BICUBIC;
            AVPixelFormat format = AV_PIX_FMT_YUV420P;
            int videoSize = av_image_get_buffer_size(format, width, height, align);
            videoData = (quint8 *)av_malloc(videoSize * sizeof(quint8));
            av_image_fill_arrays(videoFrame->data, videoFrame->linesize, videoData, format, width, height, align);
            videoSwsCtx = sws_getContext(videoWidth, videoHeight, format, width, height, format, flags, NULL, NULL, NULL);
        }

        debug(0, "video encode", "initialized");
        return true;
    }
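
Two decisions inside `initVideoCtx` are easy to check in isolation: which encoder is picked, and what size the encoder is given when scaling is requested. The sketch below restates both without any FFmpeg or Qt dependency. The names `VideoEncoder`, `chooseEncoder`, and `targetSize` are invented for illustration, and the `WxH` spelling of an explicit size is only an assumption about what `WidgetHelper::getSizes` accepts.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <utility>

enum class VideoEncoder { H264, HEVC };

// videoFormat: 0 = follow the source, 1 = force H264, 2 = force HEVC.
// RTMP targets always get H264, as in the listing above.
// Any other videoFormat value falls back to H264 in this sketch.
VideoEncoder chooseEncoder(int videoFormat, bool sourceIsHevc, const std::string &url)
{
    if (url.compare(0, 7, "rtmp://") == 0) {
        return VideoEncoder::H264;
    }
    if (videoFormat == 2 || (videoFormat == 0 && sourceIsHevc)) {
        return VideoEncoder::HEVC;
    }
    return VideoEncoder::H264;
}

// Compute the encoder size from a spec that is either "WxH" or a factor such as "0.5".
// The "WxH" spelling is an assumption made for this sketch.
std::pair<int, int> targetSize(int srcWidth, int srcHeight, const std::string &spec)
{
    int width = srcWidth;
    int height = srcHeight;
    std::string::size_type pos = spec.find('x');
    if (pos != std::string::npos) {
        width = std::atoi(spec.substr(0, pos).c_str());
        height = std::atoi(spec.substr(pos + 1).c_str());
    } else {
        double scale = std::atof(spec.c_str());
        width = static_cast<int>(srcWidth * scale);
        height = static_cast<int>(srcHeight * scale);
    }
    // YUV420P stores chroma at half resolution, so keep both dimensions even.
    return std::make_pair(width & ~1, height & ~1);
}
```

Halving a 1366x768 source, for instance, gives 683x384 before rounding, and the odd width is rounded down to 682 so that the chroma planes have whole-pixel dimensions.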

    //https://blog.csdn.net/irainsa/article/details/129289254
    bool FFmpegSave::initAudioCtx()
    {
        //Nothing to do if audio encoding is disabled or audio is not needed
        if (!encodeAudio || !needAudio) {
            return true;
        }

        AVCodecx *audioCodec = avcodec_find_encoder(AV_CODEC_ID_AAC);
        if (!audioCodec) {
            debug(0, "audio encode", "avcodec_find_encoder");
            return false;
        }

        //Create the audio encoder context
        audioCodecCtx = avcodec_alloc_context3(audioCodec);
        if (!audioCodecCtx) {
            debug(0, "audio encode", "avcodec_alloc_context3");
            return false;
        }

        //Set the audio encoder parameters
        audioCodecCtx->bit_rate = FFmpegHelper::getBitRate(audioStreamIn);
        audioCodecCtx->codec_type = AVMEDIA_TYPE_AUDIO;
        audioCodecCtx->sample_rate = sampleRate;
        //Derive the layout from the channel count so that the two fields always agree
        audioCodecCtx->channel_layout = av_get_default_channel_layout(channelCount);
        audioCodecCtx->channels = channelCount;
        //audioCodecCtx->profile = FF_PROFILE_AAC_MAIN;
        audioCodecCtx->sample_fmt = AV_SAMPLE_FMT_FLTP;
        audioCodecCtx->strict_std_compliance = FF_COMPLIANCE_EXPERIMENTAL;
        if (saveVideoType == SaveVideoType_Mp4) {
            audioCodecCtx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
        }

        //Open the audio encoder
        int result = avcodec_open2(audioCodecCtx, audioCodec, NULL);
        if (result < 0) {
            debug(result, "audio encode", "avcodec_open2");
            return false;
        }

        //Create the temporary packet used for encoding
        audioPacket = FFmpegHelper::creatPacket(NULL);
        debug(0, "audio encode", "initialized");
        return true;
    }
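
The audio encoder context carries both a channel count and a channel layout, and the two have to describe the same number of channels. The sketch below shows what that agreement means at the bit level. It rebuilds the mono and stereo masks from the speaker bit values FFmpeg uses (front left 0x1, front right 0x2, front center 0x4) so that it compiles without FFmpeg headers; the constant and function names are invented here.

```cpp
#include <cassert>
#include <cstdint>

// Speaker bits with the same values FFmpeg uses for AV_CH_FRONT_LEFT,
// AV_CH_FRONT_RIGHT and AV_CH_FRONT_CENTER; redefined here so the sketch
// builds without FFmpeg headers.
const std::uint64_t kFrontLeft = 0x1;
const std::uint64_t kFrontRight = 0x2;
const std::uint64_t kFrontCenter = 0x4;
const std::uint64_t kLayoutMono = kFrontCenter;
const std::uint64_t kLayoutStereo = kFrontLeft | kFrontRight;

// Number of channels described by a layout mask (one bit per speaker).
int channelsInLayout(std::uint64_t layout)
{
    int count = 0;
    while (layout != 0) {
        layout &= layout - 1; // clear the lowest set bit
        ++count;
    }
    return count;
}

// Layout for the two channel counts this sketch covers; 0 means unknown.
std::uint64_t layoutForChannels(int channels)
{
    if (channels == 1) {
        return kLayoutMono;
    }
    if (channels == 2) {
        return kLayoutStereo;
    }
    return 0;
}

// True when the mask and the count describe the same number of channels.
bool layoutMatches(std::uint64_t layout, int channels)
{
    return channelsInLayout(layout) == channels;
}
```

With these definitions a stereo mask paired with a count of 1 is reported as a mismatch, which is the situation a mono source would create if the layout were fixed to stereo.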

    bool FFmpegSave::initStream()
    {
        AVDictionary *options = NULL;
        QByteArray fileData = fileName.toUtf8();
        const char *url = fileData.data();

        //The output can be a file or a push target (the container format differs accordingly)
        const char *format = "mp4";
        if (videoIndexIn < 0 && audioCodecName == "mp3") {
            format = "mp3";
        }
        if (fileName.startsWith("rtmp://")) {
            format = "flv";
        } else if (fileName.startsWith("rtsp://")) {
            format = "rtsp";
            av_dict_set(&options, "stimeout", "3000000", 0);
            av_dict_set(&options, "rtsp_transport", "tcp", 0);
        }

        //Enable encryption if a key is present
        QByteArray cryptoKey = this->property("cryptoKey").toByteArray();
        if (!cryptoKey.isEmpty()) {
            av_dict_set(&options, "encryption_scheme", "cenc-aes-ctr", 0);
            av_dict_set(&options, "encryption_key", cryptoKey.constData(), 0);
            av_dict_set(&options, "encryption_kid", cryptoKey.constData(), 0);
        }

        //Allocate the output format context (RTSP pushing fails if the trailing url argument is omitted)
        int result = avformat_alloc_output_context2(&formatCtx, NULL, format, url);
        if (result < 0) {
            debug(result, "create format", "");
            av_dict_free(&options);
            return false;
        }

        //Create the output video stream
        if (!this->initVideoStream()) {
            goto end;
        }

        //Create the output audio stream
        if (!this->initAudioStream()) {
            goto end;
        }

        //Open the output file
        if (!(formatCtx->oformat->flags & AVFMT_NOFILE)) {
            result = avio_open(&formatCtx->pb, url, AVIO_FLAG_WRITE);
            if (result < 0) {
                debug(result, "open output", "");
                goto end;
            }
        }

        //Write the file header, then free whatever options the muxer did not consume
        result = avformat_write_header(formatCtx, &options);
        av_dict_free(&options);
        if (result < 0) {
            debug(result, "write header", "");
            goto end;
        }

        return true;

    end:
        //Close, release resources, and remove the file
        av_dict_free(&options);
        this->close();
        this->deleteFile(fileName);
        return false;
    }
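
The container format in `initStream` depends only on the target address and on whether the input is audio-only MP3. A minimal restatement of that rule, written as a free function with plain `std::string` arguments, could look like this; `chooseOutputFormat` and `hasVideoInput` are names made up for the sketch (`hasVideoInput` stands for `videoIndexIn >= 0`).

```cpp
#include <cassert>
#include <string>

// Pick the muxer name from the target address, following the rule in initStream.
// hasVideoInput stands for "videoIndexIn >= 0" in the listing above.
std::string chooseOutputFormat(const std::string &url, bool hasVideoInput,
                               const std::string &audioCodecName)
{
    // Default for saving to a file.
    std::string format = "mp4";

    // Audio-only MP3 input is kept as MP3.
    if (!hasVideoInput && audioCodecName == "mp3") {
        format = "mp3";
    }

    // Push targets override the file format: RTMP carries FLV, RTSP uses its own muxer.
    if (url.compare(0, 7, "rtmp://") == 0) {
        format = "flv";
    } else if (url.compare(0, 7, "rtsp://") == 0) {
        format = "rtsp";
    }
    return format;
}
```

Note that the address check comes last, so an audio-only MP3 source pushed to an RTMP address still ends up in an FLV container.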

    bool FFmpegSave::initVideoStream()
    {
        if (needVideo) {
            videoIndexOut = 0;
            AVStream *stream = avformat_new_stream(formatCtx, NULL);
            if (!stream) {
                return false;
            }

            //Set the rotation angle (data that is not re-encoded keeps the source's rotation metadata; re-encoded data is already rotated upright)
            if (!encodeVideo) {
                FFmpegHelper::setRotate(stream, rotate);
            }

            //Copy the codec parameters (from the source stream when not encoding, from the configured encoder when encoding)
            int result = -1;
            if (encodeVideo) {
                stream->r_frame_rate = videoCodecCtx->framerate;
                result = FFmpegHelper::copyContext(videoCodecCtx, stream, true);
            } else {
                result = FFmpegHelper::copyContext(videoStreamIn, stream);
            }

            if (result < 0) {
                debug(result, "copy parameters", "");
                return false;
            }
        }

        return true;
    }

    bool FFmpegSave::initAudioStream()
    {
        if (needAudio) {
            //The audio stream takes index 1 when a video stream was created first, otherwise index 0
            audioIndexOut = (videoIndexOut == 0 ? 1 : 0);
            AVStream *stream = avformat_new_stream(formatCtx, NULL);
            if (!stream) {
                return false;
            }

            //Copy the codec parameters (from the source stream when not encoding, from the configured encoder when encoding)
            int result = -1;
            if (encodeAudio) {
                result = FFmpegHelper::copyContext(audioCodecCtx, stream, true);
            } else {
                result = FFmpegHelper::copyContext(audioStreamIn, stream);
            }

            if (result < 0) {
                debug(result, "copy parameters", "");
                return false;
            }
        }

        return true;
    }
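
`avformat_new_stream` numbers streams in creation order, which is why the two functions above can assign the output indexes by hand: the video stream is created first and gets 0, and the audio stream gets 1 or 0 depending on whether a video stream exists. A small sketch of that bookkeeping follows; `OutputIndexes` and `assignOutputIndexes` are invented names, and the initial value of -1 for an absent stream is an assumption.

```cpp
#include <cassert>

// Output stream indexes; -1 means the stream is absent (assumed initial value).
struct OutputIndexes {
    int video;
    int audio;
};

// Mirror the index assignment done by initVideoStream and initAudioStream,
// which create the video stream first and the audio stream second.
OutputIndexes assignOutputIndexes(bool needVideo, bool needAudio)
{
    OutputIndexes indexes = {-1, -1};
    if (needVideo) {
        indexes.video = 0;
    }
    if (needAudio) {
        // Audio follows video when there is one, otherwise it is the first stream.
        indexes.audio = (indexes.video == 0 ? 1 : 0);
    }
    return indexes;
}
```

For an audio-only source this yields video -1 and audio 0, so packets are never written to a stream index that was not created.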

    bool FFmpegSave::init()
    {
        //At least one input stream (video or audio) must exist
        if (fileName.isEmpty() || (!videoStreamIn && !audioStreamIn)) {
            return false;
        }

        //Check that the push address is reachable
        if (saveMode != SaveMode_File && !WidgetHelper::checkUrl(fileName, 1000)) {
            debug(0, "address unreachable", "");
            if (!this->isRunning()) {
                this->start();
            }

            return false;
        }

        //Get the media info and decide what needs encoding
        this->getMediaInfo();
        this->checkEncode();

        //FFmpeg 2 does not support pushing with re-encoding
    #if (FFMPEG_VERSION_MAJOR < 3)
        if (saveMode != SaveMode_File && (encodeVideo || encodeAudio)) {
            return false;
        }
    #endif

        //Initialize the video and audio encoders
        if (!this->initVideoCtx()) {
            return false;
        }
        if (!this->initAudioCtx()) {
            return false;
        }

        //Raw H264 data is written straight to the file, so no muxer is needed
        if (saveVideoType == SaveVideoType_H264) {
            return true;
        }

        //Initialize the output streams
        if (!this->initStream()) {
            return false;
        }

        debug(0, "stream indexes", QString("video: %1/%2 audio: %3/%4").arg(videoIndexIn).arg(videoIndexOut).arg(audioIndexIn).arg(audioIndexOut));
        return true;
    }
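
`init` is a chain of steps in which the first failure ends the whole initialization and later steps never run. The sketch below expresses that pattern generically and also reports which step failed, which can help when logging. It is an illustration of the control flow only, not the author's code; `Step` and `firstFailedStep` are invented names.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// A named initialization step that reports success or failure.
typedef std::pair<std::string, std::function<bool()> > Step;

// Run the steps in order and return the name of the first one that fails,
// or an empty string when all succeed. Steps after a failure are not run.
std::string firstFailedStep(const std::vector<Step> &steps)
{
    for (std::size_t i = 0; i < steps.size(); ++i) {
        if (!steps[i].second()) {
            return steps[i].first;
        }
    }
    return std::string();
}
```

A caller would fill the vector with entries such as `Step("initVideoCtx", ...)` and `Step("initStream", ...)` in the same order as the listing, then log the returned name when it is not empty.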

    void FFmpegSave::save()
    {
        //Take data from the queues and process it
        //The emptiness check and the take happen under the same lock, so another thread cannot change the queue in between
        //qDebug() << TIMEMS << packets.count() << frames.count();
        AVPacket *packet = NULL;
        mutex.lock();
        if (packets.count() > 0) {
            packet = packets.takeFirst();
        }
        mutex.unlock();

        if (packet) {
            this->writePacket2(packet, packet->stream_index == videoIndexIn);
            FFmpegHelper::freePacket(packet);
        }

        AVFrame *frame = NULL;
        mutex.lock();
        if (frames.count() > 0) {
            frame = frames.takeFirst();
        }
        mutex.unlock();

        if (frame) {
            //Video frames have a width; audio frames do not
            if (frame->width > 0) {
                FFmpegHelper::encode(this, videoCodecCtx, videoPacket, frame, true);
            } else {
                FFmpegHelper::encode(this, audioCodecCtx, audioPacket, frame, false);
            }

            FFmpegHelper::freeFrame(frame);
        }
    }
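
`save` drains queues that other threads fill, so the emptiness check and the removal are safest when they happen under one lock. A minimal sketch of a queue that packages this up is shown below, using only the C++ standard library instead of Qt's `QMutex` and `QList`; the class name `LockedQueue` and its methods are invented for the example.

```cpp
#include <cassert>
#include <deque>
#include <mutex>

// A small queue whose check-and-take is a single locked operation.
template <typename T>
class LockedQueue
{
public:
    // Append one item at the back.
    void push(const T &value)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        items_.push_back(value);
    }

    // Copy the oldest item into out and remove it; return false when empty.
    bool tryPop(T &out)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (items_.empty()) {
            return false;
        }
        out = items_.front();
        items_.pop_front();
        return true;
    }

private:
    std::mutex mutex_;
    std::deque<T> items_;
};
```

With such a queue the consumer loop reduces to `if (queue.tryPop(item)) { ... }`, and the lock is released before the slow work of writing or encoding starts.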