(Study notes) TensorFlow Loss Functions: Definition and Usage Explained

These are only my study notes and are not meant to serve as a reference.

Learning source: http://c.biancheng.net/view/1903.html

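Regression defines a loss function (also called an objective function), and the goal is to find the coefficients that minimize this loss.

The notes below cover how to define a loss function in TensorFlow and how to choose one that fits the problem.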

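Declaring a loss function requires defining the coefficients as variables and the dataset as placeholders. The learning rate can be constant or can change during training, and a regularization constant may also be used.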
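To illustrate what "finding the coefficients that minimize the loss" means, here is a minimal sketch in plain Python rather than TensorFlow. It assumes a noise-free toy dataset generated from y = 2x + 1 and minimizes the mean squared error of y_hat = w1*x + w0 by batch gradient descent with a constant learning rate. The data, the learning rate of 0.05 and the 2000 steps are illustrative choices, not values from the source.

```python
def mse(w0, w1, xs, ys):
    """Mean squared error of the model y_hat = w1 * x + w0."""
    return sum((y - (w1 * x + w0)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_linear(xs, ys, learning_rate=0.05, steps=2000):
    """Minimize the MSE with plain batch gradient descent (constant learning rate)."""
    w0, w1 = 0.0, 0.0  # coefficients start at 0, as in the TensorFlow code
    m = len(xs)
    for _ in range(steps):
        # residuals r_i = y_i - y_hat_i
        residuals = [y - (w1 * x + w0) for x, y in zip(xs, ys)]
        # dL/dw0 = -2/m * sum(r_i), dL/dw1 = -2/m * sum(r_i * x_i)
        grad_w0 = -2.0 / m * sum(residuals)
        grad_w1 = -2.0 / m * sum(r * x for r, x in zip(residuals, xs))
        w0 -= learning_rate * grad_w0
        w1 -= learning_rate * grad_w1
    return w0, w1


# Illustrative data generated from y = 2x + 1 (no noise)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w0, w1 = fit_linear(xs, ys)
print(round(w0, 3), round(w1, 3))  # → 1.0 2.0
```

With a changing learning rate, `learning_rate` would simply be recomputed on each step. In TensorFlow, an optimizer derives these gradients automatically from the `loss` tensor, so only the loss itself has to be written by hand.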

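In the code below, m is the number of samples, n the number of features, and P the number of classes. These global parameters should be defined before the rest of the code: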

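```python
# Assumption: TensorFlow 1.x-style graph code. Under TensorFlow 2.x, the
# tf.compat.v1 module still provides tf.placeholder, tf.random_normal, etc.
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

m = 1000  # number of samples
n = 15    # number of features
P = 2     # number of classes
```

In standard (simple) linear regression, there is only one input variable and one output variable: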

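```python
# Placeholders for the training data
X = tf.placeholder(tf.float32, name='X')
Y = tf.placeholder(tf.float32, name='Y')

# Variables for the coefficients, initialized to 0
w0 = tf.Variable(0.0)
w1 = tf.Variable(0.0)

# The linear regression model
Y_hat = X * w1 + w0

# Loss function: squared error
loss = tf.square(Y - Y_hat, name='loss')
```

In multiple linear regression, there is more than one input variable but still a single output variable. The placeholder X can now be given the shape [m, n], where m is the number of samples and n is the number of features: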

# Placeholder for the Training Data

X = tf.placeholder(tf.float32, name='X',shape = [m,n])

Y = tf.placeholder(tf.float32, name = 'Y')

# Variables for coefficients initialized to 0

w0 = tf.Variable(0.0)

w1 = tf.Variable(tf.random_normal([n,1]))

# The Linear Regression Model

Y_hat = tf.matmul(X, w1) + w0

# Multiple linear regression loss function

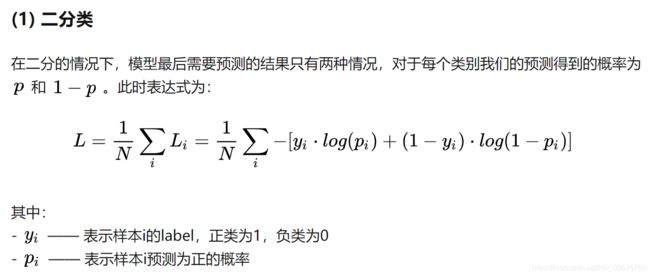

loss = tf.reduce_mean(tf.square(Y - Y_hat, name = 'loss'))在逻辑回归的情况下,损失函数定义为交叉熵。

For the definition of cross-entropy, I referred to:

https://zhuanlan.zhihu.com/p/35709485
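For reference, with logits z = (z_1, ..., z_P) for one sample and a one-hot label vector y, softmax cross-entropy first turns the logits into probabilities and then takes the cross-entropy; the `loss` tensor in the logistic regression code is its average over the m samples:

```latex
\hat{p}_k = \frac{e^{z_k}}{\sum_{j=1}^{P} e^{z_j}}, \qquad
H(y, z) = -\sum_{k=1}^{P} y_k \log \hat{p}_k, \qquad
\text{loss} = \frac{1}{m} \sum_{i=1}^{m} H\bigl(y^{(i)}, z^{(i)}\bigr)
```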

The output dimension equals the number of classes in the training dataset, where P is the number of classes:

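```python
# Placeholders for the training data (Y holds one-hot labels)
X = tf.placeholder(tf.float32, name='X', shape=[m, n])
Y = tf.placeholder(tf.float32, name='Y', shape=[m, P])

# Bias initialized to 0, weights drawn from a normal distribution;
# w1 has shape [n, P] so that matmul(X, w1) has shape [m, P]
w0 = tf.Variable(tf.zeros([1, P]), name='bias')
w1 = tf.Variable(tf.random_normal([n, P]), name='weights')

# The logistic (softmax) regression model: Y_hat holds the unnormalized logits
Y_hat = tf.matmul(X, w1) + w0

# Loss function: softmax cross-entropy (labels and logits must be passed
# as keyword arguments), averaged over the samples
entropy = tf.nn.softmax_cross_entropy_with_logits(labels=Y, logits=Y_hat)
loss = tf.reduce_mean(entropy)
```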
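A plain-Python sketch (no TensorFlow) of what the graph above computes, using small hand-made inputs as an assumption: X has shape [m, n], the weights [n, P] and the bias [1, P], so the logits have shape [m, P], one row per sample. Subtracting the row maximum inside the log-sum-exp is a standard numerical-stability trick and an implementation choice of this sketch, not something taken from the source.

```python
import math


def matmul(a, b):
    """Multiply an [m, n] list of lists by an [n, p] list of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax_cross_entropy(labels, logits):
    """Per-sample cross-entropy between label rows and softmax(logit rows)."""
    losses = []
    for y, z in zip(labels, logits):
        z_max = max(z)  # subtract the max for numerical stability
        log_sum_exp = z_max + math.log(sum(math.exp(v - z_max) for v in z))
        # -sum_k y_k * log softmax(z)_k, where log softmax(z)_k = z_k - log_sum_exp
        losses.append(-sum(yk * (zk - log_sum_exp) for yk, zk in zip(y, z)))
    return losses


# Illustrative sizes: m = 2 samples, n = 3 features, P = 2 classes
X = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 1.0]]
w1 = [[0.5, -0.5],
      [0.0, 1.0],
      [0.25, 0.0]]   # weights, shape [n, P]
w0 = [[0.0, 0.0]]    # bias, shape [1, P], broadcast over the rows
Y = [[1.0, 0.0],
     [0.0, 1.0]]     # one-hot labels, shape [m, P]

# Logits, shape [m, P]
Y_hat = [[z + b for z, b in zip(row, w0[0])] for row in matmul(X, w1)]
entropy = softmax_cross_entropy(Y, Y_hat)
loss = sum(entropy) / len(entropy)  # plays the role of tf.reduce_mean
print(Y_hat, loss)
```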

To add L1 regularization to the loss, the code is as follows:

```python
# Placeholders for the training data (Y holds one-hot labels)
X = tf.placeholder(tf.float32, name='X', shape=[m, n])
Y = tf.placeholder(tf.float32, name='Y', shape=[m, P])

# Bias initialized to 0, weights drawn from a normal distribution
w0 = tf.Variable(tf.zeros([1, P]), name='bias')
w1 = tf.Variable(tf.random_normal([n, P]), name='weights')

# The logistic (softmax) regression model: Y_hat holds the logits
Y_hat = tf.matmul(X, w1) + w0

# Loss function: mean softmax cross-entropy
entropy = tf.nn.softmax_cross_entropy_with_logits(labels=Y, logits=Y_hat)
loss = tf.reduce_mean(entropy)

# L1 regularization: lamda * sum(|w1|) ("lambda" is a Python keyword)
lamda = tf.constant(0.8)
regularization_param = lamda * tf.reduce_sum(tf.abs(w1))

# New loss
loss += regularization_param
```
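On its own, the L1 term is λ times the sum of the absolute values of all weights. A plain-Python sketch, assuming the weights have been flattened into a list with illustrative values and using the same λ = 0.8 as above:

```python
def l1_penalty(weights, lamda=0.8):
    """lamda * sum(|w|), mirroring lamda * tf.reduce_sum(tf.abs(w1))."""
    return lamda * sum(abs(w) for w in weights)


weights = [0.5, -1.0, 0.0, 2.0]  # illustrative, flattened weights
print(l1_penalty(weights))
```

Every weight contributes in proportion to its magnitude, whatever its sign, so the penalty is zero only when all weights are zero.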

For L2 regularization, the code is as follows:

```python
# Placeholders for the training data (Y holds one-hot labels)
X = tf.placeholder(tf.float32, name='X', shape=[m, n])
Y = tf.placeholder(tf.float32, name='Y', shape=[m, P])

# Bias initialized to 0, weights drawn from a normal distribution
w0 = tf.Variable(tf.zeros([1, P]), name='bias')
w1 = tf.Variable(tf.random_normal([n, P]), name='weights')

# The logistic (softmax) regression model: Y_hat holds the logits
Y_hat = tf.matmul(X, w1) + w0

# Loss function: mean softmax cross-entropy
entropy = tf.nn.softmax_cross_entropy_with_logits(labels=Y, logits=Y_hat)
loss = tf.reduce_mean(entropy)

# L2 regularization: tf.nn.l2_loss(w1) computes sum(w1 ** 2) / 2
lamda = tf.constant(0.8)
regularization_param = lamda * tf.nn.l2_loss(w1)

# New loss
loss += regularization_param
```
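Note that `tf.nn.l2_loss` returns half the squared L2 norm (no square root), so the penalty above is λ · Σw² / 2. A plain-Python sketch of the same quantity, assuming flattened illustrative weights and λ = 0.8:

```python
def l2_penalty(weights, lamda=0.8):
    """lamda * sum(w ** 2) / 2, mirroring lamda * tf.nn.l2_loss(w1)."""
    # tf.nn.l2_loss(t) is sum(t ** 2) / 2: half the squared norm, no square root
    return lamda * sum(w * w for w in weights) / 2


weights = [0.5, -1.0, 0.0, 2.0]  # illustrative, flattened weights
print(l2_penalty(weights))
```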

For an explanation of L1 and L2 regularization, see:

https://www.bilibili.com/video/BV1Tx411j7tJ?from=search&seid=10856754709215282937
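Written out, the two regularized objectives in the code above are as follows, where H^{(i)} is the softmax cross-entropy of sample i (the `entropy` tensor), λ = 0.8 is `lamda`, and w_{jk} are the entries of `w1`; the L2 form carries a factor 1/2 because of `tf.nn.l2_loss`. Differentiating each penalty term shows the usual contrast: the L1 term pulls every nonzero weight toward zero with the same strength λ, while the L2 pull is proportional to the weight itself.

```latex
L_{\mathrm{L1}} = \frac{1}{m} \sum_{i=1}^{m} H^{(i)} + \lambda \sum_{j,k} \lvert w_{jk} \rvert,
\qquad
L_{\mathrm{L2}} = \frac{1}{m} \sum_{i=1}^{m} H^{(i)} + \frac{\lambda}{2} \sum_{j,k} w_{jk}^{2}

\frac{\partial}{\partial w_{jk}} \lambda \lvert w_{jk} \rvert = \lambda \,\operatorname{sign}(w_{jk}) \quad (w_{jk} \neq 0),
\qquad
\frac{\partial}{\partial w_{jk}} \frac{\lambda}{2} w_{jk}^{2} = \lambda \, w_{jk}
```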