Page Fault Handler Analysis (arm64, Linux 4.4)

1. do_page_fault

```c
static int __kprobes do_page_fault(unsigned long addr, unsigned int esr,
				   struct pt_regs *regs)
{
	struct task_struct *tsk;
	struct mm_struct *mm;
	int fault, sig, code;
	unsigned long vm_flags = VM_READ | VM_WRITE | VM_EXEC;
	unsigned int mm_flags = FAULT_FLAG_ALLOW_RETRY | FAULT_FLAG_KILLABLE;

	tsk = current;	// task_struct of the current task
	mm = tsk->mm;	// memory descriptor (mm_struct) of the current task

	/* Enable interrupts if they were enabled in the parent context. */
	// interrupts_enabled() tests the saved PSTATE, which holds the processor
	// mode and the interrupt mask bits of the interrupted context.
	if (interrupts_enabled(regs))
		local_irq_enable();

	/*
	 * If we're in an interrupt or have no user context, we must not take
	 * the fault.
	 */
	// faulthandler_disabled() is the macro (pagefault_disabled() || in_atomic()).
	// pagefault_disabled() is true when current->pagefault_disabled is
	// non-zero, i.e. the code asked not to handle page faults.
	// in_atomic() is true when preempt_count is non-zero: preemption is
	// disabled and the task cannot be scheduled out.
	if (faulthandler_disabled() || !mm)
		goto no_context;

	// PSTATE also tells whether the fault came from user mode (EL0)
	// rather than from kernel mode; mark user faults.
	if (user_mode(regs))
		mm_flags |= FAULT_FLAG_USER;

	// ESR_LNX_EXEC is a reserved ISS bit that the arm64 entry code sets for
	// instruction aborts, so a set bit means an instruction fetch faulted.
	// ESR_ELx_WNR ("write not read") marks a write. ESR_ELx_CM marks a fault
	// caused by a cache maintenance instruction, which also reports WnR = 1
	// without being a real write, so it is excluded.
	if (esr & ESR_LNX_EXEC) {
		vm_flags = VM_EXEC;
	} else if ((esr & ESR_ELx_WNR) && !(esr & ESR_ELx_CM)) {
		vm_flags = VM_WRITE;
		mm_flags |= FAULT_FLAG_WRITE;
	}

	/*
	 * PAN bit set implies the fault happened in kernel space, but not
	 * in the arch's user access functions.
	 */
	// PAN (Privileged Access Never) stops EL1 from touching user memory.
	// The uaccess helpers clear PAN around their accesses, so a fault taken
	// with PAN still set is a kernel bug and goes to no_context.
	if (IS_ENABLED(CONFIG_ARM64_PAN) && (regs->pstate & PSR_PAN_BIT))
		goto no_context;

	// Try to take mmap_sem for reading; 0 means the trylock failed.
	if (!down_read_trylock(&mm->mmap_sem)) {
		// Lock contended: a kernel-mode fault whose PC has no exception
		// table entry is not a legitimate user access, so go straight
		// to __do_kernel_fault() (oops or panic).
		if (!user_mode(regs) && !search_exception_tables(regs->pc))
			goto no_context;
retry:
		// User fault, or kernel uaccess with a fixup entry: block until
		// the read lock is available. Retries re-enter here because the
		// lock was already dropped when VM_FAULT_RETRY was returned.
		down_read(&mm->mmap_sem);
	} else {
		/*
		 * The above down_read_trylock() might have succeeded in which
		 * case, we'll have missed the might_sleep() from down_read().
		 */
		// The trylock succeeded; still run the might_sleep() debug check.
		might_sleep();
#ifdef CONFIG_DEBUG_VM
		if (!user_mode(regs) && !search_exception_tables(regs->pc))
			goto no_context;
#endif
	}

	// Handles the fault on the user address (also used for kernel uaccess
	// faults); analyzed below.
	fault = __do_page_fault(mm, addr, mm_flags, vm_flags, tsk);

	/*
	 * If we need to retry but a fatal signal is pending, handle the
	 * signal first. We do not need to release the mmap_sem because it
	 * would already be released in __lock_page_or_retry in mm/filemap.c.
	 */
	// A retry was requested but a fatal signal is pending: return so the
	// task acts on the signal (and dies) instead of retrying.
	if ((fault & VM_FAULT_RETRY) && fatal_signal_pending(current))
		return 0;

	perf_sw_event(PERF_COUNT_SW_PAGE_FAULTS, 1, regs, addr);	// perf accounting, not covered here

	// FAULT_FLAG_ALLOW_RETRY is set in mm_flags at initialization (it is
	// not returned by __do_page_fault()) and is cleared before the first
	// retry, so major/minor accounting happens only once per fault.
	if (mm_flags & FAULT_FLAG_ALLOW_RETRY) {
		if (fault & VM_FAULT_MAJOR) {
			tsk->maj_flt++;
			perf_sw_event(PERF_COUNT_SW_PAGE_FAULTS_MAJ, 1, regs,
				      addr);
		} else {
			tsk->min_flt++;
			perf_sw_event(PERF_COUNT_SW_PAGE_FAULTS_MIN, 1, regs,
				      addr);
		}
		if (fault & VM_FAULT_RETRY) {	// the handler asked for a retry
			/*
			 * Clear FAULT_FLAG_ALLOW_RETRY to avoid any risk of
			 * starvation.
			 */
			mm_flags &= ~FAULT_FLAG_ALLOW_RETRY;
			mm_flags |= FAULT_FLAG_TRIED;
			goto retry;
		}
	}

	up_read(&mm->mmap_sem);	// release the mmap_sem read lock

	/*
	 * Handle the "normal" case first - VM_FAULT_MAJOR / VM_FAULT_MINOR
	 */
	// None of the three error classes is set: the fault was handled.
	if (likely(!(fault & (VM_FAULT_ERROR | VM_FAULT_BADMAP |
			      VM_FAULT_BADACCESS))))
		return 0;

	/*
	 * If we are in kernel mode at this point, we have no context to
	 * handle this fault with.
	 */
	// Reaching this point means __do_page_fault() failed.
	if (!user_mode(regs))
		goto no_context;

	if (fault & VM_FAULT_OOM) {	// no memory could be allocated
		/*
		 * We ran out of memory, call the OOM killer, and return to
		 * userspace (which will retry the fault, or kill us if we got
		 * oom-killed).
		 */
		pagefault_out_of_memory();	// OOM path, not covered here
		return 0;
	}

	if (fault & VM_FAULT_SIGBUS) {	// VM_FAULT_SIGBUS: deliver SIGBUS
		/*
		 * We had some memory, but were unable to successfully fix up
		 * this page fault.
		 */
		sig = SIGBUS;
		code = BUS_ADRERR;
	} else {			// every other failure: SIGSEGV
		/*
		 * Something tried to access memory that isn't in our memory
		 * map.
		 */
		sig = SIGSEGV;
		code = fault == VM_FAULT_BADACCESS ?
			SEGV_ACCERR : SEGV_MAPERR;
	}

	__do_user_fault(tsk, addr, esr, sig, code, regs);	// analyzed below
	return 0;

no_context:
	// Kernel fault: __do_kernel_fault() first tries an exception table
	// fixup. Without one it reports the fault, oopses via die() (which
	// panics in interrupt context or with panic_on_oops) and kills the
	// current task or kernel thread with do_exit(SIGKILL). It never
	// allocates a physical page.
	__do_kernel_fault(mm, addr, esr, regs);
	return 0;
}
```
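The access-type decoding near the top of do_page_fault() can be modeled in user space. This is a minimal sketch, assuming ESR_ELx_WNR is bit 6 and ESR_ELx_CM is bit 8 of the data-abort ISS field, and taking ESR_LNX_EXEC as bit 24 (the reserved bit set by the 4.4 entry code for instruction aborts). The VM_* and FAULT_FLAG_* values and the sk_* names are placeholders for this sketch, not the kernel's definitions.

```c
#include <stdint.h>

// Assumed bit positions (see the lead-in).
#define SK_ESR_ELx_WNR   (UINT32_C(1) << 6)   // write, not read
#define SK_ESR_ELx_CM    (UINT32_C(1) << 8)   // cache maintenance instruction
#define SK_ESR_LNX_EXEC  (UINT32_C(1) << 24)  // instruction abort (Linux-reserved bit)

// Placeholder flag values, valid only inside this sketch.
#define SK_VM_READ          0x1u
#define SK_VM_WRITE         0x2u
#define SK_VM_EXEC          0x4u
#define SK_FAULT_FLAG_WRITE 0x1u

struct sk_access {
	unsigned vm_flags;  // VMA permissions that can satisfy the access
	unsigned mm_flags;  // extra fault flags passed down the fault path
};

// Mirrors the if / else-if chain in do_page_fault().
static struct sk_access sk_decode_esr(uint32_t esr)
{
	// Default is a read fault: any of read/write/exec permission is enough.
	struct sk_access a = { SK_VM_READ | SK_VM_WRITE | SK_VM_EXEC, 0 };

	if (esr & SK_ESR_LNX_EXEC) {
		a.vm_flags = SK_VM_EXEC;
	} else if ((esr & SK_ESR_ELx_WNR) && !(esr & SK_ESR_ELx_CM)) {
		// A real write; cache maintenance also sets WnR but is excluded.
		a.vm_flags = SK_VM_WRITE;
		a.mm_flags |= SK_FAULT_FLAG_WRITE;
	}
	return a;
}
```

So a fault from a cache maintenance instruction (WnR and CM both set) is treated like a read and only needs a VMA with some permission, while a genuine write needs VM_WRITE.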

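The function shows that, for a fault in user context, VM_FAULT_OOM goes to the OOM path without sending a signal; of the remaining failures, only VM_FAULT_SIGBUS produces SIGBUS, and every other failure (for example VM_FAULT_BADMAP, VM_FAULT_BADACCESS or VM_FAULT_SIGSEGV) produces SIGSEGV.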
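The tail of do_page_fault() is effectively a classifier from the fault result to an outcome. The sketch below restates that decision order; the bit values and the sk_* names are placeholders chosen for this sketch (they are not the kernel's VM_FAULT_* numbers), and signals are represented by an enum so the code needs no platform headers.

```c
// Placeholder bit values for this sketch only.
#define SK_FAULT_OOM        0x001u
#define SK_FAULT_SIGBUS     0x002u
#define SK_FAULT_SIGSEGV    0x004u
#define SK_FAULT_MAJOR      0x008u
#define SK_FAULT_BADMAP     0x100u
#define SK_FAULT_BADACCESS  0x200u
#define SK_FAULT_ERROR      (SK_FAULT_OOM | SK_FAULT_SIGBUS | SK_FAULT_SIGSEGV)

enum sk_outcome {
	SK_HANDLED,         // return 0, no signal
	SK_KERNEL_FAULT,    // goto no_context
	SK_OOM,             // pagefault_out_of_memory()
	SK_SIGBUS_ADRERR,   // SIGBUS, BUS_ADRERR
	SK_SIGSEGV_ACCERR,  // SIGSEGV, SEGV_ACCERR
	SK_SIGSEGV_MAPERR,  // SIGSEGV, SEGV_MAPERR
};

// Same order of checks as the end of do_page_fault().
static enum sk_outcome sk_classify(unsigned fault, int user_mode)
{
	if (!(fault & (SK_FAULT_ERROR | SK_FAULT_BADMAP | SK_FAULT_BADACCESS)))
		return SK_HANDLED;
	if (!user_mode)
		return SK_KERNEL_FAULT;
	if (fault & SK_FAULT_OOM)
		return SK_OOM;
	if (fault & SK_FAULT_SIGBUS)
		return SK_SIGBUS_ADRERR;
	// Exact comparison, as in the kernel code.
	return fault == SK_FAULT_BADACCESS ? SK_SIGSEGV_ACCERR : SK_SIGSEGV_MAPERR;
}
```

Note that a successful major fault (only the MAJOR bit set) still counts as handled, and a kernel-mode failure never reaches the signal branches.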

2. __do_user_fault

```c
static void __do_user_fault(struct task_struct *tsk, unsigned long addr,
			    unsigned int esr, unsigned int sig, int code,
			    struct pt_regs *regs)
{
	struct siginfo si;

	// unhandled_signal() is true if the task is init (PID 1), OR if its
	// handler for sig is SIG_IGN or SIG_DFL and it is not being ptraced.
	// If so, and the show_unhandled_signals rate limit allows it, print
	// diagnostic information.
	if (unhandled_signal(tsk, sig) && show_unhandled_signals_ratelimited()) {
		pr_info("%s[%d]: unhandled %s (%d) at 0x%08lx, esr 0x%03x\n",
			tsk->comm, task_pid_nr(tsk), fault_name(esr), sig,
			addr, esr);
		show_pte(tsk->mm, addr);
		show_regs(regs);
	}

	// Record the fault and fill in the siginfo structure.
	tsk->thread.fault_address = addr;
	tsk->thread.fault_code = esr;
	si.si_signo = sig;
	si.si_errno = 0;
	si.si_code = code;
	si.si_addr = (void __user *)addr;
	force_sig_info(sig, &si, tsk);	// deliver the signal
}
```

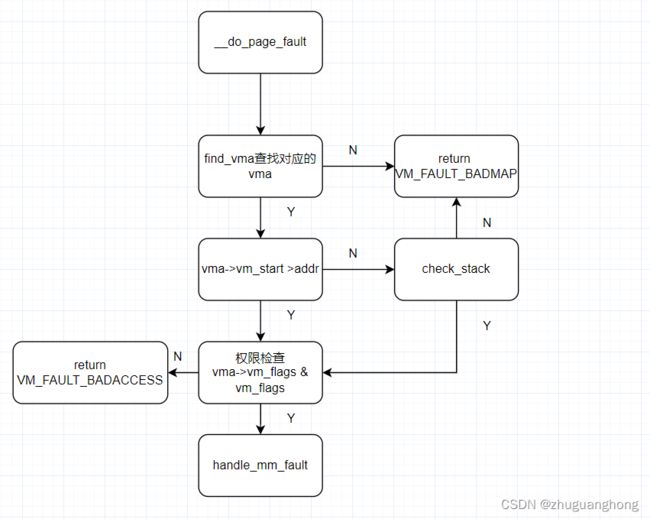
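The first condition in __do_user_fault() is an OR of two cases, not an AND. The sketch below models the decision made by the kernel's unhandled_signal() helper, assuming its 4.x logic (init always counts as unhandled; a custom handler means handled; otherwise a ptracer gets to decide). The sk_task structure and the sk_* names are hypothetical, and the rate limit is not modeled.

```c
#include <stdbool.h>

enum sk_handler { SK_SIG_DFL, SK_SIG_IGN, SK_SIG_USER };  // SK_SIG_USER: custom handler

struct sk_task {
	bool is_init;             // PID 1
	enum sk_handler handler;  // disposition of the signal being sent
	bool ptraced;             // task is being traced
};

// True means nothing in user space will handle the signal,
// so the kernel prints the fault details.
static bool sk_unhandled_signal(const struct sk_task *t)
{
	if (t->is_init)
		return true;
	if (t->handler != SK_SIG_IGN && t->handler != SK_SIG_DFL)
		return false;
	return !t->ptraced;  // a tracer decides instead
}
```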

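3. Analysis of __do_page_fault

When analyzing this function, pay attention to the return flags that do_page_fault() checks above, in particular VM_FAULT_BADMAP and VM_FAULT_BADACCESS.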

```c
static int __do_page_fault(struct mm_struct *mm, unsigned long addr,
			   unsigned int mm_flags, unsigned long vm_flags,
			   struct task_struct *tsk)
{
	struct vm_area_struct *vma;
	int fault;

	// find_vma() returns the first VMA whose vm_end is above addr, or NULL.
	vma = find_vma(mm, addr);
	fault = VM_FAULT_BADMAP;
	if (unlikely(!vma))	// no such VMA: return VM_FAULT_BADMAP
		goto out;
	// addr lies in the gap below this VMA; it is valid only if the VMA is
	// a stack that can grow down to cover it.
	if (unlikely(vma->vm_start > addr))
		goto check_stack;

good_area:	// addr is inside a VMA: check permissions, then handle the fault
	// The VMA must allow at least one of the access types in vm_flags:
	// VM_EXEC for a fetch, VM_WRITE for a write, any of R/W/X for a read.
	// Otherwise return VM_FAULT_BADACCESS.
	if (!(vma->vm_flags & vm_flags)) {
		fault = VM_FAULT_BADACCESS;
		goto out;
	}

	return handle_mm_fault(mm, vma, addr & PAGE_MASK, mm_flags);

check_stack:
	// A downward-growing stack that expand_stack() can extend to addr is
	// handled normally; otherwise the result stays VM_FAULT_BADMAP.
	if (vma->vm_flags & VM_GROWSDOWN && !expand_stack(vma, addr))
		goto good_area;
out:
	return fault;
}
```

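This function shows two things. First, if no VMA covers the faulting virtual address (the process never mapped that address through mmap, brk or similar, so the access is illegal), the result is VM_FAULT_BADMAP. Second, if the VMA does not permit the kind of access that caused the fault, the result is VM_FAULT_BADACCESS.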
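The two outcomes can be reproduced with a toy address space. This sketch uses a hypothetical sorted array of sk_vma ranges in place of the kernel's VMA tree; sk_find_vma() follows the find_vma() contract (first VMA whose end is above addr), and "growing" the stack simply lowers its start, assuming 4 KiB pages. The real expand_stack() also performs limit and security checks that are left out here.

```c
#include <stddef.h>

#define SK_OK        0
#define SK_BADMAP    1
#define SK_BADACCESS 2

#define SK_R         0x1u
#define SK_W         0x2u
#define SK_X         0x4u
#define SK_GROWSDOWN 0x100u

struct sk_vma {
	unsigned long start, end;  // covers [start, end)
	unsigned flags;            // SK_R / SK_W / SK_X / SK_GROWSDOWN
};

// First VMA (array sorted by address) whose end is above addr.
static struct sk_vma *sk_find_vma(struct sk_vma *v, size_t n, unsigned long addr)
{
	for (size_t i = 0; i < n; i++)
		if (addr < v[i].end)
			return &v[i];
	return NULL;
}

// Same checks as __do_page_fault(); need plays the role of vm_flags.
static int sk_do_page_fault(struct sk_vma *v, size_t n, unsigned long addr,
			    unsigned need)
{
	struct sk_vma *vma = sk_find_vma(v, n, addr);

	if (!vma)
		return SK_BADMAP;
	if (vma->start > addr) {               // addr is in the gap below vma
		if (!(vma->flags & SK_GROWSDOWN))
			return SK_BADMAP;
		vma->start = addr & ~0xfffUL;      // simplified stack expansion
	}
	if (!(vma->flags & need))
		return SK_BADACCESS;
	return SK_OK;                          // kernel: handle_mm_fault()
}
```

For example, with a read/exec text VMA at 0x10000-0x20000 and a growsdown stack at 0x7f000-0x80000, a write into the text returns SK_BADACCESS, a write just below the stack extends it, and an address above both returns SK_BADMAP.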

4. handle_mm_fault

```c
int handle_mm_fault(struct mm_struct *mm, struct vm_area_struct *vma,
		    unsigned long address, unsigned int flags)
{
	int ret;

	__set_current_state(TASK_RUNNING);	// set the current task state to TASK_RUNNING

	count_vm_event(PGFAULT);		// bump the global pgfault counter
	mem_cgroup_count_vm_event(mm, PGFAULT);	// bump the memcg pgfault event counter

	// For user faults, enable memcg OOM handling for this task: a memcg
	// OOM hit during the fault is recorded and dealt with after the fault
	// instead of inside the allocation path.
	if (flags & FAULT_FLAG_USER)
		mem_cgroup_oom_enable();

	ret = __handle_mm_fault(mm, vma, address, flags);	// walks and fills the page tables; analyzed below

	if (flags & FAULT_FLAG_USER) {
		mem_cgroup_oom_disable();	// turn it off again
		// The task may have entered a memcg OOM state during this fault;
		// if the fault nevertheless did not end with VM_FAULT_OOM, just
		// clean up that OOM state without killing anything.
		if (task_in_memcg_oom(current) && !(ret & VM_FAULT_OOM))
			mem_cgroup_oom_synchronize(false);
	}

	return ret;
}
```

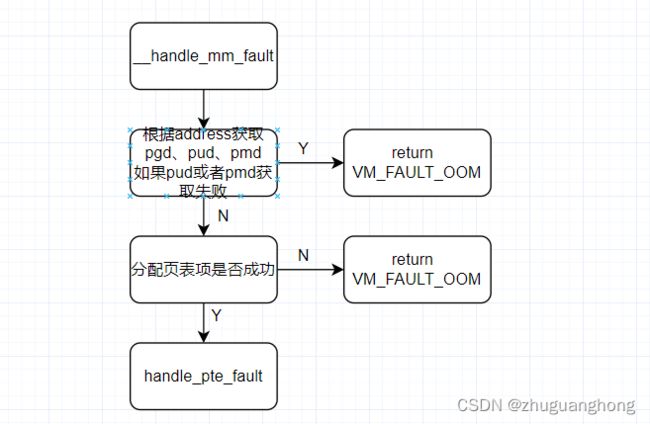

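5. __handle_mm_fault

This function walks the page table levels for the faulting address, allocating any missing intermediate tables, and then hands the final PTE to handle_pte_fault(), which fills it in.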

```c
static int __handle_mm_fault(struct mm_struct *mm, struct vm_area_struct *vma,
			     unsigned long address, unsigned int flags)
{
	pgd_t *pgd;
	pud_t *pud;
	pmd_t *pmd;
	pte_t *pte;

	// hugetlbfs VMAs use their own fault handler (not covered here).
	if (unlikely(is_vm_hugetlb_page(vma)))
		return hugetlb_fault(mm, vma, address, flags);

	pgd = pgd_offset(mm, address);		// PGD entry for address
	pud = pud_alloc(mm, pgd, address);	// PUD entry, allocating the PUD table if missing
	if (!pud)
		return VM_FAULT_OOM;		// the PUD table could not be allocated
	pmd = pmd_alloc(mm, pud, address);	// PMD entry, allocating the PMD table if missing
	if (!pmd)
		return VM_FAULT_OOM;		// allocation failed
	// Empty PMD in a VMA eligible for transparent huge pages: try to map
	// a whole huge page at the PMD level.
	if (pmd_none(*pmd) && transparent_hugepage_enabled(vma)) {
		int ret = create_huge_pmd(mm, vma, address, pmd, flags);
		// Unless the THP path asks to fall back to normal pages,
		// return its result (success or error) directly.
		if (!(ret & VM_FAULT_FALLBACK))
			return ret;
	} else {
		pmd_t orig_pmd = *pmd;
		int ret;

		barrier();
		if (pmd_trans_huge(orig_pmd)) {	// PMD maps a transparent huge page (not covered in detail)
			unsigned int dirty = flags & FAULT_FLAG_WRITE;

			/*
			 * If the pmd is splitting, return and retry the
			 * the fault. Alternative: wait until the split
			 * is done, and goto retry.
			 */
			if (pmd_trans_splitting(orig_pmd))
				return 0;

			if (pmd_protnone(orig_pmd))
				return do_huge_pmd_numa_page(mm, vma, address,
							     orig_pmd, pmd);

			if (dirty && !pmd_write(orig_pmd)) {
				ret = wp_huge_pmd(mm, vma, address, pmd,
						  orig_pmd, flags);
				if (!(ret & VM_FAULT_FALLBACK))
					return ret;
			} else {
				huge_pmd_set_accessed(mm, vma, address, pmd,
						      orig_pmd, dirty);
				return 0;
			}
		}
	}

	// PMD still empty: allocate a PTE table; failure means VM_FAULT_OOM.
	if (unlikely(pmd_none(*pmd)) &&
	    unlikely(__pte_alloc(mm, vma, pmd, address)))
		return VM_FAULT_OOM;
	// A huge PMD appeared under us in the meantime: return 0 and let the
	// access fault again later if needed.
	if (unlikely(pmd_trans_huge(*pmd)))
		return 0;
	pte = pte_offset_map(pmd, address);	// map the PTE for the faulting address
	return handle_pte_fault(mm, vma, address, pte, pmd, flags);	// fill in the missing page
}
```
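To make the pgd/pud/pmd/pte walk concrete, the sketch below splits a virtual address into the index used at each level. It assumes a 4 KiB granule with 512 entries (9 index bits) per table and 4 levels, i.e. a 48-bit VA; arm64 in 4.4 also supports other configurations (for example 39-bit VA with 3 levels, or 64 KiB pages) where the shifts differ. The sk_* names are hypothetical.

```c
#include <stdint.h>

// Assumed layout: 4 KiB pages, 512 entries per table, 4 levels.
#define SK_PAGE_SHIFT  12
#define SK_PTRS_SHIFT  9                                      // log2(512)
#define SK_PMD_SHIFT   (SK_PAGE_SHIFT + SK_PTRS_SHIFT)        // 21
#define SK_PUD_SHIFT   (SK_PMD_SHIFT + SK_PTRS_SHIFT)         // 30
#define SK_PGD_SHIFT   (SK_PUD_SHIFT + SK_PTRS_SHIFT)         // 39
#define SK_IDX_MASK    ((UINT64_C(1) << SK_PTRS_SHIFT) - 1)   // 0x1ff

struct sk_walk {
	unsigned pgd, pud, pmd, pte;  // index into each table level
	unsigned offset;              // byte offset inside the 4 KiB page
};

static struct sk_walk sk_split_va(uint64_t va)
{
	struct sk_walk w;

	w.pgd = (unsigned)((va >> SK_PGD_SHIFT) & SK_IDX_MASK);
	w.pud = (unsigned)((va >> SK_PUD_SHIFT) & SK_IDX_MASK);
	w.pmd = (unsigned)((va >> SK_PMD_SHIFT) & SK_IDX_MASK);
	w.pte = (unsigned)((va >> SK_PAGE_SHIFT) & SK_IDX_MASK);
	w.offset = (unsigned)(va & ((UINT64_C(1) << SK_PAGE_SHIFT) - 1));
	return w;
}
```

Under these assumptions, address 0x40403045 uses PGD entry 0, PUD entry 1, PMD entry 2 and PTE entry 3, at byte 0x45 of its page. One PMD entry then covers 2^21 bytes (2 MiB), which is the size a transparent huge page maps at the PMD level.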

6. handle_pte_fault

```c
static int handle_pte_fault(struct mm_struct *mm,
			    struct vm_area_struct *vma, unsigned long address,
			    pte_t *pte, pmd_t *pmd, unsigned int flags)
{
	pte_t entry;
	spinlock_t *ptl;

	entry = *pte;	// snapshot of the PTE value
	barrier();
	if (!pte_present(entry)) {	// the page is not present in memory
		if (pte_none(entry)) {	// empty PTE: never populated
			if (vma_is_anonymous(vma))	// anonymous VMA
				return do_anonymous_page(mm, vma, address,
							 pte, pmd, flags);
			else				// file-backed VMA
				return do_fault(mm, vma, address, pte, pmd,
						flags, entry);
		}
		// Non-empty but not present: the PTE holds a swap entry (the
		// page was swapped out, or is under migration, etc.).
		return do_swap_page(mm, vma, address,
				    pte, pmd, flags, entry);
	}

	// From here on the page is present.
	// pte_protnone() returns 0 on arm64 here, so this branch can be ignored.
	if (pte_protnone(entry))
		return do_numa_page(mm, vma, address, entry, pte, pmd);

	ptl = pte_lockptr(mm, pmd);	// get the page table spinlock
	spin_lock(ptl);			// and take it
	// Another CPU may have changed the PTE before the lock was taken.
	// pte_same() compares it with the snapshot; if it changed, the fault
	// was already handled elsewhere and there is nothing left to do.
	if (unlikely(!pte_same(*pte, entry)))
		goto unlock;
	// FAULT_FLAG_WRITE was set in do_page_fault() when the ESR showed
	// that a write triggered the fault.
	if (flags & FAULT_FLAG_WRITE) {
		// Write to a read-only PTE: copy-on-write through do_wp_page(),
		// typically after fork().
		if (!pte_write(entry))
			return do_wp_page(mm, vma, address,
					  pte, pmd, ptl, entry);
		entry = pte_mkdirty(entry);	// already writable: just mark it dirty
	}
	entry = pte_mkyoung(entry);	// mark the entry as recently accessed
	// Write the updated access/dirty bits into the PTE if they changed.
	if (ptep_set_access_flags(vma, address, pte, entry, flags & FAULT_FLAG_WRITE)) {
		update_mmu_cache(vma, address, pte);
	} else {
		/*
		 * This is needed only for protection faults but the arch code
		 * is not yet telling us if this is a protection fault or not.
		 * This still avoids useless tlb flushes for .text page faults
		 * with threads.
		 */
		if (flags & FAULT_FLAG_WRITE)
			flush_tlb_fix_spurious_fault(vma, address);	// flush a stale TLB entry behind a spurious fault
	}
unlock:
	pte_unmap_unlock(pte, ptl);
	return 0;
}
```
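The branch structure of handle_pte_fault() reduces to a small dispatch table. The sketch below names the handler chosen for each PTE state, leaving out the NUMA branch and the pte_same() race check; the sk_* enums and the function are hypothetical.

```c
#include <stdbool.h>

enum sk_pte_state {
	SK_PTE_NONE,     // pte_none(): never populated
	SK_PTE_SWAP,     // non-empty but not present (swap/migration entry)
	SK_PTE_PRESENT,  // pte_present()
};

enum sk_path {
	SK_DO_ANONYMOUS_PAGE,
	SK_DO_FAULT,
	SK_DO_SWAP_PAGE,
	SK_DO_WP_PAGE,
	SK_UPDATE_FLAGS,  // only set the young (and dirty) bits
};

// Which path handle_pte_fault() takes for a given PTE and access.
static enum sk_path sk_pte_fault_path(enum sk_pte_state st, bool vma_anon,
				      bool write, bool pte_writable)
{
	if (st == SK_PTE_NONE)
		return vma_anon ? SK_DO_ANONYMOUS_PAGE : SK_DO_FAULT;
	if (st == SK_PTE_SWAP)
		return SK_DO_SWAP_PAGE;
	if (write && !pte_writable)
		return SK_DO_WP_PAGE;
	return SK_UPDATE_FLAGS;
}
```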

7. do_anonymous_page

```c
static int do_anonymous_page(struct mm_struct *mm, struct vm_area_struct *vma,
			     unsigned long address, pte_t *page_table, pmd_t *pmd,
			     unsigned int flags)
{
	struct mem_cgroup *memcg;
	struct page *page;
	spinlock_t *ptl;
	pte_t entry;

	// Drop the temporary mapping of the PTE page taken by pte_offset_map()
	// (a no-op when page tables are not in highmem).
	pte_unmap(page_table);

	/* File mapping without ->vm_ops ? */
	// A shared mapping cannot be served here: return VM_FAULT_SIGBUS.
	if (vma->vm_flags & VM_SHARED)
		return VM_FAULT_SIGBUS;

	/* Check if we need to add a guard page to the stack */
	if (check_stack_guard_page(vma, address) < 0)
		return VM_FAULT_SIGSEGV;

	/* Use the zero-page for reads */
	// Not a write fault: map the shared zero page instead of allocating.
	// mm_forbids_zeropage() always returns 0 on arm64.
	if (!(flags & FAULT_FLAG_WRITE) && !mm_forbids_zeropage(mm)) {
		// PTE pointing at the zero page, marked special
		entry = pte_mkspecial(pfn_pte(my_zero_pfn(address),
						vma->vm_page_prot));
		page_table = pte_offset_map_lock(mm, pmd, address, &ptl);	// map and lock the PTE
		if (!pte_none(*page_table))	// someone else populated it meanwhile
			goto unlock;
		/* Deliver the page fault to userland, check inside PT lock */
		// userfaultfd registered for missing pages: hand the fault to
		// user space via handle_userfault() (not covered here).
		if (userfaultfd_missing(vma)) {
			pte_unmap_unlock(page_table, ptl);
			return handle_userfault(vma, address, flags,
						VM_UFFD_MISSING);
		}
		goto setpte;
	}

	// Write fault: make sure the VMA has an anon_vma for reverse mapping.
	if (unlikely(anon_vma_prepare(vma)))
		goto oom;
	// Allocate a zero-filled, movable page (highmem allowed).
	page = alloc_zeroed_user_highpage_movable(vma, address);
	if (!page)		// allocation failed
		goto oom;

	// Charge the page to the memory cgroup of the mm.
	if (mem_cgroup_try_charge(page, mm, GFP_KERNEL, &memcg))
		goto oom_free_page;

	__SetPageUptodate(page);	// mark the page contents as valid

	entry = mk_pte(page, vma->vm_page_prot);	// build the PTE
	if (vma->vm_flags & VM_WRITE)	// the VMA allows writes:
		entry = pte_mkwrite(pte_mkdirty(entry));	// mark it dirty and writable

	page_table = pte_offset_map_lock(mm, pmd, address, &ptl);	// map and lock the PTE
	if (!pte_none(*page_table))	// already populated: free the page just allocated
		goto release;

	/* Deliver the page fault to userland, check inside PT lock */
	if (userfaultfd_missing(vma)) {
		pte_unmap_unlock(page_table, ptl);
		mem_cgroup_cancel_charge(page, memcg);
		page_cache_release(page);
		return handle_userfault(vma, address, flags,
					VM_UFFD_MISSING);
	}

	inc_mm_counter_fast(mm, MM_ANONPAGES);	// one more anonymous page
	page_add_new_anon_rmap(page, vma, address);	// set up the reverse mapping
	mem_cgroup_commit_charge(page, memcg, false);	// commit the memcg charge
	// Put the page on the active LRU list, or on the unevictable list if
	// the VMA is mlocked.
	lru_cache_add_active_or_unevictable(page, vma);
setpte:
	set_pte_at(mm, address, page_table, entry);	// install the PTE: mapping complete

	/* No need to invalidate - it was non-present before */
	update_mmu_cache(vma, address, page_table);
unlock:
	pte_unmap_unlock(page_table, ptl);	// unmap and unlock
	return 0;
release:
	mem_cgroup_cancel_charge(page, memcg);
	page_cache_release(page);
	goto unlock;
oom_free_page:
	page_cache_release(page);
oom:
	return VM_FAULT_OOM;
}
```

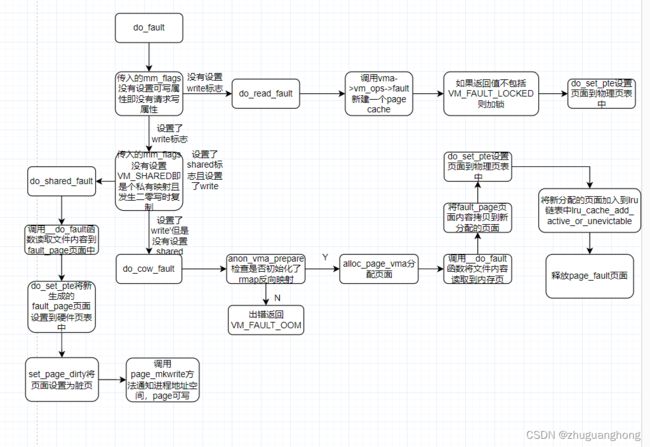
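The zero-page optimisation means the first read of an anonymous page allocates nothing, while a later write to that page takes a second fault. The sketch below counts faults and page allocations for a sequence of accesses ('r' or 'w') to one page of a fresh private, writable anonymous mapping. It is a simplified model: it follows only the do_anonymous_page() path and the do_wp_page() copy path for the zero page (whose PTE has no normal struct page), and ignores transparent huge pages, userfaultfd and access-flag faults. sk_simulate() is hypothetical.

```c
#include <stdbool.h>

struct sk_counts { int faults; int allocs; };

static struct sk_counts sk_simulate(const char *accesses)
{
	struct sk_counts c = { 0, 0 };
	bool present = false, writable = false;  // state of the single PTE

	for (const char *p = accesses; *p; p++) {
		bool write = (*p == 'w');

		if (!present) {
			// pte_none() -> do_anonymous_page()
			c.faults++;
			present = true;
			if (write) {
				c.allocs++;       // alloc_zeroed_user_highpage_movable()
				writable = true;  // pte_mkwrite(pte_mkdirty(...))
			}
			// read: zero page mapped read-only, nothing allocated
		} else if (write && !writable) {
			// present but read-only -> do_wp_page() -> wp_page_copy()
			c.faults++;
			c.allocs++;
			writable = true;
		}
		// any other access completes without a fault
	}
	return c;
}
```

For example, "rrw" costs two faults and one allocation, while "wr" costs one fault and one allocation.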

8. do_fault

```c
static int do_fault(struct mm_struct *mm, struct vm_area_struct *vma,
		    unsigned long address, pte_t *page_table, pmd_t *pmd,
		    unsigned int flags, pte_t orig_pte)
{
	// Page offset of address within the mapped file.
	pgoff_t pgoff = (((address & PAGE_MASK)
			- vma->vm_start) >> PAGE_SHIFT) + vma->vm_pgoff;

	pte_unmap(page_table);	// drop the temporary PTE mapping (the PTE itself is not cleared)
	/* The VMA was not fully populated on mmap() or missing VM_DONTEXPAND */
	if (!vma->vm_ops->fault)	// no ->fault handler: return VM_FAULT_SIGBUS
		return VM_FAULT_SIGBUS;
	if (!(flags & FAULT_FLAG_WRITE))	// read fault: map the file page; analyzed below
		return do_read_fault(mm, vma, address, pmd, pgoff, flags,
				     orig_pte);
	if (!(vma->vm_flags & VM_SHARED))	// write to a private mapping: do_cow_fault()
		return do_cow_fault(mm, vma, address, pmd, pgoff, flags,
				    orig_pte);
	return do_shared_fault(mm, vma, address, pmd, pgoff, flags, orig_pte);	// write to a shared mapping
}
```
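The pgoff computation and the three-way dispatch in do_fault() are easy to check with numbers. This sketch assumes 4 KiB pages; sk_file_pgoff() uses the same formula as the pgoff initializer above, and sk_do_fault_path() mirrors the branch order. Both names are hypothetical.

```c
#include <stdbool.h>

#define SK_PAGE_SHIFT 12UL                          // assume 4 KiB pages
#define SK_PAGE_MASK  (~((1UL << SK_PAGE_SHIFT) - 1))

// File page index backing address in a VMA mapped at vm_start
// from file page vm_pgoff.
static unsigned long sk_file_pgoff(unsigned long address,
				   unsigned long vm_start,
				   unsigned long vm_pgoff)
{
	return (((address & SK_PAGE_MASK) - vm_start) >> SK_PAGE_SHIFT) + vm_pgoff;
}

enum sk_file_path { SK_SIGBUS, SK_READ_FAULT, SK_COW_FAULT, SK_SHARED_FAULT };

static enum sk_file_path sk_do_fault_path(bool has_fault_op, bool write,
					  bool shared)
{
	if (!has_fault_op)
		return SK_SIGBUS;
	if (!write)
		return SK_READ_FAULT;
	return shared ? SK_SHARED_FAULT : SK_COW_FAULT;
}
```

For a file mapped at 0x400000 starting from file page 3 (file offset 0x3000), a fault at 0x402abc is two pages into the VMA, so it needs file page 5.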

9. do_read_fault

```c
static int do_read_fault(struct mm_struct *mm, struct vm_area_struct *vma,
			 unsigned long address, pmd_t *pmd,
			 pgoff_t pgoff, unsigned int flags, pte_t orig_pte)
{
	struct page *fault_page;
	spinlock_t *ptl;
	pte_t *pte;
	int ret = 0;

	/*
	 * Let's call ->map_pages() first and use ->fault() as fallback
	 * if page by the offset is not ready to be mapped (cold cache or
	 * something).
	 */
	// If the VMA provides ->map_pages() and fault-around covers more than
	// one page, map the surrounding pages that are already in the page
	// cache; if that populated our PTE, we are done.
	if (vma->vm_ops->map_pages && fault_around_bytes >> PAGE_SHIFT > 1) {
		pte = pte_offset_map_lock(mm, pmd, address, &ptl);
		do_fault_around(vma, address, pte, pgoff, flags);
		if (!pte_same(*pte, orig_pte))
			goto unlock_out;
		pte_unmap_unlock(pte, ptl);
	}

	// Fall back to ->fault() to obtain the page, reading it into the
	// page cache if necessary.
	ret = __do_fault(vma, address, pgoff, flags, NULL, &fault_page);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		return ret;

	pte = pte_offset_map_lock(mm, pmd, address, &ptl);
	if (unlikely(!pte_same(*pte, orig_pte))) {
		pte_unmap_unlock(pte, ptl);
		unlock_page(fault_page);
		page_cache_release(fault_page);
		return ret;
	}
	do_set_pte(vma, address, fault_page, pte, false, false);	// write the new entry into the hardware PTE
	unlock_page(fault_page);
unlock_out:
	pte_unmap_unlock(pte, ptl);
	return ret;
}
```

10. do_fault_around

```c
static void do_fault_around(struct vm_area_struct *vma, unsigned long address,
			    pte_t *pte, pgoff_t pgoff, unsigned int flags)
{
	unsigned long start_addr, nr_pages, mask;
	pgoff_t max_pgoff;
	struct vm_fault vmf;
	int off;

	// Number of pages to map around the fault: 16 with the default
	// fault_around_bytes (64 KiB) and 4 KiB pages.
	nr_pages = READ_ONCE(fault_around_bytes) >> PAGE_SHIFT;
	mask = ~(nr_pages * PAGE_SIZE - 1) & PAGE_MASK;

	// Align address down to the fault-around window, but not below the
	// start of the VMA.
	start_addr = max(address & mask, vma->vm_start);
	// Number of pages from start_addr to address; move pte and pgoff back
	// by that amount so they refer to start_addr.
	off = ((address - start_addr) >> PAGE_SHIFT) & (PTRS_PER_PTE - 1);
	pte -= off;
	pgoff -= off;

	/*
	 * max_pgoff is either end of page table or end of vma
	 * or fault_around_pages() from pgoff, depending what is nearest.
	 */
	max_pgoff = pgoff - ((start_addr >> PAGE_SHIFT) & (PTRS_PER_PTE - 1)) +
		PTRS_PER_PTE - 1;
	max_pgoff = min3(max_pgoff, vma_pages(vma) + vma->vm_pgoff - 1,
			pgoff + nr_pages - 1);

	/* Check if it makes any sense to call ->map_pages */
	// Starting at start_addr, skip PTEs that are already populated until
	// an empty one is found.
	while (!pte_none(*pte)) {
		if (++pgoff > max_pgoff)
			return;
		start_addr += PAGE_SIZE;
		if (start_addr >= vma->vm_end)
			return;
		pte++;
	}

	vmf.virtual_address = (void __user *) start_addr;
	vmf.pte = pte;
	vmf.pgoff = pgoff;
	vmf.max_pgoff = max_pgoff;
	vmf.flags = flags;
	// Map page-cache pages from the first empty PTE up to max_pgoff.
	vma->vm_ops->map_pages(vma, &vmf);
}
```
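The window arithmetic in do_fault_around() is easiest to follow with concrete numbers. The sketch below repeats only the start_addr / pgoff / max_pgoff computation (the pte_none() scan and the ->map_pages() call are left out), assuming 4 KiB pages and 512 PTEs per table. The sk_* names and the explicit VMA parameters are hypothetical stand-ins for the vm_area_struct fields.

```c
#define SK_PAGE_SHIFT   12UL
#define SK_PAGE_SIZE    (1UL << SK_PAGE_SHIFT)
#define SK_PAGE_MASK    (~(SK_PAGE_SIZE - 1))
#define SK_PTRS_PER_PTE 512UL

struct sk_window {
	unsigned long start_addr;   // first address considered
	unsigned long first_pgoff;  // file page at start_addr
	unsigned long max_pgoff;    // last file page that may be mapped
};

static unsigned long sk_min(unsigned long a, unsigned long b) { return a < b ? a : b; }
static unsigned long sk_max(unsigned long a, unsigned long b) { return a > b ? a : b; }

static struct sk_window sk_fault_around(unsigned long address, unsigned long pgoff,
					unsigned long vm_start, unsigned long vm_end,
					unsigned long vm_pgoff,
					unsigned long fault_around_bytes)
{
	unsigned long nr_pages = fault_around_bytes >> SK_PAGE_SHIFT;
	unsigned long mask = ~(nr_pages * SK_PAGE_SIZE - 1) & SK_PAGE_MASK;
	unsigned long vma_pages = (vm_end - vm_start) >> SK_PAGE_SHIFT;
	unsigned long off;
	struct sk_window w;

	w.start_addr = sk_max(address & mask, vm_start);
	off = ((address - w.start_addr) >> SK_PAGE_SHIFT) & (SK_PTRS_PER_PTE - 1);
	w.first_pgoff = pgoff - off;

	// Nearest of: end of this PTE table, end of the VMA, nr_pages.
	w.max_pgoff = w.first_pgoff
		- ((w.start_addr >> SK_PAGE_SHIFT) & (SK_PTRS_PER_PTE - 1))
		+ SK_PTRS_PER_PTE - 1;
	w.max_pgoff = sk_min(w.max_pgoff, vma_pages + vm_pgoff - 1);
	w.max_pgoff = sk_min(w.max_pgoff, w.first_pgoff + nr_pages - 1);
	return w;
}
```

With a 256-page VMA at 0x400000 (vm_pgoff 0) and a fault at 0x40a000 (file page 10), the window starts at 0x400000 and covers file pages 0 to 15. If instead the VMA is only the two pages 0x405000-0x407000 mapped from file page 7, a fault at 0x406000 gives a window starting at 0x405000 and limited by the VMA end to pages 7 and 8.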

11. __do_fault

```c
static int __do_fault(struct vm_area_struct *vma, unsigned long address,
		      pgoff_t pgoff, unsigned int flags,
		      struct page *cow_page, struct page **page)
{
	struct vm_fault vmf;
	int ret;

	// Initialize the vm_fault descriptor.
	vmf.virtual_address = (void __user *)(address & PAGE_MASK);
	vmf.pgoff = pgoff;
	vmf.flags = flags;
	vmf.page = NULL;
	vmf.cow_page = cow_page;

	ret = vma->vm_ops->fault(vma, &vmf);	// ask the VMA's ->fault handler for the missing page
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		return ret;
	// No struct page was returned, yet no error: not an allocation
	// failure (see the i_mmap_lock branch in do_cow_fault()).
	if (!vmf.page)
		goto out;

	if (unlikely(PageHWPoison(vmf.page))) {	// page hit by a hardware memory error
		if (ret & VM_FAULT_LOCKED)
			unlock_page(vmf.page);
		page_cache_release(vmf.page);
		return VM_FAULT_HWPOISON;
	}

	// The page must be returned locked: lock it if the handler did not.
	if (unlikely(!(ret & VM_FAULT_LOCKED)))
		lock_page(vmf.page);
	else
		VM_BUG_ON_PAGE(!PageLocked(vmf.page), vmf.page);

out:
	*page = vmf.page;
	return ret;
}
```

12. do_cow_fault

Handles a write fault on a private file mapping, which requires copy-on-write.

```c
static int do_cow_fault(struct mm_struct *mm, struct vm_area_struct *vma,
			unsigned long address, pmd_t *pmd,
			pgoff_t pgoff, unsigned int flags, pte_t orig_pte)
{
	struct page *fault_page, *new_page;
	struct mem_cgroup *memcg;
	spinlock_t *ptl;
	pte_t *pte;
	int ret;

	// Make sure the VMA has an anon_vma for the rmap of the private copy.
	if (unlikely(anon_vma_prepare(vma)))
		return VM_FAULT_OOM;

	// Allocate the private page from movable memory (highmem allowed).
	new_page = alloc_page_vma(GFP_HIGHUSER_MOVABLE, vma, address);
	if (!new_page)	// allocation failed
		return VM_FAULT_OOM;

	if (mem_cgroup_try_charge(new_page, mm, GFP_KERNEL, &memcg)) {
		page_cache_release(new_page);
		return VM_FAULT_OOM;
	}

	// Obtain the file page for the faulting offset through ->fault().
	ret = __do_fault(vma, address, pgoff, flags, new_page, &fault_page);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		goto uncharge_out;

	if (fault_page)	// copy the file page's contents into the new page
		copy_user_highpage(new_page, fault_page, address, vma);
	__SetPageUptodate(new_page);

	pte = pte_offset_map_lock(mm, pmd, address, &ptl);
	if (unlikely(!pte_same(*pte, orig_pte))) {
		pte_unmap_unlock(pte, ptl);
		if (fault_page) {
			unlock_page(fault_page);
			page_cache_release(fault_page);
		} else {
			/*
			 * The fault handler has no page to lock, so it holds
			 * i_mmap_lock for read to protect against truncate.
			 */
			i_mmap_unlock_read(vma->vm_file->f_mapping);
		}
		goto uncharge_out;
	}
	do_set_pte(vma, address, new_page, pte, true, true);	// map the new private page in the PTE
	mem_cgroup_commit_charge(new_page, memcg, false);
	lru_cache_add_active_or_unevictable(new_page, vma);	// add the new page to the active (or unevictable) LRU list
	pte_unmap_unlock(pte, ptl);
	if (fault_page) {
		unlock_page(fault_page);
		page_cache_release(fault_page);
	} else {
		/*
		 * The fault handler has no page to lock, so it holds
		 * i_mmap_lock for read to protect against truncate.
		 */
		i_mmap_unlock_read(vma->vm_file->f_mapping);
	}
	return ret;
uncharge_out:
	mem_cgroup_cancel_charge(new_page, memcg);
	page_cache_release(new_page);
	return ret;
}
```

13. do_shared_fault

```c
static int do_shared_fault(struct mm_struct *mm, struct vm_area_struct *vma,
			   unsigned long address, pmd_t *pmd,
			   pgoff_t pgoff, unsigned int flags, pte_t orig_pte)
{
	struct page *fault_page;
	struct address_space *mapping;
	spinlock_t *ptl;
	pte_t *pte;
	int dirtied = 0;
	int ret, tmp;

	// Read the file contents into a page-cache page.
	ret = __do_fault(vma, address, pgoff, flags, NULL, &fault_page);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		return ret;

	// If the mapping defines ->page_mkwrite(), notify the filesystem or
	// driver (not the user process) that this page is about to become
	// writable; the handler may refuse.
	if (vma->vm_ops->page_mkwrite) {
		unlock_page(fault_page);
		tmp = do_page_mkwrite(vma, fault_page, address);
		if (unlikely(!tmp ||
				(tmp & (VM_FAULT_ERROR | VM_FAULT_NOPAGE)))) {
			page_cache_release(fault_page);
			return tmp;
		}
	}

	pte = pte_offset_map_lock(mm, pmd, address, &ptl);
	if (unlikely(!pte_same(*pte, orig_pte))) {
		pte_unmap_unlock(pte, ptl);
		unlock_page(fault_page);
		page_cache_release(fault_page);
		return ret;
	}
	do_set_pte(vma, address, fault_page, pte, true, false);	// map the page-cache page writable
	pte_unmap_unlock(pte, ptl);

	if (set_page_dirty(fault_page))
		dirtied = 1;
	mapping = fault_page->mapping;
	unlock_page(fault_page);
	if ((dirtied || vma->vm_ops->page_mkwrite) && mapping) {
		/*
		 * Some device drivers do not set page.mapping but still
		 * dirty their pages
		 */
		balance_dirty_pages_ratelimited(mapping);	// throttle the writer if too many pages are dirty
	}

	if (!vma->vm_ops->page_mkwrite)
		file_update_time(vma->vm_file);

	return ret;
}
```

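14. do_swap_page

This function is called when the PTE for the faulting address is not empty but its present bit is clear: the PTE holds a swap entry, which means the physical page was swapped out.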

```c
static int do_swap_page(struct mm_struct *mm, struct vm_area_struct *vma,
			unsigned long address, pte_t *page_table, pmd_t *pmd,
			unsigned int flags, pte_t orig_pte)
{
	......
	if (!pte_unmap_same(mm, pmd, page_table, orig_pte))
		goto out;

	entry = pte_to_swp_entry(orig_pte);	// decode the swap entry stored in the PTE
	// Not a real swap entry: handle the special entry types.
	if (unlikely(non_swap_entry(entry))) {
		if (is_migration_entry(entry)) {	// the page is being migrated
			migration_entry_wait(mm, pmd, address);	// wait for the migration to finish
		} else if (is_hwpoison_entry(entry)) {
			ret = VM_FAULT_HWPOISON;	// hwpoison entry: return VM_FAULT_HWPOISON
		} else {
			print_bad_pte(vma, address, orig_pte, NULL);	// unknown entry type: report a bad PTE
			ret = VM_FAULT_SIGBUS;
		}
		goto out;
	}
	delayacct_set_flag(DELAYACCT_PF_SWAPIN);
	page = lookup_swap_cache(entry);	// look for the page in the swap cache
	if (!page) {
		// Not in the swap cache: read it from the swap device, with readahead.
		page = swapin_readahead(entry,
					GFP_HIGHUSER_MOVABLE, vma, address);
		if (!page) {
			/*
			 * Back out if somebody else faulted in this pte
			 * while we released the pte lock.
			 */
			page_table = pte_offset_map_lock(mm, pmd, address, &ptl);
			if (likely(pte_same(*page_table, orig_pte)))
				ret = VM_FAULT_OOM;
			delayacct_clear_flag(DELAYACCT_PF_SWAPIN);
			goto unlock;	// still no page: bail out
		}

		// The page had to be read from swap: a major fault.
		ret = VM_FAULT_MAJOR;
		count_vm_event(PGMAJFAULT);
		mem_cgroup_count_vm_event(mm, PGMAJFAULT);
	} else if (PageHWPoison(page)) {
		/*
		 * hwpoisoned dirty swapcache pages are kept for killing
		 * owner processes (which may be unknown at hwpoison time)
		 */
		ret = VM_FAULT_HWPOISON;
		delayacct_clear_flag(DELAYACCT_PF_SWAPIN);
		swapcache = page;
		goto out_release;
	}

	swapcache = page;
	// Lock the page; this may drop mmap_sem and fail, in which case the
	// fault is retried with VM_FAULT_RETRY.
	locked = lock_page_or_retry(page, mm, flags);

	delayacct_clear_flag(DELAYACCT_PF_SWAPIN);
	if (!locked) {
		ret |= VM_FAULT_RETRY;
		goto out_release;
	}

	if (unlikely(!PageSwapCache(page) || page_private(page) != entry.val))
		goto out_page;

	// KSM: decide whether the page can be used as is or must be copied
	// into a newly allocated page.
	page = ksm_might_need_to_copy(page, vma, address);
	if (unlikely(!page)) {
		ret = VM_FAULT_OOM;
		page = swapcache;
		goto out_page;
	}

	if (mem_cgroup_try_charge(page, mm, GFP_KERNEL, &memcg)) {
		ret = VM_FAULT_OOM;
		goto out_page;
	}

	/*
	 * Back out if somebody else already faulted in this pte.
	 */
	page_table = pte_offset_map_lock(mm, pmd, address, &ptl);
	if (unlikely(!pte_same(*page_table, orig_pte)))
		goto out_nomap;

	if (unlikely(!PageUptodate(page))) {
		ret = VM_FAULT_SIGBUS;
		goto out_nomap;
	}

	inc_mm_counter_fast(mm, MM_ANONPAGES);
	dec_mm_counter_fast(mm, MM_SWAPENTS);
	pte = mk_pte(page, vma->vm_page_prot);	// build a PTE for the page
	// Write fault and we are the only user of the page: map it writable
	// right away, so no separate copy-on-write is needed.
	if ((flags & FAULT_FLAG_WRITE) && reuse_swap_page(page)) {
		pte = maybe_mkwrite(pte_mkdirty(pte), vma);
		flags &= ~FAULT_FLAG_WRITE;
		ret |= VM_FAULT_WRITE;
		exclusive = 1;
	}
	flush_icache_page(vma, page);
	if (pte_swp_soft_dirty(orig_pte))
		pte = pte_mksoft_dirty(pte);
	set_pte_at(mm, address, page_table, pte);	// write the PTE into the page table
	if (page == swapcache) {
		do_page_add_anon_rmap(page, vma, address, exclusive);
		mem_cgroup_commit_charge(page, memcg, true);
	} else { /* ksm created a completely new copy */
		page_add_new_anon_rmap(page, vma, address);	// rmap for the new copy
		mem_cgroup_commit_charge(page, memcg, false);
		lru_cache_add_active_or_unevictable(page, vma);	// add it to the active (or unevictable) LRU list
	}

	swap_free(entry);	// drop this PTE's reference to the swap entry
	// Free the swap slot if swap is getting full or the page is mlocked.
	if (vm_swap_full() || (vma->vm_flags & VM_LOCKED) || PageMlocked(page))
		try_to_free_swap(page);
	unlock_page(page);
	if (page != swapcache) {
		/*
		 * Hold the lock to avoid the swap entry to be reused
		 * until we take the PT lock for the pte_same() check
		 * (to avoid false positives from pte_same). For
		 * further safety release the lock after the swap_free
		 * so that the swap count won't change under a
		 * parallel locked swapcache.
		 */
		unlock_page(swapcache);
		page_cache_release(swapcache);
	}

	// Write fault still pending (the page could not be reused): do
	// copy-on-write through do_wp_page().
	if (flags & FAULT_FLAG_WRITE) {
		ret |= do_wp_page(mm, vma, address, page_table, pmd, ptl, pte);
		if (ret & VM_FAULT_ERROR)
			ret &= VM_FAULT_ERROR;
		goto out;
	}

	/* No need to invalidate - it was non-present before */
	update_mmu_cache(vma, address, page_table);
	.....
}
```

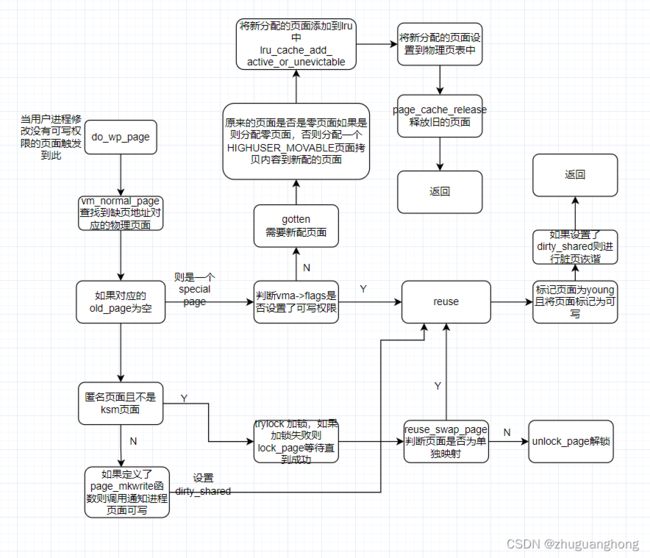
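The first step of do_swap_page() decodes the non-present PTE into a swap entry (a swap type plus an offset) and treats types at or above MAX_SWAPFILES as special entries. The sketch below illustrates that idea with a hypothetical bit layout that is NOT arm64's real swap PTE format; the threshold and the numbers given to the hwpoison and migration types are likewise illustrative, and all sk_* names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

// Hypothetical layout: bit 0 = present (always 0 here),
// bits 2..6 = swap type, bits 8 and up = swap offset.
#define SK_PTE_PRESENT      UINT64_C(1)
#define SK_SWP_TYPE_SHIFT   2
#define SK_SWP_TYPE_BITS    5
#define SK_SWP_OFFSET_SHIFT 8

// Types >= this are not real swap devices (like MAX_SWAPFILES);
// the concrete numbers are assumptions of this sketch.
#define SK_MAX_SWAPFILES    29u
#define SK_SWP_HWPOISON     29u
#define SK_SWP_MIGRATION    30u

struct sk_swp_entry { unsigned type; uint64_t offset; };

static uint64_t sk_swp_entry_to_pte(struct sk_swp_entry e)
{
	return ((uint64_t)e.type << SK_SWP_TYPE_SHIFT) |
	       (e.offset << SK_SWP_OFFSET_SHIFT);
}

static struct sk_swp_entry sk_pte_to_swp_entry(uint64_t pte)
{
	struct sk_swp_entry e;

	e.type = (unsigned)((pte >> SK_SWP_TYPE_SHIFT) &
			    ((UINT64_C(1) << SK_SWP_TYPE_BITS) - 1));
	e.offset = pte >> SK_SWP_OFFSET_SHIFT;
	return e;
}

static bool sk_non_swap_entry(struct sk_swp_entry e)
{
	return e.type >= SK_MAX_SWAPFILES;
}

enum sk_swap_action { SK_SWAPIN, SK_MIGRATION_WAIT, SK_HWPOISON, SK_BAD_PTE };

// Mirrors the classification at the top of do_swap_page().
static enum sk_swap_action sk_swap_action(uint64_t pte)
{
	struct sk_swp_entry e = sk_pte_to_swp_entry(pte);

	if (!sk_non_swap_entry(e))
		return SK_SWAPIN;          // swap cache lookup or swap-in
	if (e.type == SK_SWP_MIGRATION)
		return SK_MIGRATION_WAIT;  // migration_entry_wait()
	if (e.type == SK_SWP_HWPOISON)
		return SK_HWPOISON;        // VM_FAULT_HWPOISON
	return SK_BAD_PTE;                 // print_bad_pte(), VM_FAULT_SIGBUS
}
```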

15. do_wp_page

This function handles a write to a page that is present but whose PTE does not allow writing.

```c
static int do_wp_page(struct mm_struct *mm, struct vm_area_struct *vma,
		      unsigned long address, pte_t *page_table, pmd_t *pmd,
		      spinlock_t *ptl, pte_t orig_pte)
	__releases(ptl)
{
	struct page *old_page;

	old_page = vm_normal_page(vma, address, orig_pte);	// struct page mapped at address
	// NULL means a special mapping without a normal struct page.
	if (!old_page) {
		/*
		 * VM_MIXEDMAP !pfn_valid() case, or VM_SOFTDIRTY clear on a
		 * VM_PFNMAP VMA.
		 *
		 * We should not cow pages in a shared writeable mapping.
		 * Just mark the pages writable and/or call ops->pfn_mkwrite.
		 */
		// Shared writable mapping: reuse the existing physical page and
		// only make the PTE writable, no new mapping.
		if ((vma->vm_flags & (VM_WRITE|VM_SHARED)) ==
				     (VM_WRITE|VM_SHARED))
			return wp_pfn_shared(mm, vma, address, page_table, ptl,
					     orig_pte, pmd);

		pte_unmap_unlock(page_table, ptl);
		// Otherwise allocate a new page and copy the contents into it.
		return wp_page_copy(mm, vma, address, page_table, pmd,
				    orig_pte, old_page);
	}

	// Anonymous page that is not a KSM page.
	if (PageAnon(old_page) && !PageKsm(old_page)) {
		// trylock_page() returns false if another context holds the page lock.
		if (!trylock_page(old_page)) {
			page_cache_get(old_page);	// take a reference on the page
			pte_unmap_unlock(page_table, ptl);
			lock_page(old_page);		// sleep until the other context releases the lock
			page_table = pte_offset_map_lock(mm, pmd, address,
							 &ptl);
			if (!pte_same(*page_table, orig_pte)) {
				unlock_page(old_page);
				pte_unmap_unlock(page_table, ptl);
				page_cache_release(old_page);
				return 0;
			}
			page_cache_release(old_page);
		}
		// reuse_swap_page() is true when we are the only user of the
		// page (mapcount plus swap count of at most one).
		if (reuse_swap_page(old_page)) {
			/*
			 * The page is all ours. Move it to our anon_vma so
			 * the rmap code will not search our parent or siblings.
			 * Protected against the rmap code by the page lock.
			 */
			page_move_anon_rmap(old_page, vma, address);
			unlock_page(old_page);
			// reuse this exclusively owned anonymous page in place
			return wp_page_reuse(mm, vma, address, page_table, ptl,
					     orig_pte, old_page, 0, 0);
		}
		unlock_page(old_page);
	} else if (unlikely((vma->vm_flags & (VM_WRITE|VM_SHARED)) ==
					(VM_WRITE|VM_SHARED))) {
		// Page-cache (or KSM) page in a shared writable mapping: call
		// ->page_mkwrite() if defined to tell the filesystem the page
		// is becoming writable, then reuse the page.
		return wp_page_shared(mm, vma, address, page_table, pmd,
				      ptl, orig_pte, old_page);
	}

	/*
	 * Ok, we need to copy. Oh, well..
	 */
	page_cache_get(old_page);

	pte_unmap_unlock(page_table, ptl);
	// Copy-on-write: allocate a new page and copy the old page's contents.
	return wp_page_copy(mm, vma, address, page_table, pmd,
			    orig_pte, old_page);
}
```
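The decision order in do_wp_page() can be summarised as a pure function of a few page and VMA properties. The sketch below restates it, leaving out locking and the pte_same() retry; the sk_wp_input fields are hypothetical stand-ins for vm_normal_page(), the VMA flags, PageAnon(), PageKsm() and the user count that reuse_swap_page() checks.

```c
#include <stdbool.h>

enum sk_wp_action {
	SK_WP_PFN_SHARED,  // wp_pfn_shared(): make the PFN mapping writable
	SK_WP_REUSE,       // wp_page_reuse(): we are the only user
	SK_WP_SHARED,      // wp_page_shared(): ->page_mkwrite, then reuse
	SK_WP_COPY,        // wp_page_copy(): allocate and copy
};

struct sk_wp_input {
	bool normal_page;       // vm_normal_page() returned a page
	bool vma_shared_write;  // VM_WRITE and VM_SHARED both set
	bool anon;              // PageAnon()
	bool ksm;               // PageKsm()
	int users;              // mapcount + swap count seen by reuse_swap_page()
};

static enum sk_wp_action sk_do_wp_page(const struct sk_wp_input *in)
{
	if (!in->normal_page)
		return in->vma_shared_write ? SK_WP_PFN_SHARED : SK_WP_COPY;
	if (in->anon && !in->ksm) {
		if (in->users <= 1)
			return SK_WP_REUSE;
		// anonymous page still shared, e.g. after fork(): copy below
	} else if (in->vma_shared_write) {
		return SK_WP_SHARED;
	}
	return SK_WP_COPY;
}
```

For example, the first write in a child after fork() sees an anonymous page with two users and copies it; once the other process has copied or unmapped its side, the remaining owner's next write fault on that page reuses it in place.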