pyspark入门系列 - 01 统计文档中单词个数

导入SparkConf和SparkContext模块,任何Spark程序都是SparkContext开始的,SparkContext的初始化需要一个SparkConf对象,SparkConf包含了Spark集群配置的各种参数。初始化后,就可以使用SparkContext对象所包含的各种方法来创建和操作RDD和共享变量。

from pyspark import SparkConf

from pyspark import SparkContext

conf = SparkConf().setMaster('local').setAppName('read_txt')

sc = SparkContext(conf=conf)

# 读取目录下的txt文件

rdd = sc.textFile('../data/eclipse_license.txt')

# 统计元素个数(行数)

print(rdd.count())

70

filter

转化操作,通过传入函数定义过滤规则

# 使用filter筛选出包含‘License’的行,并查看第一个字符串

pythonline = rdd.filter(lambda line: 'License' in line)

pythonline.first()

'Eclipse Public License - v 1.0'

# 统计词频,打印前10个

# 1. faltMap: 将返回的数组全部拆散,然后合成到一个数组中

# 2. map: 针对数组中的每一个元素进行操作

# 3. reduceByKey: 根据key进行合并计算

# 4. sortBy: 排序

result = rdd.flatMap(lambda x: x.split(' ')).map(lambda x: (x, 1)).reduceByKey(lambda a, b: a + b)

sorted_result = result.sortBy(lambda x: x[1], ascending=False)

print(sorted_result.collect()[0:10])

# # sorted_result.saveAsTextFile('.result') # 将结果保存到文件

[('the', 98), ('to', 56), ('of', 54), ('and', 48), ('Contributor', 31), ('a', 30), ('in', 28), ('this', 27), ('', 26), ('or', 24)]

# 将经常访问的数据持久化到内存,(需要被重用的中间结果)

sorted_result.persist()

PythonRDD[11] at collect at :8

union() 联合两个rdd

errorsRDD = rdd.filter(lambda x: "error" in x)

LicenseRDD = rdd.filter(lambda x: "License" in x)

unionLinesRDD = errorsRDD.union(LicenseRDD)

unionLinesRDD.collect()

['EXCEPT AS EXPRESSLY SET FORTH IN THIS AGREEMENT, THE PROGRAM IS PROVIDED ON AN "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, EITHER EXPRESS OR IMPLIED INCLUDING, WITHOUT LIMITATION, ANY WARRANTIES OR CONDITIONS OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Each Recipient is solely responsible for determining the appropriateness of using and distributing the Program and assumes all risks associated with its exercise of rights under this Agreement , including but not limited to the risks and costs of program errors, compliance with applicable laws, damage to or loss of data, programs or equipment, and unavailability or interruption of operations.',

'Eclipse Public License - v 1.0',

'"Licensed Patents" mean patent claims licensable by a Contributor which are necessarily infringed by the use or sale of its Contribution alone or when combined with the Program.',

'b) Subject to the terms of this Agreement, each Contributor hereby grants Recipient a non-exclusive, worldwide, royalty-free patent license under Licensed Patents to make, use, sell, offer to sell, import and otherwise transfer the Contribution of such Contributor, if any, in source code and object code form. This patent license shall apply to the combination of the Contribution and the Program if, at the time the Contribution is added by the Contributor, such addition of the Contribution causes such combination to be covered by the Licensed Patents. The patent license shall not apply to any other combinations which include the Contribution. No hardware per se is licensed hereunder.']

first,take,collect取值

- first: 取出第一个值

- take:取出n个值

- collect:取出全部数据到内存

unionLinesRDD.take(2)

'Eclipse Public License - v 1.0']

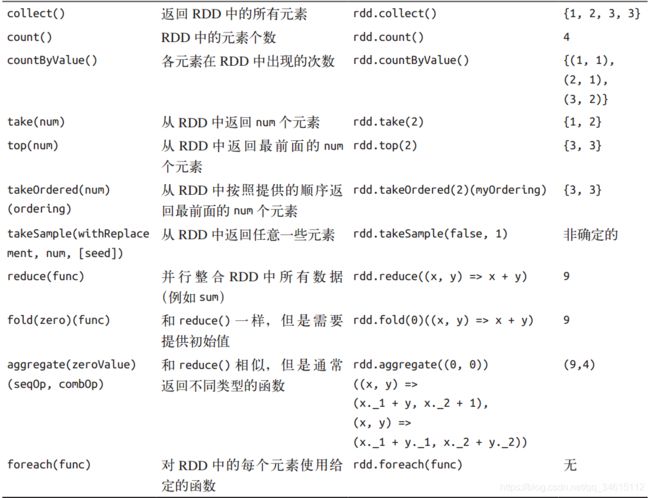

RDD转化操作

RDD行动操作

使用reduce计算上面txt中的总单词数

tmp_rdd = rdd.flatMap(lambda line:line.split(' ')).map(lambda x: (x, 1))

tmp_rdd1 = tmp_rdd.map(lambda x: x[1])

tmp_rdd1.reduce(lambda x, y: x + y)

1724

使用fold计算上面txt中的总单词数

tmp_rdd1.fold(zeroValue=0, op=lambda x, y: x + y)

1724