k8s_3-Node Cluster Deployment

Background

I recently wanted to deploy a 3-node K8s cluster on my own computer to use as a day-to-day learning and testing environment.

At first I looked for a more convenient way to deploy it, for example:

Rancher Desktop: https://docs.rancherdesktop.io/zh/next/getting-started/installation/

sealos: https://www.sealos.io/docs/getting-started/installation

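and so on. However, some of them also turned out to need a fair amount of preparation. In the end I decided to deploy by following the official documentation, which also helps me get familiar with the basic components of a cluster.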

CentOS configuration changes

- Prerequisites

Three Linux nodes are assumed to be installed already, each with its IP address configured.

2. Disable the firewall and SELinux

systemctl stop firewalld && systemctl disable firewalld

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config && setenforce 0
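The sed one-liner edits /etc/selinux/config so that SELinux stays disabled after a reboot. By contrast, setenforce 0 only switches the running system to permissive mode. Below is a minimal sketch that wraps the same edit in a function, assuming bash and GNU sed. It can be tried on a copy of the file first, and it prints the resulting line. The function name disable_selinux_config is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given config file
# (defaults to /etc/selinux/config) and print the resulting SELINUX= line.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  # Same substitution as the one-liner above. Only lines starting with
  # "SELINUX=" match, so the SELINUXTYPE= line is left untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
  grep '^SELINUX=' "$cfg"
}
```

On a node, run disable_selinux_config && setenforce 0. Afterwards, getenforce should report Permissive until the next reboot and Disabled after it.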

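- Disable swap

Edit /etc/sysctl.conf:

vm.swappiness=0

sysctl -p # apply the settings

Note: vm.swappiness=0 only tells the kernel to avoid swapping. It does not turn swap off, and kubeadm init checks that swap is actually off. Turn it off with swapoff -a as well.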
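swapoff -a only turns swap off until the next reboot. Below is a minimal sketch that also comments out the active swap entries in /etc/fstab, so swap stays off after the reboot recommended in the troubleshooting section. It assumes GNU sed, and the function name comment_out_swap is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file
# (defaults to /etc/fstab) so swap stays off after a reboot.
# Run `swapoff -a` separately to turn swap off immediately.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Match uncommented lines that contain a whitespace-delimited "swap" field
  # (mount point or type column) and prefix them with '#'.
  # Lines already starting with '#' are skipped, so re-running is safe.
  sed -i -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

On each node, run swapoff -a && comment_out_swap. After that, free -h should report no swap in use.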

- Edit the configuration file /etc/sysctl.d/k8s.conf:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

Run:

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf # apply the settings
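modprobe only loads br_netfilter for the current boot. Below is a sketch that also lists the module in /etc/modules-load.d, so systemd loads it on every boot, and writes the same sysctl file as above. It assumes a systemd-based CentOS. The function name write_k8s_net_conf and its root-prefix argument are hypothetical; the prefix lets the function be tried on a scratch directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: persist the br_netfilter module and the sysctl settings
# from the steps above under a root prefix (empty prefix = the real /).
write_k8s_net_conf() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # systemd-modules-load reads one module name per line at boot.
  cat > "$root/etc/modules-load.d/k8s.conf" <<'EOF'
br_netfilter
EOF
  # Same settings as /etc/sysctl.d/k8s.conf above.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}
```

Running write_k8s_net_conf && modprobe br_netfilter && sysctl --system applies everything immediately. sysctl --system reloads all sysctl configuration files, including /etc/sysctl.d/k8s.conf.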

Software installation

Docker installation (run on every node)

Switch the yum repository to the Aliyun Docker CE mirror

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

Install the packages

yum install -y docker-ce docker-ce-cli containerd.io

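Edit the Docker configuration file. Kubernetes 1.24 removed dockershim, so kubelet 1.27 uses containerd (the containerd.io package installed above) as its container runtime rather than Docker Engine. These Docker settings therefore affect docker commands, not the cluster's Pods.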

/etc/docker/daemon.json

{
  "registry-mirrors": [
    "https://registry.docker-cn.com",
    "http://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn"
  ],
  "exec-opts": [
    "native.cgroupdriver=systemd"
  ],
  "data-root": "/xxxx/"
}

Then start the service:

systemctl start docker

systemctl enable docker

Kubernetes installation

1. Add the Aliyun yum repository for Kubernetes

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

2. Install kubeadm, kubelet and kubectl on all nodes

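Note: no version is pinned here, so the latest available version is installed. It should match the --kubernetes-version passed to kubeadm init below (1.27.1), and it must be the same on every node.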

$ yum install -y kubelet kubeadm kubectl

$ systemctl enable kubelet && systemctl start kubelet
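kubeadm init below pins --kubernetes-version=1.27.1, so it can be safer to install that same version of the three packages on every node instead of the latest. yum accepts name-version package specs, so a tiny helper can build them. The function name k8s_pkgs is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: expand one version into yum "name-version" specs
# for kubelet, kubeadm and kubectl.
k8s_pkgs() {
  local ver="$1"
  printf 'kubelet-%s kubeadm-%s kubectl-%s\n' "$ver" "$ver" "$ver"
}
```

To use it:

1. Run yum list --showduplicates kubeadm to see which versions the mirror offers.
2. Install with yum install -y $(k8s_pkgs 1.27.1).
3. Confirm that kubeadm version -o short prints v1.27.1 on each node.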

3. Initialize the master node

kubeadm init --kubernetes-version=1.27.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.0.1 --ignore-preflight-errors=Swap --image-repository registry.aliyuncs.com/google_containers
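The same flags can also be kept in a kubeadm configuration file. This makes re-runs after kubeadm reset repeatable and lets the images be pulled ahead of time. Below is a sketch assuming the kubeadm.k8s.io/v1beta3 configuration API used by kubeadm 1.27. The function name write_kubeadm_config is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config file equivalent to the
# kubeadm init flags above.
# Args: output path, API server advertise address, Kubernetes version.
write_kubeadm_config() {
  local out="$1" addr="$2" ver="${3:-v1.27.1}"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${addr}
nodeRegistration:
  ignorePreflightErrors:
  - Swap
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: ${ver}
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
EOF
}
```

To use it:

1. Generate the file with write_kubeadm_config kubeadm-config.yaml 192.168.0.1.
2. Pre-pull the images with kubeadm config images pull --config kubeadm-config.yaml, so that init does not time out while downloading.
3. Run kubeadm init --config kubeadm-config.yaml.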

This step is the most error-prone and often times out. If it fails, first see this post: https://www.cnblogs.com/ltlinux/p/11803557.html

If pulling the pause image times out and the error is reported by the CRI (containerd), see this post:

https://www.cnblogs.com/wod-Y/p/17043985.html
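This containerd error usually has two causes. The config.toml shipped with containerd.io disables the CRI plugin. In addition, containerd's default sandbox (pause) image lives on an upstream registry that may not be reachable. Below is a sketch that assumes the config has been regenerated with containerd config default (containerd 1.6-style keys) and that GNU sed is available. The function name patch_containerd_config is hypothetical. The pause:3.9 tag is an assumption; check it against kubeadm config images list.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point containerd's CRI plugin at a reachable pause
# image and switch runc to the systemd cgroup driver.
# Args: config.toml path, pause image reference (both optional).
patch_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}"
  local pause="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  # Replace the sandbox_image value, keeping the original indentation.
  sed -i -E "s#^([[:space:]]*sandbox_image = ).*#\1\"${pause}\"#" "$cfg"
  # Enable SystemdCgroup in the runc runtime options.
  sed -i -E 's#^([[:space:]]*SystemdCgroup = )false#\1true#' "$cfg"
}

# Usage on each node (as root):
#   containerd config default > /etc/containerd/config.toml
#   patch_containerd_config
#   systemctl restart containerd
```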

Once initialization succeeds, save the join command it prints, which looks like this:

kubeadm join 192.168.0.1xx:6443 --token xxxu5zx4v.2g0wo5e445gky \
    --discovery-token-ca-cert-hash xxxsha256:baf30876a228ab84af89bfbd4b103de933a25a3dff8a605fb945c427afba2

4. kubeconfig setup

On the master, copy admin.conf to each node. scp is used here, but uploading the file manually with a client tool also works.

scp /etc/kubernetes/admin.conf root@xxxxx:/root/

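Run on all nodes. $HOME is /root here, since the commands run as root. On the master itself, copy /etc/kubernetes/admin.conf instead of $HOME/admin.conf.

$ mkdir -p $HOME/.kube

$ sudo cp -i $HOME/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config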
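Here are the same steps as a function, sketched under the assumption that it runs as root. The function name install_kubeconfig is hypothetical. It takes the source file explicitly because the path differs between the master (/etc/kubernetes/admin.conf) and the workers (the copy uploaded with scp). It also restricts permissions, because admin.conf grants cluster-admin access.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install an admin kubeconfig into <home>/.kube/config.
# Args: source kubeconfig, home directory (defaults to $HOME).
install_kubeconfig() {
  local src="$1" home_dir="${2:-$HOME}"
  mkdir -p "$home_dir/.kube"
  cp "$src" "$home_dir/.kube/config"
  # The file holds cluster-admin credentials, so keep it private.
  chmod 600 "$home_dir/.kube/config"
}
```

Run install_kubeconfig /etc/kubernetes/admin.conf on the master and install_kubeconfig /root/admin.conf on the workers. After that, kubectl get nodes should work on every node.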

5. Deploy the network add-on

Run on the master node:
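This sketch assumes Flannel as the network add-on, because 10.244.0.0/16 (passed as --pod-network-cidr above) is Flannel's default Pod network. The manifest URL in the comments is the one published in the Flannel README. Before applying the manifest, it is worth checking that it declares the same Pod network as kubeadm. The function name manifest_has_cidr is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a CNI manifest's net-conf declares the given
# Pod network (it must equal kubeadm's --pod-network-cidr).
manifest_has_cidr() {
  local manifest="$1" cidr="$2"
  grep -qF "\"Network\": \"${cidr}\"" "$manifest"
}

# Usage on the master (assumes Flannel):
#   curl -fsSLo kube-flannel.yml https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
#   manifest_has_cidr kube-flannel.yml 10.244.0.0/16 && kubectl apply -f kube-flannel.yml
```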

6. Join the worker nodes

kubeadm join 192.168.0.1xx:6443 --token xxxu5zx4v.2g0wo5e445gky \
    --discovery-token-ca-cert-hash xxxsha256:baf30876a228ab84af89bfbd4b103de933a25a3dff8a605fb945c427afba2

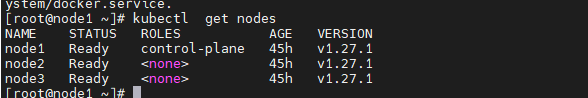

Check the node status

kubectl get nodes
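Nodes show NotReady until the network add-on is running. Below is a small sketch for checking that all three nodes are Ready. It assumes the default kubectl get nodes --no-headers columns, with STATUS in the second column. The function name count_ready_nodes is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print how many nodes report exactly "Ready" as their STATUS.
# Statuses such as "NotReady" or "Ready,SchedulingDisabled" are not counted.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

Once the cluster is up, kubectl get nodes --no-headers | count_ready_nodes should print 3. Alternatively, kubectl wait --for=condition=Ready nodes --all --timeout=300s blocks until every node is Ready.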

Troubleshooting

kubeadm init times out, or the images have been pulled locally but still cannot be found

Error message:

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_container/kube-apiserver:v1.27.1: output: E0419 22:52:40.277582 13517 remote_image.go:171] "PullImage from image service failed" err="rpc error: code = Unknown desc = failed to pull and unpack image "registry.aliyuncs.com/google_container/kube-apiserver:v1.27.1": failed to resolve reference "registry.aliyuncs.com/google_container/kube-apiserver:v1.27.1": pull access denied, repository does not exis

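Solution: the image path in this log is registry.aliyuncs.com/google_container, without the trailing s. The kubeadm init command above uses registry.aliyuncs.com/google_containers, so check the --image-repository value first. See also: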

https://www.cnblogs.com/ltlinux/p/11803557.html

kubeadm init fails with: running with swap on is not supported

Follow the swap-disabling steps above, then reboot.

Running kubeadm init again fails with: xxx kube-apiserver.yaml already exists

error execution phase preflight: [preflight] Some fatal errors occurred:
[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists
[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists
[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists
[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

Solution: run kubeadm reset first, then run kubeadm init again.
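kubeadm reset reports that it does not clean up the CNI configuration in /etc/cni/net.d or the kubeconfig files, and stale copies can confuse the next init. Below is a sketch of removing them. The function name cleanup_after_reset is hypothetical. Its root-prefix argument lets it be tried on a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove leftovers that `kubeadm reset` does not clean:
# the CNI config directory and root's kubeconfig copy.
# Paths are relative to a root prefix (empty prefix = the real /).
cleanup_after_reset() {
  local root="${1:-}"
  rm -rf "$root/etc/cni/net.d"
  rm -f "$root/root/.kube/config"
}
```

To use it, run kubeadm reset -f && cleanup_after_reset, then run kubeadm init again. reset also leaves iptables rules in place; this sketch does not touch them.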

kubeadm join fails with: no kind "ClusterConfiguration" is registered

Error message:

error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to decode cluster configuration data: no kind "ClusterConfiguration" is registered for version "kubeadm.k8s.io/v1beta2" in scheme "k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/scheme/s

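Solution: the kubeadm versions do not match. Check the version on the joining node against the master, then run the join again.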
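Below is a rough check. It assumes kubeadm version -o short is run on the master and on the joining node, and it compares the two outputs by major.minor only. Treating patch-level differences as compatible is an assumption. The function name same_minor is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if two Kubernetes versions (e.g. "v1.27.1")
# share the same major.minor; an optional leading "v" is ignored.
same_minor() {
  local a b
  a=$(printf '%s\n' "${1#v}" | cut -d. -f1,2)
  b=$(printf '%s\n' "${2#v}" | cut -d. -f1,2)
  [ "$a" = "$b" ]
}
```

For example, on a worker: same_minor "$(kubeadm version -o short)" v1.27.1 || echo 'kubeadm version mismatch'.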

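Let the master also act as a worker node

kubectl taint node --all node-role.kubernetes.io/control-plane-

Newer kubeadm releases, including 1.27, taint control-plane nodes with node-role.kubernetes.io/control-plane rather than node-role.kubernetes.io/master, so this is the taint to remove. The error printed for nodes that do not carry the taint (the workers) can be ignored.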

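Restore the master to control-plane-only (no regular Pods scheduled on it)

kubectl taint node <master-node-name> node-role.kubernetes.io/control-plane:NoSchedule

Name the master node explicitly here. With --all, the taint would also be applied to the worker nodes and block scheduling on them.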

Notes

IT content goes out of date quickly. To make sure you are reading an up-to-date copy, see the latest version: k8s_3-Node Cluster Deployment

Originally published at: http://nebofeng.com/2023/04/22/k8s_3%e8%8a%82%e7%82%b9%e9%9b%86%e7%be%a4%e9%83%a8%e7%bd%b2/