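DenseNet Handwritten Digit Recognition Exercise: A PyTorch Implementation

伏公子 read the DenseNet paper today and decided to reproduce this very advanced network. The paper won the Best Paper Award at CVPR 2017, and it reports better results than ResNet. The theory is not what this article sets out to cover; the focus here is on reproducing the code.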

DenseLayer: this is the most basic building block. The code is as follows:

    import torch
    import torch.nn.functional as F


    class DenseLayer(torch.nn.Module):
        def __init__(self, input_features_num, grow_rate, bn_size, drop_rate):
            super().__init__()
            self.layer = torch.nn.Sequential(
                torch.nn.BatchNorm2d(input_features_num),
                torch.nn.ReLU(inplace=True),
                # 1x1 convolution: map the input to bn_size * grow_rate channels
                torch.nn.Conv2d(in_channels=input_features_num, out_channels=bn_size * grow_rate,
                                kernel_size=1, stride=1, bias=False),
                torch.nn.BatchNorm2d(bn_size * grow_rate),
                torch.nn.ReLU(inplace=True),
                # 3x3 convolution: produce grow_rate new feature maps, same spatial size
                torch.nn.Conv2d(in_channels=bn_size * grow_rate, out_channels=grow_rate,
                                padding=1, kernel_size=3, stride=1, bias=False)
            )
            self.drop_rate = drop_rate

        def forward(self, x):
            out = self.layer(x)
            if self.drop_rate > 0:
                out = F.dropout(input=out, p=self.drop_rate, training=self.training)
            # Concatenate the new feature maps with the input along the channel axis
            return torch.cat([out, x], 1)
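
To make the layer's bookkeeping concrete, here is a minimal sketch in plain Python that counts the output channels and learnable parameters of one DenseLayer. The helper name `dense_layer_summary` is hypothetical (it is not part of the code above). It assumes, as in the layer above, that both convolutions use `bias=False` and that each BatchNorm2d has one weight and one bias per channel.

```python
def dense_layer_summary(input_features_num, grow_rate, bn_size):
    """Count output channels and learnable parameters of one DenseLayer."""
    mid_channels = bn_size * grow_rate
    # Conv2d weights: in_channels * out_channels * kernel_h * kernel_w (no bias)
    conv1x1_params = input_features_num * mid_channels * 1 * 1
    conv3x3_params = mid_channels * grow_rate * 3 * 3
    # Each BatchNorm2d has a weight and a bias per channel
    batch_norm_params = 2 * input_features_num + 2 * mid_channels
    return {
        # forward() concatenates grow_rate new maps with the input
        "output_channels": input_features_num + grow_rate,
        "conv1x1_params": conv1x1_params,
        "conv3x3_params": conv3x3_params,
        "batch_norm_params": batch_norm_params,
    }


summary = dense_layer_summary(input_features_num=64, grow_rate=32, bn_size=4)
print(summary["output_channels"])    # 96
print(summary["conv1x1_params"])     # 8192
print(summary["conv3x3_params"])     # 36864
print(summary["batch_norm_params"])  # 384
```

With these settings, the 3x3 convolution holds most of the layer's parameters.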

DenseBlock: this is one dense block, i.e. a stack of DenseLayers.

    class DenseBlock(torch.nn.Module):
        def __init__(self, num_layers, input_features_num, grow_rate, bn_size, drop_rate):
            super().__init__()
            self.block_layer = torch.nn.Sequential()
            for i in range(num_layers):
                # Each layer sees the block input plus the outputs of all earlier layers
                layer = DenseLayer(input_features_num + i * grow_rate, grow_rate, bn_size, drop_rate)
                self.block_layer.add_module("DenseLayer%d" % (i + 1), layer)

        def forward(self, x):
            return self.block_layer(x)
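
The expression `input_features_num + i * grow_rate` is what makes the block "dense": every layer receives the block input plus everything produced before it. The small sketch below only does the channel arithmetic; `dense_block_channels` is a hypothetical helper introduced for illustration.

```python
def dense_block_channels(num_layers, input_features_num, grow_rate):
    """Return (input channels seen by each layer, channels leaving the block)."""
    layer_inputs = [input_features_num + i * grow_rate for i in range(num_layers)]
    output_channels = input_features_num + num_layers * grow_rate
    return layer_inputs, output_channels


layer_inputs, output_channels = dense_block_channels(6, 64, 32)
print(layer_inputs)     # [64, 96, 128, 160, 192, 224]
print(output_channels)  # 256
```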

Transition: the connection between dense blocks. It mainly does pooling and is fairly simple.

    class Transition(torch.nn.Module):
        def __init__(self, input_features_num, output_features_num):
            super().__init__()
            self.layer = torch.nn.Sequential(
                torch.nn.BatchNorm2d(input_features_num),
                torch.nn.ReLU(inplace=True),
                # 1x1 convolution changes the channel count
                torch.nn.Conv2d(in_channels=input_features_num, out_channels=output_features_num,
                                kernel_size=1, stride=1, bias=False),
                # 2x2 average pooling halves the height and width
                torch.nn.AvgPool2d(kernel_size=2, stride=2)
            )

        def forward(self, x):
            return self.layer(x)

The DenseNet itself:

    class DenseNet(torch.nn.Module):
        def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16), num_init_features=64,
                     bn_size=4, compression_rate=0.5, drop_rate=0, num_classes=10):
            super().__init__()
            # First layers (stem); in_channels=3 expects a 3-channel (color) input
            self.features = torch.nn.Sequential(
                torch.nn.Conv2d(in_channels=3, out_channels=num_init_features, kernel_size=7, stride=2,
                                bias=False, padding=3),
                torch.nn.BatchNorm2d(num_features=num_init_features),
                torch.nn.ReLU(inplace=True),
                torch.nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            )
            # Dense blocks in the middle; the input has 64 channels
            num_features = num_init_features
            for i, num_layers in enumerate(block_config):
                block = DenseBlock(num_layers, input_features_num=num_features,
                                   grow_rate=growth_rate, bn_size=bn_size, drop_rate=drop_rate)
                self.features.add_module("DenseBlock%d" % (i + 1), block)
                num_features += num_layers * growth_rate
                # A Transition follows every block except the last one
                if i != len(block_config) - 1:
                    transition = Transition(input_features_num=num_features,
                                            output_features_num=int(compression_rate * num_features))
                    self.features.add_module("Transition%d" % (i + 1), transition)
                    num_features = int(num_features * compression_rate)
            self.features.add_module("BN_last", torch.nn.BatchNorm2d(num_features))
            self.features.add_module("Relu_last", torch.nn.ReLU(inplace=True))
            # The 7x7 pooling window matches the 7x7 feature map produced by a 224x224 input
            self.features.add_module("AvgPool2d", torch.nn.AvgPool2d(kernel_size=7, stride=1))
            self.classifier = torch.nn.Linear(num_features, num_classes)
            # params initialization
            for m in self.modules():
                if isinstance(m, torch.nn.Conv2d):
                    torch.nn.init.kaiming_normal_(m.weight)
                elif isinstance(m, torch.nn.BatchNorm2d):
                    torch.nn.init.constant_(m.bias, 0)
                    torch.nn.init.constant_(m.weight, 1)
                elif isinstance(m, torch.nn.Linear):
                    torch.nn.init.constant_(m.bias, 0)

        def forward(self, x):
            out = self.features(x)
            # Flatten (N, C, 1, 1) to (N, C) before the classifier
            out = out.view(out.size(0), -1)
            out = self.classifier(out)
            return out
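
It can help to trace how the channel count and the feature-map size change through the network, since `torch.nn.Linear(num_features, num_classes)` only works if the final pooled map is 1x1. The sketch below is plain Python, not PyTorch. `conv_out` and `trace_densenet` are hypothetical helpers, and the size formula assumes floor rounding and no dilation, which matches the default behaviour of the layers used above.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output side length of a conv/pool layer (floor mode, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_densenet(input_size=224, growth_rate=32, block_config=(6, 12, 24, 16),
                   num_init_features=64, compression_rate=0.5):
    """Return a list of (stage name, channels, side length) tuples."""
    size = conv_out(input_size, kernel=7, stride=2, padding=3)  # first 7x7 conv
    size = conv_out(size, kernel=3, stride=2, padding=1)        # 3x3 max pool
    channels = num_init_features
    stages = [("stem", channels, size)]
    for i, num_layers in enumerate(block_config):
        channels += num_layers * growth_rate  # a dense block keeps H and W
        stages.append(("DenseBlock%d" % (i + 1), channels, size))
        if i != len(block_config) - 1:
            channels = int(channels * compression_rate)
            size = conv_out(size, kernel=2, stride=2)  # 2x2 average pool
            stages.append(("Transition%d" % (i + 1), channels, size))
    size = conv_out(size, kernel=7, stride=1)  # final 7x7 average pool
    stages.append(("AvgPool2d", channels, size))
    return stages


for name, channels, size in trace_densenet():
    print(name, channels, size)
# The last line printed is: AvgPool2d 1024 1
```

For a 224x224 input the trace ends at 1024 channels with a 1x1 map, which is what the classifier expects. Calling `trace_densenet(input_size=28)` gives a side length of 0 at `Transition3`, which suggests why 28x28 images need to be enlarged before they are fed to this configuration.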

Full code:

DenseNet.py

    # DenseNet CNN framework exercise
    import torch
    import torch.nn.functional as F


    class DenseLayer(torch.nn.Module):
        def __init__(self, input_features_num, grow_rate, bn_size, drop_rate):
            super().__init__()
            self.layer = torch.nn.Sequential(
                torch.nn.BatchNorm2d(input_features_num),
                torch.nn.ReLU(inplace=True),
                torch.nn.Conv2d(in_channels=input_features_num, out_channels=bn_size * grow_rate,
                                kernel_size=1, stride=1, bias=False),
                torch.nn.BatchNorm2d(bn_size * grow_rate),
                torch.nn.ReLU(inplace=True),
                torch.nn.Conv2d(in_channels=bn_size * grow_rate, out_channels=grow_rate,
                                padding=1, kernel_size=3, stride=1, bias=False)
            )
            self.drop_rate = drop_rate

        def forward(self, x):
            out = self.layer(x)
            if self.drop_rate > 0:
                out = F.dropout(input=out, p=self.drop_rate, training=self.training)
            return torch.cat([out, x], 1)


    class DenseBlock(torch.nn.Module):
        def __init__(self, num_layers, input_features_num, grow_rate, bn_size, drop_rate):
            super().__init__()
            self.block_layer = torch.nn.Sequential()
            for i in range(num_layers):
                layer = DenseLayer(input_features_num + i * grow_rate, grow_rate, bn_size, drop_rate)
                self.block_layer.add_module("DenseLayer%d" % (i + 1), layer)

        def forward(self, x):
            return self.block_layer(x)


    class Transition(torch.nn.Module):
        def __init__(self, input_features_num, output_features_num):
            super().__init__()
            self.layer = torch.nn.Sequential(
                torch.nn.BatchNorm2d(input_features_num),
                torch.nn.ReLU(inplace=True),
                torch.nn.Conv2d(in_channels=input_features_num, out_channels=output_features_num,
                                kernel_size=1, stride=1, bias=False),
                torch.nn.AvgPool2d(kernel_size=2, stride=2)
            )

        def forward(self, x):
            return self.layer(x)


    class DenseNet(torch.nn.Module):
        def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16), num_init_features=64,
                     bn_size=4, compression_rate=0.5, drop_rate=0, num_classes=10):
            super().__init__()
            # First layers (stem)
            self.features = torch.nn.Sequential(
                torch.nn.Conv2d(in_channels=3, out_channels=num_init_features, kernel_size=7, stride=2,
                                bias=False, padding=3),
                torch.nn.BatchNorm2d(num_features=num_init_features),
                torch.nn.ReLU(inplace=True),
                torch.nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            )
            # Dense blocks in the middle; the input has 64 channels
            num_features = num_init_features
            for i, num_layers in enumerate(block_config):
                block = DenseBlock(num_layers, input_features_num=num_features,
                                   grow_rate=growth_rate, bn_size=bn_size, drop_rate=drop_rate)
                self.features.add_module("DenseBlock%d" % (i + 1), block)
                num_features += num_layers * growth_rate
                if i != len(block_config) - 1:
                    transition = Transition(input_features_num=num_features,
                                            output_features_num=int(compression_rate * num_features))
                    self.features.add_module("Transition%d" % (i + 1), transition)
                    num_features = int(num_features * compression_rate)
            self.features.add_module("BN_last", torch.nn.BatchNorm2d(num_features))
            self.features.add_module("Relu_last", torch.nn.ReLU(inplace=True))
            self.features.add_module("AvgPool2d", torch.nn.AvgPool2d(kernel_size=7, stride=1))
            self.classifier = torch.nn.Linear(num_features, num_classes)
            # params initialization
            for m in self.modules():
                if isinstance(m, torch.nn.Conv2d):
                    torch.nn.init.kaiming_normal_(m.weight)
                elif isinstance(m, torch.nn.BatchNorm2d):
                    torch.nn.init.constant_(m.bias, 0)
                    torch.nn.init.constant_(m.weight, 1)
                elif isinstance(m, torch.nn.Linear):
                    torch.nn.init.constant_(m.bias, 0)

        def forward(self, x):
            out = self.features(x)
            out = out.view(out.size(0), -1)
            out = self.classifier(out)
            return out

Below is the code that applies the network to handwritten digit recognition:

    import time

    import torch
    import torch.utils.data
    import torchvision
    from matplotlib import pyplot as plt

    import DenseNet

    net = DenseNet.DenseNet()
    my_optim = torch.optim.Adam(net.parameters())
    my_loss = torch.nn.CrossEntropyLoss()
    max_epoch = 1
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    net.to(device)
    batch_size = 20

    # Enlarge the 28x28 digits to 224x224 and scale pixel values to [-1, 1]
    transform = torchvision.transforms.Compose([
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Resize((224, 224)),
        torchvision.transforms.Normalize(mean=[0.5], std=[0.5]),
    ])
    # download=True fetches MNIST into ./data/ if it is not there yet
    data_train = torchvision.datasets.MNIST(root="./data/", transform=transform, train=True, download=True)
    data_test = torchvision.datasets.MNIST(root="./data/", transform=transform, train=False, download=True)
    data_loader_train = torch.utils.data.DataLoader(dataset=data_train, batch_size=batch_size, shuffle=True)
    data_loader_test = torch.utils.data.DataLoader(dataset=data_test, batch_size=batch_size, shuffle=False)

    for epoch in range(max_epoch):
        running_loss = 0.0
        running_correct = 0
        start_time = time.time()
        print("Epoch {}/{}".format(epoch + 1, max_epoch))
        print("-" * 10)

        net.train()  # enable BatchNorm updates and dropout
        for images_train, data_label_train in data_loader_train:
            # Tensor.to() returns a new tensor, so the result must be assigned
            images_train = images_train.to(device)
            data_label_train = data_label_train.to(device)
            outputs = net(images_train)
            _, pred = torch.max(outputs.detach(), 1)
            my_optim.zero_grad()
            loss = my_loss(outputs, data_label_train)
            loss.backward()
            my_optim.step()
            # loss is the batch mean, so weight it by the batch size
            running_loss += loss.item() * images_train.size(0)
            running_correct += torch.sum(pred == data_label_train).item()

        testing_correct = 0
        net.eval()  # use running BatchNorm statistics, disable dropout
        with torch.no_grad():
            for images_test, data_label_test in data_loader_test:
                images_test = images_test.to(device)
                data_label_test = data_label_test.to(device)
                outputs = net(images_test)
                _, pred = torch.max(outputs, dim=1)
                testing_correct += torch.sum(pred == data_label_test).item()

        print("Loss is:{:.4f},Train Accuracy is:{:.4f}%,Test Accuracy is:{:.4f}%".format(
            running_loss / len(data_train),
            100 * running_correct / len(data_train),
            100 * testing_correct / len(data_test)))
        end_time = time.time()
        print("Elapsed time:", end_time - start_time)

    # Save option 2: keep only the parameters
    torch.save(net.state_dict(), "net_train.fsx")
    # Save option 1: save the whole model
    torch.save(net, "all_net.pth")

    # Predict a small random batch and display it
    data_loader_test = torch.utils.data.DataLoader(dataset=data_test, batch_size=4, shuffle=True)
    images_test, data_label_test = next(iter(data_loader_test))
    net.eval()
    with torch.no_grad():
        outputs = net(images_test.to(device))
    _, pred = torch.max(outputs, dim=1)
    print("Predict Label is:", pred.tolist())
    print("True labels:", data_label_test.tolist())

    img = torchvision.utils.make_grid(images_test)
    img = img.numpy().transpose(1, 2, 0)  # (C, H, W) -> (H, W, C) for matplotlib
    std = [0.5, 0.5, 0.5]
    mean = [0.5, 0.5, 0.5]
    img = img * std + mean  # undo Normalize for display
    plt.imshow(img)
    plt.show()
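
A note on the loss that is printed per epoch: `torch.nn.CrossEntropyLoss` returns the mean over the batch by default, so if the goal is a per-sample average over the whole dataset, each batch loss needs to be weighted by its batch size before dividing by the number of samples. The sketch below shows only that arithmetic in plain Python. `epoch_mean_loss` is a hypothetical helper and the numbers are made up for illustration.

```python
def epoch_mean_loss(batch_mean_losses, batch_sizes):
    """Combine per-batch mean losses into one per-sample mean for the epoch."""
    total = sum(loss * n for loss, n in zip(batch_mean_losses, batch_sizes))
    return total / sum(batch_sizes)


# Made-up values: two full batches of 20 samples and a final batch of 10
print(epoch_mean_loss([0.5, 0.3, 0.1], [20, 20, 10]))
```

Summing the batch means directly and dividing by the dataset size would instead give a value that is too small by roughly a factor of the batch size.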

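The handwritten digit images are black and white, i.e. they have a single channel, while DenseNet usually processes color images. So remember to change `in_channels=3` to `in_channels=1` in DenseNet.py; it is in the first `Conv2d` of `self.features`, at roughly line 61 of my file.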

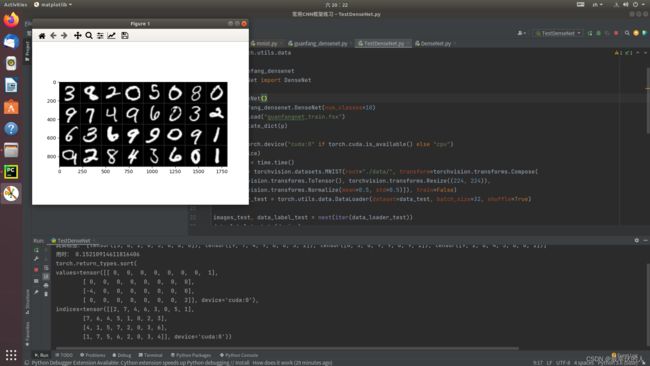

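DenseNet is usually used for large projects, and in this experiment it runs very slowly: on my computer one complete training pass takes about 10 minutes, so it is not efficient. The accuracy is not high either, only around 85% (typical models today are extremely accurate on this task, close to 100%). Here are two result images:

In this image the accuracy is not high: the matrix printed on the command line contains nonzero values. The printed matrix is the true labels minus the predicted labels, so any nonzero element means that sample was misclassified.

In this one everything was recognized correctly: every value in the matrix is 0.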
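
The code that prints this difference matrix is not shown in the article, so the following is only a minimal sketch of the idea in plain Python. `label_differences` and `count_errors` are hypothetical helpers, and the labels are made-up values for a batch of 4.

```python
def label_differences(true_labels, predicted_labels):
    """Element-wise true minus predicted; 0 means the sample was classified correctly."""
    return [t - p for t, p in zip(true_labels, predicted_labels)]


def count_errors(differences):
    """Number of nonzero entries, i.e. misclassified samples."""
    return sum(1 for d in differences if d != 0)


# Made-up labels for a batch of 4 images
true_labels = [7, 2, 1, 0]
predicted_labels = [7, 2, 7, 0]
diff = label_differences(true_labels, predicted_labels)
print(diff)                # [0, 0, -6, 0]
print(count_errors(diff))  # 1
```

With PyTorch tensors the same check could be written as a subtraction of the two label tensors, followed by counting the nonzero entries.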

This article was written in a hurry and may have many shortcomings. Corrections are welcome.