io_uring: the new I/O framework in recent Linux kernels

Copied from another blogger:

1 io_uring is a newer asynchronous I/O interface in the Linux kernel, with the following characteristics:

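High performance: compared with traditional I/O multiplexing mechanisms such as select/poll/epoll, io_uring is built on ring buffers, which lets it deliver higher throughput and lower latency when handling a large number of concurrent I/O requests.

Asynchronous: io_uring supports asynchronous I/O operations. The submission and completion rings live in memory that is mapped into both user space and the kernel, so request and completion entries do not have to be copied between the two, which reduces CPU overhead.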

Batching: io_uring can submit multiple I/O operations with a single system call, which reduces the number of system calls and the cost of context switches.

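Flexibility: io_uring offers a flexible interface and many configuration options that can be tuned to the application's needs. The copied description also lists support for multi-threaded operation and signal-driven I/O.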
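To make the ring buffer and batching ideas above concrete, here is a toy sketch in plain C. It is not the kernel's real data layout: `toy_ring`, `toy_push` and `toy_enter` are made-up names, and the "kernel" side is only simulated by a function that drains the ring. The point is that queuing requests only moves a tail index, and a single "enter" call can consume everything queued so far.

```c
#include <assert.h>
#include <stddef.h>

#define RING_SIZE 8u               /* power of two, so (index & mask) wraps */
#define RING_MASK (RING_SIZE - 1u)

/* Toy request descriptor; a real SQE also carries opcode, buffer, etc. */
struct toy_req { int fd; int op; };

struct toy_ring {
    struct toy_req slots[RING_SIZE];
    unsigned head;   /* advanced by the consumer (the simulated kernel) */
    unsigned tail;   /* advanced by the producer (the application) */
    unsigned enters; /* number of simulated "system calls" made so far */
};

/* Producer side: queue one request. No "system call" happens here. */
int toy_push(struct toy_ring *r, struct toy_req req) {
    assert(r != NULL);
    if (r->tail - r->head == RING_SIZE)
        return -1;                        /* ring is full */
    r->slots[r->tail & RING_MASK] = req;
    r->tail++;
    return 0;
}

/* Consumer side: one simulated "enter" drains every queued request. */
unsigned toy_enter(struct toy_ring *r) {
    unsigned done = 0;
    assert(r != NULL);
    r->enters++;
    while (r->head != r->tail) {
        /* a real kernel would start the I/O described by this slot */
        r->head++;
        done++;
    }
    return done;
}
```

Pushing five requests and then calling `toy_enter` once processes all five while `enters` is only 1, which is the saving that batching is meant to provide.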

2 Installation

Make sure the system kernel is version 5.10 or later. You also need liburing, which relies on three new kernel system calls: io_uring_setup, io_uring_register and io_uring_enter.
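The kernel version requirement can be checked with `uname -r`, or from C as in the rough sketch below. The helper names `release_at_least` and `running_kernel_ok` are made up for this example, and the parsing assumes the release string starts with `major.minor` (for example `5.15.0-91-generic`).

```c
#include <assert.h>
#include <stdio.h>
#include <sys/utsname.h>

/* Returns 1 if a release string such as "5.15.0-91-generic" is at least
 * major.minor, 0 if it is older, and -1 if it cannot be parsed. */
int release_at_least(const char *release, int major, int minor) {
    int maj = 0, min = 0;
    assert(release != NULL);
    if (sscanf(release, "%d.%d", &maj, &min) != 2)
        return -1;
    if (maj != major)
        return maj > major;
    return min >= minor;
}

/* Checks the running kernel against the 5.10 threshold used in this post.
 * Returns 1 (new enough), 0 (too old) or -1 (could not determine). */
int running_kernel_ok(void) {
    struct utsname u;
    if (uname(&u) != 0)
        return -1;
    return release_at_least(u.release, 5, 10);
}
```

Calling `running_kernel_ok()` at the start of a program gives an early, readable failure instead of an obscure error from the ring setup call.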

Install the liburing library, which wraps these three new system calls:

git clone https://github.com/axboe/liburing.git

cd liburing/

./configure

make && make install

GitHub was not reachable from my machine, so I used a mirror hosted in China instead:

git clone https://gitee.com/anolis/liburing.git

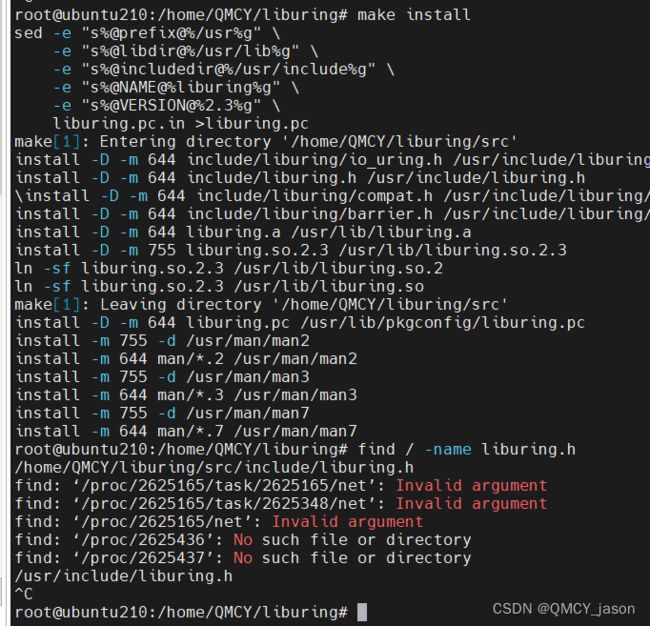

The output of make install shows that liburing.h, the static library and the shared library are copied into the system directories.

Write a test program:

#include <stdio.h>

#include <string.h>

#include <errno.h>

#include <unistd.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <liburing.h>

#define ENTRIES_LENGTH 1024

enum {

EVENT_ACCEPT = 0,

EVENT_READ,

EVENT_WRITE

};

typedef struct _conninfo {

int connfd;

int event;

} conninfo;

// sizeof(conninfo) == 8, so it fits exactly into the 64-bit sqe->user_data field

// 0, 1, 2

// 3, 4, 5

void set_send_event(struct io_uring *ring, int sockfd, void *buf, size_t len, int flags) {

struct io_uring_sqe *sqe = io_uring_get_sqe(ring);

io_uring_prep_send(sqe, sockfd, buf, len, flags);

conninfo info_send = {

.connfd = sockfd,

.event = EVENT_WRITE,

};

memcpy(&sqe->user_data, &info_send, sizeof(info_send));

}

void set_recv_event(struct io_uring *ring, int sockfd, void *buf, size_t len, int flags) {

struct io_uring_sqe *sqe = io_uring_get_sqe(ring);

io_uring_prep_recv(sqe, sockfd, buf, len, flags);

conninfo info_recv = {

.connfd = sockfd,

.event = EVENT_READ,

};

memcpy(&sqe->user_data, &info_recv, sizeof(info_recv));

}

void set_accept_event(struct io_uring *ring, int sockfd, struct sockaddr *addr,

socklen_t *addrlen, int flags) {

struct io_uring_sqe *sqe = io_uring_get_sqe(ring);

io_uring_prep_accept(sqe, sockfd, addr, addrlen, flags);

conninfo info_accept = {

.connfd = sockfd,

.event = EVENT_ACCEPT,

};

memcpy(&sqe->user_data, &info_accept, sizeof(info_accept));

}

int main() {

int sockfd = socket(AF_INET, SOCK_STREAM, 0); // io

struct sockaddr_in servaddr;

memset(&servaddr, 0, sizeof(struct sockaddr_in)); // 192.168.2.123

servaddr.sin_family = AF_INET;

servaddr.sin_addr.s_addr = htonl(INADDR_ANY); // 0.0.0.0

servaddr.sin_port = htons(9999);

if (-1 == bind(sockfd, (struct sockaddr*)&servaddr, sizeof(servaddr))) {

printf("bind failed: %s\n", strerror(errno));

return -1;

}

listen(sockfd, 10);

//liburing

struct io_uring_params params;

memset(&params, 0, sizeof(params));

struct io_uring ring;

io_uring_queue_init_params(ENTRIES_LENGTH, &ring, &params);

// No SQE is fetched here: set_accept_event() gets its own, and an SQE that is

// fetched but never prepared would still be submitted with user_data 0.

struct sockaddr_in clientaddr;

socklen_t clilen = sizeof(clientaddr);

set_accept_event(&ring, sockfd, (struct sockaddr*)&clientaddr, &clilen, 0);

char buffer[1024] = {0};

while (1) {

io_uring_submit(&ring);

struct io_uring_cqe *cqe;

io_uring_wait_cqe(&ring, &cqe);

struct io_uring_cqe *cqes[10];

int cqecount = io_uring_peek_batch_cqe(&ring, cqes, 10);

//printf("cqecount --> %d\n", cqecount);

int i = 0;

for (i = 0;i < cqecount;i ++) {

cqe = cqes[i];

conninfo ci;

memcpy(&ci, &cqe->user_data, sizeof(ci));

if (ci.event == EVENT_ACCEPT) { // recv/send

if (cqe->res < 0) continue;

int connfd = cqe->res;

//printf("accept --> %d\n", connfd);

set_accept_event(&ring, ci.connfd, (struct sockaddr*)&clientaddr, &clilen, 0);

set_recv_event(&ring, connfd, buffer, 1024, 0);

} else if (ci.event == EVENT_READ) {

if (cqe->res < 0) continue;

if (cqe->res == 0) {

close(ci.connfd);

} else {

// buffer is not NUL-terminated, so print exactly cqe->res bytes

printf("recv --> %.*s, %d\n", cqe->res, buffer, cqe->res);

//set_recv_event(&ring, ci.connfd, buffer, 1024, 0);

set_send_event(&ring, ci.connfd, buffer, cqe->res, 0);

}

} else if (ci.event == EVENT_WRITE) { //

//printf("write complete\n");

set_recv_event(&ring, ci.connfd, buffer, 1024, 0);

}

}

io_uring_cq_advance(&ring, cqecount);

}

getchar();

}
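The program above relies on conninfo being exactly 8 bytes so that it can be copied into the 64-bit user_data field and read back from the completion. The standalone sketch below isolates that trick. The helpers `pack_conninfo` and `unpack_conninfo` are illustrative names, not part of liburing, and the struct is repeated so that the snippet compiles on its own.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    int connfd;  /* socket the request belongs to */
    int event;   /* 0 = accept, 1 = read, 2 = write, as in the enum above */
} conninfo;

/* user_data is a 64-bit field, so the struct has to be exactly 8 bytes. */
_Static_assert(sizeof(conninfo) == sizeof(uint64_t),
               "conninfo must fit into user_data");

/* Copy the struct into a 64-bit tag, as done with sqe->user_data. */
uint64_t pack_conninfo(int connfd, int event) {
    conninfo ci = { .connfd = connfd, .event = event };
    uint64_t tag;
    memcpy(&tag, &ci, sizeof(tag));
    return tag;
}

/* Recover the struct from a tag, as done with cqe->user_data. */
conninfo unpack_conninfo(uint64_t tag) {
    conninfo ci;
    memcpy(&ci, &tag, sizeof(ci));
    return ci;
}
```

One consequence worth keeping in mind: a user_data of 0 unpacks to connfd 0 with event 0, which the program interprets as an accept completion on file descriptor 0. Any request submitted without setting user_data would therefore be mistaken for an accept.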

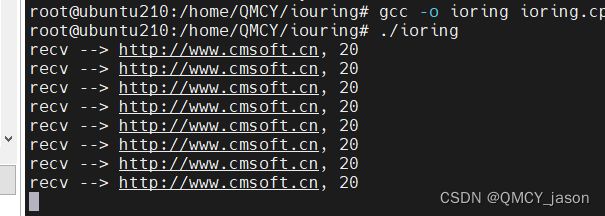

Compile the program:

gcc -o ioring ioring.cpp -luring -static

Once the program is running, use a network debugging tool to send it some test text.
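If no GUI debugging tool is at hand, a few lines of C can play the same role. This is only a sketch: `echo_once` is a name invented here, it assumes the server listens on an IPv4 address, and it performs a single send followed by a single recv, which is enough for short test strings.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Sends msg to host:port and reads one reply into out (NUL-terminated).
 * Returns the number of bytes received, or -1 on any failure. */
ssize_t echo_once(const char *host, int port, const char *msg,
                  char *out, size_t outlen) {
    assert(outlen > 0);
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_port = htons((uint16_t)port);

    /* Fail early on a malformed address or an unreachable server. */
    if (inet_pton(AF_INET, host, &addr.sin_addr) != 1 ||
        connect(fd, (struct sockaddr *)&addr, sizeof(addr)) != 0) {
        close(fd);
        return -1;
    }

    ssize_t n = -1;
    size_t len = strlen(msg);
    if (send(fd, msg, len, 0) == (ssize_t)len)
        n = recv(fd, out, outlen - 1, 0);   /* leave room for the NUL */
    if (n >= 0)
        out[n] = '\0';
    close(fd);
    return n;
}
```

With the server from this post running locally, calling `echo_once("127.0.0.1", 9999, "hello", buf, sizeof(buf))` is expected to fill `buf` with the echoed text, since the server sends back whatever it receives.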

Just noting this down for now.