Building a BP Neural Network

1. Downloading the dataset

2. C++ code

BP.h

#ifndef BP_H_INCLUDED

#define BP_H_INCLUDED

const int INPUT_LAYER = 784; // input layer dimension

const int HIDDEN_LAYER = 40; // hidden layer dimension

const int OUTPUT_LAYER = 10; // output layer dimension

const double LEARN_RATE = 0.3; // learning rate

const int TRAIN_TIMES = 10; // number of training iterations

class BP

{

private:

int input_array[INPUT_LAYER]; // input vector

int aim_array[OUTPUT_LAYER]; // target output

double weight1_array[INPUT_LAYER][HIDDEN_LAYER]; // weights between the input and hidden layers

double weight2_array[HIDDEN_LAYER][OUTPUT_LAYER]; // weights between the hidden and output layers

double output1_array[HIDDEN_LAYER]; // hidden layer output

double output2_array[OUTPUT_LAYER]; // output layer output

double deviation1_array[HIDDEN_LAYER]; // hidden layer error

double deviation2_array[OUTPUT_LAYER]; // output layer error

double threshold1_array[HIDDEN_LAYER]; // hidden layer thresholds

double threshold2_array[OUTPUT_LAYER]; // output layer thresholds

public:

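void Init(); // initialize all parameters

double Sigmoid(double x); // sigmoid activation function

void GetOutput1(); // compute the hidden layer output

void GetOutput2(); // compute the output layer output

void GetDeviation1(); // compute the hidden layer error

void GetDeviation2(); // compute the output layer error

void Feedback1(); // update the weights between the input and hidden layers

void Feedback2(); // update the weights between the hidden and output layers

void Train(); // training

void Test(); // testing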

};

#endif // BP_H_INCLUDED
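The header only declares the forward and backward steps, so here is a minimal standalone sketch of what functions such as `Sigmoid`, `GetOutput1`/`GetOutput2`, and `GetDeviation1`/`GetDeviation2` typically compute. It is not the original BP.cpp: the free functions `Forward`, `OutputDelta`, and `HiddenDelta` are hypothetical names, and the sketch assumes the thresholds act as additive bias terms and that the loss is squared error with sigmoid units.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Logistic sigmoid, playing the role of BP::Sigmoid in the header.
double Sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// One fully connected layer: out[j] = Sigmoid(bias[j] + sum_i in[i] * w[i][j]).
// Assumption: the "threshold" arrays are added to the weighted sum as biases.
template <std::size_t In, std::size_t Out>
std::array<double, Out> Forward(
    const std::array<double, In>& in,
    const std::array<std::array<double, Out>, In>& w,
    const std::array<double, Out>& bias) {
    std::array<double, Out> out{};
    for (std::size_t j = 0; j < Out; ++j) {
        double sum = bias[j];
        for (std::size_t i = 0; i < In; ++i) sum += in[i] * w[i][j];
        out[j] = Sigmoid(sum);
    }
    return out;
}

// Output-layer error term (assumed squared-error loss, sigmoid units):
// delta[k] = (target[k] - out[k]) * out[k] * (1 - out[k]).
template <std::size_t Out>
std::array<double, Out> OutputDelta(const std::array<double, Out>& out,
                                    const std::array<double, Out>& target) {
    std::array<double, Out> delta{};
    for (std::size_t k = 0; k < Out; ++k)
        delta[k] = (target[k] - out[k]) * out[k] * (1.0 - out[k]);
    return delta;
}

// Hidden-layer error term, propagated back through the second weight matrix:
// delta_h[j] = hidden[j] * (1 - hidden[j]) * sum_k w2[j][k] * delta_out[k].
template <std::size_t Hid, std::size_t Out>
std::array<double, Hid> HiddenDelta(
    const std::array<double, Hid>& hidden,
    const std::array<std::array<double, Out>, Hid>& w2,
    const std::array<double, Out>& delta_out) {
    std::array<double, Hid> delta{};
    for (std::size_t j = 0; j < Hid; ++j) {
        double sum = 0.0;
        for (std::size_t k = 0; k < Out; ++k) sum += w2[j][k] * delta_out[k];
        delta[j] = hidden[j] * (1.0 - hidden[j]) * sum;
    }
    return delta;
}
```

With these error terms, a weight update of the kind `Feedback1` and `Feedback2` suggest would add `LEARN_RATE * delta[j] * input[i]` to each weight `w[i][j]`; whether the original code uses exactly this sign convention is an assumption.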

BP.cpp

#include

Measuring the time taken by 10 training iterations: add a timer to the main() function using std::chrono::high_resolution_clock.
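A timer of this kind can be sketched as a small helper built on `std::chrono::high_resolution_clock`. The name `TimeSeconds` is hypothetical and is not taken from the original main(); it simply wraps whatever work is passed in and returns the elapsed wall-clock time.

```cpp
#include <chrono>

// Runs `work` once and returns the elapsed wall-clock time in seconds.
// TimeSeconds is an illustrative helper name, not part of the original code.
template <typename Work>
double TimeSeconds(Work&& work) {
    const auto start = std::chrono::high_resolution_clock::now();
    work();  // the code being measured, e.g. the training loop
    const auto stop = std::chrono::high_resolution_clock::now();
    // duration<double> defaults to seconds, so count() yields fractional seconds.
    return std::chrono::duration<double>(stop - start).count();
}
```

In main() it might be used as `double seconds = TimeSeconds([&] { bp.Train(); });`, assuming a `BP` object named `bp`. On some standard libraries `high_resolution_clock` is an alias for `system_clock`, which can be adjusted while the program runs, so `std::chrono::steady_clock` is often the safer choice for measuring intervals.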

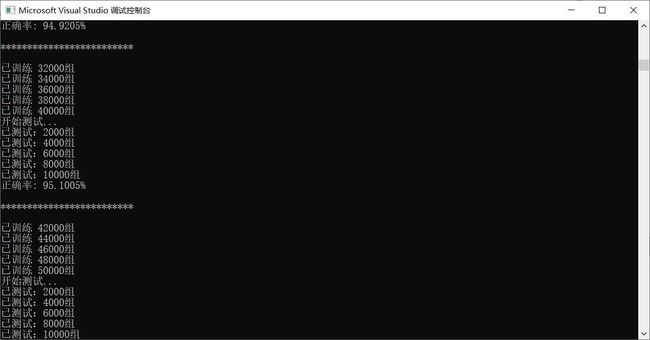

| Learning rate | 0.1 | 0.2 | 0.3 |
|---|---|---|---|
| Accuracy | 94.48% | 95.02% | 95.15% |
| Time | 717.224 s | 869.209 s | 725.855 s |

The gains in accuracy gradually slow down, and the program's running time has to grow accordingly.