kubernetes (k8s) multi-master cluster and load balancer deployment


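Contents

- 1. Multi-master cluster architecture

- 2. Deploying multiple master nodes

- 2.1 Copying key files from master01 to master02

- 3. Setting up nginx load balancing

- 3.1 Installing nginx

- 3.2 Setting up keepalived for high availability

- 3.3 Pointing the node configuration files at the VIP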

1. Multi-master cluster architecture

IP address plan for my virtual machines:

Master nodes

master01:192.168.126.10

master02:192.168.126.40

VIP address:192.168.126.88

Worker nodes

node01:192.168.126.20

node02:192.168.126.30

Load balancers

Nginx01:192.168.126.50 master

Nginx02:192.168.126.60 backup

- This post builds a multi-master cluster and LB (load balancer) servers on top of an existing single-master K8s cluster.

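2. Deploying multiple master nodes

The multi-master setup is built on top of the single-master deployment; the detailed single-master steps are in an earlier post.

2.1 Copying key files from master01 to master02

First, stop the firewall and disable SELinux.

- On master01

Copy the kubernetes directory to master02: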

[root@localhost k8s]# scp -r /opt/kubernetes/ root@192.168.126.40:/opt

- Copy the systemd unit files of the three master components (kube-apiserver.service, kube-controller-manager.service, kube-scheduler.service):

[root@localhost k8s]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.126.40:/usr/lib/systemd/system/

- On master02

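Edit the IP addresses in the kube-apiserver configuration file: set --bind-address and --advertise-address to master02's own IP (192.168.126.40). Leave --etcd-servers as copied from master01, since both masters use the same etcd cluster. Do not put comments after the trailing backslashes in the real file; anything after a "\" ends up in the option string passed to kube-apiserver.

[root@localhost ~]# cd /opt/kubernetes/cfg/

[root@localhost cfg]# vim kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.195.149:2379,https://192.168.195.150:2379,https://192.168.195.151:2379 \

--bind-address=192.168.126.40 \

--secure-port=6443 \

--advertise-address=192.168.126.40 \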

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,No
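Instead of editing the two flags by hand in vim, they can be rewritten with sed. A minimal sketch, assuming GNU sed (as shipped with CentOS 7) and the file layout shown above; set_apiserver_ip is a hypothetical helper name. It changes only the address after --bind-address and --advertise-address and leaves --etcd-servers alone.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set --bind-address and --advertise-address to this host's IP.
# Usage: set_apiserver_ip [IP] [FILE]; defaults are master02's IP and the cfg path above.
set_apiserver_ip() {
  local ip="${1:-192.168.126.40}"
  local file="${2:-/opt/kubernetes/cfg/kube-apiserver}"
  cp "$file" "${file}.bak" || return 1   # keep a copy of the original file
  # GNU sed, extended regex: replace only the IPv4 address after each flag
  sed -i -E \
    -e "s#(--bind-address=)[0-9.]+#\1${ip}#" \
    -e "s#(--advertise-address=)[0-9.]+#\1${ip}#" \
    "$file"
}
```

On master02, running set_apiserver_ip with no arguments applies this post's values; a .bak copy of the original file is left next to it.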

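- Important: master02 must have the etcd certificates

kube-apiserver on master02 stores all cluster state (including everything exchanged with the nodes) in the etcd cluster, so every master needs the etcd certificates to communicate with etcd.

Copy the existing etcd certificates from master01 to master02 (run on master01):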

[root@localhost k8s]# scp -r /opt/etcd/ root@192.168.126.40:/opt/

root@192.168.126.40's password:
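The three copies made from master01 in this step (the kubernetes directory, the unit files and the etcd directory) can be collected into one function. A minimal sketch, assuming root SSH access to master02; copy_master_files, the MASTER2 variable and the DRY_RUN switch are hypothetical helpers, not part of the original procedure.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy master01's Kubernetes files to a second master.
# MASTER2 defaults to master02 from the IP plan; override it in the environment.
MASTER2="${MASTER2:-192.168.126.40}"

# With DRY_RUN=1, print each command instead of running it.
run_or_echo() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

copy_master_files() {
  local target="root@${MASTER2}" unit
  run_or_echo scp -r /opt/kubernetes/ "${target}:/opt"
  run_or_echo scp -r /opt/etcd/ "${target}:/opt/"
  for unit in kube-apiserver kube-controller-manager kube-scheduler; do
    run_or_echo scp "/usr/lib/systemd/system/${unit}.service" "${target}:/usr/lib/systemd/system/"
  done
}
```

Run DRY_RUN=1 copy_master_files first to review the commands, then copy_master_files to execute them; each scp asks for the root password unless SSH keys are set up.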

- Start the three component services on master02

[root@localhost cfg]# systemctl start kube-apiserver.service

[root@localhost cfg]# systemctl start kube-controller-manager.service

[root@localhost cfg]# systemctl start kube-scheduler.service
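The three start commands can also be written as a loop that additionally enables the units at boot (the original only starts them) and then checks that all three are active. A sketch; start_master_units and the SYSTEMCTL override are hypothetical, the override existing only so the loop can be previewed with SYSTEMCTL=echo.

```bash
#!/usr/bin/env bash
# Hypothetical helper: start, enable and check the three master components.
# SYSTEMCTL is left unquoted below on purpose, so "sudo systemctl" or "echo" also work.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"
MASTER_UNITS=(kube-apiserver kube-controller-manager kube-scheduler)

start_master_units() {
  local unit
  for unit in "${MASTER_UNITS[@]}"; do
    $SYSTEMCTL start "${unit}.service" || return 1
    $SYSTEMCTL enable "${unit}.service" || return 1   # start on boot as well
  done
  # is-active exits non-zero if any listed unit is not running
  $SYSTEMCTL is-active "${MASTER_UNITS[@]/%/.service}"
}
```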

- Add the Kubernetes binaries to PATH so kubectl can be run directly

[root@localhost cfg]# vim /etc/profile

# append at the end of the file

export PATH=$PATH:/opt/kubernetes/bin/

[root@localhost cfg]# source /etc/profile
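Appending with vim works once; if this step is scripted or repeated, a guarded append avoids duplicate PATH entries. A sketch; add_kube_path is a hypothetical helper that defaults to /etc/profile.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the PATH line only if it is not already present.
add_kube_path() {
  local profile="${1:-/etc/profile}"
  local line='export PATH=$PATH:/opt/kubernetes/bin/'   # single quotes: keep $PATH literal
  # -x: match the whole line, -F: fixed string (so "$" is not a regex anchor)
  grep -qxF "$line" "$profile" 2>/dev/null || echo "$line" >> "$profile"
}
```

After add_kube_path, run source /etc/profile as above to load the new PATH into the current shell.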

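- Verify that master02 works: kubectl on master02 talks to its local kube-apiserver, so listing the same nodes as on master01 shows that master02 reads the cluster state from etcd (the masters themselves do not run kubelet here, so they are not in the node list)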

[root@localhost cfg]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.195.150 Ready <none> 2d12h v1.12.3

192.168.195.151 Ready <none> 38h v1.12.3
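To check the STATUS column mechanically rather than by eye, a small awk filter can be used. A sketch that assumes the default kubectl get node column layout (NAME first, STATUS second); check_nodes_ready is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node` output on stdin and exit
# non-zero if any node's STATUS does not start with "Ready".
check_nodes_ready() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print "not ready: " $1; bad = 1 }
       END { exit bad + 0 }'
}
```

For example, kubectl get node | check_nodes_ready && echo "all nodes Ready", run on both masters.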

3. Setting up nginx load balancing

3.1 Installing nginx

Prepare two VMs to build a highly available nginx cluster.

Load balancers (identical steps on both):

Nginx01:192.168.126.50 master

Nginx02:192.168.126.60 backup

- Install nginx: first copy the nginx.sh script and the keepalived.conf file to the home directory

[root@localhost ~]#ls

anaconda-ks.cfg initial-setup-ks.cfg keepalived.conf nginx.sh original-ks.cfg yum.sh 公共 模板 视频 图片 文档 下载 音乐 桌面

- Stop the firewall and put SELinux into permissive mode

[root@localhost ~]# systemctl stop firewalld.service

[root@localhost ~]# setenforce 0

- Add the official nginx yum repository

Create the repo file:

[root@localhost ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

[root@localhost ~]# yum list

[root@localhost ~]# yum install nginx -y    # install nginx

- Next, configure nginx layer-4 (TCP) load balancing in the configuration file

[root@localhost ~]# vim /etc/nginx/nginx.conf

events {

worker_connections 1024;

} # add the following below this block (at the top level, outside the http block)

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.126.10:6443; # master01

server 192.168.126.40:6443; # master02

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}
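Because the upstream list must match the master IPs, the stream block can be generated from the address plan rather than typed by hand. A sketch; gen_stream_conf is a hypothetical helper that only prints the block, leaving it to you to place it at the top level of /etc/nginx/nginx.conf.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the nginx stream block for the given master IPs.
# Usage: gen_stream_conf IP [IP...]
gen_stream_conf() {
  local port=6443 ip
  echo 'stream {'
  # \$ keeps nginx variables literal inside the double-quoted string
  echo "    log_format main '\$remote_addr \$upstream_addr - [\$time_local] \$status \$upstream_bytes_sent';"
  echo '    access_log /var/log/nginx/k8s-access.log main;'
  echo '    upstream k8s-apiserver {'
  for ip in "$@"; do
    echo "        server ${ip}:${port};"
  done
  echo '    }'
  echo '    server {'
  echo "        listen ${port};"
  echo '        proxy_pass k8s-apiserver;'
  echo '    }'
  echo '}'
}
```

For example, gen_stream_conf 192.168.126.10 192.168.126.40 prints the block used in this post; run nginx -t after pasting it in.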

[root@localhost ~]# nginx -t    # check the configuration file for syntax errors

[root@localhost ~]# systemctl start nginx    # start nginx

[root@localhost ~]# netstat -natp | grep nginx    # port 6443 must be in the LISTEN state

3.2 Setting up keepalived for high availability

- Install keepalived

[root@localhost ~]# yum install keepalived -y

- Edit the configuration file

cp keepalived.conf /etc/keepalived/keepalived.conf

vim /etc/keepalived/keepalived.conf

Note: nginx01 is the MASTER; its configuration is as follows (note the differences between the MASTER and BACKUP configurations):

! Configuration File for keepalived

global_defs {

# notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# notification sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh" # path of the health-check script

}

vrrp_instance VI_1 {

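state MASTER # initial state: MASTER

interface ens33 # network interface; change to your NIC name (ens33 here)

virtual_router_id 51 # VRRP router ID; must match on MASTER and BACKUP and be unique per VRRP instance

priority 100 # priority; the backup server uses 90

advert_int 1 # VRRP advertisement (heartbeat) interval in seconds; default 1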

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.126.88/24 # the VIP planned earlier

}

track_script {

check_nginx

}

}

Note: nginx02 is the BACKUP; its configuration is as follows:

! Configuration File for keepalived

global_defs {

# notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# notification sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP # identifies this host in logs and notification emails

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh" # path of the health-check script

}

vrrp_instance VI_1 {

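state BACKUP # backup server

interface ens33 # network interface ens33

virtual_router_id 51 # VRRP router ID; must match on MASTER and BACKUP and be unique per VRRP instance

priority 90 # priority; lower than the master's 100

advert_int 1 # VRRP advertisement (heartbeat) interval in seconds; default 1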

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.126.88/24 # the VIP

}

track_script {

check_nginx

}

}
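The vrrp_instance blocks of the MASTER and BACKUP configurations above differ only in state and priority. A sketch that renders the block for either role so the two copies cannot drift apart; gen_vrrp_instance is hypothetical, and its defaults (ens33, 192.168.126.88/24, router ID 51, password 1111) are this post's values.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the vrrp_instance block for MASTER or BACKUP.
# Usage: gen_vrrp_instance ROLE [INTERFACE] [VIP/PREFIX]
gen_vrrp_instance() {
  local role="$1"
  local iface="${2:-ens33}"
  local vip="${3:-192.168.126.88/24}"
  local priority
  case "$role" in
    MASTER) priority=100 ;;
    BACKUP) priority=90 ;;
    *) echo "role must be MASTER or BACKUP" >&2; return 1 ;;
  esac
  cat <<EOF
vrrp_instance VI_1 {
    state ${role}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

For example, use gen_vrrp_instance MASTER on nginx01 and gen_vrrp_instance BACKUP on nginx02; global_defs and vrrp_script stay as shown above.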

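- Create the health-check script (if no nginx process is left, it stops keepalived so the VIP moves to the other load balancer)

[root@localhost ~]# vim /etc/nginx/check_nginx.sh

#!/bin/bash

# count nginx processes, excluding grep and any line containing this script's PID ($$)

count=$(ps -ef | grep nginx | grep -Ecv "grep|$$")

if [ "$count" -eq 0 ]; then

systemctl stop keepalived

fi

[root@localhost ~]# chmod +x /etc/nginx/check_nginx.sh    # make it executable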
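The ps | grep pipeline has to filter out grep and the script itself, because the script's path also contains "nginx". An alternative sketch (a swapped-in technique, not the original script) compares the exact command name in /proc/<pid>/comm, which is Linux-specific; process_running is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper for /etc/nginx/check_nginx.sh (alternative to ps | grep).
# process_running NAME succeeds if some process's command name is exactly NAME.
# /proc/<pid>/comm holds the executable name, so this script (run by bash)
# is never counted as "nginx" even though its path contains that word.
process_running() {
  local f name
  for f in /proc/[0-9]*/comm; do
    # A process may exit between the glob and the read; skip it quietly.
    { read -r name < "$f"; } 2>/dev/null || continue
    [ "$name" = "$1" ] && return 0
  done
  return 1
}
```

With this helper, the body of the check script would become process_running nginx || systemctl stop keepalived.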

- Start keepalived

[root@localhost ~]# systemctl start keepalived

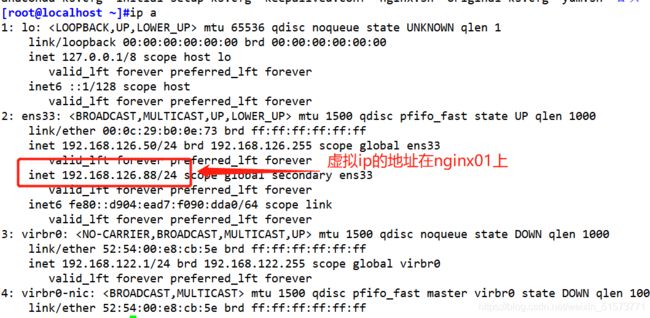

- Check the addresses on nginx01 (the VIP 192.168.126.88 should be bound to ens33)

[root@localhost ~]# ip a

- Check the addresses on nginx02 (the VIP should not be present while nginx01 is healthy)

[root@localhost nginx]# ip a

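Verify VIP failover: run pkill nginx on nginx01, then run ip a on nginx02; the VIP should now appear there.

Recovery: on nginx01, start nginx first and then keepalived; because nginx01 has the higher priority, the VIP moves back to it.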
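To check the failover from a script rather than reading ip a by eye, the one-line (-o) output of ip can be filtered for the VIP. A sketch; holds_vip is a hypothetical helper that reads ip -o -4 addr show output on stdin and defaults to the VIP 192.168.126.88.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the VIP appears in `ip -o -4 addr show` output.
holds_vip() {
  local vip="${1:-192.168.126.88}"
  # In `ip -o` output, field 4 is ADDRESS/PREFIX
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 }
                     END { exit !found }'
}
```

For example, on nginx02 after pkill nginx on nginx01: ip -o -4 addr show dev ens33 | holds_vip && echo "VIP is on this host".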

The default nginx site root is /usr/share/nginx/html.

3.3 Pointing the node configuration files at the VIP

On both node01 and node02, edit three files (bootstrap.kubeconfig, kubelet.kubeconfig and kube-proxy.kubeconfig):

[root@localhost cfg]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

[root@localhost cfg]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

[root@localhost cfg]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

- Change the server address in all three files to the VIP:

server: https://192.168.126.88:6443

[root@localhost cfg]# systemctl restart kubelet.service

[root@localhost cfg]# systemctl enable kubelet.service

[root@localhost cfg]# systemctl restart kube-proxy.service

[root@localhost cfg]# systemctl enable kube-proxy.service
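The three edits can also be made with sed, which avoids typos in the address. A sketch, assuming GNU sed and the default /opt/kubernetes/cfg directory; set_kubeconfig_server is hypothetical. It replaces the whole value after server:, keeps a .bak copy of each file and prints the resulting server lines; kubelet and kube-proxy still need the restarts shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point the server: line of the three kubeconfigs at the VIP.
# Usage: set_kubeconfig_server [DIR] [URL]
set_kubeconfig_server() {
  local dir="${1:-/opt/kubernetes/cfg}"
  local url="${2:-https://192.168.126.88:6443}"
  local f
  for f in "$dir"/bootstrap.kubeconfig "$dir"/kubelet.kubeconfig "$dir"/kube-proxy.kubeconfig; do
    # keep the original indentation, replace only the value after "server:"
    sed -i.bak -E "s#^([[:space:]]*server:).*#\1 ${url}#" "$f"
  done
  grep 'server:' "$dir"/*.kubeconfig   # show the result for a quick check
}
```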

- After the replacement, check the result:

[root@localhost cfg]# grep 'server:' *.kubeconfig

bootstrap.kubeconfig: server: https://192.168.126.88:6443

kubelet.kubeconfig: server: https://192.168.126.88:6443

kube-proxy.kubeconfig: server: https://192.168.126.88:6443

- On nginx01, check the k8s access log written by nginx


[root@localhost ~]#tail /var/log/nginx/k8s-access.log

192.168.126.20 192.168.126.40:6443 - [14/Apr/2021:18:19:17 +0800] 200 1120

192.168.126.20 192.168.126.10:6443 - [14/Apr/2021:18:19:17 +0800] 200 1121 # round-robin: requests are spread across both masters
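With more traffic, counting requests per upstream is easier than reading the raw log. A sketch that relies on the log_format defined in nginx.conf above, where the second field is $upstream_addr; count_upstreams is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count requests per upstream apiserver in the stream access log.
# Field 2 is $upstream_addr in this post's log_format.
count_upstreams() {
  awk '{ n[$2]++ } END { for (u in n) print u, n[u] }' \
    "${1:-/var/log/nginx/k8s-access.log}" | sort
}
```

Roughly equal counts for 192.168.126.10:6443 and 192.168.126.40:6443 indicate that both masters receive traffic.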

- On master01

Create a test pod:

[root@localhost ~]# kubectl run nginx --image=nginx

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx created

Check its status:

[root@localhost ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-nf9sk 0/1 ContainerCreating 0 33s // still being created

[root@localhost ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-nf9sk 1/1 Running 0 80s // created and running

- Note: a permission error when viewing logs

[root@localhost ~]# kubectl logs nginx-dbddb74b8-nf9sk

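Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-nf9sk)

The kubelet sees this request as coming from user system:anonymous, which has no permission on nodes/proxy. Binding a cluster role to system:anonymous fixes it; the binding below uses cluster-admin, which gives anonymous requests full access to the cluster, so it is only appropriate for a test environment.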

[root@localhost ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

- Check the pod network

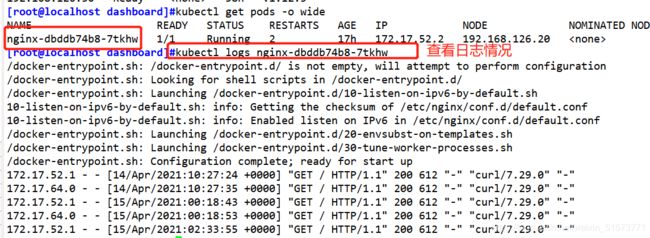

[root@localhost dashboard]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-7tkhw 1/1 Running 2 17h 172.17.52.2 192.168.126.20 <none>

- On the node where the pod runs (192.168.126.20, see the NODE column), the pod IP can be reached directly:

[root@localhost ~]#curl 172.17.52.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

- Each access produces a log entry

Back on master01:

[root@localhost ~]# kubectl logs nginx-dbddb74b8-7tkhw