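Setting up ELK with Docker and integrating it with Spring Boot

1. Environment setup:

Install Elasticsearch, Kibana, and Logstash.

In Docker, first pull the required images (for example `docker pull elasticsearch:6.8.8`), then start a container from each one.

Create and run an Elasticsearch container: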

```bash
# Elasticsearch 6.8.8
docker run -e ES_JAVA_OPTS="-Xms256m -Xmx256m" -d -p 9200:9200 -p 9300:9300 --name MyES elasticsearch:6.8.8

# Elasticsearch 7.6.2 additionally needs discovery.type=single-node to start
# (run only one of the two commands; both use the container name MyES)
docker run -e ES_JAVA_OPTS="-Xms256m -Xmx256m" -e discovery.type=single-node -d -p 9200:9200 -p 9300:9300 --name MyES elasticsearch:7.6.2
```

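Test it in a browser by opening http://127.0.0.1:9200. The response should look like the following (the `name` and `cluster_uuid` values will differ on your machine):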

```json
{
  "name": "WQawbNC",
  "cluster_name": "docker-cluster",
  "cluster_uuid": "f6QviESlT_e5u3kaZFHoWA",
  "version": {
    "number": "6.8.8",
    "build_flavor": "default",
    "build_type": "docker",
    "build_hash": "2f4c224",
    "build_date": "2020-03-18T23:22:18.622755Z",
    "build_snapshot": false,
    "lucene_version": "7.7.2",
    "minimum_wire_compatibility_version": "5.6.0",
    "minimum_index_compatibility_version": "5.0.0"
  },
  "tagline": "You Know, for Search"
}
```
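The browser check can also be scripted, which helps when waiting for the container to finish starting. Below is a minimal sketch using only the JDK (Java 8 compatible). It sends a GET to the root endpoint and extracts `version.number`. The class name `EsHealthCheck` is hypothetical. The regular expression is a shortcut, not a JSON parser, and assumes the response layout shown above.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical helper that scripts the browser check against Elasticsearch's root endpoint.
final class EsHealthCheck {

    // In the root response the only key literally named "number" is version.number.
    private static final Pattern VERSION_NUMBER =
            Pattern.compile("\"number\"\\s*:\\s*\"([^\"]+)\"");

    /** GETs baseUrl and returns version.number from the JSON body, e.g. "6.8.8". */
    static String esVersion(String baseUrl) {
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(baseUrl).openConnection();
            conn.setConnectTimeout(3000);
            conn.setReadTimeout(3000);
            int status = conn.getResponseCode();
            if (status != 200) {
                conn.disconnect();
                throw new IllegalStateException("Elasticsearch answered HTTP " + status);
            }
            String body;
            try (BufferedReader in = new BufferedReader(
                    new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
                body = in.lines().collect(Collectors.joining("\n"));
            } finally {
                conn.disconnect();
            }
            Matcher m = VERSION_NUMBER.matcher(body);
            if (!m.find()) {
                throw new IllegalStateException("No version number in response: " + body);
            }
            return m.group(1);
        } catch (IOException e) {
            // e.g. "Connection refused" while the container is still starting
            throw new UncheckedIOException(e);
        }
    }
}
```

Usage: `EsHealthCheck.esVersion("http://127.0.0.1:9200")` should return `"6.8.8"` for the 6.8.8 container.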

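Create and run a Kibana container:

Before creating it, look up the IP address of the ES container on the Docker network, because Kibana has to connect to ES when it starts.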

```bash
# First, use docker ps to find the ES container ID
docker ps

# Output:
CONTAINER ID   IMAGE                 COMMAND                  CREATED        STATUS        PORTS                                            NAMES
a266d1ff5c1b   elasticsearch:6.8.8   "/usr/local/bin/dock…"   19 hours ago   Up 18 hours   0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp   MyES

# Look up the container's IP address by its ID (a266d1ff5c1b above is the ES container ID;
# the container name MyES works as well)
docker inspect --format '{{ .NetworkSettings.IPAddress }}' a266d1ff5c1b

# Output:
172.17.0.3
```

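With the ES container's IP address in hand, create and run the Kibana container:

```bash
# Note: replace the address in ELASTICSEARCH_URL with the ES container IP found above,
# otherwise Kibana cannot reach ES (Kibana 7.x images read ELASTICSEARCH_HOSTS instead)
docker run -d --name MyKibana -p 5601:5601 -e ELASTICSEARCH_URL=http://172.17.0.3:9200 kibana:6.8.8
```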

Test it in a browser by opening http://127.0.0.1:5601.

Create and run a Logstash container:

```bash
docker run -d -p 9600:9600 -p 4560:4560 --name MyLogStash logstash:6.8.8
```

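Once it is running, open a shell inside the container and edit the logstash.yml configuration file:

```bash
# The container name MyLogStash can be used instead of the ID
docker exec -it <container ID> bash
cd config
vi logstash.yml
```

Change it to the following configuration:

```yaml
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.url: http://<ES IP address>:9200
xpack.monitoring.elasticsearch.username: elastic
xpack.monitoring.elasticsearch.password: changeme
```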

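Then edit the logstash.conf file in the `pipeline` directory (next to the `config` directory):

```conf
input {
  tcp {
    # Run in server mode, i.e. listen for incoming connections
    mode => "server"
    # Set host and port for your environment; the port (4560 here) must match
    # the destination of the appender in logback.xml below
    host => "<IP address of the Logstash host>"
    port => 4560
    # Each event arrives as one line of JSON
    codec => json_lines
  }
}

output {
  elasticsearch {
    action => "index"
    # ES address; for several ES nodes use an array, e.g. ["host1:9200", "host2:9200"]
    hosts => "172.17.0.3:9200"
    # Index name, used for filtering in Kibana; the project name is a good choice
    index => "springboot-logstash"
  }
  stdout {
    codec => rubydebug
  }
}
```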

Finally, restart Logstash:

```bash
docker restart MyLogStash
```
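After the restart, you can smoke-test the pipeline before wiring up Spring Boot. The `tcp` input with `codec => json_lines` expects one JSON object per line, terminated by `\n`. The sketch below writes exactly that over a plain `java.net.Socket`. The class name `JsonLinesSender` and the sample event fields are hypothetical, and the sketch assumes the 4560 port mapping used above.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical smoke test for a Logstash tcp input configured with codec => json_lines.
final class JsonLinesSender {

    /** Opens a TCP connection to host:port and sends one newline-terminated JSON document. */
    static void send(String host, int port, String json) {
        if (json.indexOf('\n') >= 0) {
            // json_lines splits the stream on '\n', so one event must not contain a raw newline
            throw new IllegalArgumentException("event must be a single line");
        }
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            out.write((json + "\n").getBytes(StandardCharsets.UTF_8));
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Usage: `JsonLinesSender.send("127.0.0.1", 4560, "{\"message\":\"hello from Java\"}")`. Because the pipeline also has a `stdout { codec => rubydebug }` output, the event should show up in `docker logs MyLogStash` as well as in the `springboot-logstash` index.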

2. Integrating ELK with Spring Boot

Maven dependencies:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.4.2</version>
        <relativePath/>
    </parent>
    <groupId>com.elk</groupId>
    <artifactId>demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
        <ch.qos.logback.version>1.2.3</ch.qos.logback.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-logging</artifactId>
        </dependency>
        <dependency>
            <groupId>ch.qos.logback</groupId>
            <artifactId>logback-core</artifactId>
            <version>${ch.qos.logback.version}</version>
        </dependency>
        <dependency>
            <groupId>ch.qos.logback</groupId>
            <artifactId>logback-classic</artifactId>
            <version>${ch.qos.logback.version}</version>
        </dependency>
        <dependency>
            <groupId>ch.qos.logback</groupId>
            <artifactId>logback-access</artifactId>
            <version>${ch.qos.logback.version}</version>
        </dependency>
        <dependency>
            <groupId>net.logstash.logback</groupId>
            <artifactId>logstash-logback-encoder</artifactId>
            <version>5.1</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

Logback configuration (`logback.xml` under `src/main/resources`):

```xml
<configuration debug="false" scan="true" scanPeriod="1 seconds">
    <!-- Spring Boot defaults: already attaches the CONSOLE and FILE appenders to root -->
    <include resource="org/springframework/boot/logging/logback/base.xml" />
    <contextName>logback</contextName>
    <appender name="stash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Logstash tcp input; host:port must match the tcp input in logstash.conf -->
        <destination>127.0.0.1:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder" />
    </appender>
    <root level="info">
        <appender-ref ref="stash" />
    </root>
</configuration>
```

Writing log entries from a controller:

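```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

/**
 * @author lisw
 * @program elk_project
 * @description
 * @createDate 2021-02-09 13:46:45
 * @slogan The day will come to ride the wind and break the waves; then I will hoist my sail and cross the vast sea.
 **/
@RestController
@RequestMapping("/test")
public class ElkController {

    private final Logger logger = LoggerFactory.getLogger(getClass());

    @RequestMapping("/elkAdd")
    public String elkAdd() {
        // Each call emits one INFO event; the "stash" appender ships it to Logstash
        logger.info("Log entry {}", System.currentTimeMillis());
        return "1";
    }
}
```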
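To have a few entries to look at, the endpoint can be called in a loop. The following is a minimal sketch. It assumes the application listens on Spring Boot's default port 8080, since no `server.port` is configured above. The class name `ElkAddCaller` and the call count are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;

// Hypothetical load generator for ElkController: every successful call makes the
// controller log one INFO line, which the "stash" appender forwards to Logstash.
final class ElkAddCaller {

    /** Calls {baseUrl}/test/elkAdd n times and returns how many calls answered HTTP 200. */
    static int callElkAdd(String baseUrl, int n) {
        int ok = 0;
        for (int i = 0; i < n; i++) {
            try {
                HttpURLConnection conn =
                        (HttpURLConnection) new URL(baseUrl + "/test/elkAdd").openConnection();
                conn.setConnectTimeout(3000);
                conn.setReadTimeout(3000);
                if (conn.getResponseCode() == 200) {
                    ok++;
                }
                conn.disconnect();
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
        return ok;
    }
}
```

Usage: `ElkAddCaller.callElkAdd("http://127.0.0.1:8080", 10)` should produce ten "Log entry …" events in the `springboot-logstash` index.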

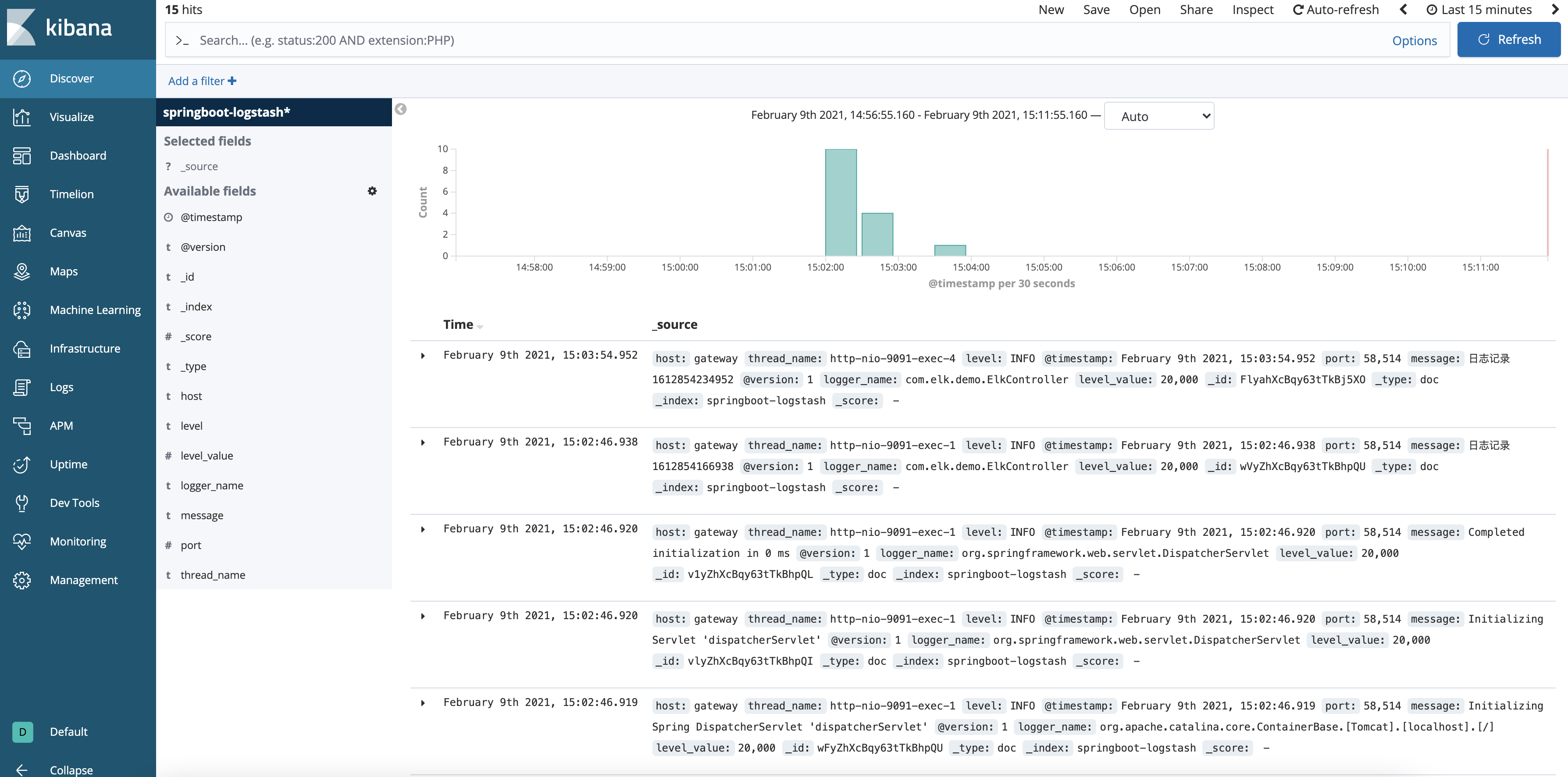

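In Kibana, create an index pattern for `springboot-logstash` (Management → Index Patterns), then view the log entries in Discover: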
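To confirm that documents are actually reaching the index before opening Kibana, you can query Elasticsearch's `_count` API. Below is a minimal sketch. It assumes ES at 127.0.0.1:9200 and the index name `springboot-logstash` from logstash.conf, and it uses a regular expression in place of a JSON parser. The class name `IndexCounter` is hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical helper: asks Elasticsearch how many documents an index holds.
final class IndexCounter {

    // Picks the top-level "count" value out of the _count response.
    private static final Pattern COUNT = Pattern.compile("\"count\"\\s*:\\s*(\\d+)");

    /** Returns the document count of index via GET {baseUrl}/{index}/_count. */
    static long countDocs(String baseUrl, String index) {
        try {
            HttpURLConnection conn =
                    (HttpURLConnection) new URL(baseUrl + "/" + index + "/_count").openConnection();
            conn.setConnectTimeout(3000);
            conn.setReadTimeout(3000);
            int status = conn.getResponseCode();
            if (status != 200) {
                conn.disconnect();
                // A 404 usually means Logstash has not created the index yet
                throw new IllegalStateException("HTTP " + status + " for index " + index);
            }
            String body;
            try (BufferedReader in = new BufferedReader(
                    new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
                body = in.lines().collect(Collectors.joining("\n"));
            } finally {
                conn.disconnect();
            }
            Matcher m = COUNT.matcher(body);
            if (!m.find()) {
                throw new IllegalStateException("No count in response: " + body);
            }
            return Long.parseLong(m.group(1));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Usage: `IndexCounter.countDocs("http://127.0.0.1:9200", "springboot-logstash")` should grow by one each time `/test/elkAdd` is called, once Logstash has flushed the events.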