Rendering an MP4 file decoded with FFmpeg using SDL2

Contents

- 1. Converting MP4 to YUV420 with the FFmpeg command line

- 2. Decoding MP4 into YUV data with FFmpeg

  - 2.1 Core APIs

- 3. Drawing YUV to the screen with SDL2

  - 3.1 Core APIs

- 4. Complete code

- 5. Demo

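This project uses a producer-consumer model. The producer thread uses FFmpeg to decode the MP4 data into YUV frames; the consumer thread uses SDL2 to consume those YUV frames and draw them on the screen. Not yet implemented: 1. decoding the audio and synchronizing it with the video; 2. a UI with pause and play buttons and support for seeking forward and backward.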
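The producer-consumer handoff described above can be sketched with the standard library alone. This is a minimal illustration, not the code used later in this article: integers stand in for decoded YUV frames, and `FrameSlot`, `put`, `take`, `close` and `runPipeline` are hypothetical names. The slot holds at most one frame, and the producer waits until the previous frame has been taken, so no frame is overwritten before it is displayed.

```cpp
#include <condition_variable>
#include <mutex>
#include <optional>
#include <thread>
#include <vector>

// Hypothetical single-slot buffer: holds at most one "frame" (an int here) at a time.
class FrameSlot {
public:
    // Producer side: block until the slot is empty, then store the frame.
    void put(int frame) {
        std::unique_lock<std::mutex> lck(mtx_);
        cv_.wait(lck, [this] { return !full_; });
        frame_ = frame;
        full_ = true;
        cv_.notify_all();
    }
    // Producer side: signal that no more frames will arrive.
    void close() {
        std::lock_guard<std::mutex> lck(mtx_);
        closed_ = true;
        cv_.notify_all();
    }
    // Consumer side: block until a frame is available or the producer has closed.
    // Returns std::nullopt once the slot is closed and empty.
    std::optional<int> take() {
        std::unique_lock<std::mutex> lck(mtx_);
        cv_.wait(lck, [this] { return full_ || closed_; });
        if (!full_) return std::nullopt;
        full_ = false;
        cv_.notify_all();  // wake the producer waiting for an empty slot
        return frame_;
    }
private:
    std::mutex mtx_;
    std::condition_variable cv_;
    int frame_ = 0;
    bool full_ = false;
    bool closed_ = false;
};

// Runs one producer thread and consumes on the calling thread;
// returns the frames in the order the consumer received them.
std::vector<int> runPipeline(int frameCount) {
    FrameSlot slot;
    std::thread producer([&] {
        for (int i = 0; i < frameCount; ++i) slot.put(i);  // stands in for decode + sws_scale
        slot.close();
    });
    std::vector<int> shown;
    while (auto f = slot.take()) shown.push_back(*f);     // stands in for SDL rendering
    producer.join();
    return shown;
}
```

Calling `runPipeline(100)` yields the frames 0 to 99 in order. The two-way wait is the design choice that matters: with only a "frame ready" flag, a producer that decodes faster than the display rate can overwrite a frame the consumer has not shown yet.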

References and projects for learning audio/video development:

- playdemo_github

- Lei Xiaohua's (雷神) CSDN blog

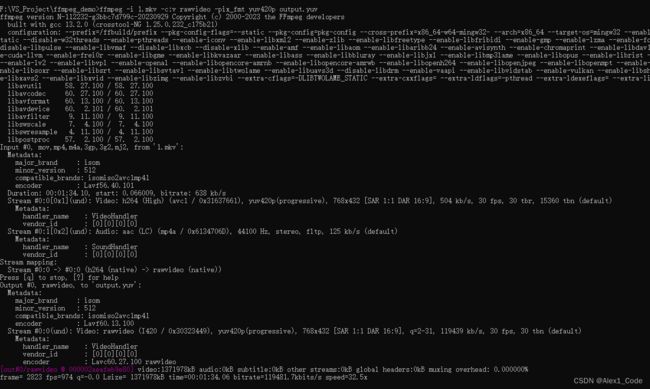

1. Converting MP4 to YUV420 with the FFmpeg command line

```shell
ffmpeg -i input.mp4 -c:v rawvideo -pix_fmt yuv420p output.yuv
```
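The resulting output.yuv has no header: it is just the Y, U and V planes of every frame written back to back. In YUV420P (I420) the Y plane is width × height bytes and each chroma plane is subsampled by 2 in both directions, so one frame occupies width × height × 3/2 bytes. The sketch below computes the plane offsets (the same ones the producer code uses when it packs `yuvBuf`) and the number of frames in such a file. `I420Layout`, `i420Layout` and `frameCount` are illustrative names, and even width and height are assumed.

```cpp
#include <cstddef>
#include <cstdint>

// Byte offsets and sizes of the three planes of one I420 (YUV420P) frame.
// Assumes even width and height, so each chroma plane is exactly (w/2) x (h/2).
struct I420Layout {
    std::size_t ySize;      // width * height
    std::size_t uOffset;    // U plane starts right after Y
    std::size_t vOffset;    // V plane starts after U, i.e. at ySize * 5 / 4
    std::size_t frameSize;  // ySize * 3 / 2
};

I420Layout i420Layout(std::size_t width, std::size_t height) {
    const std::size_t ySize = width * height;
    const std::size_t cSize = (width / 2) * (height / 2);  // one chroma plane
    return {ySize, ySize, ySize + cSize, ySize + 2 * cSize};
}

// Number of complete frames in a headerless .yuv file of the given size.
std::uint64_t frameCount(std::uint64_t fileBytes, std::size_t width, std::size_t height) {
    return fileBytes / i420Layout(width, height).frameSize;
}
```

For a 1920×1080 video, one frame is 3,110,400 bytes, with U starting at byte 2,073,600 and V at byte 2,592,000.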

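2. Decoding MP4 into YUV data with FFmpeg

2.1 Core APIs:

- av_read_frame: reads the next packet from the file (for video, typically one compressed frame)

- avcodec_send_packet: sends a packet to the decoder

- avcodec_receive_frame: retrieves a decoded frame from the decoder

- sws_scale: pixel-format conversion; converts the decoded frame to YUV420P, with the Y, U and V planes stored in data[0], data[1] and data[2]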

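```cpp
// Producer thread: reads packets, decodes the video stream and hands each YUV420P frame
// to the display thread. formatContext, codecContext, swsContext, yuvFrame, yuvBuf,
// videoStreamIndex, mtx, condvar and isFinished are globals set up in the complete code.
void readFrame()
{
    AVPacket* avPacket = av_packet_alloc();
    AVFrame* frame = av_frame_alloc();
    // Only needed by the commented-out fwrite calls that dump raw YUV to disk
    FILE* fp = fopen("F:/VS_Project/ffmpeg_demo/yuv.data", "wb+");

    while (av_read_frame(formatContext, avPacket) >= 0)
    {
        if (avPacket->stream_index == videoStreamIndex)
        {
            if (avcodec_send_packet(codecContext, avPacket) < 0) {
                std::cerr << "Failed to send packet to decoder" << std::endl;
                av_packet_unref(avPacket);
                break;
            }

            /* Decode: one packet can produce zero or more frames, so keep receiving until
               the decoder returns AVERROR(EAGAIN) (needs more input) or AVERROR_EOF */
            while (avcodec_receive_frame(codecContext, frame) >= 0)
            {
                // Convert the decoded frame to YUV420P in yuvFrame
                int ret = sws_scale(swsContext, frame->data, frame->linesize, 0, codecContext->height,
                                    yuvFrame->data, yuvFrame->linesize);
                printf("sws_scale ret=%d\n", ret);

                std::lock_guard<std::mutex> lck(mtx);
                // Pack the planes into yuvBuf in I420 order (Y, then U, then V).
                // Assumes yuvFrame->linesize[i] equals the plane width (no row padding).
                const int ySize = yuvFrame->width * yuvFrame->height;
                memcpy(yuvBuf, yuvFrame->data[0], ySize);
                memcpy(yuvBuf + ySize, yuvFrame->data[1], ySize / 4);
                memcpy(yuvBuf + ySize * 5 / 4, yuvFrame->data[2], ySize / 4);
                isFinished = true;  // tell the display thread a new frame is ready
                condvar.notify_one();

                // Save the Y plane
                //fwrite(yuvFrame->data[0], 1, ySize, fp);
                // Save the U and V planes
                //fwrite(yuvFrame->data[1], 1, ySize / 4, fp);
                //fwrite(yuvFrame->data[2], 1, ySize / 4, fp);
            }
        }
        // The packet filled by av_read_frame() must be unreferenced before the next read
        av_packet_unref(avPacket);
    }

    if (fp) fclose(fp);
    av_frame_unref(yuvFrame);
    av_packet_free(&avPacket);
    av_frame_free(&frame);
}
```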

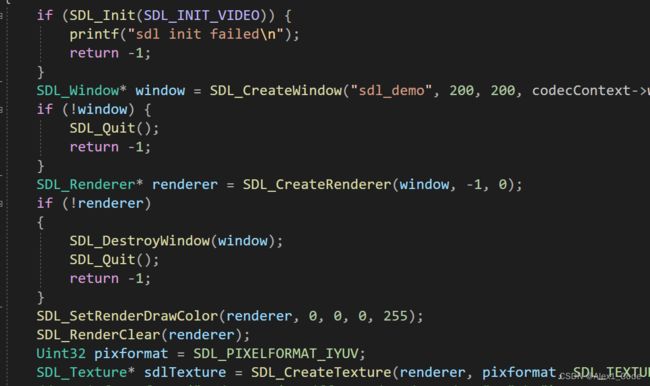
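The memcpy calls in readFrame() copy each plane as one block, which is only correct when `yuvFrame->linesize[i]` equals the plane width. Depending on how yuvFrame's buffer was allocated, FFmpeg may pad each row for alignment, making linesize larger than the width. A row-by-row copy handles both cases. The sketch below uses plain pointers and strides so it does not depend on FFmpeg; `packPlane` and `packI420` are hypothetical helpers, not FFmpeg functions.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copy one plane of width x height bytes whose rows are `stride` bytes apart
// (stride >= width) into a tightly packed destination.
void packPlane(std::uint8_t* dst, const std::uint8_t* src, int stride, int width, int height) {
    for (int row = 0; row < height; ++row) {
        std::memcpy(dst + static_cast<std::size_t>(row) * width,
                    src + static_cast<std::size_t>(row) * stride,
                    static_cast<std::size_t>(width));
    }
}

// Pack the three planes of a YUV420P frame into one contiguous I420 buffer
// of width * height * 3 / 2 bytes (even width and height assumed).
void packI420(std::uint8_t* dst, const std::uint8_t* const planes[3], const int strides[3],
              int width, int height) {
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    packPlane(dst, planes[0], strides[0], width, height);                              // Y
    packPlane(dst + ySize, planes[1], strides[1], width / 2, height / 2);              // U
    packPlane(dst + ySize * 5 / 4, planes[2], strides[2], width / 2, height / 2);      // V
}
```

Assuming yuvBuf is a `uint8_t` buffer, the three memcpy calls could be replaced by `packI420(yuvBuf, yuvFrame->data, yuvFrame->linesize, yuvFrame->width, yuvFrame->height);`.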

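3. Drawing YUV to the screen with SDL2

3.1 Core APIs

- SDL_Init: initializes the SDL video subsystem

- SDL_CreateWindow: creates the window

- SDL_CreateRenderer: creates a renderer for the window

- SDL_CreateTexture: creates a streaming texture in SDL_PIXELFORMAT_IYUV (YUV420P) format

- SDL_UpdateTexture: uploads a YUV frame into the texture

- SDL_RenderCopy: copies the texture to the render target

- SDL_RenderPresent: shows the rendered result on screen

- SDL_Delay: waits between frames to control the frame rate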

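```cpp
// Consumer (display) thread: waits for a frame from readFrame() and renders it with SDL2.
int sdl_display()
{
    if (SDL_Init(SDL_INIT_VIDEO) != 0) {
        printf("sdl init failed: %s\n", SDL_GetError());
        return -1;
    }

    SDL_Window* window = SDL_CreateWindow("sdl_demo", 200, 200, codecContext->width, codecContext->height,
                                          SDL_WINDOW_OPENGL | SDL_WINDOW_RESIZABLE);
    if (!window) {
        SDL_Quit();
        return -1;
    }

    SDL_Renderer* renderer = SDL_CreateRenderer(window, -1, 0);
    if (!renderer) {
        SDL_DestroyWindow(window);
        SDL_Quit();
        return -1;
    }

    SDL_SetRenderDrawColor(renderer, 0, 0, 0, 255);
    SDL_RenderClear(renderer);

    // IYUV is planar Y, then U, then V (I420): the same layout as yuvBuf
    Uint32 pixformat = SDL_PIXELFORMAT_IYUV;
    SDL_Texture* sdlTexture = SDL_CreateTexture(renderer, pixformat, SDL_TEXTUREACCESS_STREAMING,
                                                codecContext->width, codecContext->height);
    if (!sdlTexture) {
        SDL_DestroyRenderer(renderer);
        SDL_DestroyWindow(window);
        SDL_Quit();
        return -1;
    }

    //FILE* fp = fopen("F:/VS_Project/ffmpeg_demo/yuv.data", "rb+");
    while (1) {
        //int ret = fread(yuvBuf, 1, yuvlen, fp);
        //if (ret <= 0) {
        //    break;
        //}

        // Handle window events so the window stays responsive and can be closed
        SDL_Event event;
        bool quit = false;
        while (SDL_PollEvent(&event)) {
            if (event.type == SDL_QUIT) quit = true;
        }
        if (quit) break;

        std::unique_lock<std::mutex> lck(mtx);
        // Wait up to 1 s for the producer to publish a frame; exit if none arrives
        if (condvar.wait_for(lck, std::chrono::seconds(1), [] { return isFinished; }))
        {
            isFinished = false;
            // Pitch is the Y-plane width; SDL reads the U and V planes that follow it in yuvBuf
            SDL_UpdateTexture(sdlTexture, NULL, yuvBuf, codecContext->width);
            SDL_RenderCopy(renderer, sdlTexture, NULL, NULL);
            SDL_RenderPresent(renderer);
            // Control the frame rate: 40 ms per frame is about 25 fps.
            // The lock is still held here, which also throttles the producer.
            SDL_Delay(40);
        }
        else {
            printf("sdl thread exit!\n");
            break;
        }
    }

    SDL_DestroyTexture(sdlTexture);
    SDL_DestroyRenderer(renderer);
    SDL_DestroyWindow(window);
    SDL_Quit();
    return 0;
}
```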
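A fixed `SDL_Delay(40)` ignores the time spent uploading and presenting the frame, so the real interval is 40 ms plus that work and playback runs slightly slower than 25 fps. Hard-coding 25 fps also ignores the file's real frame rate, which FFmpeg exposes as `AVStream::avg_frame_rate`. One common refinement is to measure the elapsed time and sleep only for the remainder. The functions below are a hypothetical sketch of that arithmetic (`remainingDelayMs` and `intervalForFps` are not SDL functions), with millisecond timestamps such as those returned by `SDL_GetTicks()`.

```cpp
#include <cstdint>

// Milliseconds still to wait so that frames are frameIntervalMs apart, given when the
// current frame started (frameStartMs) and the current time (nowMs).
// Returns 0 if the frame already took the whole interval or longer.
std::uint32_t remainingDelayMs(std::uint32_t frameStartMs, std::uint32_t nowMs,
                               std::uint32_t frameIntervalMs) {
    // Unsigned subtraction stays correct when the 32-bit tick counter wraps around
    const std::uint32_t elapsed = nowMs - frameStartMs;
    return elapsed >= frameIntervalMs ? 0 : frameIntervalMs - elapsed;
}

// Frame interval in milliseconds for a frame rate, rounded to the nearest ms.
// Falls back to 40 ms (25 fps, the value used above) if fps is not positive.
std::uint32_t intervalForFps(double fps) {
    return fps > 0 ? static_cast<std::uint32_t>(1000.0 / fps + 0.5) : 40;
}
```

In the render loop, this would mean taking `Uint32 start = SDL_GetTicks();` when a frame is received and replacing `SDL_Delay(40)` with `SDL_Delay(remainingDelayMs(start, SDL_GetTicks(), 40));`.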

4. Complete code

- Two threads, following the producer-consumer model

#include