[FFmpeg Source Analysis] Tracing the ffmpeg.c code flow through a screenshot command

Environment setup

- Ubuntu 20.04

- An external camera, connected and enabled

- Screenshot command:

ffmpeg -f video4linux2 -s 640x480 -i /dev/video0 -ss 0:0:2 -frames 1 /data/ffmpeg-4.2.7/exe_cmd/tmp/out2.jpg

Argument parsing

int main(int argc, char **argv)

{

.................................;

#if CONFIG_AVDEVICE

avdevice_register_all();

#endif

avformat_network_init();

show_banner(argc, argv, options);

// 1. Argument parsing: the first thing to look at

ffmpeg_parse_options(argc, argv);

// 2. The actual data processing happens in here

if (transcode() < 0)

exit_program(1);

.................................;

}

int ffmpeg_parse_options(int argc, char **argv)

{

OptionParseContext octx;

uint8_t error[128];

int ret;

memset(&octx, 0, sizeof(octx));

/* split the commandline into an internal representation */

split_commandline(&octx, argc, argv, options, groups,

FF_ARRAY_ELEMS(groups));

/* apply global options */

parse_optgroup(NULL, &octx.global_opts);

/* configure terminal and setup signal handlers */

term_init();

/* open input files */

open_files(&octx.groups[GROUP_INFILE], "input", open_input_file);

init_complex_filters();

/* open output files */

open_files(&octx.groups[GROUP_OUTFILE], "output", open_output_file);

.....................................;

check_filter_outputs();

.....................................;

return ret;

}

split_commandline

// Global table passed to split_commandline

const OptionDef options[] = {

/* main options */

CMDUTILS_COMMON_OPTIONS

{ "f", HAS_ARG | OPT_STRING | OPT_OFFSET |

OPT_INPUT | OPT_OUTPUT, { .off = OFFSET(format) },

"force format", "fmt" },

{ "y", OPT_BOOL, { &file_overwrite },

"overwrite output files" },

...............................;

};

enum OptGroup {

GROUP_OUTFILE,

GROUP_INFILE,

};

// Global table passed to split_commandline

static const OptionGroupDef groups[] = {

[GROUP_OUTFILE] = { "output url", NULL, OPT_OUTPUT },

[GROUP_INFILE] = { "input url", "i", OPT_INPUT },

};

int split_commandline(OptionParseContext *octx, int argc, char *argv[],

const OptionDef *options,

const OptionGroupDef *groups, int nb_groups)

{

int optindex = 1;

int dashdash = -2;

// Converts the character set of the arguments on Windows (e.g. to UTF-8); no need to dig into it.

prepare_app_arguments(&argc, &argv);

init_parse_context(octx, groups, nb_groups);

av_log(NULL, AV_LOG_DEBUG, "Splitting the commandline.\n");

while (optindex < argc) {

const char *opt = argv[optindex++], *arg;

const OptionDef *po;

int ret;

if (opt[0] == '-' && opt[1] == '-' && !opt[2]) {

dashdash = optindex;

continue;

}

// An argument that is not an option (no leading '-') is an output URL: it closes the current group, which is stored under GROUP_OUTFILE (groups[0]).

if (opt[0] != '-' || !opt[1] || dashdash+1 == optindex) {

finish_group(octx, 0, opt);

av_log(NULL, AV_LOG_DEBUG, " matched as %s.\n", groups[0].name);

continue;

}

opt++;

#define GET_ARG(arg) \

do { \

arg = argv[optindex++]; \

if (!arg) { \

av_log(NULL, AV_LOG_ERROR, "Missing argument for option '%s'.\n", opt);\

return AVERROR(EINVAL); \

} \

} while (0)

// -i, together with all options collected before it, forms one group stored under GROUP_INFILE.

/* named group separators, e.g. -i */

if ((ret = match_group_separator(groups, nb_groups, opt)) >= 0) {

GET_ARG(arg);

finish_group(octx, ret, arg);

av_log(NULL, AV_LOG_DEBUG, " matched as %s with argument '%s'.\n",

groups[ret].name, arg);

continue;

}

/* normal options */

po = find_option(options, opt);

if (po->name) {

if (po->flags & OPT_EXIT) {

/* optional argument, e.g. -h */

arg = argv[optindex++];

} else if (po->flags & HAS_ARG) {

GET_ARG(arg);

} else {

arg = "1";

}

add_opt(octx, po, opt, arg);

av_log(NULL, AV_LOG_DEBUG, " matched as option '%s' (%s) with "

"argument '%s'.\n", po->name, po->help, arg);

continue;

}

/* AVOptions */

if (argv[optindex]) {

ret = opt_default(NULL, opt, argv[optindex]);

if (ret >= 0) {

av_log(NULL, AV_LOG_DEBUG, " matched as AVOption '%s' with "

"argument '%s'.\n", opt, argv[optindex]);

optindex++;

continue;

} else if (ret != AVERROR_OPTION_NOT_FOUND) {

av_log(NULL, AV_LOG_ERROR, "Error parsing option '%s' "

"with argument '%s'.\n", opt, argv[optindex]);

return ret;

}

}

/* boolean -nofoo options */

if (opt[0] == 'n' && opt[1] == 'o' &&

(po = find_option(options, opt + 2)) &&

po->name && po->flags & OPT_BOOL) {

add_opt(octx, po, opt, "0");

av_log(NULL, AV_LOG_DEBUG, " matched as option '%s' (%s) with "

"argument 0.\n", po->name, po->help);

continue;

}

av_log(NULL, AV_LOG_ERROR, "Unrecognized option '%s'.\n", opt);

return AVERROR_OPTION_NOT_FOUND;

}

if (octx->cur_group.nb_opts || codec_opts || format_opts || resample_opts)

av_log(NULL, AV_LOG_WARNING, "Trailing options were found on the "

"commandline.\n");

av_log(NULL, AV_LOG_DEBUG, "Finished splitting the commandline.\n");

return 0;

}

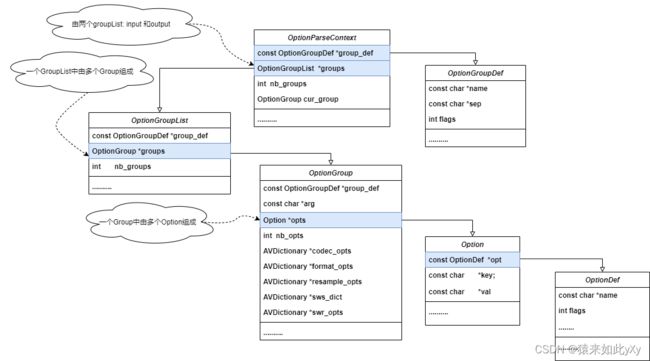

The code above parses the ffmpeg command line into an OptionParseContext. Its member OptionGroupList *groups has two entries: one OptionGroupList for inputs and one for outputs. Each OptionGroupList holds several OptionGroups, and each OptionGroup holds several Options. The figure illustrates this relationship.

The following code walks the input and output OptionGroupLists and the options inside each group:

OptionParseContext octx;

for(int i = 0; i < octx.nb_groups; i++) {

OptionGroupList *ll = &octx.groups[i];

av_log(NULL,AV_LOG_ERROR, "OptionGroupList[%d] def:%s\n",i, ll->group_def->name);

for(int j = 0; j < ll->nb_groups; j++) {

OptionGroup *g = &ll->groups[j];

av_log(NULL,AV_LOG_ERROR,"g[%d][%d],arg:%s, def: %s \n",i,j, g->arg, g->group_def->name);

for (int x = 0; x < g->nb_opts; x++) {

Option *opt = &g->opts[x];

av_log(NULL,AV_LOG_ERROR,"g[%d][%d], opts[%d],key:%s, value:%s, def: %s \n",i,j,x, opt->key, opt->val, opt->opt->name);

}

}

}

For example, parsing the command line ffmpeg -f video4linux2 -s 640x480 -i /dev/video0 -ss 0:0:2 -frames 1 /data/ffmpeg-4.2.7/exe_cmd/tmp/out2.jpg and then walking the result prints:

OptionGroupList[0] def:output url

g[0][0],arg:/data/ffmpeg-4.2.7/exe_cmd/tmp/out2.jpg, def: output url

g[0][0], opts[0],key:ss, value:0:0:2, def: ss

g[0][0], opts[1],key:frames, value:1, def: frames

OptionGroupList[1] def:input url

g[1][0],arg:/dev/video0, def: input url

g[1][0], opts[0],key:f, value:video4linux2, def: f

g[1][0], opts[1],key:s, value:640x480, def: s
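The grouping rule behind that output can be modelled in a few lines. The sketch below is not FFmpeg code: every name starting with `Demo`/`demo_` is hypothetical, and it assumes each option takes exactly one argument (the real parser also handles flags, `--`, `-nofoo` and AVOptions). It only shows how options pile up in a "current" group until a URL closes it.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical, simplified model of split_commandline(); not FFmpeg code. */
enum { DEMO_GROUP_OUTFILE, DEMO_GROUP_INFILE, DEMO_NB_LISTS };
#define DEMO_MAX 8

typedef struct DemoGroup {
    const char *arg;              /* the URL that closed the group */
    const char *keys[DEMO_MAX];   /* option names without the leading '-' */
    const char *vals[DEMO_MAX];
    int nb_opts;
} DemoGroup;

typedef struct DemoContext {
    DemoGroup lists[DEMO_NB_LISTS][DEMO_MAX];
    int nb_groups[DEMO_NB_LISTS];
    DemoGroup cur;                /* options collected since the last URL */
} DemoContext;

/* Move the pending options into a finished group of the given list. */
static void demo_finish_group(DemoContext *c, int list, const char *arg)
{
    assert(c->nb_groups[list] < DEMO_MAX);
    c->cur.arg = arg;
    c->lists[list][c->nb_groups[list]++] = c->cur;
    memset(&c->cur, 0, sizeof(c->cur));
}

/* Assumption: every option except a bare URL takes exactly one argument. */
static int demo_split(DemoContext *c, int argc, const char **argv)
{
    memset(c, 0, sizeof(*c));
    for (int i = 1; i < argc; i++) {
        const char *opt = argv[i];
        if (opt[0] != '-' || !opt[1]) {        /* bare word: output URL */
            demo_finish_group(c, DEMO_GROUP_OUTFILE, opt);
        } else if (i + 1 >= argc) {
            return -1;                         /* missing argument */
        } else if (!strcmp(opt, "-i")) {       /* group separator */
            demo_finish_group(c, DEMO_GROUP_INFILE, argv[++i]);
        } else {                               /* ordinary option */
            if (c->cur.nb_opts >= DEMO_MAX)
                return -1;
            c->cur.keys[c->cur.nb_opts]   = opt + 1;
            c->cur.vals[c->cur.nb_opts++] = argv[++i];
        }
    }
    return 0;
}
```

Feeding the screenshot command to `demo_split` leaves `f` and `s` in the input group for `/dev/video0`, and `ss` and `frames` in the output group for the JPEG path, which is the same split as the listing above.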

parse_optgroup

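This function applies every option in a group by calling write_option on each one. In the call shown earlier, with a NULL context and octx.global_opts, it stores the global options, such as -v debug, which apply to all modules.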

int parse_optgroup(void *optctx, OptionGroup *g)

{

int i, ret;

for (i = 0; i < g->nb_opts; i++) {

Option *o = &g->opts[i];

ret = write_option(optctx, o->opt, o->key, o->val);

if (ret < 0)

return ret;

}

return 0;

}
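How one write_option call can serve both global and per-file options comes down to where the value is stored: options flagged OPT_OFFSET are written at an offset inside the context passed as optctx, while the others are written through a pointer to a global variable. The following is a minimal sketch of that dispatch under simplifying assumptions. All `Toy`/`toy_` names are hypothetical, only string options are modelled, and the NULL-context check is this sketch's own guard, not something taken from the source.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins; names and layout are illustrative only. */
#define TOY_OPT_OFFSET 1

typedef struct ToyOptionsContext {   /* per-file settings */
    const char *format;
} ToyOptionsContext;

typedef struct ToyOptionDef {
    const char *name;
    int flags;
    union {
        void  *dst_ptr;   /* address of a global variable */
        size_t off;       /* offset inside ToyOptionsContext */
    } u;
} ToyOptionDef;

static const char *toy_loglevel;     /* a "global" option target */

static const ToyOptionDef toy_options[] = {
    { "v", 0,              { .dst_ptr = &toy_loglevel } },
    { "f", TOY_OPT_OFFSET, { .off = offsetof(ToyOptionsContext, format) } },
};

/* Store arg either at an offset inside optctx or through the global pointer. */
static int toy_write_option(void *optctx, const ToyOptionDef *po, const char *arg)
{
    const char **dst;

    assert(po && arg);
    if (po->flags & TOY_OPT_OFFSET) {
        if (!optctx)
            return -1;    /* sketch-only guard: per-file option without a context */
        dst = (const char **)((char *)optctx + po->u.off);
    } else {
        dst = po->u.dst_ptr;
    }
    *dst = arg;
    return 0;
}
```

With this model, `toy_write_option(NULL, &toy_options[0], "debug")` sets the global, while `toy_write_option(&o, &toy_options[1], "video4linux2")` fills `o.format`, mirroring how `-f` ends up in `o->format` before open_input_file runs.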

open_files

Once the arguments are parsed, the input URL and output URL are used to find the matching AVInputFormat and AVOutputFormat.

int ffmpeg_parse_options(int argc, char **argv) {

................................................;

// Pass in the list of input option groups

open_files(&octx.groups[GROUP_INFILE], "input", open_input_file);

................................................;

}

static int open_files(OptionGroupList *l, const char *inout,

int (*open_file)(OptionsContext*, const char*))

{

int i, ret;

for (i = 0; i < l->nb_groups; i++) {

OptionGroup *g = &l->groups[i];

OptionsContext o;

init_options(&o);

o.g = g;

ret = parse_optgroup(&o, g);

av_log(NULL, AV_LOG_DEBUG, "Opening an %s file: %s.\n", inout, g->arg);

// With the group's options written into the OptionsContext, call open_file (open_input_file for inputs)

ret = open_file(&o, g->arg);

uninit_options(&o);

}

return 0;

}

open_input_file

static int open_input_file(OptionsContext *o, const char *filename)

{

InputFile *f;

AVFormatContext *ic;

AVInputFormat *file_iformat = NULL;

int err, i, ret;

int64_t timestamp;

AVDictionary *unused_opts = NULL;

AVDictionaryEntry *e = NULL;

char * video_codec_name = NULL;

char * audio_codec_name = NULL;

char *subtitle_codec_name = NULL;

char * data_codec_name = NULL;

int scan_all_pmts_set = 0;

.....................................;

if (o->format) {

// In this example, "video4linux2" resolves to ff_v4l2_demuxer

if (!(file_iformat = av_find_input_format(o->format))) {

av_log(NULL, AV_LOG_FATAL, "Unknown input format: '%s'\n", o->format);

exit_program(1);

}

}

............................;

// Allocate the AVFormatContext

ic = avformat_alloc_context();

............................;

av_log(NULL, AV_LOG_ERROR, "[yxy]use AVInputFormat:%s for file:%s \n", file_iformat->name, filename);

err = avformat_open_input(&ic, filename, file_iformat, &o->g->format_opts);

/* apply forced codec ids */

for (i = 0; i < ic->nb_streams; i++)

choose_decoder(o, ic, ic->streams[i]);

if (find_stream_info) {

AVDictionary **opts = setup_find_stream_info_opts(ic, o->g->codec_opts);

int orig_nb_streams = ic->nb_streams;

ret = avformat_find_stream_info(ic, opts);

}

............................;

/* update the current parameters so that they match the one of the input stream */

add_input_streams(o, ic);

/* dump the file content */

av_dump_format(ic, nb_input_files, filename, 0);

GROW_ARRAY(input_files, nb_input_files);

f = av_mallocz(sizeof(*f));

// Store it in the global input_files array

input_files[nb_input_files - 1] = f;

f->ctx = ic;

f->ist_index = nb_input_streams - ic->nb_streams;

f->start_time = o->start_time;

f->recording_time = o->recording_time;

f->input_ts_offset = o->input_ts_offset;

.......................................;

return 0;

}

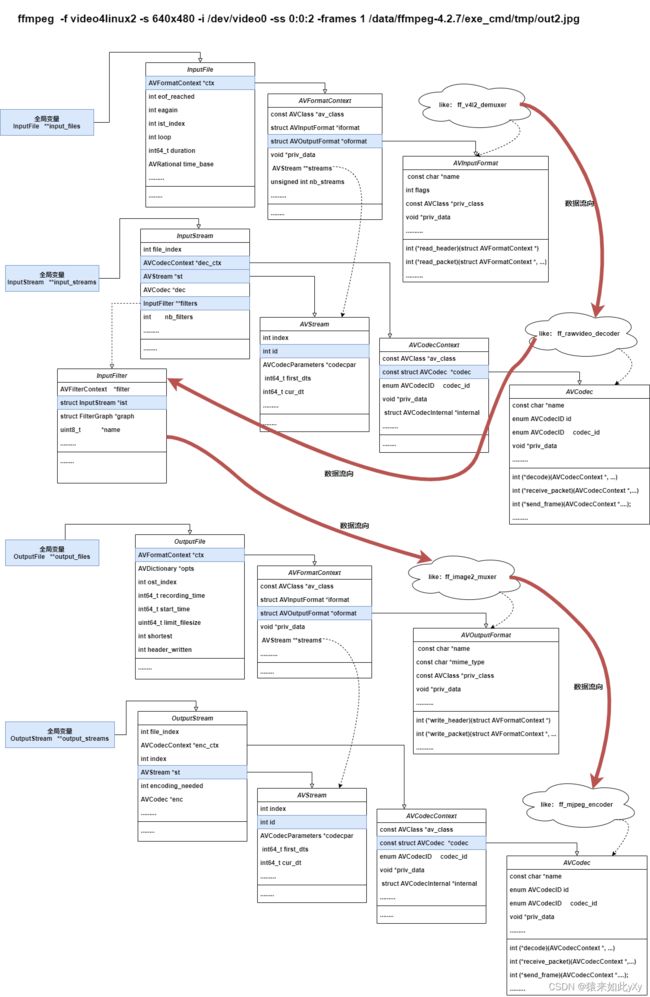

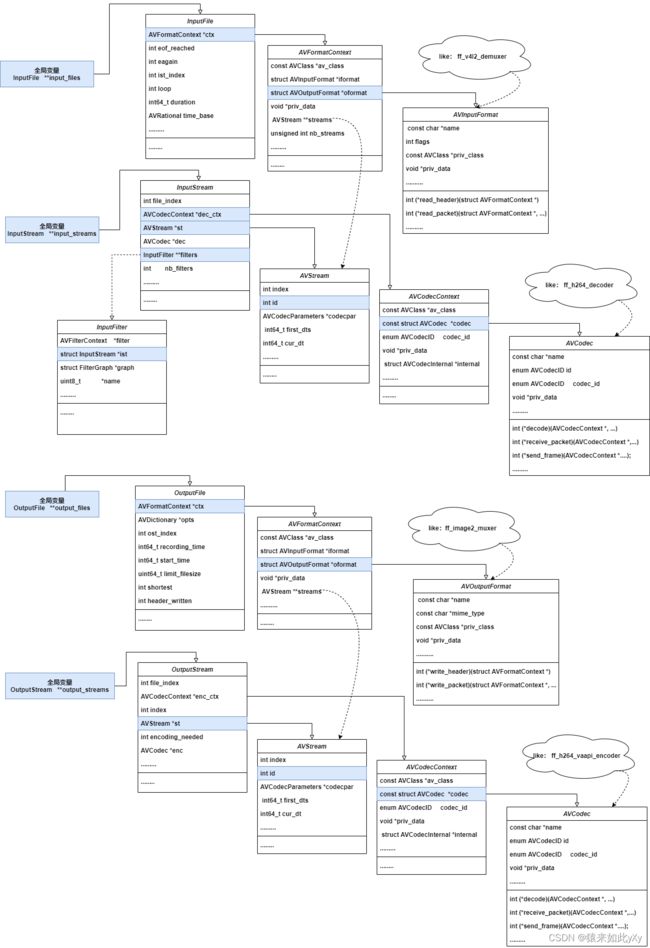

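The open_input_file code above uses the input options to allocate an AVFormatContext and set its AVInputFormat, which is ff_v4l2_demuxer in this example, and then stores the AVFormatContext in an InputFile kept in the global InputFile **input_files. It also allocates the InputStream objects (in add_input_streams), which are kept in the global InputStream **input_streams.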
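One detail worth isolating is `f->ist_index = nb_input_streams - ic->nb_streams`: all streams of all input files live in one flat array, and each file only remembers where its first stream sits. The sketch below models just that bookkeeping with plain integers. The `Sketch`/`sketch_` names are hypothetical, and it assumes streams are appended to the flat array in file order.

```c
#include <assert.h>

#define SKETCH_MAX_FILES 4

typedef struct SketchInputFile {
    int ist_index;    /* index of this file's first stream in the flat array */
    int nb_streams;
} SketchInputFile;

static SketchInputFile sketch_files[SKETCH_MAX_FILES];
static int sketch_nb_files;
static int sketch_nb_streams;   /* total streams over all opened files */

/* Register a file contributing nb_streams streams; returns its file index. */
static int sketch_add_input_file(int nb_streams)
{
    SketchInputFile *f;

    assert(sketch_nb_files < SKETCH_MAX_FILES && nb_streams >= 0);
    /* The streams are appended to the flat array first ... */
    sketch_nb_streams += nb_streams;
    /* ... so the file's first stream is "total minus its own count",
     * the same formula open_input_file uses for ist_index. */
    f = &sketch_files[sketch_nb_files];
    f->ist_index  = sketch_nb_streams - nb_streams;
    f->nb_streams = nb_streams;
    return sketch_nb_files++;
}

/* Translate (file, stream-within-file) into an index in the flat array. */
static int sketch_global_index(int file_index, int stream_index)
{
    assert(file_index >= 0 && file_index < sketch_nb_files);
    assert(stream_index >= 0 &&
           stream_index < sketch_files[file_index].nb_streams);
    return sketch_files[file_index].ist_index + stream_index;
}
```

The same addition shows up later in open_output_file, where a `-map` entry is resolved as `input_files[map->file_index]->ist_index + map->stream_index`.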

open_output_file

static int open_output_file(OptionsContext *o, const char *filename)

{

AVFormatContext *oc;

int i, j, err;

OutputFile *of;

OutputStream *ost;

InputStream *ist;

AVDictionary *unused_opts = NULL;

AVDictionaryEntry *e = NULL;

int format_flags = 0;

............................................;

GROW_ARRAY(output_files, nb_output_files);

of = av_mallocz(sizeof(*of));

output_files[nb_output_files - 1] = of;

of->ost_index = nb_output_streams;

of->recording_time = o->recording_time;

of->start_time = o->start_time;

of->limit_filesize = o->limit_filesize;

of->shortest = o->shortest;

av_dict_copy(&of->opts, o->g->format_opts, 0);

av_log(NULL, AV_LOG_ERROR, "[yxy]call avformat_alloc_output_context2 for file:%s \n", filename);

err = avformat_alloc_output_context2(&oc, NULL, o->format, filename);

of->ctx = oc;

if (o->recording_time != INT64_MAX)

oc->duration = o->recording_time;

oc->interrupt_callback = int_cb;

e = av_dict_get(o->g->format_opts, "fflags", NULL, 0);

if (e) {

const AVOption *o = av_opt_find(oc, "fflags", NULL, 0, 0);

av_opt_eval_flags(oc, o, e->value, &format_flags);

}

if (o->bitexact) {

format_flags |= AVFMT_FLAG_BITEXACT;

oc->flags |= AVFMT_FLAG_BITEXACT;

}

/* create streams for all unlabeled output pads */

for (i = 0; i < nb_filtergraphs; i++) {

FilterGraph *fg = filtergraphs[i];

for (j = 0; j < fg->nb_outputs; j++) {

OutputFilter *ofilter = fg->outputs[j];

if (!ofilter->out_tmp || ofilter->out_tmp->name)

continue;

switch (ofilter->type) {

case AVMEDIA_TYPE_VIDEO: o->video_disable = 1; break;

case AVMEDIA_TYPE_AUDIO: o->audio_disable = 1; break;

case AVMEDIA_TYPE_SUBTITLE: o->subtitle_disable = 1; break;

}

init_output_filter(ofilter, o, oc);

}

}

if (!o->nb_stream_maps) {

char *subtitle_codec_name = NULL;

/* pick the "best" stream of each type */

/* video: highest resolution */

if (!o->video_disable && av_guess_codec(oc->oformat, NULL, filename, NULL, AVMEDIA_TYPE_VIDEO) != AV_CODEC_ID_NONE) {

int area = 0, idx = -1;

int qcr = avformat_query_codec(oc->oformat, oc->oformat->video_codec, 0);

for (i = 0; i < nb_input_streams; i++) {

int new_area;

ist = input_streams[i];

new_area = ist->st->codecpar->width * ist->st->codecpar->height + 100000000*!!ist->st->codec_info_nb_frames

+ 5000000*!!(ist->st->disposition & AV_DISPOSITION_DEFAULT);

if (ist->user_set_discard == AVDISCARD_ALL)

continue;

if((qcr!=MKTAG('A', 'P', 'I', 'C')) && (ist->st->disposition & AV_DISPOSITION_ATTACHED_PIC))

new_area = 1;

if (ist->st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO &&

new_area > area) {

if((qcr==MKTAG('A', 'P', 'I', 'C')) && !(ist->st->disposition & AV_DISPOSITION_ATTACHED_PIC))

continue;

area = new_area;

idx = i;

}

}

if (idx >= 0)

new_video_stream(o, oc, idx);

}

/* audio: most channels */

if (!o->audio_disable && av_guess_codec(oc->oformat, NULL, filename, NULL, AVMEDIA_TYPE_AUDIO) != AV_CODEC_ID_NONE) {

int best_score = 0, idx = -1;

for (i = 0; i < nb_input_streams; i++) {

int score;

ist = input_streams[i];

score = ist->st->codecpar->channels + 100000000*!!ist->st->codec_info_nb_frames

+ 5000000*!!(ist->st->disposition & AV_DISPOSITION_DEFAULT);

if (ist->user_set_discard == AVDISCARD_ALL)

continue;

if (ist->st->codecpar->codec_type == AVMEDIA_TYPE_AUDIO &&

score > best_score) {

best_score = score;

idx = i;

}

}

if (idx >= 0)

new_audio_stream(o, oc, idx);

}

/* subtitles: pick first */

MATCH_PER_TYPE_OPT(codec_names, str, subtitle_codec_name, oc, "s");

if (!o->subtitle_disable && (avcodec_find_encoder(oc->oformat->subtitle_codec) || subtitle_codec_name)) {

for (i = 0; i < nb_input_streams; i++)

if (input_streams[i]->st->codecpar->codec_type == AVMEDIA_TYPE_SUBTITLE) {

AVCodecDescriptor const *input_descriptor =

avcodec_descriptor_get(input_streams[i]->st->codecpar->codec_id);

AVCodecDescriptor const *output_descriptor = NULL;

AVCodec const *output_codec =

avcodec_find_encoder(oc->oformat->subtitle_codec);

int input_props = 0, output_props = 0;

if (input_streams[i]->user_set_discard == AVDISCARD_ALL)

continue;

if (output_codec)

output_descriptor = avcodec_descriptor_get(output_codec->id);

if (input_descriptor)

input_props = input_descriptor->props & (AV_CODEC_PROP_TEXT_SUB | AV_CODEC_PROP_BITMAP_SUB);

if (output_descriptor)

output_props = output_descriptor->props & (AV_CODEC_PROP_TEXT_SUB | AV_CODEC_PROP_BITMAP_SUB);

if (subtitle_codec_name ||

input_props & output_props ||

// Map dvb teletext which has neither property to any output subtitle encoder

input_descriptor && output_descriptor &&

(!input_descriptor->props ||

!output_descriptor->props)) {

new_subtitle_stream(o, oc, i);

break;

}

}

}

/* Data only if codec id match */

if (!o->data_disable ) {

enum AVCodecID codec_id = av_guess_codec(oc->oformat, NULL, filename, NULL, AVMEDIA_TYPE_DATA);

for (i = 0; codec_id != AV_CODEC_ID_NONE && i < nb_input_streams; i++) {

if (input_streams[i]->user_set_discard == AVDISCARD_ALL)

continue;

if (input_streams[i]->st->codecpar->codec_type == AVMEDIA_TYPE_DATA

&& input_streams[i]->st->codecpar->codec_id == codec_id )

new_data_stream(o, oc, i);

}

}

} else {

for (i = 0; i < o->nb_stream_maps; i++) {

StreamMap *map = &o->stream_maps[i];

if (map->disabled)

continue;

if (map->linklabel) {

FilterGraph *fg;

OutputFilter *ofilter = NULL;

int j, k;

for (j = 0; j < nb_filtergraphs; j++) {

fg = filtergraphs[j];

for (k = 0; k < fg->nb_outputs; k++) {

AVFilterInOut *out = fg->outputs[k]->out_tmp;

if (out && !strcmp(out->name, map->linklabel)) {

ofilter = fg->outputs[k];

goto loop_end;

}

}

}

loop_end:

if (!ofilter) {

av_log(NULL, AV_LOG_FATAL, "Output with label '%s' does not exist "

"in any defined filter graph, or was already used elsewhere.\n", map->linklabel);

exit_program(1);

}

init_output_filter(ofilter, o, oc);

} else {

int src_idx = input_files[map->file_index]->ist_index + map->stream_index;

ist = input_streams[input_files[map->file_index]->ist_index + map->stream_index];

if (ist->user_set_discard == AVDISCARD_ALL) {

av_log(NULL, AV_LOG_FATAL, "Stream #%d:%d is disabled and cannot be mapped.\n",

map->file_index, map->stream_index);

exit_program(1);

}

if(o->subtitle_disable && ist->st->codecpar->codec_type == AVMEDIA_TYPE_SUBTITLE)

continue;

if(o-> audio_disable && ist->st->codecpar->codec_type == AVMEDIA_TYPE_AUDIO)

continue;

if(o-> video_disable && ist->st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)

continue;

if(o-> data_disable && ist->st->codecpar->codec_type == AVMEDIA_TYPE_DATA)

continue;

ost = NULL;

switch (ist->st->codecpar->codec_type) {

case AVMEDIA_TYPE_VIDEO: ost = new_video_stream (o, oc, src_idx); break;

case AVMEDIA_TYPE_AUDIO: ost = new_audio_stream (o, oc, src_idx); break;

case AVMEDIA_TYPE_SUBTITLE: ost = new_subtitle_stream (o, oc, src_idx); break;

case AVMEDIA_TYPE_DATA: ost = new_data_stream (o, oc, src_idx); break;

case AVMEDIA_TYPE_ATTACHMENT: ost = new_attachment_stream(o, oc, src_idx); break;

case AVMEDIA_TYPE_UNKNOWN:

if (copy_unknown_streams) {

ost = new_unknown_stream (o, oc, src_idx);

break;

}

default:

av_log(NULL, ignore_unknown_streams ? AV_LOG_WARNING : AV_LOG_FATAL,

"Cannot map stream #%d:%d - unsupported type.\n",

map->file_index, map->stream_index);

if (!ignore_unknown_streams) {

av_log(NULL, AV_LOG_FATAL,

"If you want unsupported types ignored instead "

"of failing, please use the -ignore_unknown option\n"

"If you want them copied, please use -copy_unknown\n");

exit_program(1);

}

}

if (ost)

ost->sync_ist = input_streams[ input_files[map->sync_file_index]->ist_index

+ map->sync_stream_index];

}

}

}

..............................;

return 0;

}

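The code above allocates the output AVFormatContext and sets its AVOutputFormat (AVFormatContext.oformat), which is ff_image2_muxer in this example, stores the AVFormatContext in an OutputFile kept in the global OutputFile **output_files, and allocates the OutputStream objects, which are kept in the global OutputStream **output_streams.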
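When no -map is given, the "pick the best video stream" branch boils down to a score per input stream: the pixel area, plus a large bonus if frames were seen while probing, plus a smaller bonus for the default disposition. A minimal sketch of that selection follows. The `PickStream` struct and its fields are hypothetical stand-ins for the real InputStream/AVStream members, and the attached-picture special cases are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical flattened view of one input stream. */
typedef struct PickStream {
    int is_video;
    int width, height;
    int has_probed_frames;  /* stands in for codec_info_nb_frames != 0 */
    int is_default;         /* stands in for AV_DISPOSITION_DEFAULT */
    int discarded;          /* stands in for user_set_discard == AVDISCARD_ALL */
} PickStream;

/* Return the index of the highest-scoring video stream, or -1 if none. */
static int pick_best_video(const PickStream *s, size_t n)
{
    int best = -1, best_score = 0;

    assert(s || n == 0);
    for (size_t i = 0; i < n; i++) {
        int score;

        if (!s[i].is_video || s[i].discarded)
            continue;
        /* Same weights as the ffmpeg.c excerpt above. */
        score = s[i].width * s[i].height
              + 100000000 * !!s[i].has_probed_frames
              + 5000000   * !!s[i].is_default;
        if (score > best_score) {
            best_score = score;
            best = (int)i;
        }
    }
    return best;
}
```

Because of the weights, a default-flagged 640x480 stream outranks a non-default 1920x1080 one; resolution only decides between streams with the same flags. In the camera example there is a single video stream, so it is chosen trivially.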

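Summary diagram

Once the arguments are parsed and the files opened, all of the information is held in four global variables (input_files, input_streams, output_files and output_streams), as summarised in the diagram: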

transcode

static int transcode(void)

{

int ret, i;

AVFormatContext *os;

OutputStream *ost;

InputStream *ist;

int64_t timer_start;

int64_t total_packets_written = 0;

// The key functions to follow are transcode_init and transcode_step

ret = transcode_init();

.................................;

while (!received_sigterm) {

int64_t cur_time= av_gettime_relative();

.................................;

ret = transcode_step();

if (ret < 0 && ret != AVERROR_EOF) {

av_log(NULL, AV_LOG_ERROR, "Error while filtering: %s\n", av_err2str(ret));

break;

}

/* dump report by using the output first video and audio streams */

print_report(0, timer_start, cur_time);

}

.................................;

/* at the end of stream, we must flush the decoder buffers */

for (i = 0; i < nb_input_streams; i++) {

ist = input_streams[i];

if (!input_files[ist->file_index]->eof_reached) {

process_input_packet(ist, NULL, 0);

}

}

flush_encoders();

term_exit();

..............;

return ret;

}

transcode_init

static int transcode_init(void)

{

int ret = 0, i, j, k;

AVFormatContext *oc;

OutputStream *ost;

InputStream *ist;

char error[1024] = {0};

for (i = 0; i < nb_filtergraphs; i++) {

FilterGraph *fg = filtergraphs[i];

for (j = 0; j < fg->nb_outputs; j++) {

OutputFilter *ofilter = fg->outputs[j];

if (!ofilter->ost || ofilter->ost->source_index >= 0)

continue;

if (fg->nb_inputs != 1)

continue;

for (k = nb_input_streams-1; k >= 0 ; k--)

if (fg->inputs[0]->ist == input_streams[k])

break;

ofilter->ost->source_index = k;

}

}

/* init framerate emulation */

for (i = 0; i < nb_input_files; i++) {

InputFile *ifile = input_files[i];

if (ifile->rate_emu)

for (j = 0; j < ifile->nb_streams; j++)

input_streams[j + ifile->ist_index]->start = av_gettime_relative();

}

/* init input streams, open decoder */

for (i = 0; i < nb_input_streams; i++)

if ((ret = init_input_stream(i, error, sizeof(error))) < 0) {

for (i = 0; i < nb_output_streams; i++) {

ost = output_streams[i];

avcodec_close(ost->enc_ctx);

}

goto dump_format;

}

/* open each encoder */

for (i = 0; i < nb_output_streams; i++) {

// skip streams fed from filtergraphs until we have a frame for them

if (output_streams[i]->filter)

continue;

ret = init_output_stream(output_streams[i], error, sizeof(error));

if (ret < 0)

goto dump_format;

}

/* discard unused programs */

for (i = 0; i < nb_input_files; i++) {

InputFile *ifile = input_files[i];

for (j = 0; j < ifile->ctx->nb_programs; j++) {

AVProgram *p = ifile->ctx->programs[j];

int discard = AVDISCARD_ALL;

for (k = 0; k < p->nb_stream_indexes; k++)

if (!input_streams[ifile->ist_index + p->stream_index[k]]->discard) {

discard = AVDISCARD_DEFAULT;

break;

}

p->discard = discard;

}

}

/* write headers for files with no streams */

for (i = 0; i < nb_output_files; i++) {

oc = output_files[i]->ctx;

if (oc->oformat->flags & AVFMT_NOSTREAMS && oc->nb_streams == 0) {

ret = check_init_output_file(output_files[i], i);

if (ret < 0)

goto dump_format;

}

}

....................................;

}

transcode_step

static int transcode_step(void)

{

OutputStream *ost;

InputStream *ist = NULL;

int ret;

ost = choose_output();

....................;

// Handle the output filters

if (ost->filter && !ost->filter->graph->graph) {

if (ifilter_has_all_input_formats(ost->filter->graph)) {

ret = configure_filtergraph(ost->filter->graph);

if (ret < 0) {

av_log(NULL, AV_LOG_ERROR, "Error reinitializing filters!\n");

return ret;

}

}

}

if (ost->filter && ost->filter->graph->graph) {

if (!ost->initialized) {

char error[1024] = {0};

ret = init_output_stream(ost, error, sizeof(error));

if (ret < 0) {

av_log(NULL, AV_LOG_ERROR, "Error initializing output stream %d:%d -- %s\n",

ost->file_index, ost->index, error);

exit_program(1);

}

}

if ((ret = transcode_from_filter(ost->filter->graph, &ist)) < 0)

return ret;

if (!ist)

return 0;

} else if (ost->filter) {

int i;

for (i = 0; i < ost->filter->graph->nb_inputs; i++) {

InputFilter *ifilter = ost->filter->graph->inputs[i];

if (!ifilter->ist->got_output && !input_files[ifilter->ist->file_index]->eof_reached) {

ist = ifilter->ist;

break;

}

}

if (!ist) {

ost->inputs_done = 1;

return 0;

}

} else {

av_assert0(ost->source_index >= 0);

ist = input_streams[ost->source_index];

}

// process_input is the key call here

ret = process_input(ist->file_index);

if (ret == AVERROR(EAGAIN)) {

if (input_files[ist->file_index]->eagain)

ost->unavailable = 1;

return 0;

}

if (ret < 0)

return ret == AVERROR_EOF ? 0 : ret;

// Output processing (encoding and muxing) happens in here

return reap_filters(0);

}

static int process_input(int file_index)

{

InputFile *ifile = input_files[file_index];

AVFormatContext *is;

InputStream *ist;

AVPacket pkt;

int ret, thread_ret, i, j;

int64_t duration;

int64_t pkt_dts;

is = ifile->ctx;

// Read a packet from the demuxer

ret = get_input_packet(ifile, &pkt);

..................;

// Decode the packet if decoding is needed, then push the decoded frame into the filter graph's buffer source; any input filters run first

process_input_packet(ist, &pkt, 0);

discard_packet:

av_packet_unref(&pkt);

return 0;

}

static int process_input_packet(InputStream *ist, const AVPacket *pkt, int no_eof)

{

int ret = 0, i;

int repeating = 0;

int eof_reached = 0;

AVPacket avpkt;

if (!ist->saw_first_ts) {

ist->dts = ist->st->avg_frame_rate.num ? - ist->dec_ctx->has_b_frames * AV_TIME_BASE / av_q2d(ist->st->avg_frame_rate) : 0;

ist->pts = 0;

if (pkt && pkt->pts != AV_NOPTS_VALUE && !ist->decoding_needed) {

ist->dts += av_rescale_q(pkt->pts, ist->st->time_base, AV_TIME_BASE_Q);

ist->pts = ist->dts; //unused but better to set it to a value thats not totally wrong

}

ist->saw_first_ts = 1;

}

if (ist->next_dts == AV_NOPTS_VALUE)

ist->next_dts = ist->dts;

if (ist->next_pts == AV_NOPTS_VALUE)

ist->next_pts = ist->pts;

if (!pkt) {

/* EOF handling */

av_init_packet(&avpkt);

avpkt.data = NULL;

avpkt.size = 0;

} else {

avpkt = *pkt;

}

if (pkt && pkt->dts != AV_NOPTS_VALUE) {

ist->next_dts = ist->dts = av_rescale_q(pkt->dts, ist->st->time_base, AV_TIME_BASE_Q);

if (ist->dec_ctx->codec_type != AVMEDIA_TYPE_VIDEO || !ist->decoding_needed)

ist->next_pts = ist->pts = ist->dts;

}

// while we have more to decode or while the decoder did output something on EOF

while (ist->decoding_needed) {

int64_t duration_dts = 0;

int64_t duration_pts = 0;

int got_output = 0;

int decode_failed = 0;

ist->pts = ist->next_pts;

ist->dts = ist->next_dts;

switch (ist->dec_ctx->codec_type) {

case AVMEDIA_TYPE_AUDIO:

ret = decode_audio (ist, repeating ? NULL : &avpkt, &got_output,

&decode_failed);

break;

case AVMEDIA_TYPE_VIDEO:

ret = decode_video (ist, repeating ? NULL : &avpkt, &got_output, &duration_pts, !pkt,

&decode_failed);

..................;

break;

case AVMEDIA_TYPE_SUBTITLE:

ret = transcode_subtitles(ist, &avpkt, &got_output, &decode_failed);

...................;

break;

default:

return -1;

}

}

....................;

return !eof_reached;

}

static int decode_video(InputStream *ist, AVPacket *pkt, int *got_output, int64_t *duration_pts, int eof,

int *decode_failed)

{

AVFrame *decoded_frame;

.................;

ret = decode(ist->dec_ctx, decoded_frame, got_output, pkt ? &avpkt : NULL);

...................;

err = send_frame_to_filters(ist, decoded_frame);

.................;

return err < 0 ? err : ret;

}

static int send_frame_to_filters(InputStream *ist, AVFrame *decoded_frame)

{

int i, ret;

AVFrame *f;

..................;

ret = ifilter_send_frame(ist->filters[i], f);

return ret;

}

static int ifilter_send_frame(InputFilter *ifilter, AVFrame *frame)

{

FilterGraph *fg = ifilter->graph;

......................;

ret = av_buffersrc_add_frame_flags(ifilter->filter, frame, AV_BUFFERSRC_FLAG_PUSH);

......................;

return 0;

}

static int reap_filters(int flush)

{

AVFrame *filtered_frame = NULL;

int i;

.......................;

filtered_frame = ost->filtered_frame;

while (1) {

double float_pts = AV_NOPTS_VALUE; // this is identical to filtered_frame.pts but with higher precision

// Pull a filtered frame from the filter graph's buffer sink

ret = av_buffersink_get_frame_flags(filter, filtered_frame,

AV_BUFFERSINK_FLAG_NO_REQUEST);

..................;

switch (av_buffersink_get_type(filter)) {

case AVMEDIA_TYPE_VIDEO:

.......................;

// The video output path is the one to follow

do_video_out(of, ost, filtered_frame, float_pts);

break;

case AVMEDIA_TYPE_AUDIO:

.......................;

do_audio_out(of, ost, filtered_frame);

break;

default:

av_assert0(0);

}

av_frame_unref(filtered_frame);

}

}

return 0;

}

static void do_video_out(OutputFile *of,

OutputStream *ost,

AVFrame *next_picture,

double sync_ipts)

{

...........................;

// Send the frame to the encoder

ret = avcodec_send_frame(enc, in_picture);

// Make sure Closed Captions will not be duplicated

av_frame_remove_side_data(in_picture, AV_FRAME_DATA_A53_CC);

while (1) {

// Read encoded packets back from the encoder

ret = avcodec_receive_packet(enc, &pkt);

...................;

frame_size = pkt.size;

// Hand the packet to the muxer

output_packet(of, &pkt, ost, 0);

}

........................;

}

...........................;

}
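The shape of do_video_out, one avcodec_send_frame followed by a loop over avcodec_receive_packet, is easier to see with the codec taken out. The sketch below replaces the encoder with a toy that holds back a fixed number of frames. Everything named `Fake`/`fake_` is hypothetical, frames and packets are plain ints, and `FAKE_EOF` is an arbitrary stand-in for AVERROR_EOF. It only illustrates the "send once, drain until EAGAIN, flush with NULL" pattern.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

#define FAKE_EAGAIN (-EAGAIN)
#define FAKE_EOF    (-1000)   /* arbitrary stand-in for AVERROR_EOF */

typedef struct FakeEncoder {
    int pending[4];   /* frames accepted but not yet emitted */
    int nb_pending;
    int delay;        /* frames the encoder holds back until flushed */
    int flushing;
} FakeEncoder;

/* frame == NULL starts flushing, like avcodec_send_frame(enc, NULL). */
static int fake_send_frame(FakeEncoder *e, const int *frame)
{
    assert(e);
    if (!frame) {
        e->flushing = 1;
        return 0;
    }
    if (e->flushing)
        return FAKE_EOF;
    if (e->nb_pending == 4)
        return FAKE_EAGAIN;
    e->pending[e->nb_pending++] = *frame;
    return 0;
}

/* Emit the oldest pending frame as a "packet" if the delay allows it. */
static int fake_receive_packet(FakeEncoder *e, int *pkt)
{
    int keep;

    assert(e && pkt);
    keep = e->flushing ? 0 : e->delay;
    if (e->nb_pending <= keep)
        return e->flushing ? FAKE_EOF : FAKE_EAGAIN;
    *pkt = e->pending[0];
    for (int i = 1; i < e->nb_pending; i++)
        e->pending[i - 1] = e->pending[i];
    e->nb_pending--;
    return 0;
}

/* Send one frame (NULL to flush), then drain every packet that is ready.
 * Returns the number of packets stored in out, or a negative error. */
static int fake_encode(FakeEncoder *e, const int *frame, int *out, int max_out)
{
    int n = 0;
    int ret = fake_send_frame(e, frame);

    assert(out);
    if (ret < 0)
        return ret;
    while (n < max_out) {
        ret = fake_receive_packet(e, &out[n]);
        if (ret == FAKE_EAGAIN || ret == FAKE_EOF)
            break;            /* nothing more available for now */
        if (ret < 0)
            return ret;
        n++;                  /* the real code calls output_packet() here */
    }
    return n;
}
```

With `delay = 1`, the first call yields no packet, the second yields the first frame, and a final call with NULL releases the last one. In ffmpeg.c that final drain is the job of flush_encoders() at the end of transcode().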

static void output_packet(OutputFile *of, AVPacket *pkt,

OutputStream *ost, int eof)

{

...........................;

write_packet(of, pkt, ost, 0);

...........................;

}

static void write_packet(OutputFile *of, AVPacket *pkt, OutputStream *ost, int unqueue)

{

AVFormatContext *s = of->ctx;

AVStream *st = ost->st;

.........................;

ret = av_interleaved_write_frame(s, pkt);

.........................;

}

// mux.c

int av_interleaved_write_frame(AVFormatContext *s, AVPacket *pkt)

{

int ret, flush = 0;

ret = prepare_input_packet(s, pkt);

.........;

for (;; ) {

AVPacket opkt;

int ret = interleave_packet(s, &opkt, pkt, flush);

................;

ret = write_packet(s, &opkt);

}

return ret;

}

static int write_packet(AVFormatContext *s, AVPacket *pkt)

{

int ret;

int64_t pts_backup, dts_backup;

......................;

if ((pkt->flags & AV_PKT_FLAG_UNCODED_FRAME)) {

AVFrame *frame = (AVFrame *)pkt->data;

av_assert0(pkt->size == UNCODED_FRAME_PACKET_SIZE);

ret = s->oformat->write_uncoded_frame(s, pkt->stream_index, &frame, 0);

av_frame_free(&frame);

} else {

// Write to the concrete muxer, which is ff_image2_muxer in this example

ret = s->oformat->write_packet(s, pkt);

}

............................;

return ret;

}
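The last hop, `s->oformat->write_packet(s, pkt)`, is a plain function-pointer dispatch: the format context carries a pointer to a muxer description, and the generic code calls whatever that muxer registered. Since the command gives no -f for the output, the muxer is guessed from the file name. The sketch below mimics both steps under loud assumptions: all `Mini`/`mini_` names, the two-entry extension table and the byte-counting "muxers" are hypothetical, and the real lookup in libavformat is more involved than a suffix match.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef struct MiniFormatContext MiniFormatContext;

typedef struct MiniOutputFormat {
    const char *name;
    int (*write_packet)(MiniFormatContext *s, const unsigned char *data, int size);
} MiniOutputFormat;

struct MiniFormatContext {
    const MiniOutputFormat *oformat;   /* chosen muxer */
    int files_written;
    int bytes_written;
};

/* Toy image muxer: pretends each packet becomes one image file. */
static int mini_image2_write_packet(MiniFormatContext *s,
                                    const unsigned char *data, int size)
{
    (void)data;
    s->files_written++;
    s->bytes_written += size;
    return 0;
}

/* Toy raw muxer: pretends packets are appended to a single file. */
static int mini_raw_write_packet(MiniFormatContext *s,
                                 const unsigned char *data, int size)
{
    (void)data;
    s->bytes_written += size;
    return 0;
}

static const MiniOutputFormat mini_image2_muxer = { "image2",   mini_image2_write_packet };
static const MiniOutputFormat mini_raw_muxer    = { "rawvideo", mini_raw_write_packet };

/* Hypothetical extension table; only here to show the lookup. */
static const struct { const char *ext; const MiniOutputFormat *fmt; } mini_ext_table[] = {
    { "jpg", &mini_image2_muxer },
    { "yuv", &mini_raw_muxer },
};

/* Choose a muxer from the file name's extension, or NULL if unknown. */
static const MiniOutputFormat *mini_guess_format(const char *filename)
{
    const char *ext;

    assert(filename);
    ext = strrchr(filename, '.');
    if (!ext)
        return NULL;
    for (size_t i = 0; i < sizeof(mini_ext_table) / sizeof(mini_ext_table[0]); i++)
        if (!strcmp(ext + 1, mini_ext_table[i].ext))
            return mini_ext_table[i].fmt;
    return NULL;
}

/* Generic layer: forwards to whichever muxer the context points at. */
static int mini_write_packet(MiniFormatContext *s, const unsigned char *data, int size)
{
    assert(s && s->oformat && s->oformat->write_packet);
    return s->oformat->write_packet(s, data, size);
}
```

For the path used in this article, `mini_guess_format` returns the toy image2 entry, and `mini_write_packet` then reaches `mini_image2_write_packet` without the caller knowing which muxer is behind the pointer.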