Hadoop Big Data Case Study 1: Setting Up a Hadoop 2.7.3 Pseudo-Distributed Environment

Hadoop Big Data Case Study Series: Recruitment Website Data Analysis

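- Hadoop Big Data Case Study 1: Setting Up a Hadoop 2.7.3 Pseudo-Distributed Environment

- Hadoop Big Data Case Study 2: Collecting Recruitment Website Data with HttpClient and Python

- Hadoop Big Data Case Study 3: Data Preprocessing with MapReduce

- Hadoop Big Data Case Study 4: Data Analysis with Hive

- Hadoop Big Data Case Study 5: Basic SSM Setup for Visualization

- Hadoop Big Data Case Study 6: Data Visualization (SpringBoot + ECharts)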

Deployment Prerequisites

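- Configure the hostname mapping (/etc/hosts)

- Stop the firewall (systemctl stop firewalld, systemctl disable firewalld)

- Disable the Linux security subsystem SELinux (/etc/sysconfig/selinux or /etc/selinux/config)

- Use ping baidu.com to test network connectivity, and install the vim editor (yum install vim)

- Configure the hosts mapping on the host machine (C:\Windows\System32\drivers\etc\hosts)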

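# Configure the hostname mapping (append with >> so existing entries such as localhost are kept)

$ echo 192.168.22.101 node01 >> /etc/hosts

# Stop the firewall and disable it at boot

$ systemctl stop firewalld

$ systemctl disable firewalld

# Disable SELinux (takes effect after a reboot)

$ vi /etc/selinux/config

SELINUX=disabled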
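The two file edits above can also be scripted so that they are safe to repeat. The following is a minimal sketch, assuming bash and GNU sed; add_host_entry and disable_selinux are hypothetical helper names, and both take the target file as a parameter so they can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of any tool); try them on copies of the files first.

# Append "IP HOSTNAME" to a hosts file only if that exact line is not already present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    grep -qxF "$ip $name" "$file" 2>/dev/null || echo "$ip $name" >> "$file"
}

# Rewrite the SELINUX= line to SELINUX=disabled (SELINUXTYPE= is left untouched).
disable_selinux() {
    local file="$1"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```

On the real system these would be called as add_host_entry /etc/hosts 192.168.22.101 node01 and disable_selinux /etc/selinux/config. Running add_host_entry twice leaves a single entry, which avoids duplicate lines when the setup is repeated.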

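Setting Up the Pseudo-Distributed Environment

Set up the pseudo-distributed environment on the node01 node. Downloads: Java download page, Hadoop download page

Installing Java and Hadoop

# Upload JDK 8 and Hadoop 2.7.3 to the /usr/local directory

# Extract the tar archives into the current directory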

[root@node01 local]# tar -zxvf jdk-8u111-linux-x64.tar.gz

[root@node01 local]# tar -zxvf hadoop-2.7.3.tar.gz

# Rename the extracted directories

[root@node01 local]# mv jdk1.8.0_111/ jdk

[root@node01 local]# mv hadoop-2.7.3 hadoop

# Configure the JDK and Hadoop environment variables

[root@node01 local]# vim + ~/.bash_profile

# JAVA_HOME

export JAVA_HOME=/usr/local/jdk

export PATH=$PATH:$JAVA_HOME/bin

# HADOOP_HOME

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

# Reload the profile so that the settings take effect

[root@node01 local]# source ~/.bash_profile

# Verify the configuration

[root@node01 local]# java -version

java version "1.8.0_111"

[root@node01 local]# hadoop version

Hadoop 2.7.3

Configuring Hadoop

Following the official documentation, edit hadoop-env.sh, yarn-env.sh, and mapred-env.sh to set the JDK that Hadoop uses.

[root@node01 local]# vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/local/jdk

[root@node01 local]# vim /usr/local/hadoop/etc/hadoop/yarn-env.sh

export JAVA_HOME=/usr/local/jdk

[root@node01 local]# vim /usr/local/hadoop/etc/hadoop/mapred-env.sh

export JAVA_HOME=/usr/local/jdk

Edit the core-site.xml configuration file

[root@node01 local]# vim + /usr/local/hadoop/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node01:8020</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/data/tmp</value>
  </property>
</configuration>

Edit the hdfs-site.xml configuration file

[root@node01 local]# vim + /usr/local/hadoop/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>node01:50090</value>
  </property>
</configuration>

Configuring MapReduce and YARN

For mapred-site.xml, copy mapred-site.xml.template to mapred-site.xml and add the following settings.

[root@node01 local]# cp /usr/local/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/hadoop/etc/hadoop/mapred-site.xml

[root@node01 local]# vim /usr/local/hadoop/etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

Add the corresponding settings to yarn-site.xml

[root@node01 local]# vim /usr/local/hadoop/etc/hadoop/yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>node01</value>
  </property>
</configuration>

- Add the hostname to the slaves configuration file, which specifies the machines that run the DataNode and NodeManager.

# Replace the node name in slaves

[root@node01 local]# echo node01 > /usr/local/hadoop/etc/hadoop/slaves
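Before formatting HDFS, it can help to confirm that Hadoop actually picks up the values written to core-site.xml and hdfs-site.xml. The sketch below relies on the hdfs getconf -confKey subcommand, which reads the configuration files without needing any daemon to be running; check_conf is a hypothetical helper name, and bash is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare an effective HDFS setting with the value we expect.
check_conf() {
    local key="$1" expected="$2" actual
    # `hdfs getconf -confKey KEY` prints the value Hadoop resolves for KEY.
    actual="$(hdfs getconf -confKey "$key")" || return 1
    if [ "$actual" = "$expected" ]; then
        echo "OK   $key=$actual"
    else
        echo "FAIL $key=$actual (expected $expected)"
        return 1
    fi
}
```

For the configuration in this article, the calls would be check_conf fs.defaultFS hdfs://node01:8020 and check_conf dfs.replication 1. A FAIL line usually points to a typo in the XML or to a file edited in the wrong directory.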

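Configuring Passwordless SSH Login

Generate a key pair for passwordless SSH login: run ssh-keygen and press Enter at each prompt to accept the defaults. Then run ssh-copy-id node01 to copy the public key, entering the password once to complete the passwordless setup.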

[root@node01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:NntmNW1wpOtDSRSoq1RQPPx5PgPmyNggZ1bmcAm+dyA root@node01

The key's randomart image is:

+---[RSA 2048]----+

| .=.. .o.. |

| .o O .. o |

| EB.+ .+ . |

| . =o+.=..* |

| =.*S=.+* o |

| oo=+.++o |

| . .. + oo |

| . + . |

| |

+----[SHA256]-----+

[root@node01 ~]# ssh-copy-id node01
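When the setup needs to be repeatable, the key generation step can be run without prompts. This is a minimal sketch, assuming bash and OpenSSH's ssh-keygen; gen_ssh_key is a hypothetical helper name, and the empty passphrase mirrors the interactive session above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair with an empty passphrase, without prompts.
# Generation is skipped when the key file already exists, so existing keys are never overwritten.
gen_ssh_key() {
    local key_file="${1:-$HOME/.ssh/id_rsa}"
    mkdir -p "$(dirname "$key_file")"
    chmod 700 "$(dirname "$key_file")"
    [ -f "$key_file" ] || ssh-keygen -q -t rsa -N "" -f "$key_file"
}
```

Calling gen_ssh_key with no argument targets ~/.ssh/id_rsa, after which ssh-copy-id node01 is run as shown above. The copy step still asks for the password once, because the public key is not yet authorized at that point.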

Formatting HDFS

Run the HDFS format command on the master node node01.

[root@node01 ~]# hdfs namenode -format

20/08/23 17:13:52 INFO common.Storage: Storage directory /usr/local/hadoop-2.7.3/data/namenode has been successfully formatted.

Starting and Testing the Hadoop Services

[root@node01 hadoop]# start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [node01]

node01: starting namenode, logging to /usr/local/hadoop-2.7.3/logs/hadoop-root-namenode-node01.out

node01: starting datanode, logging to /usr/local/hadoop-2.7.3/logs/hadoop-root-datanode-node01.out

Starting secondary namenodes [node01]

node01: starting secondarynamenode, logging to /usr/local/hadoop-2.7.3/logs/hadoop-root-secondarynamenode-node01.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop-2.7.3/logs/yarn-root-resourcemanager-node01.out

node01: starting nodemanager, logging to /usr/local/hadoop-2.7.3/logs/yarn-root-nodemanager-node01.out

[root@node01 hadoop]# jps

3537 Jps

2931 DataNode

3284 ResourceManager

3097 SecondaryNameNode

3385 NodeManager

2827 NameNode
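Checking the jps listing by eye is easy to get wrong, for example by overlooking a missing NameNode because SecondaryNameNode is present. The sketch below automates the check for the five daemons shown above; check_daemons is a hypothetical helper name, and bash with awk is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and report every expected daemon that is missing.
check_daemons() {
    local output missing=0 daemon
    output="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare the second column exactly, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "missing: $daemon"
            missing=1
        fi
    done
    return "$missing"
}
```

A typical call is jps | check_daemons && echo "all daemons running". When a daemon is reported as missing, its log file under the Hadoop logs directory is the first place to look.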

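Testing Access

The node01 hostname is used for access here, so the address mapping must be configured in C:\Windows\System32\drivers\etc\hosts on the local machine.

Setting Up the Hive Environment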

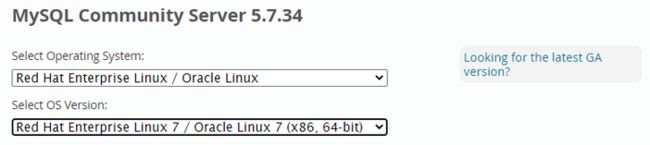

Installing MySQL 5.7 Offline from the RPM Bundle

# Upload the MySQL offline bundle to the /usr/local/mysql57 directory on the Linux system

[root@node03 mysql57]# tar -xvf mysql-5.7.31-1.el7.x86_64.rpm-bundle.tar # extract into the current directory

# Install MySQL from the local RPM packages

[root@node03 mysql57]# yum localinstall -y mysql-community-{server,client,common,libs}-*

# Manually initialize the data directory; the root password is left empty

[root@node mysql5.7]# mysqld --user=mysql --initialize-insecure

[root@node mysql5.7]# systemctl start mysqld # start the MySQL service

[root@node mysql5.7]# systemctl enable mysqld # start the MySQL service at boot

[root@node mysql5.7]# mysql -uroot -p # the password is empty, so just press Enter

- Change the initial password and configure privileges

# Change the password

mysql> ALTER USER 'root'@'localhost' IDENTIFIED BY 'root';

# Allow remote connections

mysql> grant all privileges on *.* to 'root'@'%' identified by 'root' with grant option;

mysql> flush privileges;

Configuring Hive

- Extract the Hive installation package (Hive download page)

[root@node01 local]# tar -zxvf apache-hive-1.2.1-bin.tar.gz

[root@node01 local]# mv apache-hive-1.2.1-bin hive

- Configure the Hive environment variable HIVE_HOME

[root@node01 local]# vim + ~/.bash_profile

export HIVE_HOME=/usr/local/hive

export PATH=$PATH:$HIVE_HOME/bin

[root@node01 local]# source ~/.bash_profile

- Create the conf/hive-site.xml file with the Metastore settings. Make sure the file does not start with a blank line.

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>root</value>
  </property>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
  </property>
</configuration>

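- Initialize the MySQL metastore database

Hive uses the Derby database by default, so its dependency library does not ship a MySQL JDBC driver. Copy a driver JAR into Hive's lib directory, then run bin/schematool -initSchema -dbType mysql to initialize the metastore in MySQL.

- Once initialization is complete, run bin/hive to start Hive.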
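The metastore initialization described above can be sketched as two commands. The example assumes bash, that Hive lives in /usr/local/hive as configured earlier, and that the driver JAR is the mysql-connector-java-5.1.46.jar file also used in the Sqoop section, located in the current directory. The run and init_hive_metastore names are hypothetical helpers; setting DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Copy the MySQL JDBC driver into Hive's lib directory, then create the metastore schema.
# Assumption: the driver JAR sits in the current directory unless a path is passed as $1.
init_hive_metastore() {
    local hive_home="${HIVE_HOME:-/usr/local/hive}"
    local driver_jar="${1:-mysql-connector-java-5.1.46.jar}"
    run cp "$driver_jar" "$hive_home/lib/" &&
        run "$hive_home/bin/schematool" -dbType mysql -initSchema
}
```

Running DRY_RUN=1 init_hive_metastore first shows exactly what would be executed. The schema should be initialized only once; rerunning schematool against an already initialized database reports an error rather than resetting it.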

Installing and Deploying Sqoop

- Extract the archive into the installation directory and rename the folder. (Sqoop download page)

[root@node01 local]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /usr/local/

[root@node01 local]# mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop

- Add the environment variables and reload the profile

[root@node01 lib]# vim ~/.bash_profile

## SQOOP_HOME

export SQOOP_HOME=/usr/local/sqoop

export PATH=$PATH:$SQOOP_HOME/bin

[root@node01 lib]# source ~/.bash_profile

- Sqoop is used with both MySQL and Hive here, so copy the MySQL driver mysql-connector-java-5.1.46.jar and the Hive component JARs hive-exec-1.2.1.jar and hive-common-1.2.1.jar into Sqoop's lib dependency directory.

[root@node01 lib]# cp mysql-connector-java-5.1.46.jar hive-common-1.2.1.jar hive-exec-1.2.1.jar /usr/local/sqoop/lib/

- Test the installation

[root@node01 bin]# sqoop list-databases --connect jdbc:mysql://node01:3306?useSSL=false --username root --password root

20/09/06 14:12:36 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

20/09/06 14:12:36 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

20/09/06 14:12:37 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hive

mysql

performance_schema

sys
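The warning in the output above recommends -P, which makes Sqoop prompt for the password instead of exposing it on the command line and in the shell history. A minimal sketch of the same test with that option follows; list_databases is a hypothetical wrapper name, and the node01 host and root user are the values used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: list databases while prompting for the password (-P)
# instead of passing it with --password.
list_databases() {
    local host="${1:-node01}" user="${2:-root}"
    # The URL is quoted so the shell never treats "?" as a glob character.
    sqoop list-databases \
        --connect "jdbc:mysql://${host}:3306?useSSL=false" \
        --username "$user" \
        -P
}
```

Calling list_databases with no arguments reproduces the test above, except that the password is typed at the prompt.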

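The next chapter uses IDEA to write a Java crawler that collects recruitment website data and stores the collected data in the HDFS distributed file system.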