P30 Training on the GPU (Part 1)

Create a new Python file, train_gpu_1.py.

Copy the earlier train3 script over, but don't import the model this time: type out class Tudui by hand in this file instead.

Full code:

import torch
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torch import nn

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10("./P27_datasets", train=True,
                                          transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("./P27_datasets", train=False,
                                         transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)
# e.g. if train_data_size were 10, this would print: Training set size: 10
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Wrap the datasets in DataLoaders
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Build the network
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x

tudui = Tudui()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer
learning_rate = 0.001
# The same value in scientific notation: learning_rate = 1e-3  (1e-2 would be 0.01)
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0   # number of training steps so far
total_test_step = 0    # number of test rounds so far
epoch = 10             # number of epochs

# TensorBoard
writer = SummaryWriter("./P28_trian3_tensorboard")

for i in range(epoch):
    print("--------Epoch {} training starts-----".format(i + 1))

    # Training
    for data in train_dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Count training steps and log every 100th one
        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Testing
    total_accuracy = 0
    total_test_loss = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy = total_accuracy + accuracy

    # .item() turns the 0-dim tensor into a plain Python float for printing/logging
    test_accuracy = (total_accuracy / test_data_size).item()
    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(test_accuracy))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", test_accuracy, total_test_step)
    total_test_step = total_test_step + 1

    # Save the model after every epoch
    torch.save(tudui, "tudui_{}.pth".format(i))
    print("Model saved")

writer.close()
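Because the class is typed in by hand, it is easy to get `nn.Linear(64*4*4, 64)` wrong. A quick sanity check (not part of the original script, just a sketch) is to push a dummy batch through the network and look at the output shape; `dummy` is a hypothetical all-ones tensor with the CIFAR10 image shape 3×32×32 and batch size 64.

```python
import torch
from torch import nn

# Same architecture as the Tudui class above; comments track the feature-map size
class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=2),  # 32x32 stays 32x32
            nn.MaxPool2d(2),                                       # -> 16x16
            nn.Conv2d(32, 32, 5, 1, 2),                            # stays 16x16
            nn.MaxPool2d(2),                                       # -> 8x8
            nn.Conv2d(32, 64, 5, 1, 2),                            # stays 8x8
            nn.MaxPool2d(2),                                       # -> 4x4
            nn.Flatten(),                                          # 64 * 4 * 4 = 1024 features
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        return self.model(x)

tudui = Tudui()
dummy = torch.ones((64, 3, 32, 32))  # hypothetical batch shaped like one CIFAR10 batch
output = tudui(dummy)
print(output.shape)  # torch.Size([64, 10])
```

With kernel size 5, stride 1 and padding 2, each convolution keeps the spatial size, so only the three max-pools shrink it (32 → 16 → 8 → 4), which is where 64·4·4 comes from.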

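I. Calling .cuda()

1. Move the network model tudui to CUDA.
2. Move the loss function to CUDA (nn.CrossEntropyLoss has no parameters, so this particular call changes nothing, but it does no harm).
3. Move the data to CUDA: imgs and targets of every batch, in both the training loop and the test loop.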
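One detail behind these three steps: `nn.Module.cuda()` moves the parameters in place and returns the module itself, whereas `Tensor.cuda()` returns a new copy and leaves the original tensor where it was. That is why `imgs = imgs.cuda()` needs the assignment. The sketch below uses a tiny hypothetical `nn.Linear` model and random data as stand-ins for tudui and CIFAR10, and falls back to the CPU when no GPU is present.

```python
import torch
from torch import nn

model = nn.Linear(4, 2)               # hypothetical stand-in for tudui
loss_fn = nn.CrossEntropyLoss()
imgs = torch.randn(8, 4)              # hypothetical stand-in for a batch of images
targets = torch.randint(0, 2, (8,))

if torch.cuda.is_available():
    model.cuda()                      # in place: the module's parameters now live on the GPU
    loss_fn = loss_fn.cuda()
    imgs.cuda()                       # mistake: the GPU copy is discarded, imgs is still on the CPU
    print(imgs.device)                # cpu
    imgs = imgs.cuda()                # correct: keep the returned copy
    targets = targets.cuda()

# Model, inputs and labels must be on the same device before the forward pass
loss = loss_fn(model(imgs), targets)
print(next(model.parameters()).device, imgs.device, targets.device, loss.device)
```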

import torch
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torch import nn

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10("./P27_datasets", train=True,
                                          transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("./P27_datasets", train=False,
                                         transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)
# e.g. if train_data_size were 10, this would print: Training set size: 10
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Wrap the datasets in DataLoaders
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Build the network
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x

tudui = Tudui()
tudui = tudui.cuda()            # 1. move the model to the GPU

# Loss function
loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.cuda()        # 2. move the loss function to the GPU

# Optimizer
learning_rate = 0.001
# The same value in scientific notation: learning_rate = 1e-3  (1e-2 would be 0.01)
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0   # number of training steps so far
total_test_step = 0    # number of test rounds so far
epoch = 10             # number of epochs

# TensorBoard
writer = SummaryWriter("./P28_trian3_tensorboard")

for i in range(epoch):
    print("--------Epoch {} training starts-----".format(i + 1))

    # Training
    for data in train_dataloader:
        imgs, targets = data
        imgs = imgs.cuda()          # 3. move each training batch to the GPU
        targets = targets.cuda()
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Count training steps and log every 100th one
        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Testing
    total_accuracy = 0
    total_test_loss = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            imgs = imgs.cuda()      # 3. the test batches go to the GPU as well
            targets = targets.cuda()
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy = total_accuracy + accuracy

    # .item() turns the 0-dim GPU tensor into a plain Python float for printing/logging
    test_accuracy = (total_accuracy / test_data_size).item()
    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(test_accuracy))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", test_accuracy, total_test_step)
    total_test_step = total_test_step + 1

    # Save the model after every epoch
    torch.save(tudui, "tudui_{}.pth".format(i))
    print("Model saved")

writer.close()
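The accuracy line `(outputs.argmax(1) == targets).sum()` is compact, so here is a toy run of it on made-up logits (a sketch; the numbers are invented, not CIFAR10 output). `argmax(1)` picks the highest-scoring class in each row, the comparison gives a boolean per sample, and `sum()` counts the correct ones; `.item()` then turns the 0-dim tensor into a Python float, which on the GPU also drops the `device='cuda:0'` part from the printed value.

```python
import torch

outputs = torch.tensor([[0.1, 0.2],
                        [0.3, 0.4]])    # 2 samples x 2 classes (toy logits)
targets = torch.tensor([0, 1])
preds = outputs.argmax(1)               # largest logit per row
correct = (preds == targets).sum()      # only the second sample is right
accuracy = (correct / len(targets)).item()
print(preds, correct, accuracy)         # tensor([1, 1]) tensor(1) 0.5
```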

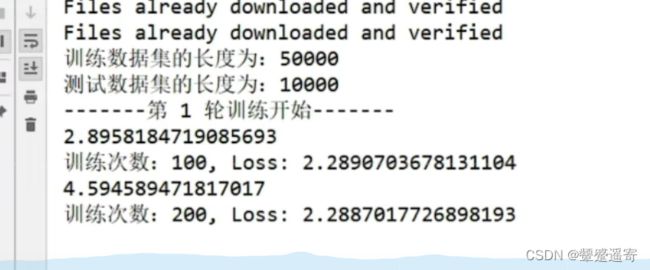

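4. Run it. It is quite fast. The tail of the output (end of epoch 9 and all of epoch 10):

Training step: 6800, Loss: 1.8188573122024536
Training step: 6900, Loss: 1.9893739223480225
Training step: 7000, Loss: 1.9036908149719238
Loss on the whole test set: 305.593309879303
Accuracy on the whole test set: 0.3043999969959259
Model saved
--------Epoch 10 training starts-----
Training step: 7100, Loss: 2.066310167312622
Training step: 7200, Loss: 1.9233098030090332
Training step: 7300, Loss: 2.0878043174743652
Training step: 7400, Loss: 1.7443344593048096
Training step: 7500, Loss: 2.0257949829101562
Training step: 7600, Loss: 1.8980073928833008
Training step: 7700, Loss: 1.9671798944473267
Training step: 7800, Loss: 1.8896164894104004
Loss on the whole test set: 301.40983188152313
Accuracy on the whole test set: 0.31630000472068787
Model saved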
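"Quite fast" can be put into numbers with a small benchmark. The sketch below is an assumption-laden illustration, not the author's measurement: it times 20 SGD steps of the Tudui layers on a random CIFAR10-shaped batch on the CPU and, if available, on the GPU. `torch.cuda.synchronize()` matters because CUDA kernels run asynchronously, so without it the clock would stop before the GPU has finished.

```python
import time
import torch
from torch import nn

def make_model():
    # Same layers as Tudui in the script above
    return nn.Sequential(
        nn.Conv2d(3, 32, 5, 1, 2), nn.MaxPool2d(2),
        nn.Conv2d(32, 32, 5, 1, 2), nn.MaxPool2d(2),
        nn.Conv2d(32, 64, 5, 1, 2), nn.MaxPool2d(2),
        nn.Flatten(), nn.Linear(64 * 4 * 4, 64), nn.Linear(64, 10),
    )

def sync(device):
    # Wait for queued CUDA kernels to finish before reading the clock
    if device.type == "cuda":
        torch.cuda.synchronize()

def time_train_steps(device, steps=20):
    model = make_model().to(device)
    loss_fn = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    # Random tensors shaped like one CIFAR10 batch (hypothetical stand-in for real data)
    imgs = torch.randn(64, 3, 32, 32, device=device)
    targets = torch.randint(0, 10, (64,), device=device)
    sync(device)
    start = time.perf_counter()
    for _ in range(steps):
        loss = loss_fn(model(imgs), targets)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    sync(device)
    return time.perf_counter() - start

timings = {"cpu": time_train_steps(torch.device("cpu"))}
if torch.cuda.is_available():
    time_train_steps(torch.device("cuda"), steps=3)  # warm-up: first CUDA calls pay initialisation costs
    timings["cuda"] = time_train_steps(torch.device("cuda"))
for name, seconds in timings.items():
    print("{}: {:.3f} s for 20 training steps".format(name, seconds))
```

The absolute numbers depend entirely on the hardware; the warm-up run keeps one-time CUDA start-up costs out of the GPU measurement.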

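5. A better way to write it, so the script still runs on a machine without a GPU:

tudui = Tudui()
if torch.cuda.is_available():
    tudui = tudui.cuda()
……
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()
……
# inside the training loop
if torch.cuda.is_available():
    imgs = imgs.cuda()
    targets = targets.cuda()
……
# inside the test loop
if torch.cuda.is_available():
    imgs = imgs.cuda()
    targets = targets.cuda()

But repeating this check everywhere is rather tedious.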
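A common way to avoid repeating `torch.cuda.is_available()` is to pick a `torch.device` once and move everything with `.to(device)`. This is a sketch of that alternative rather than the approach used in this lesson; the small `nn.Sequential` model and the random batch are hypothetical stand-ins for Tudui and a CIFAR10 batch.

```python
import torch
from torch import nn

# Decide once; the rest of the code is identical with or without a GPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)).to(device)  # stand-in for Tudui
loss_fn = nn.CrossEntropyLoss().to(device)

# A batch as it comes out of the DataLoader (always on the CPU)
imgs = torch.randn(64, 3, 32, 32)
targets = torch.randint(0, 10, (64,))
imgs, targets = imgs.to(device), targets.to(device)

outputs = model(imgs)
loss = loss_fn(outputs, targets)
print(device, outputs.shape, loss.item())
```

In the training script this would replace every `if torch.cuda.is_available(): ... .cuda()` block with a single `.to(device)` call.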

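6. Set the learning rate to 0.01 (learning_rate = 1e-2) and run it again:

The results are now about the same as the video author's: test accuracy above 50% (54.2% after epoch 9 and 56.5% after epoch 10, compared with 31.6% after 10 epochs at 0.001).

--------Epoch 9 training starts-----
Training step: 6300, Loss: 1.4029728174209595
Training step: 6400, Loss: 1.1114188432693481
Training step: 6500, Loss: 1.5977591276168823
Training step: 6600, Loss: 1.0503333806991577
Training step: 6700, Loss: 1.132214069366455
Training step: 6800, Loss: 1.1668838262557983
Training step: 6900, Loss: 1.1765074729919434
Training step: 7000, Loss: 0.9608389735221863
Loss on the whole test set: 201.16121822595596
Accuracy on the whole test set: 0.5424999594688416
Model saved
--------Epoch 10 training starts-----
Training step: 7100, Loss: 1.2530179023742676
Training step: 7200, Loss: 1.0047394037246704
Training step: 7300, Loss: 1.133361577987671
Training step: 7400, Loss: 0.8593324422836304
Training step: 7500, Loss: 1.2483118772506714
Training step: 7600, Loss: 1.2560092210769653
Training step: 7700, Loss: 0.8705365061759949
Training step: 7800, Loss: 1.2331193685531616
Loss on the whole test set: 192.12796568870544
Accuracy on the whole test set: 0.5651999711990356
Model saved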
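Why does one number change the result this much? Plain SGD (no momentum, as configured here) updates each parameter as w ← w − lr · grad, so with the same gradient a learning rate of 0.01 moves the weights ten times further per step than 0.001. Over the same 10 epochs, the smaller rate simply has not travelled as far. A toy sketch with a single hypothetical parameter `w` and loss 3·w (gradient 3):

```python
import torch

def one_sgd_step(lr):
    w = torch.tensor([1.0], requires_grad=True)  # a single toy parameter
    optimizer = torch.optim.SGD([w], lr=lr)
    loss = (w * 3).sum()                         # d(loss)/dw = 3
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()                             # plain SGD: w <- w - lr * grad
    return w.item()

print(one_sgd_step(0.001))  # about 0.997 (step of 0.003)
print(one_sgd_step(0.01))   # about 0.97  (step of 0.03)
```

A larger rate is not always better (too large and the loss can diverge), but for this network and 10 epochs, 0.01 clearly trains faster.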