Prometheus Rule Definitions and Simple Docker-Based Email and DingTalk Alerting

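Contents

Rule files define alerting rules in Prometheus, i.e., they generate alerts when specific conditions are met.

Recording rule files define recording rules in Prometheus, i.e., they generate new time series from existing metric data.

Starting Grafana

Introduction to Alertmanager

Overview of alerting

Alerting logic of the Prometheus monitoring system

Starting Alertmanager

Enabling alerting:

Defining alert delivery

Defining alert rules on Prometheus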

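Rule files define alerting rules in Prometheus, i.e., they generate alerts when specific conditions are met.

In a rule file, every rule belongs to a group under `groups`: rules can only be defined inside a group, and every rule has a name.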
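To make the group/rule nesting concrete, here is a minimal sketch of one group holding one alerting rule. It assumes the `up` metric that Prometheus records for every scrape target; the file name, group name, alert name and thresholds are hypothetical, chosen only for illustration.

```yaml
# Hypothetical file rules/alert-rules-example.yml; the name is chosen so that
# it matches the rules/alert-rules-*.yml glob used in prometheus.yml.
groups:
  - name: instance-health            # group name (hypothetical)
    rules:
      - alert: InstanceDown          # alerting rules are named with "alert"
        expr: up == 0                # PromQL: the target's last scrape failed
        for: 1m                      # condition must hold for 1m before firing
        labels:
          severity: critical         # label later used for routing/inhibition
        annotations:
          summary: "Instance {{ $labels.instance }} is down"
          description: "{{ $labels.job }}/{{ $labels.instance }} has been unreachable for more than 1 minute."
```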

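Rule file:

groups:                  # rule group definitions
- name:
  rules:
  - alert:
    expr: PromQL
  - alert:
    expr:
- name:                  # rules in the second group
  rules:
  - alert:
    expr:

Recording rule files define recording rules in Prometheus, i.e., they generate new time series from existing metric data.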

Recording rules are named with `record`, while rule groups are named with `name`.
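As an illustration of a filled-in recording rule, the sketch below precomputes per-instance CPU utilization. It assumes node_exporter metrics (`node_cpu_seconds_total`) are being scraped; the group name, the recorded series name (following the usual `level:metric:operations` convention) and the extra label are hypothetical.

```yaml
# Hypothetical file rules/record-rules-node.yml (matches rules/record-rules-*.yml).
groups:
  - name: node-cpu                                    # group name (hypothetical)
    rules:
      - record: instance:node_cpu_utilization:ratio   # name of the new series
        # fraction of non-idle CPU time per instance over the last 5 minutes
        expr: '1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[5m]))'
        labels:
          team: devops                                # optional extra label (hypothetical)
```

Dashboards and alert rules can then query `instance:node_cpu_utilization:ratio` directly instead of re-evaluating the `rate()` expression each time.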

Recording rule file:

groups:
- name:
  rules:
  - record:
    expr: PromQL
    labels: {}
  - record:
    expr:
    labels: {}

Starting Grafana

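When Grafana is started with Docker, the Prometheus data source must be configured with the Prometheus server's IP address (the `url` field below); alternatively, you can download and install Grafana and configure it there.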

[root@rocky8 datasources]#pwd
/root/learning-prometheus-master/08-prometheus-components-compose/grafana/grafana/provisioning/datasources

[root@rocky8 datasources]#cat all.yml
# config file version
apiVersion: 1

# list of datasources that should be deleted from the database
deleteDatasources:
  - name: Prometheus
    orgId: 1

# list of datasources to insert/update depending on
# what's available in the database
datasources:
  # name of the datasource. Required
  - name: 'Prometheus'
    type: 'prometheus'
    access: 'proxy'
    orgId: 1              # apiVersion 1 expects camelCase keys (orgId, isDefault)
    url: 'http://10.0.0.8:9090'
    isDefault: true
    version: 1
    editable: true

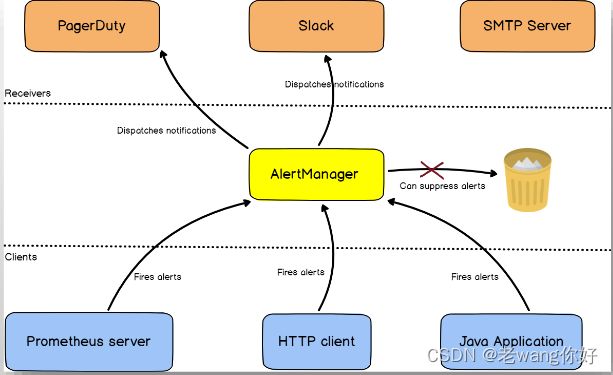
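The compose file used to start Grafana is not shown above. A minimal sketch, assuming the compose file sits in `08-prometheus-components-compose/grafana/` next to the `grafana/provisioning/` tree; the image tag is an assumption for illustration. The official image reads provisioning files from `/etc/grafana/provisioning` at startup, so mounting the tree there loads `datasources/all.yml` automatically.

```yaml
# Hypothetical docker-compose.yml for Grafana (not taken from the original repo).
version: '3.6'
services:
  grafana:
    image: grafana/grafana:9.5.2          # assumed tag; pick the version you need
    ports:
      - "3000:3000"                       # Grafana web UI
    volumes:
      # provisioning/datasources/all.yml is picked up when the container starts
      - ./grafana/provisioning/:/etc/grafana/provisioning/
    restart: always
```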

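Introduction to Alertmanager

Overview of alerting

In Prometheus, metric collection and storage on one side and alerting on the other are split between two independent components, Prometheus Server and Alertmanager: the former only generates alert notifications based on "alerting rules", while the latter carries out the actual alerting actions;

◼ Alertmanager processes alert notifications sent by clients

◆ The client is usually Prometheus Server, but Alertmanager can also accept alerts from other tools;

◆ Alertmanager groups and deduplicates the notifications, then routes them according to its routing rules to different receivers, such as email, SMS, or PagerDuty;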

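Alerting logic of the Prometheus monitoring system

First, configure Prometheus as an alerting client of Alertmanager;

- Conversely, Alertmanager is itself an application, so it should also be added to Prometheus's monitoring targets;

Configuration logic

◼ Define receivers in Alertmanager; a receiver is usually a specific user who can receive alert messages through some medium;

- Email, WeChat, PagerDuty, Slack, and webhooks are common media for delivering alerts;

- Each medium represents the recipient's address in its own way;

◼ Define routing rules (route) in Alertmanager so that incoming alert notifications are handled as needed;

◼ Define alerting rules in Prometheus to generate alert notifications and send them to Alertmanager;

Beyond basic notification, Alertmanager also supports deduplication, grouping, inhibition, silencing, and routing of alerts;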
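Routing is easiest to see as a tree. The fragment below is a sketch, not the configuration used later in this article: it assumes alerts carry a `severity` label and reuses the receiver names `team-devops-email` and `team-devops-dingtalk` from the later config files. The `matchers` syntax requires Alertmanager v0.22 or newer (v0.25.0 is used here).

```yaml
# Sketch of a routing tree in Alertmanager's config.yml (illustrative only).
route:
  receiver: team-devops-email          # default receiver when no child route matches
  group_by: ['job', 'alertname']       # one notification per job + alertname group
  routes:
    - matchers:
        - 'severity="critical"'        # critical alerts...
      receiver: team-devops-dingtalk   # ...are sent to DingTalk
    - matchers:
        - 'severity="warning"'         # warnings...
      receiver: team-devops-email      # ...are sent by email
```

Child routes are evaluated in order and the first match wins unless a route sets `continue: true`; anything unmatched falls back to the top-level receiver.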

Starting Alertmanager

[root@rocky8 alertmanager]#cat docker-compose.yml
version: '3.6'

volumes:
  alertmanager_data: {}

networks:
  monitoring:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.55.0/24

services:
  alertmanager:
    image: prom/alertmanager:v0.25.0
    volumes:
      - ./alertmanager/:/etc/alertmanager/
      - alertmanager_data:/alertmanager
    networks:
      - monitoring
    restart: always
    ports:
      - 9093:9093
    command:
      - '--config.file=/etc/alertmanager/config.yml'
      - '--storage.path=/alertmanager'
      - '--log.level=debug'

[root@rocky8 alertmanager]#docker-compose up

[root@rocky8 alertmanager]#pwd

/root/learning-prometheus-master/08-prometheus-components-compose/alertmanager

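Enabling alerting:

1. On the Prometheus server, write and load the alert rules;

2. On the Prometheus server, specify which Alertmanager to use;

3. In Alertmanager, define the alert receivers:

- email

- DingTalk

- WeChat

- SMS

4. Add Alertmanager itself to the monitoring targets;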
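Step 4 is an ordinary scrape job, because Alertmanager exposes its own metrics at `/metrics` on its web port. A minimal sketch for `prometheus.yml`, assuming the Alertmanager address `10.0.0.18:9093` used in the alerting section of this article; the job name is hypothetical.

```yaml
# Fragment for prometheus.yml: scrape Alertmanager's own metrics.
scrape_configs:
  - job_name: 'alertmanager'           # hypothetical job name
    # metrics_path defaults to /metrics, which Alertmanager serves on port 9093
    static_configs:
      - targets: ['10.0.0.18:9093']
```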

Defining alert delivery

[root@rocky8 alertmanager]#cat email_template.tmpl    # template that formats the alert notifications
{{ define "email.default.html" }}
{{- if gt (len .Alerts.Firing) 0 -}}
{{ range .Alerts.Firing }}
=========start==========
Alert source: prometheus_alert
Severity: {{ .Labels.severity }}
Alert name: {{ .Labels.alertname }}
Host: {{ .Labels.instance }}
Summary: {{ .Annotations.summary }}
Details: {{ .Annotations.description }}
Fired at: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
=========end==========
{{ end }}{{ end -}}
{{- if gt (len .Alerts.Resolved) 0 -}}
{{ range .Alerts.Resolved }}
=========start==========
Alert source: prometheus_alert
Severity: {{ .Labels.severity }}
Alert name: {{ .Labels.alertname }}
Host: {{ .Labels.instance }}
Summary: {{ .Annotations.summary }}
Details: {{ .Annotations.description }}
Fired at: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
Resolved at: {{ .EndsAt.Format "2006-01-02 15:04:05" }}
=========end==========
{{ end }}{{ end -}}
{{- end }}

[root@rocky8 alertmanager]#cat config.yml    # main Alertmanager configuration
global:
  resolve_timeout: 1m
  smtp_smarthost: 'smtp.qq.com:465'
  smtp_from: '[email protected]'
  smtp_auth_username: '[email protected]'
  smtp_auth_password: 'uvytiziidqbbid'
  smtp_hello: '@qq.com'
  smtp_require_tls: false

route:
  # The labels by which incoming alerts are grouped together. For example,
  # multiple alerts coming in for job=mysqld-exporter and alertname=mysql_down would
  # be batched into a single group.
  group_by: ['job', 'alertname']
  # When a new group of alerts is created by an incoming alert, wait at
  # least 'group_wait' to send the initial notification.
  # This way ensures that you get multiple alerts for the same group that start
  # firing shortly after another are batched together on the first notification.
  group_wait: 10s
  # When the first notification was sent, wait 'group_interval' to send a batch
  # of new alerts that started firing for that group.
  group_interval: 10s
  # If an alert has successfully been sent, wait 'repeat_interval' to resend them.
  repeat_interval: 5m
  receiver: team-devops-email
  #receiver: team-devops-wechat

templates:
  - '/etc/alertmanager/email_template.tmpl'
  - '/etc/alertmanager/wechat_template.tmpl'

# Define the receivers
receivers:
- name: 'team-devops-email'
  email_configs:
  - to: '[email protected]'
    headers:
      subject: "{{ .Status | toUpper }} {{ .CommonLabels.env }}:{{ .CommonLabels.cluster }} {{ .CommonLabels.alertname }}"
    html: '{{ template "email.default.html" . }}'
    send_resolved: true
- name: 'team-devops-wechat'
  wechat_configs:
  - corp_id: ww4c893118fbf4d07c
    to_user: '@all'
    agent_id: 1000003
    api_secret: WTepmmaqxbBOeTQOuxa0Olzov_hSEWsZWrPX1k6opMk
    send_resolved: true

inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'job']
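The inhibit rule above means: while a `critical` alert is firing, suppress `warning` alerts that share the same `alertname` and `job`. Since Alertmanager v0.22, `source_match`/`target_match` are deprecated in favour of matcher lists; an equivalent sketch in the newer syntax, assuming the same labels:

```yaml
# Same inhibition expressed with the matcher syntax (Alertmanager >= v0.22).
inhibit_rules:
  - source_matchers:
      - 'severity="critical"'        # while a critical alert is firing...
    target_matchers:
      - 'severity="warning"'         # ...mute warning alerts...
    equal: ['alertname', 'job']      # ...that have the same alertname and job
```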

Defining alert rules on Prometheus

[root@rocky8 rules]#pwd
/usr/local/prometheus/rules

[root@rocky8 rules]#cat alert-rules-blackbox-exporter.yml
groups:
- name: blackbox
  rules:
  # Blackbox probe failed
  - alert: BlackboxProbeFailed
    expr: probe_success == 0
    for: 0m
    labels:
      severity: critical
    annotations:
      summary: Blackbox probe failed (instance {{ $labels.instance }})
      description: "Probe failed\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

  # Blackbox slow probe
  - alert: BlackboxSlowProbe
    expr: avg_over_time(probe_duration_seconds[1m]) > 1
    for: 1m
    labels:
      severity: warning
    annotations:
      summary: Blackbox slow probe (instance {{ $labels.instance }})
      description: "Blackbox probe took more than 1s to complete\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

  # Blackbox probe HTTP failure
  - alert: BlackboxProbeHttpFailure
    expr: probe_http_status_code <= 199 OR probe_http_status_code >= 400
    for: 0m
    labels:
      severity: critical
    annotations:
      summary: Blackbox probe HTTP failure (instance {{ $labels.instance }})
      description: "HTTP status code is not 200-399\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

  # Blackbox probe slow HTTP
  - alert: BlackboxProbeSlowHttp
    expr: avg_over_time(probe_http_duration_seconds[1m]) > 1
    for: 1m
    labels:
      severity: warning
    annotations:
      summary: Blackbox probe slow HTTP (instance {{ $labels.instance }})
      description: "HTTP request took more than 1s\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

  # Blackbox probe slow ping
  - alert: BlackboxProbeSlowPing
    expr: avg_over_time(probe_icmp_duration_seconds[1m]) > 1
    for: 1m
    labels:
      severity: warning
    annotations:
      summary: Blackbox probe slow ping (instance {{ $labels.instance }})
      description: "Blackbox ping took more than 1s\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
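The same file pattern can cover Alertmanager itself once it is scraped. A sketch, assuming Alertmanager is scraped under a job named `alertmanager` (hypothetical); `alertmanager_config_last_reload_successful` is a metric Alertmanager exports. Note that an "Alertmanager down" alert is itself delivered through Alertmanager, so it is mainly useful with more than one Alertmanager instance or for visibility in the Prometheus UI.

```yaml
# Hypothetical file rules/alert-rules-alertmanager.yml (matches rules/alert-rules-*.yml).
groups:
- name: alertmanager
  rules:
  # Prometheus cannot scrape Alertmanager (job name is an assumption)
  - alert: AlertmanagerDown
    expr: up{job="alertmanager"} == 0
    for: 1m
    labels:
      severity: critical
    annotations:
      summary: Alertmanager down (instance {{ $labels.instance }})
      description: "Alertmanager target is unreachable\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

  # The last configuration reload failed (e.g. a YAML error in config.yml)
  - alert: AlertmanagerConfigReloadFailed
    expr: alertmanager_config_last_reload_successful == 0
    for: 0m
    labels:
      severity: warning
    annotations:
      summary: Alertmanager configuration reload failed (instance {{ $labels.instance }})
      description: "Last config reload was unsuccessful\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
```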

Have Prometheus load the rules:

[root@rocky8 prometheus]#cat prometheus.yml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - 10.0.0.18:9093 # address of the Alertmanager that alerts are sent to
            # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  - rules/record-rules-*.yml
  - rules/alert-rules-*.yml # alert rule files to load

[root@rocky8 prometheus]#./promtool check config ./prometheus.yml   # validate the config, including the referenced rule files

[root@rocky8 prometheus]#curl -XPOST localhost:9090/-/reload   # reload the config; requires Prometheus to be started with --web.enable-lifecycle

DingTalk alerts

Set the two corresponding values,

then restart the services.
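The `team-devops-dingtalk` receiver below does not talk to DingTalk directly: it posts to a prometheus-webhook-dingtalk bridge at `http://prometheus-webhook-dingtalk:8060/dingtalk/webhook1/send`, and the bridge has its own config file. A minimal sketch, assuming a single DingTalk robot registered as target `webhook1` (the name must match the `webhook1` segment of that URL); the access token and secret are placeholders to replace with your robot's values.

```yaml
# Hypothetical config.yml for prometheus-webhook-dingtalk.
targets:
  webhook1:                # exposed as /dingtalk/webhook1/send
    # robot webhook URL copied from the DingTalk group robot settings (placeholder token)
    url: https://oapi.dingtalk.com/robot/send?access_token=xxxxxxxxxxxx
    # signing secret, only needed if the robot uses "sign" security (placeholder)
    secret: SEC000000000000000000000
```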

[root@rocky8 alertmanager]#pwd
/root/learning-prometheus-master/08-prometheus-components-compose/alertmanager-and-dingtalk/alertmanager

[root@rocky8 alertmanager]#cat config.yml
global:
  resolve_timeout: 1m
  smtp_smarthost: 'smtp.qq.com:465'
  smtp_from: '[email protected]'
  smtp_auth_username: '[email protected]'
  smtp_auth_password: 'ioojgoqmgybfbgfg'
  smtp_hello: '@qq.com'
  smtp_require_tls: false

route:
  # The labels by which incoming alerts are grouped together. For example,
  # multiple alerts coming in for job=mysqld-exporter and alertname=mysql_down would
  # be batched into a single group.
  group_by: ['job', 'alertname']
  # When a new group of alerts is created by an incoming alert, wait at
  # least 'group_wait' to send the initial notification.
  # This way ensures that you get multiple alerts for the same group that start
  # firing shortly after another are batched together on the first notification.
  group_wait: 10s
  # When the first notification was sent, wait 'group_interval' to send a batch
  # of new alerts that started firing for that group.
  group_interval: 10s
  # If an alert has successfully been sent, wait 'repeat_interval' to resend them.
  repeat_interval: 5m
  receiver: team-devops-dingtalk
  #receiver: team-devops-email
  #receiver: team-devops-wechat

templates:
  - '/etc/alertmanager/email_template.tmpl'
  - '/etc/alertmanager/wechat_template.tmpl'

# Define the receivers
receivers:
- name: 'team-devops-email'
  email_configs:
  - to: '[email protected]'
    headers:
      subject: "{{ .Status | toUpper }} {{ .CommonLabels.env }}:{{ .CommonLabels.cluster }} {{ .CommonLabels.alertname }}"
    html: '{{ template "email.default.html" . }}'
    send_resolved: true
- name: 'team-devops-wechat'
  wechat_configs:
  - corp_id: ww4c893118fbf4d07c
    to_user: '@all'
    agent_id: 1000008
    api_secret: WTepmmaqxbBOeTQOuxa0Olzov_hSEWsZWrPX1k6opMk
    send_resolved: true
- name: 'team-devops-dingtalk'
  webhook_configs:
  - url: http://prometheus-webhook-dingtalk:8060/dingtalk/webhook1/send
    send_resolved: true

inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'job']
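For the hostname `prometheus-webhook-dingtalk` in the receiver URL to resolve, the bridge has to run on the same Docker network as Alertmanager, where Docker's embedded DNS resolves service names. A sketch of a compose service to merge into a compose file that already defines the `monitoring` network; the image tag and the host directory `./dingtalk/` are assumptions.

```yaml
# Hypothetical docker-compose.yml fragment for the DingTalk bridge.
services:
  prometheus-webhook-dingtalk:                          # service name = hostname used in the receiver URL
    image: timonwong/prometheus-webhook-dingtalk:v2.1.0 # assumed tag
    volumes:
      - ./dingtalk/:/etc/prometheus-webhook-dingtalk/   # assumed host dir holding the bridge's config.yml
    command:
      - '--config.file=/etc/prometheus-webhook-dingtalk/config.yml'
    networks:
      - monitoring                                      # same network as the alertmanager service
    restart: always
    ports:
      - 8060:8060                                       # bridge listens on 8060 by default
```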