Chiron: K8S in Practice

K8S Container Orchestration

文章目录

-

- 1:k8s集群的安装

-

- 1.1 k8s的架构

- 1.2:修改所有节点IP地址、主机名和host解析

- 1.3:master节点安装etcd

- 1.4:master节点安装kubernetes

- 1.5:所有node节点安装kubernetes

- 6:所有节点配置flannel网络

- 7:配置master为镜像仓库

- 2:什么是k8s,k8s有什么功能?

-

- 2.1 k8s的核心功能

- 2.2 k8s的历史

- 2.3 k8s的安装方式

- 2.4 k8s的应用场景

- 3:k8s常用的资源

-

- 3.1 创建pod资源

- 3.2 ReplicationController资源

- 3.3 service资源

- 3.4 deployment资源

- 3.5 Daemon Set资源

- 3.6 Pet Set资源

- 3.7 Job资源

- 3.8 tomcat+mysql练习

- 4:k8s的附加组件

-

- 4.1 dns服务

- 4.2 namespace命令空间

- 4.3 健康检查

-

- 4.3.1 探针的种类

- 4.3.2 探针的检测方法

- 4.3.3 liveness探针的exec使用:

- 4.3.4 liveness探针的httpGet使用:

- 4.3.5 liveness探针的tcpSocket使用:

- 4.3.6 readiness探针的httpGet使用:

- 4.4 dashboard服务

- 4.5 通过apiservicer反向代理访问service

- 5: k8s弹性伸缩

-

- 5.1 安装heapster监控

- 5.2 弹性伸缩

- 6:持久化存储

-

- 数据持久化类型:

- 6.1 emptyDir:

- 6.2 HostPath:

- 6.3 nfs:

-

- Kubernetes使用NFS共享存储有两种方式:

- 6.4 pvc:

-

- 6.4.1:创建pv和pvc

- 6.4.2:创建mysql-rc,pod模板里使用volume:PVC

- 6.4.3: 验证持久化

- 6.5: 分布式存储glusterfs

- 6.6 k8s 对接glusterfs存储

-

-

- 扩展(不做演示):

-

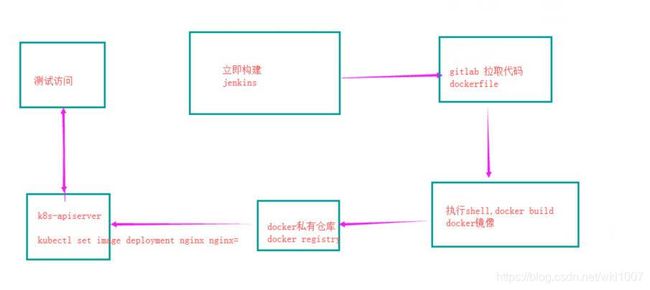

- 7:与jenkins集成实现cd

-

- 7. 1 :将本地代码上传到码云Gitee的仓库中

- 7.2 :安装jenkins,并自动构建docker镜像

-

- 1:安装jenkins

- 2:访问jenkins

- 3:编写Dockerfile,进行本地测试,然后推送到Git仓库中

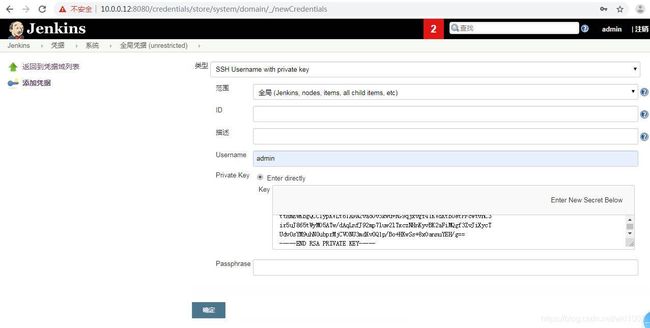

- 4:配置jenkins拉取Git仓库代码凭据

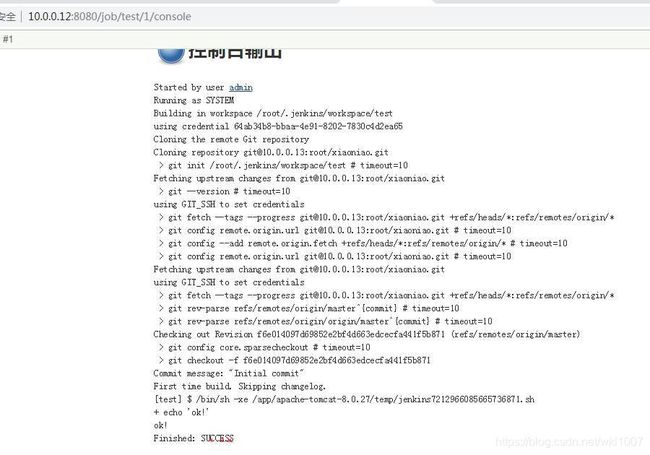

- 5:Jenkins创建自由风格的工程“yiliao”;拉取代码测试

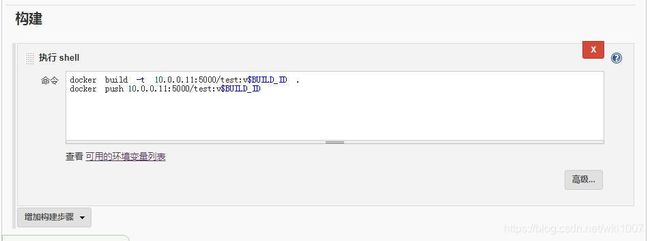

- 6:更改Jenkins配置中执行的shell命令;使其创建Docker镜像,并推送到私有仓库

- 7:Jenkins使用参数化构建,自动构建docker镜像并上传到私有仓库

- 7.3 手动部署实现“yiliao”的发布,升级,回滚;方面理解后面通过 Jenkins自动化部署应用

- 7.4 jenkins自动部署应用到k8s

- 8:部署Prometheus+Grafana

-

- Node节点安装cadvisor和node-exporter

- Master节点部署Prometheus+Grafana

- 回滚到指定版本

- Jenkins使用参数化构建回滚到指定版本;执行Shell语句为:(参数化构建回滚到指定版本)

- 构建工程“yiliao_undo”;不填写构建参数使其回滚到上一版本

- Web浏览器访问验证:标题为“ChironW-2医疗”

- 再次构建工程“yiliao_undo”;填写构建参数指定回滚到“REVISION=3”版本

- Web浏览器访问验证:标题为“ChironW医疗”

-

-

- Master节点部署Prometheus+Grafana

-

1:k8s集群的安装

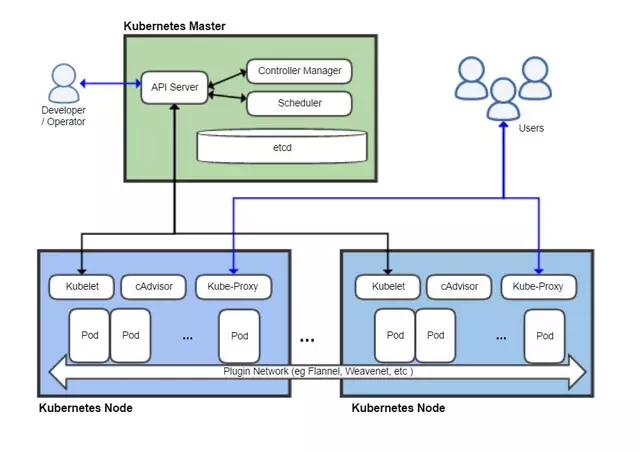

1.1 k8s的架构

除了核心组件,还有一些推荐的Add-ons:

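| Component | Description |
|---|---|
| kube-dns | Provides DNS for the whole cluster |
| Ingress Controller | Provides an external entry point for services |
| Heapster | Provides resource monitoring |
| Dashboard | Provides a GUI |
| Federation | Provides clusters that span availability zones |
| Fluentd-elasticsearch | Provides cluster log collection, storage and querying |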

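1.2: Set the IP address, hostname and hosts resolution on all nodes

# Set the hostname on each node (run the matching command on that node)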

[root@chiron ~]# hostnamectl set-hostname k8s-master

[root@chiron ~]# hostnamectl set-hostname k8s-node-1

[root@chiron ~]# hostnamectl set-hostname k8s-node-2

# Add the hosts entries on all nodes

[root@chiron ~]# cat >>/etc/hosts <<EOF

10.0.0.11 k8s-master

10.0.0.12 k8s-node-1

10.0.0.13 k8s-node-2

EOF
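If you run the heredoc above a second time, it appends duplicate entries. Below is a minimal idempotent alternative. It assumes a POSIX shell and a `grep` that supports `-F` and `-x`. `add_host` is a hypothetical helper name.

```shell
# add_host FILE IP NAME
# Append "IP NAME" to FILE only if that exact line is not already there,
# so running the setup step twice does not duplicate /etc/hosts entries.
add_host() {
  local file="$1" ip="$2" name="$3"
  # -q quiet, -x whole-line match, -F fixed string; a missing file is treated as "not found"
  if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, run `add_host /etc/hosts 10.0.0.11 k8s-master` on each node, then repeat it for k8s-node-1 and k8s-node-2.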

1.3: Install etcd on the master node

# Install etcd with yum

[root@k8s-master ~]# yum install etcd -y

# Back up the main etcd configuration file

[root@k8s-master ~]# cp /etc/etcd/etcd.conf{,.bak}

# Edit the main etcd configuration file

[root@k8s-master ~]# vim /etc/etcd/etcd.conf

Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379"

Or:

[root@k8s-master ~]# grep -Ev '#|^$' /etc/etcd/etcd.conf

[root@k8s-master ~]# sed -i 's#ETCD_LISTEN_CLIENT_URLS="http://localhost:2379"#ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"#g' /etc/etcd/etcd.conf

[root@k8s-master ~]# sed -i 's#ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379"#ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379"#g' /etc/etcd/etcd.conf

[root@k8s-master ~]# grep -Ev '#|^$' /etc/etcd/etcd.conf
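The `sed` one-liners above match only when the old value is exactly the packaged default. Below is a more tolerant sketch. It assumes GNU sed (`-i` without a backup suffix, `-E` for extended regexes). `set_conf` is a hypothetical helper that rewrites a `KEY=...` line whether or not the line is commented out, whatever its old value.

```shell
# set_conf FILE KEY VALUE
# Rewrite "KEY=...", "#KEY=..." or "# KEY=..." to KEY="VALUE" in FILE (GNU sed assumed).
# Assumes VALUE contains no '|' (the sed delimiter used here) and no '&'
# (which sed would replace with the matched text).
set_conf() {
  local file="$1" key="$2" value="$3"
  sed -i -E "s|^#? ?${key}=.*|${key}=\"${value}\"|" "$file"
}
```

For example: `set_conf /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS "http://10.0.0.11:2379"`. The same call works for `/etc/kubernetes/apiserver`, `config` and `kubelet`, whose settings use the same `KEY="..."` form.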

# Start etcd and enable it at boot

[root@k8s-master ~]# systemctl start etcd.service && systemctl enable etcd.service

# Create a key and read it back

[root@k8s-master ~]# etcdctl set testdir/testkey0 0 && etcdctl get testdir/testkey0

# Check that the etcd node is healthy

[root@k8s-master ~]# etcdctl -C http://10.0.0.11:2379 cluster-health

etcd supports clustering natively.

Exercise 1: deploy an etcd cluster with three nodes
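For Exercise 1, each member's `/etc/etcd/etcd.conf` needs peer settings in addition to the client URLs above. The sketch below prints those settings for one member. It assumes three members with the hypothetical names etcd1 to etcd3 on 10.0.0.11 to 10.0.0.13, etcd's default ports 2379 (client) and 2380 (peer), and a placeholder cluster token. `etcd_member_conf` is also a hypothetical name.

```shell
# etcd_member_conf NAME IP CLUSTER
# Print etcd.conf settings for one member of a statically configured cluster.
# CLUSTER is the full member list, e.g.
#   etcd1=http://10.0.0.11:2380,etcd2=http://10.0.0.12:2380,etcd3=http://10.0.0.13:2380
etcd_member_conf() {
  local name="$1" ip="$2" cluster="$3"
  cat <<EOF
ETCD_NAME="$name"
ETCD_LISTEN_PEER_URLS="http://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379"
ETCD_INITIAL_CLUSTER="$cluster"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

To use it:

1. Run the function on each host with that host's own name and IP.
2. Put the printed values into etcd.conf.
3. Start all three members, then check the result with `etcdctl cluster-health`.

The `ETCD_INITIAL_*` settings only take effect when the data directory is empty. A member that has already run as a single node must have its data directory cleared first.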

1.4: Install kubernetes on the master node

# Install K8S on the master with yum

[root@k8s-master ~]# yum install kubernetes-master.x86_64 -y

# Back up the apiserver configuration file

[root@k8s-master ~]# cp /etc/kubernetes/apiserver{,.bak}

# Edit the apiserver configuration file

[root@k8s-master ~]# vim /etc/kubernetes/apiserver

Line 8: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

Line 11: KUBE_API_PORT="--port=8080"

Line 14: KUBELET_PORT="--kubelet-port=10250"

Line 17: KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379"

Line 23: KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota" (that is, remove ServiceAccount from the default list)

Or:

[root@k8s-master ~]# grep -Ev '#|^$' /etc/kubernetes/apiserver

[root@k8s-master ~]# sed -i 's#KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"#KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"#g' /etc/kubernetes/apiserver

[root@k8s-master ~]# sed -i 's/^# KUBE_API_PORT="--port=8080"/KUBE_API_PORT="--port=8080"/g' /etc/kubernetes/apiserver

[root@k8s-master ~]# sed -i 's/^# KUBELET_PORT="--kubelet-port=10250"/KUBELET_PORT="--kubelet-port=10250"/g' /etc/kubernetes/apiserver

[root@k8s-master ~]# sed -i 's#KUBE_ETCD_SERVERS="--etcd-servers=http://127.0.0.1:2379"#KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379"#g' /etc/kubernetes/apiserver

[root@k8s-master ~]# sed -i 's#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"#g' /etc/kubernetes/apiserver

[root@k8s-master ~]# grep -Ev '#|^$' /etc/kubernetes/apiserver

# Back up the config file

[root@k8s-master ~]# cp /etc/kubernetes/config{,.bak}

# Edit the config file

[root@k8s-master ~]# vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

Or:

[root@k8s-master ~]# grep -Ev '#|^$' /etc/kubernetes/config

[root@k8s-master ~]# sed -i 's#KUBE_MASTER="--master=http://127.0.0.1:8080"#KUBE_MASTER="--master=http://10.0.0.11:8080"#g' /etc/kubernetes/config

[root@k8s-master ~]# grep -Ev '#|^$' /etc/kubernetes/config

# Enable the apiserver, controller-manager and scheduler services at boot, then restart them

[root@k8s-master ~]# systemctl enable kube-apiserver.service kube-controller-manager.service kube-scheduler.service

[root@k8s-master ~]# systemctl restart kube-apiserver.service kube-controller-manager.service kube-scheduler.service

[root@k8s-master ~]# systemctl status kube-apiserver.service kube-controller-manager.service kube-scheduler.service

Check that the services are installed correctly

# Check that the cluster components report a healthy status

[root@k8s-master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true"}

scheduler Healthy ok

controller-manager Healthy ok

1.5: Install kubernetes on all node nodes

# Install K8S with yum on every Node

[root@k8s-node ~]# yum install kubernetes-node.x86_64 -y

# Back up the config file

[root@k8s-node ~]# cp /etc/kubernetes/config{,.bak}

# Edit the config file

[root@k8s-node ~]# vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

Or:

[root@k8s-node ~]# grep -Ev '^$|#' /etc/kubernetes/config

[root@k8s-node ~]# sed -i 's#KUBE_MASTER="--master=http://127.0.0.1:8080"#KUBE_MASTER="--master=http://10.0.0.11:8080"#g' /etc/kubernetes/config

[root@k8s-node ~]# grep -Ev '^$|#' /etc/kubernetes/config

# Back up the kubelet configuration file

[root@k8s-node ~]# cp /etc/kubernetes/kubelet{,.bak}

# Edit the kubelet configuration file

[root@k8s-node ~]# vim /etc/kubernetes/kubelet

Line 5: KUBELET_ADDRESS="--address=0.0.0.0"

Line 8: KUBELET_PORT="--port=10250"

Line 11: KUBELET_HOSTNAME="--hostname-override=10.0.0.12"

# Note: set "hostname-override=10.0.0.x" to the IP or hostname of the node you are configuring

Line 14: KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

Or:

[root@k8s-node ~]# grep -Ev '^$|#' /etc/kubernetes/kubelet

[root@k8s-node ~]# sed -i 's#KUBELET_ADDRESS="--address=127.0.0.1"#KUBELET_ADDRESS="--address=0.0.0.0"#g' /etc/kubernetes/kubelet

[root@k8s-node ~]# sed -i 's/^# KUBELET_PORT="--port=10250"/KUBELET_PORT="--port=10250"/g' /etc/kubernetes/kubelet

[root@k8s-node ~]# sed -i 's#KUBELET_API_SERVER="--api-servers=http://127.0.0.1:8080"#KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"#g' /etc/kubernetes/kubelet

# Note: set "hostname-override=10.0.0.x" to the IP or hostname of the node you are configuring

[root@k8s-node-1 ~]# sed -i 's#KUBELET_HOSTNAME="--hostname-override=127.0.0.1"#KUBELET_HOSTNAME="--hostname-override=k8s-node-1"#g' /etc/kubernetes/kubelet

[root@k8s-node-2 ~]# sed -i 's#KUBELET_HOSTNAME="--hostname-override=127.0.0.1"#KUBELET_HOSTNAME="--hostname-override=k8s-node-2"#g' /etc/kubernetes/kubelet

[root@k8s-node ~]# grep -Ev '^$|#' /etc/kubernetes/kubelet

# Enable kubelet.service and kube-proxy.service at boot, then restart them

[root@k8s-node ~]# systemctl enable kubelet.service kube-proxy.service

[root@k8s-node ~]# systemctl restart kubelet.service kube-proxy.service

[root@k8s-node ~]# systemctl status kubelet.service kube-proxy.service

On the master, check that the Nodes are in a normal state

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

k8s-node-1 Ready 13s

k8s-node-2 Ready 16s
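You can have a small filter check these tables instead of reading them by eye. The sketch below fails when any row is not in the expected state. It assumes the column layout shown above: one header line, the name in column 1 and the status in column 2. `all_status` is a hypothetical helper.

```shell
# all_status WANT
# Read `kubectl get componentstatus` or `kubectl get nodes` output on stdin,
# print every row whose STATUS column differs from WANT,
# and exit non-zero if there was at least one such row.
all_status() {
  awk -v want="$1" '
    NR > 1 && $2 != want { print "unexpected: " $1 " " $2; bad = 1 }
    END { exit bad }'
}
```

Usage: `kubectl get componentstatus | all_status Healthy` on the master, and `kubectl get nodes | all_status Ready` once the nodes have joined. Any status other than the exact word you pass is reported, including composite values such as `Ready,SchedulingDisabled`.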

6: Configure the flannel network on all nodes

yum install flannel -y

sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

## Master node:

# Create a key in etcd that sets the flannel network range; otherwise the flannel network service fails to start

[root@k8s-master ~]# etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16" }'

# Install Docker on the master so that it can later serve as the private image registry "registry"

[root@k8s-master ~]# yum install docker -y

# Enable flanneld.service and docker at boot and restart them, then restart all K8S services on the master

[root@k8s-master ~]# systemctl enable flanneld.service docker && systemctl restart flanneld.service docker

[root@k8s-master ~]# systemctl restart kube-apiserver.service kube-controller-manager.service kube-scheduler.service

[root@k8s-master ~]# systemctl status kube-apiserver.service kube-controller-manager.service kube-scheduler.service flanneld.service docker

## All Node nodes:

# Start flanneld.service and enable it at boot

systemctl enable flanneld.service && systemctl restart flanneld.service

# Check the IP range of the "docker0" interface on the Node; by default it is 172.17.0.1/16

[root@k8s-node-1 ~]# ip addr |grep docker0

inet 172.17.0.1/16 scope global docker0

# Restart Docker and the other K8S services on the Node; Docker is restarted so that "docker0" picks up the new range

systemctl restart docker kubelet.service kube-proxy.service

systemctl status flanneld.service docker kubelet.service kube-proxy.service

# Check docker0 again; it is now in the range assigned by flannel

[root@k8s-node-1 ~]# ip addr |grep docker0

inet 172.18.37.1/24 scope global docker0
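You can also check in a script whether docker0 has moved into the flannel range. The sketch below tests IPv4 CIDR membership using only POSIX shell arithmetic. `ip_to_int` and `ip_in_cidr` are hypothetical helper names. 172.18.0.0/16 is the range written to etcd above.

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1          # split the dotted quad into $1..$4
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# ip_in_cidr IP NET/PREFIX: exit 0 if IP lies inside NET/PREFIX
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  if [ "$prefix" -eq 0 ]; then
    mask=0
  else
    # keep the top PREFIX bits of a 32-bit value
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  fi
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}
```

Usage on a node: `addr=$(ip -4 addr show docker0 | awk '/inet /{print $2}' | cut -d/ -f1)`, then `ip_in_cidr "$addr" 172.18.0.0/16 && echo "docker0 is on the flannel network"`.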

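## Verify that Pods on the two Nodes can ping each other

# Pull the "busybox" image on both Nodes and start a container on each (standing in for a Pod)

docker pull busybox

# Start a container on Node1 and check its IP address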

[root@k8s-node-1 ~]# docker run -it docker.io/busybox:latest

/ # ip addr |grep eth0

inet 172.18.37.2/24 scope global eth0

/ #

# From the container on Node2, ping the container on Node1: the ping fails, although pinging its gateway works

[root@k8s-node-2 ~]# docker run -it docker.io/busybox:latest

/ # ping 172.18.37.2

PING 172.18.37.2 (172.18.37.2): 56 data bytes

--- 172.18.37.2 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

/ # ping 172.18.37.1

PING 172.18.37.1 (172.18.37.1): 56 data bytes

64 bytes from 172.18.37.1: seq=0 ttl=61 time=1.700 ms

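# This happens because the Docker service adds iptables rules when it starts, including setting the default policy of the FORWARD chain to DROP. Edit "docker.service" so that the policy is set back to ACCEPT; do this on all nodes (including the master).

# Back up "docker.service"

cp /usr/lib/systemd/system/docker.service{,.bak}

# Edit "docker.service"

vim /usr/lib/systemd/system/docker.service

# Add this line under the [Service] section

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

Or:

# Line 12 must fall inside the [Service] section of your docker.service; check with "cat -n" first

sed -i '12a\ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT' /usr/lib/systemd/system/docker.service

# Explanation:

# Add a line before or after a given line number

# sed -i '4a\ddpdf' a.txt

# sed -i '4i\eepdf' a.txt

# 4a appends "ddpdf" after line 4 ("a" = append after)

# 4i inserts "eepdf" before line 4 ("i" = insert before)

# Do not prefix these with "N;": N reads the next line into the pattern space, so "N;4i" actually inserts before line 3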
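Matching on the `[Service]` header is less fragile than a hard-coded line number, which may differ between docker package versions. The sketch below runs against a scratch file whose unit content is made up for the demo. It assumes GNU sed, because the one-line `a text` / `i text` forms are GNU extensions.

```shell
# Scratch copy of a made-up unit file to experiment on
unit=$(mktemp)
printf '%s\n' '[Unit]' 'Description=demo' '[Service]' 'ExecStart=/usr/bin/dockerd' '[Install]' > "$unit"

# Append after the line that matches [Service], with no hard-coded line number
sed -i '/^\[Service\]/a ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT' "$unit"

# "2i" inserts before line 2; "2a" would insert after it
sed -i '2i # inserted before line 2' "$unit"

cat "$unit"
```

After both commands, the `ExecStartPost=` line sits directly below `[Service]`, as the fifth line of the file. On a real docker.service, guard against adding the line twice, for example `grep -q 'FORWARD ACCEPT' FILE || sed -i ...`.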

# After the change, reload the systemd unit files and restart docker

systemctl daemon-reload && systemctl restart docker

# Ping the container on Node1 from the container on Node2 again: the network now works

[root@k8s-node-2 ~]# docker attach 576a192fcc4b

/ # ping 172.18.37.2

PING 172.18.37.2 (172.18.37.2): 56 data bytes

64 bytes from 172.18.37.2: seq=0 ttl=60 time=1.549 ms

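7: Configure the master as an image registry

# The registry is accessed over plain HTTP rather than HTTPS, so add "insecure-registries" to "/etc/docker/daemon.json" to make Docker trust this private registry; otherwise you get the following error:

# The push refers to a repository [10.0.0.11:5000/centos:6.10]

# Get https://10.0.0.11:5000/v1/_ping: http: server gave HTTP response to HTTPS client

# On all nodes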

vi /etc/docker/daemon.json

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"insecure-registries": ["10.0.0.11:5000"]

}

Or:

[root@k8s-master ~]# cat >/etc/docker/daemon.json <<EOF
{
"registry-mirrors": ["https://registry.docker-cn.com"],
"insecure-registries": ["10.0.0.11:5000"]
}
EOF

systemctl restart docker

# On the master, run the private image registry "registry" (without authentication)

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry

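# On the master, run the private image registry "registry" with authentication. This is not recommended here: "kubectl create" would later fail to pull images unless they had already been pulled onto the Nodes;

# For an authenticated private registry, Harbor is a better choice, and it also has a web console.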

yum install httpd-tools -y

mkdir /opt/registry-var/auth/ -p

htpasswd -Bbn chiron 123456 >> /opt/registry-var/auth/htpasswd

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/registry-var/auth/:/auth/ -v /opt/myregistry:/var/lib/registry -e "REGISTRY_AUTH=htpasswd" -e "REGISTRY_AUTH_HTPASSWD_REALM=Registry Realm" -e REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd registry

# Note: you must log in both to push and to pull images

docker login 10.0.0.11:5000

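Username: chiron

Password: 123456

# To log out:

docker logout 10.0.0.11:5000

# Verify pushing and pulling images:

# Upload the local image tarball to /opt/images, then push the image to the private "registry"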

[root@k8s-node-1 ~]# mkdir /opt/images && cd /opt/images

[root@k8s-node-1 ~]# wget http://192.168.2.254:8888/docker_nginx1.13.tar.gz

[root@k8s-node-1 ~]# docker load -i docker_nginx1.13.tar.gz

[root@k8s-node-1 ~]# docker tag docker.io/nginx:1.13 10.0.0.11:5000/nginx:1.13

[root@k8s-node-1 ~]# docker images

[root@k8s-node-1 ~]# docker push 10.0.0.11:5000/nginx:1.13

# We pulled "busybox:latest" earlier; tag it too and push it to the private "registry" for later use

[root@k8s-node-1 ~]# docker tag docker.io/busybox:latest 10.0.0.11:5000/busybox:latest

[root@k8s-node-1 ~]# docker push 10.0.0.11:5000/busybox:latest

# On the registry host, check that the image exists

[root@k8s-master nginx]# ls /opt/myregistry/docker/registry/v2/repositories/nginx/_manifests/tags/

# Or check in a browser at:

http://10.0.0.11:5000/v2/nginx/tags/list

# Pull the image

[root@k8s-node-2 ~]# docker images

[root@k8s-node-2 ~]# docker pull 10.0.0.11:5000/nginx:1.13

[root@k8s-node-2 ~]# docker images

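2: What is k8s, and what can it do?

k8s is a management tool for Docker clusters

k8s is a container orchestration tool

2.1 Core features of k8s

Self-healing: restarts containers that fail; when a node becomes unavailable, replaces and reschedules the containers that were on it; kills containers that do not respond to user-defined health checks, and does not advertise a container to clients until it is ready to serve.

Autoscaling: monitors the CPU load of the containers. For example, if the average rises above 80%, more containers are added; if it drops below 10%, containers are removed.

Service discovery and load balancing: you do not need to change your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a single DNS name for a set of containers, and can load-balance across them.

Rolling updates and one-click rollback: Kubernetes rolls out changes to your application or its configuration progressively. It monitors application health so that it does not kill all instances at the same time. If something goes wrong, Kubernetes rolls back the change for you, and you can build on a growing ecosystem of deployment solutions.

Secret and configuration management: for example, the database account and password used by a web container.

2.2 History of k8s

2014: project started, as an orchestration tool for docker containers

July 2015: kubernetes 1.0 released; joined the CNCF as an incubating project

2016: kubernetes (version 1.2) beat its two rivals, docker swarm and mesos marathon

2017: versions 1.5 to 1.9

2018: k8s graduated from the CNCF

2019: 1.13, 1.14, 1.15, 1.16

cncf: Cloud Native Computing Foundation

kubernetes (k8s): Greek for "helmsman" or "pilot"; it leads the container orchestration field

Built on Google's 15 years of experience running containers on its Borg container management platform: kubernetes re-implements Borg's ideas in golang

2.3 Ways to install k8s

yum install: version 1.5; the easiest to get working and the best for learning

Build from source: the hardest; can install the latest version

Binary install: many steps; can install the latest version; can be automated with shell, ansible or saltstack

kubeadm: the easiest install, but needs network access; can install the latest version

minikube: suitable for developers who want to try k8s; needs network access

2.4 Use cases for k8s

k8s is best suited to running microservice projects!

Benefits of microservices:

Higher concurrency, better availability, shorter release cycles

Drawbacks of microservices:

Harder to manage; deploying code requires automation such as Ansible or saltstack;

The remedy: docker, with K8S as the docker management platform, plus autoscaling

Extra:

MVC: a software architecture in which different features live in different directories under the same domain

Microservices: a software architecture in which different features are deployed as independent sites under different domains

3: Common k8s resources

3.1 Creating a pod resource

The pod is the smallest resource unit.

Any k8s resource can be defined in a yml manifest file

A pod resource consists of at least two containers: the pod infrastructure container and one or more application containers

The yaml file declares at least one container to start, and creates a Pod resource

kubelet called docker to start two containers:

nginx:1.13: the application container, which serves requests

Pod (infrastructure) container: enables K8S's advanced features, such as the network shared by all containers in the Pod

Main parts of a k8s yaml file:

apiVersion: v1    API version

kind: Pod    resource type

metadata:    attributes (name, labels, ...)

spec:    detailed specification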

k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80

On the master, create the directory that will hold the yaml files for the various K8S resources used below

[root@k8s-master ~]# mkdir -p /root/k8s_yaml/pod && cd /root/k8s_yaml/pod/

[root@k8s-master pod]# vim k8s_pod.yaml

[root@k8s-master pod]# kubectl create -f k8s_pod.yaml

[root@k8s-master pod]# kubectl get pod

# Note: the status shown here is not "Running"

[root@k8s-master pod]# kubectl describe pod nginx

# Note: the bottom of the Pod details shows the following error:

# Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

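# The fix, editing "/etc/kubernetes/kubelet" on the Node nodes, is described below; the error is reproduced here on purpose

# After fixing the problem above, delete the old pod "nginx" and create it again; it now runs normally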

[root@k8s-master pod]# kubectl delete pods nginx

[root@k8s-master pod]# kubectl create -f k8s_pod.yaml

[root@k8s-master pod]# kubectl get pod

# Show more information about the Pod

[root@k8s-master pod]# kubectl get pod -o wide

[root@k8s-master pod]# kubectl describe pod nginx

# With the pod IP, use curl to check that the nginx welcome page is served

[root@k8s-master pod]# curl 172.18.95.2

# Find out why two containers were started, and how they differ and relate

[root@k8s-node-2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fd8681f40db0 10.0.0.11:5000/nginx:1.13 "nginx -g 'daemon ..." 5 minutes ago Up 5 minutes k8s_nginx.91390390_nginx_default_c69aed1e-1012-11ea-bb8d-000c29c6d194_48670ad4

b9aff18feced 10.0.0.11:5000/rhel7/pod-infrastructure:latest "/pod" 5 minutes ago Up 5 minutes k8s_POD.e5ea03c1_nginx_default_c69aed1e-1012-11ea-bb8d-000c29c6d194_20a42fd7

# Inspect the application container "nginx:1.13"

[root@k8s-node-2 ~]# docker inspect fd8681f40db0 |tail -20

"SandboxID": "",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": null,

"SandboxKey": "",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "",

"Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"MacAddress": "",

"Networks": {}

}

}

]

# Inspect the infrastructure container

[root@k8s-node-2 ~]# docker inspect b9aff18feced |tail -20

"MacAddress": "02:42:ac:12:5f:02",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "d8db8b8692bd9f06bf1e041c869191cd304d9337bcfad041b7354650359203ba",

"EndpointID": "568288f7bb2bbba16ce0d4ca903b7f6174d74a620380bcf2b46146f1210b0fe9",

"Gateway": "172.18.95.1",

"IPAddress": "172.18.95.2",

"IPPrefixLen": 24,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:12:5f:02"

}

}

}

}

]

# This shows that the nginx container uses the network of the infrastructure container b9aff18feced

[root@k8s-node-2 ~]# docker inspect fd8681f40db0 |grep -i network

"NetworkMode": "container:b9aff18feced757e44b5b4d5e58686523777d4779349c63da7d9cd654731137a",

"NetworkSettings": {

"Networks": {}

On the nodes:

# Upload the local image tarball to /opt/images, then push it to the private "registry"

# Load the uploaded infrastructure (pod-infrastructure) image

[root@k8s-node-1 images]# wget http://192.168.2.254:8888/pod-infrastructure-latest.tar.gz

[root@k8s-node-1 images]# docker load -i pod-infrastructure-latest.tar.gz

[root@k8s-node-1 images]# docker tag docker.io/tianyebj/pod-infrastructure:latest 10.0.0.11:5000/rhel7/pod-infrastructure:latest

[root@k8s-node-1 images]# docker push 10.0.0.11:5000/rhel7/pod-infrastructure:latest

# On all Nodes, edit the config so that starting a Pod pulls the infrastructure image from the local private "registry"

vim /etc/kubernetes/kubelet

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/rhel7/pod-infrastructure:latest"

Or:

grep -Ev '^$|#' /etc/kubernetes/kubelet

sed -i 's#KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"#KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/rhel7/pod-infrastructure:latest"#g' /etc/kubernetes/kubelet

grep -Ev '^$|#' /etc/kubernetes/kubelet

# Restart kubelet to apply the change

systemctl restart kubelet.service

Pod config file 2: k8s_pod2.yaml. It shows one Pod infrastructure container bringing up two application containers that share its network address

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
  - name: busybox
    image: 10.0.0.11:5000/busybox:latest
    command: ["sleep","10000"]

# On the master, create the example Pod (one infrastructure container, two application containers)

[root@k8s-master pod]# kubectl create -f k8s_pod2.yaml

[root@k8s-master pod]# kubectl get pod -o wide

[root@k8s-master pod]# kubectl describe pod test

# On the Node, list the containers to verify

[root@k8s-node-1 images]# docker ps -a

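The pod is the smallest resource unit in k8s

3.2 The ReplicationController resource

rc: ensures that the specified number of pods is always running; the rc associates pods through a label selector

Common operations on k8s resources:

# Create a resource from a yaml file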

kubectl create -f xxx.yaml

# Show resource status: -o wide shows more columns, --show-labels shows labels

kubectl get (pod / rc / nodes) -o wide --show-labels

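# Show the details of a resource, from its creation to any errors

kubectl describe pod nginx

# Delete a resource

kubectl delete pod nginx   # or: kubectl delete -f xxx.yaml

# Edit the configuration of a resource

kubectl edit pod nginx

Create an rc: nginx-rc.yaml

# Create a directory for the RC yaml files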

[root@k8s-master pod]# mkdir /root/k8s_yaml/rc && cd /root/k8s_yaml/rc

[root@k8s-master rc]# vim nginx-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5  # 5 replicas
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80

Create the RC and check the replica count

[root@k8s-master rc]# kubectl create -f nginx-rc.yaml

replicationcontroller "nginx" created

[root@k8s-master rc]# kubectl get rc

NAME DESIRED CURRENT READY AGE

nginx 5 5 5 43s

[root@k8s-master rc]# kubectl get pod

Load and push the "10.0.0.11:5000/nginx:1.15" image for the upgrade and rollback demo below

# Upload the local image tarball to /opt/images, then push it to the private "registry"

# Load the uploaded image

rz docker_nginx1.15.tar.gz

[root@k8s-node-1 images]# docker load -i docker_nginx1.15.tar.gz

[root@k8s-node-1 images]# docker tag docker.io/nginx:latest 10.0.0.11:5000/nginx:1.15

[root@k8s-node-1 images]# docker push 10.0.0.11:5000/nginx:1.15

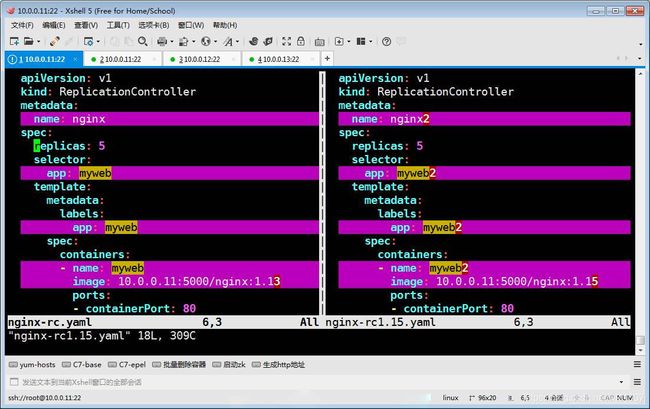

Rolling update of an rc

Create a new nginx-rc1.15.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx2
spec:
  replicas: 5  # 5 replicas
  selector:
    app: myweb2
  template:
    metadata:
      labels:
        app: myweb2
    spec:
      containers:
      - name: myweb2
        image: 10.0.0.11:5000/nginx:1.15
        ports:
        - containerPort: 80

Create the RC (nginx 1.13)

[root@k8s-master rc]# kubectl create -f nginx-rc.yaml

[root@k8s-master rc]# kubectl get pod

[root@k8s-master rc]# kubectl get rc

Upgrade

# Rolling upgrade

[root@k8s-master rc]# kubectl rolling-update nginx -f nginx-rc1.15.yaml --update-period=10s

# Watch the Pods change during the upgrade

[root@k8s-master rc]# kubectl get pod -w

Rollback

Rolling rollback

[root@k8s-master rc]# kubectl rolling-update nginx2 -f nginx-rc.yaml --update-period=1s

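Parameters and how to verify the version

Parameters:

--update-period=10s sets the interval between Pod updates; if omitted, the default is one minute

Verification:

Method 1: request an Nginx container with curl -I IP:Port and check the version number in the response

Method 2: on a Node, check which image the containers were started from with docker ps -a

Method 3: check the labels and the RC name with kubectl get rc -o wide or kubectl get pod -o wide --show-labels

Delete one of the Pods to verify that the RC always keeps the specified number of Pods running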

[root@k8s-master rc]# kubectl get pod

[root@k8s-master rc]# kubectl delete pod nginx-1r7pg

pod "nginx-1r7pg" deleted

[root@k8s-master rc]# kubectl get pod

Note:

When a Pod is deleted, the RC immediately starts a new one to replace it; the deleted Pod itself does not come back, as the Pod names and ages show.

Delete a Node to verify that the RC still keeps the specified number of Pods running

[root@k8s-master rc]# kubectl get pod -o wide

[root@k8s-master rc]# kubectl get node

[root@k8s-master rc]# kubectl delete node k8s-node-2

[root@k8s-master rc]# kubectl get pod -o wide

[root@k8s-node-2 ~]# systemctl restart kubelet.service

[root@k8s-master rc]# kubectl get node

[root@k8s-master rc]# kubectl get pod -o wide

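Note:

When the Node2 node goes down, the RC starts the Pods that were on Node2 on the healthy nodes instead; however, once Node2 recovers, the RC does not move those Pods back to Node2.

Change the label of the Pod "nginx" created earlier to "myweb", and check whether the RC deletes the Pod with the shortest running time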

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx 1/1 Running 0 2m 172.18.95.2 k8s-node-2 app=web

nginx-ctvmk 1/1 Running 0 59m 172.18.37.6 k8s-node-1 app=myweb

nginx-gpg4g 1/1 Running 0 1h 172.18.37.4 k8s-node-1 app=myweb

nginx-hr791 1/1 Running 0 59m 172.18.37.7 k8s-node-1 app=myweb

nginx-m5pwh 1/1 Running 0 2s 172.18.95.4 k8s-node-2 app=myweb

nginx-q1rf7 1/1 Running 0 59m 172.18.37.3 k8s-node-1 app=myweb

[root@k8s-master rc]# kubectl edit pod nginx

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: 2019-11-26T09:49:10Z
  labels:
    app: myweb  # originally "web"; change it to "myweb", then save
  name: nginx
  namespace: default

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx 1/1 Running 0 2m 172.18.95.2 k8s-node-2 app=myweb

nginx-ctvmk 1/1 Running 0 1h 172.18.37.6 k8s-node-1 app=myweb

nginx-gpg4g 1/1 Running 0 1h 172.18.37.4 k8s-node-1 app=myweb

nginx-hr791 1/1 Running 0 1h 172.18.37.7 k8s-node-1 app=myweb

nginx-q1rf7 1/1 Running 0 1h 172.18.37.3 k8s-node-1 app=myweb

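# Here the Pod with the shortest running time, "nginx-m5pwh", was deleted;

# This shows that the rc associates pods through its label selector: if another Pod with matching labels joins, the RC by default deletes the Pod with the shortest running time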
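You can model the behaviour observed above as a single reconcile step:

1. Count the Pods that match the selector.
2. Create the shortfall, or delete the surplus starting with the youngest Pod.

This is a simplified sketch for illustration only. The real controller also weighs Pod readiness and scheduling state before age. The input format (one `name label age-in-seconds` line per Pod) and the `reconcile` name are assumptions.

```shell
# reconcile DESIRED SELECTOR < pods
# Input lines: "<pod-name> <label-value> <age-in-seconds>" (hypothetical format).
# Prints "create N" or one "delete <pod>" line per surplus Pod, youngest first.
reconcile() {
  awk -v want="$1" -v sel="$2" '
    $2 == sel { n++; name[n] = $1; age[n] = $3 }
    END {
      if (n + 0 < want + 0) { printf "create %d\n", want - n; exit }
      # surplus: repeatedly pick the youngest Pod not yet chosen
      for (k = 0; k < n - want; k++) {
        y = 0
        for (i = 1; i <= n; i++)
          if (!(i in gone) && (y == 0 || age[i] + 0 < age[y] + 0)) y = i
        gone[y] = 1
        print "delete " name[y]
      }
    }'
}
```

The six `app=myweb` Pods listed above contain the relabelled `nginx` (about 120 s old) and `nginx-m5pwh` (2 s old). Feeding them with a desired count of 5 yields `delete nginx-m5pwh`, which matches what the RC did.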

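3.3 The service resource

A service exposes pod ports and load-balances traffic across pods

Create a service: k8s_svc.yaml

# List the existing Pods; only the ones created by the RC are left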

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx-2wrjn 1/1 Running 0 3m 172.18.95.3 k8s-node-2 app=myweb

nginx-9hfzl 1/1 Running 0 3m 172.18.37.2 k8s-node-1 app=myweb

nginx-bshlt 1/1 Running 0 3m 172.18.37.3 k8s-node-1 app=myweb

nginx-ltktb 1/1 Running 0 3m 172.18.95.2 k8s-node-2 app=myweb

nginx-zngfd 1/1 Running 0 3m 172.18.95.4 k8s-node-2 app=myweb

# Create a directory for the Service yaml files

[root@k8s-master rc]# mkdir /root/k8s_yaml/svc && cd /root/k8s_yaml/svc

[root@k8s-master svc]# vim k8s_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort      # or ClusterIP
  ports:
  - port: 80          # ClusterIP port
    nodePort: 30000   # port opened on every node
    targetPort: 80    # pod port
  selector:
    app: myweb

Create the service, check that the SVC and RC labels match, and verify access from an external browser

[root@k8s-master svc]# kubectl create -f k8s_svc.yaml

service "myweb" created

[root@k8s-master svc]# kubectl get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

myweb 10.254.218.48 <nodes> 80:30000/TCP 1m app=myweb

[root@k8s-master svc]# kubectl get rc -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

nginx 5 5 5 19m myweb 10.0.0.11:5000/nginx:1.13 app=myweb

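# Browse to http://node_IP:30000; the Nginx welcome page is shown

# How to verify that the SVC load-balances is shown below.

# Scale the number of Pods in a Deployment, ReplicaSet, Replication Controller or Job up or down.

# Check the number of Pods of RC nginx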

[root@k8s-master svc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx-2wrjn 1/1 Running 0 3m 172.18.95.3 k8s-node-2 app=myweb

nginx-9hfzl 1/1 Running 0 3m 172.18.37.2 k8s-node-1 app=myweb

nginx-bshlt 1/1 Running 0 3m 172.18.37.3 k8s-node-1 app=myweb

nginx-ltktb 1/1 Running 0 3m 172.18.95.2 k8s-node-2 app=myweb

nginx-zngfd 1/1 Running 0 3m 172.18.95.4 k8s-node-2 app=myweb

# Change the number of Pods of rc nginx

[root@k8s-master svc]# kubectl scale rc nginx --replicas=2

replicationcontroller "nginx" scaled

Or:

[root@k8s-master svc]# kubectl edit rc nginx

apiVersion: v1
kind: ReplicationController
metadata:
  creationTimestamp: 2019-11-23T06:51:57Z
  generation: 7
  labels:
    app: myweb
  name: nginx
  namespace: default
  resourceVersion: "84460"
  selfLink: /api/v1/namespaces/default/replicationcontrollers/nginx
  uid: be236c49-0dbd-11ea-9d96-000c29c6d194
spec:
  replicas: 2  # originally "5"; change it to "2", then watch the number of Pods the RC keeps

  selector:
    app: myweb

# Check the number of Pods of RC nginx again

[root@k8s-master svc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx-9hfzl 1/1 Running 0 28m 172.18.37.2 k8s-node-1 app=myweb

nginx-bshlt 1/1 Running 0 28m 172.18.37.3 k8s-node-1 app=myweb

# Edit the labels and other settings of the existing svc myweb

[root@k8s-master svc]# kubectl edit svc myweb

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: 2019-11-23T07:09:09Z
  name: myweb
  namespace: default
  resourceVersion: "84421"
  selfLink: /api/v1/namespaces/default/services/myweb
  uid: 24d4ec0a-0dc0-11ea-9d96-000c29c6d194
spec:
  clusterIP: 10.254.198.29
  ports:
  - nodePort: 30000
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: myweb
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

"/tmp/kubectl-edit-fplln.yaml" 26L, 656C written

service "myweb" edited

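# Change the index.html of the two remaining Pods so that load balancing can be verified below

# In iptables proxy mode (the default kube-proxy mode since 1.2), a Service picks a backend Pod at random with equal probability; the older userspace proxy mode uses round-robin (RR)

# Round-Robin (RR): a scheduling policy in which users take turns using a shared resource, without regard to momentary conditions.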

[root@k8s-master svc]# kubectl exec -it nginx-9hfzl /bin/bash

root@nginx-9hfzl:/# echo Chiron-web01 > /usr/share/nginx/html/index.html

root@nginx-9hfzl:/# exit

exit

[root@k8s-master svc]# kubectl exec -it nginx-bshlt /bin/bash

root@nginx-bshlt:/# echo Chiron-web02 > /usr/share/nginx/html/index.html

root@nginx-bshlt:/# exit

exit

# Verify

[root@k8s-master svc]# curl http://10.0.0.12:30000/

Chiron-web02

[root@k8s-master svc]# curl http://10.0.0.12:30000/

Chiron-web01
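The alternating responses above come from kube-proxy. In iptables mode, it spreads new connections across endpoints with a chain of rules that use the iptables `statistic` match in random mode. With N endpoints, the first rule matches with probability 1/N, the second with 1/(N-1), and so on; the last rule always matches. The arithmetic below shows why this cascade gives each endpoint an equal 1/N share. It is awk inside a shell function, and `cascade_probs` is a hypothetical name.

```shell
# cascade_probs N
# For N endpoints, print each rule's match probability and the resulting
# overall share of traffic that endpoint receives.
cascade_probs() {
  awk -v n="$1" 'BEGIN {
    remaining = 1.0                 # probability that no earlier rule has matched
    for (i = 1; i <= n; i++) {
      p = 1.0 / (n - i + 1)         # rule i: 1/N, 1/(N-1), ..., 1
      share = remaining * p         # reach rule i AND match it
      printf "endpoint %d: rule probability %.4f, effective share %.4f\n", i, p, share
      remaining -= share
    }
  }'
}
```

With the two endpoints in this example, the first rule uses probability 0.5. That is why consecutive `curl` calls are equally likely to hit either Pod but are not guaranteed to alternate.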

# Show the details of a SVC (service), such as its VIP, Pods and ports

[root@k8s-master svc]# kubectl describe svc myweb

Name: myweb

Namespace: default

Labels: <none>

Selector: app=myweb

Type: NodePort

IP: 10.254.218.48

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 172.18.37.2:80,172.18.37.3:80

Session Affinity: None

No events.

Extend the nodePort range. The default range is "30000-32767"; in a large deployment where there are not enough ports to allocate, you can change this value

[root@k8s-master svc]# vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000"

Or:

[root@k8s-master svc]# grep -Ev '^$|#' /etc/kubernetes/apiserver

[root@k8s-master svc]# sed -i 's/KUBE_API_ARGS=""/KUBE_API_ARGS="--service-node-port-range=3000-50000"/g' /etc/kubernetes/apiserver

[root@k8s-master svc]# grep -Ev '^$|#' /etc/kubernetes/apiserver

[root@k8s-master svc]# systemctl restart kube-apiserver.service
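Before you widen the range, it can help to check which ports a proposed range would admit. The sketch below does that check. `node_port_ok` is a hypothetical helper, and its default mirrors the 30000-32767 range mentioned above.

```shell
# node_port_ok PORT [RANGE]
# Exit 0 if PORT lies inside RANGE ("min-max"); RANGE defaults to 30000-32767.
node_port_ok() {
  local port="$1" range="${2:-30000-32767}"
  local min="${range%-*}" max="${range#*-}"
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}
```

For example, `node_port_ok 3306 3000-50000` succeeds. A range as wide as 3000-50000 also covers ports that host services commonly use (3306 for MySQL, for example), so choose nodePorts that nothing on the nodes already listens on.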

To create a service from the command line and expose a random port:

kubectl expose rc nginx --type=NodePort --port=80

kubectl get service

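By default a service uses iptables for load balancing; Kubernetes 1.8 added an IPVS mode (built on LVS, layer-4 load balancing at the transport layer for tcp and udp), which became generally available in 1.11

3.4 The deployment resource

A rolling update of an rc interrupts service access, so k8s introduced the deployment resource;

Why an RC rolling update interrupts access:

An RC rolling update requires the new RC to use a different name and different labels from the old one;

so after the rolling update, the labels of the new RC's Pods no longer match the selector of the existing SVC;

the SVC can then no longer associate with the Pods, and user access is interrupted.

Advantages of the Deployment resource over RC:

Upgrades do not interrupt the service;

Upgrades do not depend on a yaml file;

You can upgrade by editing the configuration directly;

RC label selectors support only equality-based matching (key=value);

Deployment label selectors also support set-based matching (matchExpressions with In, NotIn, Exists)

Create a Deployment: k8s_deploy.yaml

# Create a directory for the Deployment yaml files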

[root@k8s-master svc]# mkdir /root/k8s_yaml/deploy && cd /root/k8s_yaml/deploy

[root@k8s-master deploy]# vim k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 100m
          requests:
            cpu: 100m

# Create the Deploy resource

[root@k8s-master deploy]# kubectl create -f k8s_deploy.yaml

deployment "nginx-deployment" created

Associate the Deployment with a Service for load balancing

# List all resources

[root@k8s-master deploy]# kubectl get all

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 6m

NAME DESIRED CURRENT READY AGE

rc/nginx 5 5 5 41m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d

svc/myweb 10.254.218.48 <nodes> 80:30000/TCP 3h

NAME DESIRED CURRENT READY AGE

rs/nginx-deployment-2807576163 3 3 3 6m

NAME READY STATUS RESTARTS AGE

po/nginx-3xb2r 1/1 Running 0 41m

po/nginx-c8g2g 1/1 Running 0 41m

po/nginx-deployment-2807576163-2np1q 1/1 Running 0 6m

po/nginx-deployment-2807576163-5r55r 1/1 Running 0 6m

po/nginx-deployment-2807576163-nsntt 1/1 Running 0 6m

po/nginx-tq9j1 1/1 Running 0 41m

po/nginx-w3d06 1/1 Running 0 41m

po/nginx-xln8n 1/1 Running 0 41m

# Create a service for the Deployment nginx-deployment, exposing a random port

[root@k8s-master deploy]# kubectl expose deployment nginx-deployment --port=80 --target-port=80 --type=NodePort

service "nginx-deployment" exposed

# List all resources again

[root@k8s-master deploy]# kubectl get all

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 15m

NAME DESIRED CURRENT READY AGE

rc/nginx 5 5 5 50m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d

svc/myweb 10.254.218.48 <nodes> 80:30000/TCP 4h

svc/nginx-deployment 10.254.133.177 <nodes> 80:31469/TCP 3s

NAME DESIRED CURRENT READY AGE

rs/nginx-deployment-2807576163 3 3 3 15m

NAME READY STATUS RESTARTS AGE

po/nginx-3xb2r 1/1 Running 0 50m

po/nginx-c8g2g 1/1 Running 0 50m

po/nginx-deployment-2807576163-2np1q 1/1 Running 0 15m

po/nginx-deployment-2807576163-5r55r 1/1 Running 0 15m

po/nginx-deployment-2807576163-nsntt 1/1 Running 0 15m

po/nginx-tq9j1 1/1 Running 0 50m

po/nginx-w3d06 1/1 Running 0 50m

po/nginx-xln8n 1/1 Running 0 50m

# Access works; to check load balancing, use the method shown in the SVC section

[root@k8s-master deploy]# curl -I 10.0.0.12:31469

HTTP/1.1 200 OK

Server: nginx/1.13.12

[root@k8s-master deploy]# curl -I 10.0.0.13:31469

HTTP/1.1 200 OK

Server: nginx/1.13.12

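Note:

Unlike an RC, a Deployment does not need a new Yaml file for an upgrade or rollback.

Editing the live object is enough: the change immediately triggers a rolling upgrade.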

[root@k8s-master deploy]# kubectl edit deployment nginx-deployment

spec:
  containers:
  - image: 10.0.0.11:5000/nginx:1.15    #change the original "1.13" image to "1.15"
    imagePullPolicy: IfNotPresent
    name: nginx

# Watch how the resources change

[root@k8s-master deploy]# kubectl get all

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 30m

NAME DESIRED CURRENT READY AGE

rc/nginx 5 5 5 1h

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d

svc/myweb 10.254.218.48 <nodes> 80:30000/TCP 4h

svc/nginx-deployment 10.254.133.177 <nodes> 80:31469/TCP 15m

NAME DESIRED CURRENT READY AGE

rs/nginx-deployment-2807576163 0 0 0 30m

rs/nginx-deployment-3014407781 3 3 3 5m

NAME READY STATUS RESTARTS AGE

po/nginx-3xb2r 1/1 Running 0 1h

po/nginx-c8g2g 1/1 Running 0 1h

po/nginx-deployment-3014407781-k5crz 1/1 Running 0 5m

po/nginx-deployment-3014407781-mh9hq 1/1 Running 0 5m

po/nginx-deployment-3014407781-wqdc7 1/1 Running 0 5m

po/nginx-tq9j1 1/1 Running 0 1h

po/nginx-w3d06 1/1 Running 0 1h

po/nginx-xln8n 1/1 Running 0 1h

# Verify that the new version is served and that the service was not interrupted

[root@k8s-master deploy]# curl -I 10.0.0.12:31469

HTTP/1.1 200 OK

Server: nginx/1.15.5

[root@k8s-master deploy]# curl -I 10.0.0.13:31469

HTTP/1.1 200 OK

Server: nginx/1.15.5

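# The service is not interrupted because each Pod started by the Deployment carries two labels. During an upgrade or rollback only pod-template-hash changes, while app=nginx never does. The Service selector contains only app=nginx, so it keeps matching Pods from both the old and the new ReplicaSet. This is a subset match on labels, not fuzzy matching.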

[root@k8s-master deploy]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-3xb2r 1/1 Running 0 1h app=myweb

nginx-c8g2g 1/1 Running 0 1h app=myweb

nginx-deployment-3014407781-k5crz 1/1 Running 0 18m app=nginx,pod-template-hash=3014407781

nginx-deployment-3014407781-mh9hq 1/1 Running 0 18m app=nginx,pod-template-hash=3014407781

nginx-deployment-3014407781-wqdc7 1/1 Running 0 18m app=nginx,pod-template-hash=3014407781

nginx-tq9j1 1/1 Running 0 1h app=myweb

nginx-w3d06 1/1 Running 0 1h app=myweb

nginx-xln8n 1/1 Running 0 1h app=myweb
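On the Service side, this is plain equality-based label selection. A Pod is selected when every key=value pair of the Service selector appears among the Pod's labels, and extra labels such as pod-template-hash are ignored. Below is a minimal POSIX sh sketch of that rule. `selector_matches` is a hypothetical helper that takes labels in the comma-separated form printed by `--show-labels`:

```shell
#!/bin/sh
# selector_matches SELECTOR LABELS
# Prints "yes" if every key=value pair in SELECTOR also appears in LABELS
# (both comma-separated, as printed by `kubectl get pod --show-labels`),
# otherwise "no". Extra labels on the Pod do not affect the result.
selector_matches() (
  set -f          # no globbing while splitting the selector
  IFS=,
  for pair in $1; do
    case ",$2," in
      *",$pair,"*) ;;                 # pair present: keep checking
      *) echo no; exit 0 ;;           # any missing pair means no match
    esac
  done
  echo yes
)

# The Service selects app=nginx, so Pods from the old and the new
# ReplicaSet both match during a rolling update (prints "yes").
selector_matches 'app=nginx' 'app=nginx,pod-template-hash=3014407781'
```

If a selector also pinned pod-template-hash, only one ReplicaSet's Pods would match. That is why the stable label is the one the Service relies on.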

Summary of Deployment upgrade and rollback commands:

Create a Deployment from the command line:

kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

Upgrade the image from the command line:

kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

List all revisions of a Deployment:

kubectl rollout history deployment nginx

Roll a Deployment back to the previous revision:

kubectl rollout undo deployment nginx

Roll a Deployment back to a specific revision:

kubectl rollout undo deployment nginx --to-revision=2

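Creating a Deployment from the command line:

# The history of the earlier upgrade of nginx-deployment from "1.13" to "1.15" shows no change-cause (CHANGE-CAUSE is <none>), because nothing was recorded. The steps below demonstrate a recorded history.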

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

1 <none>

2 <none>

# Create a new Deployment named nginx and record the command with each revision

[root@k8s-master deploy]# kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

deployment "nginx" created

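# Parameters:

--image: the image (and version) to run

--replicas: the number of replicas

--record: record the current kubectl command in the resource's annotation (shown as CHANGE-CAUSE), which makes it easy to see what changed in each revision.

If set to false, the command is not recorded.

If set to true, the command is recorded.

If not set, the annotation is updated only if it already exists.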

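# Check the Deployment's revision history again. Unlike above, the full command is now recorded, which also makes it easier to roll back to a specific revision later.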

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

# Drawback: with --record, upgrading by editing the object ("kubectl edit deployment nginx") records only the edit command, as shown below, so the history no longer tells you what was changed

[root@k8s-master deploy]# kubectl edit deployment nginx

[root@k8s-master deploy]# kubectl edit deployment nginx

# After changing the image to "1.15" and then "1.17", the history records only the command, not what was changed

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl edit deployment nginx

3 kubectl edit deployment nginx

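# Note:

kubectl run: in K8S (in the kubectl version used here), starts a Deployment by default

docker run: in Docker, starts a single container (plain Docker has no Pods)

Upgrading from the command line, with a history that shows what each revision did

# On a node, push the "nginx:1.17" image to the registry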

[root@k8s-node-1 images]# wget http://192.168.2.254:8888/docker_nginx.tar.gz

[root@k8s-node-1 images]# docker load -i docker_nginx.tar.gz

[root@k8s-node-1 images]# docker tag nginx:latest 10.0.0.11:5000/nginx:1.17

[root@k8s-node-1 images]# docker push 10.0.0.11:5000/nginx:1.17

# First delete the Deployment nginx, then create it again

[root@k8s-master deploy]# kubectl delete deployment nginx

deployment "nginx" deleted

[root@k8s-master deploy]# kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

deployment "nginx" created

# Upgrade to "1.15"

[root@k8s-master deploy]# kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

deployment "nginx" image updated

# Upgrade to "1.17"

[root@k8s-master deploy]# kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.17

deployment "nginx" image updated

# List the Pods, pick one Pod's IP address, and verify that it serves "1.17"

[root@k8s-master deploy]# kubectl get pod -o wide

[root@k8s-master deploy]# curl -I 172.18.37.4

HTTP/1.1 200 OK

Server: nginx/1.17.5

# Listing the ReplicaSets also shows every revision: each Deployment upgrade creates a new RS, and the old ones are kept (scaled to 0) rather than deleted

[root@k8s-master deploy]# kubectl get rs -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

nginx-1147444844 3 3 3 2m nginx 10.0.0.11:5000/nginx:1.17 pod-template-hash=1147444844,run=nginx

nginx-847814248 0 0 0 2m nginx 10.0.0.11:5000/nginx:1.13 pod-template-hash=847814248,run=nginx

nginx-997629546 0 0 0 2m nginx 10.0.0.11:5000/nginx:1.15 pod-template-hash=997629546,run=nginx

nginx-deployment-2807576163 0 0 0 1h nginx 10.0.0.11:5000/nginx:1.13 app=nginx,pod-template-hash=2807576163

nginx-deployment-3014407781 3 3 3 1h nginx 10.0.0.11:5000/nginx:1.15 app=nginx,pod-template-hash=3014407781

# View the history, now including the upgrade details

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

3 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.17

List all revisions of the Deployment:

kubectl rollout history deployment nginx

Roll the Deployment back to the previous revision. How does the history change?

[root@k8s-master deploy]# kubectl rollout undo deployment nginx

deployment "nginx" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

3 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.17

4 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

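# Conclusion:

Without a revision number, the rollback goes to the previous revision, "1.15".

Revision "2" (1.15) becomes revision "4". In other words, revision "4" reuses the pod template (and recorded command) of revision "2".

The new revision number always continues from the current highest one.

Roll the Deployment back to a specific revision. How does the history change?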

[root@k8s-master deploy]# kubectl rollout undo deployment nginx --to-revision=1

deployment "nginx" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

3 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.17

4 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

5 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

# Parameter:

--to-revision: the revision to roll back to

# The conclusion is the same as above
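The renumbering in both experiments can be reproduced offline. Below is a minimal sh + awk sketch, assuming the history is saved as plain `REVISION CHANGE-CAUSE` lines without the header. The hypothetical `rollout_undo` helper drops the target revision and re-adds its change-cause as highest+1. Without a target, it picks the second-highest revision, i.e. the one before the current one. It models only the numbering visible above, not the controller's internals:

```shell
#!/bin/sh
# rollout_undo HISTORY_FILE [TARGET_REVISION]
# HISTORY_FILE holds lines like "2 kubectl set image deploy nginx ...".
# Prints the history as it looks after the undo: the target revision is
# removed and its change-cause reappears under (highest revision + 1).
rollout_undo() (
  awk -v target="${2:-}" '
    BEGIN { max = 0 }
    { rev[NR] = $1 + 0; line[NR] = $0; if (rev[NR] > max) max = rev[NR] }
    END {
      if (target == "") {                  # default: the revision before the current one
        prev = 0
        for (i = 1; i <= NR; i++) if (rev[i] < max && rev[i] > prev) prev = rev[i]
        target = prev
      }
      for (i = 1; i <= NR; i++) {
        if (rev[i] == target + 0) { cause = line[i]; sub(/^[0-9]+[ \t]+/, "", cause) }
        else print line[i]
      }
      print (max + 1) " " cause            # old template re-recorded as a new revision
    }' "$1"
)

# Usage with the history recorded above.
hist=$(mktemp)
cat > "$hist" <<'EOF'
1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record
2 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15
3 kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.17
EOF
rollout_undo "$hist"        # revisions 1, 3, 4 (4 = the 1.15 command)
rm -f "$hist"
```

Feeding the result back in with target 1 yields revisions 3, 4, 5, which matches the second experiment.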

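3.5 Daemon Set resources

A DaemonSet ensures that every node (or a selected subset of nodes) in the cluster runs one copy of a pod. When a node joins the cluster, the pod is created on it; when a node leaves the cluster, the pod is reclaimed. Deleting a DaemonSet also deletes all the pods it created.

The pods of a DaemonSet therefore cover the whole cluster.

A DaemonSet is useful whenever the same kind of pod must run on every node. Typical use cases:

Running a cluster storage daemon, such as glusterd or ceph.

Running a cluster log-collection daemon, such as fluentd, logstash, or filebeat.

Running a node-monitoring daemon, such as Prometheus Node Exporter, collectd, the Datadog agent, the New Relic agent, Ganglia gmond, or cadvisor.

Create a DaemonSet: k8s_daemon_set.yaml

# Create a directory for the DaemonSet yaml files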

[root@k8s-master deploy]# mkdir /root/k8s_yaml/daemon && cd /root/k8s_yaml/daemon

[root@k8s-master daemon]# vim k8s_daemon_set.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: daemon
spec:
  template:
    metadata:
      labels:
        app: daemon
    spec:
      containers:
      - name: daemon
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80

# Create the DaemonSet

[root@k8s-master daemon]# kubectl create -f k8s_daemon_set.yaml

daemonset "daemon" created

# List the Pods created by the DaemonSet: exactly one per node

[root@k8s-master daemon]# kubectl get pod -o wide|grep daemon

daemon-0bpjx 1/1 Running 0 2s 172.18.95.4 k8s-node-2

daemon-8wrb8 1/1 Running 0 2s 172.18.37.8 k8s-node-1

# Check the DaemonSet status

[root@k8s-master daemon]# kubectl get daemonset

NAME DESIRED CURRENT READY NODE-SELECTOR AGE

daemon 2 2 2 <none> 1m
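To check the one-Pod-per-node property without reading the list by eye, you can count the NODE column. Below is a small awk sketch, assuming the `kubectl get pod -o wide` layout shown above (pod name first, node name last). Newer kubectl versions append more columns, so `$NF` would need adjusting there. `count_per_node` is a hypothetical name:

```shell
#!/bin/sh
# count_per_node PREFIX
# Reads `kubectl get pod -o wide` output on stdin and prints "NODE COUNT"
# for Pods whose name starts with PREFIX. For a DaemonSet every node should show 1.
count_per_node() (
  awk -v prefix="$1" '
    index($1, prefix) == 1 { n[$NF]++ }   # last column is the node name here
    END { for (node in n) print node, n[node] }' | sort
)

# On the master, against the live cluster:
#   kubectl get pod -o wide | count_per_node daemon-
```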

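3.6 Pet Set resources

In Kubernetes, most Pod management assumes stateless, disposable Pods. A Replication Controller or a Deployment, for example, only makes sure that the desired number of Pods is available to serve.

Containers without data: stateless containers.

A PetSet consists of a group of stateful Pods. Each Pod has its own unique, immutable identity and its own data, which must survive when the Pod is replaced.

Containers with data: stateful containers.

A Pet Set is valuable when an application has any of the following requirements:

● Stable, unique network identities.

● Stable, persistent storage.

● Ordered, graceful deployment and scaling.

● Ordered, graceful deletion and termination.

Each Pet gets an ordered index. If the PetSet is named mysql, the first Pet to start is mysql-0, the second mysql-1, and so on. When a Pet goes down, its replacement is given the same name as the original. Through that name it is matched back to the original storage, so its state is preserved.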
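The ordinal naming scheme is easy to spell out. Below is a sketch with a hypothetical helper `ordinal_names`. It prints the Pod names that a set called mysql with N replicas gets, in creation order:

```shell
#!/bin/sh
# ordinal_names NAME REPLICAS
# Prints NAME-0 .. NAME-(REPLICAS-1), one per line: the stable, ordered
# identities a PetSet/StatefulSet gives its Pods. Pods are created in this
# order and removed in reverse order when scaling down.
ordinal_names() (
  i=0
  while [ "$i" -lt "$2" ]; do
    echo "$1-$i"
    i=$((i + 1))
  done
)

ordinal_names mysql 3   # prints mysql-0, mysql-1, mysql-2 on separate lines
```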

In Kubernetes 1.5, PetSet was renamed StatefulSet. The requirements listed above are exactly the cases in which a StatefulSet is worth using.

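3.7 Job resources

Controllers such as ReplicaSet and ReplicationController aim to keep the expected number of pods running indefinitely, and these objects exist until the user deletes them explicitly. They target long-running workloads such as web services.

For tasks that are not long-running, such as compressing files, the pod should stop once the task is done instead of staying in the system. That is what a Job is for, so a Job complements persistent controllers such as ReplicaSet and ReplicationController.

A Job typically runs a one-off task. When it finishes, the Job and its completed pods remain until you delete them. Periodic runs are handled by a CronJob (called ScheduledJob in older releases).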
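This section has no manifest of its own, so here is a hedged sketch: a shell snippet that writes a minimal Job manifest. The job name, command, and file path are illustrative assumptions. `batch/v1` is the Job API group, and a Job's pod template must use `restartPolicy: Never` or `OnFailure`. The nginx:1.13 image from the private registry is reused only because it is already available and is assumed to contain `sh` and `tar`:

```shell
#!/bin/sh
# Writes a minimal, illustrative Job manifest. The Job runs one pod to
# completion; once the command exits 0 the pod is not restarted.
JOB_FILE=${JOB_FILE:-/tmp/k8s_job.yaml}

cat > "$JOB_FILE" <<'EOF'
apiVersion: batch/v1
kind: Job
metadata:
  name: compress-demo            # hypothetical name
spec:
  completions: 1                 # run the task to completion once
  template:
    spec:
      containers:
      - name: compress
        image: 10.0.0.11:5000/nginx:1.13   # reused image, assumed to ship sh and tar
        command: ["/bin/sh", "-c", "tar czf /tmp/etc.tar.gz /etc && echo done"]
      restartPolicy: Never       # a Job requires Never or OnFailure
EOF

# Then, on the master (not run here):
#   kubectl create -f "$JOB_FILE"
#   kubectl get job compress-demo
```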

3.8 tomcat+mysql exercise

In k8s, containers reach each other through a Service's VIP (its ClusterIP)!

Yaml files:

::::::::::::::

./mysql-rc.yaml

::::::::::::::

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: 10.0.0.11:5000/mysql:5.7
          ports:
          - containerPort: 3306
          env:
          - name: MYSQL_ROOT_PASSWORD
            value: '123456'

::::::::::::::

./mysql-svc.yaml

::::::::::::::

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
    - port: 3306
      targetPort: 3306
  selector:
    app: mysql

::::::::::::::

./tomcat-rc.yaml

::::::::::::::

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
        - name: myweb
          image: 10.0.0.11:5000/tomcat-app:v2
          ports:
          - containerPort: 8080
          env:
          - name: MYSQL_SERVICE_HOST
            value: 'mysql'
          - name: MYSQL_SERVICE_PORT
            value: '3306'

::::::::::::::

./tomcat-svc.yaml

::::::::::::::

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
    - port: 8080
      nodePort: 30008
  selector:
    app: myweb

::::::::::::::

mysql_pvc.yaml

::::::::::::::

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: tomcat-mysql
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

::::::::::::::

mysql_pv.yaml

::::::::::::::

apiVersion: v1
kind: PersistentVolume
metadata:
  name: tomcat-mysql
  labels:
    type: nfs001
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: "/data/pv"
    server: 10.0.0.11
    readOnly: false

::::::::::::::

mysql-rc-pvc.yaml

::::::::::::::

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: 10.0.0.11:5000/mysql:5.7
          ports:
          - containerPort: 3306
          env:
          - name: MYSQL_ROOT_PASSWORD
            value: '123456'
          volumeMounts:
          - name: nfs
            mountPath: /var/lib/mysql
      volumes:
      - name: nfs
        persistentVolumeClaim:
          claimName: tomcat-mysql

Preparation:

# Remove all the resources created earlier

[root@k8s-master deploy]# kubectl get all

[root@k8s-master deploy]# kubectl delete svc --all

[root@k8s-master deploy]# kubectl delete rc --all

[root@k8s-master deploy]# kubectl delete deployment --all

[root@k8s-master deploy]# kubectl get all

# Download the prepared Yaml files (identical to those above) to the master node as a zip archive

[root@k8s-master k8s_yaml]# wget http://192.168.2.254:8888/tomcat_demo.zip

# Unzip it and enter the directory

[root@k8s-master k8s_yaml]# unzip tomcat_demo.zip && cd /root/k8s_yaml/tomcat_demo/

# Move the yaml files whose names contain "pv" into the bak_pv directory, because persistent storage has not been covered yet

[root@k8s-master tomcat_demo]# mkdir bak_pv && mv *pv*.yaml bak_pv && ls

bak_pv mysql-rc.yaml mysql-svc.yaml tomcat-rc.yaml tomcat-svc.yaml

# Download the image archives, load them, and push them to the image registry

[root@k8s-node-1 images]# wget http://192.168.2.254:8888/tomcat-app-v2.tar.gz

[root@k8s-node-1 images]# wget http://192.168.2.254:8888/docker-mysql-5.7.tar.gz

[root@k8s-node-1 images]# docker load -i tomcat-app-v2.tar.gz

[root@k8s-node-1 images]# docker load -i docker-mysql-5.7.tar.gz

[root@k8s-node-1 images]# docker tag docker.io/kubeguide/tomcat-app:v2 10.0.0.11:5000/tomcat-app:v2

[root@k8s-node-1 images]# docker tag docker.io/mysql:5.7 10.0.0.11:5000/mysql:5.7

[root@k8s-node-1 images]# docker push 10.0.0.11:5000/mysql:5.7

[root@k8s-node-1 images]# docker push 10.0.0.11:5000/tomcat-app:v2

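Steps:

# Create the RC and SVC for MySQL 5.7. The SVC gives MySQL a stable address: even if the container restarts, the Tomcat container can still reach the database through the SVC's VIP

# Create the RC -- MySQL 5.7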

[root@k8s-master tomcat_demo]# kubectl create -f mysql-rc.yaml

replicationcontroller "mysql" created

# Create the SVC -- MySQL 5.7

[root@k8s-master tomcat_demo]# kubectl create -f mysql-svc.yaml

service "mysql" created

# List the created resources and note the VIP of svc/mysql, which the Tomcat container will use to connect

[root@k8s-master tomcat_demo]# kubectl get all

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 46s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 2d

svc/mysql 10.254.34.217 <none> 3306/TCP 39s

NAME READY STATUS RESTARTS AGE

po/mysql-nb2g8 1/1 Running 0 46s

# Change the MySQL address in tomcat-rc.yaml. No DNS is deployed yet, so replace the host name with the VIP of svc/mysql obtained above

[root@k8s-master tomcat_demo]# vim tomcat-rc.yaml

- name: MYSQL_SERVICE_HOST
  value: '10.254.34.217'
- name: MYSQL_SERVICE_PORT
  value: '3306'

# Create the RC -- tomcat (myweb)

[root@k8s-master tomcat_demo]# kubectl create -f tomcat-rc.yaml

replicationcontroller "myweb" created

# Create the SVC -- tomcat (myweb)

[root@k8s-master tomcat_demo]# kubectl create -f tomcat-svc.yaml

service "myweb" created

# View all resources

[root@k8s-master tomcat_demo]# kubectl get all

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 11m

rc/myweb 1 1 1 58s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 2d

svc/mysql 10.254.34.217 <none> 3306/TCP 11m

svc/myweb 10.254.100.94 <nodes> 8080:30008/TCP 53s

NAME READY STATUS RESTARTS AGE

po/mysql-nb2g8 1/1 Running 0 11m

po/myweb-9ghl7 1/1 Running 0 58s

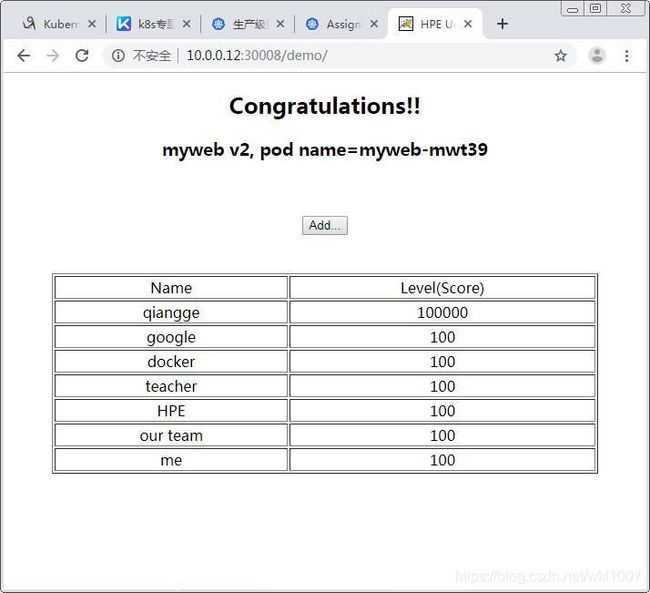

# Verify in a browser

http://10.0.0.12:30008/demo/

http://10.0.0.13:30008/demo/

# Then click "ADD" to insert a row and verify that the insert works

# Log in to the mysql Pod and check that the database contains the same data

[root@k8s-master tomcat_demo]# kubectl exec -it mysql-nb2g8 /bin/bash

# The database created by default is HPE_APP

root@mysql-nb2g8:/# mysql -uroot -p123456

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| HPE_APP |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> use HPE_APP;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+-------------------+

| Tables_in_HPE_APP |

+-------------------+

| T_USERS |

+-------------------+

1 row in set (0.00 sec)

mysql> select * from T_USERS;

+----+-----------+-------+

| ID | USER_NAME | LEVEL |

+----+-----------+-------+

| 1 | me | 100 |

| 2 | our team | 100 |

| 3 | HPE | 100 |

| 4 | teacher | 100 |

| 5 | docker | 100 |

| 6 | google | 100 |

| 7 | Chiron | 10000 |

+----+-----------+-------+

7 rows in set (0.00 sec)

mysql> exit

Bye

root@mysql-nb2g8:/# exit

exit


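4: k8s add-ons

The DNS service in a k8s cluster resolves Service (SVC) names to their VIP addresses.

4.1 DNS service

Installing the DNS service (deployed on the Node2 node)

1: Download and load the DNS docker image archive (on node2)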

[root@k8s-node-2 ~]# mkdir /opt/images && cd /opt/images

[root@k8s-node-2 images]# wget http://192.168.2.254:8888/docker_k8s_dns.tar.gz

[root@k8s-node-2 images]# docker load -i docker_k8s_dns.tar.gz

[root@k8s-node-2 images]# docker images|grep gcr

gcr.io/google_containers/kubedns-amd64 1.9 26cf1ed9b144 3 years ago 47 MB

gcr.io/google_containers/dnsmasq-metrics-amd64 1.0 5271aabced07 3 years ago 14 MB

gcr.io/google_containers/kube-dnsmasq-amd64 1.4 3ec65756a89b 3 years ago 5.13 MB

gcr.io/google_containers/exechealthz-amd64 1.2 93a43bfb39bf 3 years ago 8.37 MB

2: Upload the DNS service Yaml files to the designated location on the master node

# On the master, create a directory for the DNS service Yaml files

[root@k8s-master tomcat_demo]# mkdir /root/k8s_yaml/dns && cd /root/k8s_yaml/dns

rz skydns-rc.yaml skydns-svc.yaml

3: Edit skydns-rc.yaml so that the DNS service is created on node2

spec:
  nodeName: k8s-node-2    #pin the resource to the Node2 node
  containers:
  - name: kubedns
    image: gcr.io/google_containers/kubedns-amd64:1.9

4: Edit skydns-svc.yaml to set a fixed clusterIP (VIP) for the SVC

spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.254.230.254    #fixed VIP of the SVC

5: Create the DNS resources (the Deployment and its Service)

[root@k8s-master dns]# kubectl create -f skydns-rc.yaml

deployment "kube-dns" created

[root@k8s-master dns]# kubectl create -f skydns-svc.yaml

service "kube-dns" created

6: Check

[root@k8s-master dns]# kubectl get all --namespace=kube-system

or

[root@k8s-master dns]# kubectl get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 40s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 <none> 53/UDP,53/TCP 39s

NAME DESIRED CURRENT READY AGE

rs/kube-dns-1656925611 1 1 1 40s

NAME READY STATUS RESTARTS AGE

po/kube-dns-1656925611-96k7m 4/4 Running 0 40s

7: On every node, edit the kubelet config file so that the containers it starts use the K8S DNS server

vim /etc/kubernetes/kubelet

KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"

or

grep -Ev '^$|#' /etc/kubernetes/kubelet

sed -i 's#KUBELET_ARGS=""#KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"#g' /etc/kubernetes/kubelet

grep -Ev '^$|#' /etc/kubernetes/kubelet

# Restart the service

systemctl restart kubelet
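You can dry-run the `sed -i` on a copy before touching every node. This sketch assumes GNU sed and that the stock file contains exactly the line `KUBELET_ARGS=""`; otherwise the pattern matches nothing and the file is left unchanged. It works on a temporary stand-in file, so the node's real config is not modified:

```shell
#!/bin/sh
# Dry run of the kubelet DNS edit on a temporary stand-in file.
tmp=$(mktemp)
printf '%s\n' '# Add your own!' 'KUBELET_ARGS=""' > "$tmp"   # assumed stock content

sed -i 's#KUBELET_ARGS=""#KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"#g' "$tmp"

# Same check as above: show only non-empty, non-comment lines.
grep -Ev '^$|#' "$tmp"
# rm -f "$tmp" when done
```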

8: In the Mysql+Tomcat example, change the MySQL address in tomcat-rc.yaml from the VIP back to the original host name "mysql"

# Delete the Mysql+Tomcat resources created earlier

[root@k8s-master dns]# cd /root/k8s_yaml/tomcat_demo/

[root@k8s-master tomcat_demo]# kubectl delete -f .

replicationcontroller "mysql" deleted

service "mysql" deleted

replicationcontroller "myweb" deleted

service "myweb" deleted

# Change the MySQL address in tomcat-rc.yaml

[root@k8s-master tomcat_demo]# vim tomcat-rc.yaml

env:
- name: MYSQL_SERVICE_HOST
  value: 'mysql'
- name: MYSQL_SERVICE_PORT
  value: '3306'

# Create the Mysql+Tomcat resources

[root@k8s-master tomcat_demo]# kubectl create -f .

replicationcontroller "mysql" created

service "mysql" created

replicationcontroller "myweb" created

service "myweb" created

# Verify that the service works; a browser works too

[root@k8s-master tomcat_demo]# curl -I http://10.0.0.13:30008/demo/

HTTP/1.1 200 OK

Server: Apache-Coyote/1.1

# Enter the Tomcat container to verify: resolv.conf lists the K8S DNS server first

[root@k8s-master tomcat_demo]# kubectl exec -it myweb-qjc5x bash

root@myweb-qjc5x:/usr/local/tomcat# cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.254.230.254

nameserver 180.76.76.76

nameserver 223.5.5.5

options ndots:5

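# Pinging mysql resolves the name to the VIP of the mysql Service

# Note: the ping itself never gets a reply. A Service VIP is virtual: kube-proxy implements it with rules for the Service's ports only, so it does not answer ICMP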

root@myweb-qjc5x:/usr/local/tomcat# ping mysql

PING mysql.default.svc.cluster.local (10.254.100.255): 56 data bytes
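Here is why a bare mysql resolves. The name has fewer dots than ndots:5, so the resolver first appends each search domain from /etc/resolv.conf, in order, and tries the bare name only last. The sketch below lists the candidates in that order. `dns_candidates` is a hypothetical helper, and it models glibc-style stub resolver behaviour, not the DNS server:

```shell
#!/bin/sh
# dns_candidates NAME NDOTS SEARCH_DOMAIN...
# Prints the FQDNs a glibc-style resolver would try, in order.
dns_candidates() (
  name=$1; ndots=$2; shift 2
  # Count the dots in NAME.
  dots=$(printf '%s' "$name" | tr -cd '.' | wc -c | tr -d ' ')
  if [ "$dots" -ge "$ndots" ]; then echo "$name."; fi   # enough dots: try as-is first
  for domain in "$@"; do
    echo "$name.$domain."
  done
  if [ "$dots" -lt "$ndots" ]; then echo "$name."; fi   # otherwise the bare name is tried last
)

# Values taken from the resolv.conf shown above; the first candidate is
# mysql.default.svc.cluster.local., matching the ping output.
dns_candidates mysql 5 default.svc.cluster.local svc.cluster.local cluster.local
```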

4.2 Namespaces

Namespaces isolate resources.

The default K8S Namespace is: default

In production, a separate Namespace per application is generally recommended.

# List all namespaces in the K8S cluster

[root@k8s-master tomcat_demo]# kubectl get namespace

NAME STATUS AGE

default Active 2d

kube-system Active 2d

# List Pods; without -n, the Namespace default is used

[root@k8s-master tomcat_demo]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-dr2fz 1/1 Running 0 57m

myweb-qjc5x 1/1 Running 0 57m

[root@k8s-master tomcat_demo]# kubectl get pod -n default

NAME READY STATUS RESTARTS AGE

mysql-dr2fz 1/1 Running 0 57m

myweb-qjc5x 1/1 Running 0 57m

# List the resources in the Namespace kube-system

[root@k8s-master tomcat_demo]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

kube-dns-1656925611-c2270 4/4 Running 0 58m

# Delete the Mysql+Tomcat resources from the Namespace default

[root@k8s-master tomcat_demo]# kubectl delete -f .

replicationcontroller "mysql" deleted

service "mysql" deleted

replicationcontroller "myweb" deleted

service "myweb" deleted

# Create the Namespace tomcat

[root@k8s-master tomcat_demo]# kubectl create namespace tomcat

namespace "tomcat" created

# List the Namespaces

[root@k8s-master tomcat_demo]# kubectl get namespace

NAME STATUS AGE

default Active 2d

kube-system Active 2d

tomcat Active 48s

# Edit the yaml files to specify the Namespace; without one, the Namespace default is used

# Test: append a namespace line after line 3 and show the first five lines

[root@k8s-master tomcat_demo]# sed '3a \ \ namespace: tomcat' mysql-svc.yaml |head -5

apiVersion: v1
kind: Service
metadata:
  namespace: tomcat    #the newly added line
  name: mysql
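The `3a` command appends text after line 3, which in these files is `metadata:`. The `\ \ ` escapes keep two leading spaces, so the new key nests under metadata. Below is a dry run on a temporary copy of mysql-svc.yaml (recreated from the file shown earlier), assuming GNU sed as on CentOS. The bulk edit is only correct if line 3 of every file really is `metadata:`:

```shell
#!/bin/sh
# Recreate mysql-svc.yaml in a temp dir and apply the same sed expression.
dir=$(mktemp -d)
cat > "$dir/mysql-svc.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
    - port: 3306
      targetPort: 3306
  selector:
    app: mysql
EOF

# GNU sed: "3a" appends after line 3; "\ \ " keeps two leading spaces.
sed -i '3a \ \ namespace: tomcat' "$dir/mysql-svc.yaml"
head -5 "$dir/mysql-svc.yaml"
```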

# Add the Namespace to every Yaml file in the current directory

[root@k8s-master tomcat_demo]# sed -i '3a \ \ namespace: tomcat' *.yaml

# Create the Mysql+Tomcat resources

[root@k8s-master tomcat_demo]# kubectl create -f .

replicationcontroller "mysql" created

service "mysql" created

replicationcontroller "myweb" created

service "myweb" created

# Verify that the Mysql+Tomcat resources were placed in the Namespace tomcat

[root@k8s-master tomcat_demo]# kubectl get all -n default

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 2d

[root@k8s-master tomcat_demo]# kubectl get all -n tomcat

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 40s

rc/myweb 1 1 1 40s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/mysql 10.254.170.16 <none> 3306/TCP 40s

svc/myweb 10.254.130.189 <nodes> 8080:30008/TCP 39s

NAME READY STATUS RESTARTS AGE

po/mysql-pwtsp 1/1 Running 0 40s

po/myweb-jbvd6 1/1 Running 0 40s

# Tomcat is still reachable

[root@k8s-master tomcat_demo]# curl -I http://10.0.0.13:30008/demo/

HTTP/1.1 200 OK

Server: Apache-Coyote/1.1

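4.3 Health checks

4.3.1 Probe types

livenessProbe: liveness check. It periodically checks whether the service is alive; if the check fails, the container is restarted.

readinessProbe: readiness check. It periodically checks whether the service is available; if not, the pod is removed from the Service's endpoints.

4.3.2 Probe methods

- exec: run a command; exit status 0 means healthy, non-zero means unhealthy

- httpGet: check the status code returned by an HTTP request; 2xx and 3xx are healthy, 4xx and 5xx are failures

- tcpSocket: check whether a TCP connection to a port can be opened

# On the master, create a directory for the health-check Yaml files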

[root@k8s-master tomcat_demo]# mkdir /root/k8s_yaml/health && cd /root/k8s_yaml/health

4.3.3 Using exec with a liveness probe:

nginx_pod_exec.yaml: checks whether a file exists (i.e., whether a command's exit status is 0)

apiVersion: v1
kind: Pod
metadata:
  name: exec
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
      args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
      livenessProbe:
        exec:
          command:
            - cat
            - /tmp/healthy
        initialDelaySeconds: 5
        periodSeconds: 5
        timeoutSeconds: 5
        successThreshold: 1
        failureThreshold: 1

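Extension:

Fields that set a container's startup command in K8S:

args: (overrides the image's CMD)

command: (overrides the image's ENTRYPOINT)

Instructions that set a container's startup command in a Dockerfile:

CMD:

ENTRYPOINT:

# Create the nginx_pod_exec resource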

[root@k8s-master health]# kubectl create -f nginx_pod_exec.yaml

pod "exec" created

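# Watch the exec Pod: it is restarted at regular intervals, because the probe fails once /tmp/healthy is removed after 30 s and failureThreshold is 1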

[root@k8s-master health]# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

exec 1/1 Running 0 2s

NAME READY STATUS RESTARTS AGE

exec 1/1 Running 1 1m

exec 1/1 Running 2 2m

# The exec Pod's details also show the state changes

[root@k8s-master ~]# kubectl describe pod exec

container "nginx" is unhealthy, it will be killed and re-created.
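The exec probe's verdict depends only on the command's exit status. Below is a local sketch of that rule using a hypothetical helper `exec_probe`. The real probe is run by the kubelet inside the container, with timeoutSeconds and the thresholds applied on top:

```shell
#!/bin/sh
# exec_probe CMD [ARGS...]
# Mimics how an exec liveness probe is judged: exit status 0 -> "success",
# anything else -> "failure". The command's output is discarded.
exec_probe() {
  if "$@" >/dev/null 2>&1; then
    echo success
  else
    echo failure
  fi
}

# Reproduce the Pod's timeline locally with a temporary file.
f=$(mktemp)
exec_probe cat "$f"     # file exists            -> success
rm -f "$f"
exec_probe cat "$f"     # file removed after 30s -> failure, container restarted
```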

4.3.4 Using httpGet with a liveness probe:

nginx_pod_httpGet.yaml: checks whether requesting a URL of the service returns a healthy status code (for example 200 or 302)

apiVersion: v1
kind: Pod
metadata:
  name: httpget
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
      livenessProbe:
        httpGet:
          path: /index.html
          port: 80
        initialDelaySeconds: 3
        periodSeconds: 3

# Create the nginx_pod_httpGet resource

[root@k8s-master health]# kubectl create -f nginx_pod_httpGet.yaml

pod "httpget" created

# Enter the container and delete the index page

[root@k8s-master health]# kubectl exec -it httpget bash

root@httpget:/# rm -rf /usr/share/nginx/html/index.html

root@httpget:/# exit

exit

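# Watch the container: it is restarted almost immediately, and because the restarted container has its index page again, it is healthy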

[root@k8s-master ~]# kubectl get pod httpget -w

NAME READY STATUS RESTARTS AGE

httpget 1/1 Running 0 1m

httpget 1/1 Running 1 2m

# The container details show that nginx returned 404, which triggered the restart

[root@k8s-master ~]# kubectl describe pod httpget

Liveness probe failed: HTTP probe failed with statuscode: 404
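The httpGet rule fits in a small classifier: any status from 200 up to, but not including, 400 passes. Below is a sketch with a hypothetical `http_probe_verdict` helper. For a live check, the status code could come from `curl -s -o /dev/null -w '%{http_code}'`:

```shell
#!/bin/sh
# http_probe_verdict STATUS_CODE
# Applies the httpGet probe rule: 200 <= code < 400 is success, anything else failure.
http_probe_verdict() {
  if [ "$1" -ge 200 ] && [ "$1" -lt 400 ]; then
    echo success
  else
    echo failure
  fi
}

http_probe_verdict 200   # index.html present  -> success
http_probe_verdict 404   # index.html deleted  -> failure, container restarted
# Live check from a node (POD_IP is a placeholder):
#   http_probe_verdict "$(curl -s -o /dev/null -w '%{http_code}' http://POD_IP/index.html)"
```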

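4.3.5 Using tcpSocket with a liveness probe:

nginx_pod_tcpSocket.yaml: judges the container by whether port 80 accepts connections. The args override the image's command, so nginx is not running; to make the container healthy, enter it and start nginx on port 80.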

apiVersion: v1
kind: Pod
metadata:
  name: tcpsocket
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
      args:
        - /bin/sh
        - -c
        - tail -f /etc/hosts
      livenessProbe:
        tcpSocket:
          port: 80
        initialDelaySeconds: 10
        periodSeconds: 3

# Create the nginx_pod_tcpSocket resource

[root@k8s-master health]# kubectl create -f nginx_pod_tcpSocket.yaml

pod "tcpsocket" created

# Because nginx was not started inside the container, port 80 is closed and the container is restarted after a probe period

[root@k8s-master ~]# kubectl get pod tcpsocket -w

NAME READY STATUS RESTARTS AGE

tcpsocket 1/1 Running 0 15s

tcpsocket 1/1 Running 1 48s

# Now enter the container and start nginx

[root@k8s-master health]# kubectl exec -it tcpsocket bash

root@tcpsocket:/# /usr/sbin/nginx

root@tcpsocket:/# exit

exit

# The Pod status and details now show no further restarts

[root@k8s-master ~]# kubectl get pod tcpsocket -w

[root@k8s-master ~]# kubectl describe pod tcpsocket

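4.3.6 Using httpGet with a readiness probe:

nginx-rc-httpGet.yaml: checks whether requesting a URL returns a healthy status code. A started container is not added to the SVC right away; it is added only once the check passes, which is what "readiness" means.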

apiVersion: v1
kind: ReplicationController
metadata:
  name: readiness
spec:
  replicas: 2
  selector:
    app: readiness
  template:
    metadata:
      labels:
        app: readiness
    spec:
      containers:
      - name: readiness
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80
        readinessProbe:
          httpGet:
            path: /Chiron.html
            port: 80
          initialDelaySeconds: 3
          periodSeconds: 3

# Create the nginx-rc-httpGet resource

[root@k8s-master health]# kubectl create -f nginx-rc-httpGet.yaml

replicationcontroller "readiness" created

# The Pods are running, but their containers are not ready

[root@k8s-master health]# kubectl get pod |grep readiness

readiness-db8cw 0/1 Running 0 1m

readiness-rmpjc 0/1 Running 0 1m

# Create an SVC for the readiness Pods

[root@k8s-master health]# kubectl expose rc readiness --port=80 --target-port=80 --type=NodePort

service "readiness" exposed

# The details of SVC readiness show no Pods behind it (Endpoints is empty)

[root@k8s-master health]# kubectl describe svc readiness

Name: readiness

Namespace: default

Labels: app=readiness

Selector: app=readiness

Type: NodePort

IP: 10.254.227.196

Port: <unset> 80/TCP

NodePort: <unset> 32089/TCP

Endpoints:

Session Affinity: None

No events.

# Enter one of the Pods and add the Chiron.html page

[root@k8s-master health]# kubectl exec -it readiness-db8cw bash

root@readiness-db8cw:/# echo 'web01' > /usr/share/nginx/html/Chiron.html

root@readiness-db8cw:/# exit

exit

# The SVC readiness now has one endpoint, which demonstrates readiness

[root@k8s-master health]# kubectl describe svc readiness

Name: readiness

Namespace: default

Labels: app=readiness

Selector: app=readiness

Type: NodePort

IP: 10.254.227.196

Port: <unset> 80/TCP

NodePort: <unset> 32089/TCP

Endpoints: 172.18.95.4:80

Session Affinity: None

No events.

# Pod readiness status: one is ready, the other is not

[root@k8s-master health]# kubectl get pod -o wide |grep readiness

readiness-db8cw 1/1 Running 0 10m 172.18.95.4 k8s-node-2

readiness-rmpjc 0/1 Running 0 10m 172.18.37.6 k8s-node-1
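In effect, the Service's Endpoints list is the IPs of the selected Pods whose READY column shows every container ready. The sketch below derives that list from `kubectl get pod -o wide` output. `ready_ips` is a hypothetical helper, and the column positions are those of the output above:

```shell
#!/bin/sh
# ready_ips PREFIX
# Reads `kubectl get pod -o wide` lines on stdin and prints the IP of each Pod
# whose name starts with PREFIX and whose READY column is n/n (all containers ready).
ready_ips() (
  awk -v prefix="$1" '
    index($1, prefix) == 1 {
      split($2, r, "/")                # READY column, e.g. "1/1" or "0/1"
      if (r[1] == r[2]) print $6       # 6th column is the Pod IP in this layout
    }'
)

#   kubectl get pod -o wide | ready_ips readiness-
```

With the output above, this yields only 172.18.95.4, the same address listed under Endpoints.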

4.4 dashboard service

1: On Node2, upload and load the image, then tag it

rz kubernetes-dashboard-amd64_v1.4.1.tar.gz

[root@k8s-node-2 images]# docker load -i kubernetes-dashboard-amd64_v1.4.1.tar.gz

[root@k8s-node-2 images]# docker tag index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1 10.0.0.10:5000/kubernetes-dashboard-amd64:v1.4.1

2: On the master, create the dashboard deployment and service

# Create a directory for the dashboard Yaml files

[root@k8s-master health]# mkdir /root/k8s_yaml/dashboard && cd /root/k8s_yaml/dashboard

# Upload the prepared Yaml files

rz dashboard.yaml && rz dashboard-svc.yaml

# Edit dashboard.yaml so that the Pod runs on the Node2 node

[root@k8s-master dashboard]# vim dashboard.yaml

spec:
  nodeName: k8s-node-2
  containers:
  - name: kubernetes-dashboard

# Create the dashboard resources

[root@k8s-master dashboard]# kubectl create -f dashboard.yaml

deployment "kubernetes-dashboard-latest" created

[root@k8s-master dashboard]# kubectl create -f dashboard-svc.yaml

service "kubernetes-dashboard" created

# List the resources in the Namespace kube-system

[root@k8s-master dashboard]# kubectl get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 4h

deploy/kubernetes-dashboard-latest 1 1 1 1 2s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 <none> 53/UDP,53/TCP 4h

svc/kubernetes-dashboard 10.254.1.236 <none> 80/TCP 1s

NAME DESIRED CURRENT READY AGE

rs/kube-dns-1656925611 1 1 1 4h

rs/kubernetes-dashboard-latest-1975875540 1 1 1 2s

NAME READY STATUS RESTARTS AGE

po/kube-dns-1656925611-c2270 4/4 Running 0 4h

po/kubernetes-dashboard-latest-1975875540-lnd2d 1/1 Running 0 2s

3: Open the Dashboard: http://10.0.0.11:8080/ui/

4.5 Accessing a service through the apiserver reverse proxy

Type 1: NodePort

type: NodePort
ports:
  - port: 80
    targetPort: 80
    nodePort: 30008

Type 2: ClusterIP

type: ClusterIP
ports:
  - port: 80
    targetPort: 80

http://10.0.0.11:8080/api/v1/proxy/namespaces/<namespace>/services/<service-name>/

http://10.0.0.13:30008/    #Tomcat through its NodePort

http://10.0.0.11:8080/api/v1/proxy/namespaces/tomcat/services/myweb/    #Tomcat is also reachable through the proxy
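The proxy path is a fixed template, so a script can build it. In this sketch the helper name is hypothetical, and 10.0.0.11:8080 is the insecure apiserver address used throughout this article. The /api/v1/proxy/... form belongs to the older releases used here; newer releases use /api/v1/namespaces/<namespace>/services/<service>/proxy/ instead:

```shell
#!/bin/sh
# proxy_url NAMESPACE SERVICE [APISERVER]
# Builds the apiserver reverse-proxy URL for a Service (old-style path).
proxy_url() {
  echo "http://${3:-10.0.0.11:8080}/api/v1/proxy/namespaces/$1/services/$2/"
}

proxy_url tomcat myweb
# -> http://10.0.0.11:8080/api/v1/proxy/namespaces/tomcat/services/myweb/
# e.g. curl -I "$(proxy_url tomcat myweb)demo/"
```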

5: k8s autoscaling

Autoscaling in k8s requires the heapster monitoring add-on

Extension:

By default, the Kubelet on each Node has cAdvisor built in

http://10.0.0.12:4194/containers/

http://10.0.0.13:4194/containers/

5.1 Installing heapster monitoring

1: On Node2, upload and load the images, then tag them

# Create a directory for the Heapster image archives

[root@k8s-node-2 images]# mkdir /opt/images/heapster && cd /opt/images/heapster

# Download the image archives

[root@k8s-node-2 heapster]# wget http://192.168.2.254:8888/docker_heapster_grafana.tar.gz

[root@k8s-node-2 heapster]# wget http://192.168.2.254:8888/docker_heapster.tar.gz

[root@k8s-node-2 heapster]# wget http://192.168.2.254:8888/docker_heapster_influxdb.tar.gz

# Load all the images in a loop

[root@k8s-node-2 heapster]# for n in `ls *.tar.gz`;do docker load -i $n ;done

# Tag the loaded images

[root@k8s-node-2 heapster]# docker tag docker.io/kubernetes/heapster_grafana:v2.6.0 10.0.0.11:5000/heapster_grafana:v2.6.0

[root@k8s-node-2 heapster]# docker tag docker.io/kubernetes/heapster_influxdb:v0.5 10.0.0.11:5000/heapster_influxdb:v0.5

[root@k8s-node-2 heapster]# docker tag docker.io/kubernetes/heapster:canary 10.0.0.11:5000/heapster:canary

2: On the master, create a directory for the heapster Yaml files and download the prepared Yaml config files

[root@k8s-master dashboard]# mkdir /root/k8s_yaml/heapster && cd /root/k8s_yaml/heapster

[root@k8s-master heapster]# wget http://192.168.2.254:8888/grafana-service.yaml

[root@k8s-master heapster]# wget http://192.168.2.254:8888/heapster-service.yaml

[root@k8s-master heapster]# wget http://192.168.2.254:8888/influxdb-service.yaml

[root@k8s-master heapster]# wget http://192.168.2.254:8888/heapster-controller.yaml

[root@k8s-master heapster]# wget http://192.168.2.254:8888/influxdb-grafana-controller.yaml

3: Edit the config files so that the resources are created on the Node2 node

# Edit the config file heapster-controller.yaml

spec:
  nodeName: k8s-node-2    #pin to this node
  containers:
  - name: heapster
    image: 10.0.0.11:5000/heapster:canary
    imagePullPolicy: IfNotPresent    #change the pull policy: if the image exists locally, do not pull it again

# Edit the config file influxdb-grafana-controller.yaml

spec:
  nodeName: k8s-node-2
  containers:
  - name: influxdb
    image: 10.0.0.11:5000/heapster_influxdb:v0.5

# List the Pods in all Namespaces

[root@k8s-master heapster]# kubectl get pod --all-namespaces -o wide

# Create the resources

[root@k8s-master heapster]# kubectl create -f /root/k8s_yaml/heapster/.

service "monitoring-grafana" created

replicationcontroller "heapster" created

service "heapster" created

replicationcontroller "influxdb-grafana" created

service "monitoring-influxdb" created

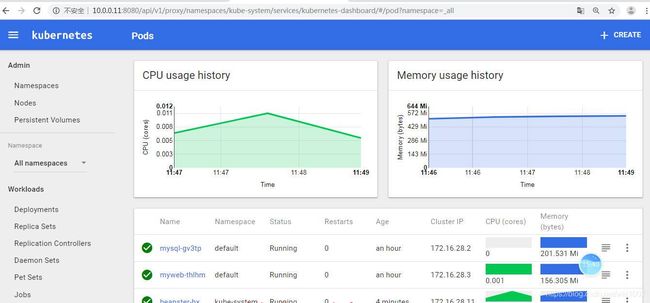

4: Verify in the dashboard

5.2 Autoscaling

1: In the deploy directory, create the Deployment config file used for autoscaling

[root@k8s-master heapster]# cd /root/k8s_yaml/deploy

[root@k8s-master deploy]# vim k8s_deploy_nginx.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 100m
          requests:
            cpu: 100m

# Create the nginx Deployment

[root@k8s-master deploy]# kubectl create -f k8s_deploy_nginx.yaml

deployment "nginx" created

# Get the Pod's IP address

[root@k8s-master deploy]# kubectl get pod -o wide |grep nginx

nginx-2807576163-pkgp9 1/1 Running 0 1m 172.18.37.9 k8s-node-1

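# View the resources (only the nginx Deployment's part is shown). The hpa/nginx entries here and in "kubectl get hpa nginx" below suggest this output was captured after the autoscale rule from step 2 had been created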

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 1 1 1 1 8m

NAME REFERENCE TARGET CURRENT MINPODS MAXPODS AGE

hpa/nginx Deployment/nginx 10% 0% 1 8 4m

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/readiness 3 3 0 4h readiness 10.0.0.11:5000/nginx:1.15 app=readiness

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 3d <none>

svc/readiness 10.254.227.196 <nodes> 80:32089/TCP 4h app=readiness

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-2807576163 1 1 1 8m nginx 10.0.0.11:5000/nginx:1.13 app=nginx,pod-template-hash=2807576163

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-2807576163-pkgp9 1/1 Running 0 8m 172.18.37.9 k8s-node-1

[root@k8s-master deploy]# kubectl get hpa nginx

NAME REFERENCE TARGET CURRENT MINPODS MAXPODS AGE

nginx Deployment/nginx 10% 0% 1 8 1m

2: Create the autoscaling rule

# Create a Service (SVC) associated with the nginx Deployment

[root@k8s-master deploy]# kubectl expose deployment nginx --type=NodePort --port=80

service "nginx" exposed

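# Check the Services and note the randomly assigned NodePort of svc nginx; it is used for the load test later

# The Service is needed so that, when load is high and the Pods scale out horizontally, it load-balances across them and the test traffic is spread fairly evenly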

[root@k8s-master deploy]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 3d

nginx 10.254.249.86 <nodes> 80:30138/TCP 11s

readiness 10.254.227.196 <nodes> 80:32089/TCP 4h

[root@k8s-master deploy]# kubectl autoscale deploy nginx --max=8 --min=1 --cpu-percent=10

deployment "nginx" autoscaled
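The HPA measures CPU utilization as a percentage of each container's CPU request. That is why the Deployment sets `requests.cpu: 100m`, and why `--cpu-percent=10` corresponds to roughly 10m of CPU per Pod on average.

The controller then computes approximately `desired = ceil(currentReplicas * currentUtil / targetUtil)` and clamps the result to `[--min, --max]`. The bash sketch below reproduces only that arithmetic, assuming integer percentages. `hpa_desired` is a hypothetical helper, not a kubectl feature. The sketch ignores the real controller's tolerance band and downscale delay.

```shell
#!/usr/bin/env bash
# Hypothetical helper approximating the HPA scaling rule:
#   desired = ceil(currentReplicas * currentUtil / targetUtil), clamped to [min, max]
# Utilization values are integer percentages of the CPU request.
# Ignores the real controller's tolerance band and downscale delay.
hpa_desired() {
  local current=$1 util=$2 target=$3 min=$4 max=$5
  # Integer ceiling division: (a + b - 1) / b
  local desired=$(( (current * util + target - 1) / target ))
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

# Using the rule created above: --cpu-percent=10 --min=1 --max=8
hpa_desired 1 0 10 1 8    # idle Pod                  -> 1
hpa_desired 1 45 10 1 8   # 1 Pod at 45% of its 100m  -> 5
hpa_desired 2 90 10 1 8   # 2 Pods at 90% each -> 18, capped at 8
```

This arithmetic explains why a sustained `ab` run can push the Deployment straight to the `--max=8` ceiling. It only takes a few Pods running well above the 10% target.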

3: Load testing

# Install httpd-tools, which provides the ab benchmarking tool used for the load test

[root@k8s-master deploy]# yum install -y httpd-tools

# Check that the ab command is available

[root@k8s-master deploy]# which ab

/usr/bin/ab

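# Run the load test: -n is the total number of requests, -c the number of concurrent requests; 30138 is the NodePort noted above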

[root@k8s-master deploy]# ab -n 1000000 -c 40 http://10.0.0.12:30138/index.html

# Note:

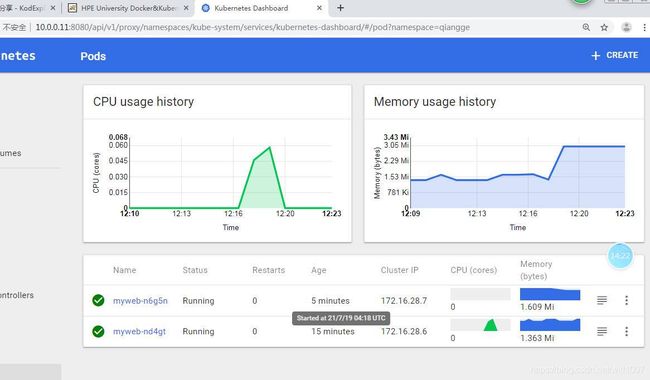

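When the load drops, the scaled-out Pods are by default scaled in only after five minutes, according to actual usage. The Pods that have been running for the shortest time are deleted first.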
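The "shortest-running Pods go first" ordering can be modelled as a sort on Pod start time. The following is a simplified sketch only. The input format (`<pod-name> <start-epoch-seconds>`) and the helper name `pods_to_remove` are hypothetical. The real controller also ranks Pods by scheduling and readiness status before looking at age.

```shell
#!/usr/bin/env bash
# Simplified model of scale-in victim selection (hypothetical helper):
# read lines "<pod-name> <start-epoch-seconds>" on stdin and print the
# names of the <count> most recently started Pods, i.e. those removed first.
pods_to_remove() {
  local count=$1
  # Newest start time first = shortest running time first
  sort -k2,2nr | head -n "$count" | awk '{print $1}'
}

printf '%s\n' \
  "nginx-a 1000" \
  "nginx-b 1300" \
  "nginx-c 1200" | pods_to_remove 2
# prints nginx-b, then nginx-c
```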

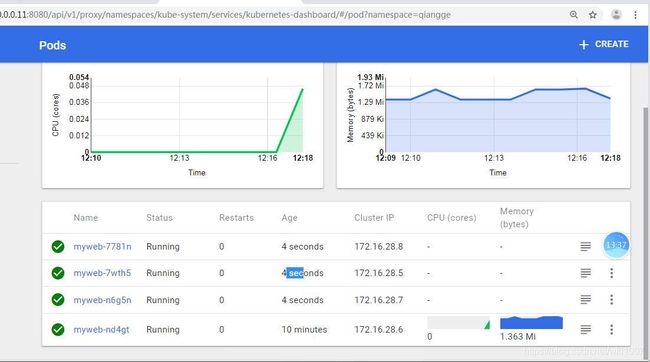

Scale-out screenshot

Scale-in:

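6: Persistent storage

The MySQL+Tomcat application deployed earlier has no persistent storage. When the MySQL Pod is restarted, any data added before is lost.

Data persistence types: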

6.1 emptyDir:

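emptyDir, as the name suggests, is an empty directory whose lifecycle is exactly the same as that of the Pod it belongs to. An emptyDir volume is created when the Pod is assigned to a Node. Kubernetes automatically allocates a directory on that Node, so there is no need to specify a directory on the host.

The directory starts out empty. When the Pod is removed from the Node (because the Pod is deleted or migrated), the data in the emptyDir is permanently deleted. A container crash or restart alone does not remove it.

emptyDir volumes are mainly used in two cases:

- temporary directories whose contents an application does not need to keep permanently;
- sharing data between multiple containers in the same Pod.

By default, an emptyDir is stored on whatever medium backs the node. This is usually its disk, but it may be SSD or network storage depending on the environment. Setting the emptyDir.medium field to Memory makes Kubernetes mount a RAM-backed tmpfs instead. Memory is faster, but its contents are cleared when the node reboots, and the files written to it count against the container's memory limit.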
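The following sketch shows where `medium: Memory` goes. It writes a small Pod manifest with a heredoc, so it runs without a cluster. The file name, Pod name, mount path and the `busybox` image are all hypothetical. In this environment you would pull an image from the private registry 10.0.0.11:5000 instead.

```shell
#!/usr/bin/env bash
# Sketch: generate a Pod manifest whose emptyDir is backed by memory (tmpfs).
# File name, Pod name, mount path and image are hypothetical examples.
cat > emptydir-memory-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-memory-demo
spec:
  containers:
  - name: busybox
    image: busybox
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: cache
      mountPath: /cache
  volumes:
  - name: cache
    emptyDir:
      medium: Memory   # tmpfs: fast, but cleared on node reboot
EOF
# On the master it could then be created with:
#   kubectl create -f emptydir-memory-demo.yaml
```

Omitting `medium` (or using `emptyDir: {}`, as in the MySQL example that follows) falls back to the node's default storage medium.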


[root@k8s-master deploy]# cd /root/k8s_yaml/tomcat_demo/

# Create mysql-rc-V2.yaml with an emptyDir volume; the MySQL Pod created later uses this file

[root@k8s-master tomcat_demo]# vim mysql-rc-V2.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  namespace: tomcat
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      volumes:
      - name: mysql
        emptyDir: {}
      containers:
      - name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3306
        volumeMounts:
        - mountPath: /var/lib/mysql
          name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'

# Delete the old resources in the tomcat namespace

[root@k8s-master tomcat_demo]# kubectl delete -f /root/k8s_yaml/tomcat_demo/.

replicationcontroller "mysql" deleted

service "mysql" deleted

replicationcontroller "myweb" deleted

service "myweb" deleted

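# Move the old mysql-rc.yaml into a subdirectory so it does not interfere with the batch creation below (kubectl create -f on a directory does not recurse by default)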

[root@k8s-master tomcat_demo]# mv mysql-rc.yaml bak_pv/

# Create the MySQL+Tomcat resources in one batch

[root@k8s-master tomcat_demo]# kubectl create -f /root/k8s_yaml/tomcat_demo/.

replicationcontroller "mysql" created

service "mysql" created

replicationcontroller "myweb" created

service "myweb" created

# Check which node the Pods in the tomcat namespace are running on

[root@k8s-master tomcat_demo]# kubectl get pod -o wide -n tomcat

NAME READY STATUS RESTARTS AGE IP NODE

mysql-c2v1j 1/1 Running 0 26s 172.18.37.7 k8s-node-1

myweb-0lzls 1/1 Running 0 26s 172.18.37.2 k8s-node-1

# Open the web URL and insert a record: Name=Chiron, Level=10000

http://10.0.0.13:30008/demo/index.jsp

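# On k8s-node-1, force-remove the containers of the Pods just created (the Pods themselves are not deleted)

[root@k8s-node-1 images]# docker rm -f $(docker ps | grep my | awk '{print $1}')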

9213f64fe0f2

ffd769cd457b

7b05a7711987

861e6f27af6e

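# Wait for the kubelet to recreate the containers, then open the web URL: the inserted data is still there, because the Pod (and therefore its emptyDir) was not deleted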

http://10.0.0.13:30008/demo/index.jsp

# Drawback: the MySQL Pod must not be deleted; if the Pod is deleted, the data is lost

[root@k8s-master tomcat_demo]# kubectl delete pod -n tomcat mysql-c2v1j

pod "mysql-c2v1j" deleted

[root@k8s-master tomcat_demo]# kubectl get pod -o wide -n tomcat

NAME READY STATUS RESTARTS AGE IP NODE

mysql-vn36w 1/1 Running 0 27s 172.18.37.10 k8s-node-1

myweb-0lzls 1/1 Running 0 14m 172.18.37.2 k8s-node-1

# Wait for the RC to start a new MySQL Pod, then open the web URL: the inserted data is gone

http://10.0.0.13:30008/demo/index.jsp

# On k8s-node-1, locate the data directory of the MySQL Pod's emptyDir volume (named mysql)

[root@k8s-node-1 images]# find / -name "mysql" |grep volumes

/var/lib/kubelet/pods/57970a59-1250-11ea-b36a-000c29c6d194/volumes/kubernetes.io~empty-dir/mysql

/var/lib/kubelet/pods/57970a59-1250-11ea-b36a-000c29c6d194/volumes/kubernetes.io~empty-dir/mysql/mysql
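Scanning the whole file system with `find /` is slow. The `find` output above shows the directory layout the kubelet uses, so the path can be built directly from the Pod UID and the volume name. The sketch below assumes the default kubelet root `/var/lib/kubelet`. `emptydir_path` and the `KUBELET_ROOT` override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the on-disk path of an emptyDir volume, following
# the layout seen in the find output:
#   <kubelet-root>/pods/<pod-uid>/volumes/kubernetes.io~empty-dir/<volume-name>
# Assumes the default kubelet root /var/lib/kubelet unless KUBELET_ROOT is set.
emptydir_path() {
  local pod_uid=$1 volume_name=$2
  local kubelet_root=${KUBELET_ROOT:-/var/lib/kubelet}
  printf '%s/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' \
    "$kubelet_root" "$pod_uid" "$volume_name"
}

# On the master, a Pod's UID can be read with, for example:
#   kubectl get pod mysql-vn36w -n tomcat -o jsonpath='{.metadata.uid}'
emptydir_path 57970a59-1250-11ea-b36a-000c29c6d194 mysql
# prints /var/lib/kubelet/pods/57970a59-1250-11ea-b36a-000c29c6d194/volumes/kubernetes.io~empty-dir/mysql
```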

# Enter the MySQL data directory and list its contents

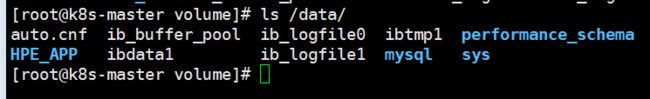

[root@k8s-node-1 images]# cd /var/lib/kubelet/pods/57970a59-1250-11ea-b36a-000c29c6d194/volumes/kubernetes.io~empty-dir/mysql && ls

auto.cnf HPE_APP ib_buffer_pool ibdata1 ib_logfile0 ib_logfile1 ibtmp1 mysql performance_schema sys

# The database used by the Tomcat application is HPE_APP; list the table files inside it

[root@k8s-node-1 mysql]# ls HPE_APP/

db.opt T_USERS.frm T_USERS.ibd

6.2 HostPath:

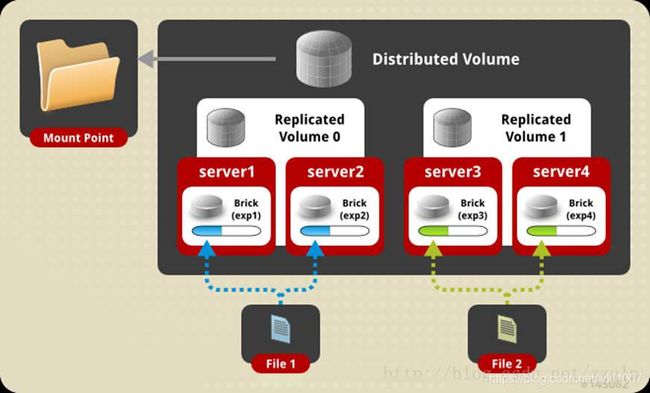

A hostPath volume mounts a directory or file from the host node into the Pod, so the container can store its data on the host's fast local file system.
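The only change from the emptyDir example is the volume source. In the sketch below, the manifest is written with a heredoc so it can be inspected without a cluster. The Pod name, file name, the node directory `/data/hostpath-demo` and the `busybox` image are hypothetical.

Unlike an emptyDir, the node directory is not removed when the Pod is deleted. It only exists on that one node, though, so a Pod rescheduled elsewhere will not see the data.

```shell
#!/usr/bin/env bash
# Sketch: generate a Pod manifest that mounts a directory from the node (hostPath).
# Pod name, file name, host directory and image are hypothetical examples.
cat > hostpath-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-demo
spec:
  containers:
  - name: busybox
    image: busybox
    command: ["sh", "-c", "date >> /data/log.txt && sleep 3600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    hostPath:
      path: /data/hostpath-demo   # directory on the node; kept after the Pod is deleted
EOF
# On the master it could then be created with:
#   kubectl create -f hostpath-demo.yaml
```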

Drawbacks: