Getting Started with Flink, Part 3: The DataStream API and Connecting Flink to Kafka

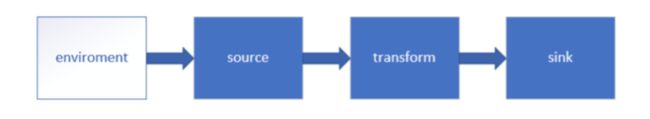

Flink Stream Processing API

Execution Environment

Environment

getExecutionEnvironment

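Creates an execution environment that represents the context in which the current program runs. If the program is invoked standalone, this method returns a local execution environment; if the program is submitted to a cluster through the command-line client, it returns that cluster's execution environment. In other words, getExecutionEnvironment decides which environment to return based on how the program is run, which makes it the most commonly used way to create an execution environment.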

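// Batch programs use ExecutionEnvironment

ExecutionEnvironment batchEnv = ExecutionEnvironment.getExecutionEnvironment();

// Streaming programs, as in the rest of this article, use StreamExecutionEnvironment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();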

createLocalEnvironment

Returns a local execution environment. The argument sets the default parallelism.

LocalStreamEnvironment env = StreamExecutionEnvironment.createLocalEnvironment(1);

createRemoteEnvironment

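Remote execution environment.

Returns a cluster execution environment and submits the Jar to a remote server. The call must specify the JobManager's IP (or hostname) and port, as well as the Jar to run on the cluster.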

StreamExecutionEnvironment env =

StreamExecutionEnvironment.createRemoteEnvironment("jobmanager-hostname", 6123, "YOURPATH/WordCount.jar");

Source

Reading data from Kafka

package com.guigu.sc.source;

import com.guigu.sc.beans.SensorReading;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

import java.util.Arrays;

import java.util.Properties;

public class SourceTest3_Kafka {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

// Kafka configuration

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "localhost:9092");

properties.setProperty("group.id", "consumer-group");

properties.setProperty("key.deserializer",

"org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("value.deserializer",

"org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("auto.offset.reset", "latest");

// 1. Read data from Kafka

DataStream<String> dataStream = env.addSource(

new FlinkKafkaConsumer011<String>("sensor", new SimpleStringSchema(), properties));

// Print the records; a job that defines only a source has nothing to execute

dataStream.print();

env.execute();

}

}

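Custom source

This is useful for simulating a data source during testing.

Besides the sources above, you can define your own source. All you need to do is pass a SourceFunction to addSource. For example: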

package com.guigu.sc.source;

import com.guigu.sc.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import java.util.HashMap;

import java.util.Random;

public class SourceTest4_UDF {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

// 自定义数据源

DataStream<SensorReading> dataStream = env.addSource(new MySensor());

dataStream.print();

env.execute();

}

public static class MySensor implements SourceFunction<SensorReading>{

// volatile: cancel() is called from a different thread than run()

private volatile boolean running = true;

public void run(SourceContext<SensorReading> ctx) throws Exception {

Random random = new Random();

HashMap<String, Double> sensorTempMap = new HashMap<String, Double>();

for( int i = 0; i < 10; i++ ){

sensorTempMap.put("sensor_" + (i + 1), 60 + random.nextGaussian() * 20); // initial temperature: Gaussian with mean 60 and standard deviation 20

}

while (running) {

for( String sensorId: sensorTempMap.keySet() ){

Double newTemp = sensorTempMap.get(sensorId) + random.nextGaussian();

sensorTempMap.put(sensorId, newTemp);

ctx.collect( new SensorReading(sensorId, System.currentTimeMillis(), newTemp));

}

Thread.sleep(2000L);

} // end of while loop

} // end of run()

public void cancel() {

this.running = false;

}

}

}

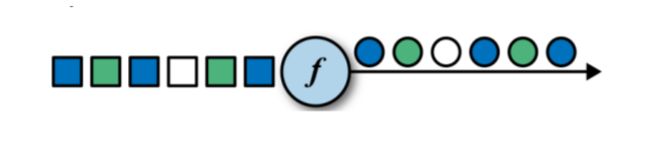

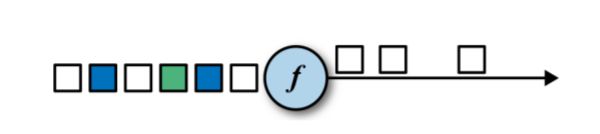

Transform

Transformation operators (transforming computations)

map, flatMap, filter, keyBy: the basic transformation operators

map

flatMap

filter

package com.guigu.apiTest.transform;

import com.guigu.sc.beans.SensorReading;

import com.guigu.sc.source.SourceTest4_UDF;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

public class TransformTest1_Base {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

// Set parallelism to 1 and read from the custom data source

env.setParallelism(1);

DataStream<SensorReading> dataStream = env.addSource(new SourceTest4_UDF.MySensor());

// 1. map: convert each SensorReading to a string and output its length

DataStream<Integer> mapStream = dataStream.map(

// anonymous class

new MapFunction<SensorReading, Integer>() {

@Override

public Integer map(SensorReading sensorReading) throws Exception {

return sensorReading.toString().length();

}

}

);

// 2. flatMap: split the fields on commas

DataStream<String> flatMapStream = dataStream.flatMap(

new FlatMapFunction<SensorReading, String>() {

@Override

public void flatMap(SensorReading value, Collector<String> out) throws Exception {

String[] fields = value.toString().split(",");

for (String field : fields) {

out.collect(field);

}

}

}

);

// 3. filter: keep the readings whose id starts with sensor_1

DataStream<SensorReading> filterStream = dataStream.filter(

new FilterFunction<SensorReading>() {

@Override

public boolean filter(SensorReading sensorReading) throws Exception {

// Returning true keeps the element

return sensorReading.getId().startsWith("sensor_1");

}

}

);

// Print the results

mapStream.print("map");

flatMapStream.print("flatmap");

filterStream.print("filter");

env.execute();

}

}

KeyBy

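DataStream → KeyedStream: logically splits a stream into disjoint partitions, each containing the elements that share the same key. Internally this is implemented with hash partitioning.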
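The hash partitioning described above can be modelled in plain Java without Flink on the classpath. This is only a sketch of the idea: the class `KeyByModel` and its methods are hypothetical names, and it uses `hashCode()` modulo the parallelism, whereas Flink itself first maps keys to key groups with a murmur hash.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of hash partitioning by key; it does not use Flink.
final class KeyByModel {

    // Map a key to one of `parallelism` partitions via its hash code.
    // Math.floorMod keeps the result non-negative for negative hash codes.
    static int partitionFor(String key, int parallelism) {
        return Math.floorMod(key.hashCode(), parallelism);
    }

    // Distribute keys over partitions; returns one list of keys per partition.
    static List<List<String>> partition(List<String> keys, int parallelism) {
        List<List<String>> partitions = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            partitions.add(new ArrayList<>());
        }
        for (String key : keys) {
            partitions.get(partitionFor(key, parallelism)).add(key);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList("sensor_1", "sensor_2", "sensor_1", "sensor_3", "sensor_2");
        List<List<String>> partitions = partition(keys, 2);
        for (int i = 0; i < partitions.size(); i++) {
            System.out.println("partition " + i + ": " + partitions.get(i));
        }
    }
}
```

Whatever the input order, every occurrence of the same key lands in the same partition, which is what makes per-key aggregation possible afterwards.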

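Rolling aggregation operators

Aggregations can only be applied after grouping. The following operators aggregate each keyed substream of a KeyedStream.

- sum(): running sum of the field

- min(): running minimum of the field only

- max(): running maximum of the field only

- minBy(): returns the whole element that has the minimum value

- maxBy(): returns the whole element that has the maximum value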
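The difference between max() and maxBy() is easy to miss, so here is a plain-Java model of both for a single key. It does not call Flink; `RollingMaxModel` and its `Reading` type are hypothetical stand-ins for the operator and for SensorReading, and the sketch assumes that max() keeps the non-aggregated fields of the first element it saw.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of rolling max vs. maxBy for one key; no Flink required.
final class RollingMaxModel {

    // Hypothetical record type standing in for SensorReading.
    static final class Reading {
        final String id;
        final long timestamp;
        final double temperature;

        Reading(String id, long timestamp, double temperature) {
            this.id = id;
            this.timestamp = timestamp;
            this.temperature = temperature;
        }

        @Override
        public String toString() {
            return id + "@" + timestamp + "=" + temperature;
        }
    }

    // max: only the aggregated field is updated; the other fields stay as in the first element.
    static List<Reading> max(List<Reading> input) {
        List<Reading> emitted = new ArrayList<>();
        Reading acc = null;
        for (Reading r : input) {
            if (acc == null) {
                acc = r;
            } else if (r.temperature > acc.temperature) {
                acc = new Reading(acc.id, acc.timestamp, r.temperature);
            }
            emitted.add(acc);
        }
        return emitted;
    }

    // maxBy: the whole element with the largest temperature is kept (first one wins on ties).
    static List<Reading> maxBy(List<Reading> input) {
        List<Reading> emitted = new ArrayList<>();
        Reading acc = null;
        for (Reading r : input) {
            if (acc == null || r.temperature > acc.temperature) {
                acc = r;
            }
            emitted.add(acc);
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<Reading> readings = Arrays.asList(
                new Reading("sensor_1", 1L, 35.0),
                new Reading("sensor_1", 2L, 37.5),
                new Reading("sensor_1", 3L, 36.0));
        System.out.println("max:   " + max(readings));
        System.out.println("maxBy: " + maxBy(readings));
    }
}
```

In the model, both variants report the same maximum temperature, but only maxBy carries the timestamp of the reading that actually produced it.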

package com.guigu.apiTest.transform;

import com.guigu.sc.beans.SensorReading;

import com.guigu.sc.source.SourceTest4_UDF;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

public class TransformTest2_RollingAggregation {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

// Set parallelism to 1

env.setParallelism(1);

// Custom data source

DataStream<SensorReading> dataStream = env.addSource(new SourceTest4_UDF.MySensor());

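// Group by key

KeyedStream<SensorReading, Tuple> keyedStream = dataStream.keyBy("id"); // a field name of the JavaBean

// Alternative: a KeySelector

//KeyedStream<SensorReading, String> keyedStream2 = dataStream.keyBy(SensorReading::getId); // pass a method reference

// Rolling aggregation: the result is updated on every incoming element

DataStream<SensorReading> resultStream = keyedStream.maxBy("temperature"); // pass a field name; positional indexes only work for tuple types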

resultStream.print();

env.execute();

}

}

Reduce

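KeyedStream → DataStream: an aggregation on a keyed stream that combines the current element with the result of the previous aggregation to produce a new value. The returned stream contains the result of every aggregation step, not only the final result. (A generalized aggregation.)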
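To make the "one result per input element" behaviour concrete, the following plain-Java model runs a rolling reduce over a list. It is a sketch that does not use Flink; `RollingReduceModel` and `rollingReduce` are hypothetical names, and the per-key state is just a HashMap.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BinaryOperator;
import java.util.function.Function;

// Plain-Java model of a rolling reduce on a keyed stream; no Flink required.
final class RollingReduceModel {

    // For each input element, combine it with the previous result for its key
    // and emit the new result, so the output has as many elements as the input.
    static <T, K> List<T> rollingReduce(List<T> input, Function<T, K> keyOf, BinaryOperator<T> reducer) {
        Map<K, T> state = new HashMap<>();
        List<T> emitted = new ArrayList<>();
        for (T value : input) {
            K key = keyOf.apply(value);
            T previous = state.get(key);
            T current = (previous == null) ? value : reducer.apply(previous, value);
            state.put(key, current);
            emitted.add(current);
        }
        return emitted;
    }

    public static void main(String[] args) {
        // Key the numbers by parity and keep a running sum per key.
        List<Integer> sums = rollingReduce(Arrays.asList(1, 2, 3, 4), n -> n % 2, Integer::sum);
        System.out.println(sums);
    }
}
```

With the input 1, 2, 3, 4 keyed by parity, the emitted running sums are 1, 2, 4, 6: the odd key goes 1 then 1+3, the even key goes 2 then 2+4.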

Multi-stream transformation operators

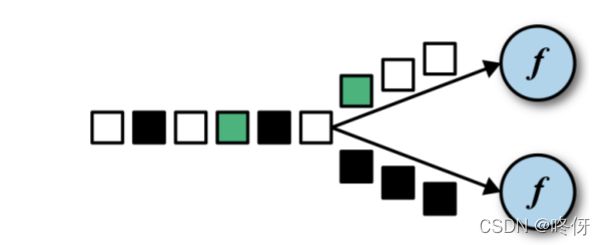

Split and Select

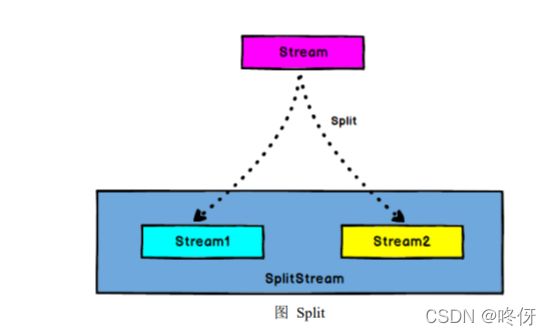

Split

DataStream → SplitStream: splits one DataStream into two or more DataStreams according to some criterion.

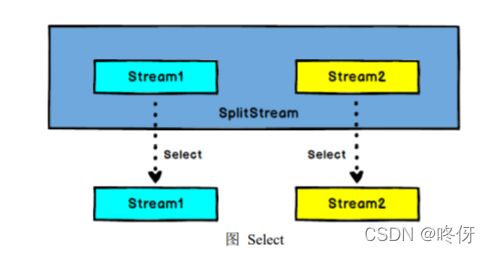

Select

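Requirement: split the sensor data into two streams by temperature, using 30 degrees as the boundary.

split and select are deprecated; the low-level process function can be used instead.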
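The routing rule of this requirement can be modelled in plain Java, independent of which Flink API ends up carrying it out. The sketch below does not use Flink; `SplitSelectModel`, `tagFor`, and `split` are hypothetical names, and it only mirrors what an output selector decides: each value gets a tag, and selecting a tag returns the values routed to it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of tag-based stream splitting; no Flink required.
final class SplitSelectModel {

    // Tag a temperature: strictly above 30 is "high", everything else is "low".
    static String tagFor(double temperature) {
        return temperature > 30 ? "high" : "low";
    }

    // Route every value into the output registered for its tag.
    static Map<String, List<Double>> split(List<Double> temperatures) {
        Map<String, List<Double>> outputs = new HashMap<>();
        outputs.put("high", new ArrayList<>());
        outputs.put("low", new ArrayList<>());
        for (double t : temperatures) {
            outputs.get(tagFor(t)).add(t);
        }
        return outputs;
    }

    public static void main(String[] args) {
        Map<String, List<Double>> outputs = split(Arrays.asList(25.0, 35.5, 30.0, 41.2));
        System.out.println("high: " + outputs.get("high"));
        System.out.println("low:  " + outputs.get("low"));
    }
}
```

Note that a reading of exactly 30 goes to the low output, because the comparison is a strict greater-than.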

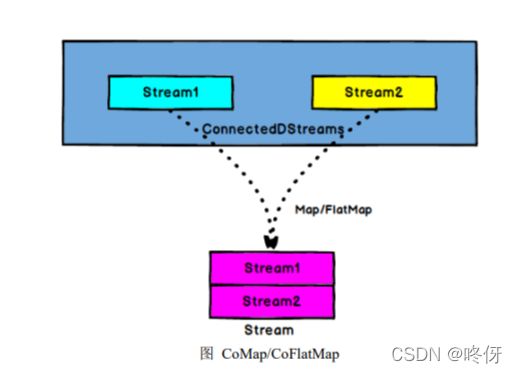

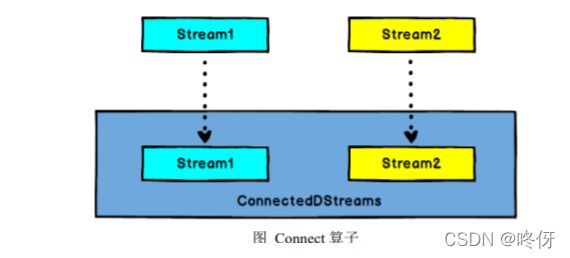

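Connect and CoMap

DataStream, DataStream → ConnectedStreams: connects two data streams while preserving their types. After being connected, the two streams are merely placed in the same stream; internally each keeps its own data and form unchanged, and the two streams remain independent of each other.

CoMap, CoFlatMap

ConnectedStreams → DataStream: operates on ConnectedStreams and works like map and flatMap, applying a separate map or flatMap function to each of the two streams in the ConnectedStreams.

Use case:

connect() is often used when one stream, the control stream, controls how another data stream is processed. The control stream can carry thresholds, rules, machine learning models, or other parameters.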
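The control-stream pattern can be sketched in plain Java as an object with one handler per input, much like the two methods of a CoMap function. This is a model only and does not use Flink: `ControlStreamModel`, `onControl`, and `onData` are hypothetical names, and in a real parallel job the shared threshold would have to live in Flink state rather than in a plain field.

```java
// Plain-Java model of a control stream steering a data stream; no Flink required.
final class ControlStreamModel {

    // Current threshold, updated by the control stream.
    private double threshold;

    ControlStreamModel(double initialThreshold) {
        this.threshold = initialThreshold;
    }

    // Handler for elements of the control stream: replace the threshold.
    String onControl(double newThreshold) {
        threshold = newThreshold;
        return "threshold updated";
    }

    // Handler for elements of the data stream: classify against the current threshold.
    String onData(String sensorId, double temperature) {
        return sensorId + (temperature > threshold ? ":warning" : ":healthy");
    }

    public static void main(String[] args) {
        ControlStreamModel model = new ControlStreamModel(30.0);
        System.out.println(model.onData("sensor_1", 35.0)); // classified against threshold 30
        System.out.println(model.onControl(40.0));          // control element arrives
        System.out.println(model.onData("sensor_1", 35.0)); // classified against threshold 40
    }
}
```

The same reading is classified differently before and after the control element arrives, so the result depends on the order in which elements of the two inputs are processed.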

package com.guigu.apiTest.transform;

import com.guigu.sc.beans.SensorReading;

import com.guigu.sc.source.SourceTest4_UDF;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.collector.selector.OutputSelector;

import org.apache.flink.streaming.api.datastream.ConnectedStreams;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SplitStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoMapFunction;

import java.util.Collections;

public class TransformTest5_connect_comap {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

// Set parallelism to 1

env.setParallelism(1);

// Custom data source

DataStream<SensorReading> inputStream = env.addSource(new SourceTest4_UDF.MySensor());

// 1. split: divide the stream (deprecated method)

SplitStream<SensorReading> splitStream = inputStream.split(new OutputSelector<SensorReading>() {

@Override

public Iterable<String> select(SensorReading sensorReading) {

return (sensorReading.getTemperature() > 30) ? Collections.singletonList("high") :

Collections.singletonList("low");

}

});

// select

DataStream<SensorReading> highStream = splitStream.select("high");

DataStream<SensorReading> lowStream = splitStream.select("low");

// 2. Merge: convert the high stream to a 2-tuple (id, temperature), connect it with the low stream, and output status information

DataStream<Tuple2<String, Double>> warningStream = highStream.map(

new MapFunction<SensorReading, Tuple2<String, Double>>() {

@Override

public Tuple2<String, Double> map(SensorReading sensorReading) throws Exception {

return new Tuple2<>(sensorReading.getId(), sensorReading.getTemperature());

}

}

);

// Connect the streams: the high stream emits warnings, the low stream emits a healthy status

ConnectedStreams<Tuple2<String, Double>, SensorReading> connectedStreams =

warningStream.connect(lowStream);

// The result is a stream of Object because the two connected streams may have different types

DataStream<Object> resultStream = connectedStreams.map(

new CoMapFunction<Tuple2<String, Double>, SensorReading, Object>() {

// Handles the first stream

@Override

public Object map1(Tuple2<String, Double> stringDoubleTuple2) throws Exception {

return new Tuple3<>(stringDoubleTuple2.f0, stringDoubleTuple2.f1, "warning");

}

// Handles the second stream

@Override

public Object map2(SensorReading sensorReading) throws Exception {

return new Tuple2<>(sensorReading.getId(), "healthy");

}

}

);

resultStream.print();

env.execute();

}

}

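Flink does not guarantee the execution order of map1 and map2; the order in which the two methods are called depends on the order in which elements arrive on the two streams.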

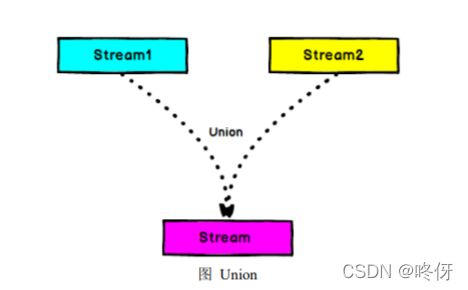

Union

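Union requires all streams to have the same data type. DataStream → DataStream: applies union to two or more DataStreams and produces a new DataStream containing all of their elements.

// union: merge multiple streams

DataStream<SensorReading> unionStream = highStream.union(lowStream);

Differences between Connect and Union

- Union requires the streams to have the same type beforehand; Connect allows different types, which can then be unified in the subsequent CoMap.

- Connect can only operate on two streams; Union can operate on more than two.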

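UDF: finer-grained control over streams

Flink exposes interfaces for all UDFs (implemented as interfaces or abstract classes), for example MapFunction, FilterFunction, and ProcessFunction.

Implementing a function interface

For example, implement the FilterFunction interface (keeping the strings that contain "flink"):

DataStream<String> flinkTweets = tweets.filter(new FlinkFilter()); // tweets is also a stream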

public static class FlinkFilter implements FilterFunction<String> {

@Override

public boolean filter(String value) throws Exception {

return value.contains("flink");

}

}

// Anonymous class

DataStream<String> flinkTweets = tweets.filter(new FilterFunction<String>() {

@Override

public boolean filter(String value) throws Exception {

return value.contains("flink");

}

});

The string "flink" used by the filter can also be passed in as a parameter.

DataStream<String> tweets = env.readTextFile("INPUT_FILE");

DataStream<String> flinkTweets = tweets.filter(new KeyWordFilter("flink"));

public static class KeyWordFilter implements FilterFunction<String> {

private String keyWord;

KeyWordFilter(String keyWord) { this.keyWord = keyWord; }

@Override

public boolean filter(String value) throws Exception {

return value.contains(this.keyWord);

}

}

Lambda (anonymous function) form

DataStream<String> tweets = env.readTextFile("INPUT_FILE");

DataStream<String> flinkTweets = tweets.filter( tweet -> tweet.contains("flink") );

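Rich Functions

A rich function is a function-class interface provided by the DataStream API; every Flink function class has a Rich version. Unlike a regular function, it can access the runtime context and has lifecycle methods, so it can implement more complex functionality.

- RichMapFunction

- RichFlatMapFunction

- RichFilterFunction

Rich functions have a lifecycle. Typical lifecycle methods, which you override to do some work, are:

- open() is the initialization method of a rich function; it is called before an operator such as map or filter is invoked.

- close() is the last method called in the lifecycle and is used for cleanup.

- getRuntimeContext() gives access to the function's RuntimeContext, for example the parallelism the function runs with, the task name, and state.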

public static class MyMapFunction extends RichMapFunction<SensorReading,

Tuple2<Integer, String>> {

@Override

public Tuple2<Integer, String> map(SensorReading value) throws Exception {

return new Tuple2<>(getRuntimeContext().getIndexOfThisSubtask(), value.getId());

}

@Override

public void open(Configuration parameters) throws Exception {

System.out.println("my map open");

// Initialization goes here, for example opening a connection to HDFS

}

@Override

public void close() throws Exception {

System.out.println("my map close");

// Cleanup goes here, for example closing the connection to HDFS

}

}

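Data repartitioning operations

- keyBy

- forward: passes data straight through; computation stays in the current partition

- broadcast: sends a copy to every downstream subtask

- shuffle: distributes elements randomly across the downstream parallel subtasks

- rebalance: distributes elements evenly across the downstream operator instances (round-robin)

- rescale(): balances within groups, round-robin inside each group

- global(): sends all output to the first downstream instance (collects all data in one place; use with caution)

- partitionCustom: user-defined partitioning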
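The strategies in the list above differ only in how they pick the downstream channel for each element. The plain-Java model below sketches that choice for forward, broadcast, shuffle, rebalance, and global. It does not use Flink; `PartitionerModel` is a hypothetical name, rescale is not modelled, and the round-robin counter starts at channel 0 here purely to keep the example deterministic.

```java
import java.util.Arrays;
import java.util.Random;

// Plain-Java model of channel selection for several repartitioning strategies; no Flink required.
final class PartitionerModel {

    private final int numChannels;          // number of downstream subtasks
    private int lastChannel = -1;           // round-robin position for rebalance
    private final Random random = new Random();

    PartitionerModel(int numChannels) {
        if (numChannels <= 0) {
            throw new IllegalArgumentException("numChannels must be positive");
        }
        this.numChannels = numChannels;
    }

    // forward: stay on the channel with the same index as the upstream subtask.
    int forward(int upstreamIndex) {
        return upstreamIndex;
    }

    // broadcast: every downstream channel receives a copy.
    int[] broadcast() {
        int[] all = new int[numChannels];
        for (int i = 0; i < numChannels; i++) {
            all[i] = i;
        }
        return all;
    }

    // shuffle: pick a channel uniformly at random.
    int shuffle() {
        return random.nextInt(numChannels);
    }

    // rebalance: cycle through the channels in round-robin order.
    int rebalance() {
        lastChannel = (lastChannel + 1) % numChannels;
        return lastChannel;
    }

    // global: everything goes to the first channel.
    int global() {
        return 0;
    }

    public static void main(String[] args) {
        PartitionerModel partitioner = new PartitionerModel(3);
        int[] roundRobin = new int[5];
        for (int i = 0; i < roundRobin.length; i++) {
            roundRobin[i] = partitioner.rebalance();
        }
        System.out.println("rebalance: " + Arrays.toString(roundRobin));
        System.out.println("broadcast: " + Arrays.toString(partitioner.broadcast()));
        System.out.println("global:    " + partitioner.global());
    }
}
```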

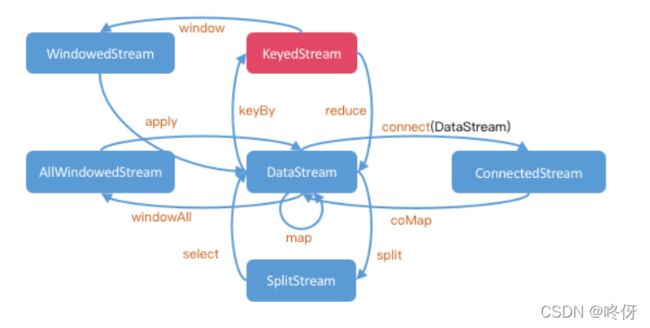

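DataStream relationship diagram

Sink

Flink has no equivalent of Spark's foreach method that lets users iterate over the data. All output to external systems goes through a Sink. The final output of a job is done in a way similar to the following:

stream.addSink(new MySink(xxxx))

Kafka

Writing to Kafka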

package com.guigu.wc;

import com.guigu.sc.beans.SensorReading;

import com.guigu.sc.source.SourceTest4_UDF;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer011;

public class SinkTest1_Kafka {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

// Set parallelism to 4 and read from the custom data source

env.setParallelism(4);

DataStream<SensorReading> inputStream = env.addSource(new SourceTest4_UDF.MySensor());

DataStream<String> mapStream = inputStream.map(new MapFunction<SensorReading, String>() {

@Override

public String map(SensorReading sensorReading) throws Exception {

return sensorReading.toString();

}

});

// Write to Kafka

mapStream.addSink(new FlinkKafkaProducer011<String>("localhost:9092",

"test", new SimpleStringSchema()));

env.execute();

}

}

A Kafka data pipeline: read from Kafka, write to Kafka.

package com.guigu.wc;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer011;

import java.util.Properties;

public class SinkTest1_Kafka {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

// Kafka configuration

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "localhost:9092");

properties.setProperty("group.id", "consumer-group");

properties.setProperty("key.deserializer",

"org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("value.deserializer",

"org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("auto.offset.reset", "latest");

// 1. Read data from Kafka

DataStream<String> dataStream = env.addSource(

new FlinkKafkaConsumer011<String>("sensor", new SimpleStringSchema(), properties));

// 2. Write to Kafka

dataStream.addSink(new FlinkKafkaProducer011<String>("localhost:9092",

"test", new SimpleStringSchema()));

env.execute();

}

}